Image semantic segmentation method with learnable feature fusion coefficients

A semantic segmentation technique with learnable fusion coefficients, applied in the fields of deep learning and computer vision. It addresses problems such as limited performance gains, increased computation and computation time, and blind, unguided design choices.


Embodiment Construction

[0051] The present invention is further described below through specific embodiments and the accompanying drawings. The embodiments are provided to help those skilled in the art better understand the invention and do not limit it in any way.

[0052] The image semantic segmentation method with learnable feature fusion coefficients of the present invention proceeds as follows:

[0053] First, a deep convolutional network classification model that maps images to class labels is trained on an image classification dataset:

[0054] In practice, rather than training this model ourselves, we generally initialize our semantic segmentation network directly with the parameters of a pre-trained VGG16 classification model.
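The initialization in [0054] can be sketched as a parameter copy by name and shape. This is a minimal, dependency-free illustration, not the patent's implementation: the `Param` class, the `init_from_pretrained` helper, the layer names (`conv1_1.weight`, `fc8.weight`, `fusion.coeff`), and all shapes and values are hypothetical, and real code would load actual VGG16 weights through a deep learning framework.

```python
from dataclasses import dataclass


@dataclass
class Param:
    """Toy stand-in for a tensor: a shape plus flattened values."""
    shape: tuple
    values: list


def init_from_pretrained(seg_params, cls_params):
    """Copy pre-trained values into the segmentation network.

    A parameter is copied only when a parameter with the same name and
    the same shape exists in the classification model. Everything else
    keeps its own initialization. Returns (copied, kept) name lists.
    """
    copied, kept = [], []
    for name, target in seg_params.items():
        source = cls_params.get(name)
        if source is not None and source.shape == target.shape:
            target.values = list(source.values)
            copied.append(name)
        else:
            kept.append(name)
    return copied, kept


# Hypothetical pre-trained classification parameters (toy sizes).
pretrained = {
    "conv1_1.weight": Param((2, 1, 3, 3), [0.5] * 18),
    "fc8.weight": Param((1000, 8), [0.1] * 8000),
}

# Hypothetical segmentation network: same backbone layer, a classifier
# with a different (assumed) class count, and an extra fusion parameter.
segmentation = {
    "conv1_1.weight": Param((2, 1, 3, 3), [0.0] * 18),
    "fc8.weight": Param((21, 8), [0.0] * 168),
    "fusion.coeff": Param((2,), [1.0, 1.0]),
}

copied, kept = init_from_pretrained(segmentation, pretrained)
print("copied:", copied)
print("kept own init:", kept)
```

In this sketch only the shared backbone layer receives pre-trained values. The layer whose shape differs and the layer with no pre-trained counterpart are left for training to fit.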

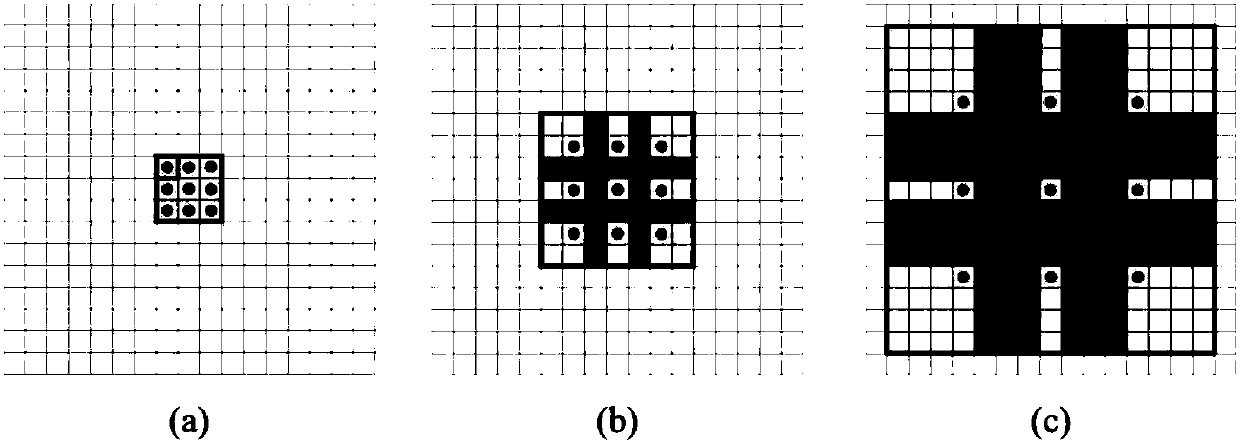

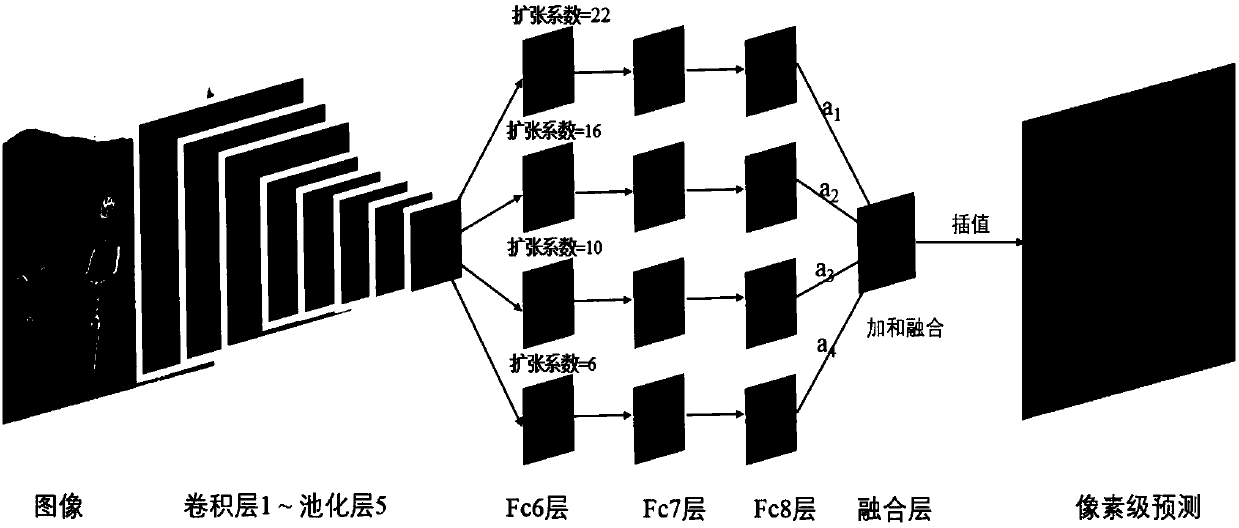

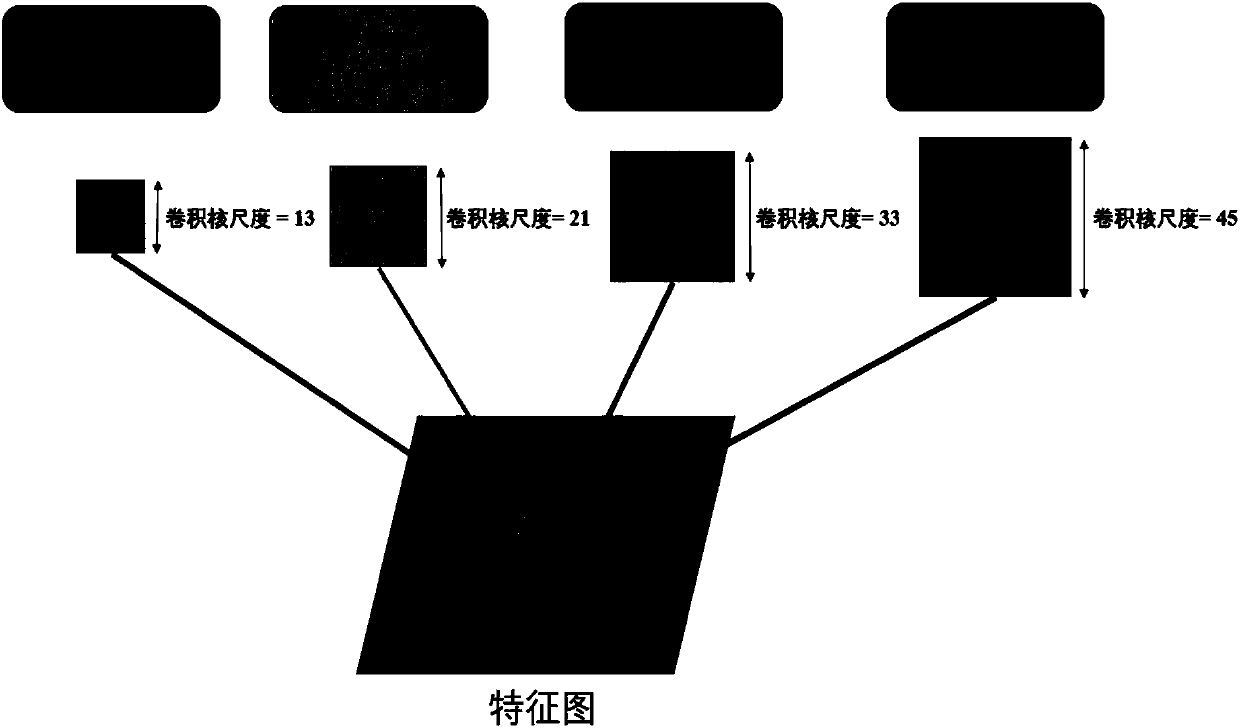

[0055] Second, the fully connected layers of the deep convolutional neural network classification model are converted into convolutional layers to obtain a fully convolutional deep neural network model, which can perfor...
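The conversion of a fully connected layer into a convolutional layer can be illustrated with a small pure-Python sketch. It assumes the common construction in which each row of the fully connected weight matrix is reshaped into a convolution kernel covering the whole feature map the layer was trained on; the function names and the toy sizes (2 channels, 3x3 kernel, 4 outputs) are hypothetical and much smaller than in VGG16.

```python
import random


def fc_forward(weights, bias, x_flat):
    """Fully connected layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, x_flat)) + b
            for row, b in zip(weights, bias)]


def fc_to_conv(weights, channels, kh, kw):
    """Reshape each FC row (length channels*kh*kw) into a
    [channels][kh][kw] kernel. The values themselves are unchanged."""
    kernels = []
    for row in weights:
        assert len(row) == channels * kh * kw
        kernels.append([[row[(c * kh + i) * kw:(c * kh + i) * kw + kw]
                         for i in range(kh)] for c in range(channels)])
    return kernels


def conv_forward(kernels, bias, x):
    """Stride-1 convolution without padding over x[channels][H][W]."""
    channels, height, width = len(x), len(x[0]), len(x[0][0])
    kh, kw = len(kernels[0][0]), len(kernels[0][0][0])
    out = []
    for kernel, b in zip(kernels, bias):
        fmap = []
        for top in range(height - kh + 1):
            row_out = []
            for left in range(width - kw + 1):
                acc = b
                for c in range(channels):
                    for i in range(kh):
                        for j in range(kw):
                            acc += kernel[c][i][j] * x[c][top + i][left + j]
                row_out.append(acc)
            fmap.append(row_out)
        out.append(fmap)
    return out


def random_input(channels, height, width):
    return [[[random.uniform(-1, 1) for _ in range(width)]
             for _ in range(height)] for _ in range(channels)]


random.seed(0)
C, K, OUT = 2, 3, 4  # toy sizes, not VGG16's
fc_w = [[random.uniform(-1, 1) for _ in range(C * K * K)] for _ in range(OUT)]
fc_b = [random.uniform(-1, 1) for _ in range(OUT)]
kernels = fc_to_conv(fc_w, C, K, K)

# Input of the original classifier size: the conv output is 1x1 per
# output channel and matches the FC output.
x_small = random_input(C, K, K)
flat = [v for ch in x_small for r in ch for v in r]
fc_out = fc_forward(fc_w, fc_b, flat)
conv_out = conv_forward(kernels, fc_b, x_small)

# Larger input: the same weights now yield a spatial score map.
score_map = conv_forward(kernels, fc_b, random_input(C, 5, 6))
print(len(score_map), len(score_map[0]), len(score_map[0][0]))
```

On an input of the original size the converted layer reproduces the fully connected output, while on a larger input it produces one score per spatial position, which is what lets a classification network emit dense predictions.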
