Two-stream neural network-based human body image action identification method

A neural-network-based action recognition technique, applied in the field of computer vision, that improves recognition accuracy.

Examples

Embodiment 1

[0041] The invention relates to a human body image action recognition method based on a dual-stream neural network, comprising the following steps:

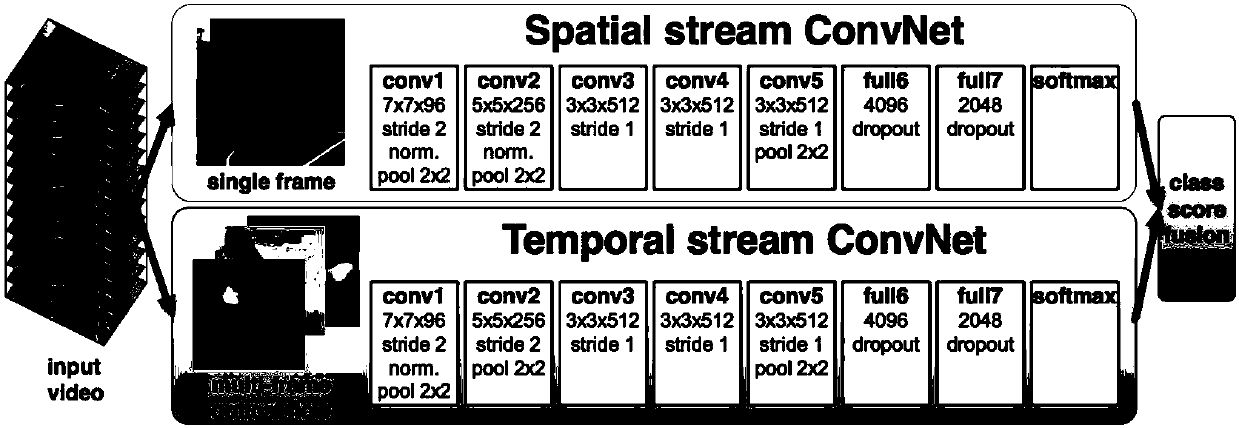

[0042] S1. Construct a temporal neural network and a spatial neural network;

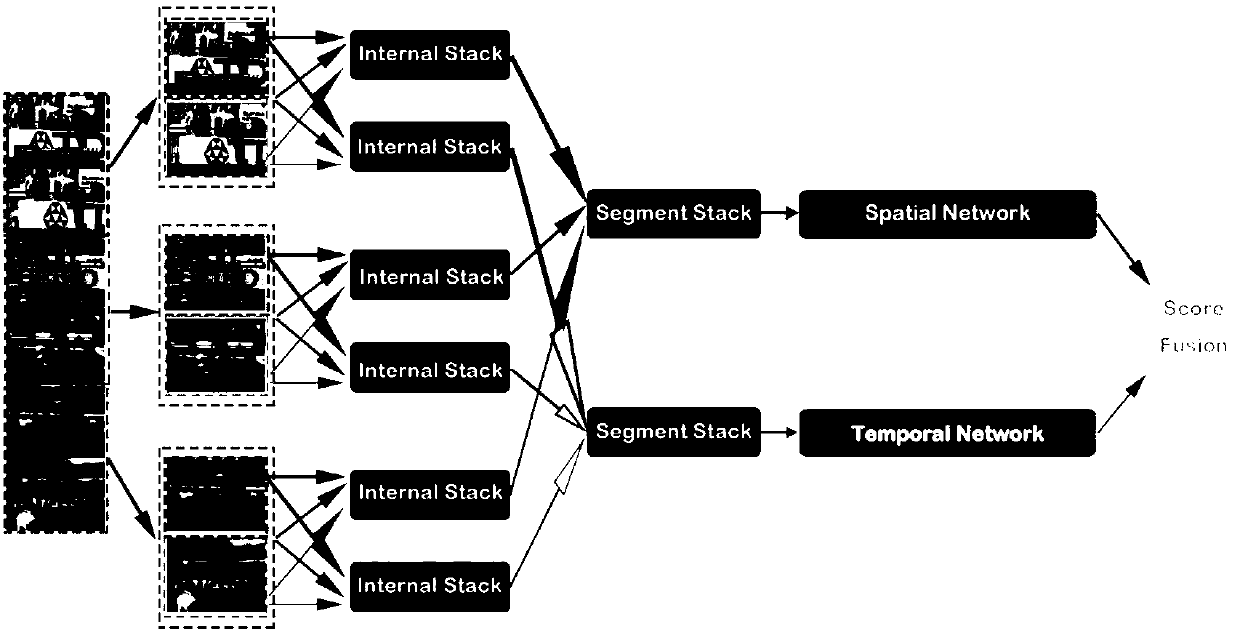

[0043] S2. Prepare enough training videos for the temporal neural network and the spatial neural network, then extract information from the training videos to train both networks. As shown in Figure 1, the steps to extract information are as follows:

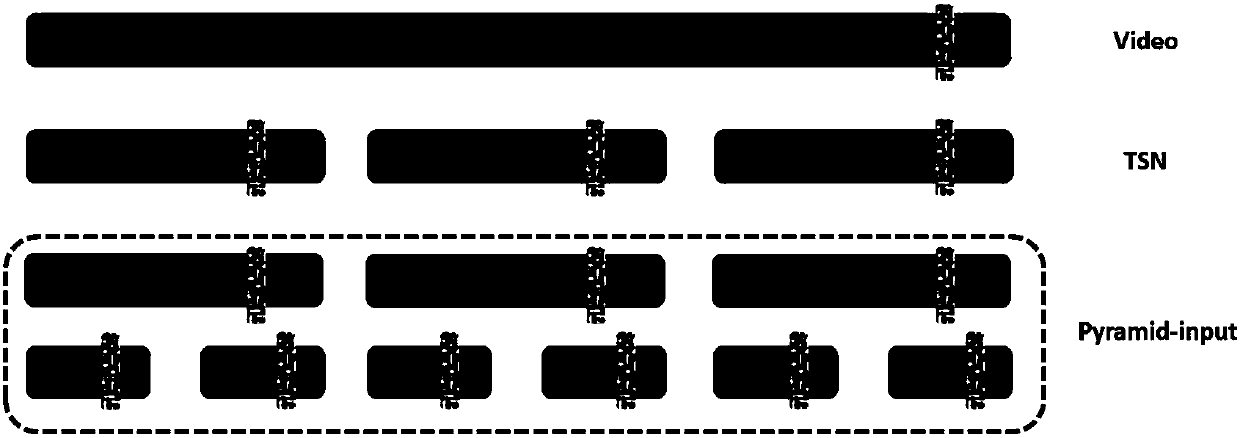

[0044] S21. Let k denote the number of video frame segments, with an initial value of k = 1;

[0045] S22. The video frames of the training video are divided into 3 sections, and RGB information and optical flow map information are then collected from multiple video frames;

[0046] S23. Set k = k + 1, then apply the processing of step S22 to each video frame: divide each video frame into 2 sections again, then collect RGB information and opt...
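The segmentation in steps S21 to S23 can be illustrated with a minimal sketch that works on frame indices only. It rests on several assumptions not stated in the source: "each video frame" in S23 is read as "each section produced so far", the stopping condition is a hypothetical `rounds` parameter (the source text is truncated before it gives one), and one middle frame is sampled per section. The names `split_range`, `hierarchical_segments`, and `middle_frame` are illustrative, and the extraction of RGB and optical flow data from the sampled frames is not shown.

```python
from typing import List, Tuple

Segment = Tuple[int, int]  # half-open frame-index range [start, end)


def split_range(start: int, end: int, parts: int) -> List[Segment]:
    """Split [start, end) into `parts` contiguous, near-equal sections."""
    length = end - start
    bounds = [start + (length * i) // parts for i in range(parts + 1)]
    return [(bounds[i], bounds[i + 1]) for i in range(parts)]


def hierarchical_segments(n_frames: int, rounds: int) -> List[List[Segment]]:
    """Return the sections produced at each round k = 1..rounds.

    Round 1 splits the whole video into 3 sections (step S22); every later
    round splits each existing section into 2 (our reading of step S23).
    `rounds` is a hypothetical stopping parameter, not taken from the source.
    """
    levels = [split_range(0, n_frames, 3)]
    for _ in range(1, rounds):
        prev = levels[-1]
        levels.append([sub for s, e in prev for sub in split_range(s, e, 2)])
    return levels


def middle_frame(segment: Segment) -> int:
    """Pick one representative frame index per section (assumed sampling rule)."""
    start, end = segment
    return (start + end) // 2


levels = hierarchical_segments(n_frames=120, rounds=2)
print(levels[0])  # sections after the first round (3 sections)
print(levels[1])  # sections after the second round (6 sections)
print([middle_frame(s) for s in levels[0]])
```

Under this reading, the frames sampled from each section would supply the RGB input and the optical flow input for the two networks, and each additional round doubles the number of sections.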
