Resource allocation method and Cache

A resource allocation and Cache (high-speed cache) technology, applied in the field of multiprocessors, addresses problems such as wasted Cache resources, processors having different requirements for Cache resources, and the failure to account for changes in processors' dynamic access requirements.


Embodiment Construction

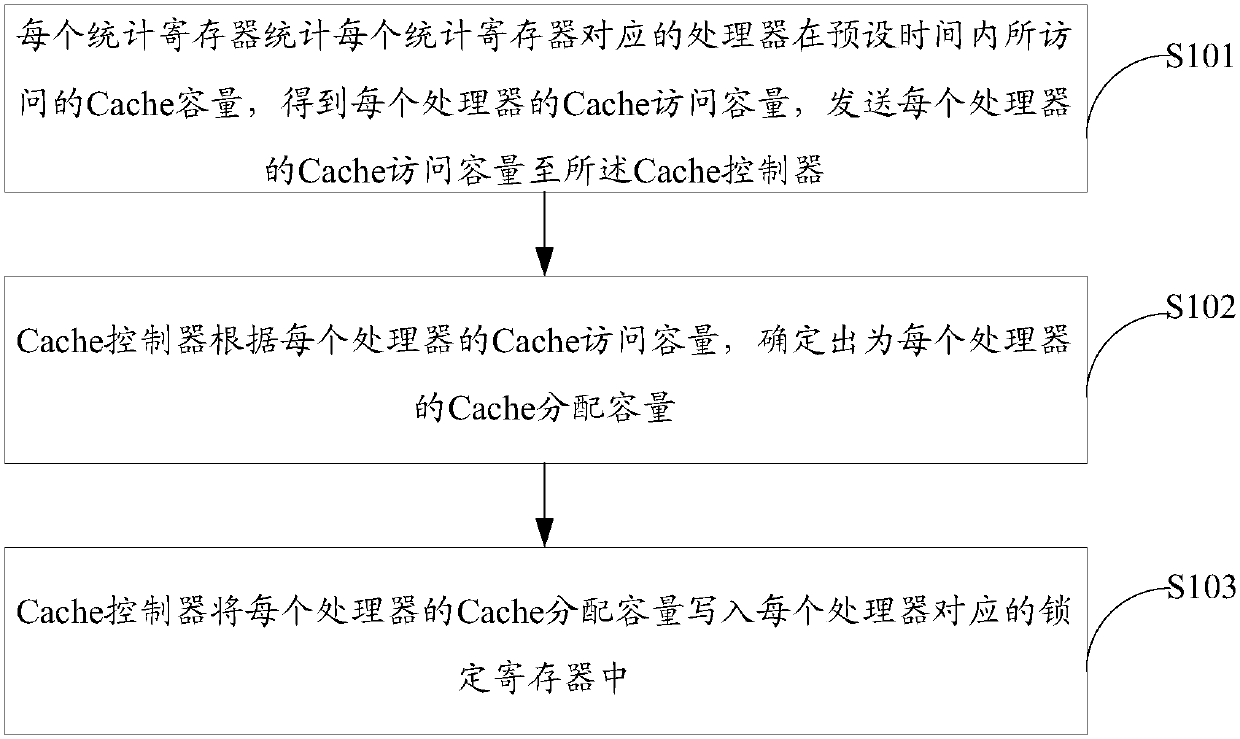

[0020] The technical solutions in the embodiments of the present invention are described clearly and completely below with reference to the accompanying drawings.

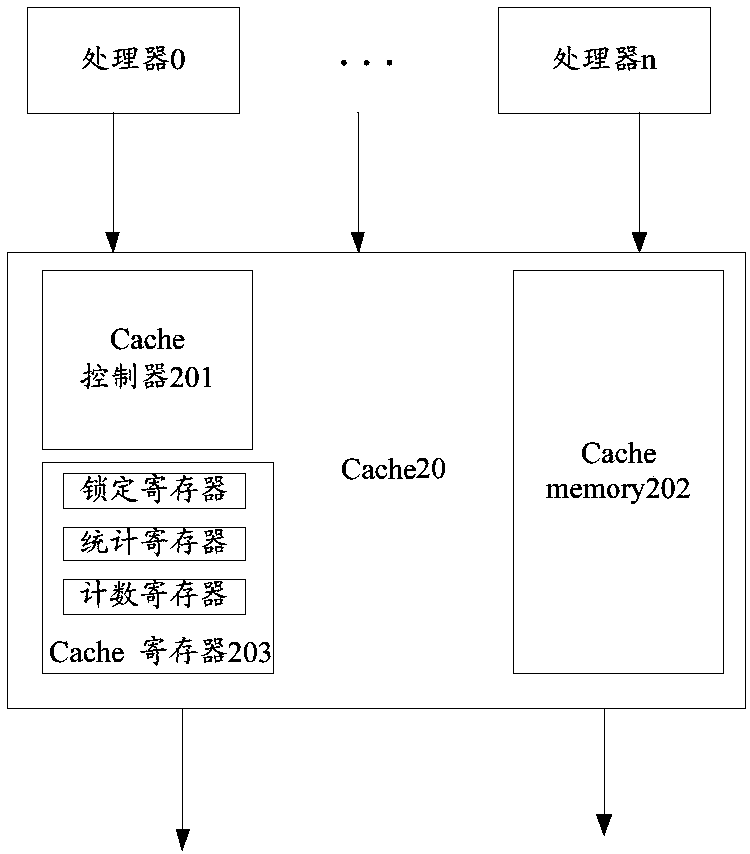

[0021] Embodiments of the present invention provide a resource allocation method applied to a Cache shared by multiple processors. Each processor corresponds to an identification code (ID) that identifies the processor, and before the Cache is used, a fixed Cache capacity has already been configured for each processor. Here, the Cache may have a set-associative structure, in which the Cache capacity comprises multiple ways and each way contains a fixed number of lines. For example, when there are four processors, the Cache capacity configured for each processor may be 4 ways, with each way containing 10 lines.
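The fixed, per-processor configuration described in [0021] can be modeled with a small simulation. This is a minimal sketch under stated assumptions, not the patented method: the class name `PartitionedCache`, the 64-byte line size, LRU replacement, the contiguous assignment of ways in processor-ID order, and the rule that a processor hits only on lines in its own ways are all hypothetical choices made for illustration. With the example figures (4 processors × 4 ways = 16 ways, and 16 ways × 10 lines = 160 lines), the total capacity works out as shown in the usage below.

```python
from collections import OrderedDict


class PartitionedCache:
    """Hypothetical sketch of a shared set-associative Cache whose ways are
    fixedly assigned to processors (by ID) before the Cache is used."""

    def __init__(self, processor_ids, ways_per_processor, lines_per_way, line_size=64):
        # In a set-associative Cache, each way holds one line per set, so the
        # number of lines per way equals the number of sets.
        self.lines_per_way = lines_per_way
        self.line_size = line_size  # assumed block size in bytes
        # Static way assignment (assumption): processor i owns ways [i*k, (i+1)*k).
        self.ways = {
            pid: list(range(i * ways_per_processor, (i + 1) * ways_per_processor))
            for i, pid in enumerate(processor_ids)
        }
        self.total_ways = ways_per_processor * len(processor_ids)
        # LRU-ordered tags for each (set, processor) pair; the capacity of each
        # pair is the number of ways owned by that processor.
        self.lru = {}

    def total_lines(self):
        return self.total_ways * self.lines_per_way

    def access(self, pid, address):
        """Return True on a hit, False on a miss. For simplicity, a processor
        looks up and replaces lines only within its own ways."""
        block = address // self.line_size
        set_index = block % self.lines_per_way
        tag = block // self.lines_per_way
        entries = self.lru.setdefault((set_index, pid), OrderedDict())
        if tag in entries:
            entries.move_to_end(tag)  # mark as most recently used
            return True
        if len(entries) >= len(self.ways[pid]):
            entries.popitem(last=False)  # evict the LRU line in pid's partition
        entries[tag] = None
        return False


if __name__ == "__main__":
    cache = PartitionedCache(processor_ids=[0, 1, 2, 3], ways_per_processor=4, lines_per_way=10)
    print(cache.total_ways, cache.total_lines())  # 16 160
    print(cache.ways[1])                          # [4, 5, 6, 7]
```

Because each processor's ways are fixed in advance, a processor with a heavy working set evicts its own lines even while another processor's ways sit idle; this is one way to see the Cache waste and the lack of response to dynamic access requirements that the summary above refers to.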

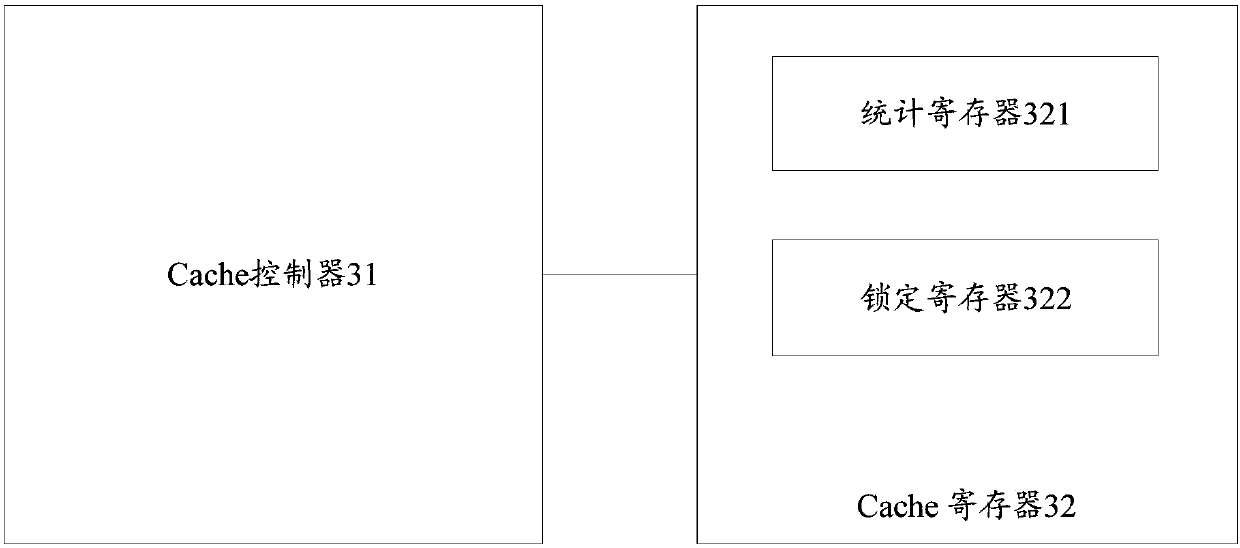

[0022] The above-mentioned Cache includes a Cache controller and a Cache r...
