Multi-view stereo vision method integrated with spatial propagation and pixel-level optimization

A spatial propagation and stereo vision technology, applied in the field of computer vision, that addresses the insufficient completeness and accuracy and the low efficiency of 3D point cloud reconstruction, and achieves the effects of reducing repeated and redundant calculations and shortening convergence time.

Detailed Description of the Embodiments

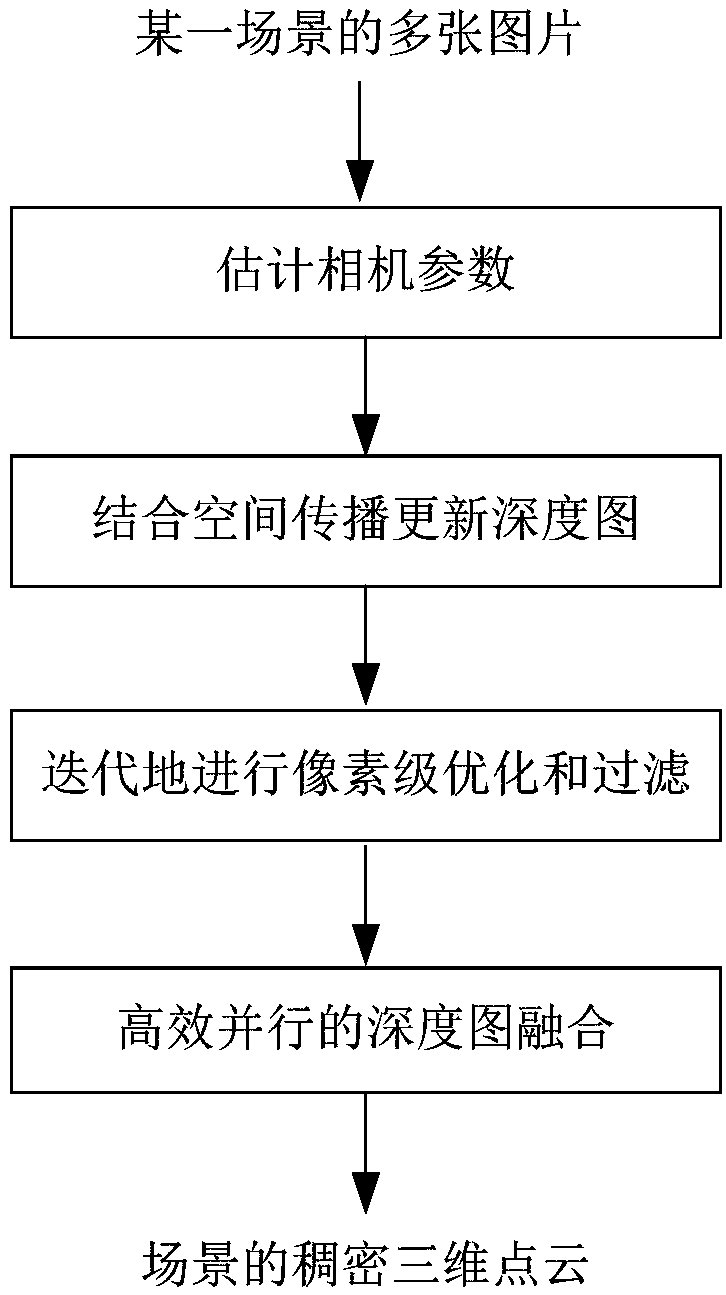

[0042] The method is implemented as follows:

[0043] (1) Obtain the camera parameters from multiple pictures of the scene, estimate a depth map for each picture using these camera parameters, and update the depth map of each picture by spatial propagation;

[0044] (2) Iteratively apply pixel-level optimization and filtering to each updated depth map to obtain the processed pictures;

[0045] (3) Perform depth map fusion on every pixel of the processed pictures in parallel to obtain a 3D point cloud of the scene.
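Steps (1) and (2) pair spatial propagation with pixel-level optimization. The excerpt does not specify the propagation pattern or the matching cost, so what follows is only a minimal sketch of the general PatchMatch-style idea under stated assumptions: `cost_fn(i, j, d)` is a hypothetical stand-in for a photometric matching cost of pixel (i, j) at depth d, propagation is one sequential forward and one backward sweep over a single depth map, and pixel-level refinement is a random depth perturbation kept only when it lowers the cost. The names `propagate` and `refine` are illustrative, not the patent's.

```python
import numpy as np


def propagate(depth, cost_fn):
    """One forward and one backward PatchMatch-style sweep over a depth map.

    A pixel adopts a neighbor's depth hypothesis whenever that hypothesis
    gives a lower matching cost at the pixel than its current one.
    cost_fn(i, j, d) is a hypothetical matching-cost callback (assumption).
    """
    depth = np.array(depth, dtype=float)  # work on a copy
    h, w = depth.shape
    cost = np.array([[cost_fn(i, j, depth[i, j]) for j in range(w)] for i in range(h)])

    def try_neighbors(i, j, offsets):
        for di, dj in offsets:
            ni, nj = i + di, j + dj
            if 0 <= ni < h and 0 <= nj < w:
                c = cost_fn(i, j, depth[ni, nj])  # score the neighbor's depth at (i, j)
                if c < cost[i, j]:
                    depth[i, j], cost[i, j] = depth[ni, nj], c

    # Forward sweep (top-left to bottom-right): left and upper neighbors.
    for i in range(h):
        for j in range(w):
            try_neighbors(i, j, [(0, -1), (-1, 0)])
    # Backward sweep (bottom-right to top-left): right and lower neighbors.
    for i in reversed(range(h)):
        for j in reversed(range(w)):
            try_neighbors(i, j, [(0, 1), (1, 0)])
    return depth, cost


def refine(depth, cost, cost_fn, scale, rng):
    """Pixel-level refinement: perturb each depth by a uniform random offset in
    [-scale, scale] and keep it only if it is positive and lowers the cost."""
    depth = np.array(depth, dtype=float)
    cost = np.array(cost, dtype=float)
    h, w = depth.shape
    for i in range(h):
        for j in range(w):
            candidate = depth[i, j] + rng.uniform(-scale, scale)
            if candidate > 0:
                c = cost_fn(i, j, candidate)
                if c < cost[i, j]:
                    depth[i, j], cost[i, j] = candidate, c
    return depth, cost


if __name__ == "__main__":
    # Toy cost (assumption): the true scene is a fronto-parallel plane at depth 5.
    toy_cost = lambda i, j, d: abs(d - 5.0)
    rng = np.random.default_rng(0)
    init = rng.uniform(1.0, 10.0, size=(4, 6))
    init[0, 0] = 5.0  # a single correct seed hypothesis
    depth, cost = propagate(init, toy_cost)
    print(np.all(depth == 5.0))  # True: the seed has spread to every pixel
    depth, cost = refine(depth, cost, toy_cost, scale=0.5, rng=rng)
```

Sequential sweeps are used here only for readability. GPU-oriented multi-view stereo implementations often use a checkerboard (red-black) update order instead, so that half of the pixels can be updated in parallel at each step; whether this patent does so is not stated in the excerpt.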

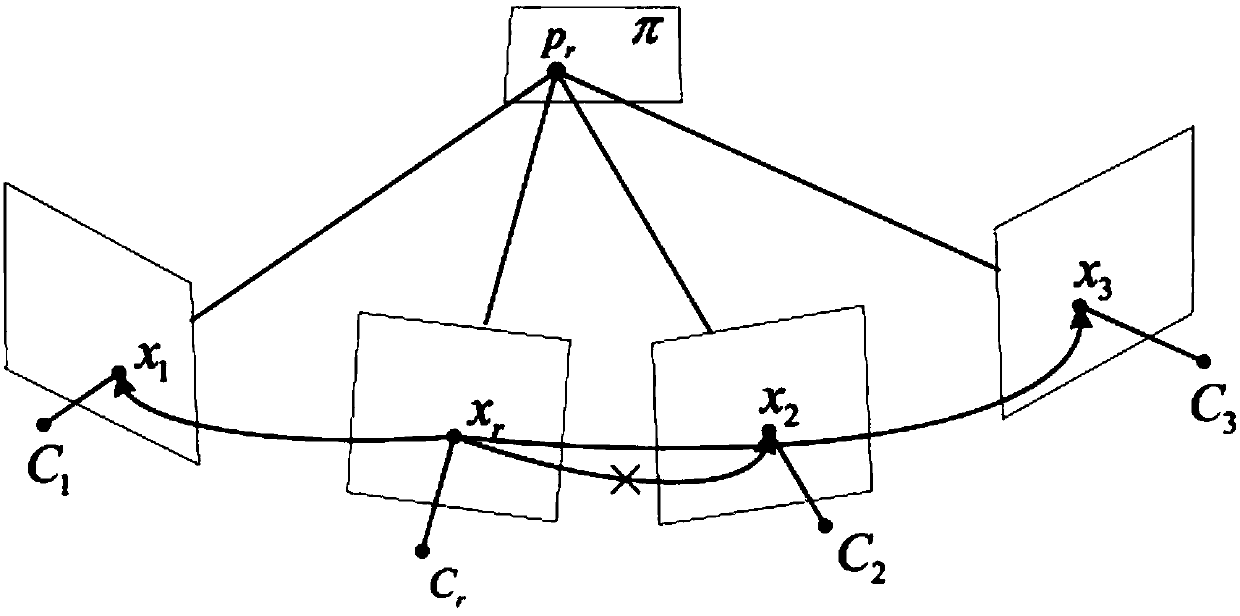

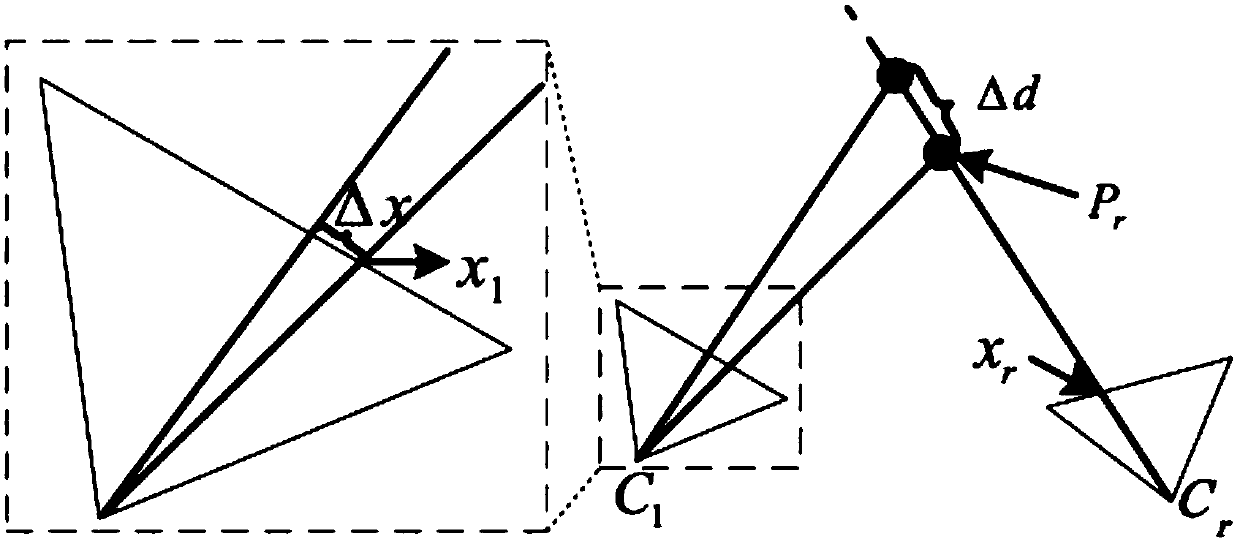

[0046] In the embodiment of the present invention, as shown in Figure 2, step (1) specifically includes:

[0047] (1-1) Take any one of the multiple pictures of the scene as the reference picture $C_r$, select the neighboring pictures $C_1$, $C_2$, $C_3$ of the reference picture from the multiple pictures of the scene, and obtain the camera parameters of the reference picture and of the neighboring pictures through the motion recovery ...
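Step (1-1) obtains the camera parameters of $C_r$ and of its neighbors $C_1$, $C_2$, $C_3$; the excerpt is cut off before saying how they are used. In multi-view stereo such parameters typically serve to map a reference pixel with a depth hypothesis into each neighboring picture so that the corresponding image patches can be compared. A minimal sketch of that mapping follows, assuming ideal pinhole cameras with intrinsics `K` and world-to-camera extrinsics `R`, `t` (so that x_cam = R X + t); the function and parameter names are hypothetical.

```python
import numpy as np


def project_to_neighbor(u, v, depth, K_r, R_r, t_r, K_n, R_n, t_n):
    """Map pixel (u, v) of the reference picture, hypothesized to lie at
    `depth` along the reference camera's z-axis, into a neighboring picture.

    Assumes pinhole cameras with world-to-camera extrinsics: x_cam = R @ X + t.
    """
    pixel = np.array([u, v, 1.0])
    X_cam_r = depth * np.linalg.solve(K_r, pixel)  # back-project into the reference camera frame
    X_world = R_r.T @ (X_cam_r - t_r)               # reference camera frame -> world
    X_cam_n = R_n @ X_world + t_n                   # world -> neighbor camera frame
    x = K_n @ X_cam_n                               # perspective projection
    return float(x[0] / x[2]), float(x[1] / x[2])


if __name__ == "__main__":
    K = np.array([[100.0, 0.0, 320.0], [0.0, 100.0, 240.0], [0.0, 0.0, 1.0]])
    I = np.eye(3)
    # Neighbor camera centered 0.5 units to the right (+x) of the reference camera.
    t_n = np.array([-0.5, 0.0, 0.0])
    print(project_to_neighbor(320, 240, 5.0, K, I, np.zeros(3), K, I, t_n))  # approximately (310.0, 240.0)
```

With a pure sideways translation the pixel shifts by f·B/d = 100 × 0.5 / 5 = 10 pixels, which is a quick sanity check on the conventions. The first two lines of the function (back-projecting a pixel and its depth into world coordinates) are also the basic operation for turning each pixel's depth into a 3D point during fusion, although the patent's actual fusion criteria are not given in this excerpt.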
