Goods-selling method and device based on image comparison and self-service vending machine

The vending machine and image-comparison technology, applied in the field of image processing, addresses the problems of the camera failing to track items in time and of items being unidentifiable or difficult to identify.

Embodiment Construction

[0053] From the following detailed description of specific embodiments of the application, taken in conjunction with the accompanying drawings, those skilled in the art will better understand the above and other objectives, advantages, and features of the application.

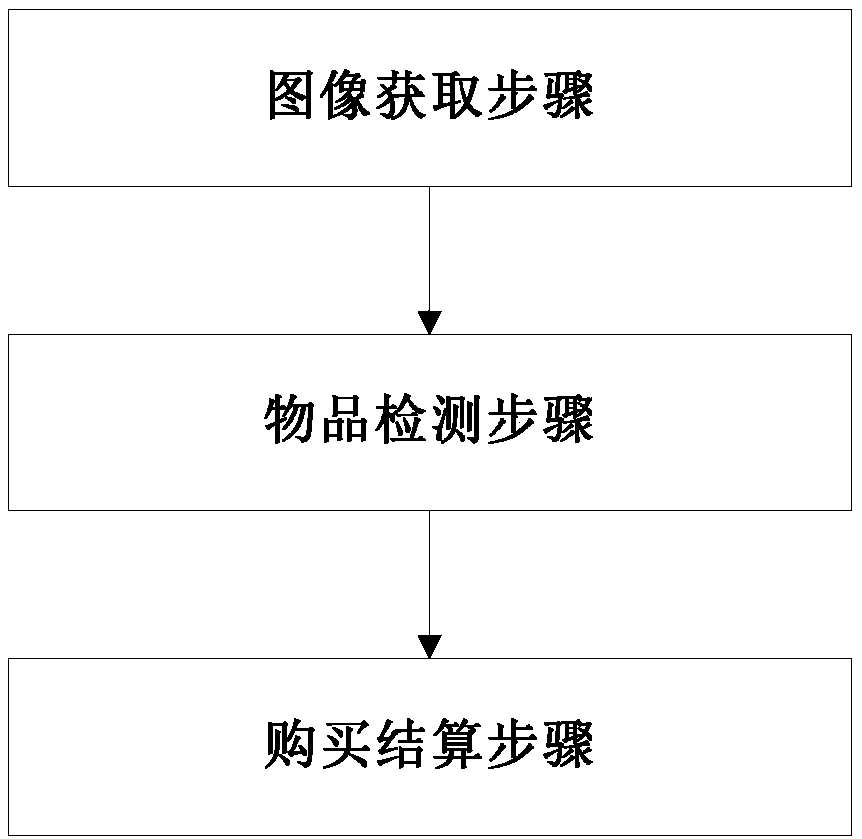

[0054] Figure 1 shows a vending method according to an aspect of the present application. The method is applied to an unmanned vending machine and includes:

[0055] Image acquisition step: when the door of the unmanned vending machine is opened or is about to be opened, acquire an image of the items on the shelf in the unmanned vending machine as the original image; after the door has been opened, acquire images of the items on the shelf at preset time intervals;

[0056] Item detection step: compare the features of each acquired image with those of the image preceding it to determine the item that the user has extracted from the shelf or the item that has be...
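The two steps above can be illustrated with a minimal Python sketch. It is not the patented implementation: the excerpt does not say which image features are compared, so the sketch assumes a simple stand-in feature (mean brightness of each shelf region) and a fixed threshold. The names `acquire_images`, `region_mean`, `changed_regions`, and `detect_changes`, the `capture` and `door_is_open` callbacks, and the region layout are all hypothetical.

```python
import time
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

# Assumption: an image is a grayscale grid of 0-255 pixel values.
Image = List[List[int]]
# Assumption: a shelf region is (top, left, bottom, right), bottom/right exclusive.
Region = Tuple[int, int, int, int]


def acquire_images(
    capture: Callable[[], Image],
    door_is_open: Callable[[], bool],
    interval_s: float,
) -> Iterator[Image]:
    """Yield the original image first, then one image per interval while the door is open."""
    yield capture()  # original image, taken as the door opens or is about to open
    while door_is_open():
        time.sleep(interval_s)  # preset time interval between acquisitions
        yield capture()


def region_mean(image: Image, region: Region) -> float:
    """Stand-in feature: mean brightness of one shelf region."""
    top, left, bottom, right = region
    pixels = [image[r][c] for r in range(top, bottom) for c in range(left, right)]
    return sum(pixels) / len(pixels)


def changed_regions(
    previous: Image,
    current: Image,
    regions: Dict[str, Region],
    threshold: float = 30.0,
) -> List[str]:
    """Return the names of regions whose feature differs from the previous image."""
    return [
        name
        for name, region in regions.items()
        if abs(region_mean(current, region) - region_mean(previous, region)) > threshold
    ]


def detect_changes(
    frames: Iterable[Image],
    regions: Dict[str, Region],
    threshold: float = 30.0,
) -> List[Tuple[int, str]]:
    """Compare each image with the one before it; return (frame index, region name) pairs."""
    events: List[Tuple[int, str]] = []
    previous = None
    for index, frame in enumerate(frames):
        if previous is not None:
            for name in changed_regions(previous, frame, regions, threshold):
                events.append((index, name))
        previous = frame
    return events
```

In use, the output of `acquire_images` would be passed straight to `detect_changes`, so that every image is compared only with its immediate predecessor, as the item detection step describes. Deciding whether a changed region means an item was taken or put back would need a richer feature than mean brightness. The sketch leaves that open.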
