Image depth estimation method and system based on CNN (Convolutional Neural Network) and depth filter

An image depth estimation technology in the field of 3D vision. It addresses the problems of absolute scale loss, the case of pure camera rotation (which cannot be computed), and a narrow scope of application. Effects: it reduces the number of iterations required, overcomes absolute scale loss, and overcomes blurring at object edges.


Embodiment Construction

[0040] To make the purpose, technical solution and advantages of the present invention clearer, the present invention is described in further detail below with reference to the accompanying drawings and examples.

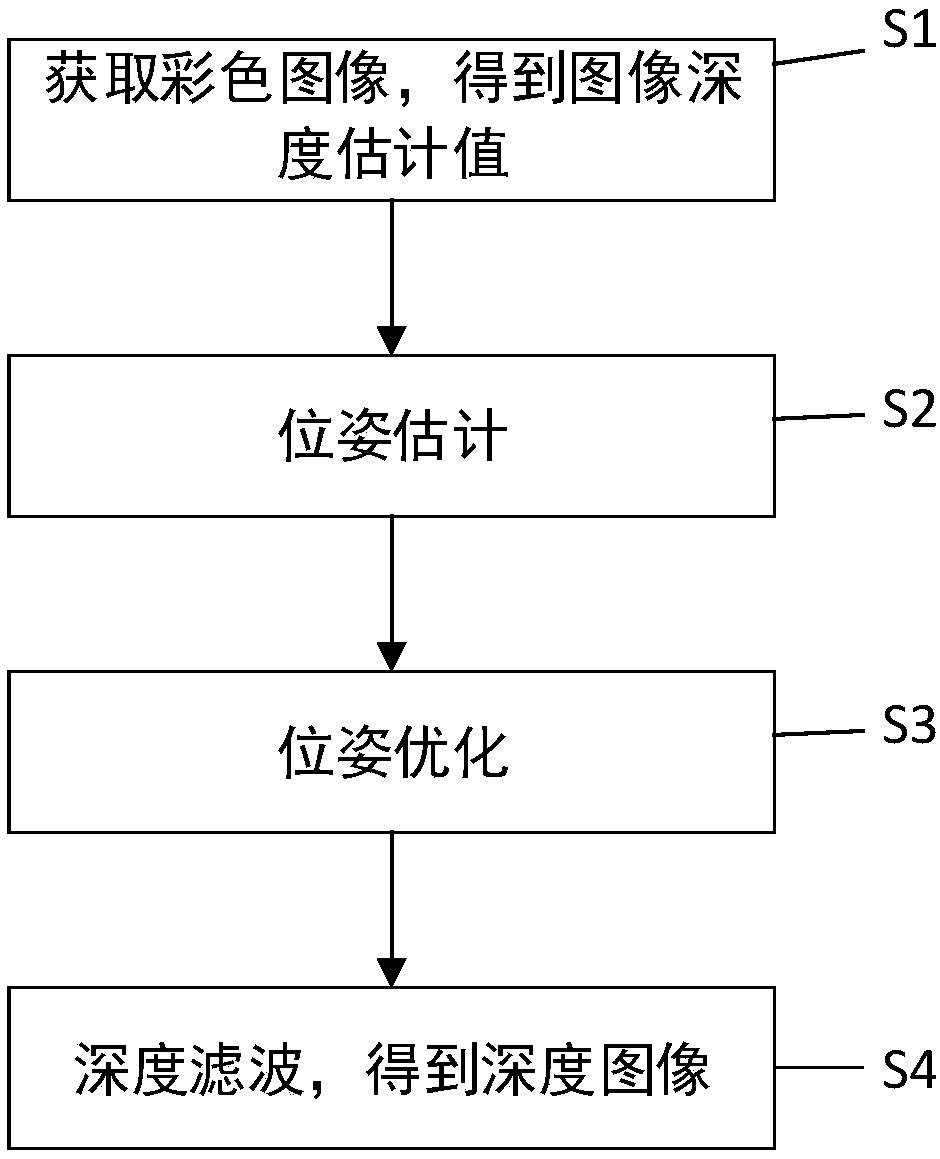

[0041] The specific process of the image depth estimation method based on a CNN and a depth filter is shown in Figure 1. The method is divided into four parts: obtaining image depth estimates, pose estimation, pose optimization, and depth image acquisition.
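The four-part flow above can be sketched as a small orchestration function. This is a minimal sketch, not the patented implementation: the stage callables (`estimate_depth`, `estimate_pose`, `optimize_pose`, `fuse_depth`) are hypothetical placeholders, and the choice of which inputs each stage receives (for example, passing the CNN depth to pose estimation) is an assumption, since the excerpt does not specify it.

```python
from typing import Any, Callable, List, Sequence, Tuple


def split_reference(images: Sequence[Any], ref_index: int = 0) -> Tuple[Any, List[Any]]:
    """Choose one frame as the reference image; all other frames become associated images."""
    if not 0 <= ref_index < len(images):
        raise IndexError("ref_index must point at one of the input images")
    reference = images[ref_index]
    associated = [img for i, img in enumerate(images) if i != ref_index]
    return reference, associated


def run_pipeline(
    images: Sequence[Any],
    estimate_depth: Callable[[Any], Any],                      # hypothetical stage 1
    estimate_pose: Callable[[Any, Any, Any], Any],             # hypothetical stage 2
    optimize_pose: Callable[[Any, Any, Any, Any], Any],        # hypothetical stage 3
    fuse_depth: Callable[[Any, List[Any], List[Any], Any], Any],  # hypothetical stage 4
    ref_index: int = 0,
) -> Any:
    reference, associated = split_reference(images, ref_index)
    # Part 1: per-pixel depth estimates for the reference image (a CNN in the patent).
    depth_prior = estimate_depth(reference)
    # Part 2: initial rotation and translation of each associated image relative to the reference.
    poses = [estimate_pose(reference, img, depth_prior) for img in associated]
    # Part 3: refine each initial pose estimate.
    poses = [optimize_pose(reference, img, depth_prior, pose)
             for img, pose in zip(associated, poses)]
    # Part 4: combine reference, associated images and poses into the final depth image.
    return fuse_depth(reference, associated, poses, depth_prior)
```

Keeping each stage as an injected callable lets the CNN, pose estimator and depth filter be swapped or stubbed out independently when testing the data flow.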

[0042] 1. Obtain image depth estimates

[0043] Obtain multiple color images continuously shot by the camera on the same shooting target, select one of the color images as a reference image, and the remaining color images as associated images, and obtain the depth estimation value corresponding to each pixel of the reference image through CNN.
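The excerpt does not say how the CNN estimates are consumed later. One plausible reading of the effects listed in the summary (fewer iterations, no absolute scale loss) is that the CNN depth seeds a per-pixel depth filter instead of a wide, scale-free initial range. The sketch below assumes exactly that and uses a plain per-pixel Gaussian (scalar Kalman) update, a simplification of the Gaussian-plus-uniform filters used in systems such as SVO; `DepthSeed`, `seed_from_cnn`, `rel_sigma` and `has_converged` are hypothetical names, not from the patent.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class DepthSeed:
    mean: float  # current depth estimate for one reference pixel
    var: float   # variance of that estimate (smaller means more certain)


def seed_from_cnn(depth_map: List[List[float]], rel_sigma: float = 0.2) -> List[List[DepthSeed]]:
    """Initialise one Gaussian per pixel, centred on the CNN depth.

    rel_sigma is a hypothetical tuning parameter: the initial standard
    deviation is a fixed fraction of the predicted depth.
    """
    return [[DepthSeed(d, (rel_sigma * d) ** 2) for d in row] for row in depth_map]


def update(seed: DepthSeed, z: float, z_var: float) -> DepthSeed:
    """Fuse one new depth measurement z (variance z_var) into the seed.

    This is the product of two 1-D Gaussians, i.e. a scalar Kalman update.
    """
    total = seed.var + z_var
    mean = (z_var * seed.mean + seed.var * z) / total
    var = seed.var * z_var / total
    return DepthSeed(mean, var)


def has_converged(seed: DepthSeed, max_sigma: float) -> bool:
    """Treat a pixel as finished once its standard deviation drops below max_sigma."""
    return math.sqrt(seed.var) < max_sigma
```

A seed that starts near the true depth with a small variance needs fewer measurement updates to fall below the convergence threshold, which is one way a CNN prior could reduce the iteration count; whether the patent uses this exact model is not stated in the excerpt.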

[0044] 2. Pose estimation

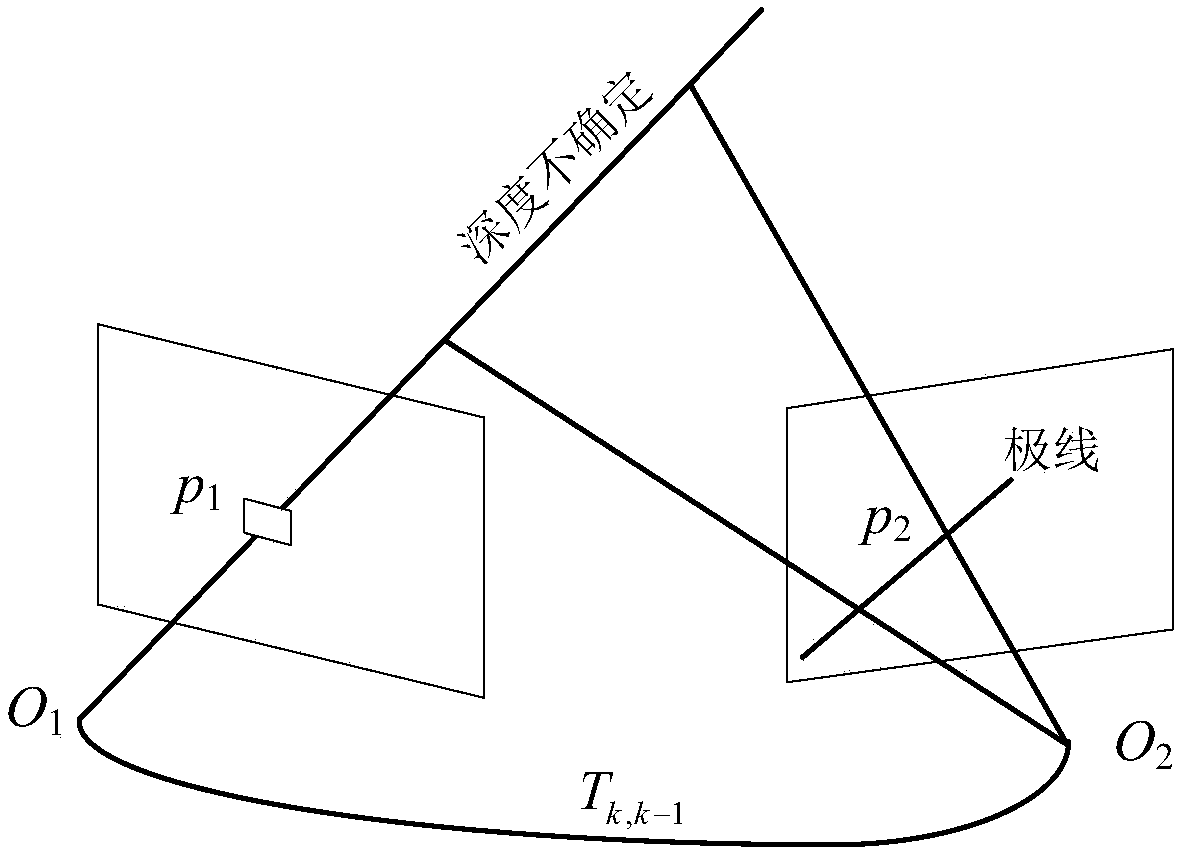

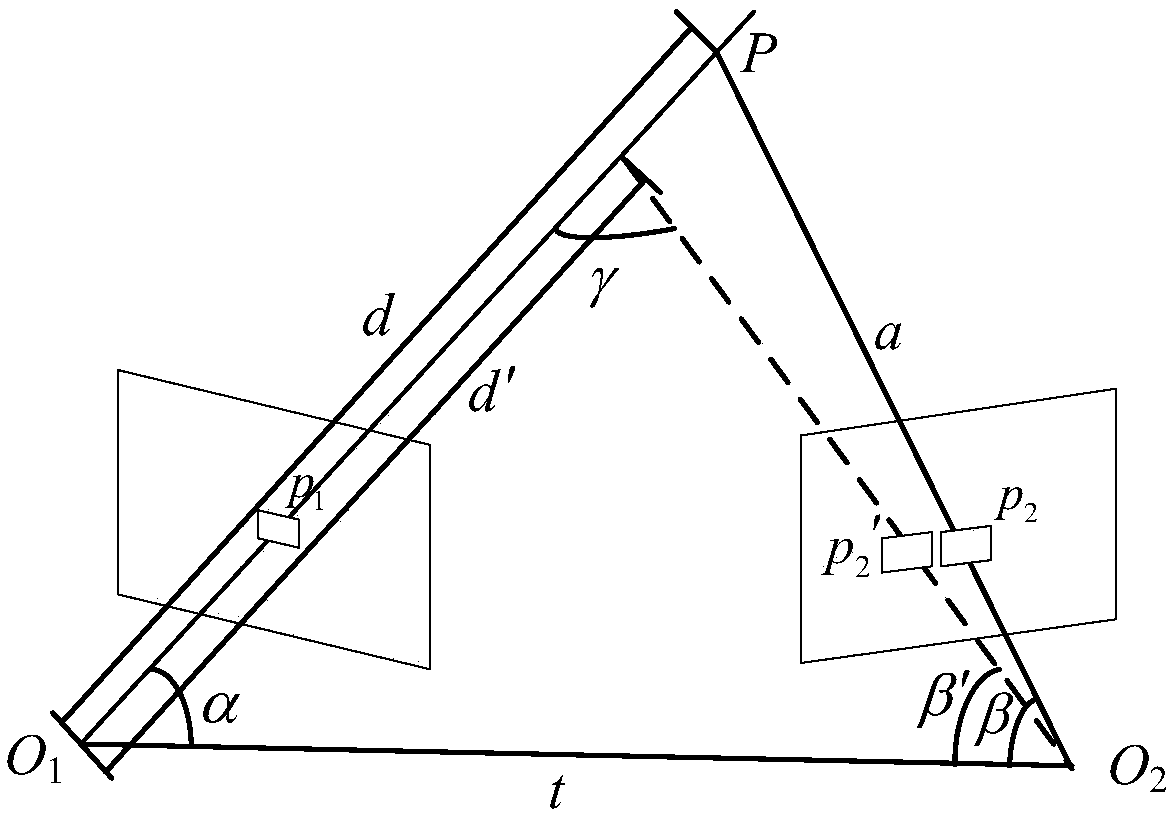

[0045] In the pose (camera rotation and translation) estimation stage, let the image collected at time k be $I_k$, ...
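The excerpt is cut off before the pose model is defined. Assuming a standard pinhole camera with intrinsics `fx, fy, cx, cy` and a relative pose `(R, t)` that maps reference-camera coordinates into the camera at time k (all hypothetical names, not taken from the patent), a reference pixel with known depth can be reprojected into $I_k$ as sketched below. This is the geometric relation that links per-pixel depth and camera pose.

```python
from typing import List, Optional, Sequence, Tuple

Mat3 = Sequence[Sequence[float]]


def mat_vec(m: Mat3, v: Sequence[float]) -> List[float]:
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def reproject(
    u: float, v: float, depth: float,
    fx: float, fy: float, cx: float, cy: float,
    R: Mat3, t: Sequence[float],
) -> Optional[Tuple[float, float]]:
    # Back-project pixel (u, v) at the given depth into reference-camera coordinates.
    p_ref = [(u - cx) / fx * depth, (v - cy) / fy * depth, depth]
    # Rigid-body transform into the camera at time k: p_k = R * p_ref + t.
    p_k = [a + b for a, b in zip(mat_vec(R, p_ref), t)]
    if p_k[2] <= 0:
        return None  # point lies behind camera k, so it has no valid projection
    # Pinhole projection back to pixel coordinates in image I_k.
    return fx * p_k[0] / p_k[2] + cx, fy * p_k[1] / p_k[2] + cy
```

When `t` is zero, the depth factor cancels in the final division, so every depth hypothesis lands on the same pixel in $I_k$. That is why depth cannot be triangulated from a purely rotating camera, the case the summary lists among the problems addressed.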
