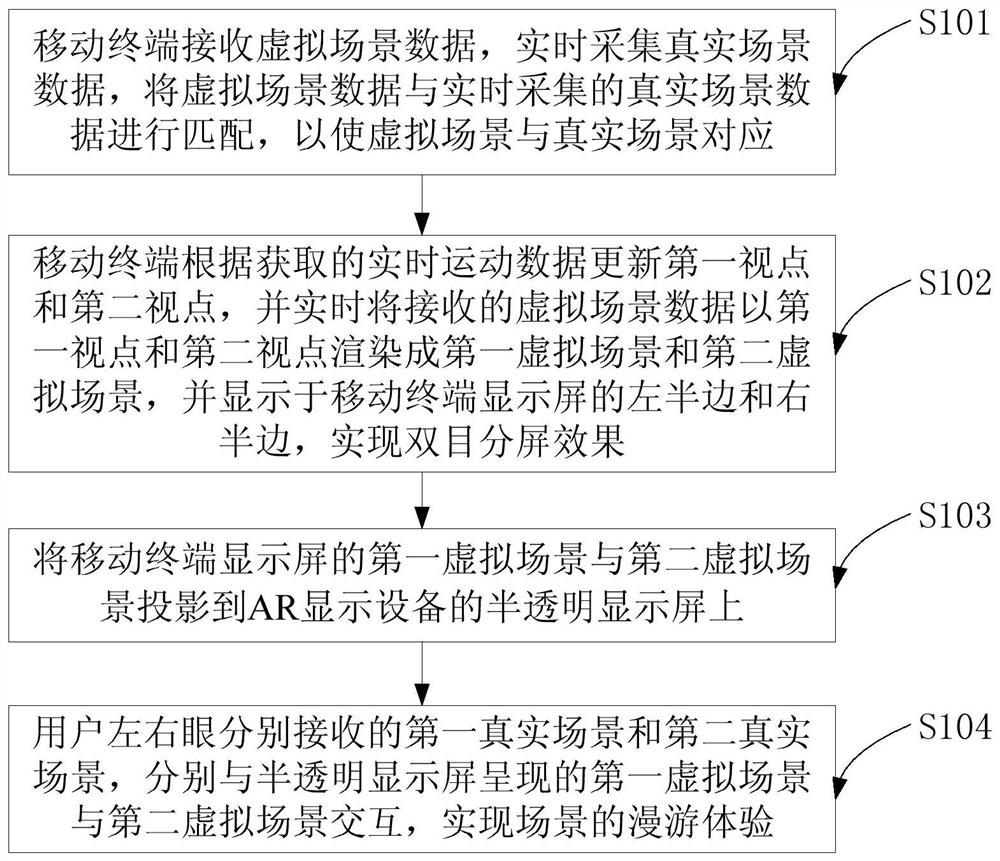

A mixed reality-based scene roaming experience method and experience system

A technology combining mixed reality with real scenes, applied in the field of scene roaming experience methods and systems. It addresses the lack of a three-dimensional effect, the difficulty of understanding the decoration pattern, and users' inability to personally experience the real effect of a designed scene, while avoiding motion sickness.


Embodiment Construction

[0029] The mixed reality-based scene roaming experience system provided by an embodiment of the present invention includes a server and a service …

[0030] The mobile terminal can be a mobile phone, a laptop, a tablet computer, or a similar device equipped with environment perception and motion tracking technology, capable of …

[0037] Specifically, Unity3d can be used both as the rendering engine on the mobile terminal and as the automatic scene construction application on the server. …

[0043] (a) Determine any number of virtual markers in the virtual scene data.

[0046] The identification picture can be an image with complex texture information, and its shape is set according to the virtual marker, …
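The excerpt does not say how "complex texture information" is judged. One common heuristic, assumed here purely for illustration and not taken from the patent, is to measure how strongly pixel intensities change between neighbours: a flat image scores zero, a detailed one scores high. The function name `texture_score` and the list-of-rows grayscale input are hypothetical.

```python
from typing import List


def texture_score(gray: List[List[float]]) -> float:
    """Hypothetical heuristic: mean squared forward-difference gradient.

    `gray` is a grayscale image given as rows of pixel intensities.
    Higher scores suggest more texture detail for image tracking.
    """
    h = len(gray)
    w = len(gray[0]) if h else 0
    if h < 2 or w < 2:
        raise ValueError("image must be at least 2x2 pixels")
    total = 0.0
    count = 0
    # Forward differences in x and y at every pixel that has both neighbours.
    for y in range(h - 1):
        for x in range(w - 1):
            gx = gray[y][x + 1] - gray[y][x]
            gy = gray[y + 1][x] - gray[y][x]
            total += gx * gx + gy * gy
            count += 1
    return total / count


if __name__ == "__main__":
    flat = [[128.0] * 4 for _ in range(4)]
    checker = [[255.0 * ((x + y) % 2) for x in range(4)] for y in range(4)]
    print(texture_score(flat), texture_score(checker))
```

A uniform image scores 0.0 while a checkerboard scores far higher; any acceptance threshold would have to be tuned for the tracking system actually used.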

[0049] (d) Calculate the virtual center of gravity of all virtual markers and the real center of gravity of all real markers.
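Step (d) is plain arithmetic: each center of gravity is the component-wise mean of its marker coordinates, $c = \frac{1}{n}\sum_{i=1}^{n} p_i$. The sketch below assumes markers are given as (x, y, z) tuples and that virtual and real markers are paired one-to-one; `centroid` and `centroid_offset` are hypothetical names. The offset (real centroid minus virtual centroid) is only an assumed next step, since the excerpt is cut off before describing how the two centroids are used.

```python
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def centroid(points: Sequence[Point3]) -> Point3:
    """Center of gravity (arithmetic mean) of a non-empty set of 3D points."""
    if not points:
        raise ValueError("at least one marker is required")
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


def centroid_offset(virtual_pts: Sequence[Point3],
                    real_pts: Sequence[Point3]) -> Point3:
    """Hypothetical helper: translation moving the virtual centroid onto the real one."""
    if len(virtual_pts) != len(real_pts):
        raise ValueError("virtual and real markers must be paired one-to-one")
    vc = centroid(virtual_pts)
    rc = centroid(real_pts)
    return (rc[0] - vc[0], rc[1] - vc[1], rc[2] - vc[2])


if __name__ == "__main__":
    virtual = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0), (2.0, 2.0, 0.0)]
    real = [(1.0, 1.0, 0.5), (3.0, 1.0, 0.5), (1.0, 3.0, 0.5), (3.0, 3.0, 0.5)]
    print(centroid(virtual), centroid(real), centroid_offset(virtual, real))
```

With the four virtual markers above, the virtual centroid is (1.0, 1.0, 0.0), the real centroid is (2.0, 2.0, 0.5), and `centroid_offset` returns (1.0, 1.0, 0.5). A full registration would usually also solve for rotation, which this sketch does not attempt.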

[0059] Compared with the number of facets of the 3D model, the rendering material has a greater impact on the visual effect of the rendering. Therefore, the move ...


© 2025 PatSnap. All rights reserved.