A Vegetation Classification and Identification Method

A vegetation classification and recognition method, applicable to character and pattern recognition, image analysis, and image enhancement, that achieves high-precision classification and recognition of surface vegetation cover with improved accuracy.


Examples

Embodiment 1

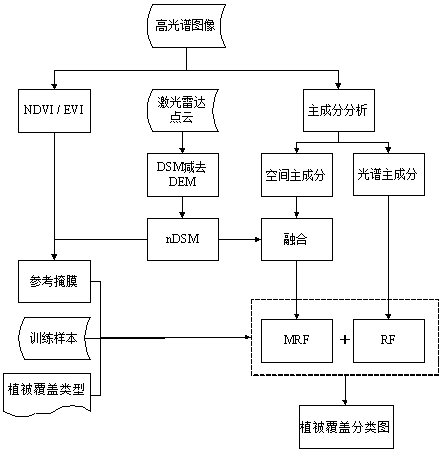

[0032] As shown in Figure 1, a vegetation classification and recognition method based on the fusion of hyperspectral image and lidar point cloud data includes:

[0033] S1: Data preprocessing, including preprocessing of the lidar (Light Detection And Ranging, LiDAR) point cloud and preprocessing of the hyperspectral image (HSI);

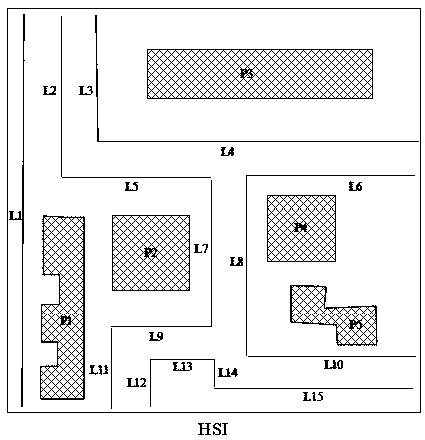

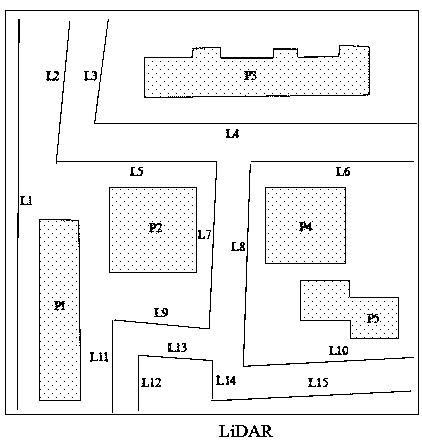

[0034] S2: Registration of the hyperspectral image and lidar point cloud data: by establishing a robust library of feature line/surface registration primitives, the heterogeneous lidar point cloud and hyperspectral image are precisely registered and uniformly geocoded to a defined spatial reference system;

[0035] S3: Use the digital surface model (DSM) and the digital terrain model (DTM) generated from the lidar point cloud to produce a normalized digital surface model (nDSM);

[0036] S4: Use the hyperspectral image to calculate two spectral vegetation ind...
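Step S3 can be illustrated with a minimal sketch. An nDSM is commonly computed as the per-cell difference between the DSM and the DTM, which gives object height above ground. The excerpt does not spell out the formula, so the subtraction, the clamping of negative heights to zero, the function name `compute_ndsm`, and the `nodata` parameter are assumptions made here for illustration, not the patent's stated implementation. The sketch also assumes both rasters are already co-registered on the same grid, as step S2 would provide.

```python
import numpy as np


def compute_ndsm(dsm, dtm, nodata=None):
    """Return a normalized DSM (height above ground) from co-registered rasters.

    Hypothetical helper: the name, the nodata handling, and the clamping of
    negative heights are illustrative assumptions.
    """
    dsm = np.asarray(dsm, dtype=float)
    dtm = np.asarray(dtm, dtype=float)
    if dsm.shape != dtm.shape:
        raise ValueError("DSM and DTM must share the same grid shape")

    # Per-cell height above the terrain surface.
    ndsm = dsm - dtm

    # Mark cells where either input carries the nodata value as NaN.
    if nodata is not None:
        invalid = (dsm == nodata) | (dtm == nodata)
        ndsm[invalid] = np.nan

    # Small negative heights usually come from interpolation noise;
    # clamp them to zero. NaN cells compare False and stay NaN.
    return np.where(ndsm < 0, 0.0, ndsm)


if __name__ == "__main__":
    dsm = [[105.0, 112.5], [100.0, 99.5]]
    dtm = [[100.0, 100.0], [100.0, 100.0]]
    print(compute_ndsm(dsm, dtm))
```

In this toy grid, the cell with a surface elevation of 112.5 m over 100 m terrain yields a 12.5 m object height, which is the kind of structural cue that can help separate trees from low vegetation when combined with spectral features.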
