A deep-learning-based method for fully automatic extraction of cultivated land plots from high-spatial-resolution imagery

The deep-learning method, applied to extracting farmland plots from high-spatial-resolution remote sensing images, addresses the high cost of plot extraction and aims at cost-saving, efficient, and fine-grained extraction.

Embodiment Construction

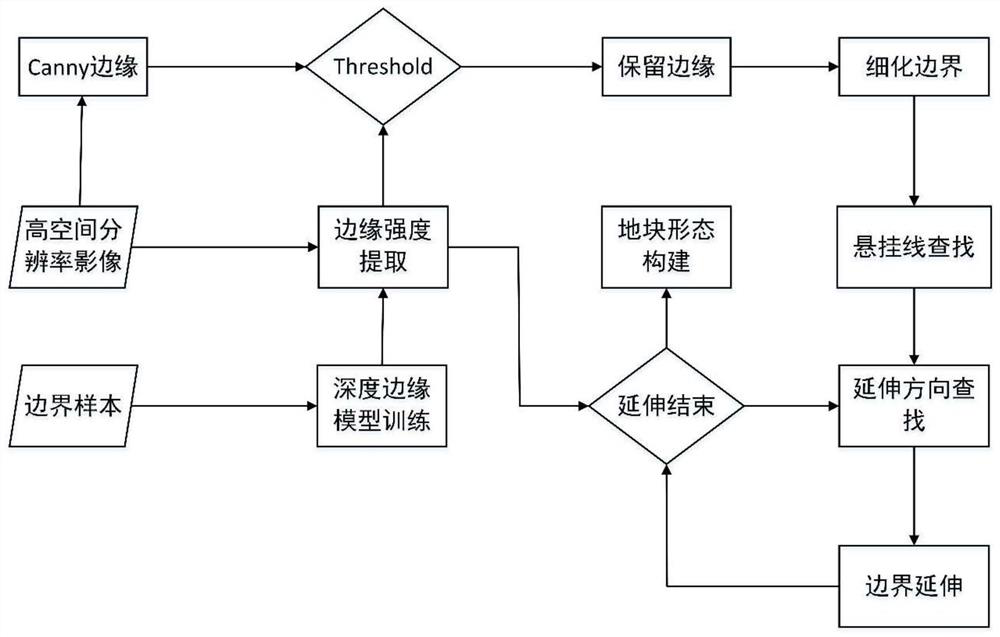

[0022] Figure 1 illustrates the main implementation idea of the present invention. The key technical parts are the automatic retrieval of edge label samples, the training of the deep learning model (HED), and edge post-processing assisted by boundaries extracted with the Canny edge operator. The post-processing includes verifying the accuracy of the extracted edges.
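The text does not say how the accuracy of the extracted edges is verified. As a minimal sketch of one common option, and not necessarily the one used in the invention, the following computes pixel-wise precision, recall, and F1 between a predicted binary edge map and a labelled reference. The function name `edge_scores` and the toy arrays are illustrative assumptions.

```python
import numpy as np


def edge_scores(predicted: np.ndarray, reference: np.ndarray) -> dict:
    """Pixel-wise precision, recall and F1 between two binary edge maps.

    Assumption: any non-zero pixel counts as an edge pixel. No spatial
    tolerance is applied, so a boundary shifted by one pixel is a miss.
    """
    pred = predicted.astype(bool)
    ref = reference.astype(bool)
    true_pos = np.logical_and(pred, ref).sum()
    precision = true_pos / pred.sum() if pred.sum() else 0.0
    recall = true_pos / ref.sum() if ref.sum() else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": float(precision), "recall": float(recall), "f1": float(f1)}


# Hypothetical 8x8 example: the reference boundary runs down column 4.
reference = np.zeros((8, 8), dtype=np.uint8)
reference[:, 4] = 1
predicted = np.zeros((8, 8), dtype=np.uint8)
predicted[:6, 4] = 1   # 6 of the 8 boundary pixels are recovered
predicted[0, 0] = 1    # one false alarm away from the boundary
scores = edge_scores(predicted, reference)
```

A strict pixel-wise comparison penalizes small positional offsets, so in practice a distance tolerance around the reference boundary may be preferable. That choice is left open here.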

[0023] The specific steps are as follows:

[0024] 1) Collect and organize the images of the study area, build an image database, and use the sample labels prepared in the previous stage to build a farmland edge sample database;

[0025] 2) Use the Canny edge operator to extract boundaries from the remote sensing imagery of the study area;

[0026] 3) Using the selected edge label samples and the corresponding image data as constraints, improve the HED model, including adjusting the number of network layers and the pooling size;

[0027] 4) Use the improved HED model to extract the boundary ...
