Deaf-mute sign language interaction method and deaf-mute sign language interaction device

A sign language interaction method and device for deaf-mute people, applicable to educational appliances, instruments, and teaching aids. It addresses the problems that existing solutions cannot comprehensively meet the communication needs of deaf-mute people, are heavy, and make real-time, face-to-face wireless communication difficult, all of which create barriers to communication.


Examples

Embodiment 1

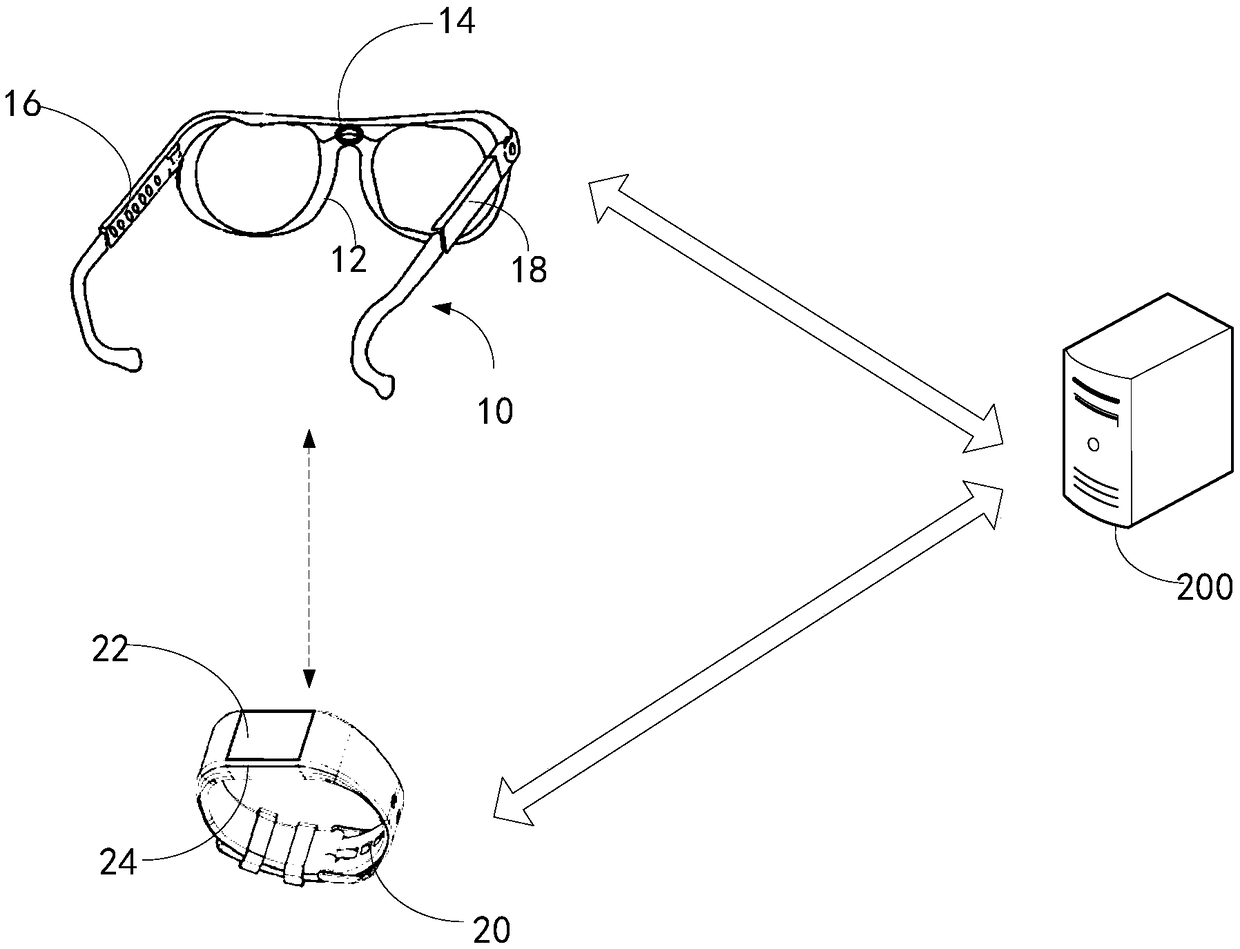

[0041] Referring to Figures 1 and 4, the sign language interaction device for the deaf-mute in this embodiment includes a first wearable device 10 and a second wearable device 20. The first wearable device 10 is provided with a first local area connection module 32, and the second wearable device 20 is provided with a second local area connection module 55. The first local area connection module 32 and the second local area connection module 55 may be WIFI modules, or other local area wireless network modules such as Bluetooth or Zigbee modules.
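The excerpt names the transport (WIFI, Bluetooth, or Zigbee) but not what travels over it. The sketch below, offered only as an illustration, models the link between the first local area connection module 32 and the second local area connection module 55 as a pair of in-memory queues carrying small JSON frames. `LocalLink`, `send`, `receive`, and the `kind`/`payload` frame fields are hypothetical names; a real device would replace the queues with its radio driver.

```python
import json
import queue
from dataclasses import dataclass, field


@dataclass
class LocalLink:
    """Hypothetical stand-in for the local area link (WIFI, Bluetooth or Zigbee).

    One queue per direction; a real device would use its radio driver instead.
    """
    to_second: "queue.Queue[bytes]" = field(default_factory=queue.Queue)
    to_first: "queue.Queue[bytes]" = field(default_factory=queue.Queue)


def send(outbox: "queue.Queue[bytes]", kind: str, payload: str) -> None:
    # Hypothetical frame format: a small JSON object encoded as UTF-8 bytes.
    outbox.put(json.dumps({"kind": kind, "payload": payload}).encode("utf-8"))


def receive(inbox: "queue.Queue[bytes]") -> dict:
    # Waits up to 1 s for a frame, then decodes it back into a dict.
    return json.loads(inbox.get(timeout=1.0).decode("utf-8"))


link = LocalLink()
# First wearable device 10 (module 32) sends recognized sign language text.
send(link.to_second, "sign_text", "hello")
# Second wearable device 20 (module 55) receives it.
msg = receive(link.to_second)
print(msg)  # {'kind': 'sign_text', 'payload': 'hello'}
```

Keeping the frame format independent of the radio means the same messages could be carried over any of the three module types the embodiment lists.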

[0042] The first wearable device 10 also includes an image acquisition module 14, a first wireless connection module 34, a first identification module 35, a first communication module 36, a feature learning module 37, and a first system update unit 38.

[0043] The image acquisition module 14 can be a camera module whose resolution meets the requirements of image analysis. As shown in Figure 1, the...

Embodiment 2

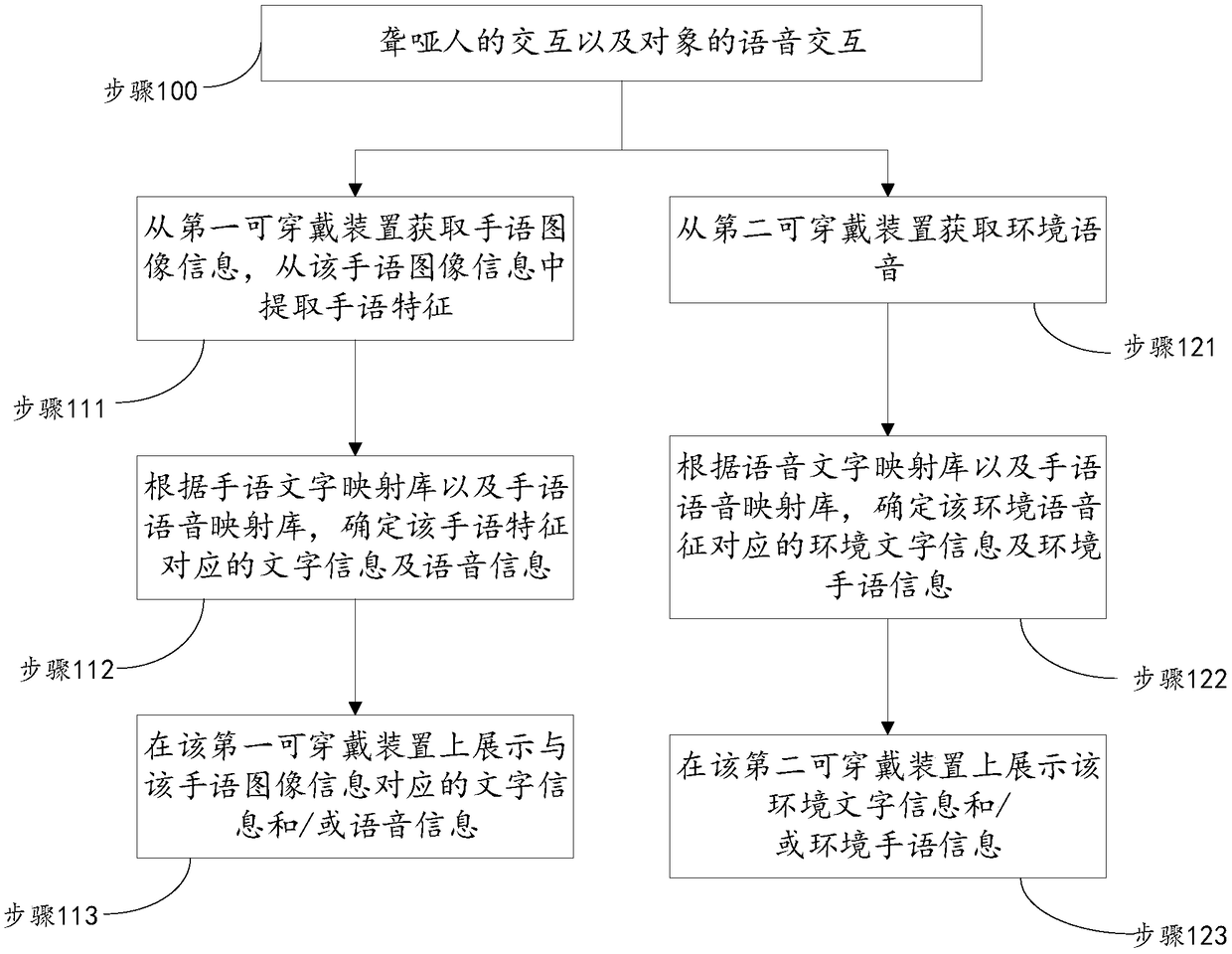

[0056] Referring to Figure 2, the deaf-mute sign language interaction method of this embodiment mainly includes the following steps:

[0057] The processing steps on the side of the first wearable device 10 are:

[0058] Step 111: Obtain sign language image information from the first wearable device 10, and extract sign language features from the sign language image information;

[0059] Step 112: Using the sign language text mapping database and the sign language voice mapping database, determine the text information and voice information corresponding to the sign language features;

[0060] Step 113: Display the text information and/or voice information corresponding to the sign language image information on the first wearable device 10;
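Steps 111 to 113 do not specify how features are extracted or how the mapping databases are searched. A minimal sketch is shown below, assuming an upstream hand tracker has already turned the sign language image into 2-D keypoints (wrist first). Features are the keypoints translated to the wrist and scaled to unit size, and step 112 is treated as a nearest-template search with a distance cutoff. The function names, the `templates`, `text_db` and `voice_db` dictionaries standing in for the sign language text and voice mapping databases, and the 0.5 cutoff are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def extract_features(keypoints: Sequence[Point]) -> List[float]:
    """Step 111 (sketch): keypoints -> position- and scale-invariant vector.

    Assumes keypoints[0] is the wrist, as produced by a hypothetical hand tracker.
    """
    wx, wy = keypoints[0]
    shifted = [(x - wx, y - wy) for x, y in keypoints]
    scale = max(math.hypot(x, y) for x, y in shifted) or 1.0  # avoid divide-by-zero
    return [c / scale for x, y in shifted for c in (x, y)]


def match_sign(features: List[float], templates: Dict[str, List[float]],
               max_distance: float = 0.5) -> Optional[str]:
    """Step 112 (sketch): nearest template by Euclidean distance, or None if too far."""
    best_id, best_dist = None, float("inf")
    for sign_id, template in templates.items():
        dist = math.dist(features, template)
        if dist < best_dist:
            best_id, best_dist = sign_id, dist
    return best_id if best_dist <= max_distance else None


def interpret(keypoints: Sequence[Point], templates: Dict[str, List[float]],
              text_db: Dict[str, str], voice_db: Dict[str, str]) -> Optional[Tuple[str, str]]:
    """Steps 111-113: features -> sign id -> (text, voice clip) to present."""
    sign_id = match_sign(extract_features(keypoints), templates)
    if sign_id is None:
        return None
    return text_db[sign_id], voice_db[sign_id]


# Hypothetical reference signs and mapping-database entries.
templates = {
    "hello": extract_features([(0.0, 0.0), (0.0, 2.0), (1.0, 2.0)]),
    "thanks": extract_features([(0.0, 0.0), (2.0, 0.0), (2.0, -1.0)]),
}
text_db = {"hello": "Hello", "thanks": "Thank you"}
voice_db = {"hello": "hello.wav", "thanks": "thank_you.wav"}

# Same hand shape as "hello", but shifted and twice as large in the image.
observed = [(5.0, 5.0), (5.0, 9.0), (7.0, 9.0)]
print(interpret(observed, templates, text_db, voice_db))  # ('Hello', 'hello.wav')
```

Normalizing by wrist position and hand size lets the same sign match regardless of where it is made in the frame or how far the hand is from the camera. A practical system would likely use richer features (both hands, motion across frames) than this single-frame sketch.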

[0061] The processing steps on the side of the second wearable device 20 are:

[0062] Step 121: Obtain ambient voice from the second wearable device 20;

[0063] Step 122: Determine the environmental text information and...
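Step 121 does not state how the second wearable device 20 decides which ambient sound is speech. One common approach, assumed here rather than taken from the source, is energy-based voice activity detection. The microphone signal is cut into short frames, and runs of frames whose RMS energy exceeds a threshold are treated as speech segments that could be passed to a speech recognizer for step 122. The recognizer is not specified in the excerpt. `frame_rms`, `speech_segments`, the 160-sample frame (10 ms at an assumed 16 kHz sample rate) and the 0.02 threshold are hypothetical choices.

```python
import math
from typing import List, Optional, Sequence, Tuple


def frame_rms(samples: Sequence[float], frame_len: int) -> List[float]:
    """RMS energy of consecutive, non-overlapping frames (a trailing partial frame is dropped)."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return energies


def speech_segments(samples: Sequence[float], frame_len: int = 160,
                    threshold: float = 0.02) -> List[Tuple[int, int]]:
    """Return (start, end) sample ranges made of frames at or above the energy threshold."""
    energies = frame_rms(samples, frame_len)
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, rms in enumerate(energies):
        voiced = rms >= threshold
        if voiced and start is None:
            start = i * frame_len  # speech begins at this frame
        elif not voiced and start is not None:
            segments.append((start, i * frame_len))  # speech ended before this frame
            start = None
    if start is not None:  # speech continues to the last full frame
        segments.append((start, len(energies) * frame_len))
    return segments


# Synthetic ambient audio: silence, a burst of "speech", silence.
audio = [0.0] * 320 + [0.1, -0.1] * 240 + [0.0] * 320
print(speech_segments(audio))  # [(320, 800)]
```

A fixed threshold is the simplest choice; in noisy surroundings an adaptive threshold that tracks the background noise level would likely work better.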

