New video semantic extraction method based on deep learning model

A video semantic extraction technology based on deep learning, applied in fields such as character and pattern recognition, instruments, and computer components, with the effect of improving accuracy.

Examples

Embodiment Construction

[0038] To give a clearer understanding of the technical features, purposes, and effects of the present invention, specific implementations of the invention are described below with reference to the accompanying drawings.

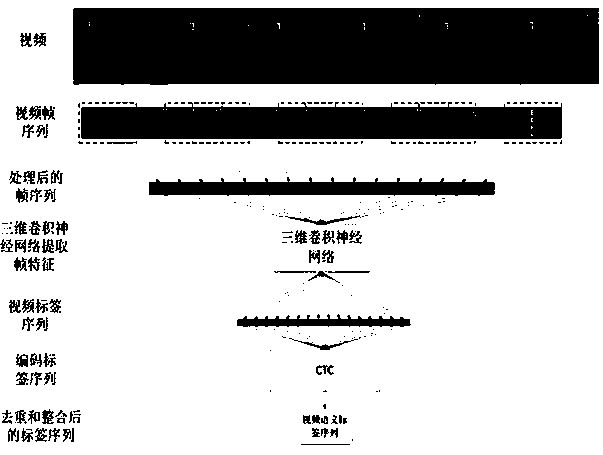

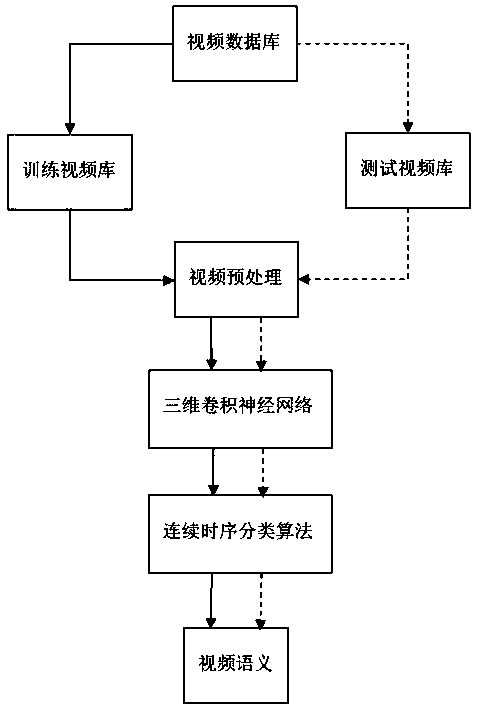

[0039] A schematic flow chart of the new video semantic extraction method based on a deep learning model proposed by the present invention is shown in Figure 1. The method includes the following steps:

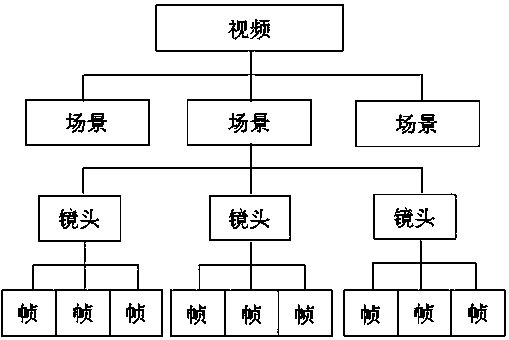

[0040] S1. Based on the physical structure of the video, semantically structured video data is obtained by combining and segmenting the video frame sequence. The physical structure of video data is, from top to bottom: video, scene, shot, and frame; a schematic diagram of this structure is shown in Figure 2. With reference to this physical structure, the semantic structure of video is defined, from top to bottom, as: video, behavior, sub-action, and frame; its structural diagram is shown in Figure 3; ...
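The four-level semantic structure described in S1 (video, behavior, sub-action, frame) can be pictured as a nested container. The following is a minimal sketch only: the class names, the `chunk` helper, the `structure_video` function, and the fixed-length grouping rule are all hypothetical and introduced for illustration. The source does not state how the boundaries between sub-actions or behaviors are chosen.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SubAction:
    frames: List[int]  # indices of the frames that make up this sub-action


@dataclass
class Behavior:
    sub_actions: List[SubAction] = field(default_factory=list)


@dataclass
class Video:
    behaviors: List[Behavior] = field(default_factory=list)


def chunk(items, size):
    """Split a list into consecutive pieces of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def structure_video(num_frames, frames_per_sub_action, sub_actions_per_behavior):
    """Group frame indices bottom-up: frames -> sub-actions -> behaviors -> video.

    Fixed-length grouping is an illustrative assumption, not the patent's
    segmentation rule, which is not given in the text above.
    """
    frame_ids = list(range(num_frames))
    sub_actions = [SubAction(f) for f in chunk(frame_ids, frames_per_sub_action)]
    behaviors = [Behavior(s) for s in chunk(sub_actions, sub_actions_per_behavior)]
    return Video(behaviors)


# Example: 10 frames, 2 frames per sub-action, 3 sub-actions per behavior.
video = structure_video(num_frames=10, frames_per_sub_action=2,
                        sub_actions_per_behavior=3)
```

Keeping each level as its own type mirrors the top-down definition in S1, so a real segmentation rule could later replace the fixed-length grouping without changing the container layout.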

