A robot-oriented multi-modal fusion emotion computing method and system

An affective computing and multimodal technology applied in the information field. It addresses the lack of multimodal emotion computing for robots and the lack of a method for fusing the modalities.

Embodiment Construction

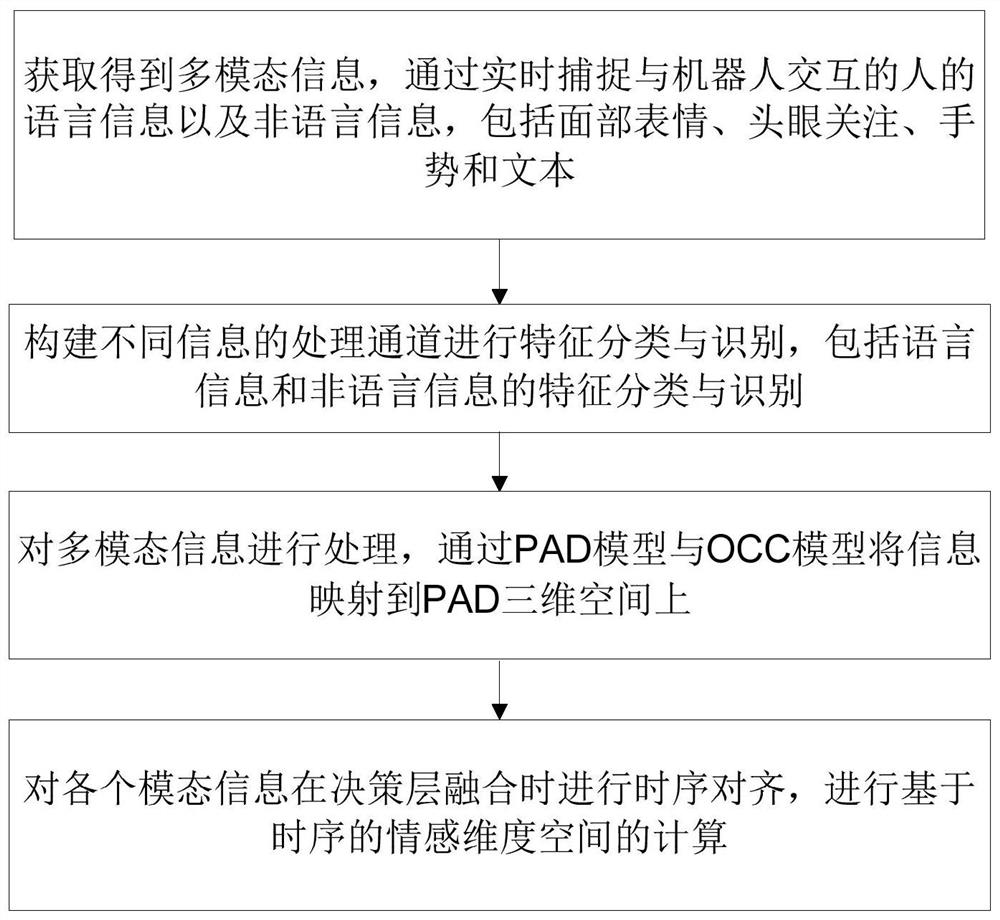

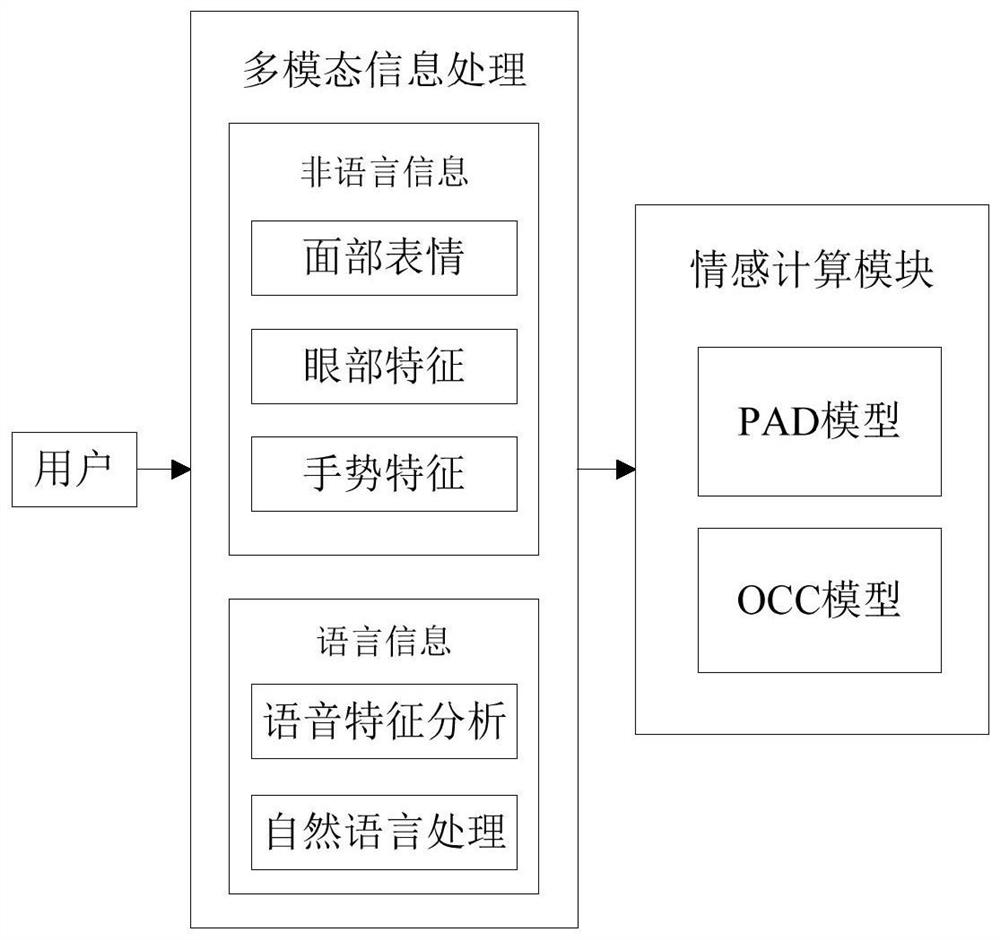

[0049] Referring to Figure 1 and Figure 2, the present invention is a robot-oriented multimodal fusion emotion computing method comprising the following steps:

[0050] Step 1. Acquire multimodal information by capturing, in real time, the linguistic and non-linguistic information of the person interacting with the robot, including facial expressions, head and eye attention, gestures, and text;

[0051] Step 2. Construct separate information-processing channels for feature classification and recognition, covering both linguistic and non-linguistic information;

[0052] Step 3. Process the multimodal information and map it into the three-dimensional PAD space using the PAD model (P: pleasure, A: arousal, D: dominance) and the OCC model;

[0053] Step 4. Temporally align the information from each modality when fusing at the decision layer, and perform calculation of emotional dimension space bas...
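Steps 3 and 4 can be illustrated with a minimal sketch. The text of Step 4 is truncated, so the fusion rule here is an assumption rather than the patent's method: each modality channel is assumed to have already produced a PAD vector with a timestamp and a confidence, alignment is done by grouping estimates into fixed time windows, and fusion is a confidence-weighted average per window. The names `PadEstimate`, `align_by_window`, `fuse_window`, and `fuse_decisions`, the window length, and the [-1, 1] value range are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

Pad = Tuple[float, float, float]  # (pleasure, arousal, dominance), assumed in [-1, 1]


@dataclass(frozen=True)
class PadEstimate:
    """Output of one modality channel (hypothetical structure)."""
    modality: str        # e.g. "face", "gesture", "text"
    timestamp: float     # seconds since the interaction started
    pad: Pad             # that channel's point in PAD space
    confidence: float = 1.0


def align_by_window(estimates: List[PadEstimate],
                    window: float = 1.0) -> Dict[int, List[PadEstimate]]:
    """Temporal alignment (assumed scheme): bucket estimates into fixed windows."""
    buckets: Dict[int, List[PadEstimate]] = defaultdict(list)
    for est in estimates:
        buckets[int(est.timestamp // window)].append(est)
    return dict(sorted(buckets.items()))


def fuse_window(estimates: List[PadEstimate]) -> Pad:
    """Decision-level fusion (assumed rule): confidence-weighted mean of PAD vectors."""
    total = sum(est.confidence for est in estimates)
    if total <= 0:
        raise ValueError("at least one estimate needs positive confidence")
    p, a, d = (
        sum(est.confidence * est.pad[i] for est in estimates) / total
        for i in range(3)
    )
    return (p, a, d)


def fuse_decisions(estimates: List[PadEstimate],
                   window: float = 1.0) -> Dict[int, Pad]:
    """Align all modality outputs in time, then fuse each window into one PAD point."""
    aligned = align_by_window(estimates, window)
    return {index: fuse_window(group) for index, group in aligned.items()}


if __name__ == "__main__":
    observations = [
        PadEstimate("face", 0.2, (0.8, 0.4, 0.2)),
        PadEstimate("text", 0.7, (0.4, 0.0, 0.2)),
        PadEstimate("gesture", 1.3, (-0.5, 0.5, 0.0), confidence=2.0),
    ]
    for index, pad in fuse_decisions(observations).items():
        print(index, pad)
```

Fixed windows keep the example short; interpolating each channel onto a common clock would be another reasonable alignment choice when modalities are sampled at very different rates.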
