A virtual cache sharing method and system

A virtual cache sharing method and system, applied in the field of virtual caching and cache technology, solves the problem that cache content cannot be shared between networks and is simple and convenient to implement.



Problems solved by technology

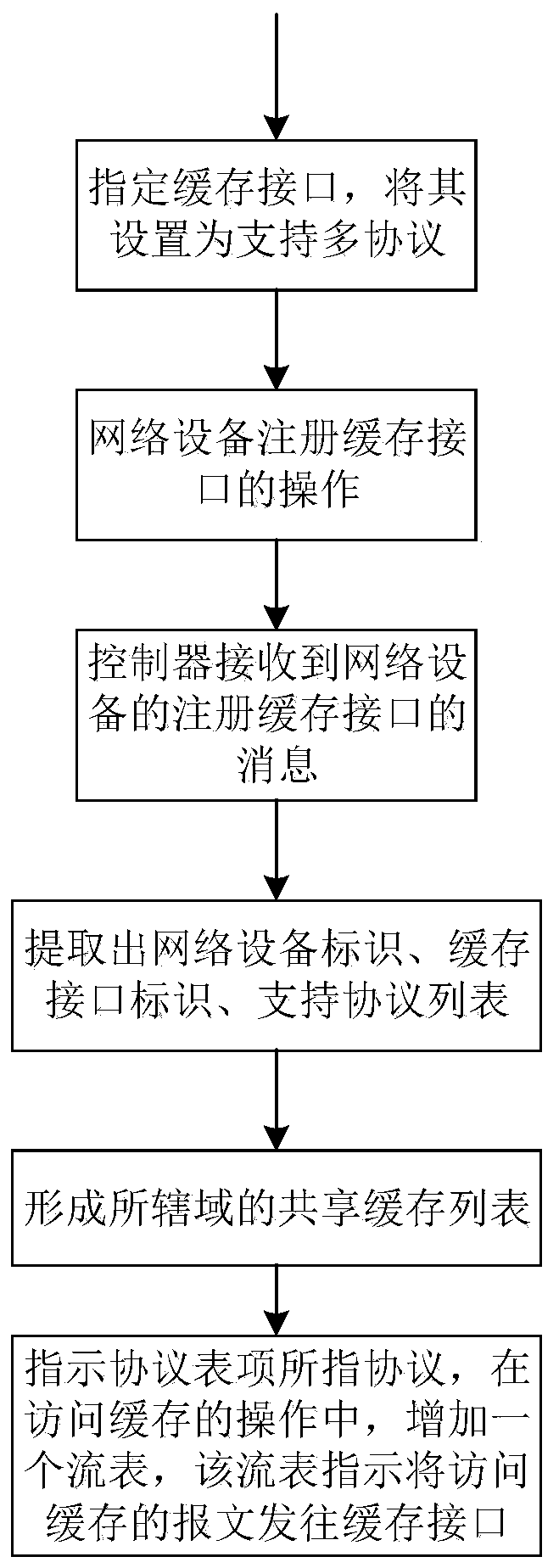


Embodiment

[0116] As shown in Figure 7, two virtual networks are formed on this network: one supports NDN (Named Data Networking), and the other supports MF (MobilityFirst).

[0117] In this diagram, referring to Figure 6, device 1, device 2 and device 3 have each registered a cache interface and have the flow entry shown in Figure 5 installed. The present invention is described below in conjunction with this scenario.
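The setup in [0117] (each device registers a cache interface, then holds a flow entry that uses it) can be modelled as a small data structure. This is a minimal sketch under stated assumptions: the text does not give the fields of the Figure 5 flow entry, so the entry below matches only on a hypothetical `protocol` field, and the interface name `cache0` and the classes `Device` and `FlowEntry` are illustrative inventions, not the patent's terminology.

```python
from dataclasses import dataclass, field


@dataclass
class FlowEntry:
    # Hypothetical match field: the real Figure 5 entry may match on more fields.
    match_protocol: str
    # Port that matching packets are sent out of (here, the cache interface).
    out_port: str


@dataclass
class Device:
    name: str
    ports: set = field(default_factory=set)
    flow_table: list = field(default_factory=list)

    def register_cache_interface(self, port: str = "cache0") -> str:
        """Register a cache interface (hypothetical name) on this device."""
        self.ports.add(port)
        return port

    def install_flow_entry(self, entry: FlowEntry) -> None:
        """Install an entry, refusing ones that point at an unregistered port."""
        if entry.out_port not in self.ports:
            raise ValueError(f"{self.name}: unknown port {entry.out_port}")
        self.flow_table.append(entry)


def build_scenario() -> list:
    """Devices 1-3 each register a cache interface and get an NDN flow entry."""
    devices = [Device(f"device {i}") for i in (1, 2, 3)]
    for dev in devices:
        cache_port = dev.register_cache_interface()
        dev.install_flow_entry(FlowEntry(match_protocol="NDN", out_port=cache_port))
    return devices


for dev in build_scenario():
    print(dev.name, dev.flow_table)
```

Registering the interface before installing the entry mirrors the order in the text: a flow entry can only forward to an interface the device already knows about.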

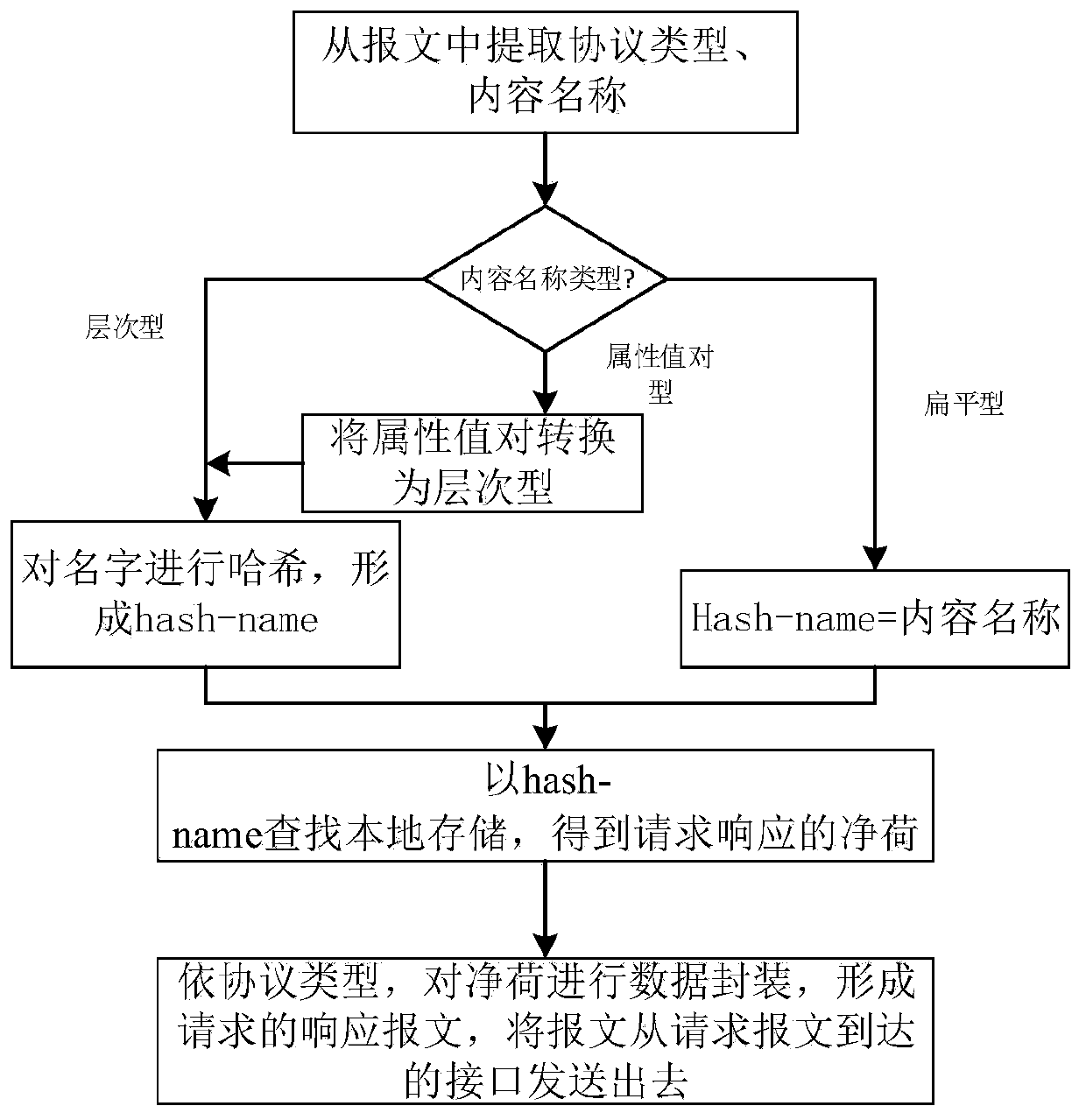

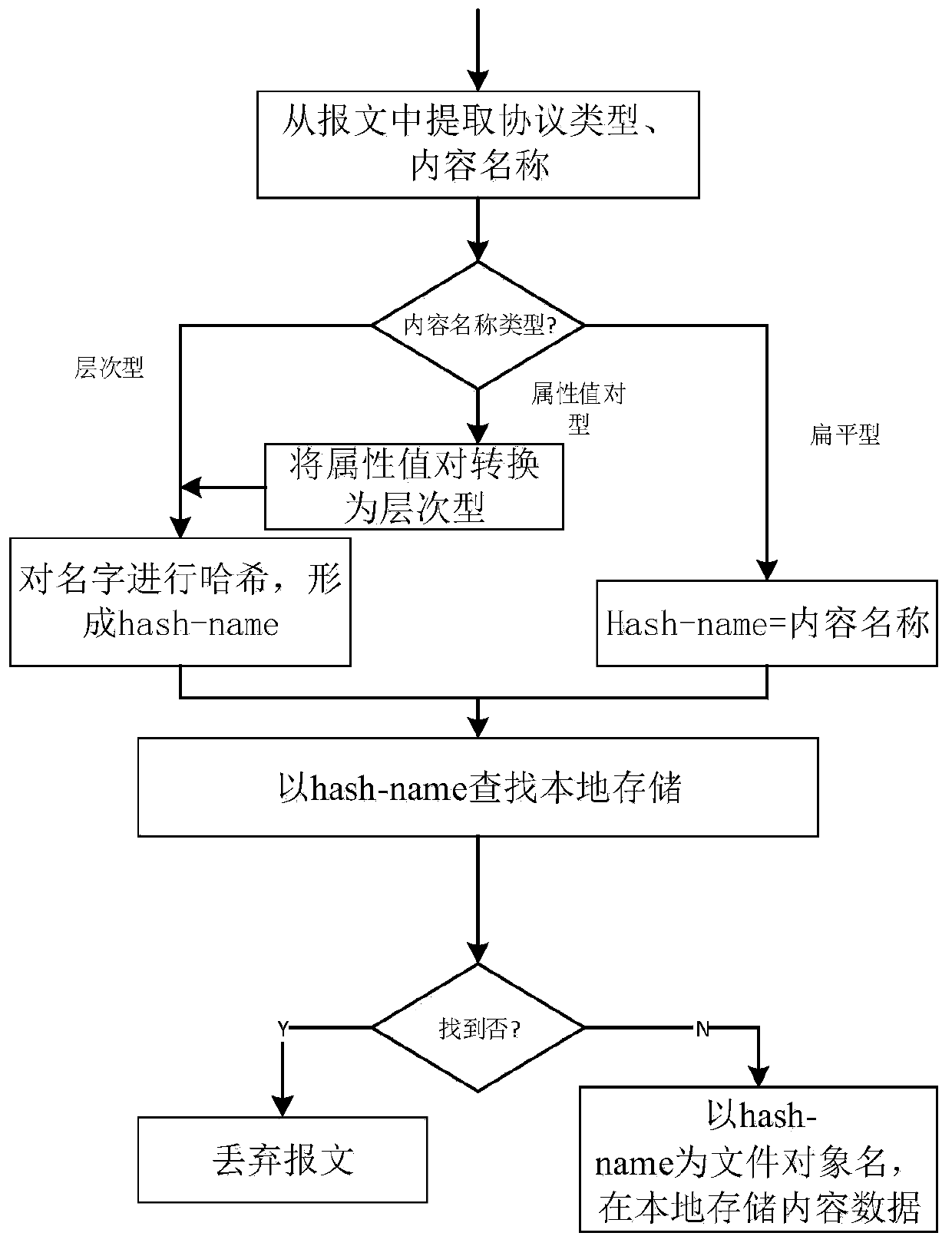

[0118] Assume that an NDN content request message arrives at device 1 with the content name "/publisherA/content/photo1". According to the flow entry shown in Figure 5, device 1 sends the content request packet to the storage device through the cache interface.
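The forwarding step in [0118] amounts to a flow-table lookup on device 1. The sketch below assumes, as a simplification, that entries match only on a hypothetical `protocol` tag and that the first matching entry in list order wins (real switches usually order entries by priority). The port name `cache0` and the helper `forward` are illustrative, not taken from the patent.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    protocol: str       # assumed tag identifying the virtual network's protocol
    content_name: str


@dataclass(frozen=True)
class FlowEntry:
    match_protocol: str
    out_port: str


def forward(packet: Packet, flow_table: list) -> Optional[str]:
    """Return the output port of the first matching entry, or None on a miss."""
    for entry in flow_table:
        if entry.match_protocol == packet.protocol:
            return entry.out_port
    return None  # table miss: a real device would apply its default policy


# Device 1's table: NDN requests go to the (hypothetically named) cache interface.
device1_table = [FlowEntry("NDN", "cache0")]
request = Packet("NDN", "/publisherA/content/photo1")
print(forward(request, device1_table))  # cache0
```

Because the match depends only on the protocol, every NDN request, whatever its content name, is steered to the storage device; deciding whether the content is actually cached is left to the storage side.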

[0119] The storage device processes the request as shown in Figure 2. Assume that hashing "/publisherA/content/photo1" yields hash-name="12345" (a simplified value used for convenience of illustration). After searching locally, the storage device finds the file named "12345" and reads the content o...
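The storage-side step in [0119] (hash the content name, then look for a local file with that hash-name) can be sketched as follows. The source only shows the placeholder value "12345", so the choice of SHA-256 here is an assumption, and the in-memory dictionary stands in for files on disk; `StorageDevice` and `hash_name` are hypothetical names.

```python
import hashlib
from typing import Optional


def hash_name(content_name: str) -> str:
    """Map a content name to a flat file name (SHA-256 is an assumed choice)."""
    return hashlib.sha256(content_name.encode("utf-8")).hexdigest()


class StorageDevice:
    def __init__(self) -> None:
        # Local store keyed by hash-name; stands in for files on disk.
        self.files: dict = {}

    def store(self, content_name: str, data: bytes) -> None:
        self.files[hash_name(content_name)] = data

    def lookup(self, content_name: str) -> Optional[bytes]:
        """Hash the requested name and search locally for the matching file."""
        return self.files.get(hash_name(content_name))


storage = StorageDevice()
storage.store("/publisherA/content/photo1", b"<photo bytes>")
print(storage.lookup("/publisherA/content/photo1"))  # b'<photo bytes>'
print(storage.lookup("/publisherA/content/photo2"))  # None
```

Keying files by a hash of the name gives fixed-length, filesystem-safe file names, and any device that forwards the same name reaches the same stored file.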
