A two-person interaction behavior recognition method based on prior knowledge

A prior-knowledge-based recognition technique in the field of two-person interactive behavior recognition. It addresses insufficient recognition accuracy for two-person and even single-person behavior, low recognition accuracy for two-person interactive behavior, and the inability to extract key features effectively. It reduces a large amount of redundant information, improves recognition performance, and has low power consumption.

Detailed Description of the Embodiments

[0035] The present invention will be described in detail below in conjunction with the accompanying drawings and examples.

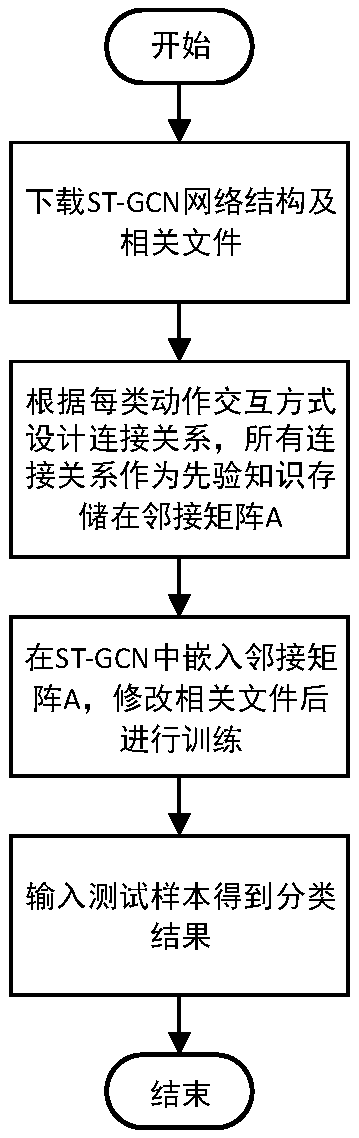

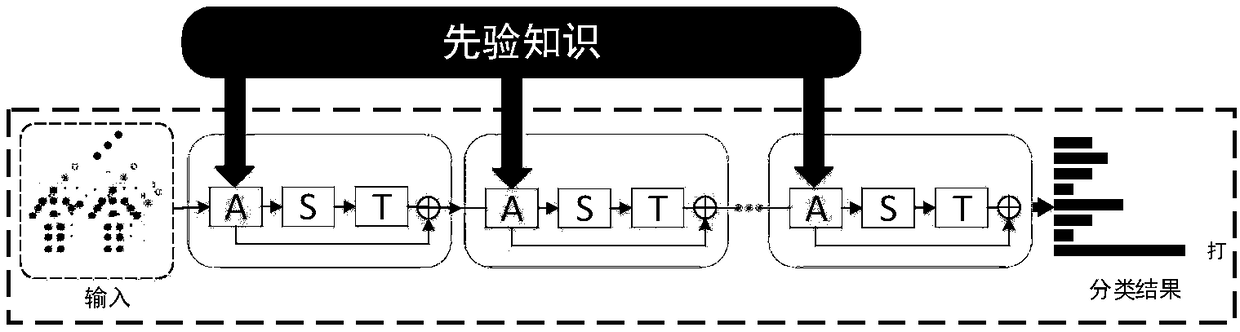

[0036] Referring to Figure 1, the implementation steps of the present invention are as follows:

[0037] Step 1: prepare the network structure files and related files of the ST-GCN behavior recognition network.

[0038] 1a) Download the relevant files of the ST-GCN behavior recognition network from GitHub. These include: the structure files graph.py, tgcn.py and st_gcn.py; the parameter setting files train.yaml and test.yaml; the code file ntu_gendata.py, which generates the data sets and data labels; the training code files processor.py, recognition.py and io.py; the visualization parameter setting file demo.yaml; and the visualization code file demo.py;
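Step 1a amounts to obtaining a local copy of the ST-GCN repository that contains every listed file. The following Python script is a minimal sketch for confirming that a checkout is complete. The checkout directory name `st-gcn` and the group labels are assumptions. Because the files' locations inside the repository are not given here, the script searches the whole directory tree by file name rather than by path.

```python
import os
import sys
from typing import Dict, List

# File names listed in step 1a, grouped by role. Group labels are illustrative.
REQUIRED_FILES: Dict[str, List[str]] = {
    "structure": ["graph.py", "tgcn.py", "st_gcn.py"],
    "parameters": ["train.yaml", "test.yaml"],
    "data generation": ["ntu_gendata.py"],
    "training": ["processor.py", "recognition.py", "io.py"],
    "visualization parameters": ["demo.yaml"],
    "visualization code": ["demo.py"],
}


def find_missing_files(root: str) -> Dict[str, List[str]]:
    """Return, per group, the required file names not found anywhere under root."""
    present = set()
    # Collect every file name in the tree; a nonexistent root yields nothing.
    for _dirpath, _dirnames, filenames in os.walk(root):
        present.update(filenames)
    missing: Dict[str, List[str]] = {}
    for group, names in REQUIRED_FILES.items():
        absent = [name for name in names if name not in present]
        if absent:
            missing[group] = absent
    return missing


if __name__ == "__main__":
    # "st-gcn" is a hypothetical default checkout directory.
    root = sys.argv[1] if len(sys.argv) > 1 else "st-gcn"
    missing = find_missing_files(root)
    if missing:
        for group, names in missing.items():
            print(f"missing {group} files: {', '.join(names)}")
    else:
        print("all step-1a files found")
```

Matching by name only confirms that each file exists. A repository may contain several `train.yaml` files under different configuration folders, and this check cannot tell which one is intended.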

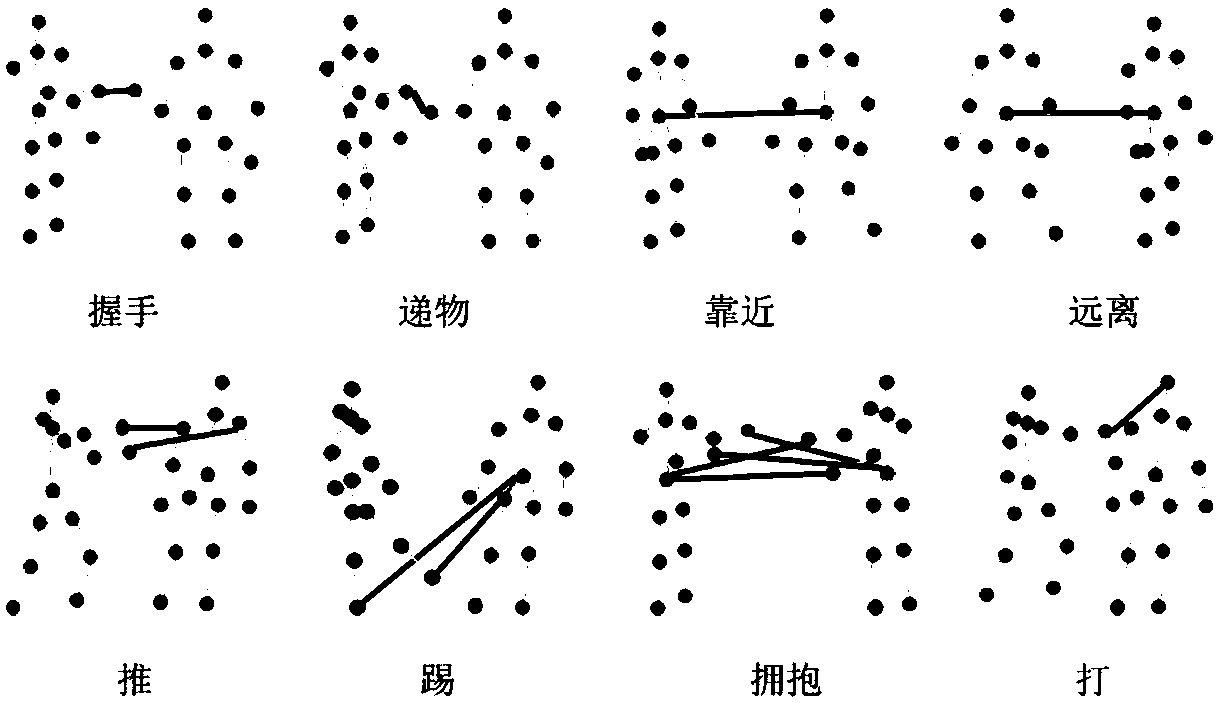

[0039] 1b) Download the two-person interaction data set from the SBU website. The SBU data set contains 282 interaction samples covering 8 categories of two-person interaction: "handshake", "handing objects",...
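Before the SBU samples can be fed to ST-GCN, each two-person sequence must be arranged in a fixed-size array. ST-GCN's own data generation writes samples in (N, C, T, V, M) order: coordinates, frames, joints, persons. The sketch below packs two per-person sequences into one (C, T, V, M) sample. It assumes 15 joints per person with 3D coordinates for SBU and a hypothetical padding length of 64 frames. None of these values is stated in the source, and reading the raw SBU files is not shown.

```python
import numpy as np

# Assumptions (not stated in the source): 15 joints per person with (x, y, z)
# coordinates, and sequences zero-padded or truncated to a fixed length.
NUM_JOINTS = 15    # V, assumed joints per person in SBU
NUM_COORDS = 3     # C, x/y/z
NUM_PERSONS = 2    # M, two-person interaction
MAX_FRAMES = 64    # T, hypothetical padding length


def pack_interaction(person_a: np.ndarray, person_b: np.ndarray,
                     max_frames: int = MAX_FRAMES) -> np.ndarray:
    """Stack two (T, V, C) skeleton sequences into one (C, max_frames, V, M) sample."""
    for seq in (person_a, person_b):
        if seq.ndim != 3 or seq.shape[1:] != (NUM_JOINTS, NUM_COORDS):
            raise ValueError(f"expected (T, {NUM_JOINTS}, {NUM_COORDS}), got {seq.shape}")
    if person_a.shape[0] != person_b.shape[0]:
        raise ValueError("both persons must have the same number of frames")
    frames = min(person_a.shape[0], max_frames)  # truncate long sequences
    sample = np.zeros((NUM_COORDS, max_frames, NUM_JOINTS, NUM_PERSONS), dtype=np.float32)
    for m, seq in enumerate((person_a, person_b)):
        # (T, V, C) -> (C, T, V) for the kept frames; later frames stay zero.
        sample[:, :frames, :, m] = seq[:frames].transpose(2, 0, 1)
    return sample


if __name__ == "__main__":
    a = np.random.rand(40, NUM_JOINTS, NUM_COORDS)
    b = np.random.rand(40, NUM_JOINTS, NUM_COORDS)
    batch = np.stack([pack_interaction(a, b)])  # (N, C, T, V, M)
    print(batch.shape)  # (1, 3, 64, 15, 2)
```

Keeping both persons in the last axis lets a single sample carry the whole interaction. Zero padding gives every sample the same temporal length, so samples can be batched.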
