Patents

Literature

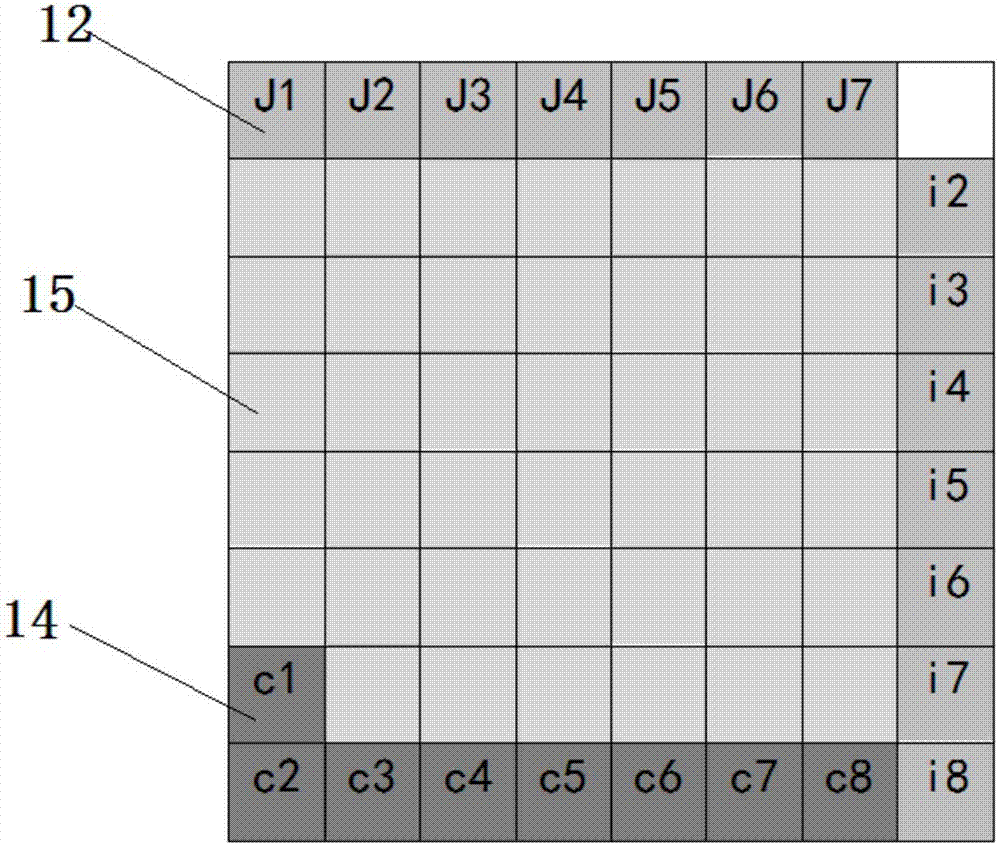

395 results about How to "Reduce redundant information" patented technology

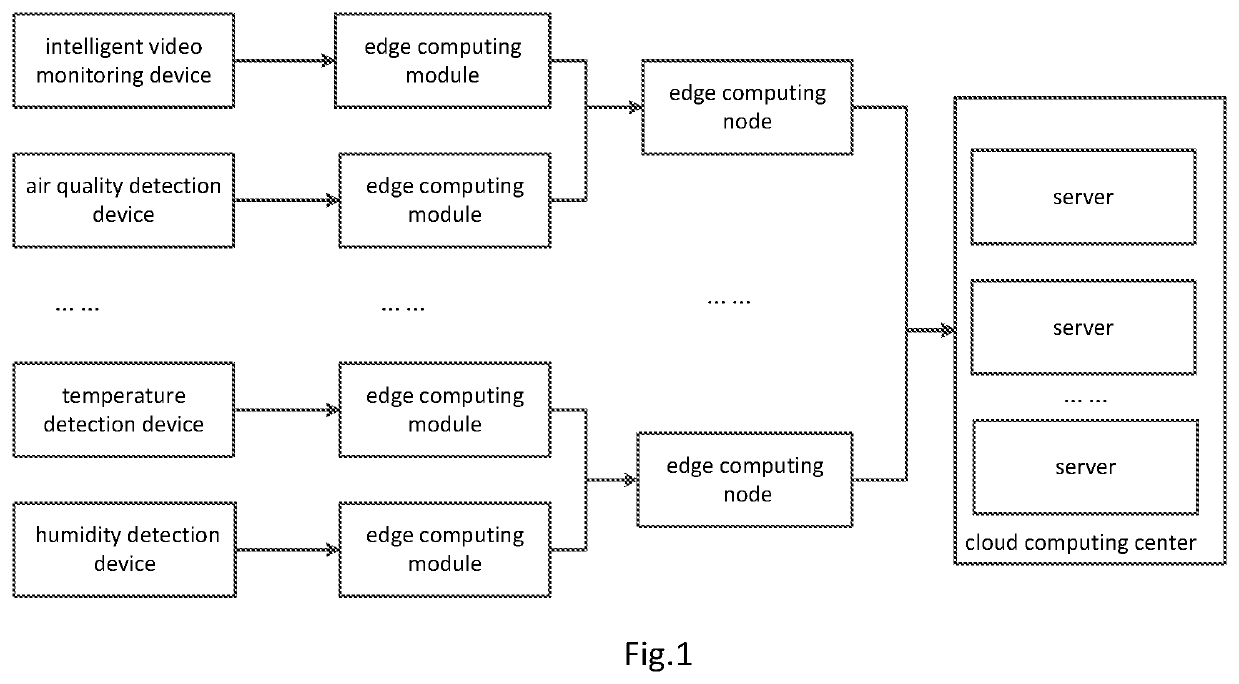

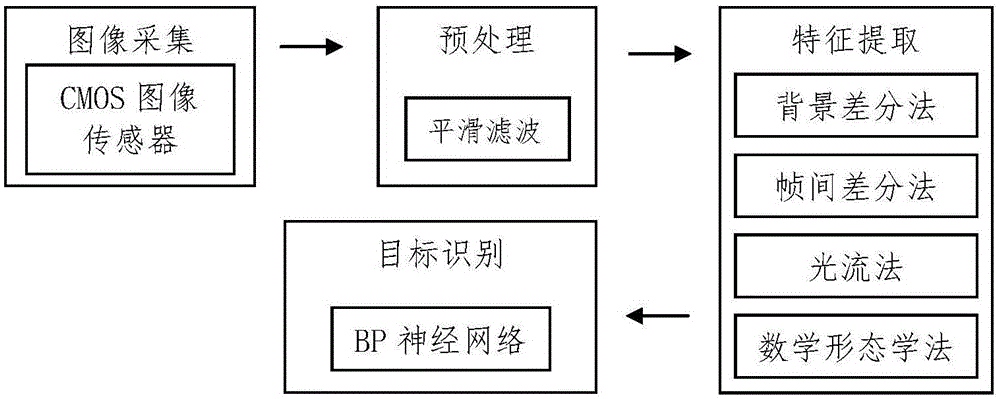

Fine granularity real-time supervision system based on edge computing

Active | US20210096911A1 | Reduce redundant information | Good scheduling characteristic | Video data indexing | Program initiation/switching | Video monitoring | Computing center

The present invention relates to the field of security technology, and in particular to a fine granularity real-time supervision system based on edge computing. The system comprises an intelligent video monitoring device, an edge computing module, an edge computing node, and a cloud computing center. The system can reduce the redundant information of the system and realize fine granularity management.

Owner:ESSENCE INFORMATION TECH CO LTD

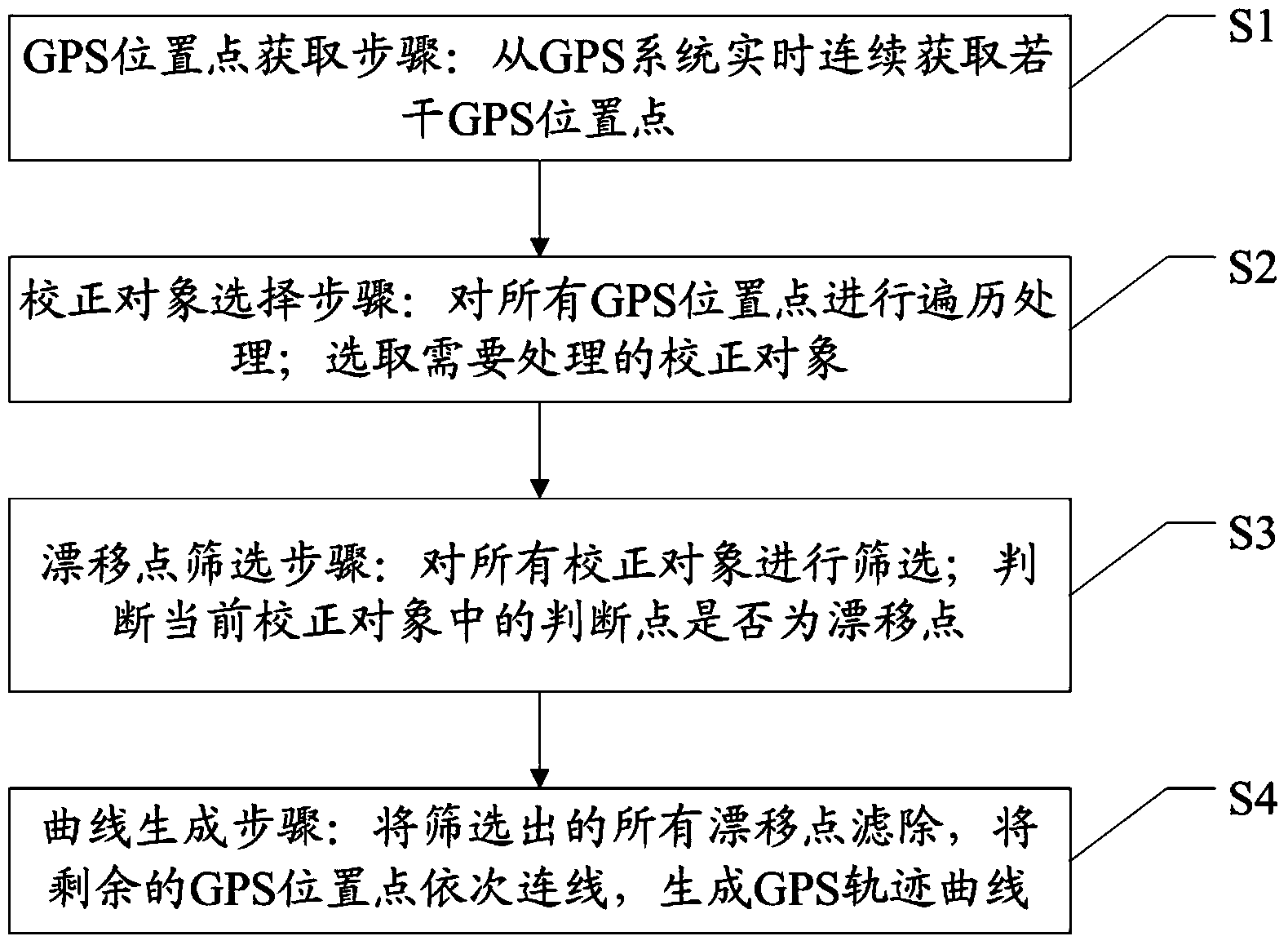

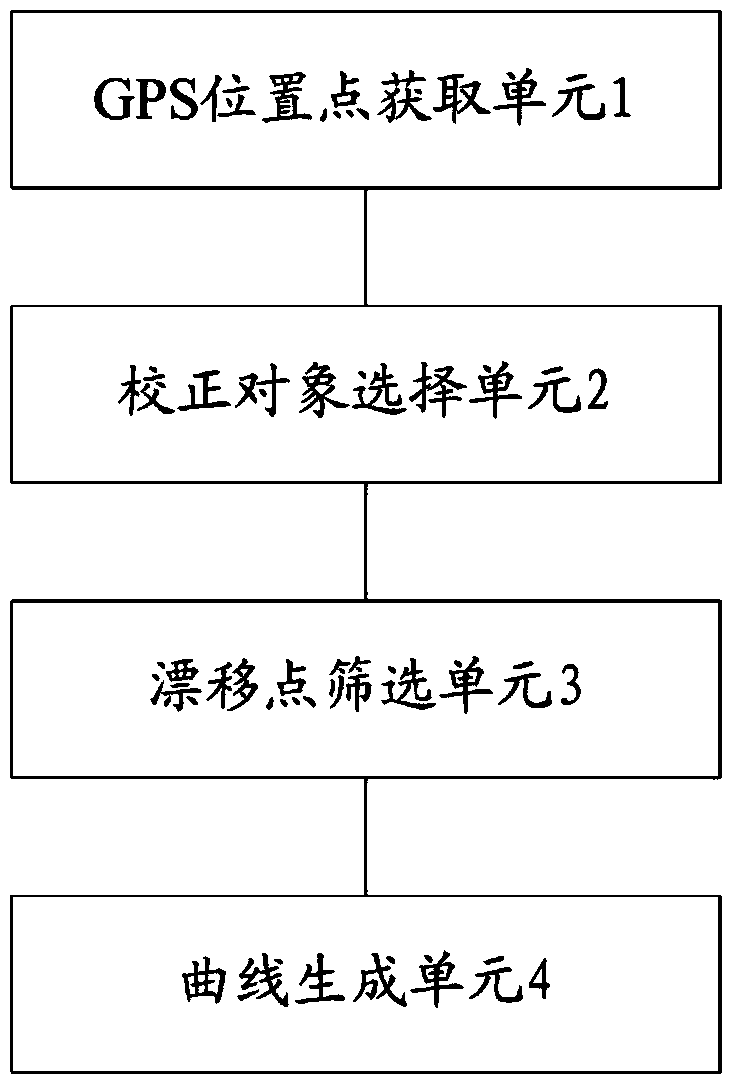

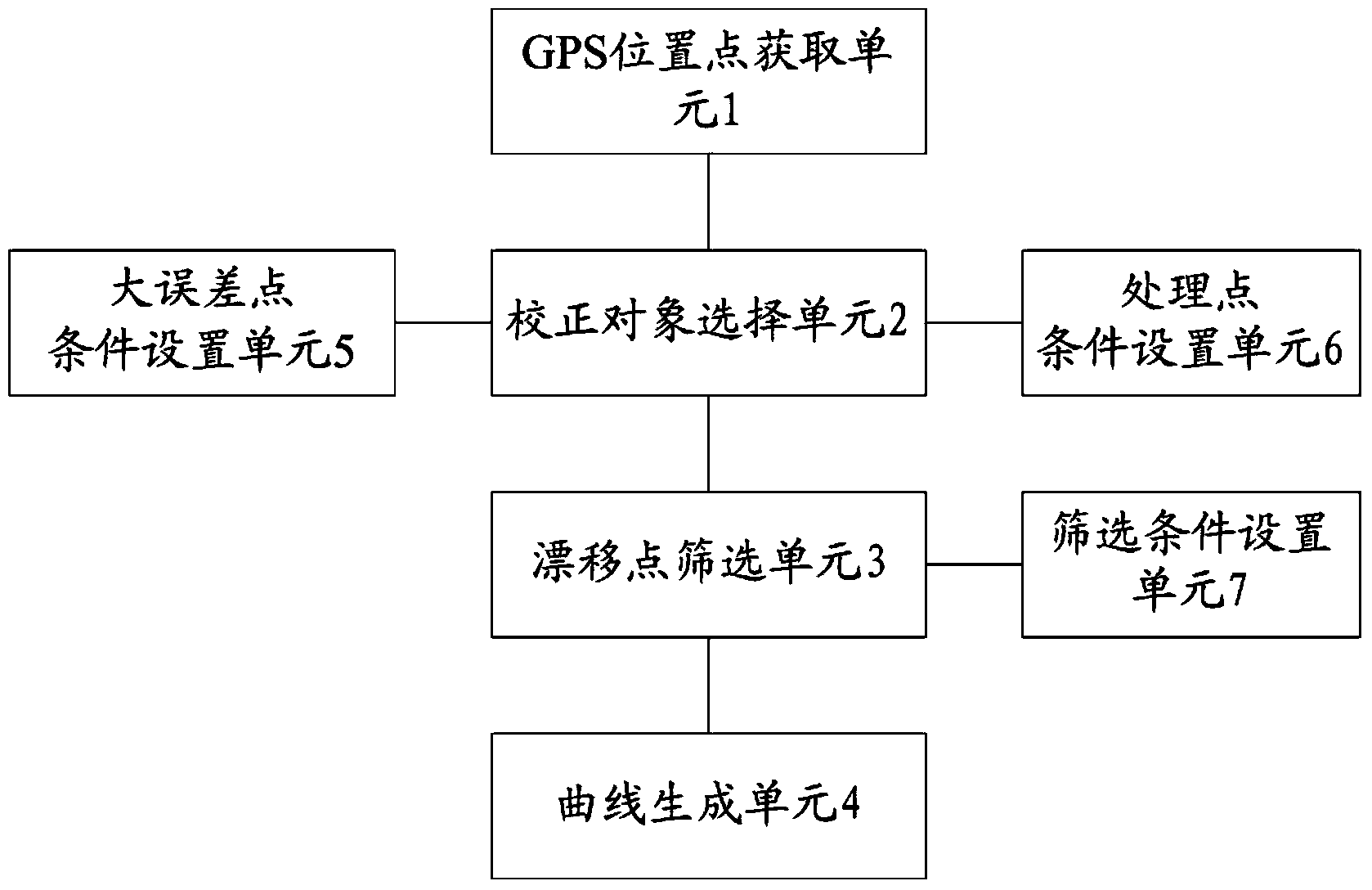

Method and device for generating GPS trajectory curve

Inactive | CN103809195A | Save storage space | Reduce redundant information | Measurement arrangements for variable | Satellite radio beaconing | Algorithm | Screening method

The invention discloses a method for generating a GPS (Global Positioning System) trajectory curve. The method comprises a step of obtaining a GPS location point, a step of selecting a correction object, a step of screening drift points and a step of generating a curve, wherein the step of selecting the correction object comprises determining according to a predetermined processing point condition and selecting the desired correction object, and the step of screening the drift points comprises determining according to a predetermined screening condition, determining whether the processing point in the current correction object is the drift point and filtering out all the processing points screened out when generating the curve. Compared with the prior art, the method has the advantages that sampling points are screened so that redundant information is greatly reduced and the storage space of a server is saved, and a specific screening method is used for processing so that the GPS historical trajectory curve can be well displayed.

Owner:INNO INSTR (CHINA) INC
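
The drift-point screening step in the GPS abstract above can be illustrated with a minimal Python sketch. The abstract only says that points are screened against "a predetermined screening condition"; the speed threshold used here, the function names `haversine_m` and `filter_drift_points`, and the default `max_speed_mps` are assumptions made for illustration, not the patented condition.

```python
import math

def haversine_m(p, q):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (p[0], p[1], q[0], q[1]))
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371000.0 * math.asin(math.sqrt(a))

def filter_drift_points(points, max_speed_mps=50.0):
    """Drop points that imply an implausible speed from the last kept point.

    points: list of (timestamp_s, lat, lon) tuples sorted by time.
    max_speed_mps: assumed screening condition (hypothetical default).
    """
    kept = []
    for t, lat, lon in points:
        if not kept:
            kept.append((t, lat, lon))
            continue
        t0, lat0, lon0 = kept[-1]
        dt = t - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        speed = haversine_m((lat0, lon0), (lat, lon)) / dt
        if speed <= max_speed_mps:
            kept.append((t, lat, lon))
    return kept
```

Only the kept points would then be passed to the curve-generation step, which is where the storage saving described in the abstract would come from.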

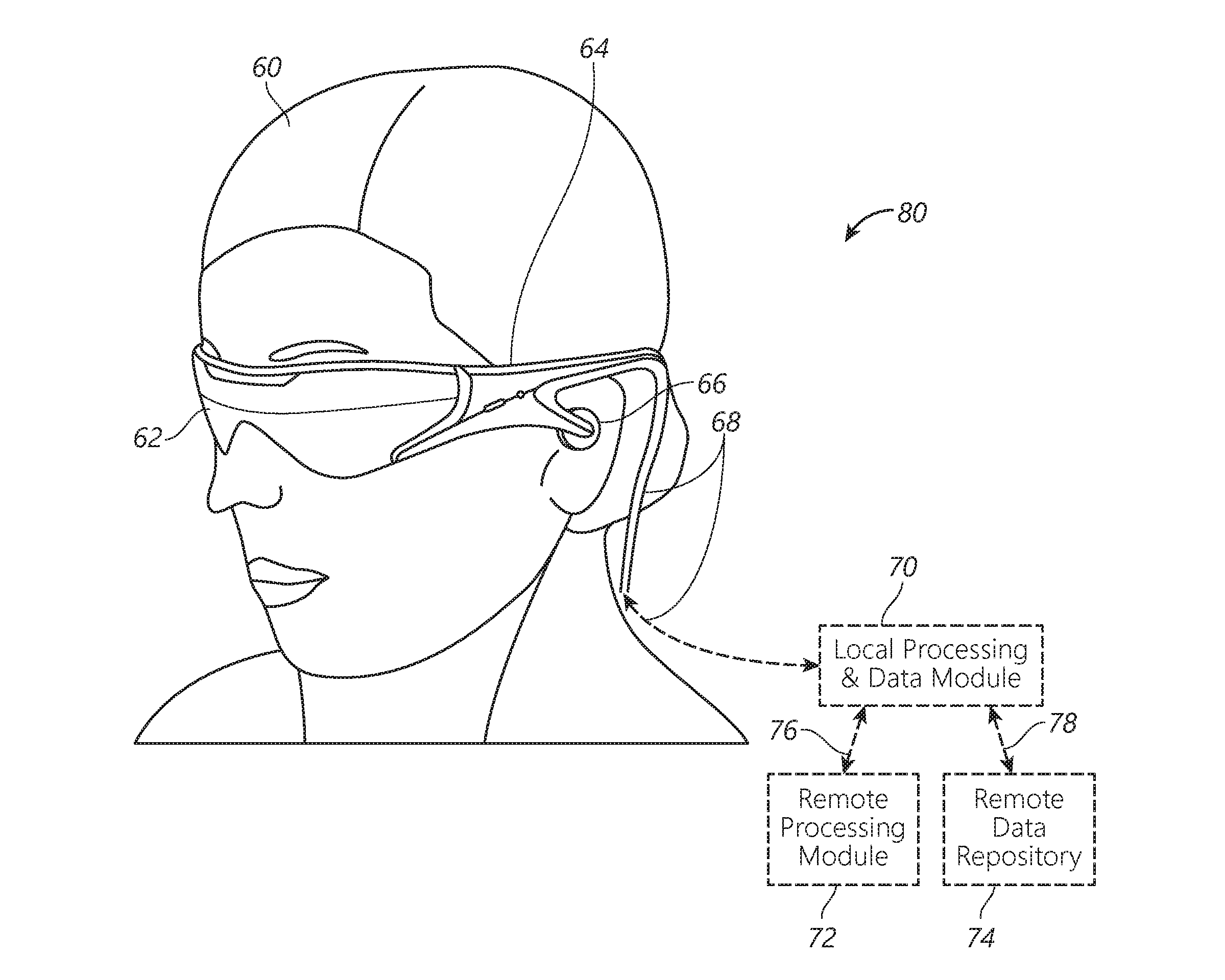

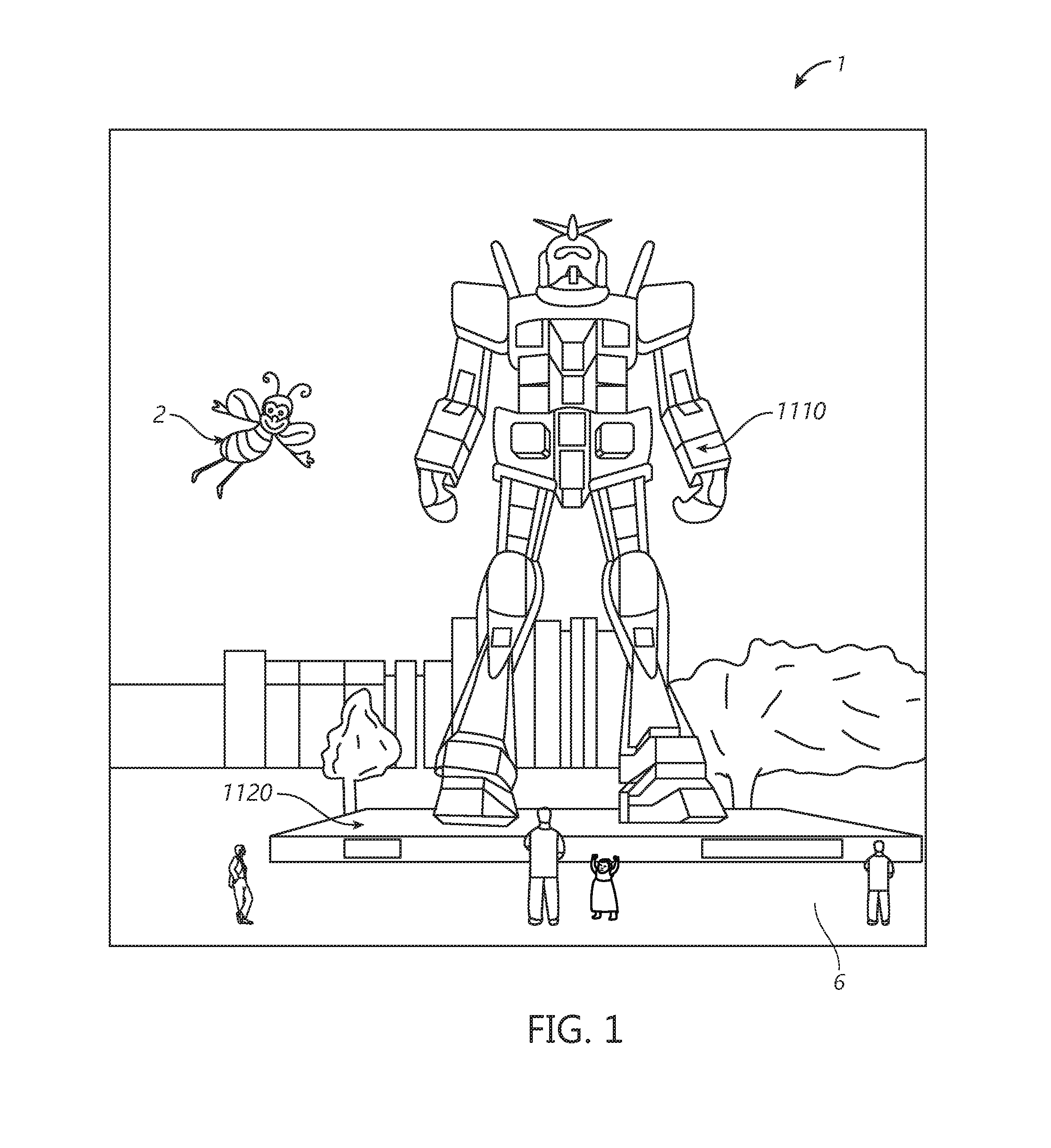

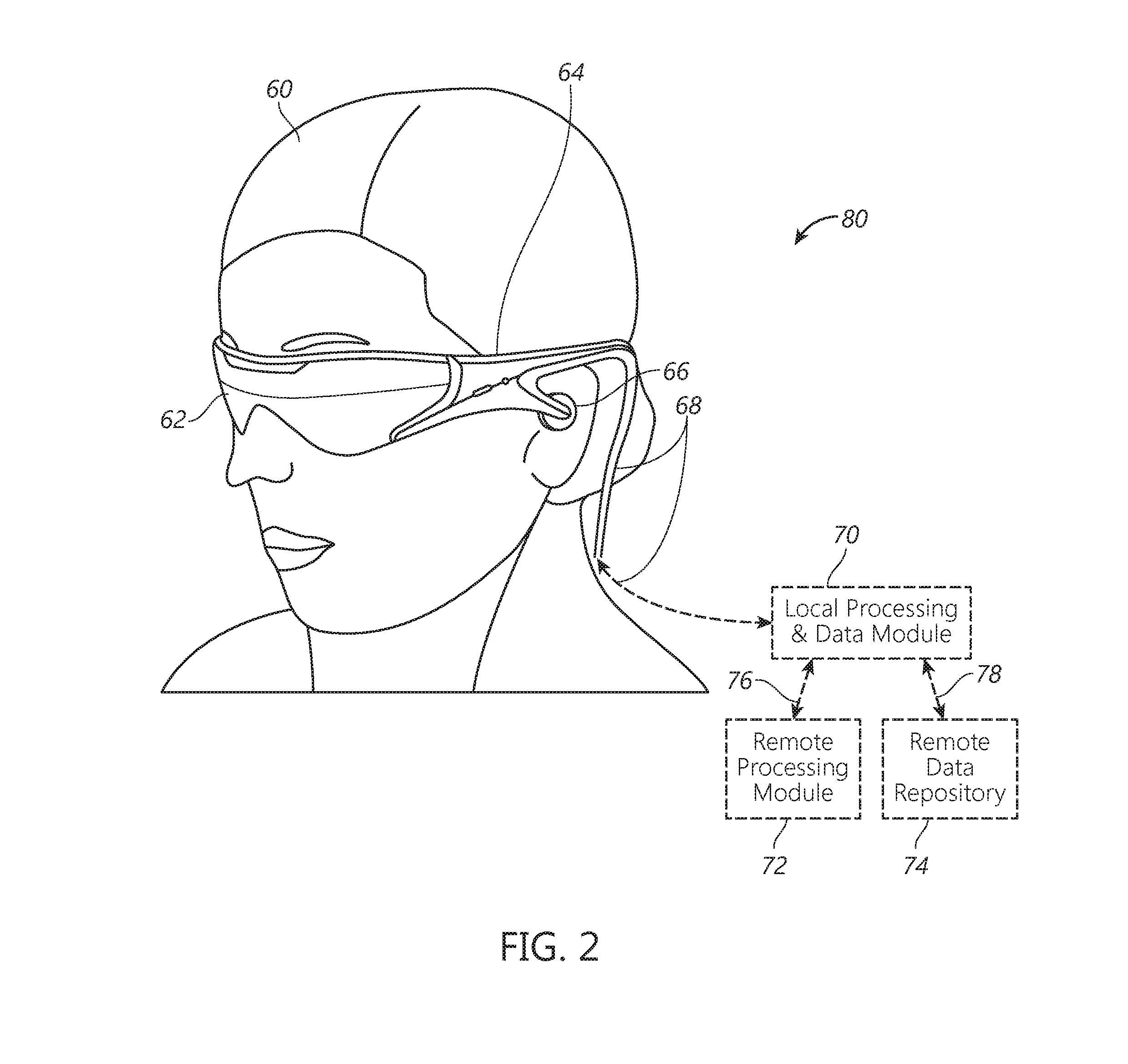

Virtual and augmented reality systems and methods

Active | US20170053450A1 | Reduce power consumption | Reduce redundant information | Image analysis | Character and pattern recognition | Display device | Depth plane

A virtual or augmented reality display system that controls a display using control information included with the virtual or augmented reality imagery that is intended to be shown on the display. The control information can be used to specify one of multiple possible display depth planes. The control information can also specify pixel shifts within a given depth plane or between depth planes. The system can also enhance head pose measurements from a sensor by using gain factors which vary based upon the user's head pose position within a physiological range of movement.

Owner:MAGIC LEAP

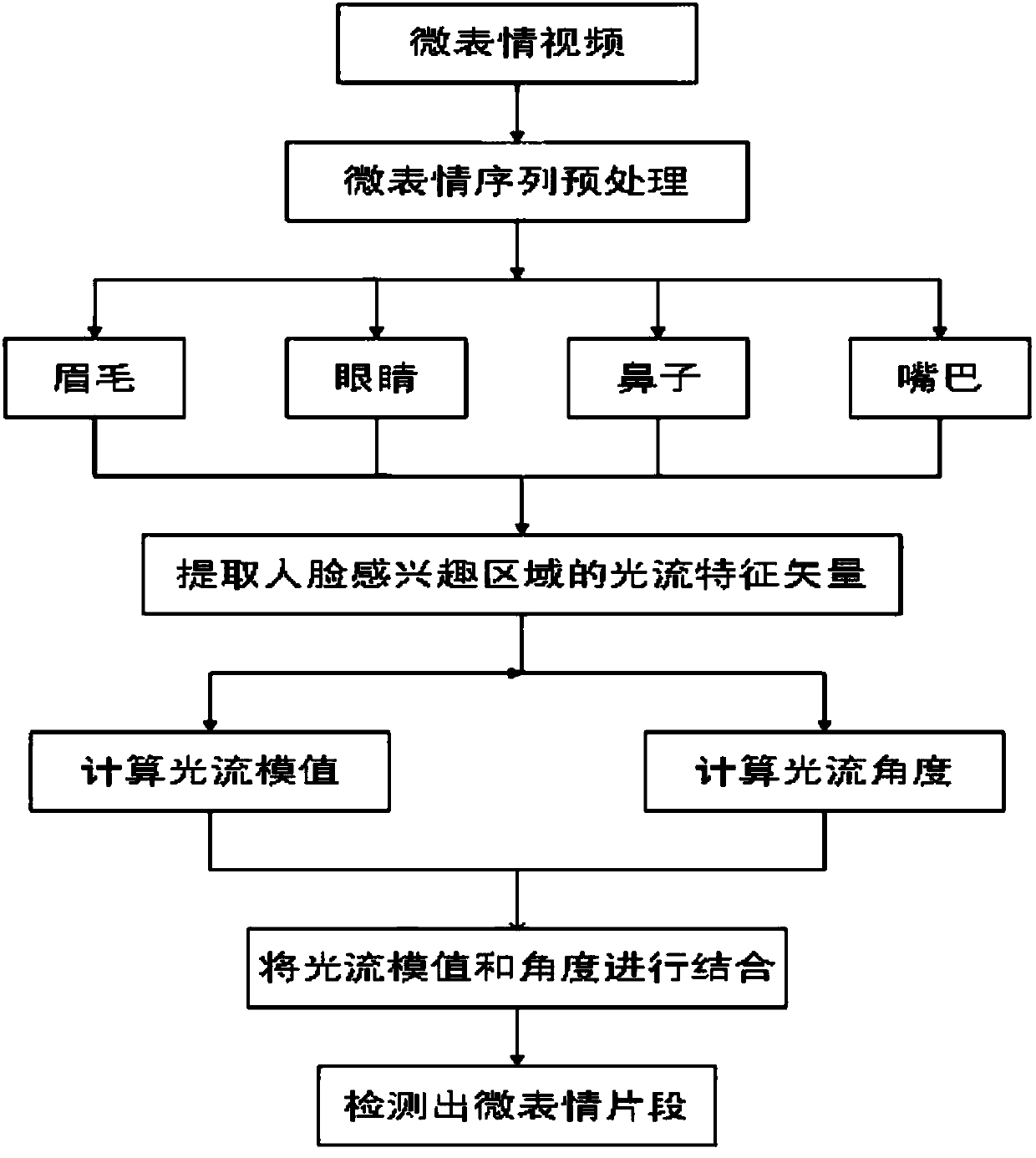

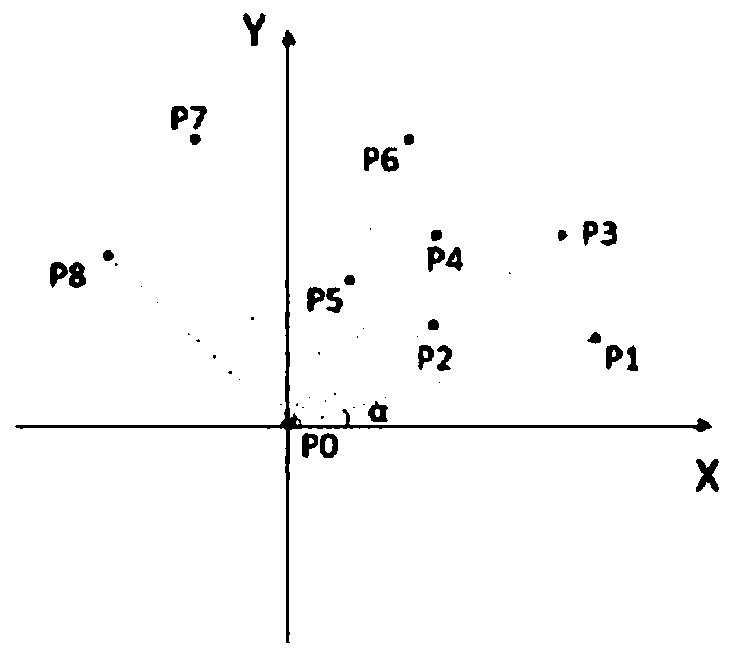

Optical flow characteristic vector mode value and angle combination micro expression detection method based on interested area

Active | CN107358206A | Improve the efficiency of micro-expression detection | Easy to identify | Character and pattern recognition | Optical flow | Vector mode

The invention relates to an optical flow characteristic vector mode value and angle combination micro expression detection method based on an interested area. According to the method, firstly, a micro expression video is pre-processed to acquire a micro expression sequence, key face characteristic points are further extracted, and a face interested area having the best effect is found out according to motion characteristics of different expression FACS motion units; optical flow characteristics of the interested area are extracted. The optical flow vector angle information is firstly introduced, an optical flow vector mode value and the angle information are acquired through calculation, through combination of the two, a more comprehensive and more judicious characteristic detection micro expression segment can be acquired; the optical flow mode value and the angle information are combined, the threshold is determined according to magnitude of the optical flow mode value, and a number shape combination method is utilized to vividly and visually acquire the micro expression segment. The method is advantaged in that micro expression detection efficiency is substantially improved, optical flow characteristic vector extraction is carried out only in the key face area, computational complexity is reduced, the consumption time is shortened, and high robustness is realized.

Owner:WUHAN MELIT COMM

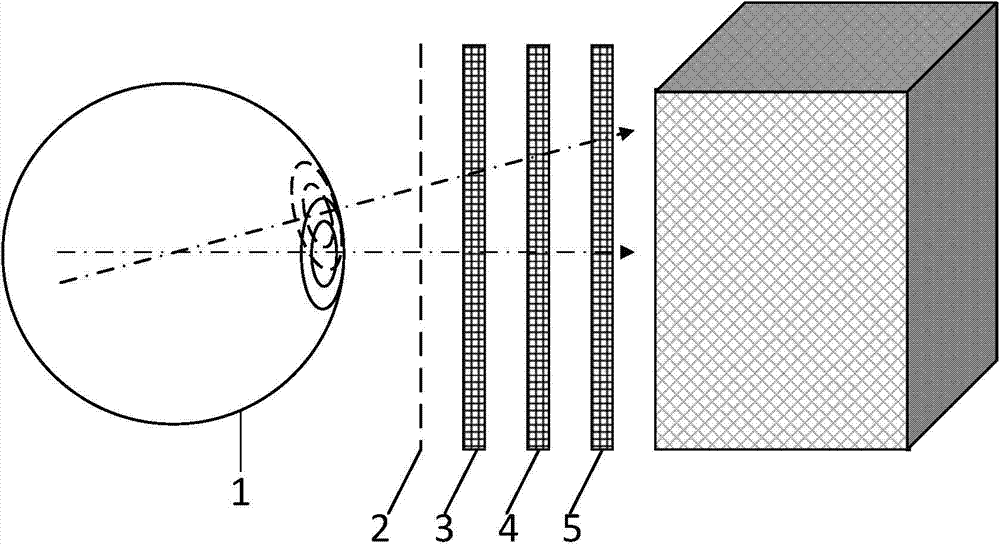

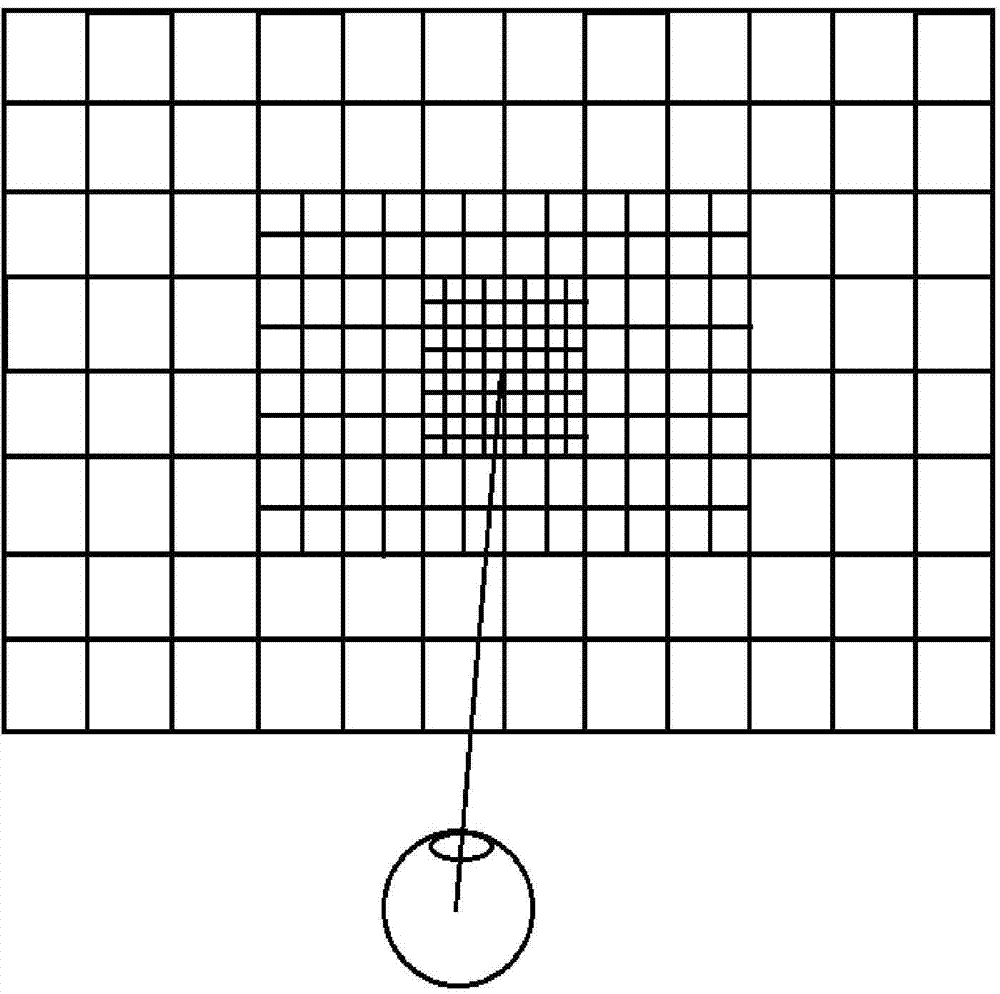

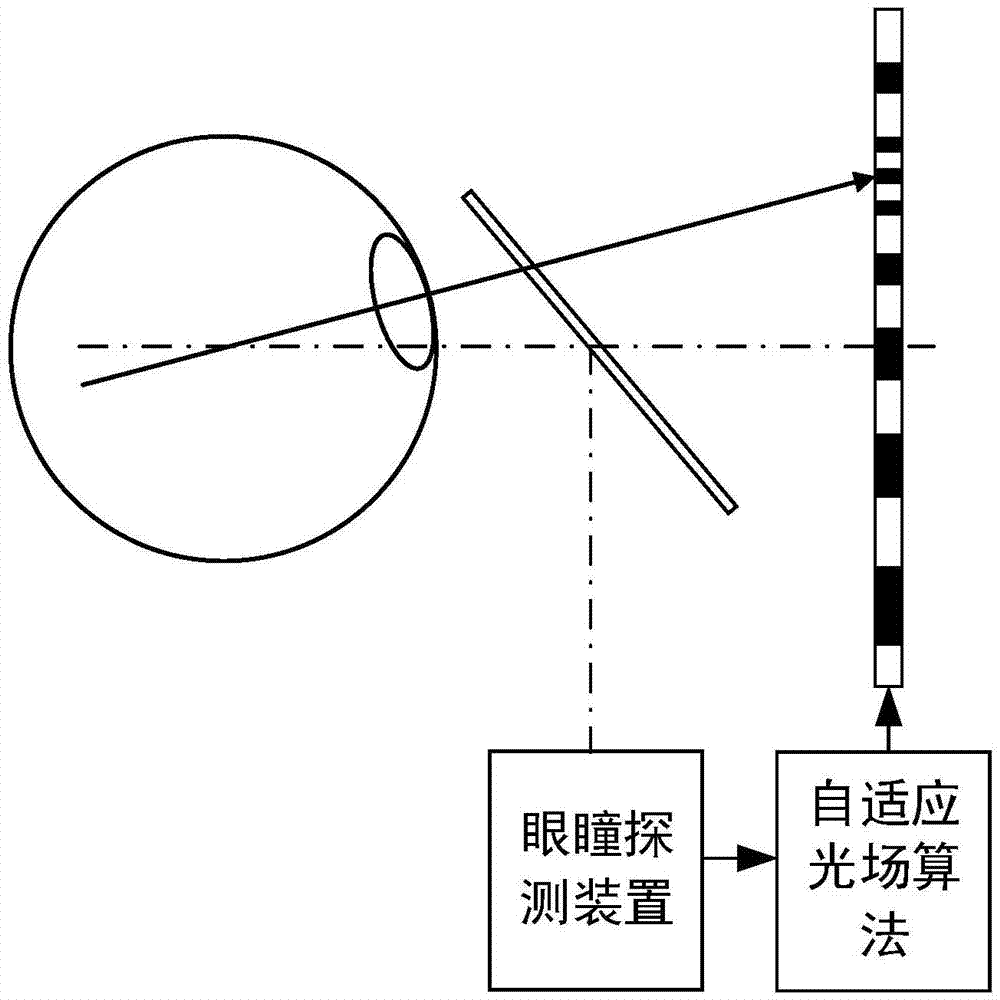

Self-adaptive high-resolution near-to-eye optical field display device and method on basis of eye tracking

Active | CN104777615A | Reduce redundant information | Improve visual resolution | Optical elements | Transmittance | A-weighting

The invention discloses a self-adaptive high-resolution near-to-eye optical field display device on the basis of eye tracking. The self-adaptive high-resolution near-to-eye optical field display device comprises a beam splitter, a spatial light modulator array and backlight lighting equipment, wherein the beam splitter, the spatial light modulator array and the backlight lighting equipment are sequentially arranged in the line-of-sight direction of human eyes; the beam splitter is used for acquiring eye pupil position information; the spatial light modulator array is used for modulating transmittance of polarized light entering the human eyes; the backlight lighting equipment is used for providing backlight with uniform brightness for the spatial light modulator array. The invention also discloses a self-adaptive high-resolution near-to-eye optical field display method on the basis of eye tracking. According to the self-adaptive high-resolution near-to-eye optical field display method, a weighting function is set for each viewpoint and by utilizing a multi-viewpoint optical field global optimization method, redundant information of an edge view field is reduced and three-dimensional display visual resolution is improved; meanwhile, a human eye detection device is combined to acquire eye pupil positions in real time, optical field density is sampled and distributed again according to visual characteristics and by utilizing a single viewpoint optical field local optimization method, the computation burden of the optimization method is greatly reduced and self-adaptive high-resolution real-time three-dimensional display is realized.

Owner:ZHEJIANG UNIV

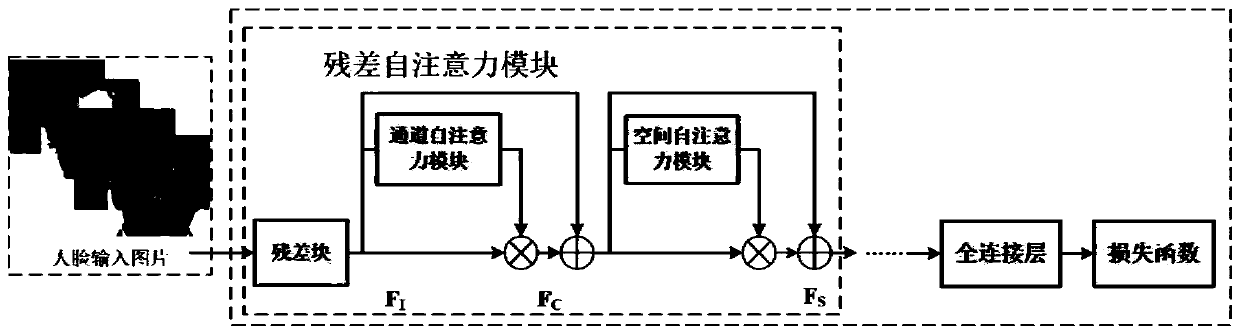

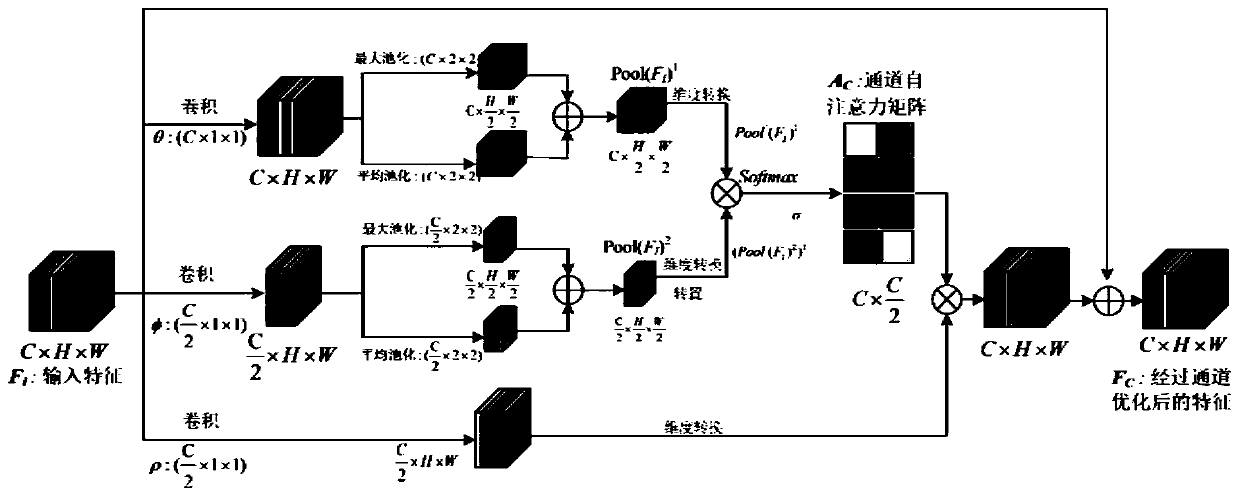

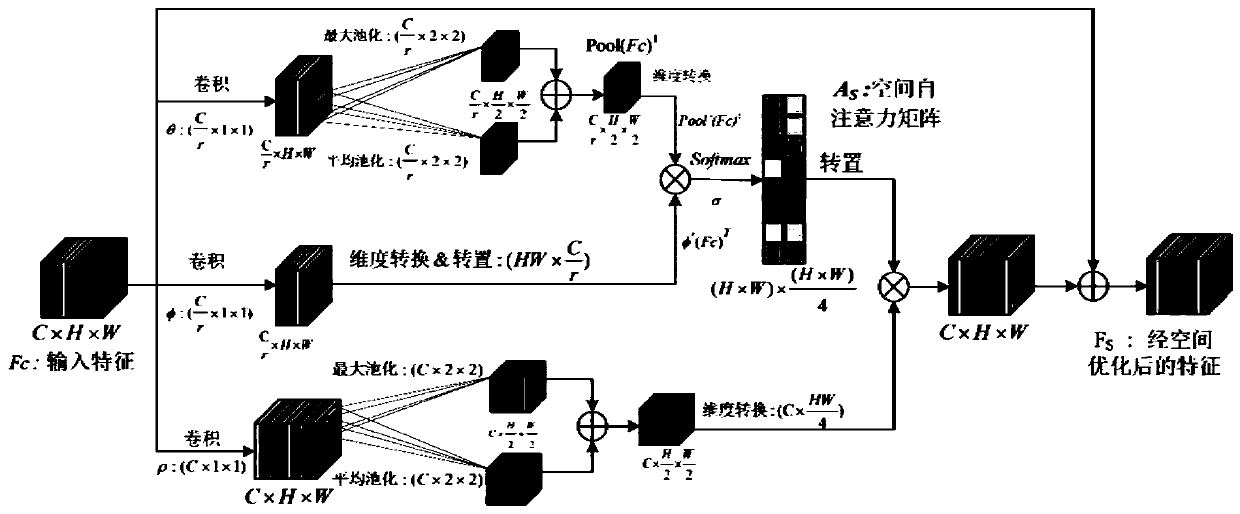

Deep learning face recognition system and method based on self-attention mechanism

Inactive | CN110610129A | Implement filter selection | Reduce redundant information | Character and pattern recognition | Neural architectures | Self attention | Three dimensional data

The invention discloses a deep learning face recognition system and a deep learning face recognition method based on a self-attention mechanism, and belongs to the field of computer vision and pattern recognition. According to the invention, a channel self-attention module is constructed. The dimension conversion transposition is carried out on three-dimensional data of a feature map. A cross-correlation relationship matrix between channels is learned to represent the relative relationship between different channels, the optimized features of the channels are obtained through calculation according to original features, different weight assignment is carried out on the different channels, selection of channel filtering is achieved, and redundant information of the feature channels is reduced. A space self-attention module is constructed: the spatial information of the three-dimensional feature map is modeled, and a cross-correlation relationship matrix between the spatial positions of the feature map is learned. According to the method, the spatial position of the face feature map is optimized to represent the relative relation between different positions, the features after spatial position optimization are obtained through calculation with the input features, different positions of the face feature map are endowed with different weights, selection of important feature areas of the face is achieved, and the features are concentrated in the important areas of the face.

Owner:HUAZHONG UNIV OF SCI & TECH

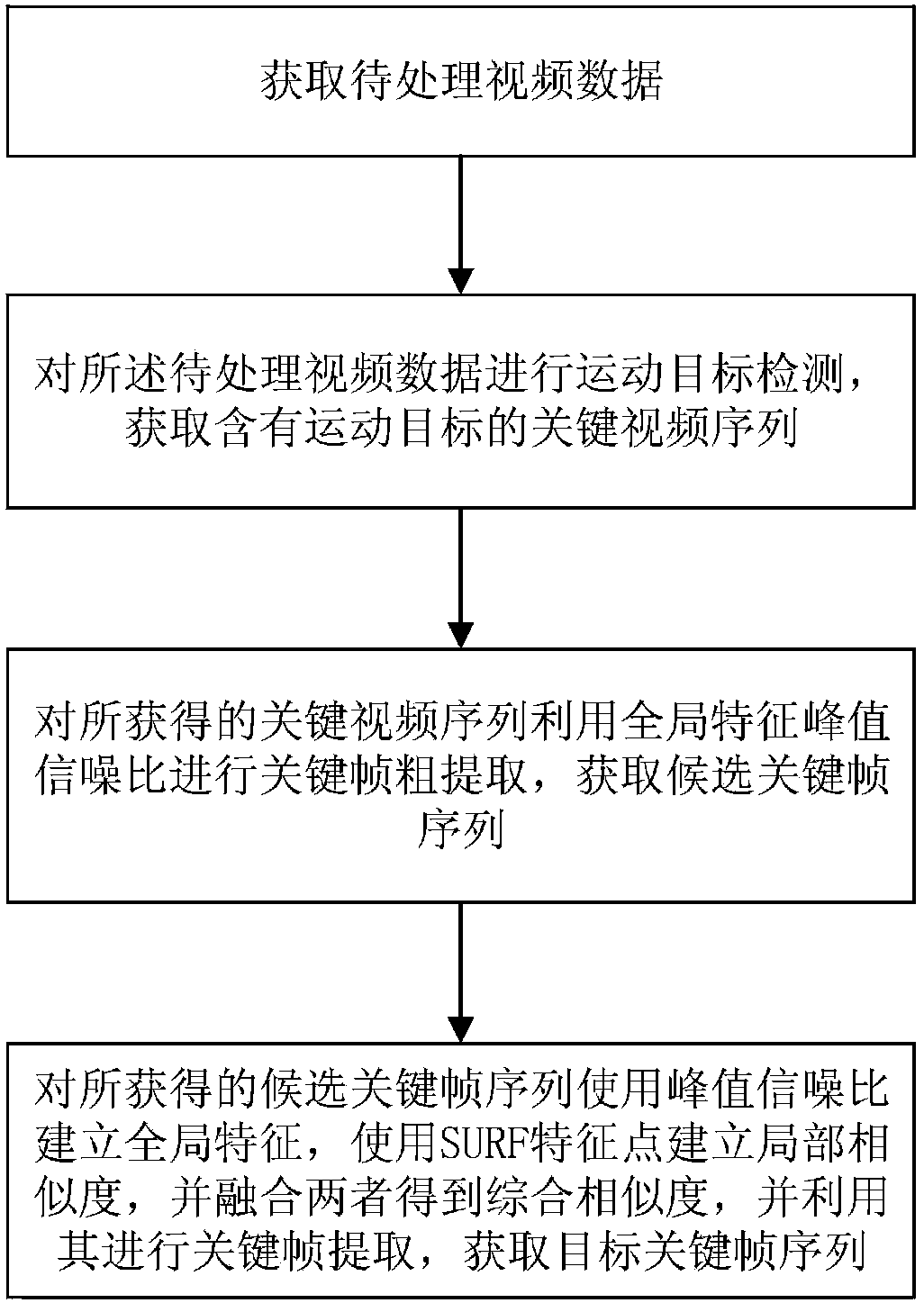

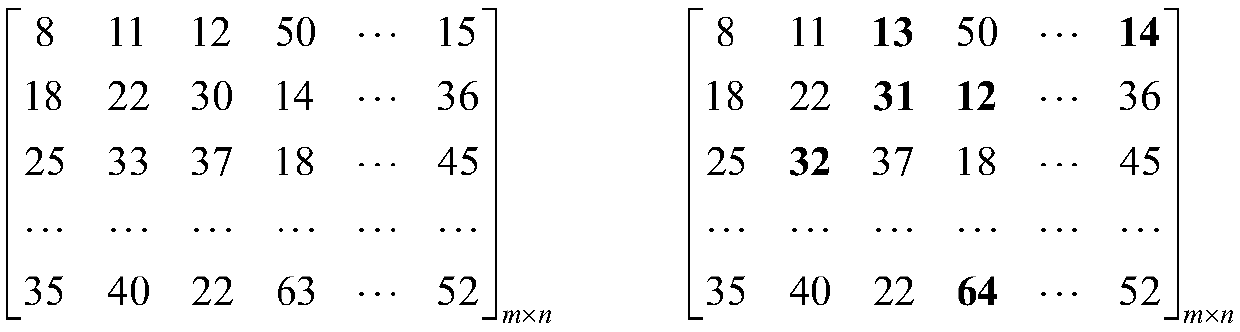

Video keyframe extraction method

Active | CN107844779A | Efficient extraction | Reduce redundant information | Image enhancement | Image analysis | Video sequence | Self adaptive

The invention discloses a video keyframe extraction method, which comprises the steps of: using a ViBe algorithm fused with an inter-frame difference method to perform moving object detection on an acquired original video sequence, so as to obtain a key video sequence containing a moving object; performing keyframe crude extraction on the key video sequence by using a global characteristic peak signal-to-noise ratio to obtain candidate keyframe sequences; and establishing global similarity of the candidate keyframe sequences by using the peak signal-to-noise ratio, establishing local similarity of the candidate keyframe sequences by using SURF feature points, and performing weighted fusion on the global similarity and the local similarity to obtain comprehensive similarity, performing self-adaptive keyframe extraction on the candidate keyframe sequences by using the comprehensive similarity, and finally acquiring a target keyframe sequence. The video keyframe extraction method provided by the invention can effectively extract the video keyframes, obviously reduce the redundant information of video data, and express the main content of the video concisely. Moreover, the video keyframe extraction method has low algorithm complexity and is suitable for real-time extraction of keyframes of surveillance videos.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
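
As a rough illustration of the "keyframe crude extraction" step in the abstract above, the following Python sketch selects a frame as a candidate keyframe whenever its peak signal-to-noise ratio (PSNR) against the last selected keyframe drops below a threshold. The abstract does not state the selection rule or any threshold; the rule, the names `psnr` and `coarse_keyframes`, and the 30 dB default are assumptions. The ViBe detection and SURF-based refinement stages are not covered.

```python
import math

def psnr(frame_a, frame_b, peak=255.0):
    """PSNR in dB between two equally sized frames given as flat pixel sequences."""
    mse = sum((a - b) ** 2 for a, b in zip(frame_a, frame_b)) / len(frame_a)
    if mse == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(peak * peak / mse)

def coarse_keyframes(frames, psnr_threshold=30.0):
    """Return indices of candidate keyframes.

    A frame becomes a candidate when it differs enough (low PSNR) from the
    most recently selected candidate. The threshold is a hypothetical value.
    """
    if not frames:
        return []
    keys = [0]  # always keep the first frame as a reference
    for i in range(1, len(frames)):
        if psnr(frames[keys[-1]], frames[i]) < psnr_threshold:
            keys.append(i)
    return keys
```

Near-duplicate frames yield a high PSNR and are skipped, which is one simple way to obtain the redundancy reduction the abstract describes.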

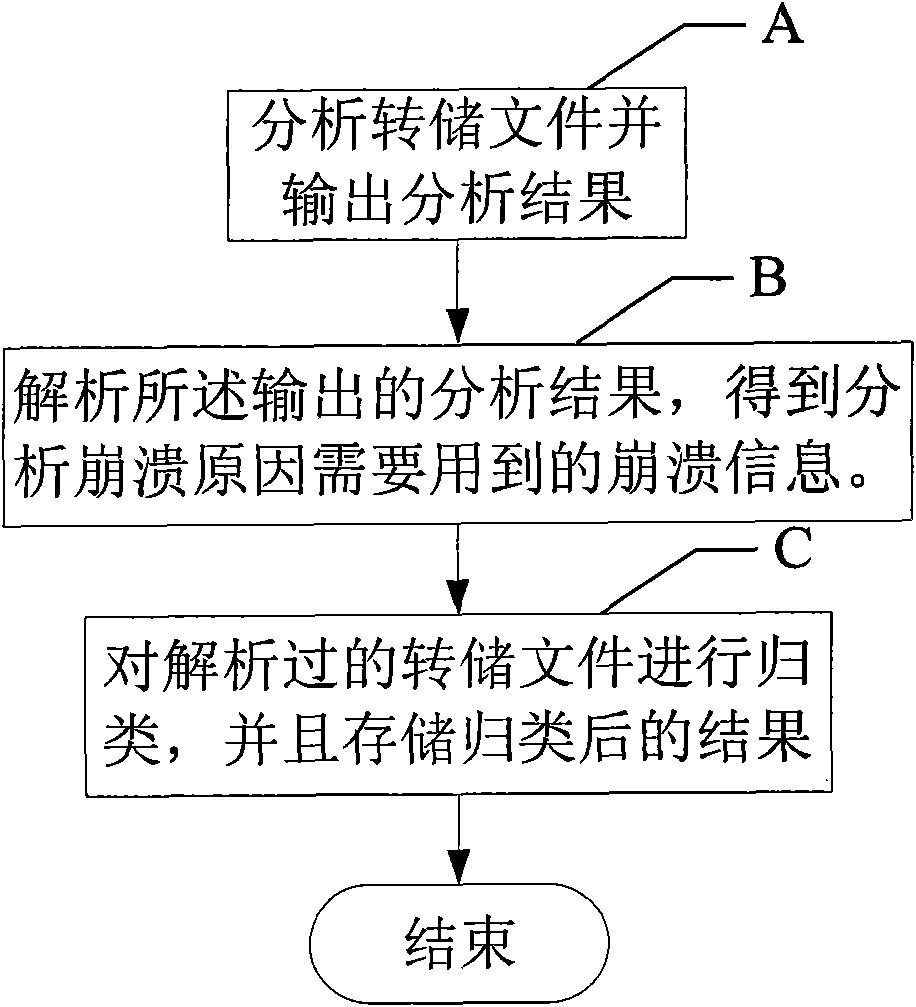

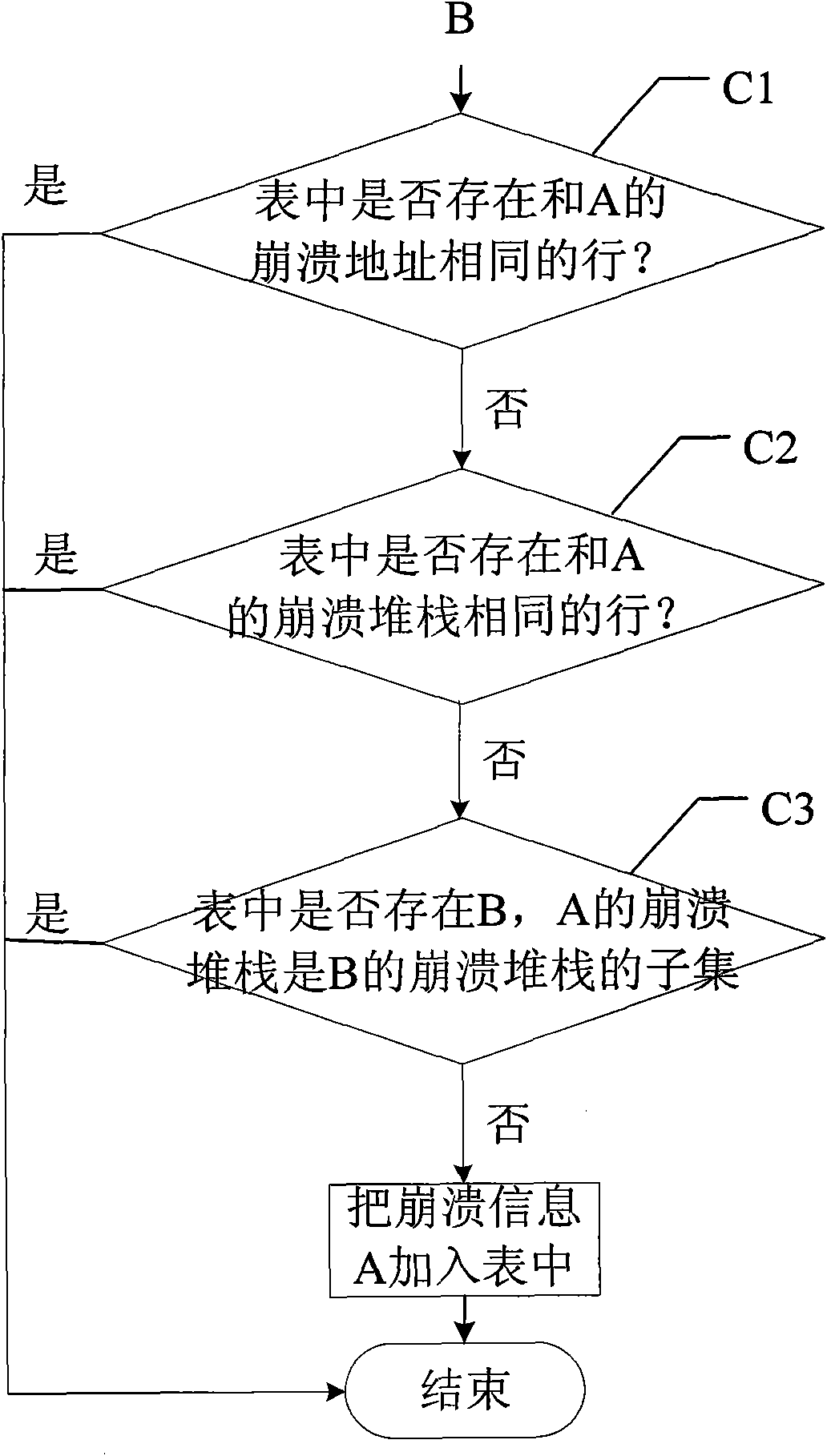

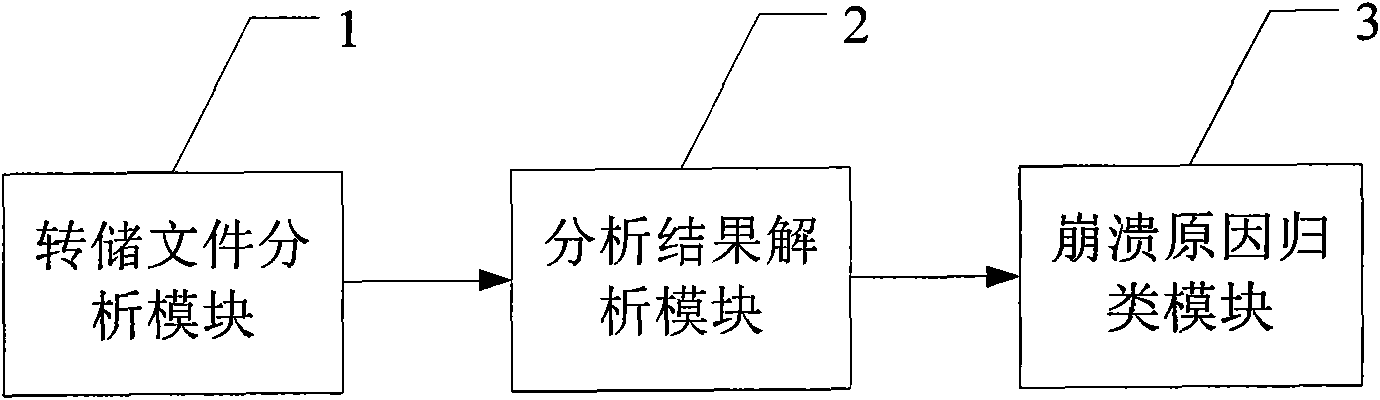

Automatic analysis method and device of crash information of computer software

Inactive | CN101944059A | Accurate classification | Improve classification accuracy | Hardware monitoring | Software failure | Computer software

The invention discloses an automatic analysis method and device for the crash information of computer software. The method comprises the following steps: (A) analyzing a dump file and outputting an analysis result; (B) parsing the output analysis result to acquire crash information; and (C) sorting the parsed dump file and storing a sorted result. The invention also discloses the automatic analysis device for the crash information of the computer software. In the invention, the crash dump file is analyzed by automatically calling a debugger provided by the Debugging Tools for Windows toolkit to acquire comprehensive and accurate crash information; and the crash information is sorted by using a crash address and a crash stack, so that not only is the sorting accuracy improved, but the redundant information stored in a table is also greatly reduced. By applying the invention, staff in the field can accurately determine the cause of a crash, which provides a reference for resolving software failures and optimizing the software.

Owner:ULTRAPOWER SOFTWARE
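
The sorting step (C) in the abstract above, which classifies crashes by crash address and crash stack, can be sketched as a simple bucketing function. The record layout (`id`, `address`, `stack`), the function name `bucket_crashes`, and the `stack_depth` cut-off are hypothetical; the abstract does not say how many stack frames are compared or how results are stored.

```python
from collections import defaultdict

def bucket_crashes(reports, stack_depth=3):
    """Group crash reports that share a crash address and top stack frames.

    reports: iterable of dicts with keys 'id', 'address', and 'stack'
             (a list of frame names, innermost first). Assumed layout.
    Returns a dict mapping (address, top_frames) to a list of report ids.
    """
    buckets = defaultdict(list)
    for report in reports:
        key = (report["address"], tuple(report["stack"][:stack_depth]))
        buckets[key].append(report["id"])
    return dict(buckets)
```

Storing one row per bucket rather than one row per dump is a plausible reading of how the redundant information in the table is reduced.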

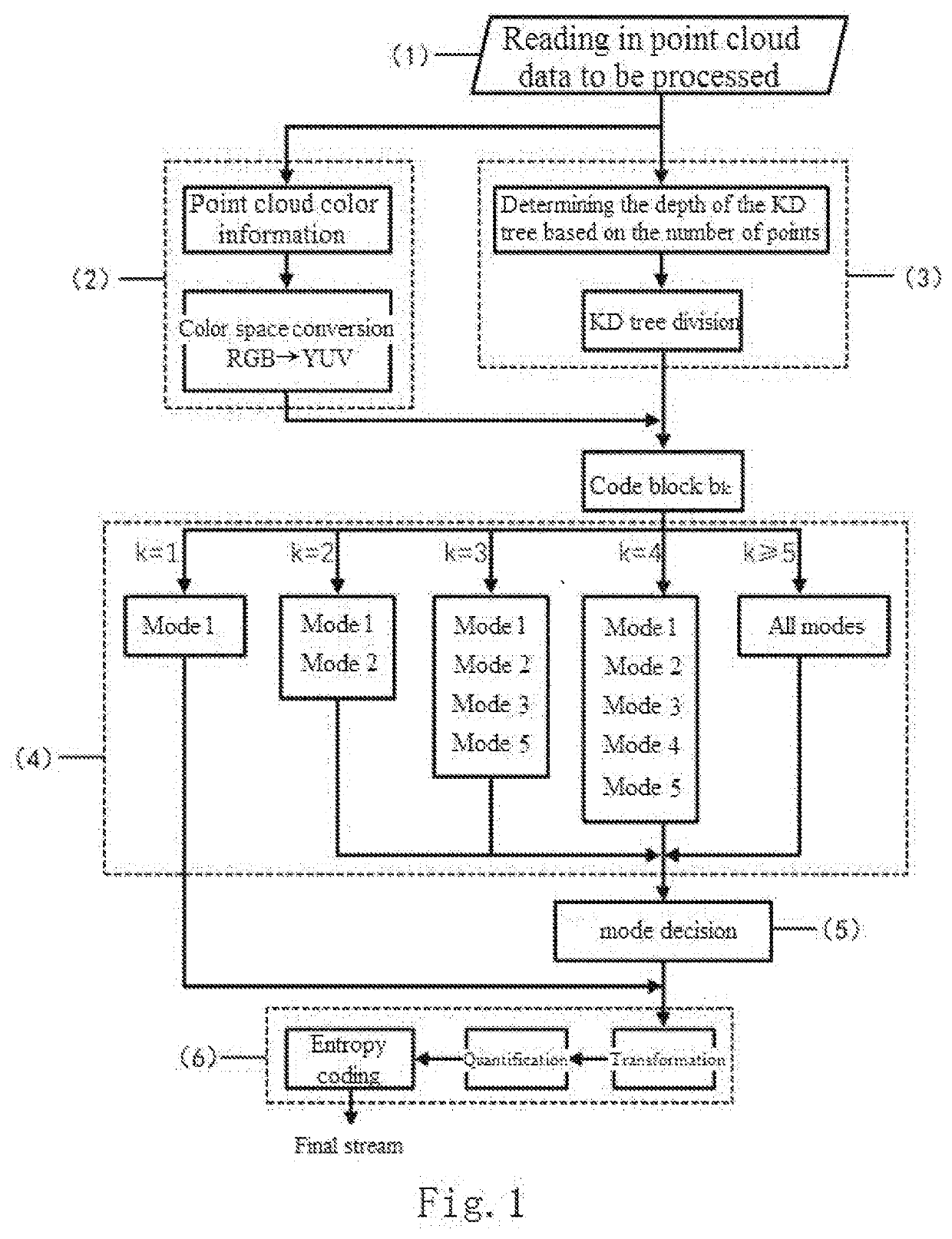

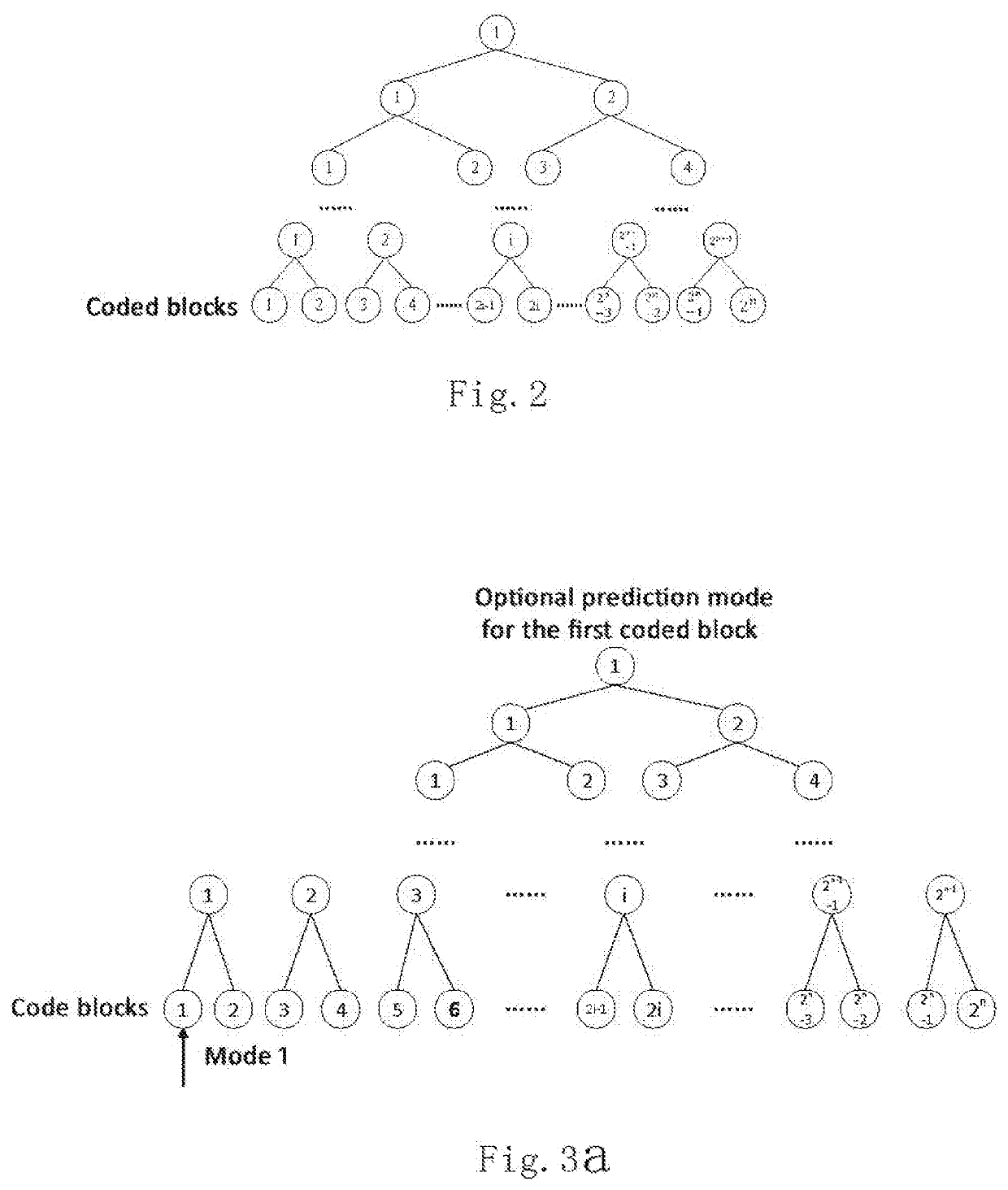

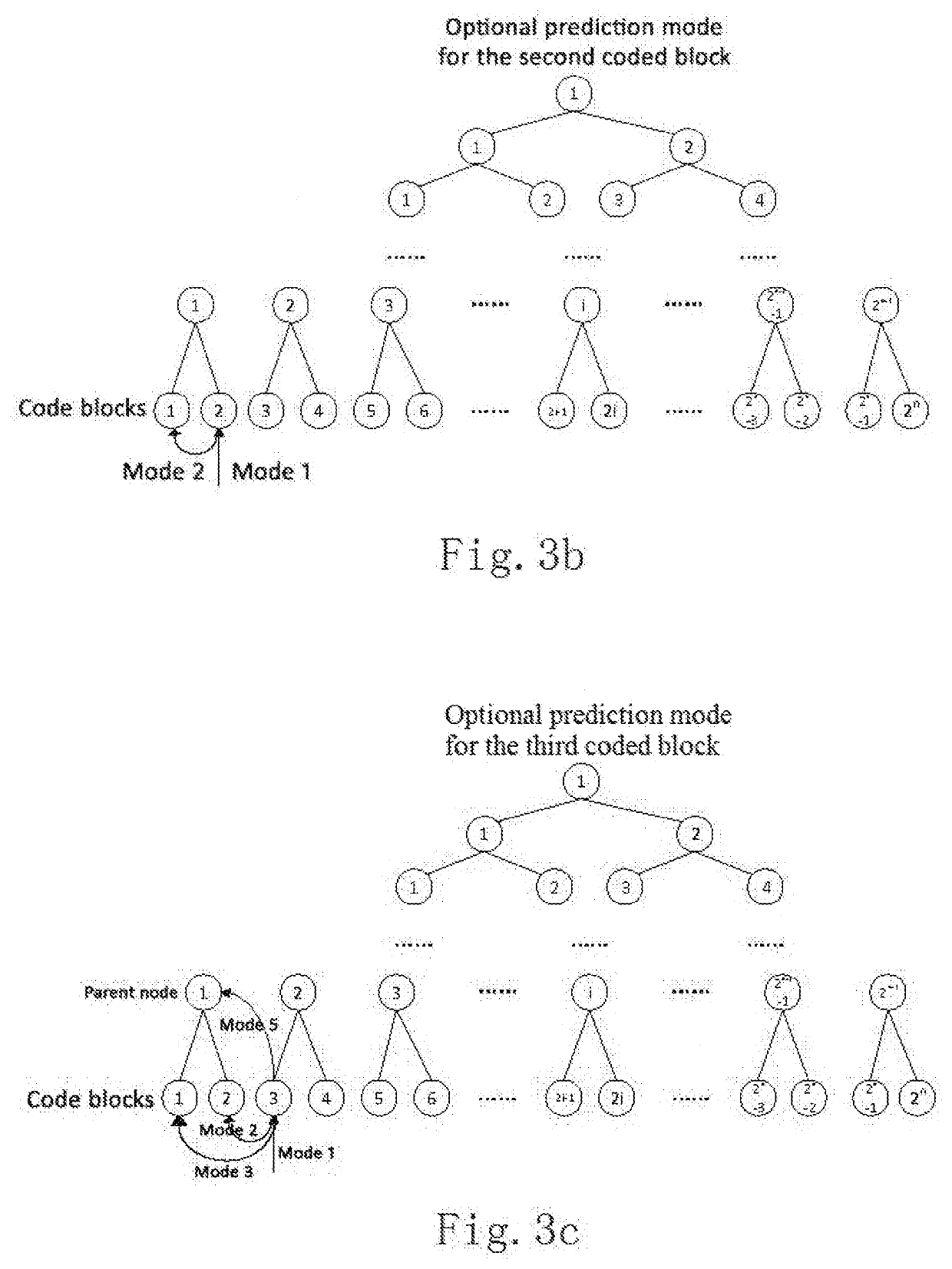

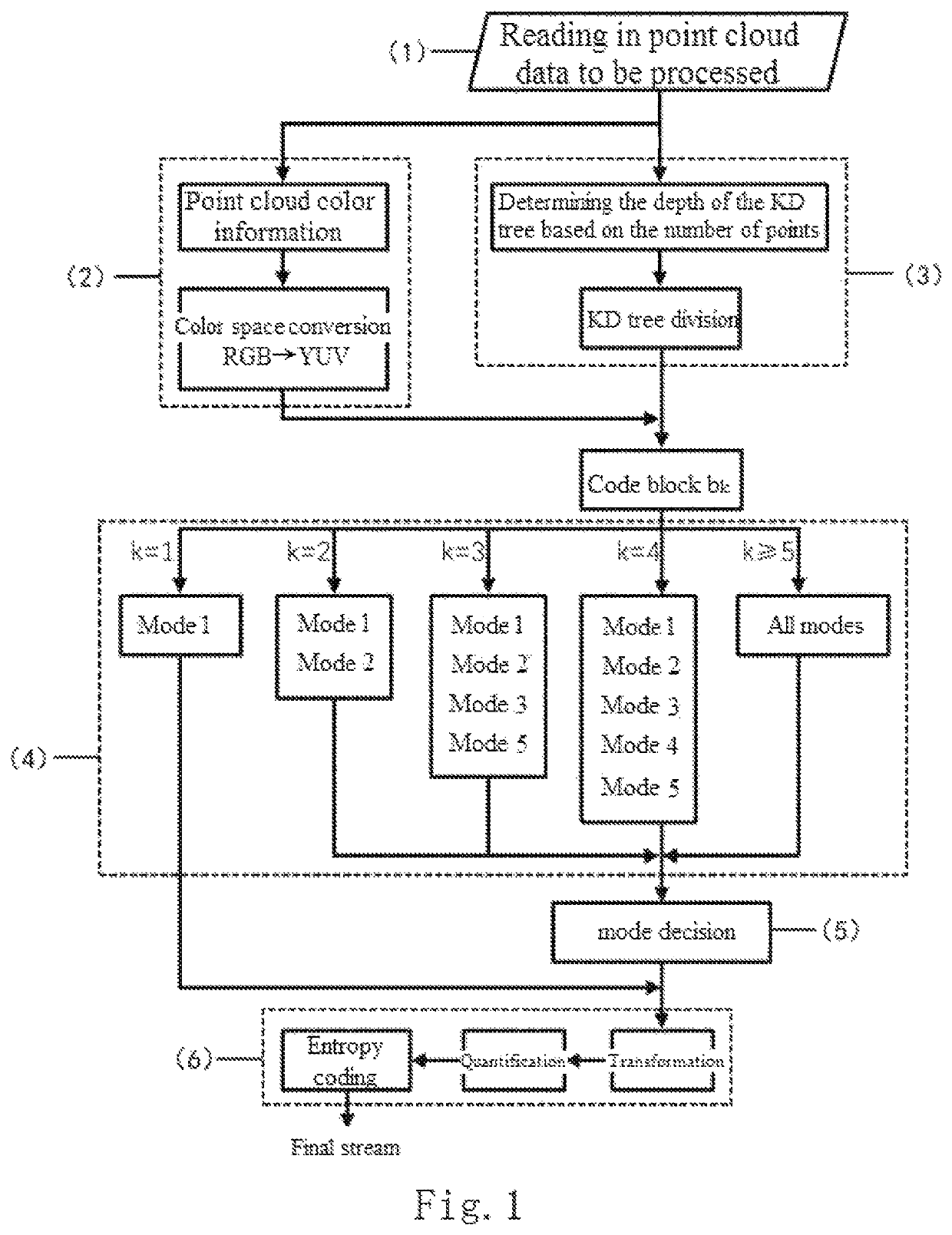

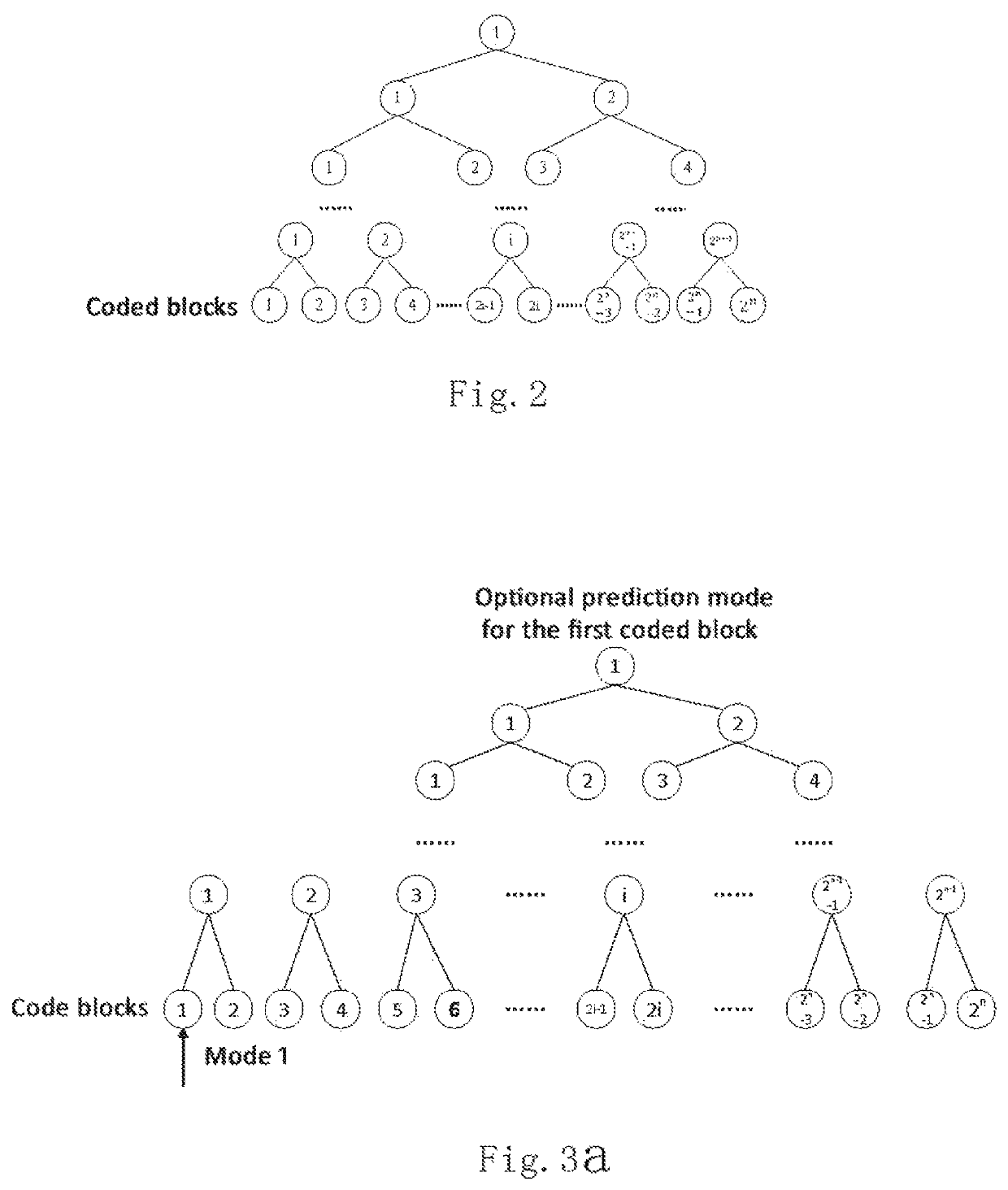

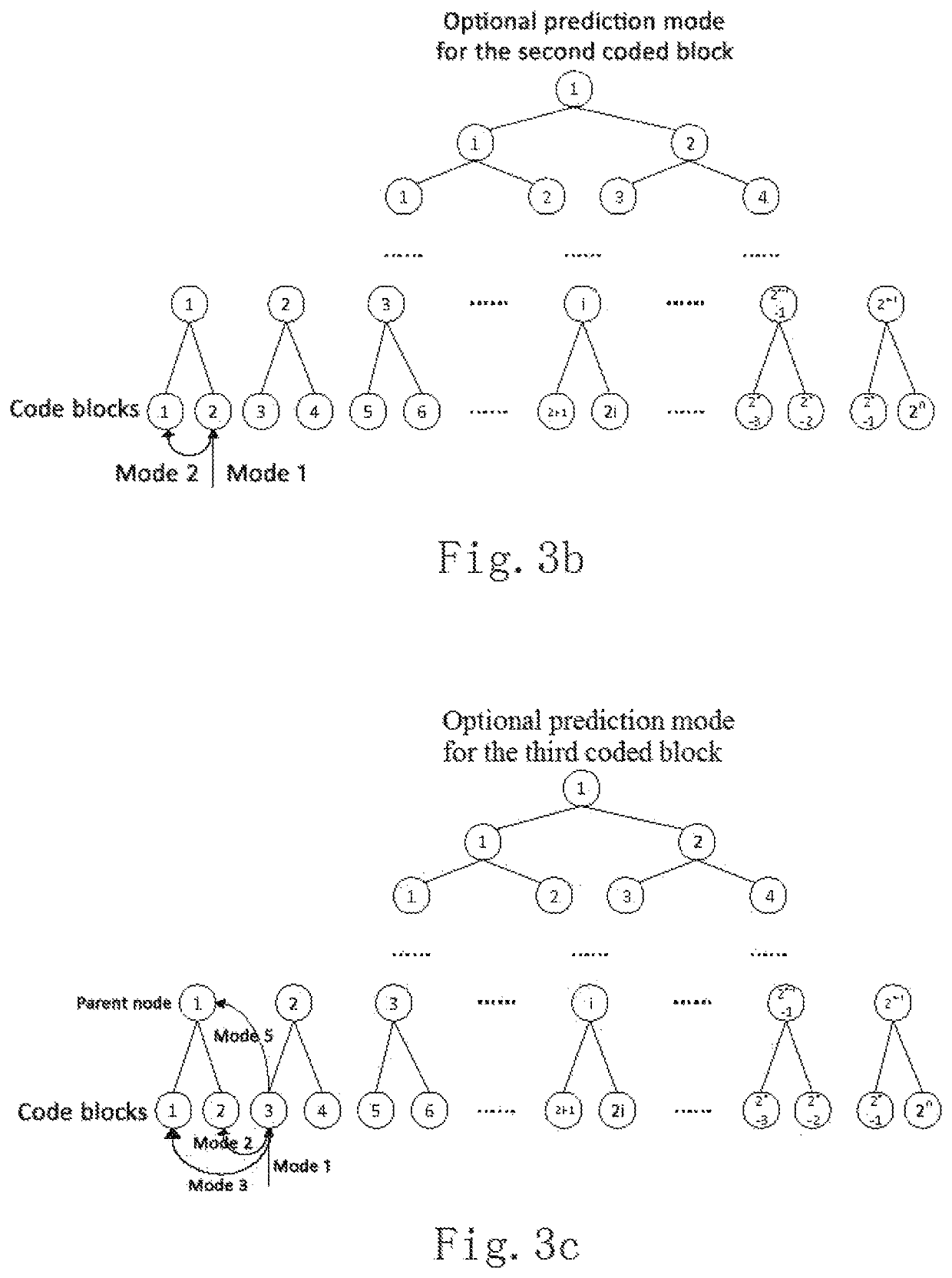

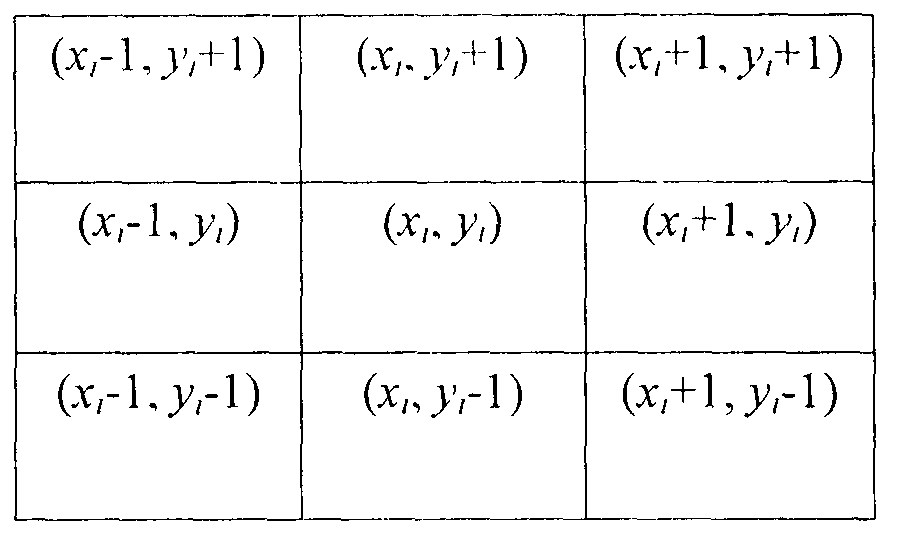

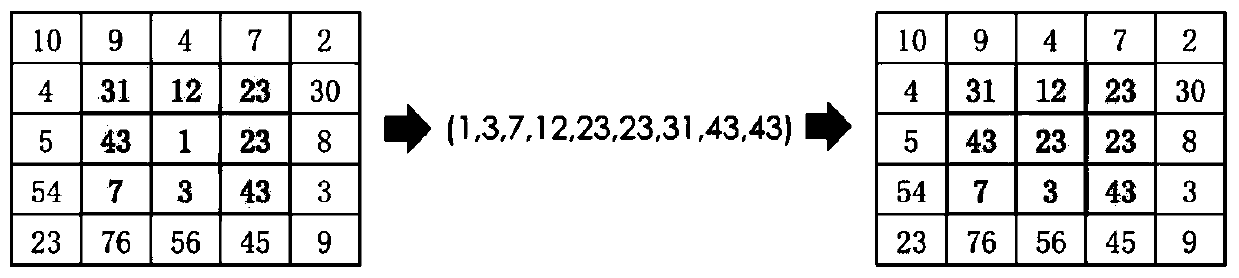

Multi-angle adaptive intra-frame prediction-based point cloud attribute compression method

Active | US20200137399A1 | Improve compression performance | Reduce redundant information | Digital video signal modification | Coding block | Point cloud

Disclosed is a multi-angle adaptive intra-frame prediction-based point cloud attribute compression method. A novel block structure-based intra-frame prediction scheme is provided for point cloud attribute information, where six prediction modes are provided to reduce information redundancy among different coding blocks and improve the point cloud attribute compression performance. The method comprises: (1) inputting a point cloud; (2) performing point cloud attribute color space conversion; (3) dividing a point cloud by using a K-dimensional (KD) tree to obtain coding blocks; (4) performing block structure-based multi-angle adaptive intra-frame prediction; (5) performing intra-frame prediction mode decision; and (6) performing conversion, uniform quantization, and entropy encoding.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL
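
Step (3) of the abstract above, dividing a point cloud with a KD tree to obtain coding blocks, might look like the following Python sketch. Splitting at the median along the axis with the largest spread is a common KD-tree convention and is an assumption here; the abstract does not specify the split rule. The function name `kd_partition` is hypothetical, and the prediction, mode decision, and entropy coding steps are not shown.

```python
def kd_partition(points, depth):
    """Recursively split 3D points into coding blocks with a KD tree.

    points: list of (x, y, z) tuples.
    depth:  number of split levels; yields up to 2**depth blocks.
    Split rule (assumed): median split on the axis with the largest spread.
    """
    if depth == 0 or len(points) <= 1:
        return [points]
    axis = max(
        range(3),
        key=lambda a: max(p[a] for p in points) - min(p[a] for p in points),
    )
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2
    return (kd_partition(ordered[:mid], depth - 1)
            + kd_partition(ordered[mid:], depth - 1))
```

Because each split is at the median, the resulting blocks hold nearly equal numbers of points, which keeps the per-block prediction workload balanced.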

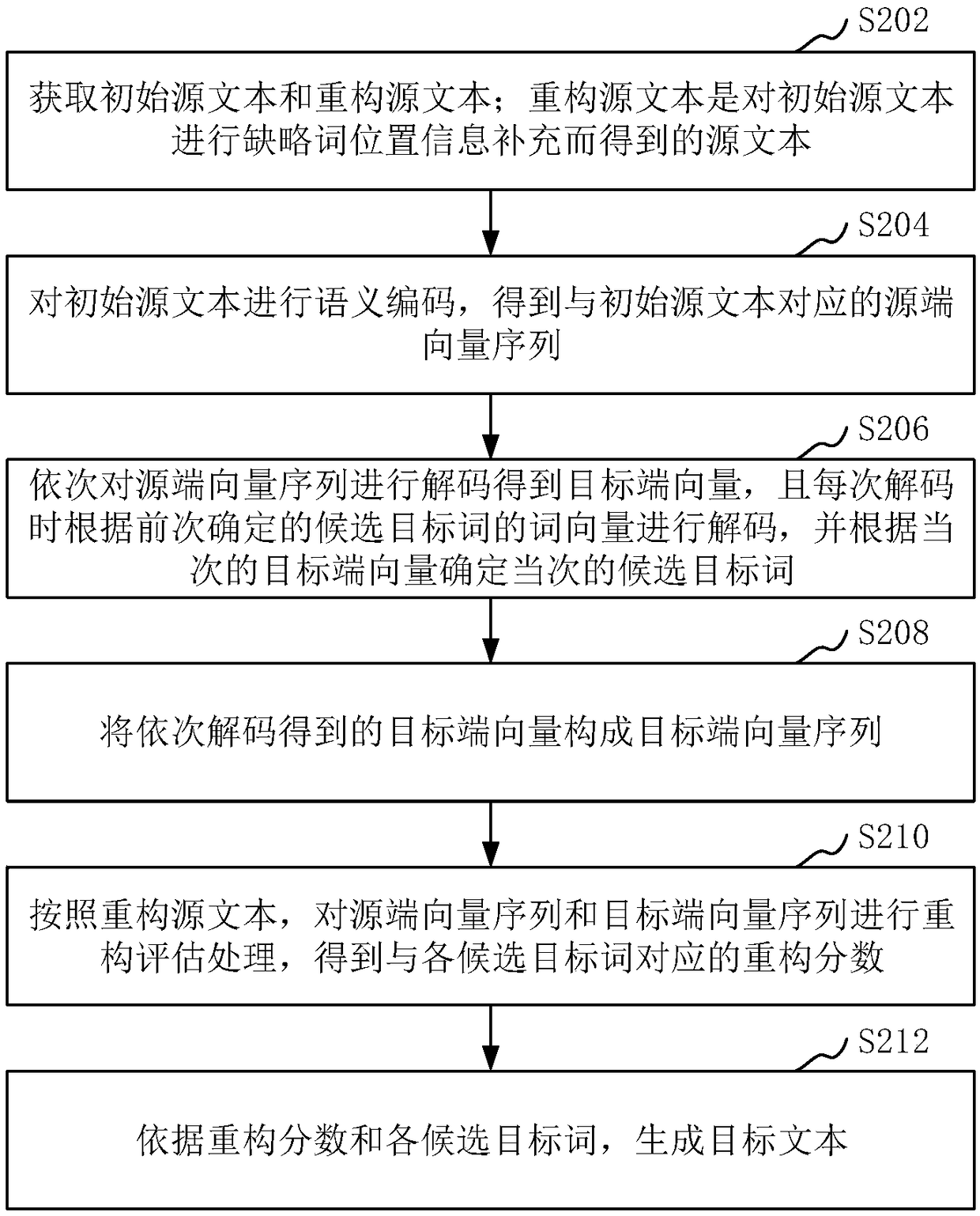

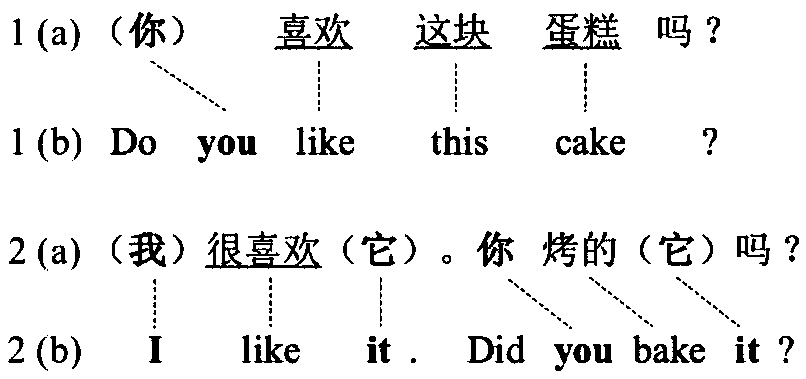

Text translation method, device, storage medium and computer device

Active | CN109145315A | Measuring Recall | Avoid missing | Natural language translation | Semantic analysis | Semantics encoding | Source text

The invention relates to a method, device, a readable storage medium and a computer device for text translation, including steps: obtaining an initial source text and reconstructing that source text, wherein the reconstructed source text is the source text obtained by supplementing the initial source text with missing word position information; carrying out semantic coding on the initial source text to obtain a source end vector sequence corresponding to the initial source text; a target end vector being obtained by sequentially decoding that source end vector sequence, and the target end vector being decoded according to the word vector of the candidate target word determined before each decoding, and the candidate target word of the current time being determined according to the target end vector of the current time; forming a target end vector sequence by sequentially decoding the target end vectors; performing reconstruction evaluation processing on the source vector sequence and the target vector sequence according to the reconstruction source text to obtain a reconstruction score corresponding to each candidate target word; a target text being generated according to that reconstruction score and the candidate target word. The scheme provided by the present application can improve the quality of translation.

Owner:TENCENT TECH (SHENZHEN) CO LTD

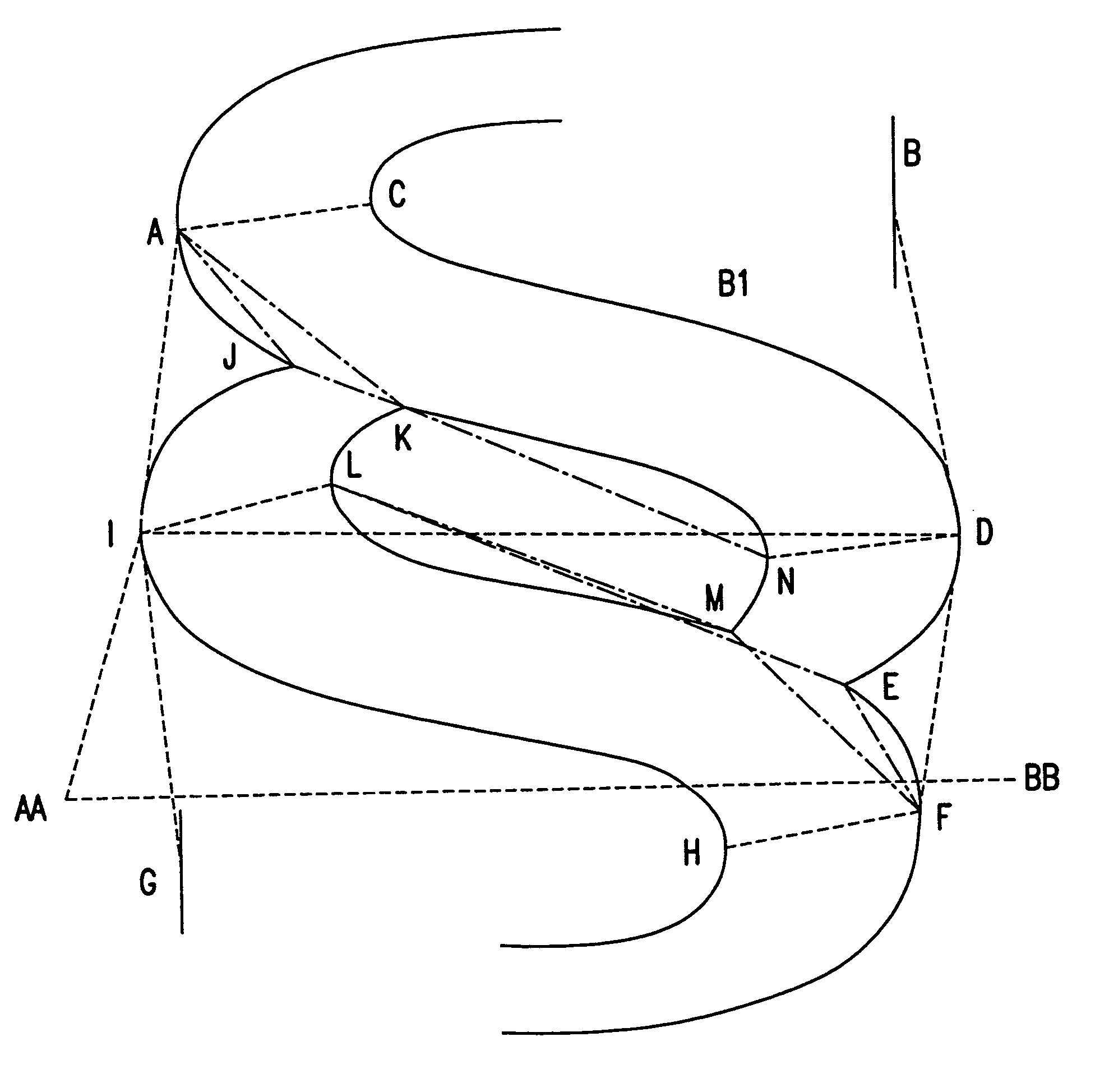

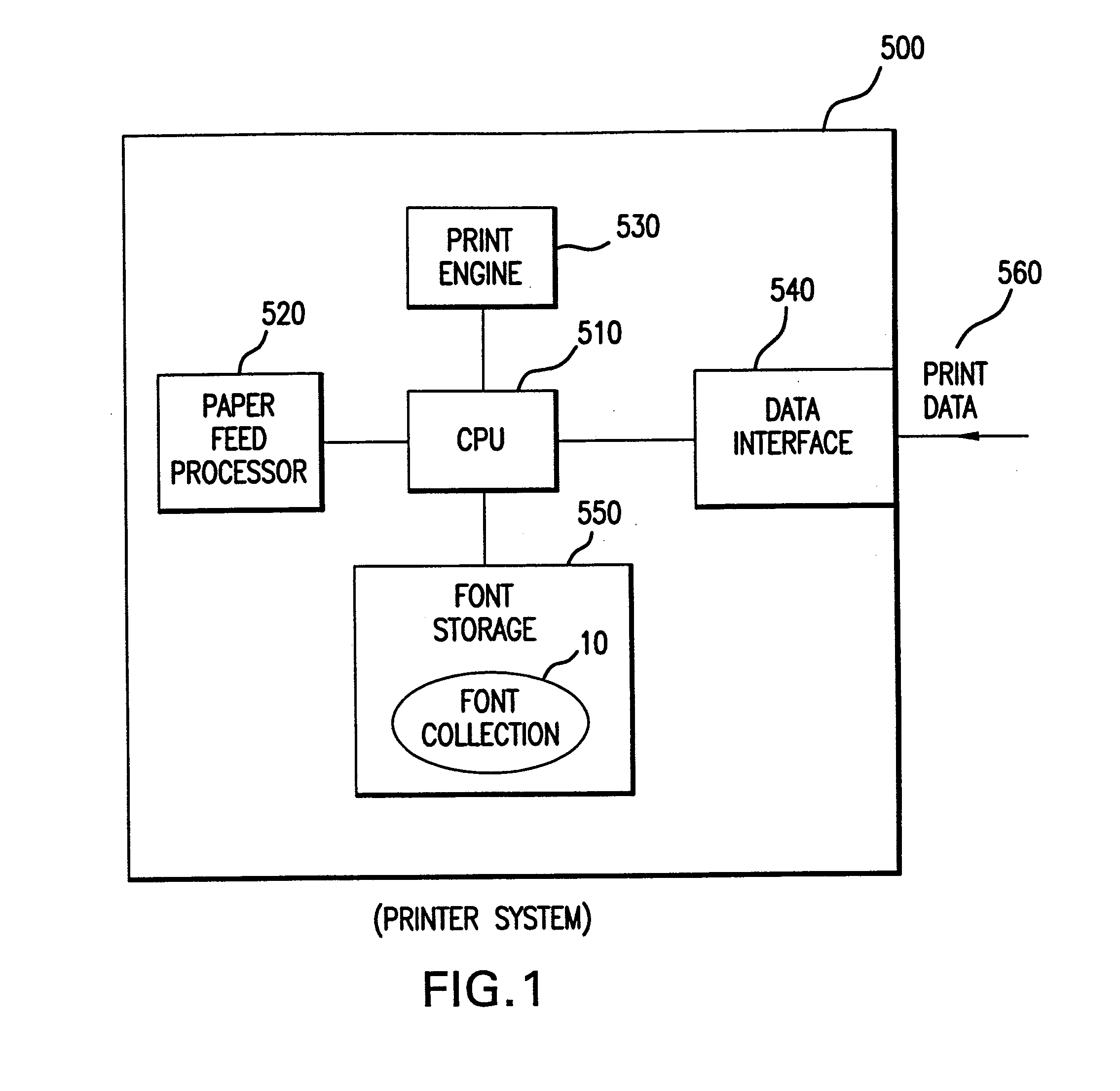

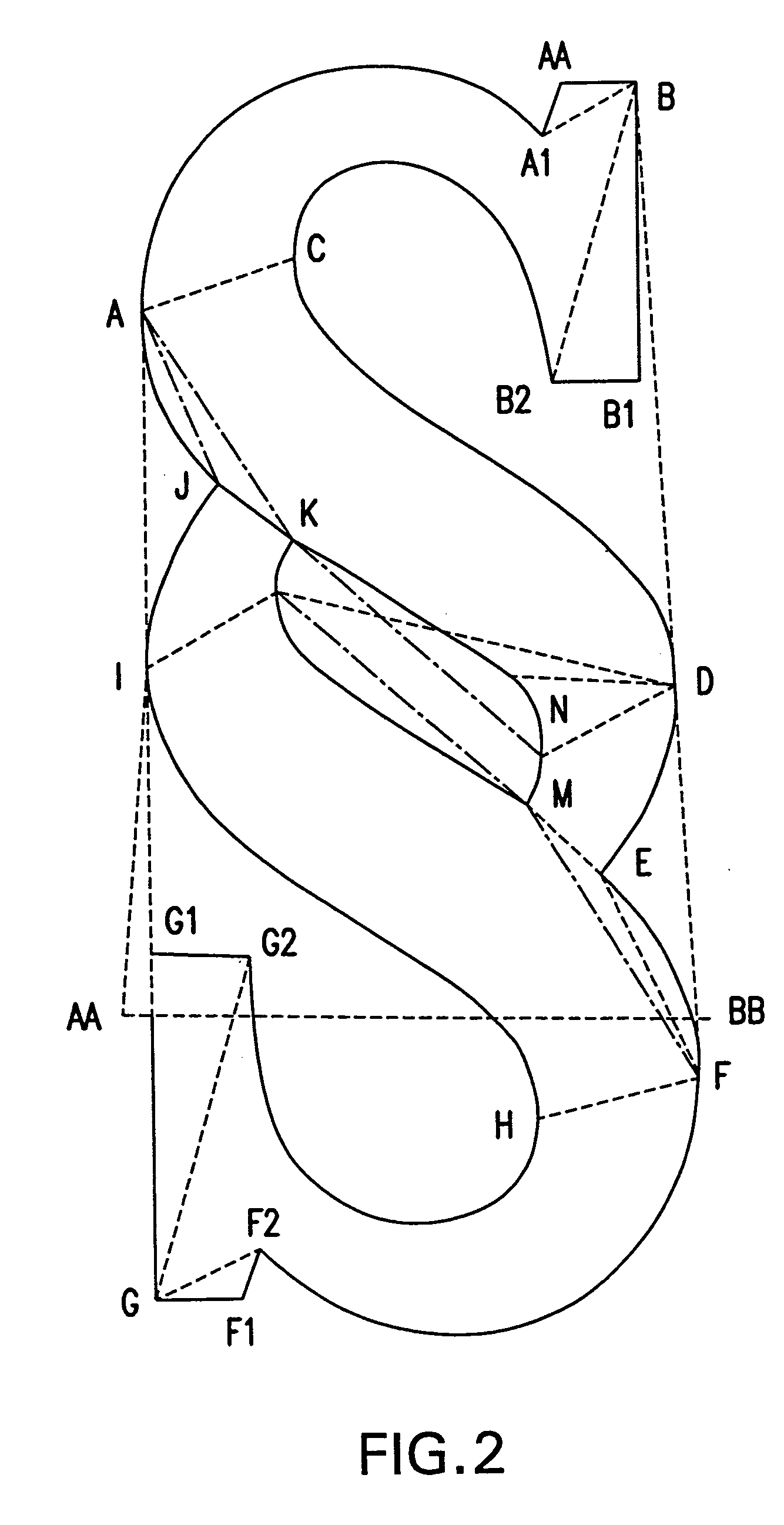

Method and apparatus for font storage reduction

The present invention is aimed at three specific data areas of font compression, each of whose size has become significant as other data areas have been compressed. The three data areas include model factoring, character level feature measurement (local dimensions) factoring, and typeface level feature measurement (global dimensions) factoring. In general, the invention in each area is an apparatus and method used in font compression to reduce redundant information, thereby allowing a reduction in data format (e.g., words to bytes and bytes to bits) resulting in an overall reduction in storage area for a given font collection.

Owner:MONOTYPE IMAGING INC

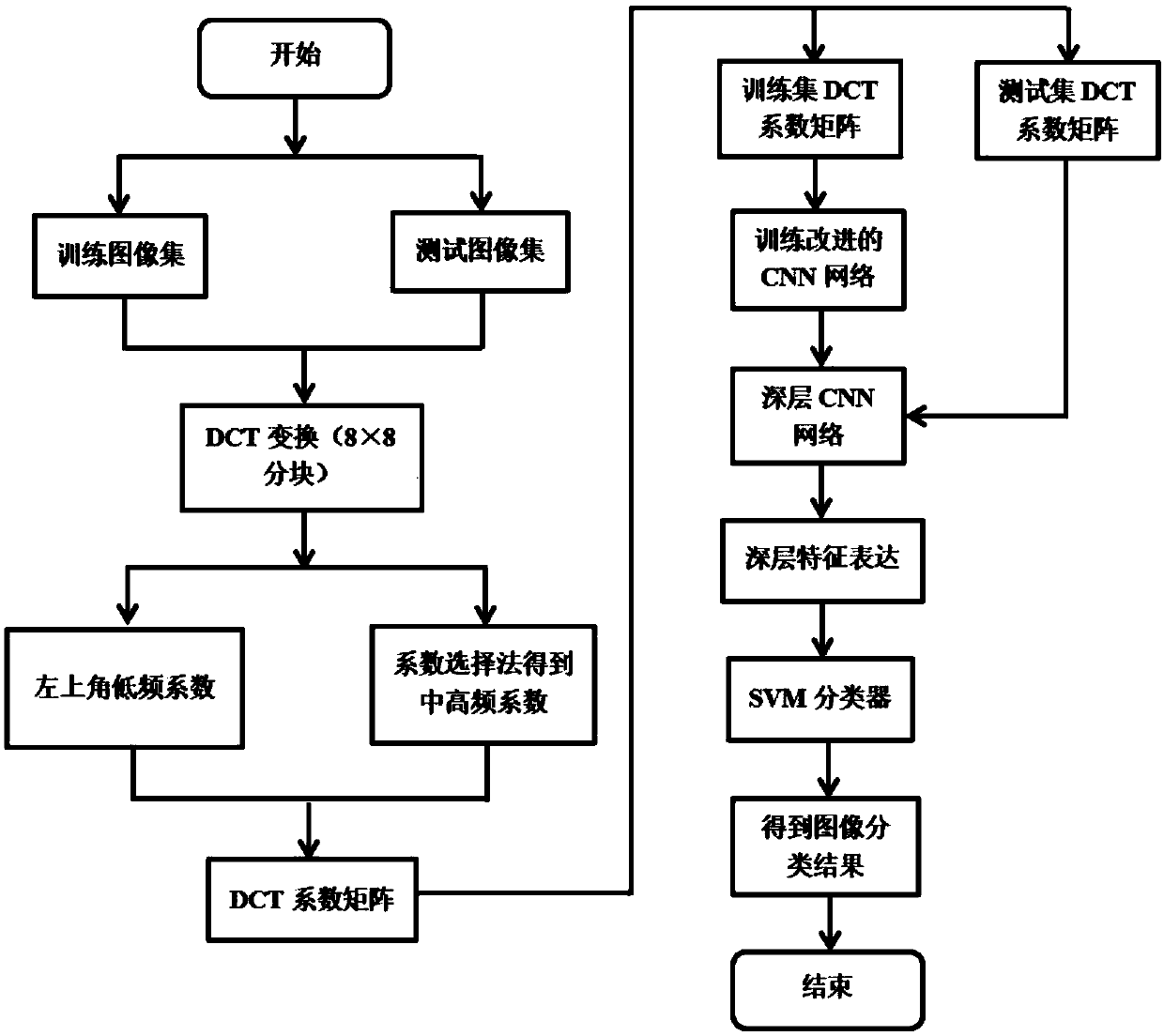

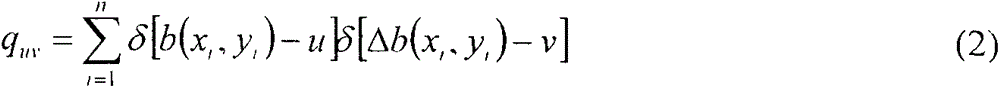

Unmanned aerial vehicle landing landform image classification method based on DCT-CNN model

Active | CN107748895A | Simple calculation | Short time | Character and pattern recognition | Neural architectures | Acquired characteristic | Classification methods

The invention discloses an unmanned aerial vehicle landing landform image classification method based on a DCT-CNN model. The method comprises the following steps of acquiring a training image set and a test image set of unmanned aerial vehicle landing landform images; carrying out DCT conversion on the unmanned aerial vehicle landing landform images and carrying out DCT coefficient screening; aiming at characteristics of complex unmanned aerial vehicle landing landform image scenes and abundant information, constructing a DCT-CNN network model; inputting a DCT coefficient of a training set into the improved DCT-CNN model so as to train, carrying out parameter updating on a network till that a loss function is converged into one small value, and then ending the training; taking a training image characteristic set as a training sample so as to train a SVM classifier; and inputting a test set, using a trained model to carry out layer-by-layer learning on a test image, and finally inputting an acquired characteristic vector into the trained SVM classifier so as to carry out classification, and acquiring a classification result. In the invention, data redundancy is reduced, training time is greatly shortened, and classification accuracy of the unmanned aerial vehicle landing landform images is effectively increased.

Owner:BEIJING UNIV OF TECH

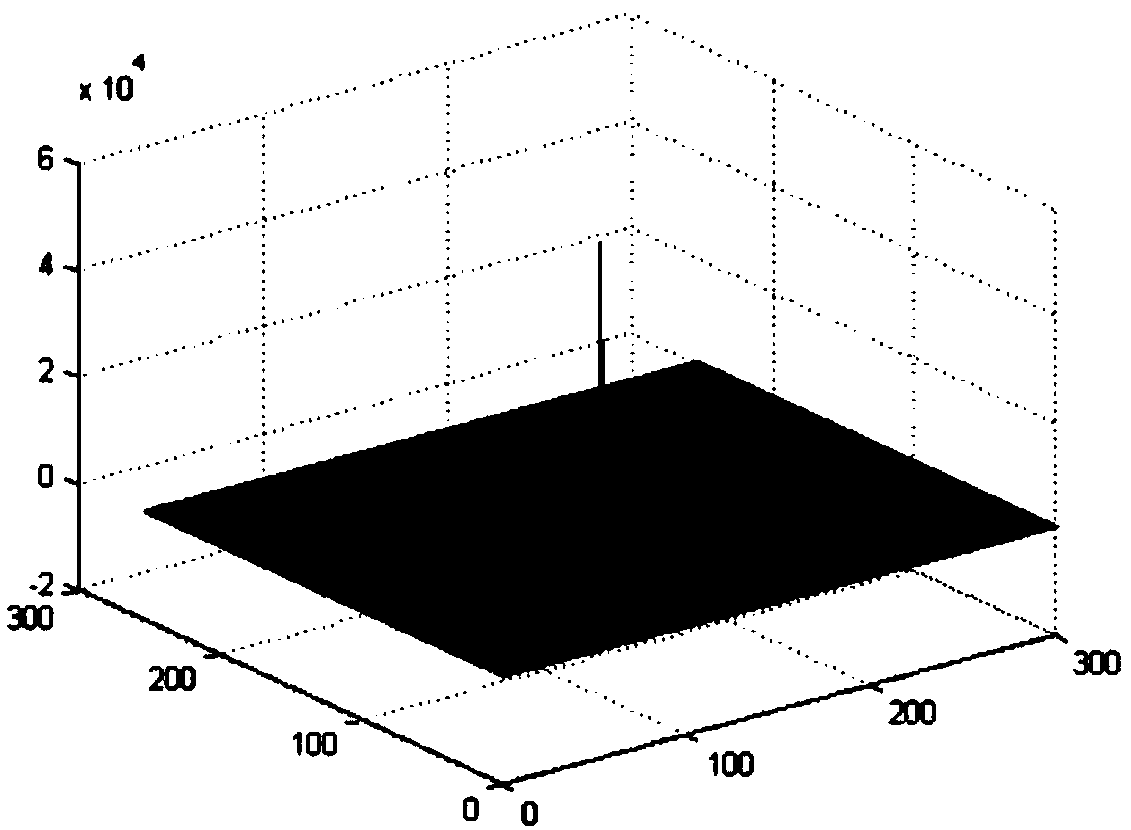

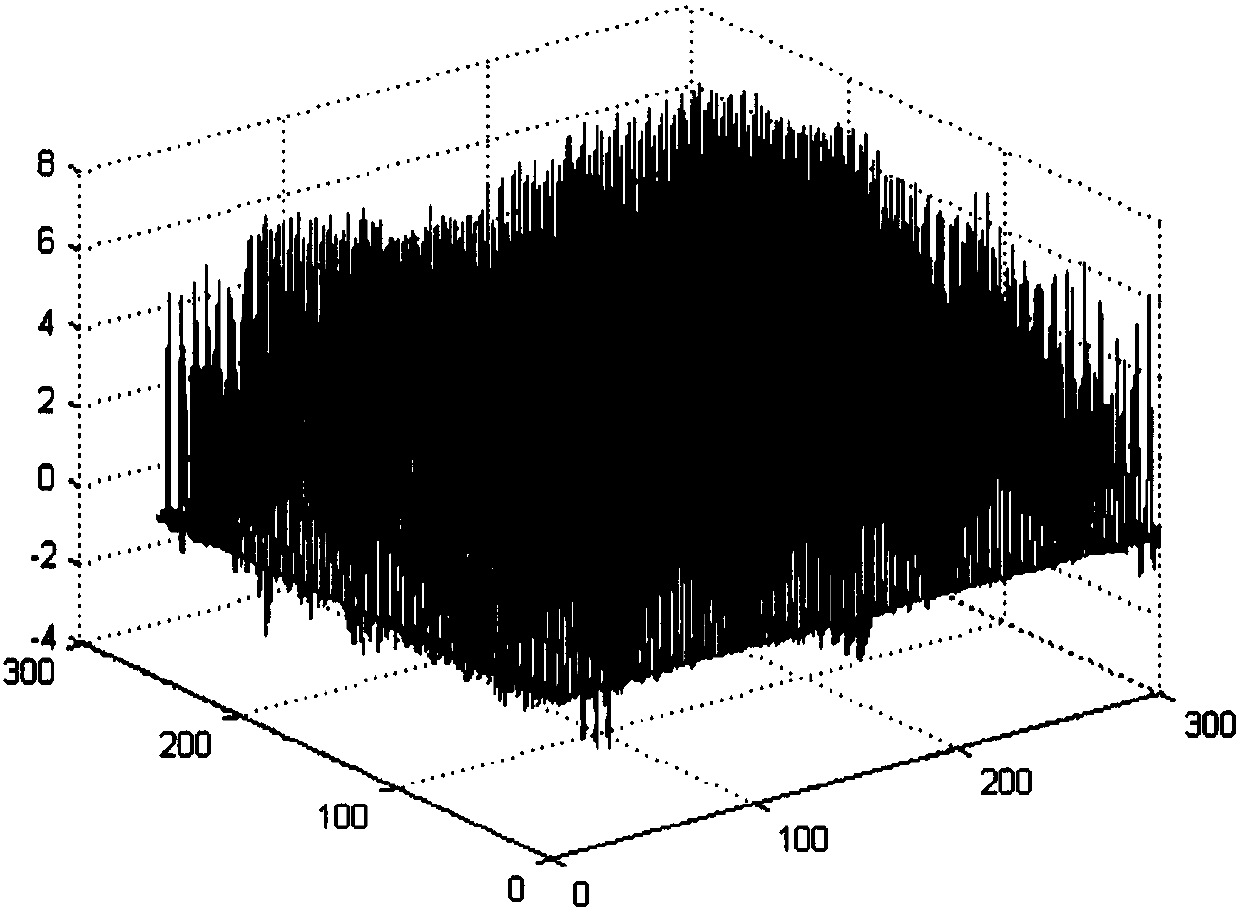

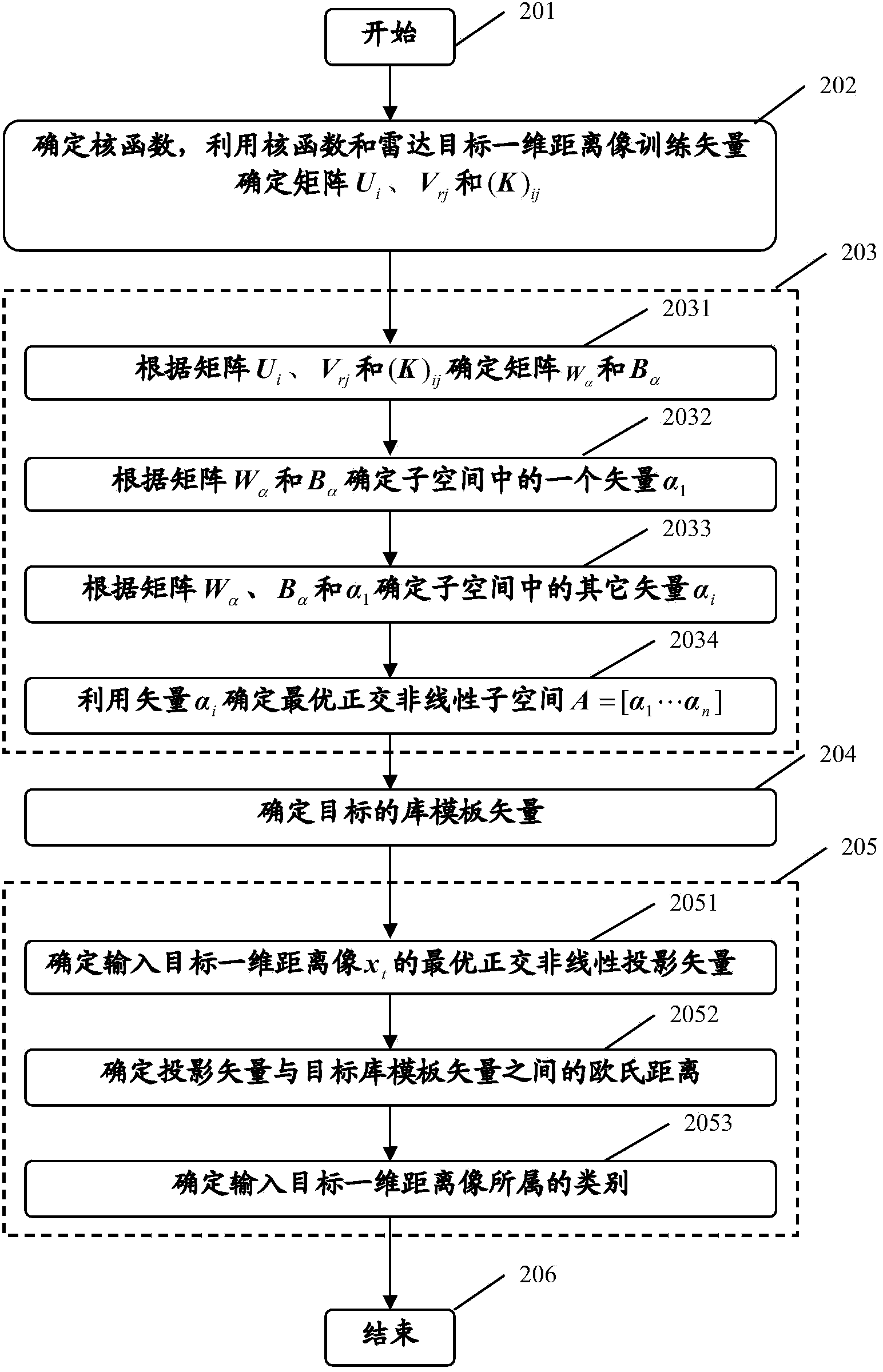

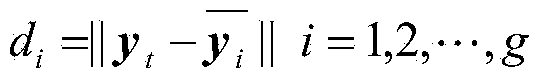

One-dimension range profile optimal orthogonal nolinear subspace identification method for radar targets

Inactive | CN103675787A | Settle the loss | Easy to identify | Wave based measurement systems | Feature extraction | Radar

The invention belongs to the technical field of radar target identification and provides a one-dimension range profile optimal orthogonal nolinear subspace identification method for radar targets. Nonlinear transformation is conducted on a one-dimension range profile of each category of targets, the one-dimension range profile is mapped to high-dimensional linear characteristic space, an optimal orthogonal nolinear transformational matrix is established in the high-dimensional linear characteristic space, characteristic extraction is conducted, a nearest neighbor rule is adopted for classification, and the category of an input target is finally determined. The method comprises the steps of utilizing a kernel function and the one-dimension range profile of the radar target to train a vector to determine matrixes of Ui, Vrj, (K)ij, W alpha and B alpha; determining a vector alpha i (i=1, 2, ..., n) in optimal orthogonal nolinear subspace, determining the transformational matrix A of the optimal orthogonal nolinear subspace, wherein the A ranges from alpha 1 to alpha n; determining a base template vector of the target; determining an optimal orthogonal nolinear projection vector of the one-dimension range profile xt of the input target; determining the Euclidean distance between the optimal orthogonal nolinear projection vector and the base template vector of the target and determining the category of the one-dimension range profile of the input target. The one-dimension range profile optimal orthogonal nolinear subspace identification method for radar targets can effectively improve target identification performance.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

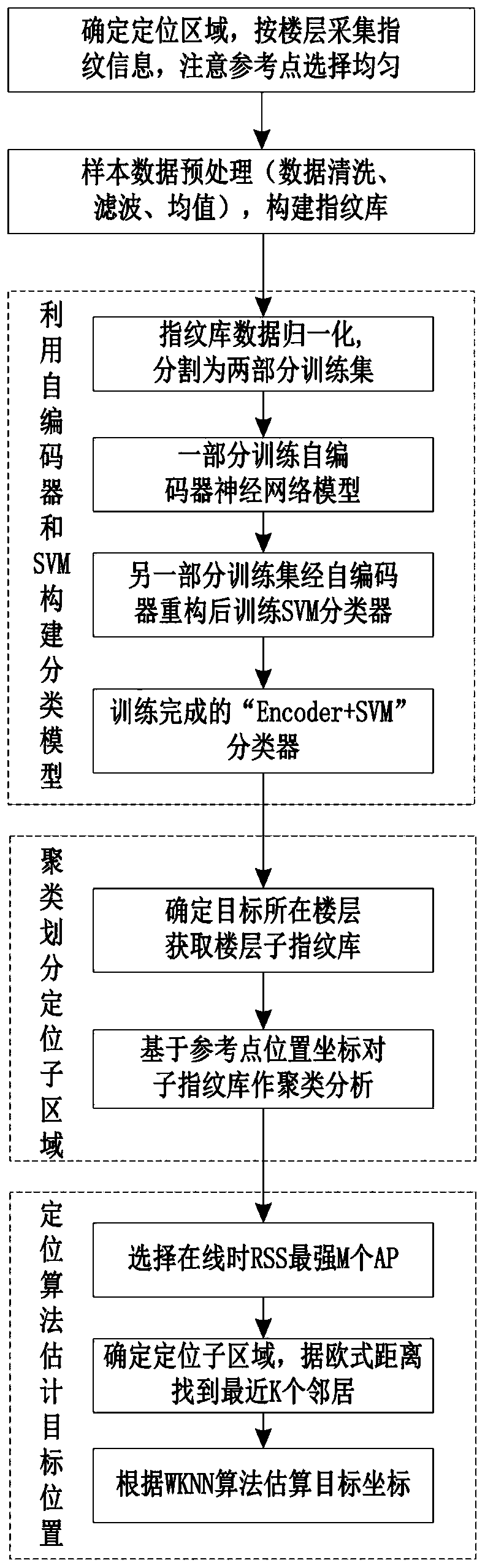

Indoor positioning method based on WiFi

Active | CN110012428A | Reduce data dimensionality | Reduce redundant information and noise interference | Particular environment based services | Location information based service | Near neighbor | Sub region

The invention relates to an indoor positioning method based on WiFi, which comprises the following steps of: in an offline stage, acquiring fingerprint vectors of N reference point positions in an indoor positioning area, and storing the fingerprint information of the N reference points into a fingerprint database DB; roughly positioning at an online stage, namely determining a target floor; utilizing the K-means algorithm to carry out clustering analysis on the sub-fingerprint libraries DBjk of the corresponding floors, and further dividing positioning sub-regions; in the real-time positioning stage, firstly, carrying out AP selection, and then using a KNN classification algorithm for determining a sub-region where a target is located; and finally, finding out K nearest neighbors, and estimating the position (x, y) of the target in a weighted average mode. According to the method, for a large-range indoor positioning scene, the intensity information of all APs is reserved; for indoor floor positioning, an SVM classifier is used, an encoder is added to a classifier model, the data dimension is reduced through introduction of the encoder, redundant information and noise interference are effectively reduced, and the classification precision is improved.

Owner:HEFEI UNIV OF TECH
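
The final step of the WiFi positioning abstract above, finding the K nearest neighbors and estimating (x, y) by weighted average, can be sketched in Python as follows. The inverse-distance weighting, the Euclidean distance in signal space, and the names `wknn_position`, `fingerprints`, and `eps` are assumptions; the abstract does not state which weights are used. Floor classification, clustering, and AP selection are not shown.

```python
import math

def wknn_position(fingerprints, observed, k=3, eps=1e-6):
    """Estimate (x, y) from the k closest reference fingerprints.

    fingerprints: list of ((x, y), [rssi per AP]) reference points.
    observed:     RSSI vector measured at the unknown position.
    Weights are inverse signal-space distances (assumed weighting scheme);
    eps avoids division by zero on an exact match.
    """
    nearest = sorted(
        ((math.dist(vec, observed), pos) for pos, vec in fingerprints),
        key=lambda item: item[0],
    )[:k]
    weights = [1.0 / (d + eps) for d, _ in nearest]
    total = sum(weights)
    x = sum(w * pos[0] for w, (_, pos) in zip(weights, nearest)) / total
    y = sum(w * pos[1] for w, (_, pos) in zip(weights, nearest)) / total
    return x, y
```

In the described method this estimate would be computed only over reference points in the sub-region chosen by the earlier KNN classification step.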

Optical flow computation method using time domain visual sensor

Inactive | CN105160703A | High precision | Improve real-time performance | 3D modelling | Time domain | Frame sequence

The present invention discloses an optical flow computation method using a time domain visual sensor, and specific implementation steps are given out. Different from a current method for performing optical flow computation by adopting "a frame sequence image (video)", the method disclosed by the present invention uses a visual information acquisition device-time domain visual sensor to perform optical flow computation of a field of view. Furthermore, because each pixel autonomously performs change detection and asynchronous output, motion and change of an object in a scenario can be sensed in real time, a continuity assumption of "no change in brightness and no change in local speed" in a differential optical flow computation method is well satisfied, and the real-time property of optical flow computation is greatly improved while the precision of optical flow computation is significantly improved. Therefore, the method is very suitable for optical flow computation and subsequent tracking and speed measurement of the high-speed moving object.

Owner:TIANJIN NORMAL UNIVERSITY

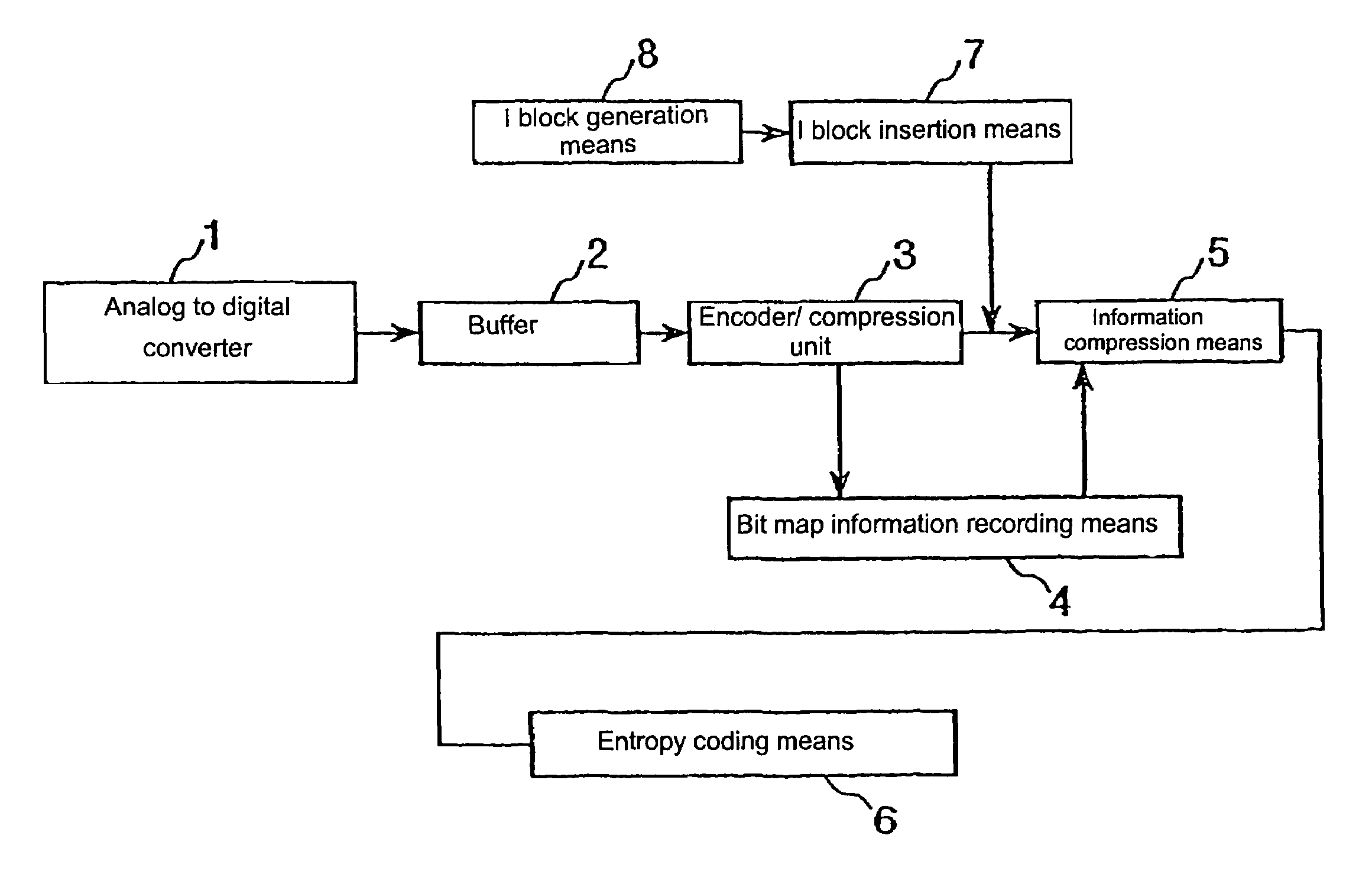

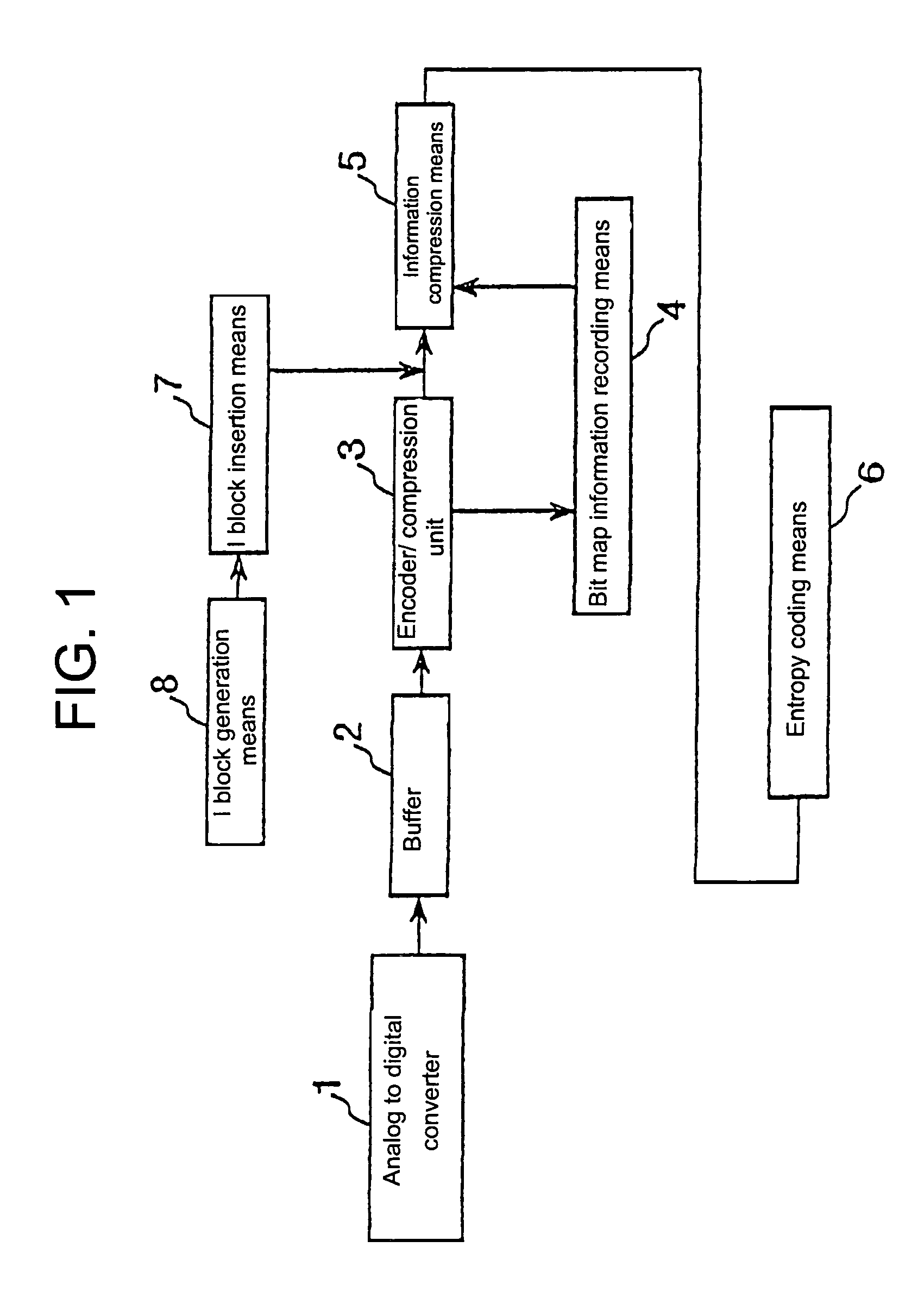

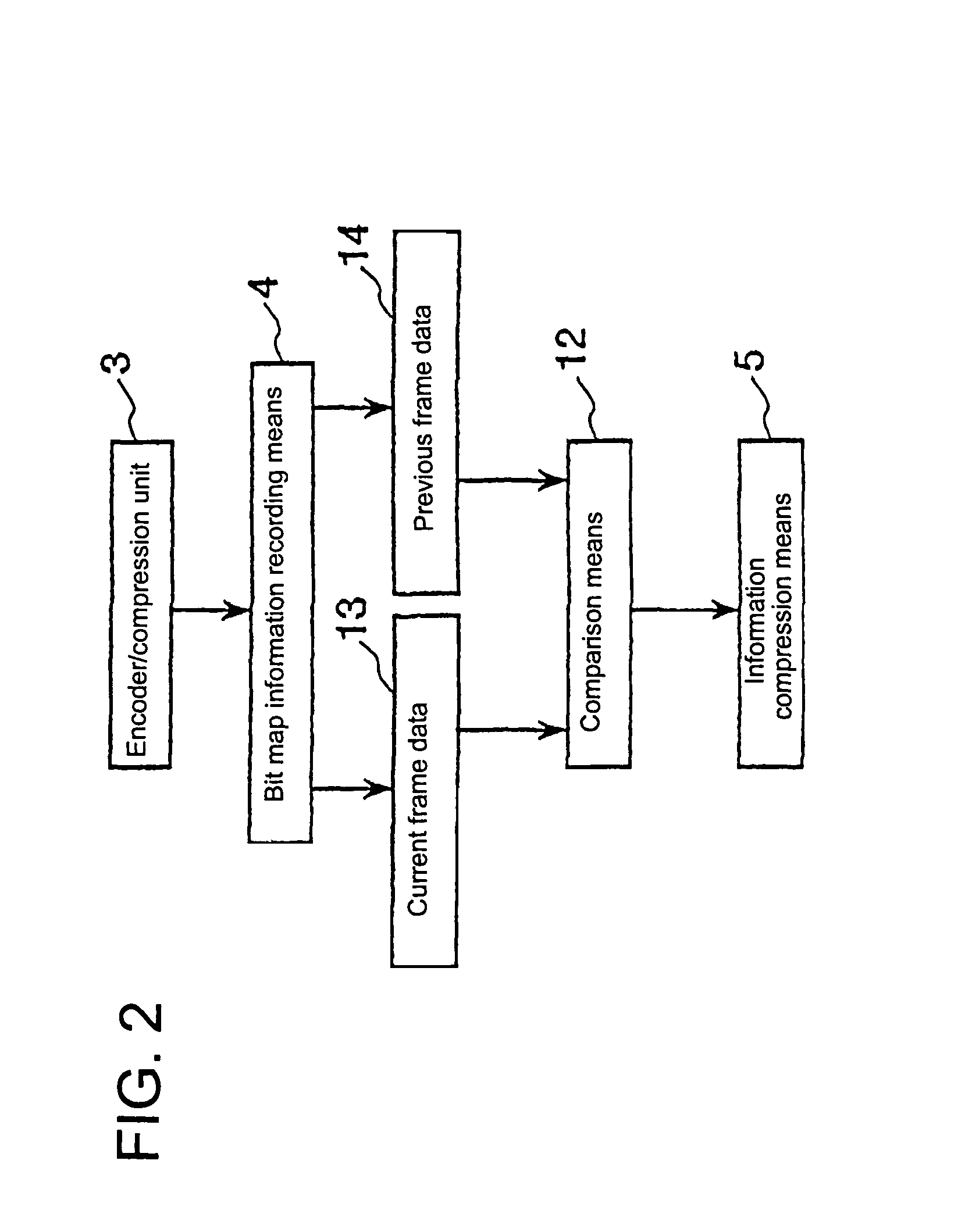

Method and system for compressing motion image information

Inactive | US7085424B2 | Improve image quality | Deterioration of image quality | Color television with pulse code modulation | Color television with bandwidth reduction | Time segment | Computer graphics (images)

A method and system for compressing motion image information, which can compress data that can be subjected to predictive encoding. An image within a frame is divided into blocks before an inter-frame compression procedure begins, and each block is approximated with a single plane represented by at least three components for pixels within each block. Pixels of the original image and of the image expanded after compression can be compared, and when a pixel exists whose difference is greater than a given parameter, intra-frame compression is performed using a smaller block size. Furthermore, when the respective I blocks, which are spatially divided, are dispersed between each frame along the temporal axis, no I block is inserted into any block within the frame that has been updated due to difference between frames being greater than parameter P during a designated period of time.

Owner:OFFICE NOA
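
The block approximation described in the abstract above, where each block is represented by a single plane with three components and is re-coded with a smaller block size when some pixel deviates too much, can be illustrated with a least-squares plane fit. The least-squares criterion, the plane form z ≈ a + b·x + c·y, and the names `fit_plane` and `max_plane_error` are assumptions; the patent may derive its three components differently.

```python
def fit_plane(block):
    """Least-squares fit of z = a + b*x + c*y to a rectangular pixel block.

    block: list of rows (each a list of pixel values), indexed block[y][x].
    Returns the three plane components (a, b, c).
    """
    h, w = len(block), len(block[0])
    xm, ym = (w - 1) / 2, (h - 1) / 2            # grid centre
    zm = sum(sum(row) for row in block) / (h * w)
    # On a full regular grid the centred x and y terms are orthogonal,
    # so the two slopes can be solved independently.
    sxx = sum((x - xm) ** 2 for x in range(w)) * h
    syy = sum((y - ym) ** 2 for y in range(h)) * w
    sxz = sum((x - xm) * block[y][x] for y in range(h) for x in range(w))
    syz = sum((y - ym) * block[y][x] for y in range(h) for x in range(w))
    b = sxz / sxx if sxx else 0.0
    c = syz / syy if syy else 0.0
    a = zm - b * xm - c * ym
    return a, b, c

def max_plane_error(block, plane):
    """Largest absolute pixel deviation from the fitted plane."""
    a, b, c = plane
    return max(
        abs(block[y][x] - (a + b * x + c * y))
        for y in range(len(block))
        for x in range(len(block[0]))
    )
```

An encoder following the abstract would compare `max_plane_error` against its difference parameter and fall back to a smaller block size when the error is too large.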

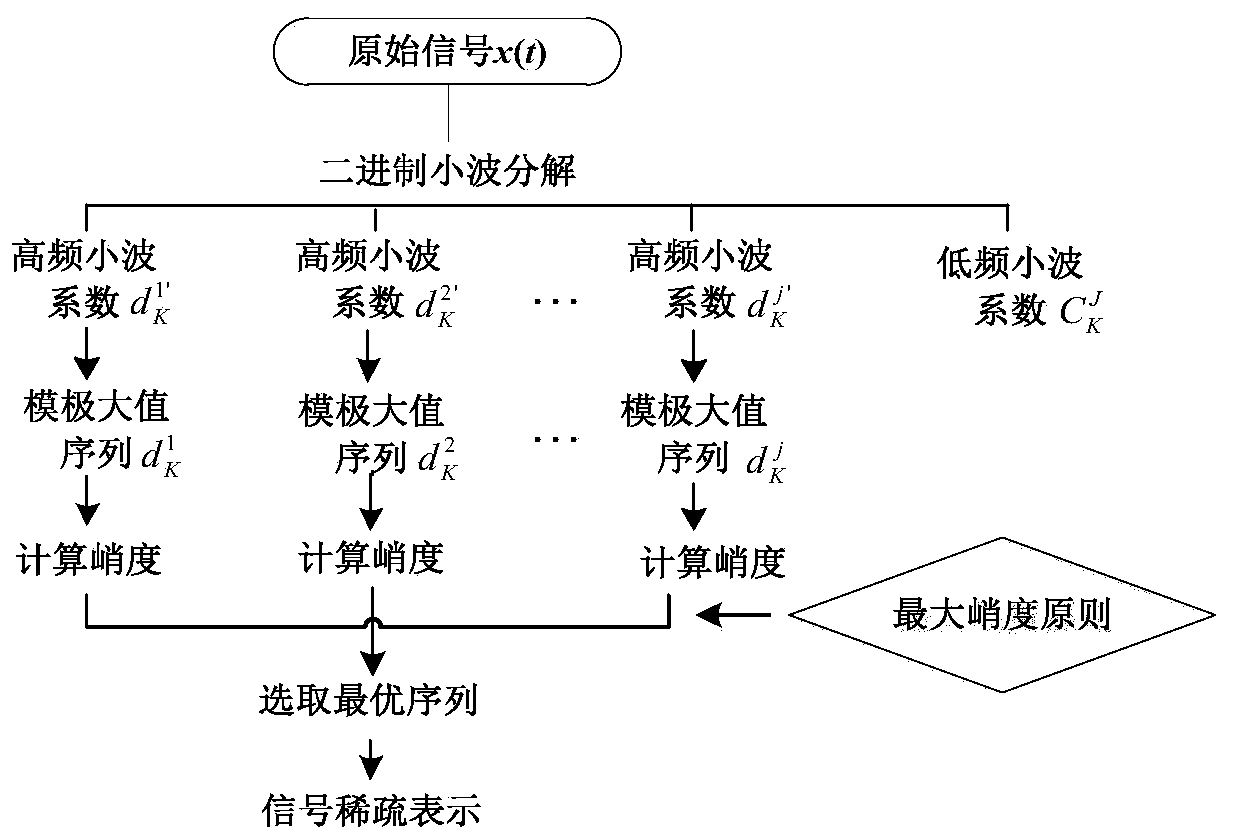

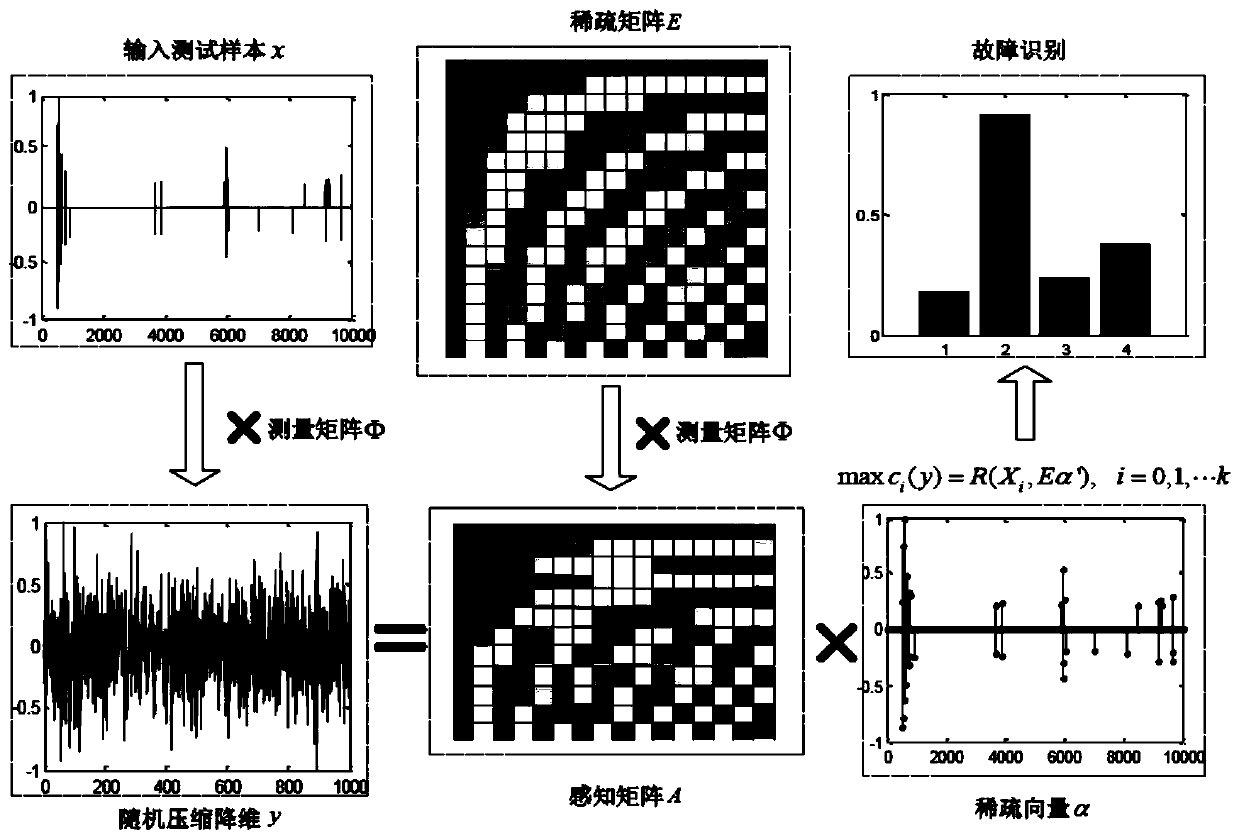

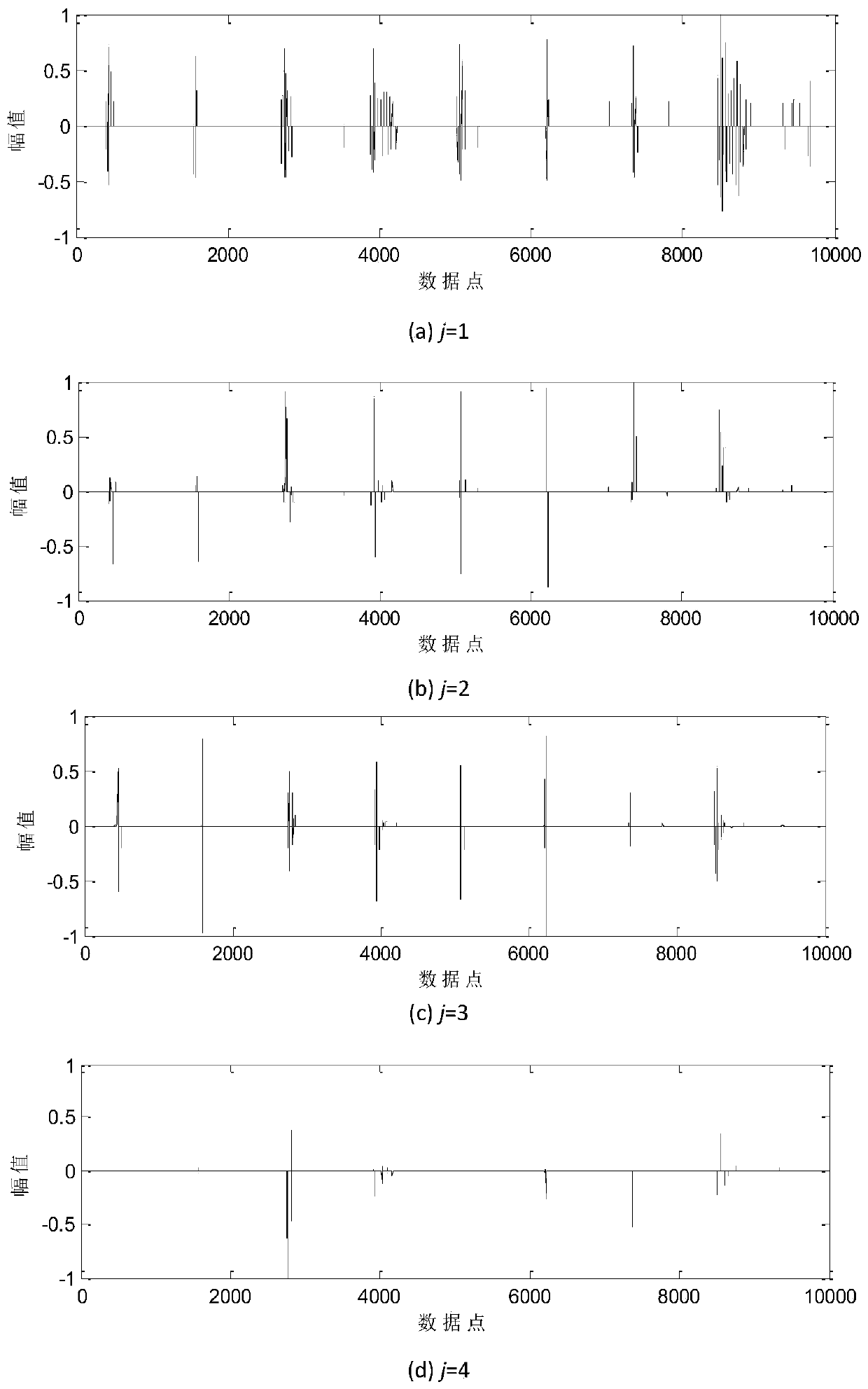

Improved self-adaptive sparse sampling fault classification method

Inactive | CN109993105A | Reduce redundant information | Reduce complexity | Machine bearings testing | Character and pattern recognition | Classification methods | Time shifting

An improved self-adaptive sparse sampling fault classification method belongs to the technical field of fault diagnosis. A traditional sparse classification method is improved. Firstly, a wavelet module maximum value and a kurtosis method are used for carrying out feature enhancement processing on signals, and on the premise that signal sparsity is guaranteed, a unit matrix is adopted to replace a redundant dictionary. Secondly, dimension reduction is carried out on data by adopting a Gaussian random measurement matrix, thereby reducing redundant information in the signal, and reserving effective and small amount of data. Then, a sparse coefficient is solved by adopting a sparsity adaptive matching pursuit (SAMP) algorithm, and the compressed signal is reconstructed; and finally, a cross correlation coefficient is adopted as a judgment basis of the category of the fault, so that an improved adaptive sparse sampling fault classification method is provided. Experimental verification proves that redundant information in signals is effectively reduced, the influence of time shift deviation on fault type judgment is avoided, meanwhile, the operation complexity is reduced, and the calculation speed and the reconstruction precision are improved.

Owner:BEIJING UNIV OF CHEM TECH
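
Two building blocks named in the abstract above, dimension reduction with a Gaussian random measurement matrix and a correlation coefficient used as the classification criterion, can be sketched as follows. This is only a partial illustration: the wavelet and kurtosis feature enhancement and the SAMP reconstruction are omitted, a zero-lag Pearson coefficient stands in for the "cross correlation coefficient", and the names `gaussian_measure` and `correlation`, the seed handling, and the 1/sqrt(m) scaling are assumptions.

```python
import math
import random

def gaussian_measure(signal, m, seed=0):
    """Compress a length-n signal to m measurements, y = Phi @ x.

    Phi has i.i.d. Gaussian entries with standard deviation 1/sqrt(m)
    (a common compressed-sensing convention, assumed here).
    """
    rng = random.Random(seed)  # fixed seed so Phi is reproducible
    scale = 1.0 / math.sqrt(m)
    phi = [[rng.gauss(0.0, scale) for _ in range(len(signal))] for _ in range(m)]
    return [sum(p * s for p, s in zip(row, signal)) for row in phi]

def correlation(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den else 0.0
```

In the described method, the reconstructed signal would be compared against reference signals for each fault category, and the category with the highest coefficient would be chosen.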

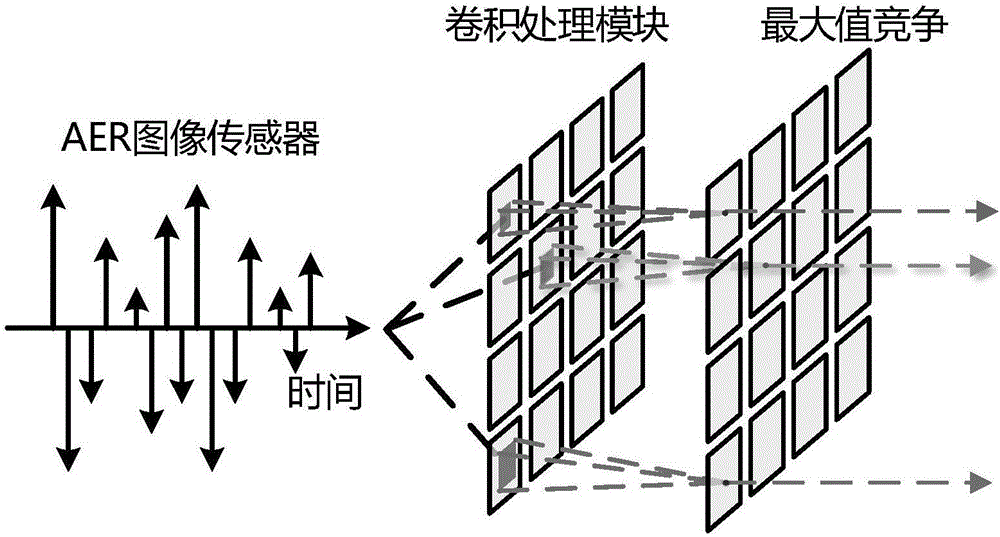

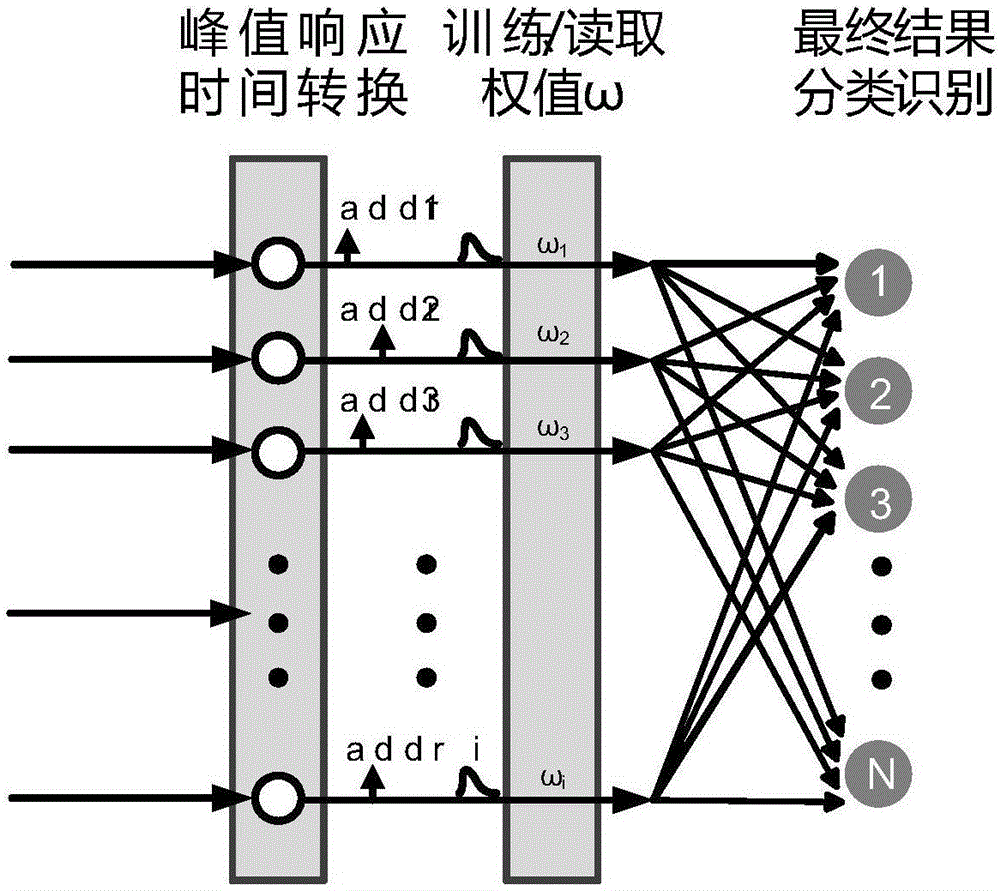

Bionic target identification system based on event driving

Inactive | CN106407990A | Reduce the amount of processed data and redundant information | Increase equivalent processing frame rate | Character and pattern recognition | Neural learning methods | Image sensor | Visual system

The present invention relates to the field of image processing technology. In order to realize real-time acquisition and processing of image information collected from an AER image sensor, an event-based processing method is always adopted in a rear-end bionic visual system processing stage to realize the target recognition function and provide target position parameters. A bionic target identification system based on event driving comprises an image sensor based on address-event representation (AER), and a rear-end bionic processing system, wherein the AER image sensor is used as an information acquisition source of moving objects, and the acquired image data is inputted in parallel to the rear-end bionic processing system. The bionic target identification system further comprises a feature extraction module for processing input image data by using a feature extraction algorithm based on event driving, and a target identification module based on event driving, wherein the target identification module employs an SNN (spiking neural network) to process the output of the feature extraction module. The bionic target identification system is mainly used for image processing.

Owner:TIANJIN UNIV

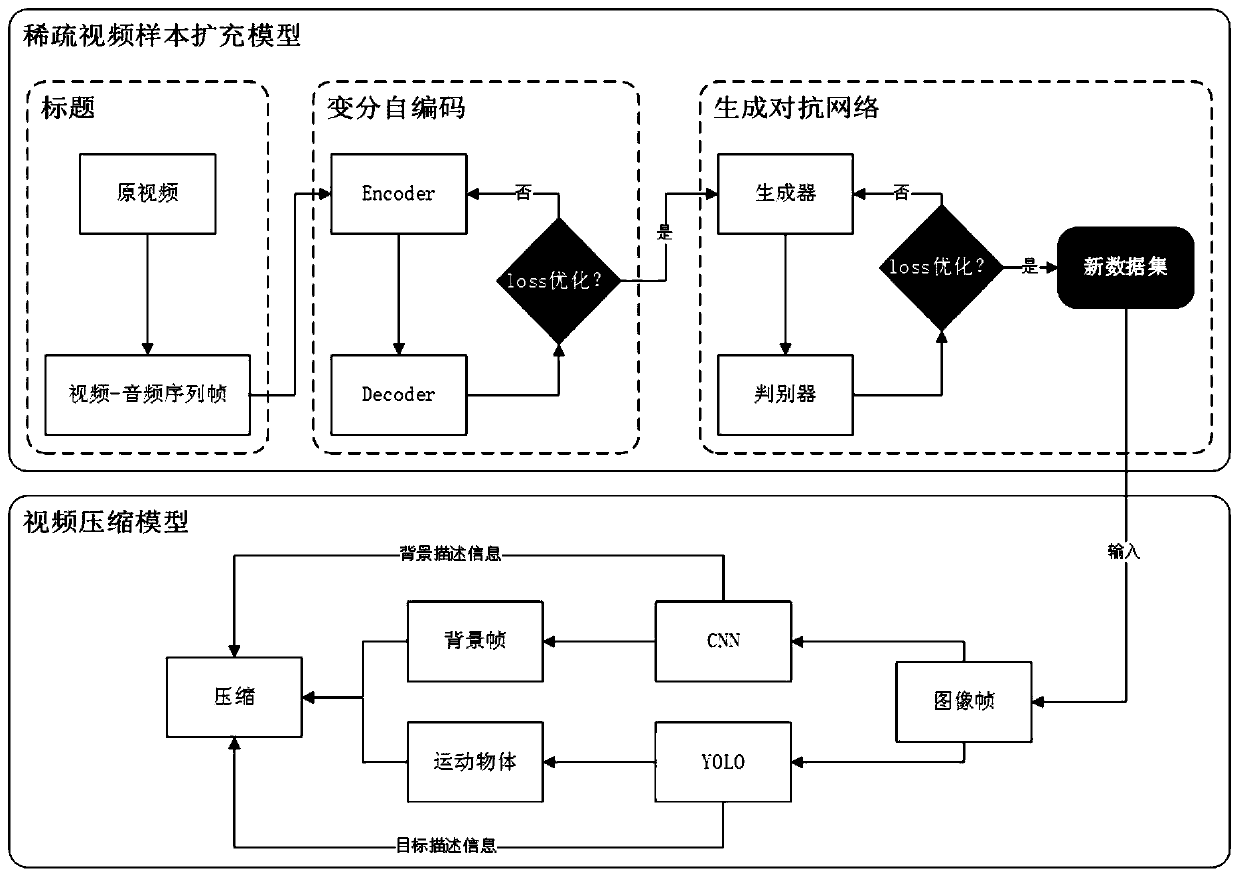

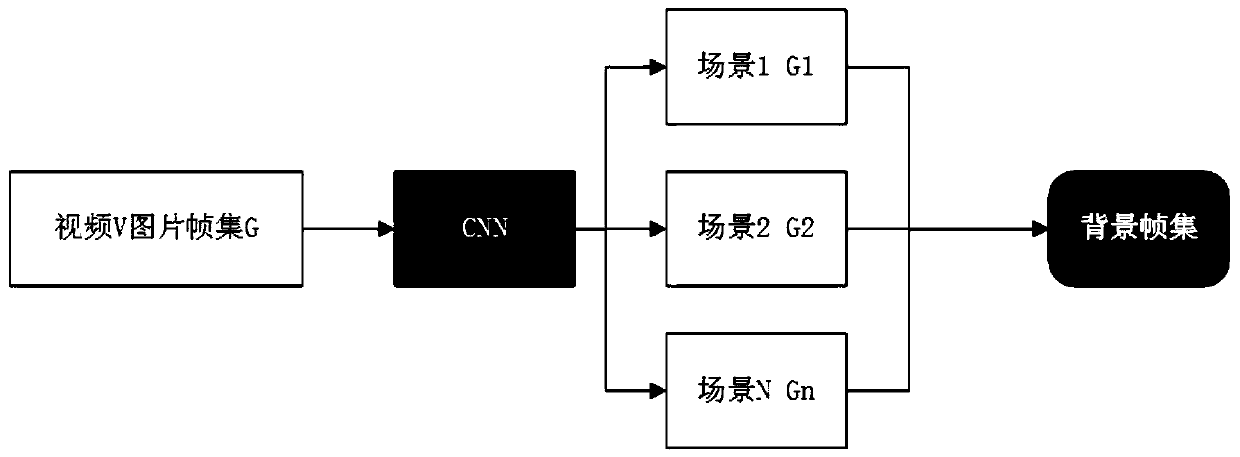

Video compression method based on sparse samples

Inactive | CN111565318A | Increase the compression ratio | Increase transfer rate | Character and pattern recognition | Closed circuit television systems | Pattern recognition | Noise (video)

The invention relates to a video compression method based on sparse samples, and belongs to the technical field of video compression. The method comprises the steps: S1, data preprocessing; S2, firstly, learning each frame of a video in a data set by utilizing a variational auto-encoder through a video generation method combining the variational auto-encoder and a generative adversarial network, constructing a hidden space with good continuity, and enabling each point in the hidden space to correspond to one frame in the video; inputting the noise and the text into a generator of the generative adversarial network, enabling the generator to generate a plurality of associated points in the latent variable space, and finally generating continuous images through a decoder of the variational auto-encoder; S3, inputting the generated continuous images into a video compression model, screening background frames through a CNN network, and then identifying a target in each frame of image by using a YOLO neural network. According to the invention, the video compression efficiency can be improved, and the network transmission delay and the consumption of local resources are reduced.

Owner:CHONGQING INST OF GREEN & INTELLIGENT TECH CHINESE ACADEMY OF SCI

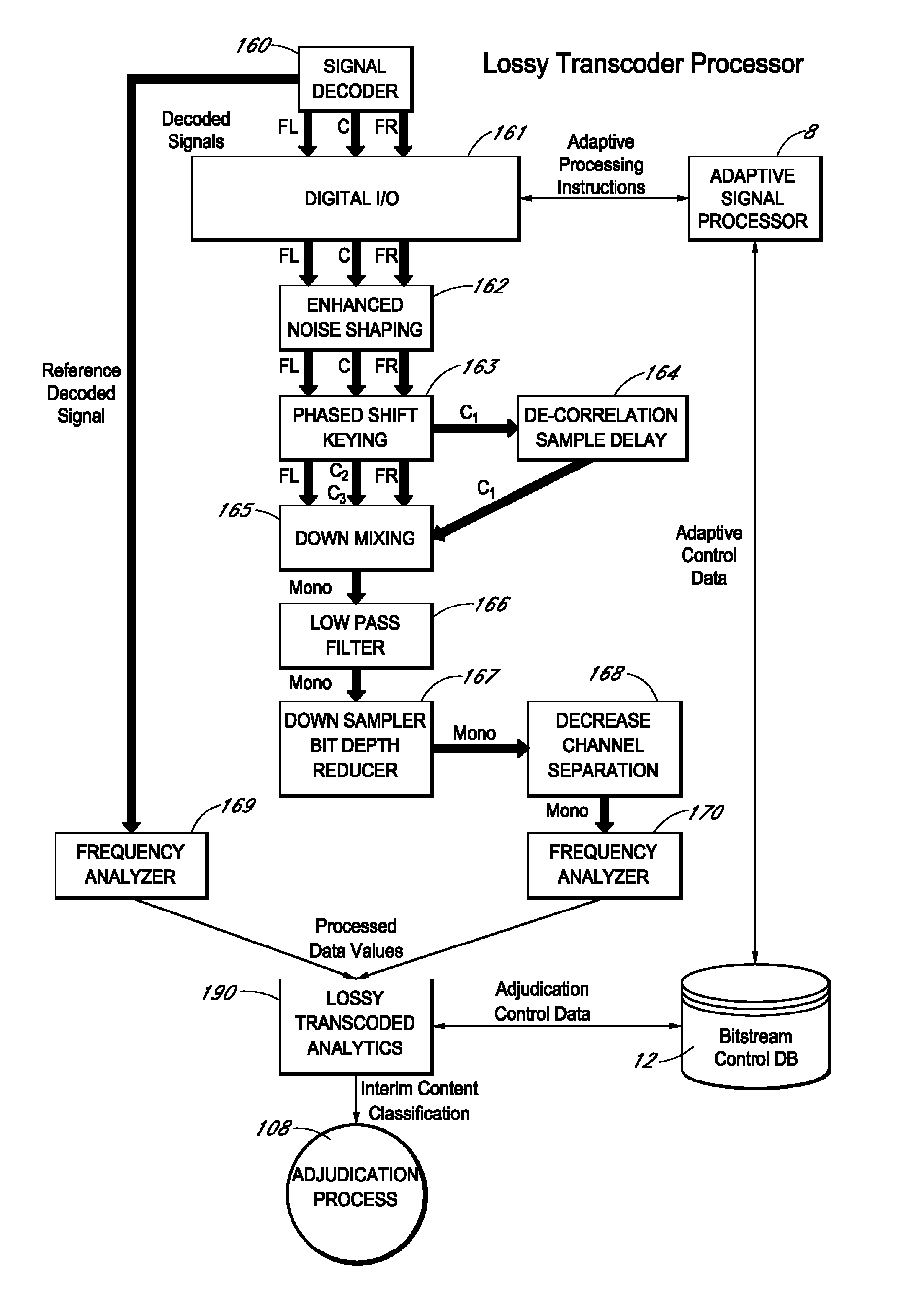

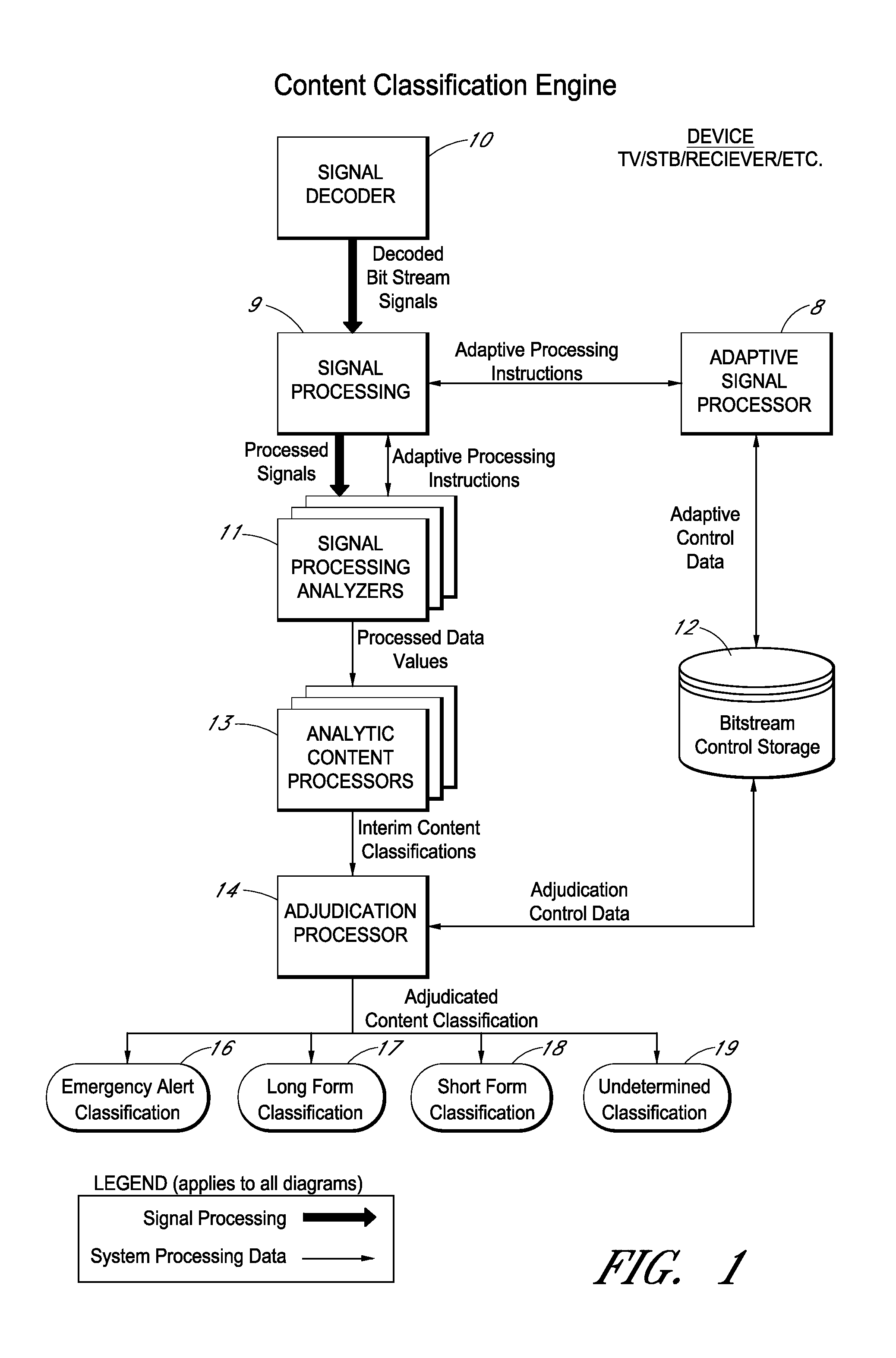

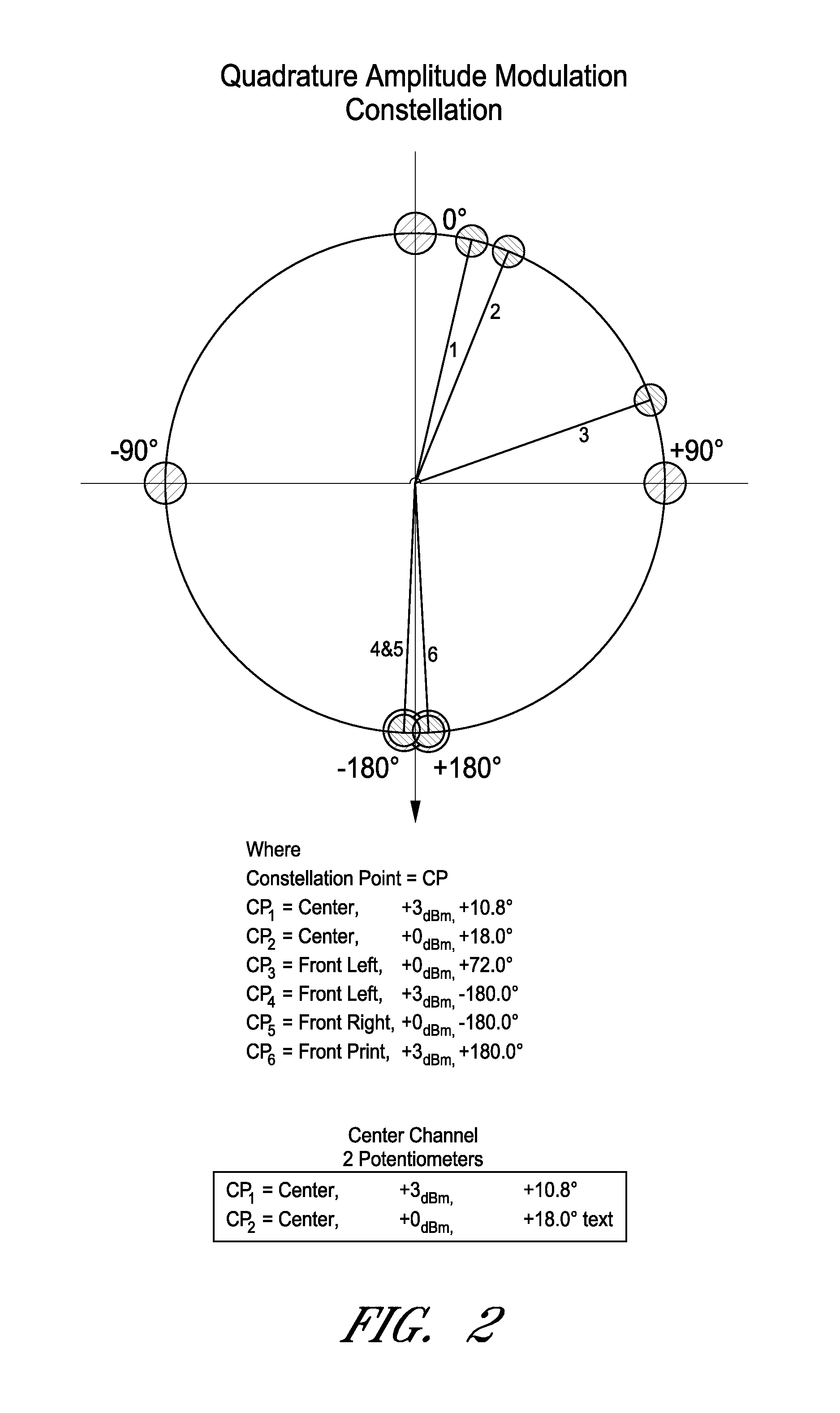

Methods and systems for identifying content types

Inactive | US8825188B2 | Increase the difference | Reduce redundant information | Broadcast information characterisation | Color television signals processing | Computer hardware | Frequency spectrum

Systems and methods are provided that are configured to reveal differences in content, such as commercial and program content. An input port may be configured to receive a first plurality of bit stream audio channels. A circuit may optionally be configured to enhance artifacting, noise, and/or bit rate errors and may optionally reduce redundant signal information. A signal processor circuit may be configured to transform the first plurality of bit stream audio channels into a frequency domain. A spectrum analysis system may be configured to compare properties of the first plurality of bit stream audio channels to one or more reference signals. An identification circuit may be configured to identify different content types included in the first plurality of bit stream audio channels.

Owner:CYBER RESONANCE CORP

Multi-angle adaptive intra-frame prediction-based point cloud attribute compression method

Active | US10939123B2 | Improve compression performance | Reduce redundant information | Digital video signal modification | Coding block | Point cloud

Disclosed is a multi-angle adaptive intra-frame prediction-based point cloud attribute compression method. A novel block structure-based intra-frame prediction scheme is provided for point cloud attribute information, where six prediction modes are provided to reduce information redundancy among different coding blocks and improve the point cloud attribute compression performance. The method comprises: (1) inputting a point cloud; (2) performing point cloud attribute color space conversion; (3) dividing a point cloud by using a K-dimensional (KD) tree to obtain coding blocks; (4) performing block structure-based multi-angle adaptive intra-frame prediction; (5) performing intra-frame prediction mode decision; and (6) performing conversion, uniform quantization, and entropy encoding.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL

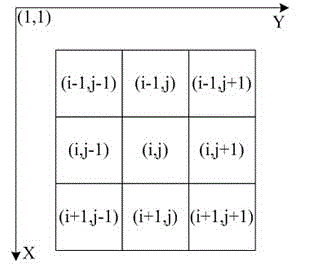

Improved Camshift target tracking method

Inactive | CN104463914A | Improve stability | Improve accuracy | Image enhancement | Image analysis | Mean-shift | Histogram

The invention belongs to the field of picture processing and target tracking, and particularly provides an improved Camshift target tracking method. A target model is set up through chroma-differential two-dimensional union features. The maximum differential value of the chroma of eight neighborhoods of each pixel is used as the differential value of the pixel and used for describing relative position information of the pixel and detailed information of a picture. According to a chroma-differential two-dimensional union histogram of the target model, a chroma-differential two-dimensional feature union probability distribution diagram of the tracked picture is obtained through back projection. Target locating is achieved in a tracking window through the mean shift method. Excessive adjustment of target sizes and directions is limited. The method has higher interference resistance under a complex background condition, and target tracking stability can be effectively improved. The method is suitable for a moving target tracking system.

Owner:TIANJIN POLYTECHNIC UNIV
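
The differential feature in the Camshift abstract above, where the maximum chroma difference over a pixel's eight neighbors is used as that pixel's differential value, can be computed with a short Python sketch. The border handling (neighbors outside the image are ignored), the use of a plain absolute difference without hue wrap-around, and the name `max_neighbor_diff` are assumptions not stated in the abstract.

```python
def max_neighbor_diff(chroma):
    """Per-pixel maximum absolute chroma difference over the 8-neighborhood.

    chroma: 2D list of chroma (hue) values, indexed chroma[y][x].
    Neighbors falling outside the image are skipped (assumed border rule).
    """
    h, w = len(chroma), len(chroma[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            best = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if (dy or dx) and 0 <= ny < h and 0 <= nx < w:
                        best = max(best, abs(chroma[y][x] - chroma[ny][nx]))
            out[y][x] = best
    return out
```

Pairing each pixel's chroma with this differential value gives the two axes of the chroma-differential joint histogram that the abstract uses as the target model.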

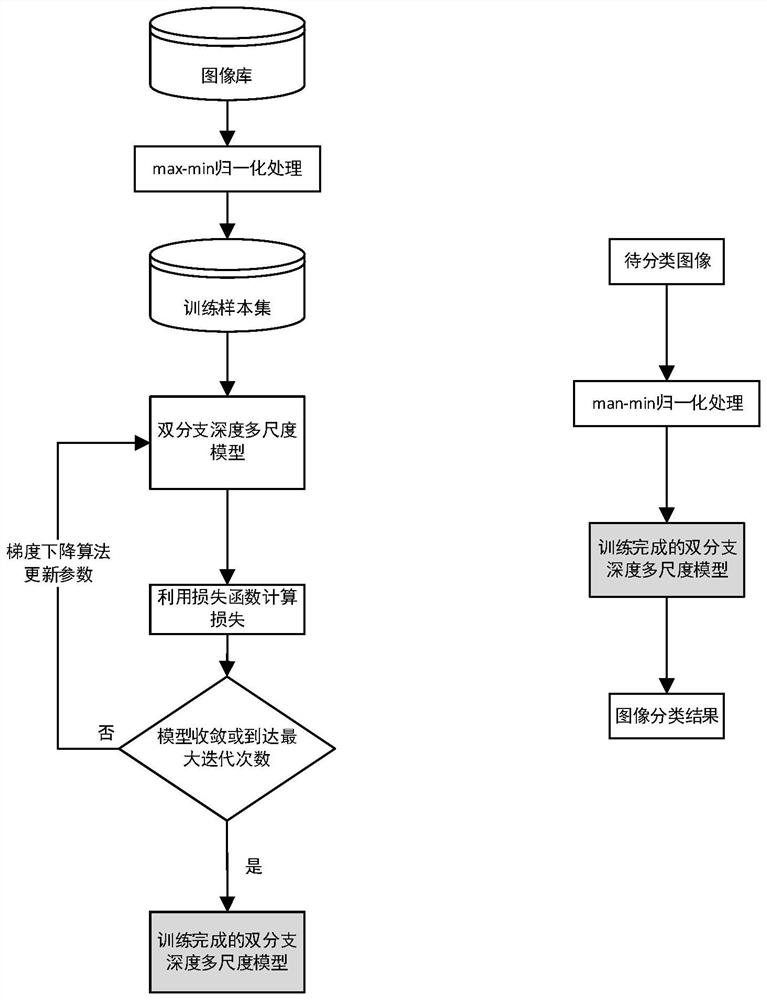

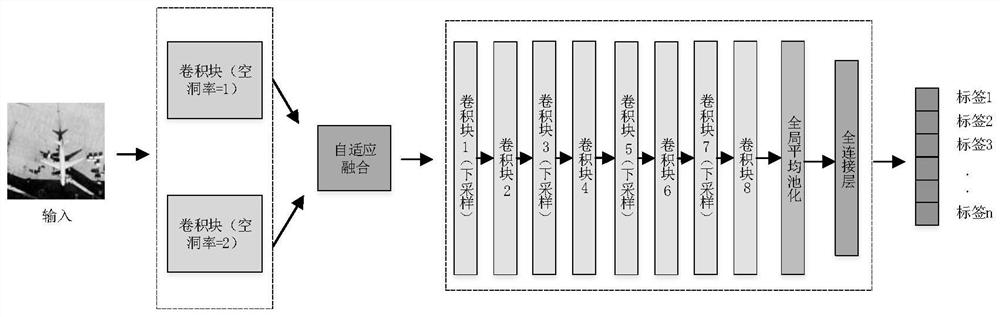

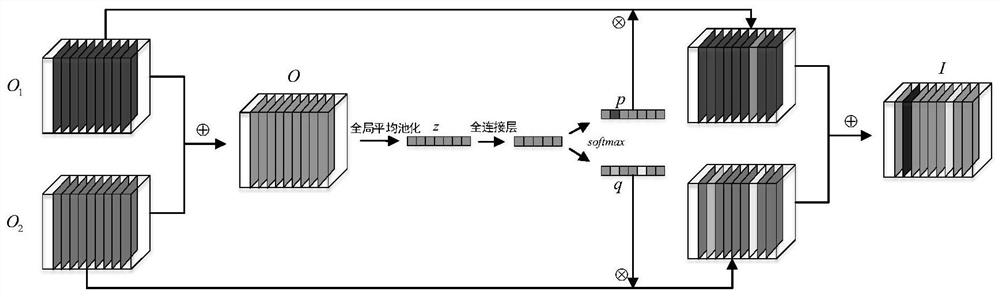

Remote sensing image classification method, storage medium and computing equipment

Pending | CN112101190A | Add multi-scale features | Guaranteed validity | Scene recognition | Neural architectures | Self adaptive | Network model

The invention discloses a remote sensing image classification method, a storage medium and computing equipment, and the method comprises the steps: building a remote sensing image set, carrying out the standardization of the remote sensing image set, and obtaining a training sample set and a test sample set; setting a multi-scale feature extraction module, and generating feature maps of two scales by setting different hole convolution in two parallel convolution modules; setting a self-adaptive feature fusion module, wherein the self-adaptive feature fusion module can adaptively select useful information in the two generated features of different scales and perform fusion; building a whole neural network model; performing iterative training on the whole neural network model by using the training sample set; and randomly selecting a sample from the test samples as a position category sample, and classifying unknown samples needing to be predicted by using the trained neural network. According to the method, redundant information is reduced, multi-scale features are selected more flexibly, the stability of the network is improved, and then the classification capacity of the network model is improved.

Owner:XIDIAN UNIV
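To make the two-scale idea in the abstract above concrete, here is a minimal one-dimensional sketch of a hole (dilated) convolution run at two dilation rates, followed by a softmax-weighted blend of the two branches. The fusion rule, the function names, and the scalar logits are assumptions for illustration only; the abstract does not specify how the adaptive fusion module computes its weights.

```python
import math


def dilated_conv1d(signal, kernel, dilation):
    """Same-length 1-D cross-correlation with zero padding.

    Kernel taps are spaced `dilation` samples apart, so a 3-tap kernel
    covers 1 + 2 * dilation input samples.
    """
    half = (len(kernel) // 2) * dilation
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i - half + k * dilation
            if 0 <= j < len(signal):  # positions outside the signal count as zero
                acc += w * signal[j]
        out.append(acc)
    return out


def fuse(branch_a, branch_b, logit_a, logit_b):
    """Blend two equal-length feature maps with softmax weights.

    The logits stand in for learned parameters (an assumption of this sketch).
    """
    ea, eb = math.exp(logit_a), math.exp(logit_b)
    wa, wb = ea / (ea + eb), eb / (ea + eb)
    return [wa * a + wb * b for a, b in zip(branch_a, branch_b)]
```

With the same three-tap kernel, dilation 1 looks at three adjacent samples and dilation 2 spans five, so the two branches see the input at different scales without adding parameters.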

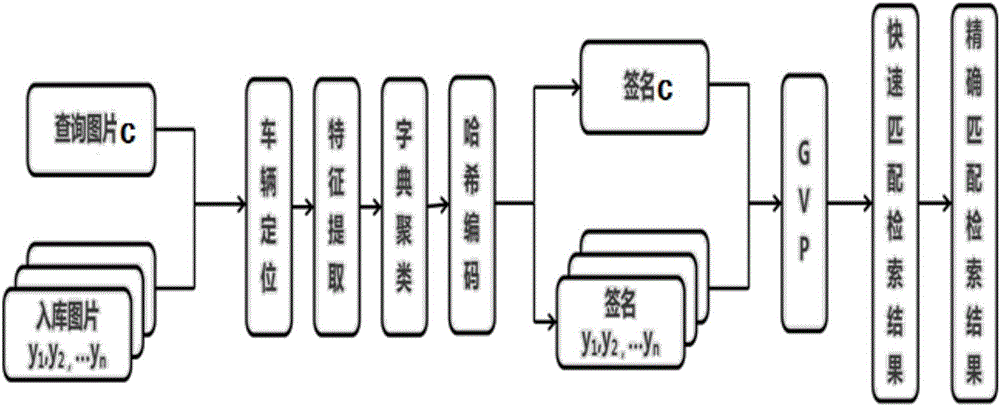

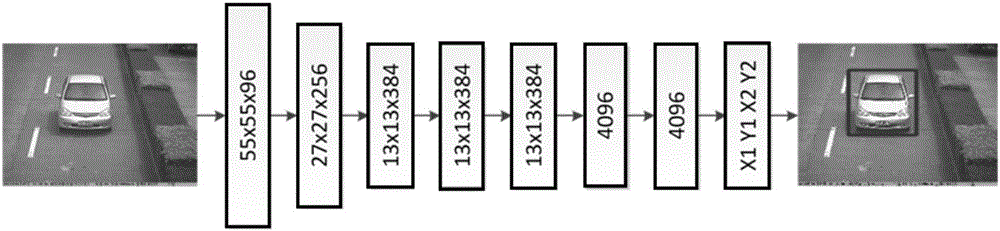

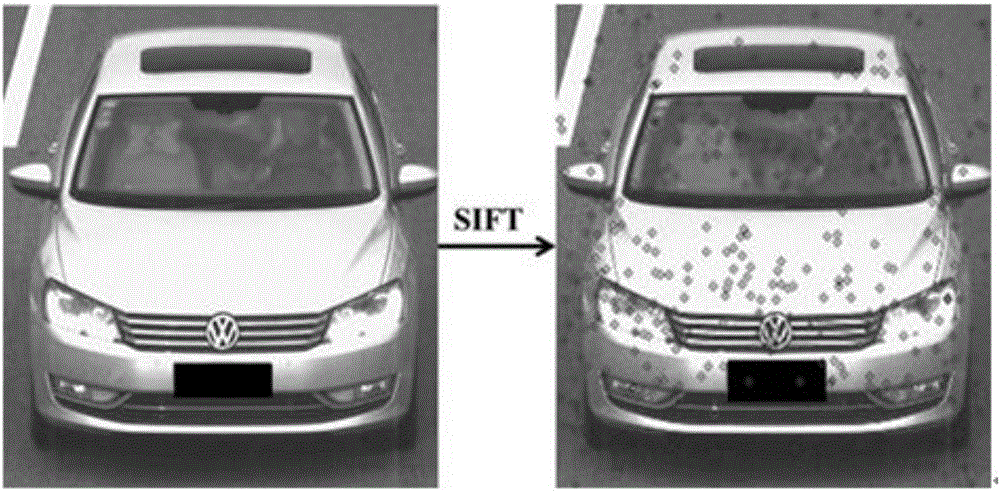

Quick retrieval method and system of vehicle image on the basis of feature geometric constraint

Inactive | CN106528662A | Quick match | Exact match | Character and pattern recognition | Neural architectures | Imaging processing | Histogram of oriented gradients

The invention discloses a quick retrieval method and system of a vehicle image on the basis of a feature geometric constraint. The method comprises the following steps of: adopting a convolutional neural network to position the vehicle image, wherein the vehicle image comprises a query picture and a storage picture; carrying out feature extraction on the positioned image to obtain image features; adopting a hierarchical clustering method and a minimal hash method to carry out compressed encoding on the image features to obtain the hash code of the vehicle image features; according to the hash code of the vehicle image, adopting a visual phrase maintained by a geometric attribute to carry out image similarity calculation to obtain a quick matching retrieval result; and adopting a histogram of oriented gradients to carry out accurate matching on the quick matching retrieval result to obtain an accurate matching retrieval result. The method has the advantages of high retrieval accuracy and high retrieval speed, and can be widely applied to the field of image processing.

Owner:SUN YAT SEN UNIV +1
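The minimal hash step mentioned in the abstract above can be sketched as follows. The example assumes that each image has already been reduced to a set of discrete tokens (for instance, cluster ids from the hierarchical clustering step); the token strings, the SHA-1 based hash family, and the signature length are choices made for the example, not details from the patent.

```python
import hashlib


def _hash(seed, token):
    """Deterministic 64-bit hash of a token under a given seed."""
    data = f"{seed}:{token}".encode("utf-8")
    return int.from_bytes(hashlib.sha1(data).digest()[:8], "big")


def minhash_signature(tokens, num_hashes=64):
    """Compress a non-empty token set into `num_hashes` minimum hash values."""
    unique = set(tokens)
    return [min(_hash(seed, t) for t in unique) for seed in range(num_hashes)]


def estimated_similarity(sig_a, sig_b):
    """Fraction of matching positions, an estimate of the Jaccard similarity."""
    matches = sum(1 for a, b in zip(sig_a, sig_b) if a == b)
    return matches / len(sig_a)
```

Comparing two fixed-length signatures is much cheaper than comparing full feature sets, which is what makes a fast first-pass match possible before a slower, more accurate re-ranking step.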

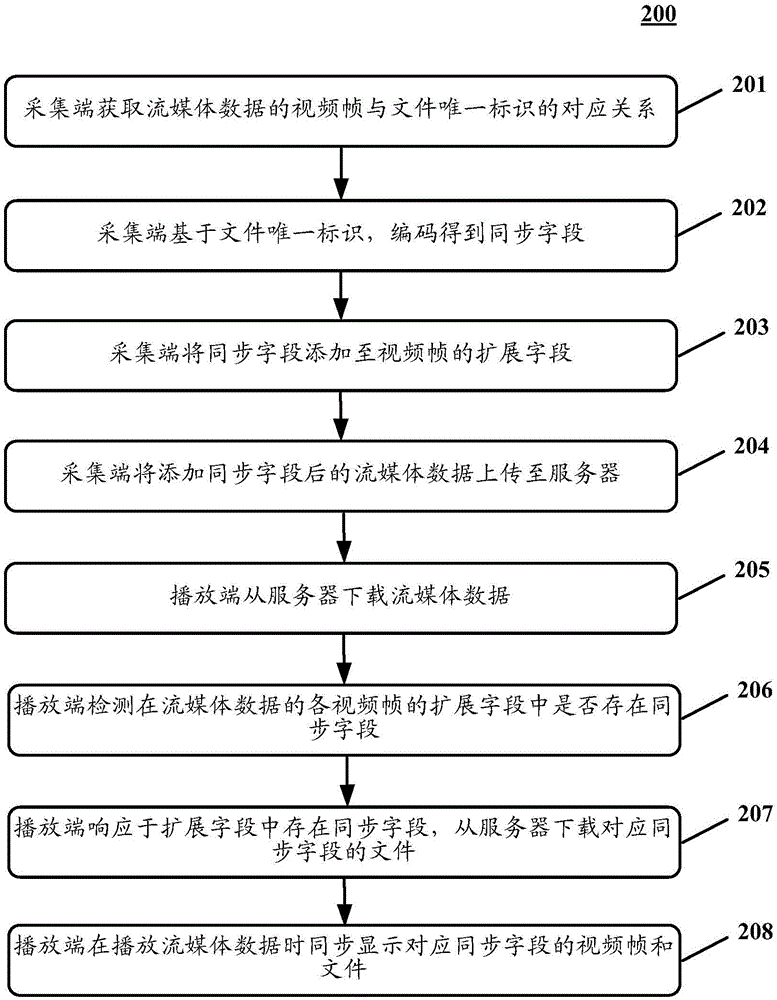

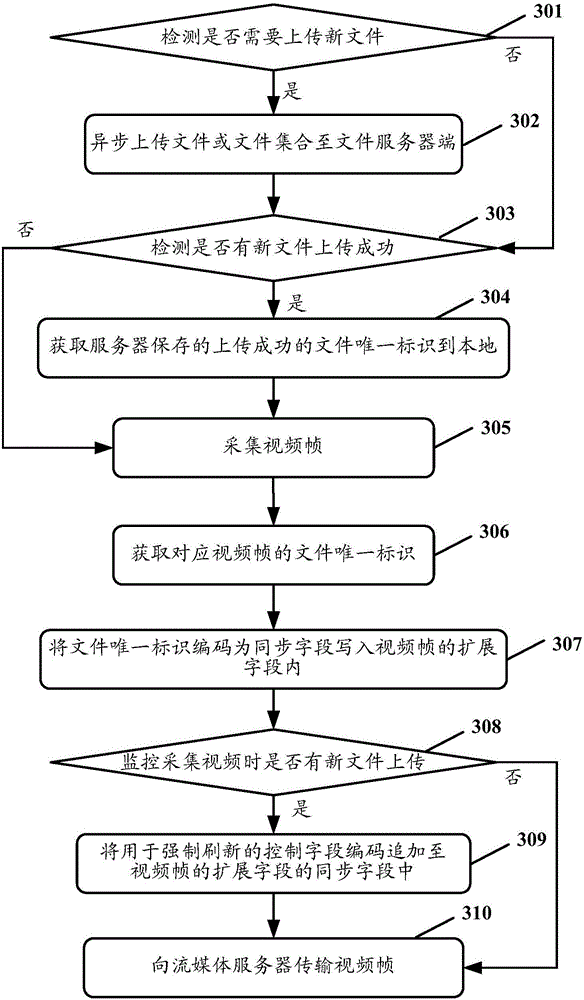

Method and apparatus for synchronously displaying file in live video

Active | CN106488291A | Reduce traffic | Simplify sync operations | Selective content distribution | Traffic volume | Live video

The invention discloses a method and an apparatus for synchronously displaying a file in live video. In a specific embodiment, the collection end obtains the correspondence between a video frame of the streaming media data and a unique file identifier, encodes the unique file identifier to obtain a synchronization field, adds the synchronization field to an extension field of the video frame, and uploads the streaming media data carrying the synchronization field to a server. The playing end downloads the streaming media data from the server and checks whether the extension field of each video frame contains a synchronization field; when it does, the playing end downloads the file corresponding to the synchronization field from the server and displays the video frame and that file synchronously while playing the streaming media data. The embodiment reduces the traffic required for live video, simplifies synchronization, and achieves a relatively low error rate.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
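The capture-side and playback-side flow in the abstract above can be sketched in a few lines. Everything concrete here is hypothetical: the `VideoFrame` class, the `"sync"` key, the base64 encoding of the file identifier, and the `fetch_file` callable that stands in for a download from the server. The abstract does not name a container format or an encoding.

```python
import base64

SYNC_KEY = "sync"  # hypothetical name of the entry in the frame's extension field


class VideoFrame:
    """Toy frame: a payload plus an extension field for side data."""

    def __init__(self, payload):
        self.payload = payload
        self.extension = {}


def encode_sync_field(file_id):
    """Encode a unique file identifier into a sync field (base64 is an assumption)."""
    return base64.urlsafe_b64encode(file_id.encode("utf-8")).decode("ascii")


def decode_sync_field(sync_field):
    return base64.urlsafe_b64decode(sync_field.encode("ascii")).decode("utf-8")


def tag_frames(frames, frame_to_file):
    """Capture side: attach a sync field to every frame that maps to a file."""
    for index, file_id in frame_to_file.items():
        frames[index].extension[SYNC_KEY] = encode_sync_field(file_id)
    return frames


def play(frames, fetch_file):
    """Playback side: pair each frame index with the file to show, or None."""
    shown = []
    for index, frame in enumerate(frames):
        sync_field = frame.extension.get(SYNC_KEY)
        if sync_field is not None:
            shown.append((index, fetch_file(decode_sync_field(sync_field))))
        else:
            shown.append((index, None))
    return shown
```

Only a short identifier travels inside the stream; the file itself is fetched once when its sync field first appears, which is where the traffic saving described in the abstract would come from.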

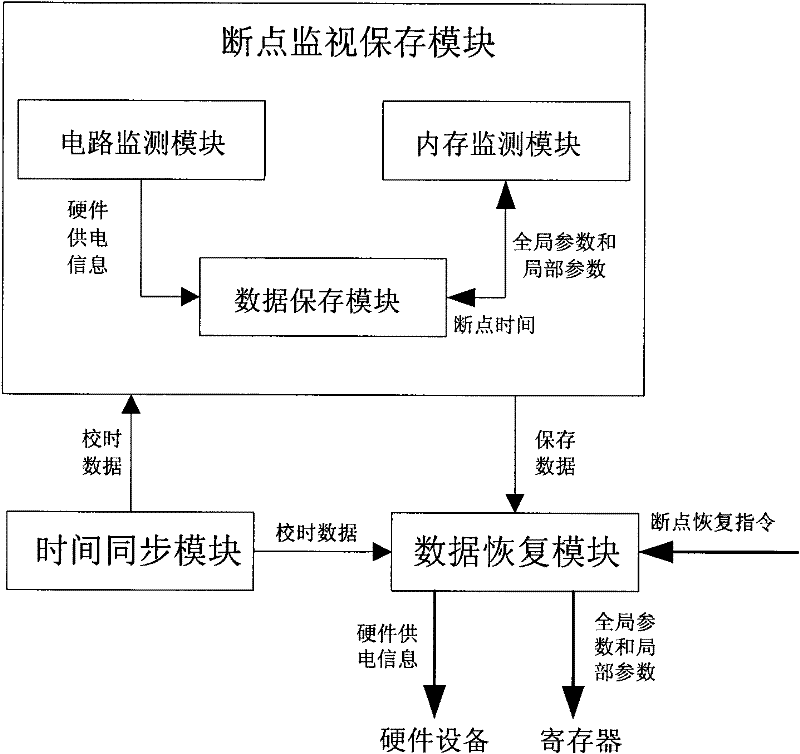

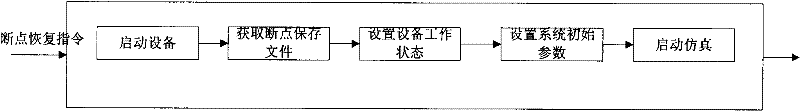

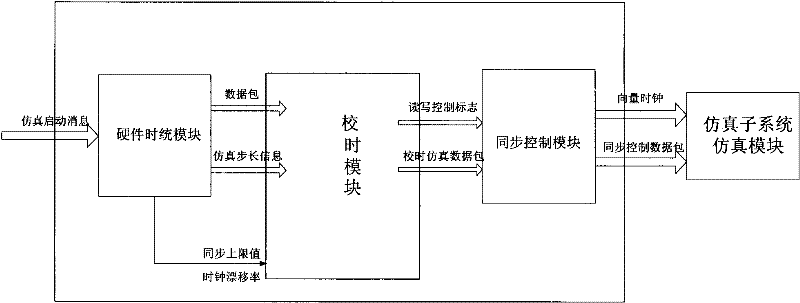

Breakpoint simulation controller and control method for ground simulation system

Active | CN102298334A | Improve Simulation Efficiency | Improve the simulation effect | Simulator control | Continuation | Computer module

The invention relates to a breakpoint simulation controller and a breakpoint simulation control method for a ground simulation system. The controller comprises a breakpoint monitoring storage module, a data recovery module and a time synchronizing module. Under semi-physical simulation conditions, the breakpoint monitoring storage module records the global variables of the simulation system. When the simulation restarts, the data recovery module takes the recorded data as input so that the system simulation continues from the breakpoint, which greatly improves simulation efficiency and effect and saves considerable time, energy and cost. The time synchronizing module ensures accurate recording of the system operating breakpoint and synchronization of the recovery time. The controller and method solve the problem of judging multi-process breakpoints in a complex simulation system, store process data in time, and provide the data and means for recovering system operation.

Owner:BEIJING INST OF SPACECRAFT SYST ENG
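The save-and-resume idea in the abstract above can be shown with a toy simulation. This is only a sketch under stated assumptions: the state dictionary, the JSON file format, and the `step` counter standing in for synchronized simulation time are all invented for the example and say nothing about how the patented controller stores its data.

```python
import json


def advance(state, steps):
    """Toy simulation: move at constant velocity for a number of steps."""
    for _ in range(steps):
        state["position"] += state["velocity"]
        state["step"] += 1  # stands in for the synchronized simulation clock
    return state


def save_breakpoint(path, state):
    """Record the global variables of the run at the breakpoint."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(state, fh)


def restore_breakpoint(path):
    """Load the recorded variables so the run can continue from the breakpoint."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)
```

A run that is interrupted after four steps, saved, restored, and advanced six more steps should end in the same state as an uninterrupted ten-step run; that equality is the property a breakpoint mechanism has to preserve.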

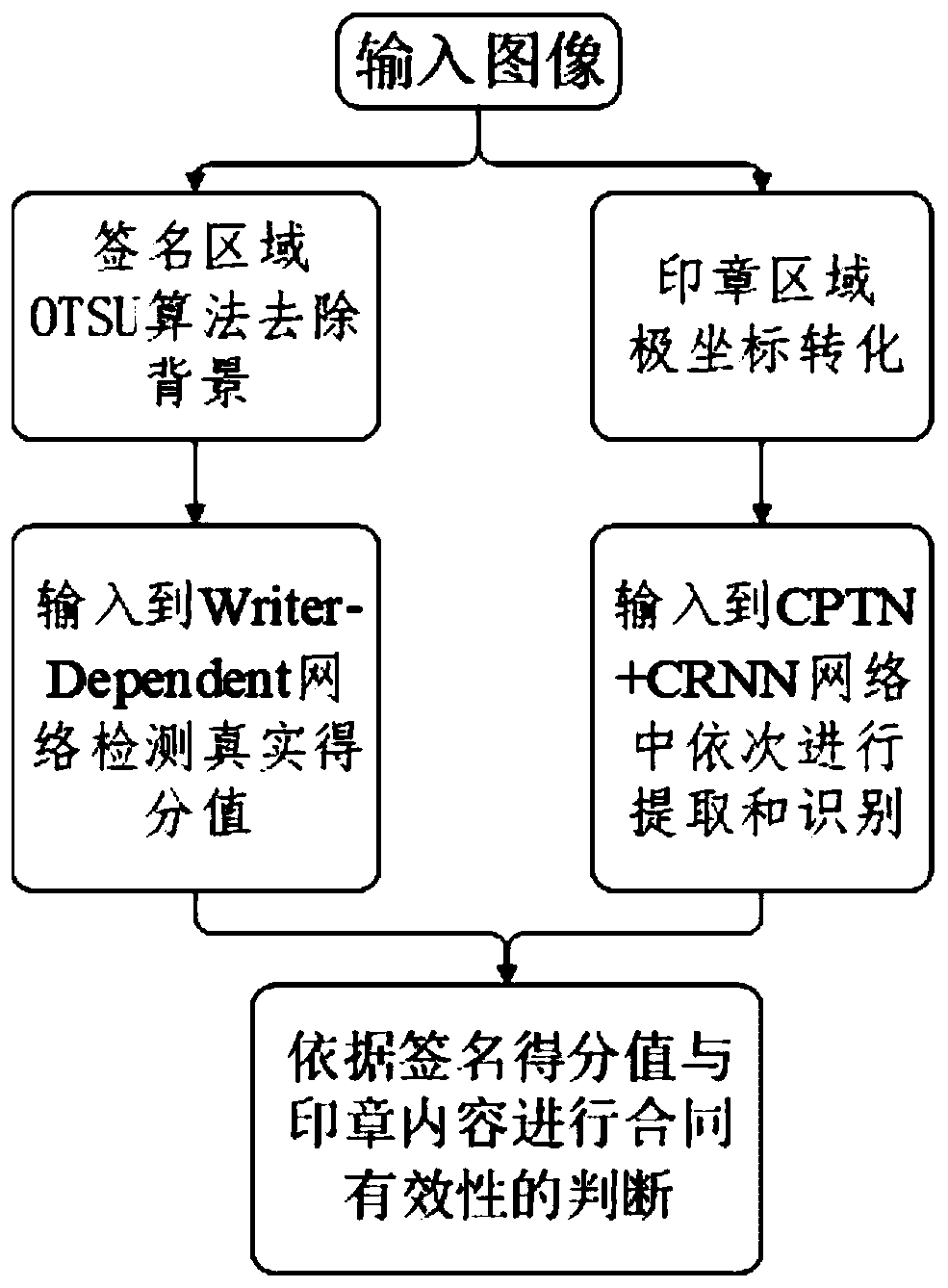

Method for extracting and identifying graphic and text information of scanned document

Pending | CN111401372A | Fast and Efficient Detectability | Fast and efficient identification | Character and pattern recognition | Pattern recognition | Svm classifier

The invention relates to a method for extracting and identifying graphic and text information of a scanned document, which comprises the following steps: (1) preprocessing a scanned document image, carrying out layout segmentation on the preprocessed image, and selecting items including, but not limited to, a signature and a seal; (2) preprocessing the signature extracted in step (1), removing the background with the OTSU algorithm, inputting the signature into a Writer-Dependent network to extract its feature values, and inputting the feature values into an SVM classifier trained on genuine signatures to determine the authenticity of the signature; (3) preprocessing the seal extracted in step (1) and applying a polar coordinate transformation so that the annular characters in the seal are unrolled into horizontally arranged characters, inputting the unrolled characters into a CPTN + CRNN network to be extracted and recognized in sequence, and outputting the character content of the seal; (4) judging the validity of the document. The method can replace manual work in analyzing and judging documents.

Owner:STATE GRID CORP OF CHINA +1
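The background-removal step in the abstract above names the OTSU algorithm. A minimal pure-Python sketch of Otsu's threshold on a flat list of grey levels follows; treating dark pixels as ink and the function names are assumptions for the example, and the signature network, SVM classifier, and seal processing are not shown.

```python
def otsu_threshold(pixels, levels=256):
    """Return the grey level t that maximises the between-class variance.

    Pixels with value <= t form one class and the remaining pixels the other.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    count_low = 0  # number of pixels with value <= t
    sum_low = 0    # sum of their grey levels
    for t in range(levels):
        count_low += hist[t]
        sum_low += t * hist[t]
        count_high = total - count_low
        if count_low == 0:
            continue  # no pixels in the lower class yet
        if count_high == 0:
            break     # all pixels are in the lower class from here on
        mean_low = sum_low / count_low
        mean_high = (sum_all - sum_low) / count_high
        var_between = count_low * count_high * (mean_low - mean_high) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def ink_mask(pixels, threshold):
    """True where a pixel is at or below the threshold (assumed to be ink)."""
    return [p <= threshold for p in pixels]
```

On a scan with dark strokes on light paper, the threshold falls between the two intensity clusters, so the mask keeps the strokes and drops the paper background.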

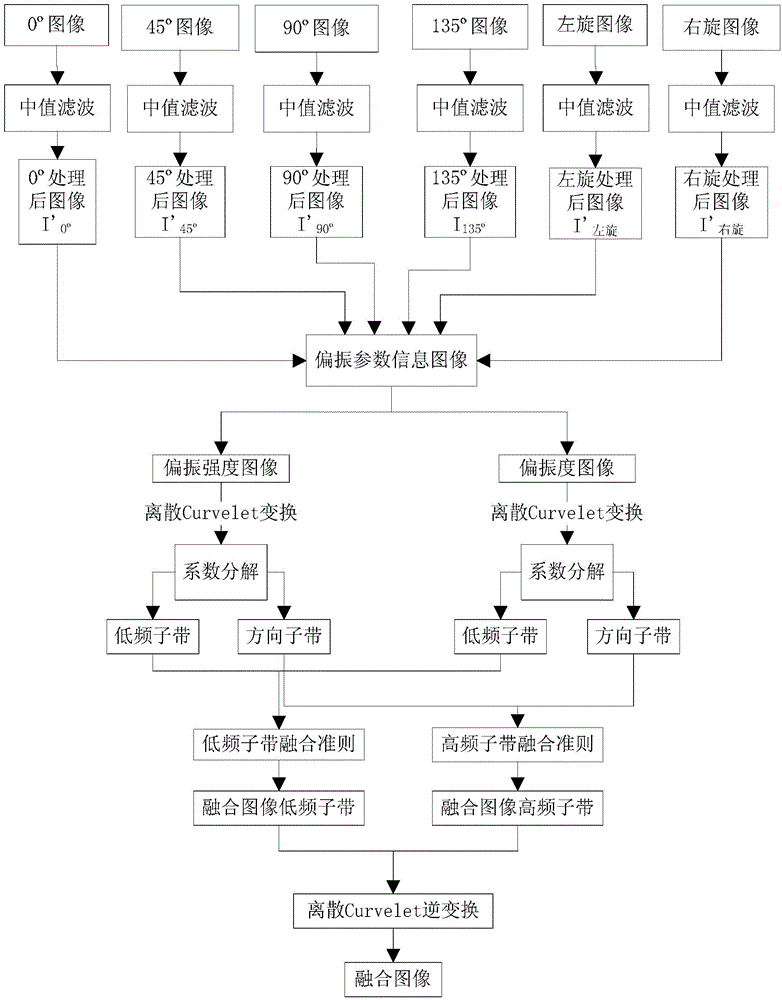

Polarized image fusion method based on discrete continuous curvelet

Inactive | CN104657965A | Reduce redundant information | Reduce decomposition steps | Image enhancement | Imaging processing | Real time display

The invention discloses a polarized image fusion method based on the discrete continuous curvelet transform, and belongs to the field of image processing. The method comprises the following steps: first, applying the discrete continuous curvelet transform to the polarization intensity and polarization ratio images to obtain the low-frequency sub-band coefficients and the sub-band coefficients in each direction; then fusing the low-frequency sub-band coefficients with a weighted-average criterion and fusing the sub-band coefficients in each direction with a maximum regional energy criterion; and finally applying the inverse discrete continuous curvelet transform to obtain the final fused image. The transform is computed quickly with the Wrapping-based method, and its result contains little redundant information. Experimental results show that the algorithm is effective: the edge and spatial texture information of the fused image is clear, the computing time is short, and the image information can be displayed well in real time.

Owner:CHANGCHUN UNIV OF SCI & TECH
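The two fusion rules named in the abstract above can be sketched independently of the transform itself. The example assumes the sub-band coefficients are already available as small two-dimensional lists; the window radius, the equal default weights, and the tie-breaking rule are choices made for the example, and the curvelet decomposition and its inverse are not implemented here.

```python
def fuse_low(a, b, weight_a=0.5):
    """Weighted average of two low-frequency sub-bands of equal shape."""
    return [
        [weight_a * x + (1 - weight_a) * y for x, y in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def region_energy(band, r, c, radius=1):
    """Sum of squared magnitudes in a window centred on (r, c), clipped at borders."""
    rows, cols = len(band), len(band[0])
    total = 0.0
    for i in range(max(0, r - radius), min(rows, r + radius + 1)):
        for j in range(max(0, c - radius), min(cols, c + radius + 1)):
            total += abs(band[i][j]) ** 2
    return total


def fuse_directional(a, b, radius=1):
    """Per coefficient, keep the source whose local region has more energy."""
    fused = []
    for r in range(len(a)):
        row = []
        for c in range(len(a[0])):
            energy_a = region_energy(a, r, c, radius)
            energy_b = region_energy(b, r, c, radius)
            row.append(a[r][c] if energy_a >= energy_b else b[r][c])  # ties go to a
        fused.append(row)
    return fused
```

Averaging suits the low-frequency band because it carries overall brightness, while the energy rule keeps whichever source has the stronger local edge or texture response in each directional band.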

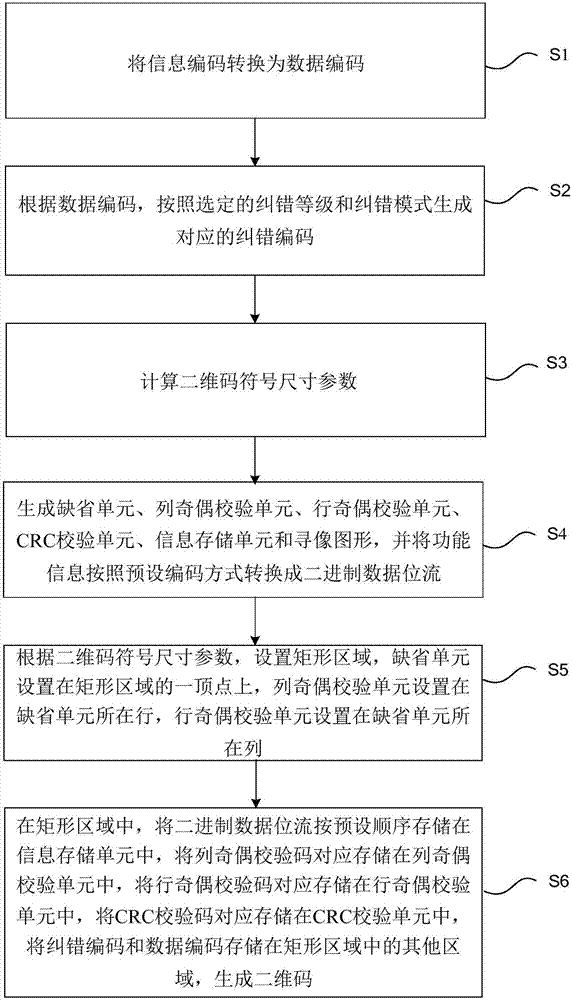

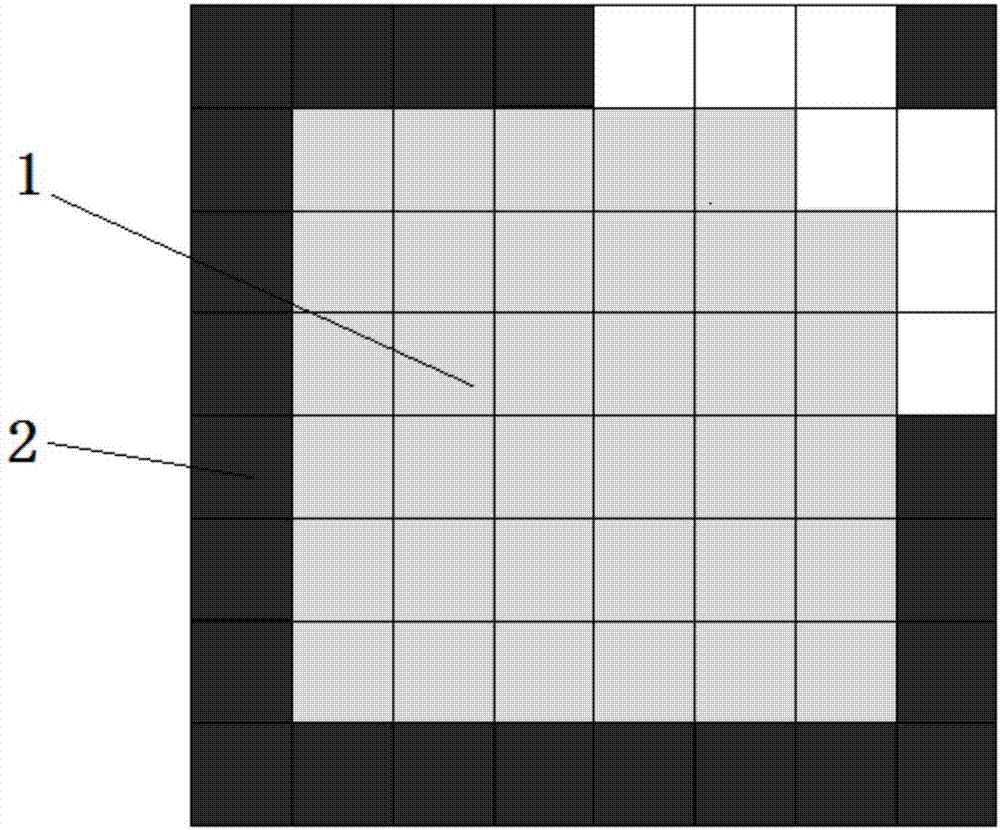

Matrix two-dimensional code coding method and decoding method

Pending | CN107545289A | Reduce in quantity | Strong interleaving ability | Error detection only | Record carriers used with machines | Computer hardware | Information storage

The invention provides a matrix two-dimensional code coding method and decoding method. The coding method comprises the steps of: converting an information code into a data code; generating a corresponding error correction code according to a selected error correction grade and error correction mode; calculating the two-dimensional code symbol dimension parameters; generating a default unit, a column odd-even check unit, a row odd-even check unit, a CRC verification unit, an information storage unit and a view finding graph; converting the function information into a binary data bit stream according to a preset coding mode; and setting a rectangular area and generating the two-dimensional code. The column odd-even check unit, the row odd-even check unit and the CRC verification unit verify the information stored in the two-dimensional code. The advantages are strong interleaving ability, the ability to correct certain forms of errors, no need for a correction graph, a small number of bar codes, a simple data coding mode, high coding and decoding efficiency, and little redundant information.

Owner:闫河
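The row and column odd-even check units in the abstract above are a form of two-dimensional parity. The sketch below shows the general technique on a small bit matrix: it appends an even-parity bit to every row and a parity row over every column, and then uses the intersection of a failing row and a failing column to locate a single flipped bit. The layout and function names are assumptions for the example; the patent's actual symbol layout and its CRC unit are not reproduced.

```python
def add_parity(bits):
    """Append an even-parity bit to each row, then a parity row over all columns."""
    with_row_parity = [row + [sum(row) % 2] for row in bits]
    parity_row = [sum(col) % 2 for col in zip(*with_row_parity)]
    return with_row_parity + [parity_row]


def locate_single_error(block):
    """Return (row, col) of a single flipped bit, or None if every check passes."""
    bad_rows = [r for r, row in enumerate(block) if sum(row) % 2]
    bad_cols = [c for c, col in enumerate(zip(*block)) if sum(col) % 2]
    if not bad_rows and not bad_cols:
        return None
    if len(bad_rows) == 1 and len(bad_cols) == 1:
        return bad_rows[0], bad_cols[0]
    raise ValueError("more than one bit error; parity alone cannot locate it")


def correct_single_error(block):
    """Flip the located bit in place, if any, and return the block."""
    position = locate_single_error(block)
    if position is not None:
        r, c = position
        block[r][c] ^= 1
    return block
```

Two-dimensional parity corrects only isolated single-bit errors, which is consistent with the abstract's wording that mistakes "in certain forms" can be corrected and with pairing the parity units with a CRC check.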

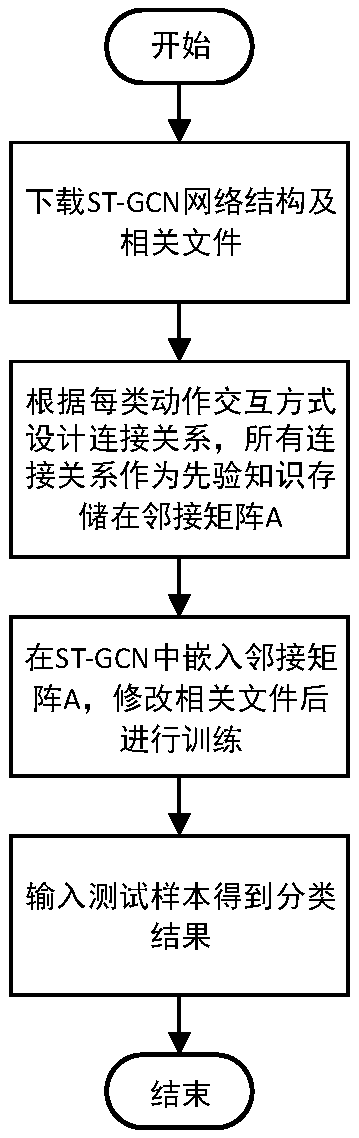

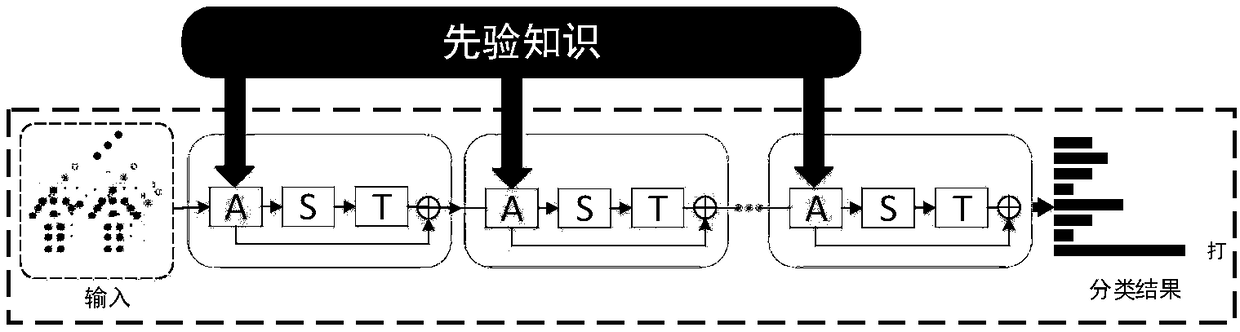

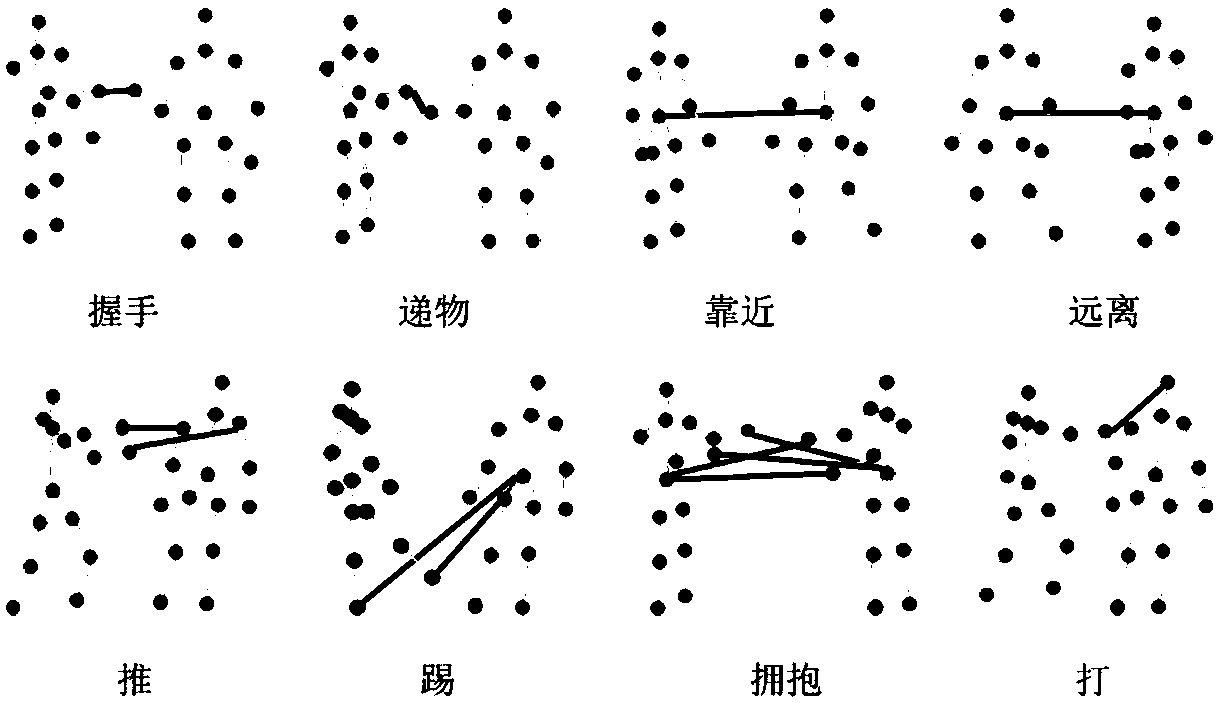

A two-person interaction behavior identification method based on prior knowledge

Active | CN109446927A | Reduce redundant information | Small amount of calculation | Character and pattern recognition | Neural architectures | State of art | Video monitoring

The invention discloses a two-person interaction behavior identification method based on prior knowledge, which mainly solves the problem that the prior art cannot accurately identify two-person interaction behavior. The implementation scheme comprises: (1) preparing the network structure files and related files of the basic behavior recognition network ST-GCN; (2) establishing a prior-knowledge connection relationship for each type of interactive action, and modifying the network structure file and the training parameter file according to that connection relationship; (3) using the modified files to train the two-person interaction behavior recognition network and obtain the trained model; (4) using the trained model to recognize existing data, data extracted by Kinect, or data collected by openpose. The method improves the recognition accuracy of two-person interaction behavior, has strong adaptability and good real-time performance, and can be used for video monitoring and video analysis.

Owner:XIDIAN UNIV
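One plausible reading of the prior-knowledge connection relationship in the abstract above is that extra edges are added between joints of the two people in the skeleton graph used by the graph convolution. The sketch below builds such an adjacency matrix; the joint numbering, the bone list, and the cross-person links are entirely hypothetical, and the abstract does not say that the connections are encoded this way.

```python
def build_adjacency(num_joints, bones, prior_links):
    """Adjacency matrix for two skeletons with `num_joints` joints each.

    bones: (i, j) joint pairs within one skeleton, reused for both people.
    prior_links: (i, j) pairs linking joint i of person A to joint j of person B.
    Person B's joints are numbered after person A's.
    """
    size = 2 * num_joints
    adj = [[0] * size for _ in range(size)]

    def connect(u, v):
        adj[u][v] = adj[v][u] = 1  # undirected edge

    for node in range(size):
        adj[node][node] = 1  # self-loops, as is common in graph convolutions
    for i, j in bones:
        connect(i, j)                            # bone in person A
        connect(i + num_joints, j + num_joints)  # same bone in person B
    for i, j in prior_links:
        connect(i, j + num_joints)               # cross-person prior edge
    return adj
```

For a handshake-like action, for instance, a link between the two hand joints would let information flow directly between the interacting body parts instead of only along each person's own skeleton.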

© 2025 PatSnap. All rights reserved.