82 results about "Semantics encoding" patented technology

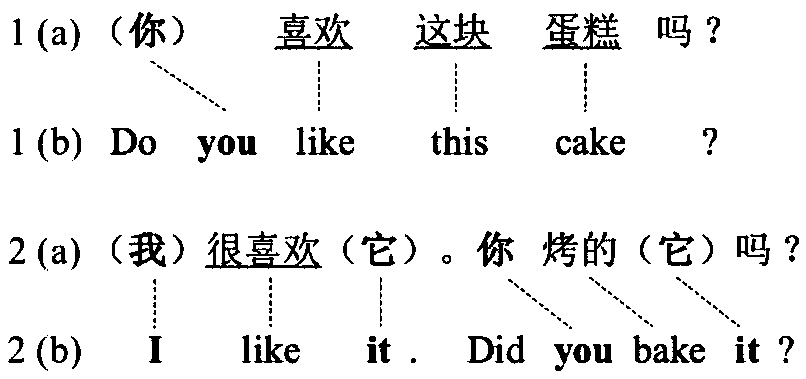

A semantics encoding is a translation between formal languages. For programmers, the most familiar form of encoding is the compilation of a programming language into machine code or byte-code. Conversion between document formats is also a form of encoding. Compilation of TeX or LaTeX documents to PostScript is another commonly encountered encoding process. Some high-level preprocessors, such as OCaml's Camlp4, also encode one programming language into another.

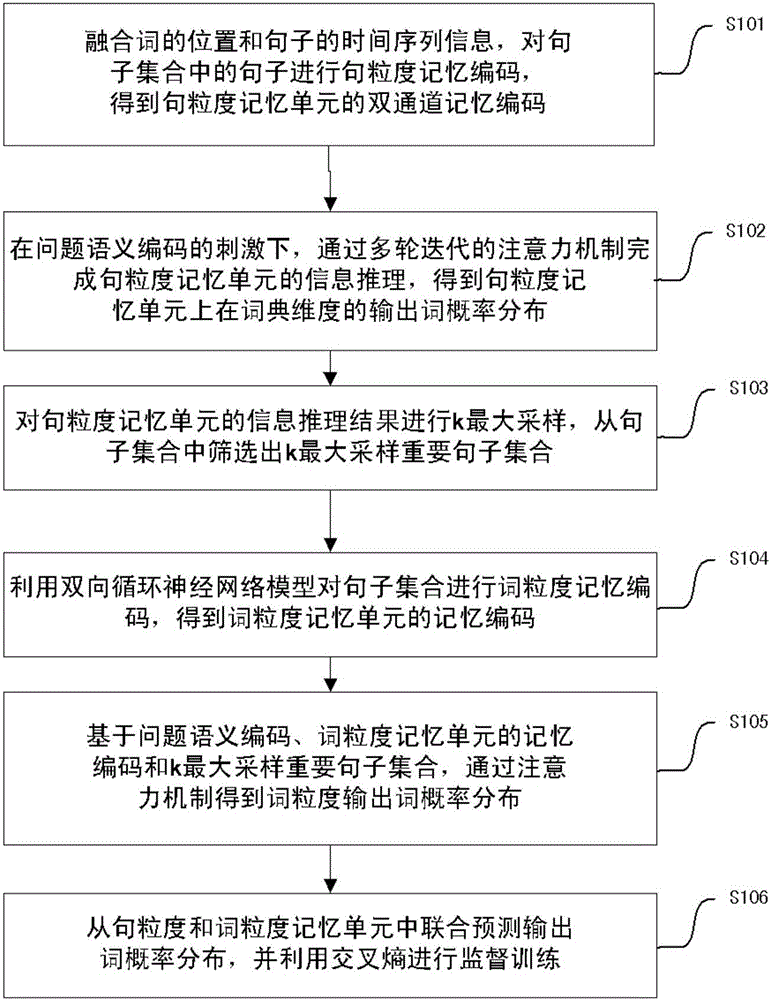

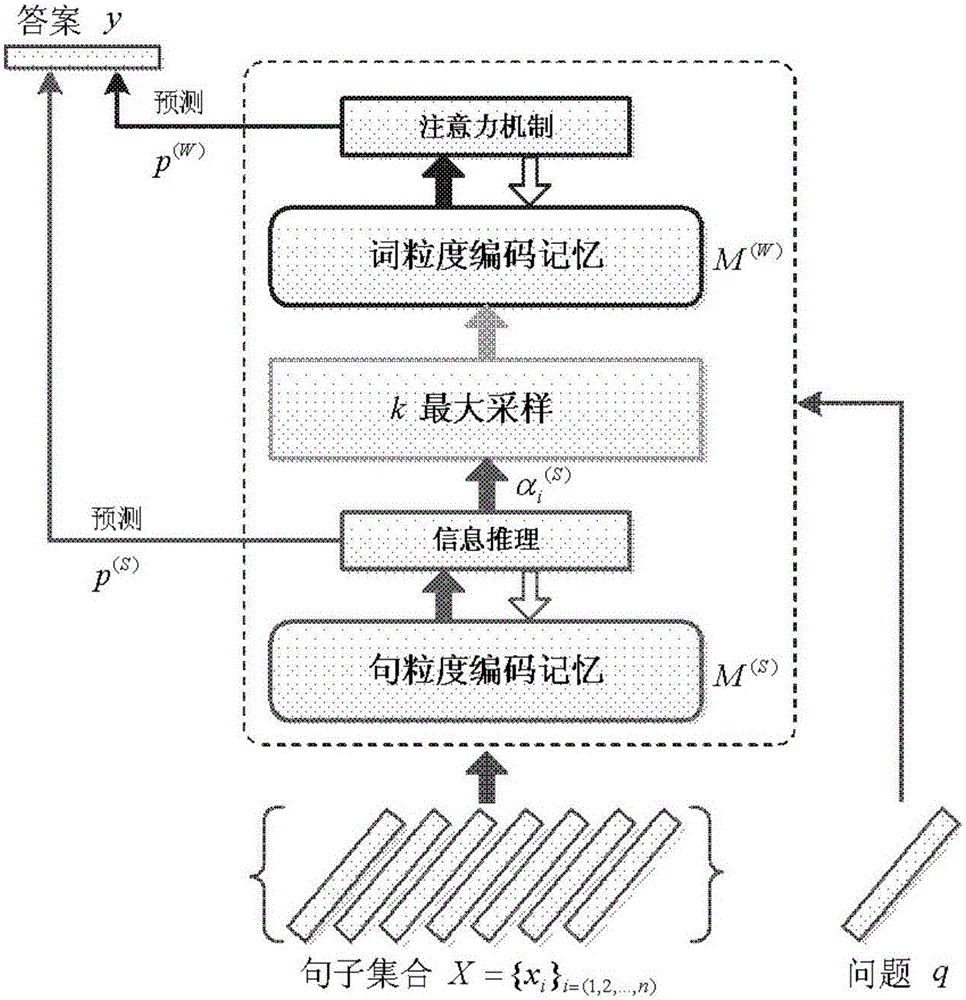

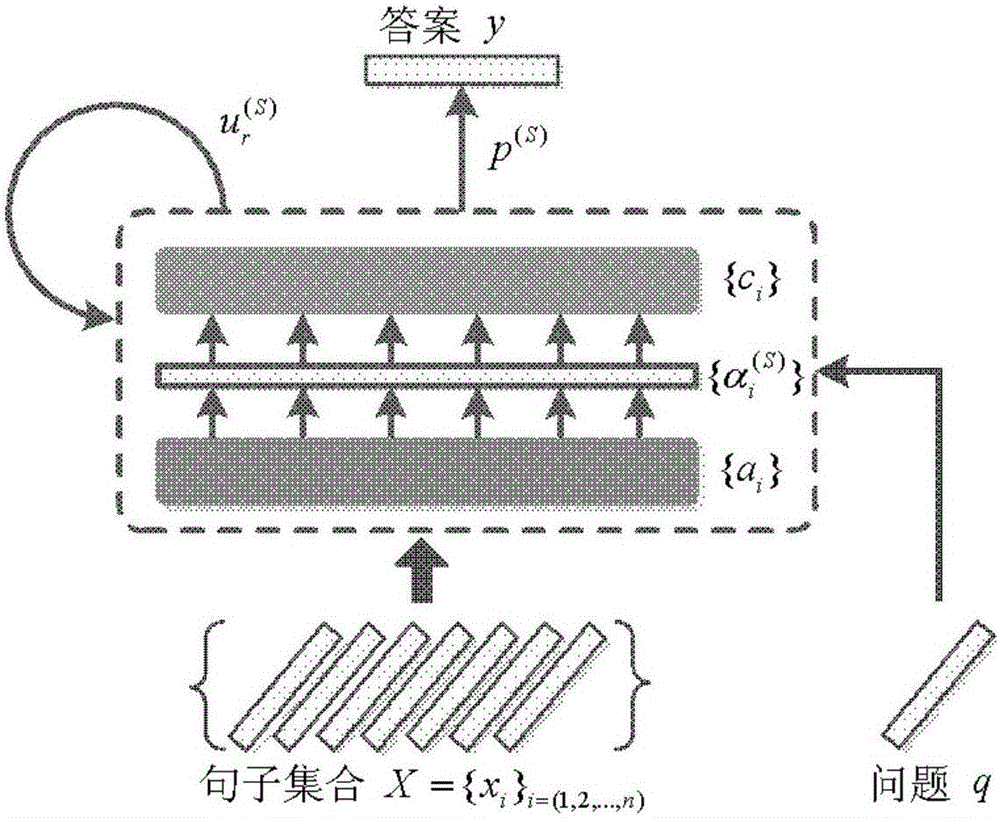

Q&A method based on hierarchal memory network

Active | CN106126596A | Improve accuracy | Improve timeliness | Semantic analysis | Special data processing applications | Algorithm | Granularity

The invention provides a Q&A method based on a hierarchal memory network. The method first performs sentence-granularity memory encoding and, driven by the semantic encoding of the question, completes information inference over the sentence-granularity memory units through an attention mechanism over multiple rounds of iteration. It then screens the sentences by k-max sampling and performs word-granularity memory encoding on top of the sentence-granularity encoding, so that memory is encoded at two levels to form a hierarchal memory encoding. The output word probability distribution is predicted by combining the sentence-granularity and word-granularity memory units, which improves the accuracy of automatic Q&A and effectively addresses answer selection for low-frequency and unlisted words.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
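
The sentence screening step in the abstract above can be illustrated with a minimal sketch. It assumes that each sentence already has an attention score against the question; the function names, the example sentences and the scores are hypothetical, and the softmax-then-top-k rule is a simplification, not the patented procedure.

```python
import math

def softmax(scores):
    """Turn raw attention scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def k_max_sentences(sentences, scores, k):
    """Keep the k sentences with the highest attention weight, in document order."""
    weights = softmax(scores)
    top = sorted(range(len(sentences)), key=lambda i: weights[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]

# Hypothetical sentence memory and attention scores for one question.
sentences = [
    "Mary went to the kitchen.",
    "John picked up the ball.",
    "Mary dropped the milk.",
    "It rained.",
]
scores = [2.0, 0.1, 1.5, -1.0]
selected = k_max_sentences(sentences, scores, k=2)
```

Only the selected sentences would then be passed on to the word-granularity encoding, which keeps the second level of memory small.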

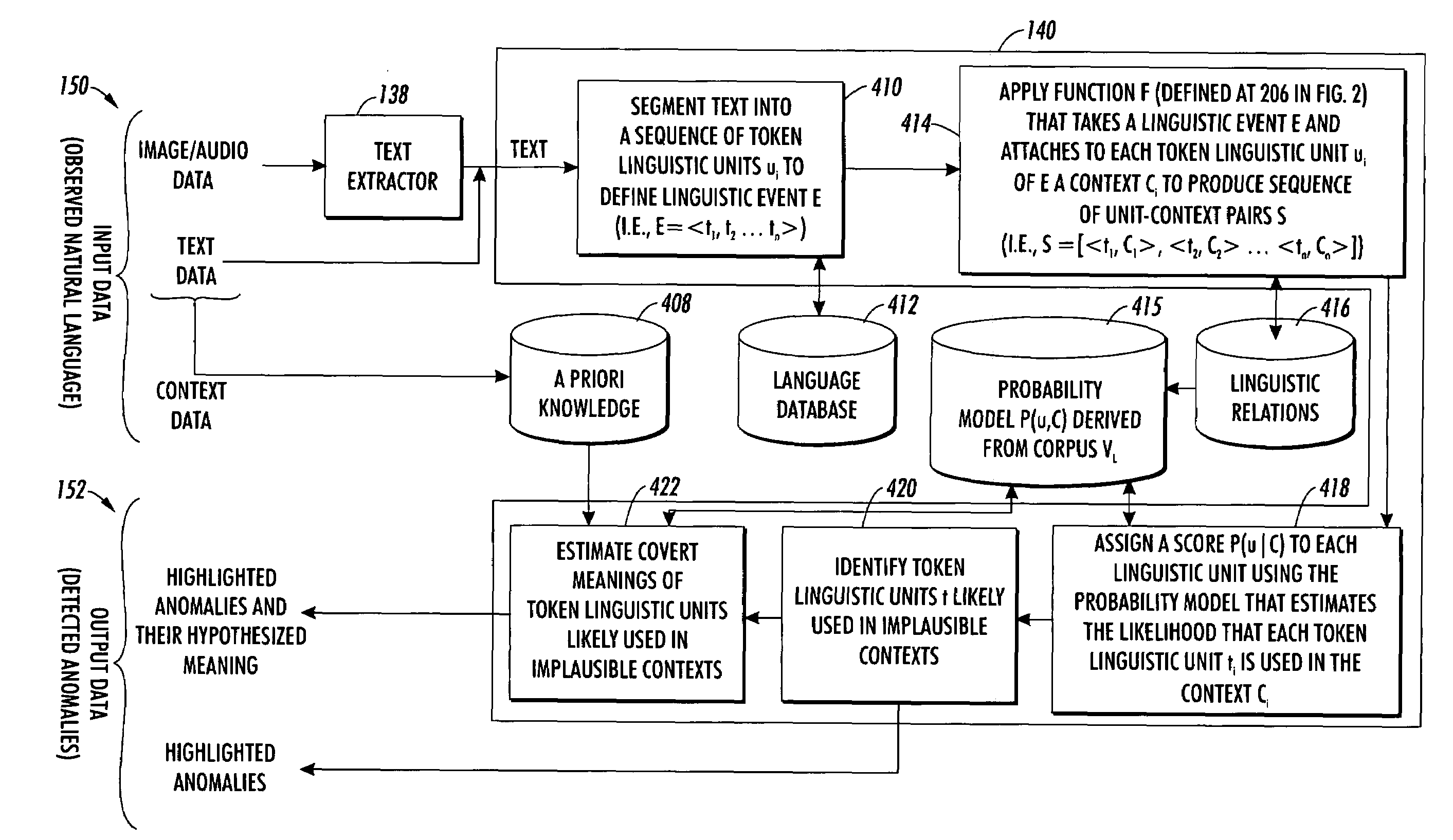

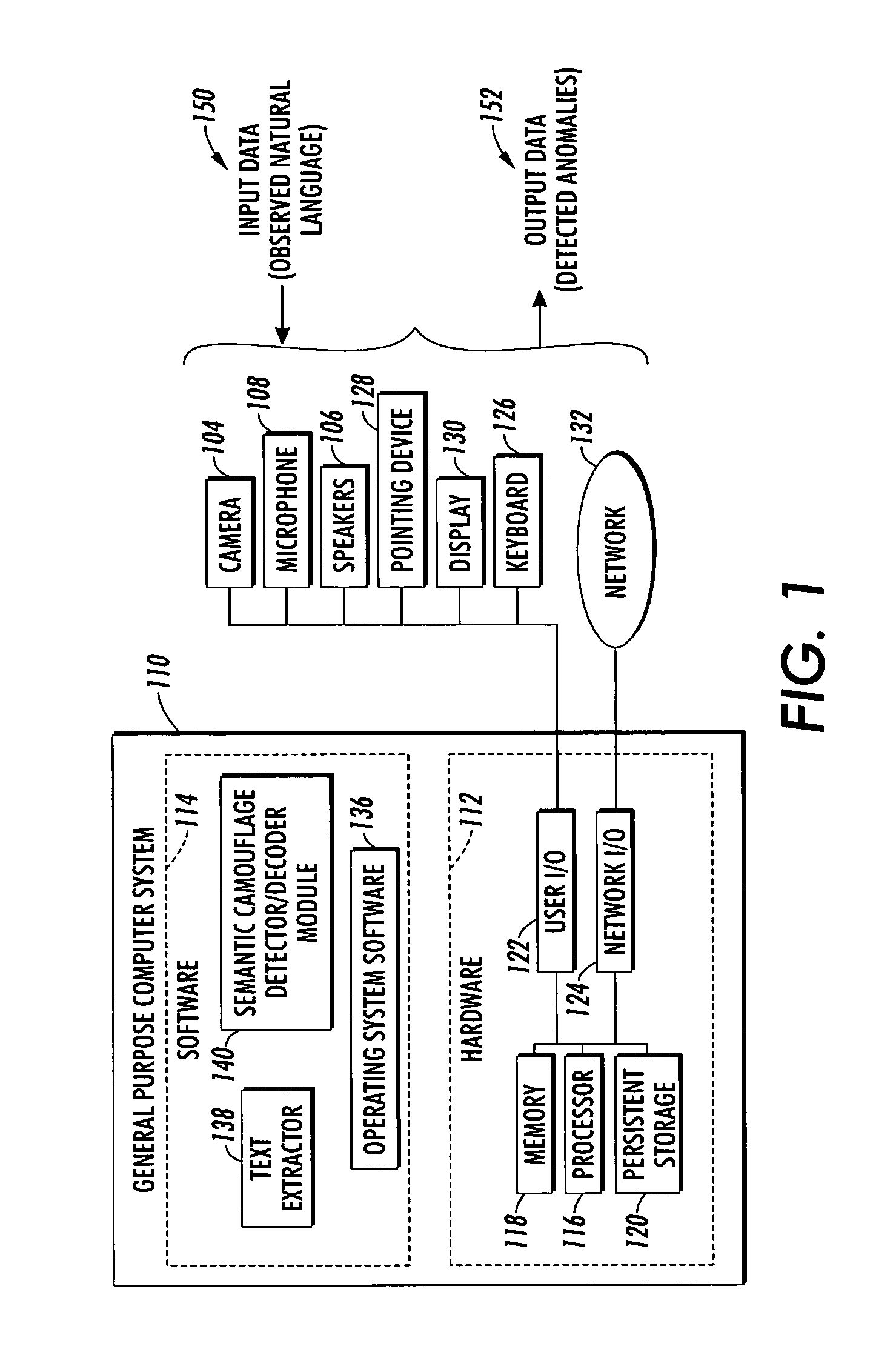

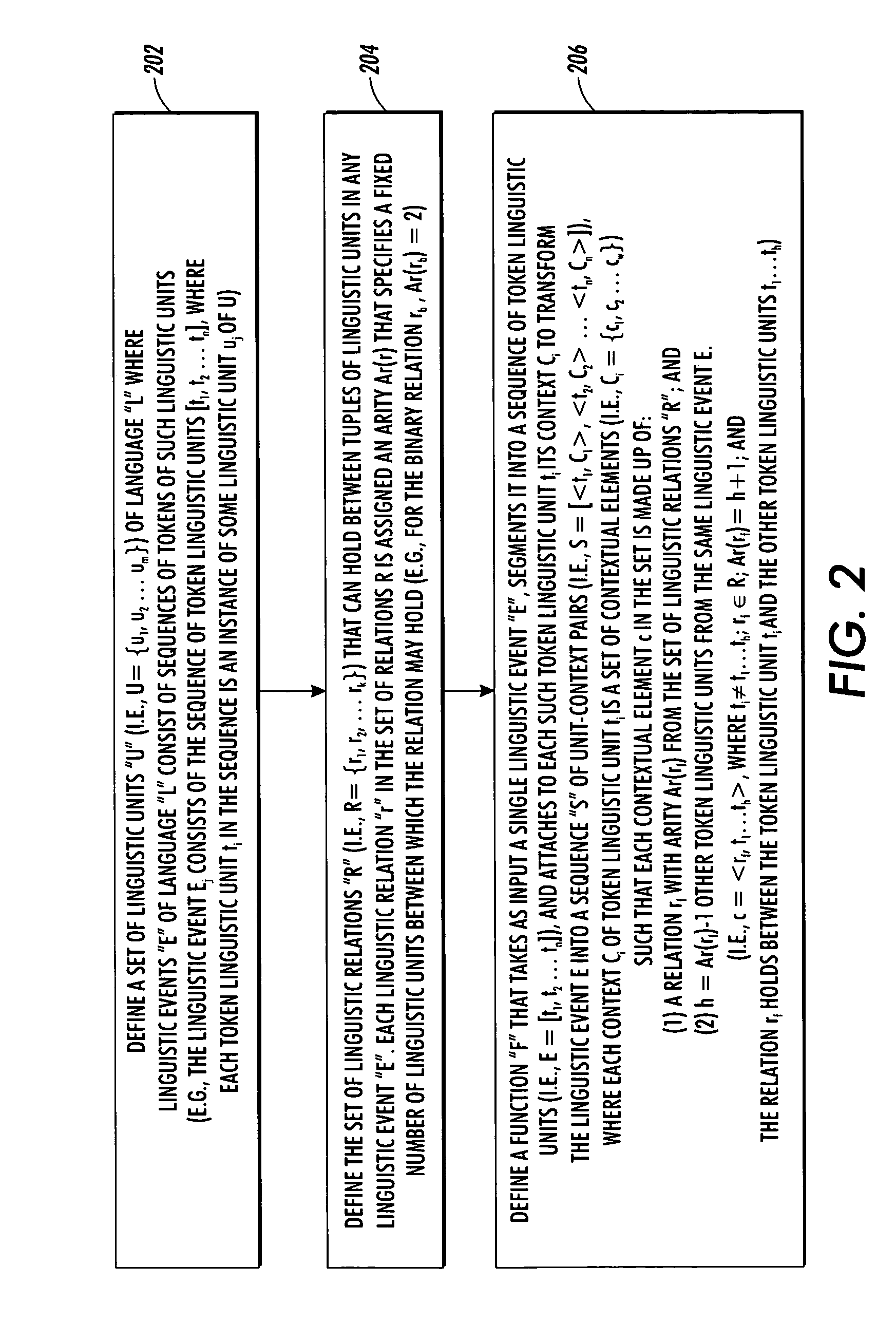

System and method for detecting and decoding semantically encoded natural language messages

A system detects and decodes semantic camouflage in natural language messages. The system is adapted to identify entities such as words or phrases in overt messages that are being used to disguise different and unrelated entities or concepts. The system automatically determines the semantic plausibility of the overt message and identifies entities that appear in implausible contexts. In addition, the system automatically estimates covert meanings for the entities identified in the overt message that appear in implausible contexts.

Owner:XEROX CORP

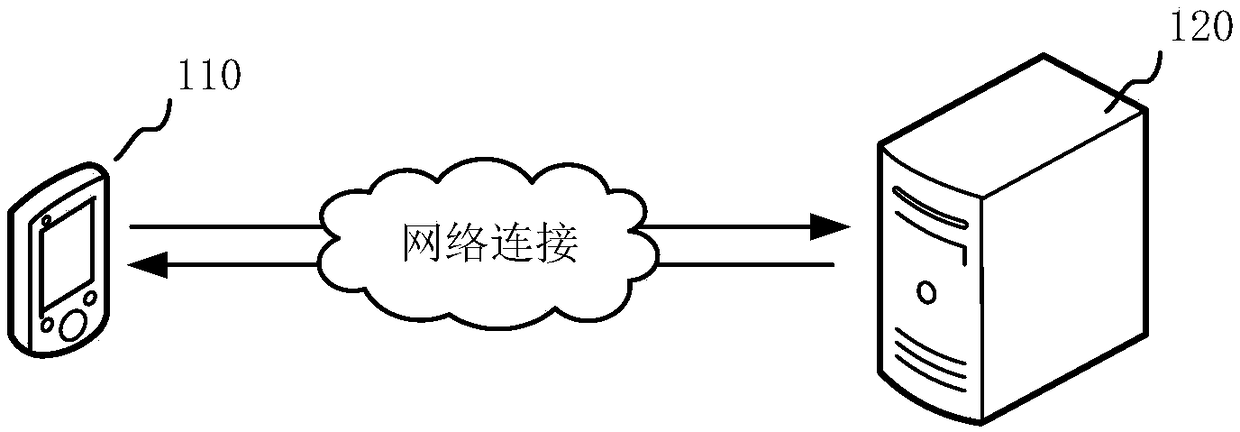

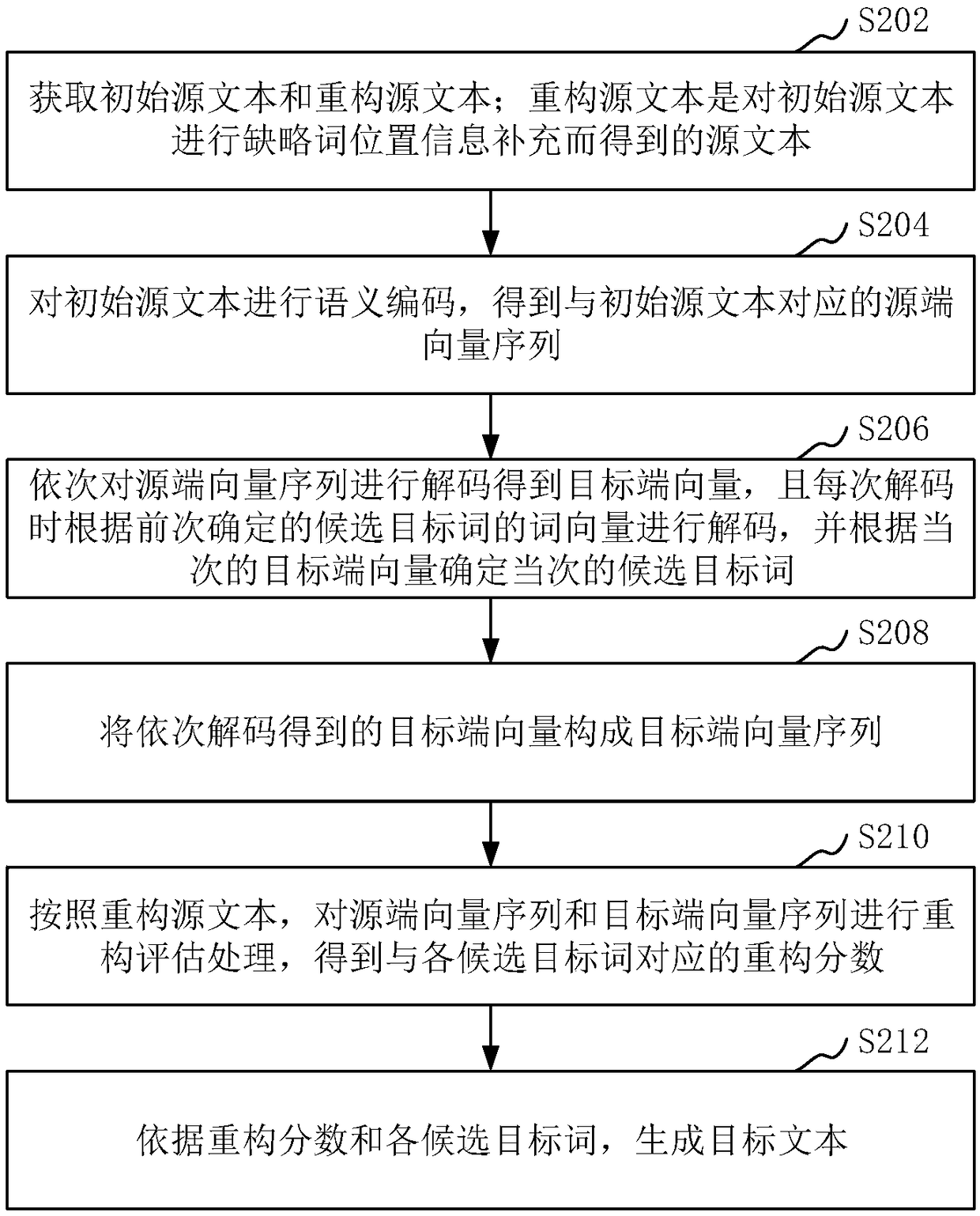

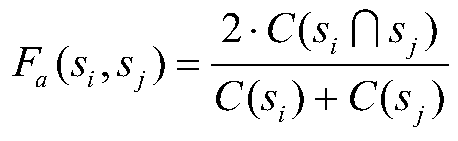

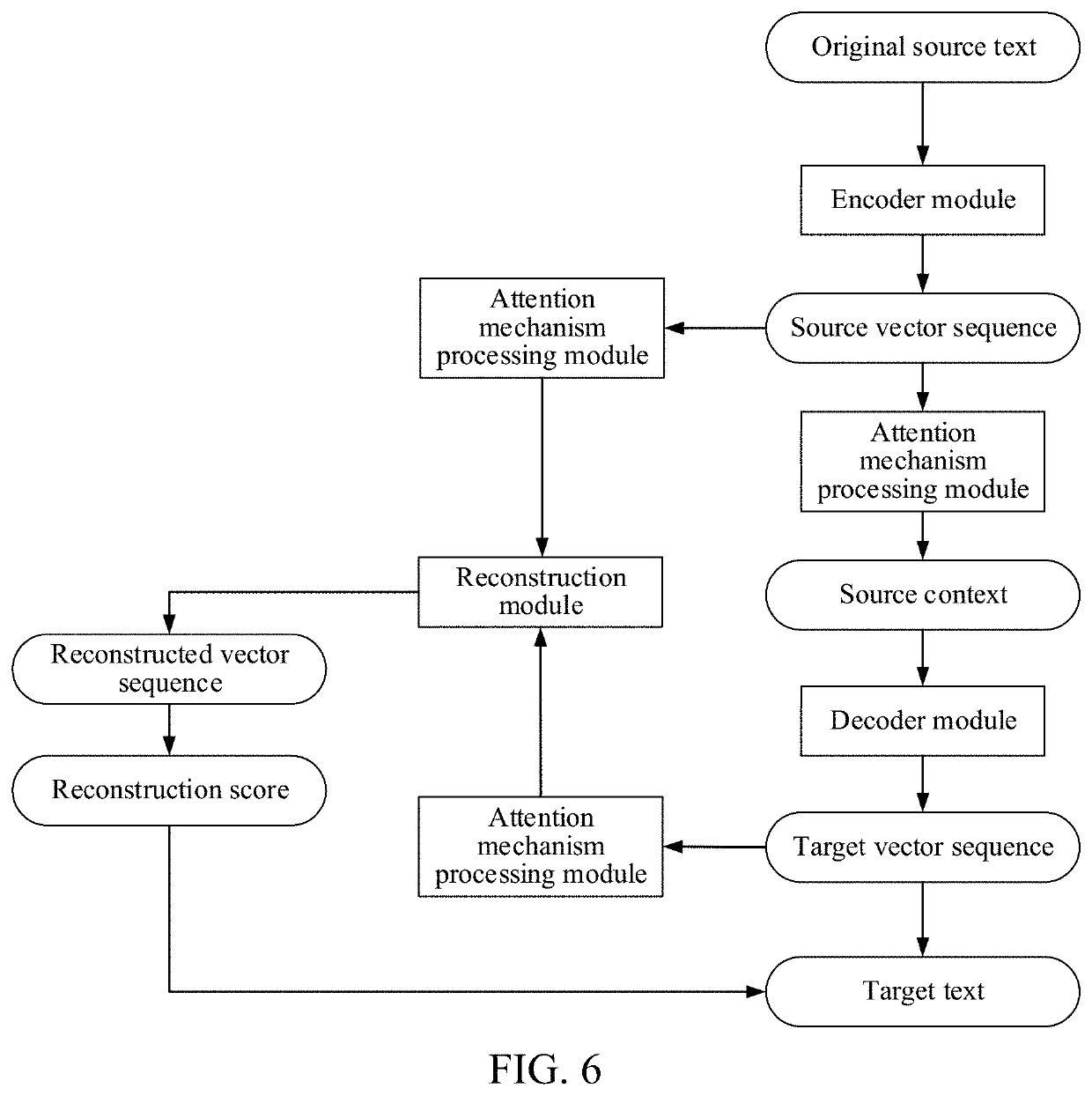

Text translation method, device, storage medium and computer device

Active | CN109145315A | Measuring Recall | Avoid missing | Natural language translation | Semantic analysis | Semantics encoding | Source text

The invention relates to a text translation method and device, a readable storage medium and a computer device. The method comprises: obtaining an initial source text and a reconstructed source text, wherein the reconstructed source text is obtained by supplementing the initial source text with missing word position information; performing semantic coding on the initial source text to obtain a corresponding source-end vector sequence; sequentially decoding the source-end vector sequence to obtain target-end vectors, wherein each decoding step uses the word vector of the candidate target word determined at the previous step, and the candidate target word at the current step is determined from the current target-end vector; forming a target-end vector sequence from the sequentially decoded target-end vectors; performing reconstruction evaluation on the source-end and target-end vector sequences according to the reconstructed source text to obtain a reconstruction score for each candidate target word; and generating a target text according to the reconstruction scores and the candidate target words. The scheme provided by the present application can improve the quality of translation.

Owner:TENCENT TECH (SHENZHEN) CO LTD

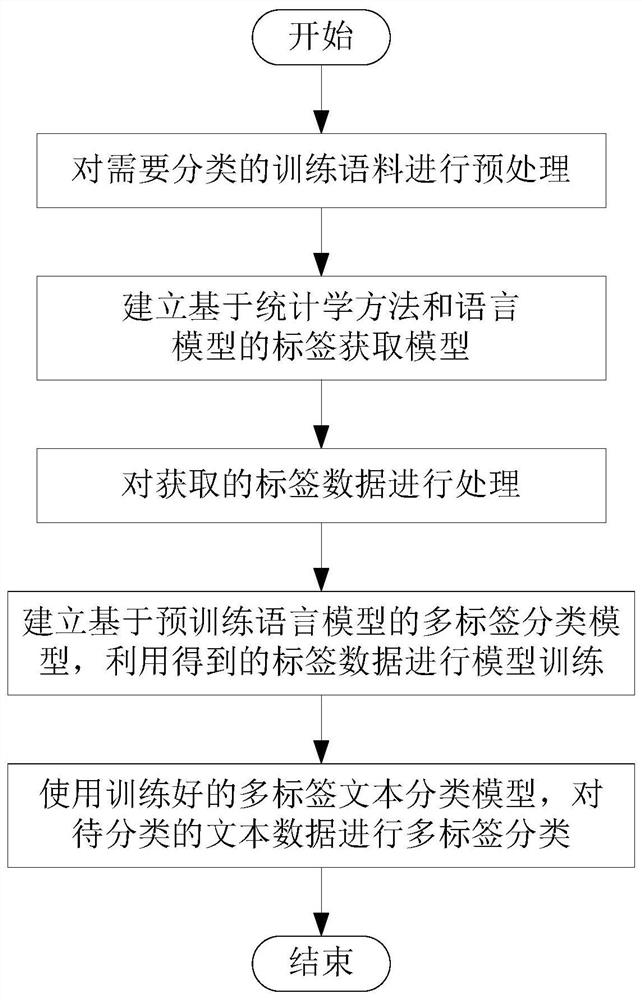

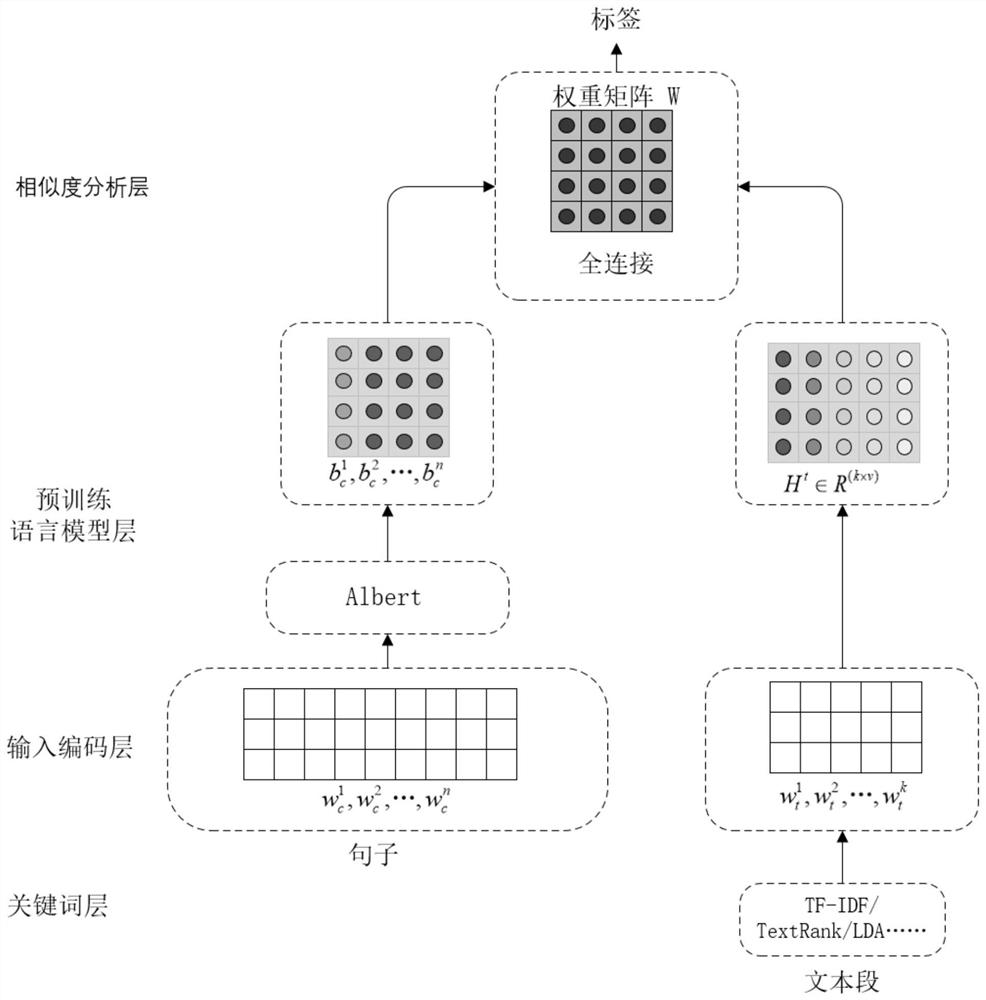

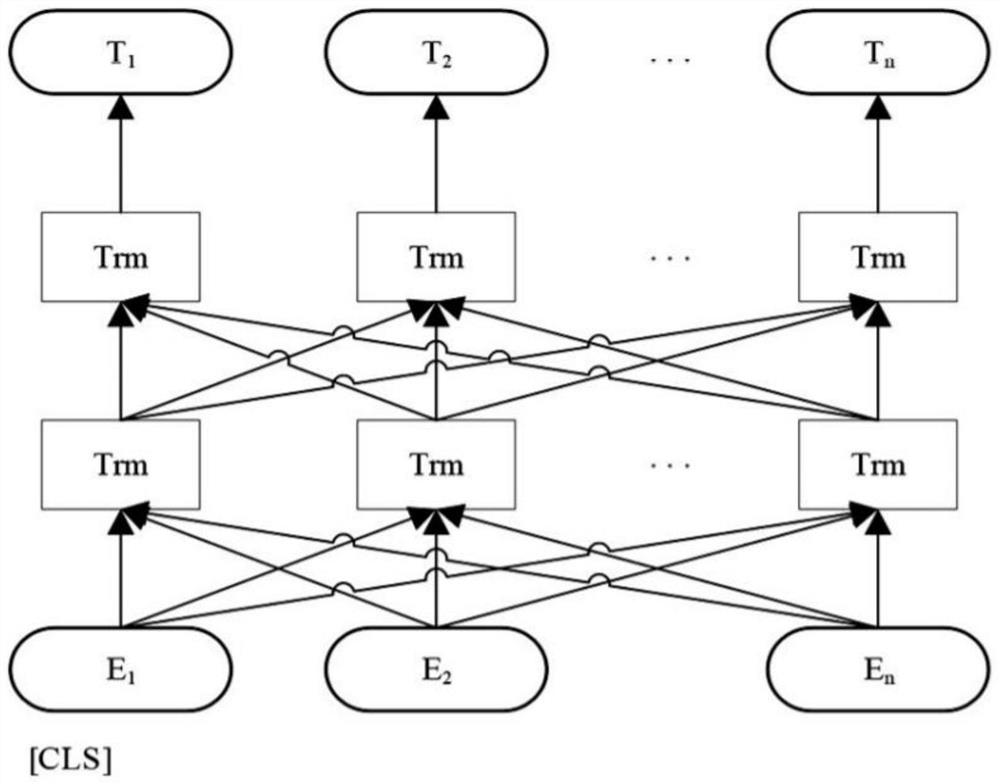

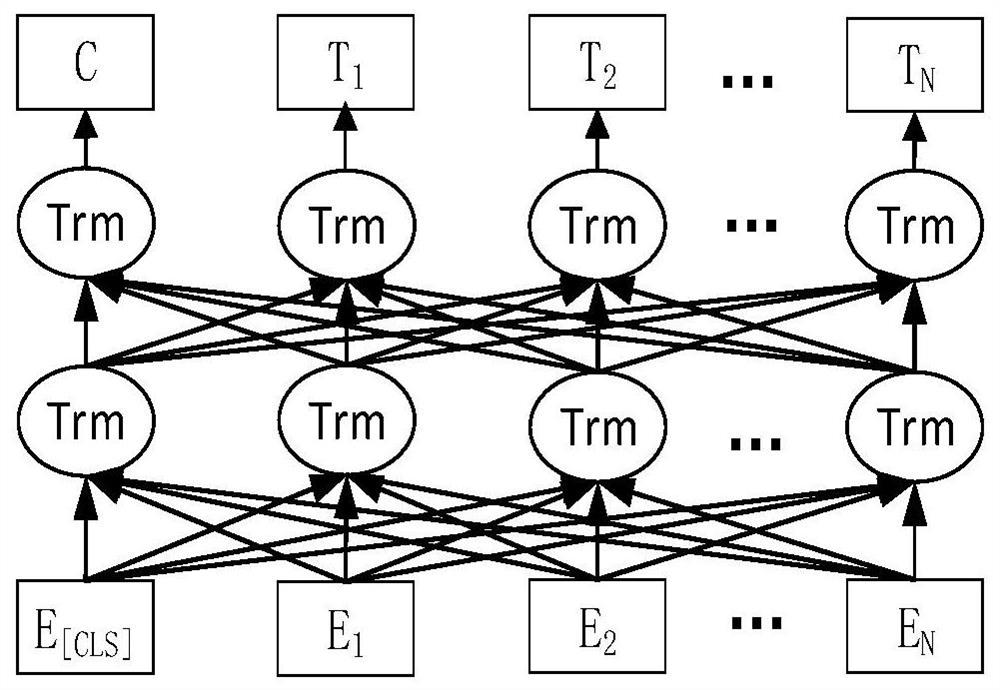

Multi-label text classification method based on statistics and pre-trained language model

Active | CN112214599A | Improve accuracy | Semantic analysis | Character and pattern recognition | Data set | Linguistic model

The invention discloses a multi-label text classification method based on statistics and a pre-training language model. The multi-label text classification method comprises the following steps: S1, preprocessing training corpora needing to be classified; S2, establishing a label acquisition model based on a statistical method and a language model; S3, processing the obtained label data; S4, establishing a multi-label classification model based on a pre-training language model, and performing model training by utilizing the obtained label data; S5, performing multi-label classification on the text data to be classified by using the trained multi-label text classification model. According to the method, a statistical method and a pre-trained language model label obtaining method are combined, the ALBERT language model is used for obtaining the semantic coding information of the text, a data set does not need to be manually labeled, and the label obtaining accuracy can be improved.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

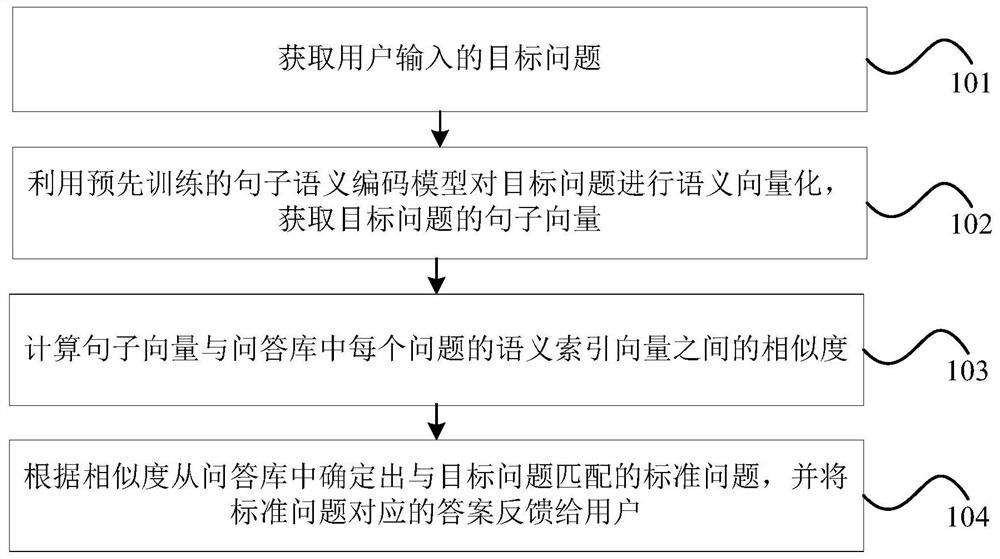

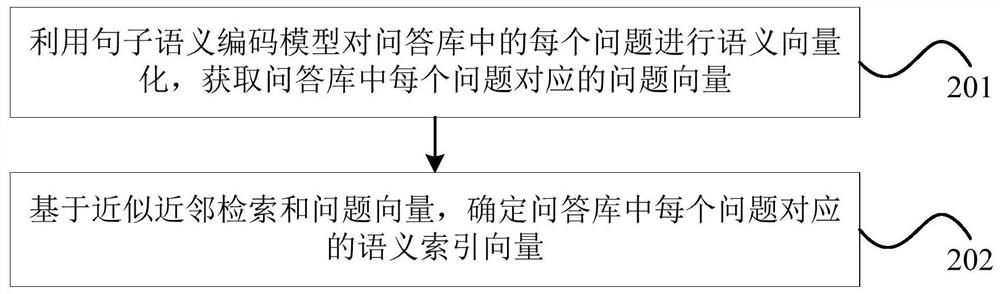

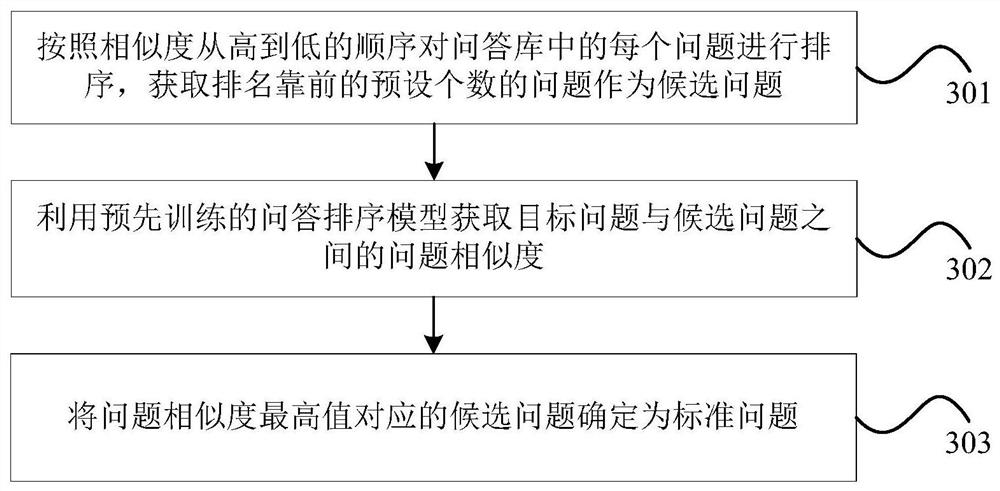

Automatic question and answer processing method and device, computer equipment and storage medium

Pending | CN111858859A | Improve experience | Improve accuracy | Semantic analysis | Text database querying | Semantic vector | User needs

The invention provides an automatic question-and-answer processing method and device, computer equipment and a storage medium. The method comprises: obtaining a target question input by a user; performing semantic vectorization on the target question using a pre-trained sentence semantic coding model to obtain a sentence vector of the target question; calculating the similarity between the sentence vector and the semantic index vector of each question in a question-and-answer library; and determining, according to the similarity, a standard question in the library that matches the target question, and returning the answer corresponding to that standard question to the user. Because questions are matched on semantic rather than literal correlation, the accuracy of semantic understanding of the target question is improved, which solves the technical problem in the prior art that matching on literal correlation returns answers that fail to meet user requirements and have low accuracy.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
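
The matching step in the abstract above (compare a sentence vector with the index vector of every library question, then return the best match) can be sketched as follows. This is a minimal illustration: cosine similarity is an assumed choice of similarity measure, the three-dimensional vectors stand in for the output of a sentence encoder, and the library contents are invented.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def best_match(query_vec, library):
    """library holds (standard_question, index_vector, answer) tuples."""
    return max(library, key=lambda item: cosine(query_vec, item[1]))

# Hypothetical Q&A library with precomputed semantic index vectors.
library = [
    ("How do I reset my password?", [0.9, 0.1, 0.0], "Use the 'Forgot password' link."),
    ("How do I close my account?", [0.1, 0.9, 0.2], "Contact support to close the account."),
]
query_vec = [0.8, 0.2, 0.1]  # stand-in for the encoded target question
question, _, answer = best_match(query_vec, library)
```

A production system would typically replace the linear scan with an approximate nearest-neighbour index, but the ranking criterion stays the same.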

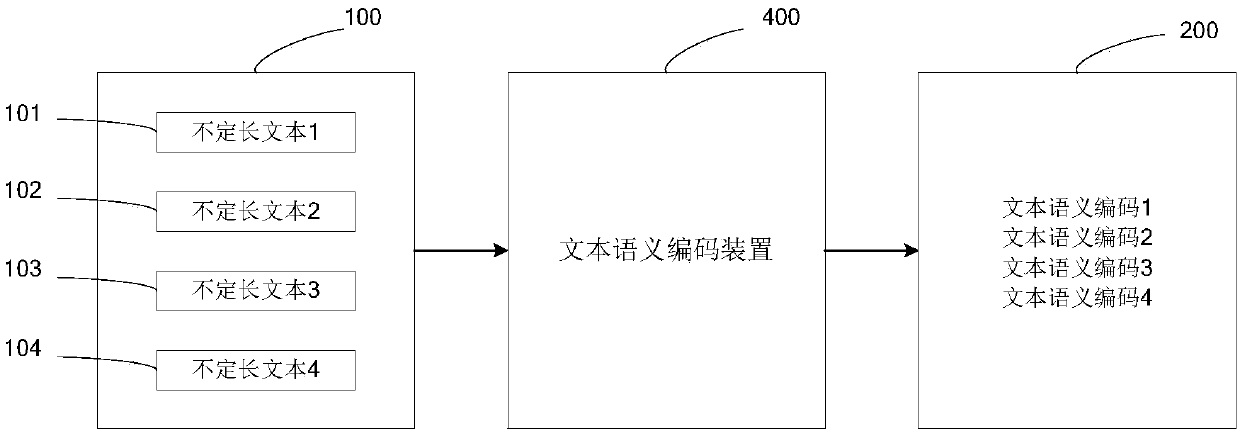

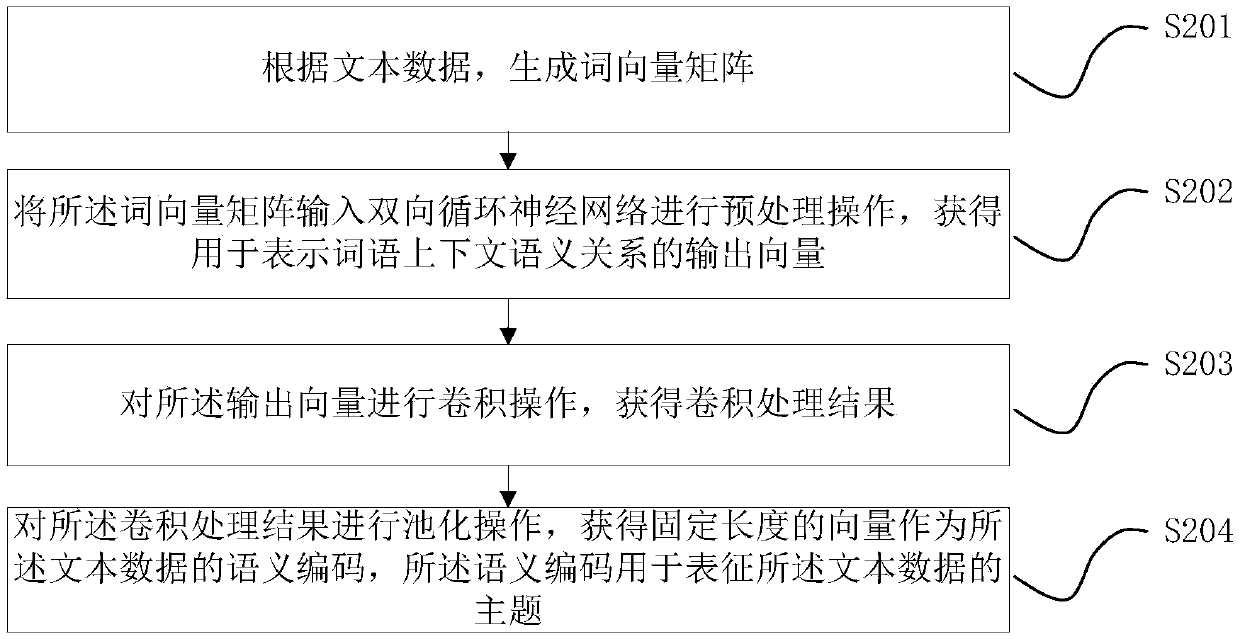

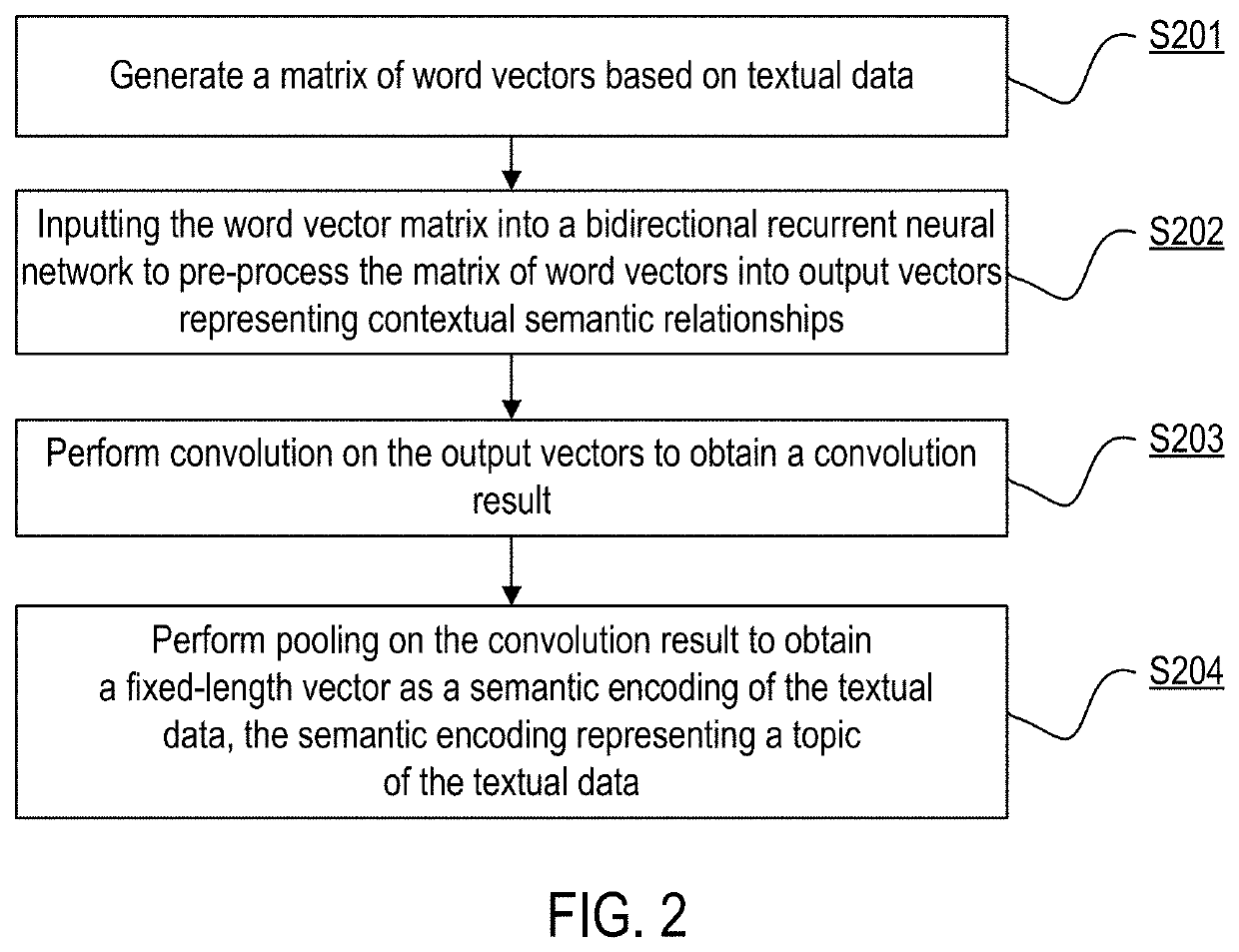

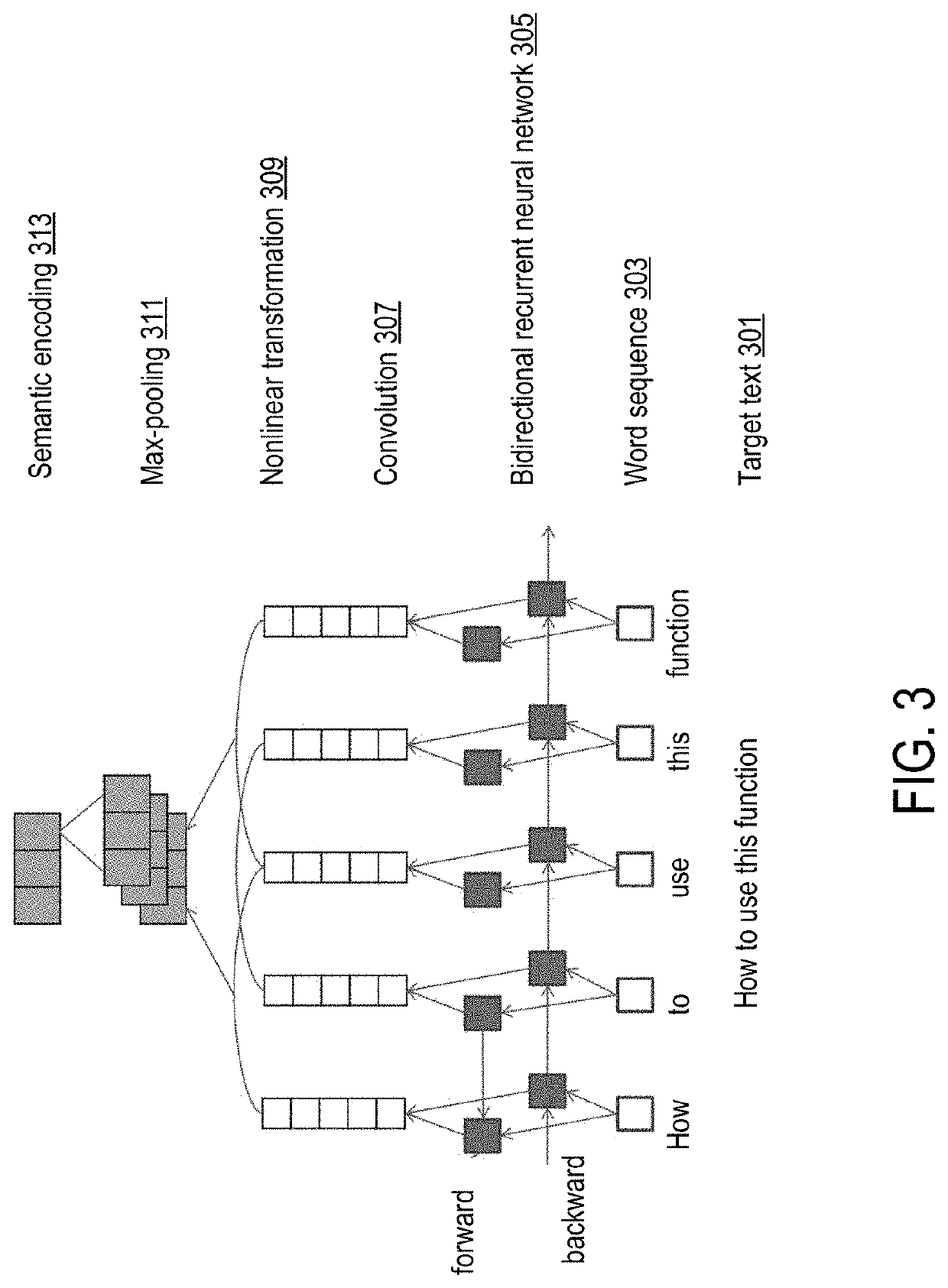

Text semantic coding method and device

Pending | CN110019793A | Text Semantic Encoding Implementation | Semantic analysis | Neural architectures | Semantics encoding | Fixed length

Owner:ALIBABA GRP HLDG LTD

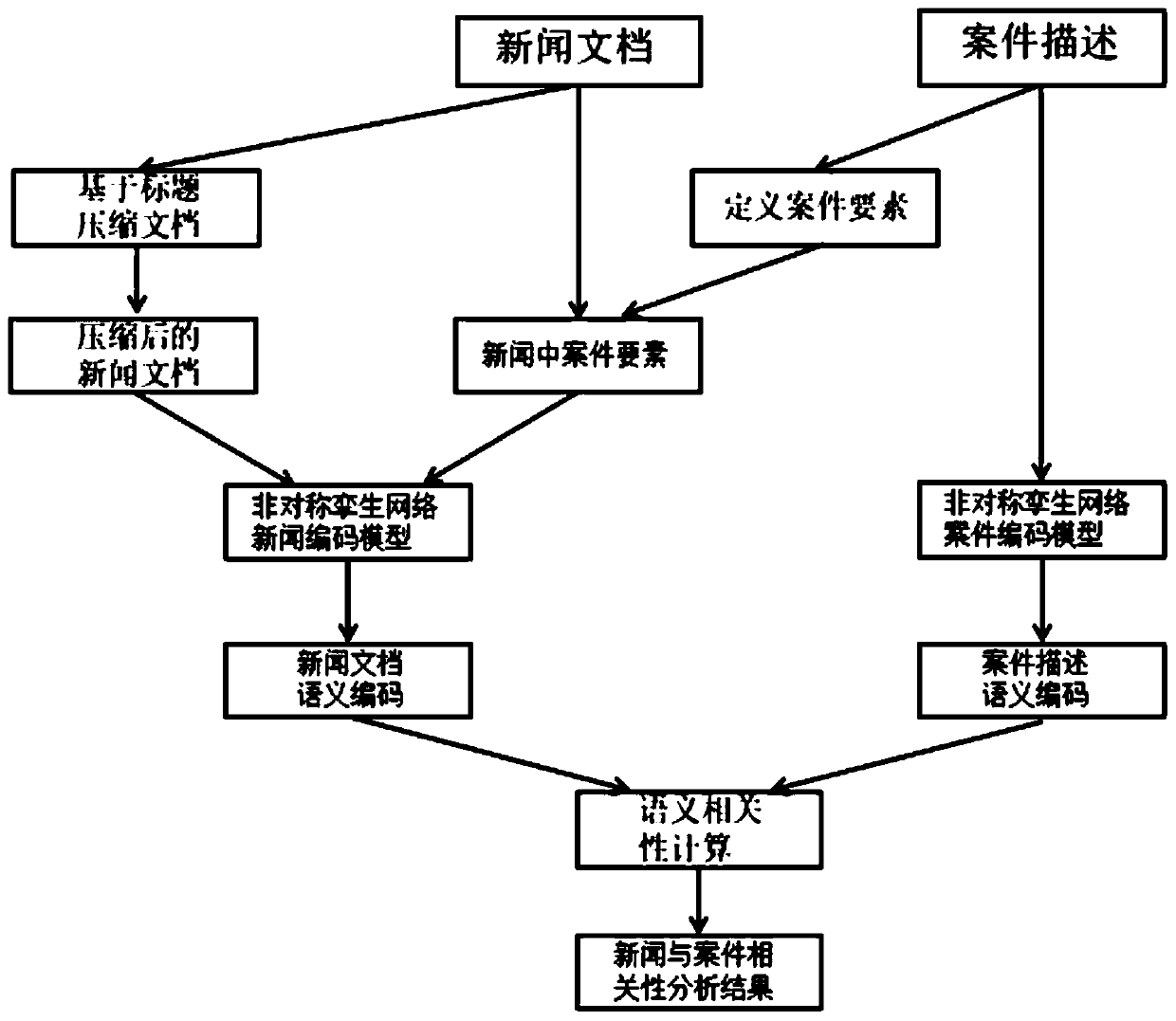

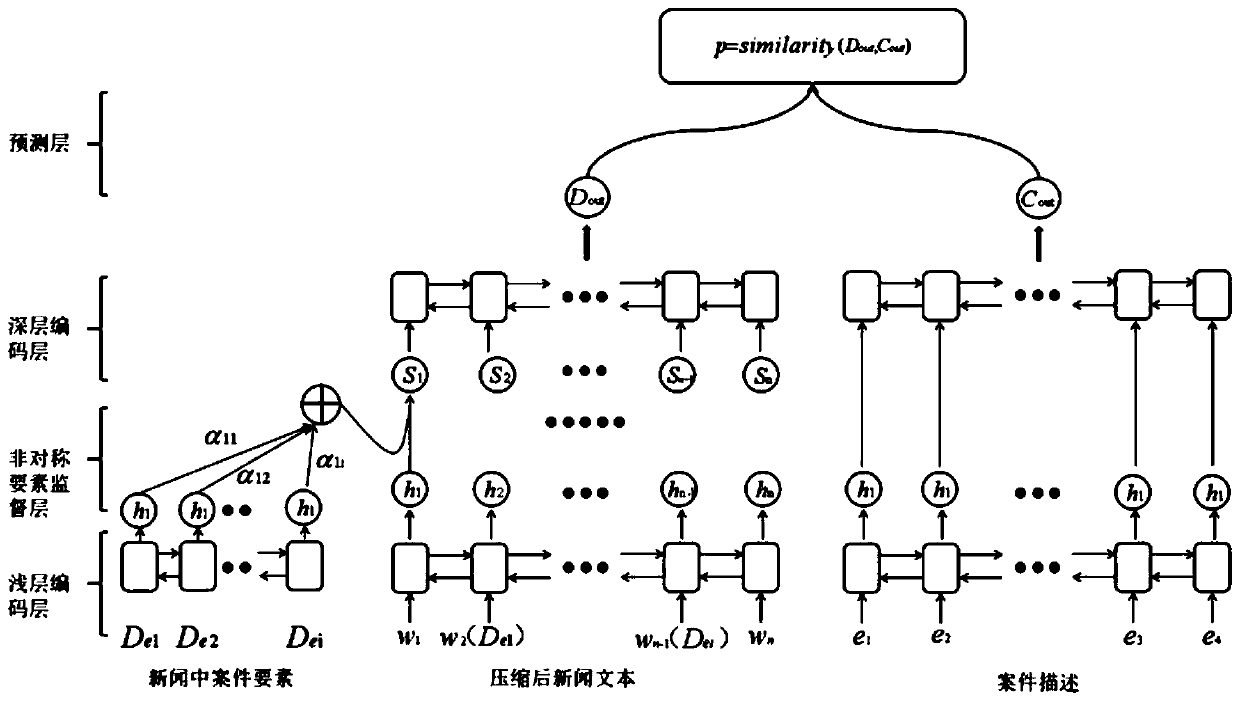

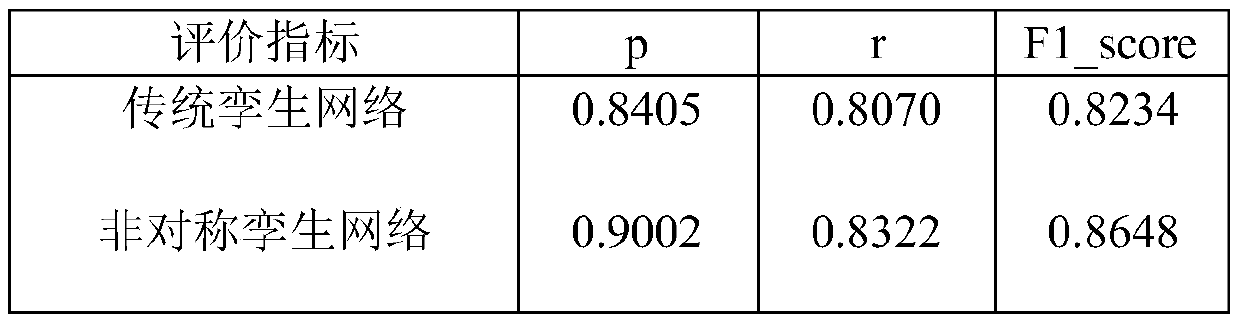

News and case similarity calculation method based on asymmetric twin network

Active | CN110717332A | Solve the problem of redundant content | Easy to learn | Semantic analysis | Text processing | Document similarity | Theoretical computer science

The invention relates to a news and case similarity calculation method based on an asymmetric twin network, and belongs to the technical field of natural language processing. The method comprises the following steps: first, selecting the sentences most relevant to the news title to represent the document, by calculating the similarity between the sentences in the text and the title, so as to remove redundant sentences from the news text; then modeling the document and the case description with an asymmetric twin network, where, because the case elements contain the key semantic information of the case, they are fused into the network as supervision information when encoding the news document and the case description; and finally judging the correlation between the news and the case by calculating document similarity. Because similarity is calculated between the news text and the case description with an asymmetric twin network, semantic coding models can be built for the unbalanced news text and case description, and the accuracy of similarity calculation can be improved.

Owner:KUNMING UNIV OF SCI & TECH

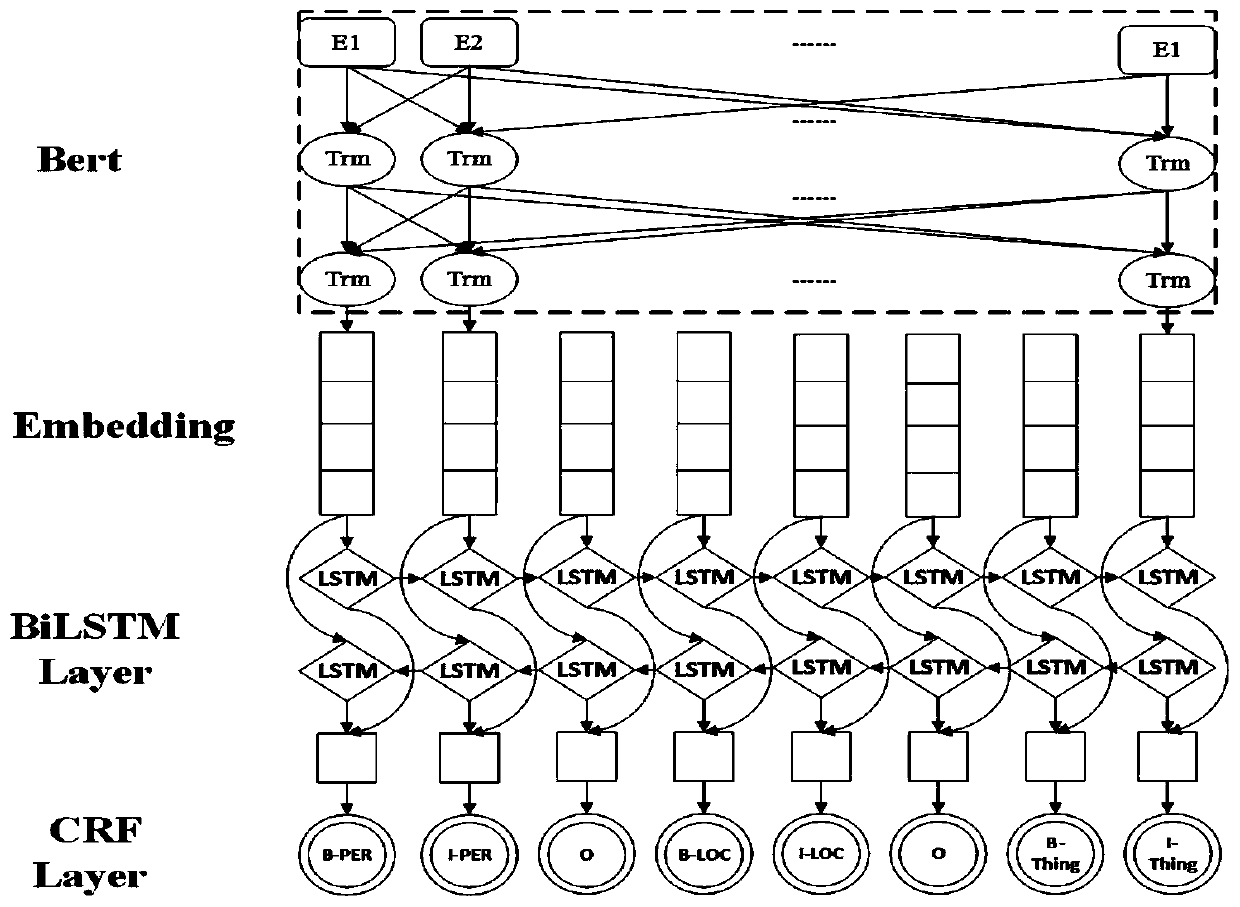

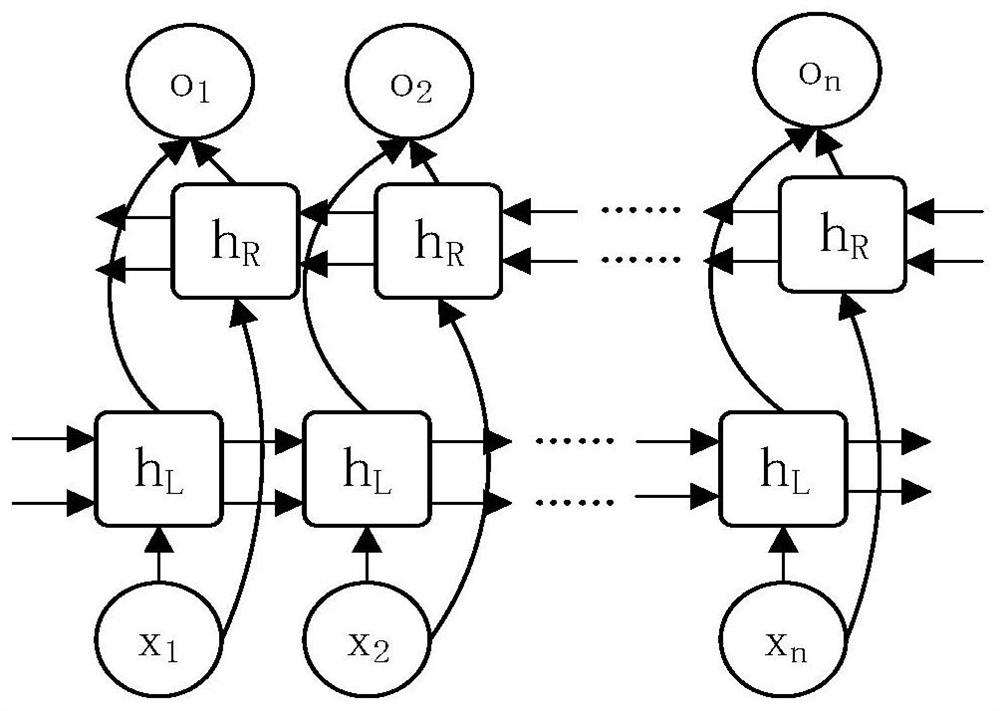

Tourism named entity identification method based on BBLC model

Active | CN111310471A | Improve accuracy | Improve recall | Natural language data processing | Neural architectures | Algorithm | Named-entity recognition

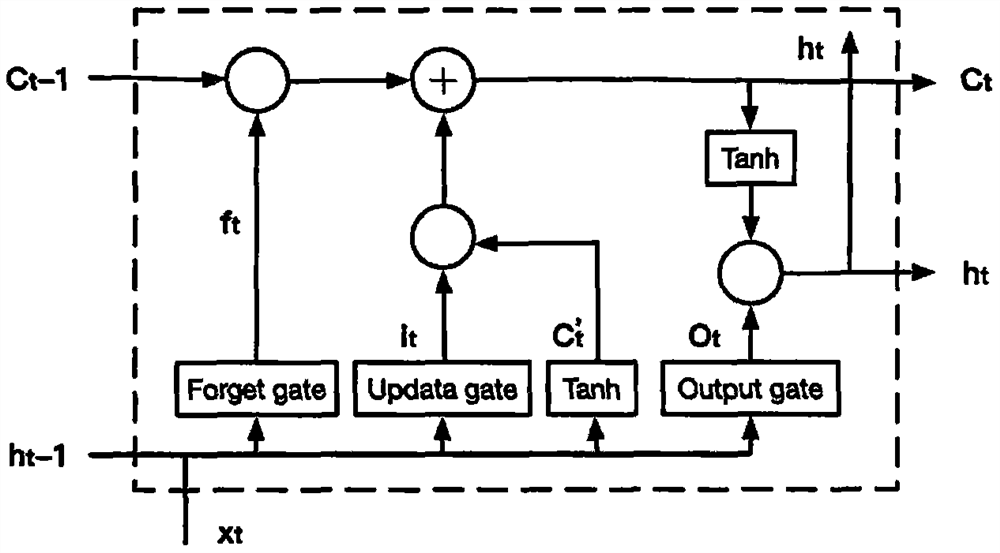

The invention discloses a tourism named entity recognition method based on a BBLC model. The method comprises the steps of: performing BIO tagging on the statements in a corpus to obtain a BIO annotation set; inputting the BIO annotation set into a BERT pre-trained language model and outputting a vector representation of each word in a statement, namely the word embedding sequence of each statement; taking the word embedding sequence as the input at each time step of a bidirectional LSTM and performing further semantic coding to obtain a statement feature matrix; and taking the statement feature matrix as the input of a CRF model, labeling and decoding the statement x to obtain its word label sequence, outputting the probability that the label sequence of statement x equals y, solving for the optimal path with the dynamic-programming Viterbi algorithm, and outputting the label sequence with the maximum probability. By adding the BERT pre-trained language model, the method can obtain local context information; its accuracy, recall and F value are higher, its generalization ability and robustness are stronger, and the defects of traditional models can be overcome.

Owner:SHAANXI NORMAL UNIV
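
The final decoding step in the abstract above, finding the highest-scoring label path with the Viterbi algorithm, can be sketched in plain Python. The emission and transition scores below are invented log-space numbers; in the described model they would come from the BiLSTM feature matrix and the trained CRF, which this sketch does not implement.

```python
def viterbi(emissions, transitions, labels):
    """Return (best label path, path score).

    emissions: one dict per token mapping label -> score (log space).
    transitions: dict mapping (previous_label, current_label) -> score.
    """
    best = {lab: emissions[0][lab] for lab in labels}
    backpointers = []
    for emit in emissions[1:]:
        new_best, pointer = {}, {}
        for cur in labels:
            prev = max(labels, key=lambda p: best[p] + transitions[(p, cur)])
            new_best[cur] = best[prev] + transitions[(prev, cur)] + emit[cur]
            pointer[cur] = prev
        best = new_best
        backpointers.append(pointer)
    last = max(labels, key=lambda lab: best[lab])
    path = [last]
    for pointer in reversed(backpointers):
        path.append(pointer[path[-1]])
    return path[::-1], best[last]

labels = ["B", "I", "O"]
# Assumed constraint: an I tag may not directly follow an O tag.
transitions = {(p, c): 0.0 for p in labels for c in labels}
transitions[("O", "I")] = -1e4
# Hypothetical per-token scores for a three-token statement.
emissions = [
    {"B": 1.0, "I": 0.0, "O": 2.0},
    {"B": 0.0, "I": 3.0, "O": 0.5},
    {"B": 0.0, "I": 0.0, "O": 2.0},
]
path, score = viterbi(emissions, transitions, labels)
```

Picking the best label per token independently would give O, I, O here, which violates the BIO constraint; the transition scores are what steer the decoder to a valid sequence.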

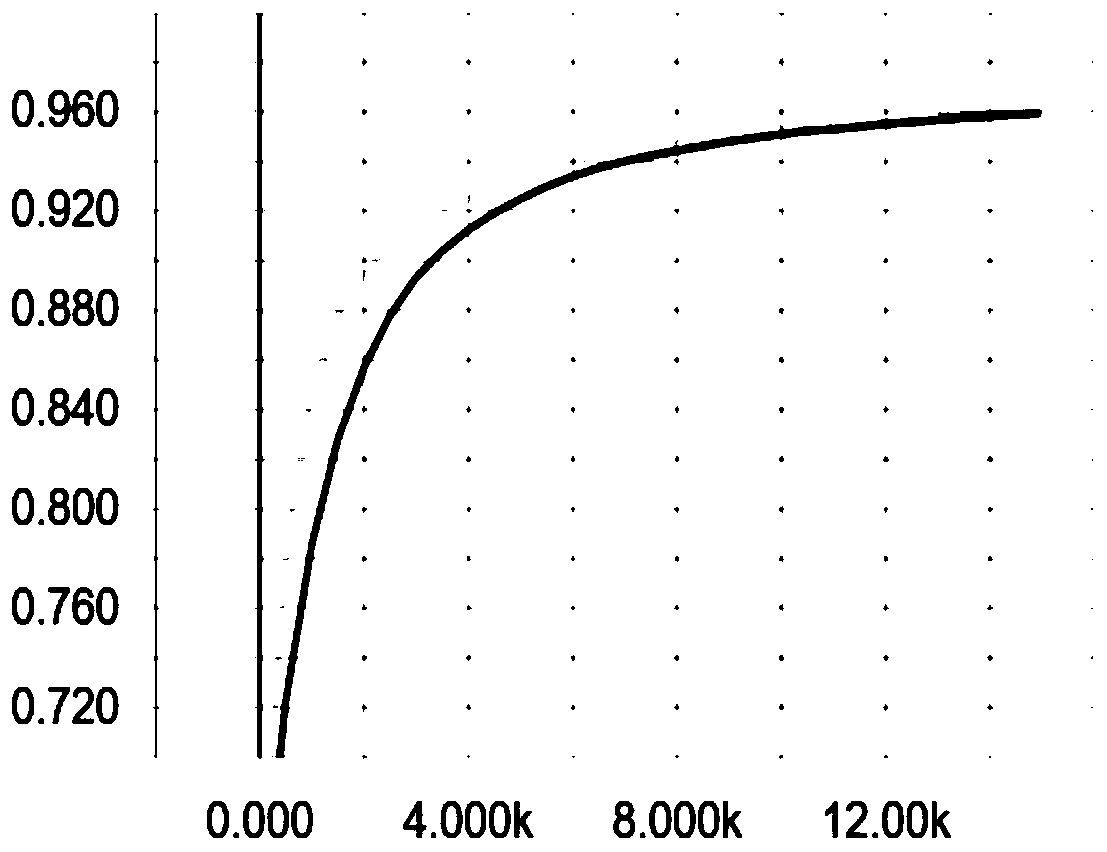

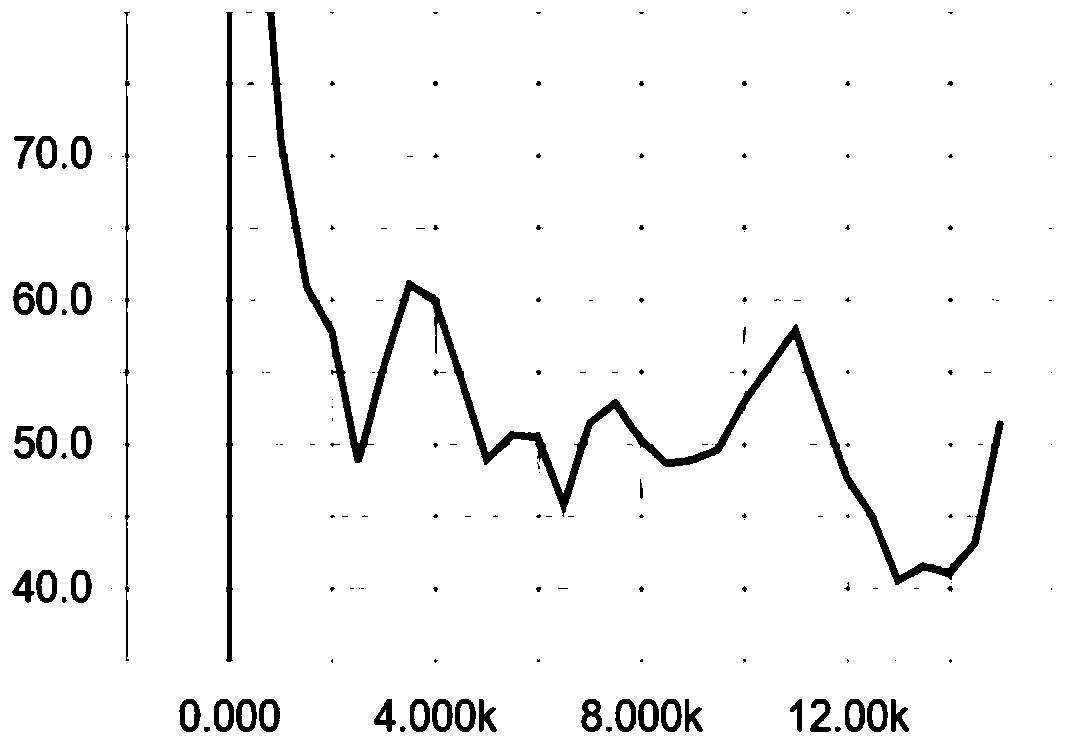

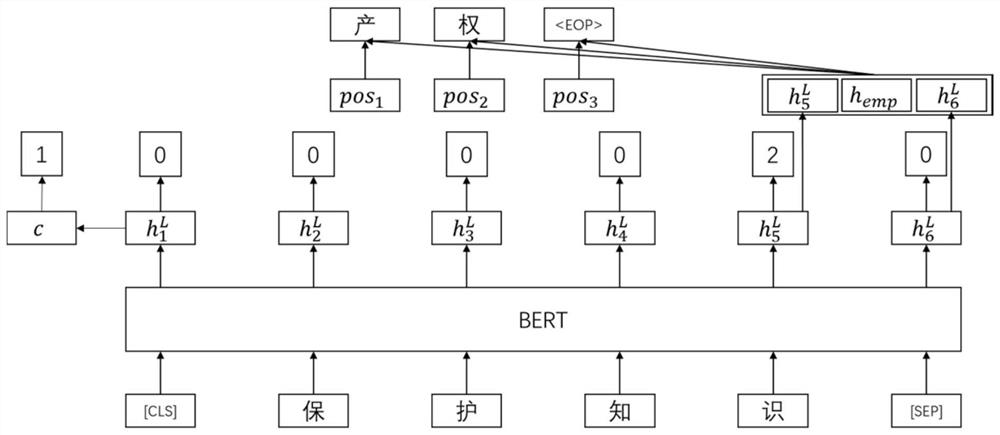

Patent term extraction method

Pending | CN112784051A | Improve accuracy | Accurate identification | Semantic analysis | Neural architectures | Pattern recognition | Semantics encoding

The invention discloses a patent term extraction method which comprises the following steps: converting each character of a labeled character-level corpus into a word vector by utilizing a BERT pre-training language model layer, inputting the word vector into a BiLSTM layer for semantic coding, and automatically extracting sentence features; and decoding and outputting the prediction label sequence with the maximum probability by using a CRF layer to obtain the labeling type of each character, and extracting and classifying entities in the sequence. According to the patent term extraction method provided by the embodiment of the invention, the BERT is utilized to vectorize the professional field patent text, the accuracy of the term extraction result can be effectively improved, the extraction effect is better than that of a current mainstream deep learning term extraction model, the accuracy, the recall rate and the F1 value are remarkably improved in the professional field patent text term extraction, and professional field patent long sequence terms with many characters can be accurately and rapidly identified.

Owner:BEIJING INFORMATION SCI & TECH UNIV

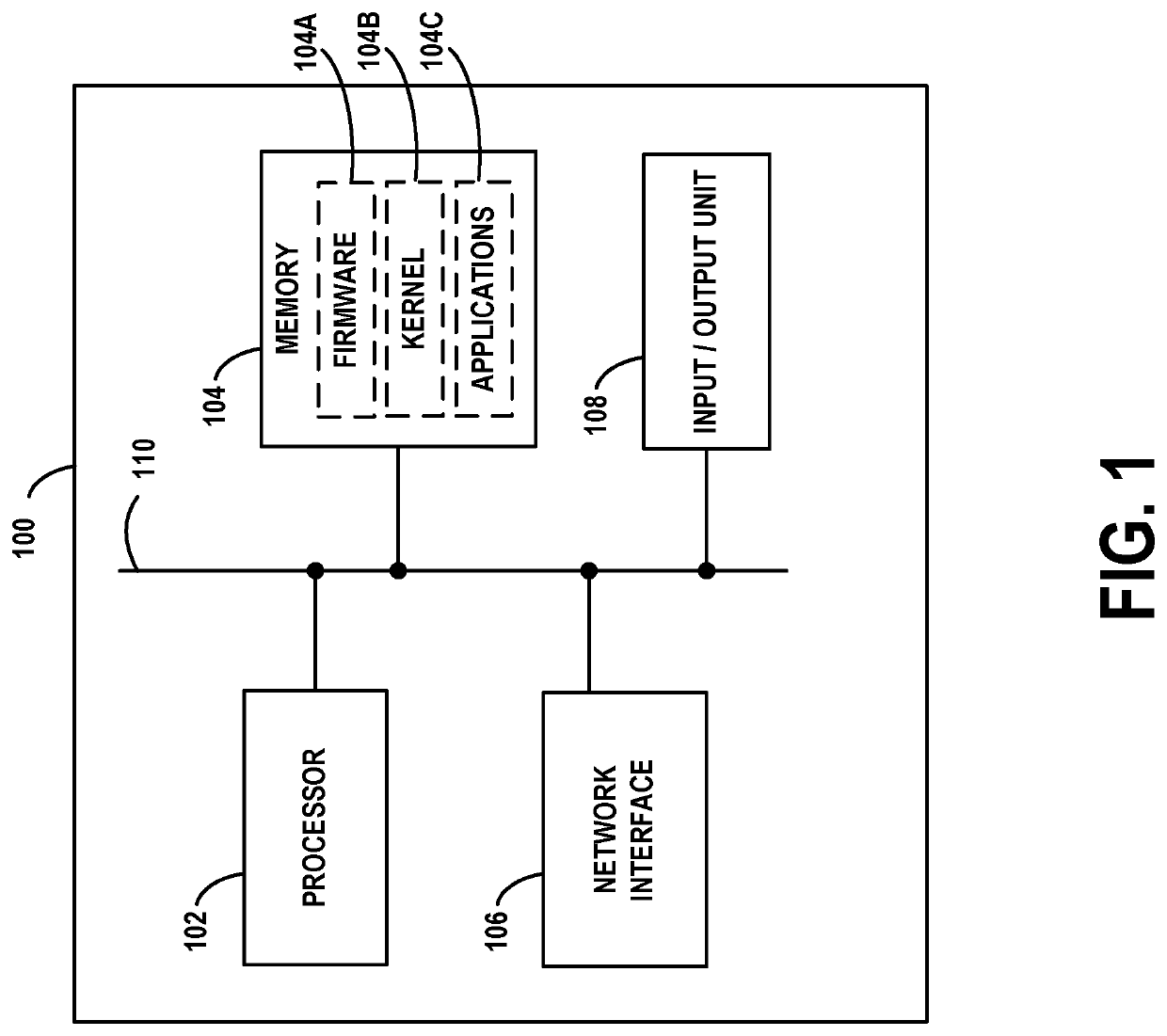

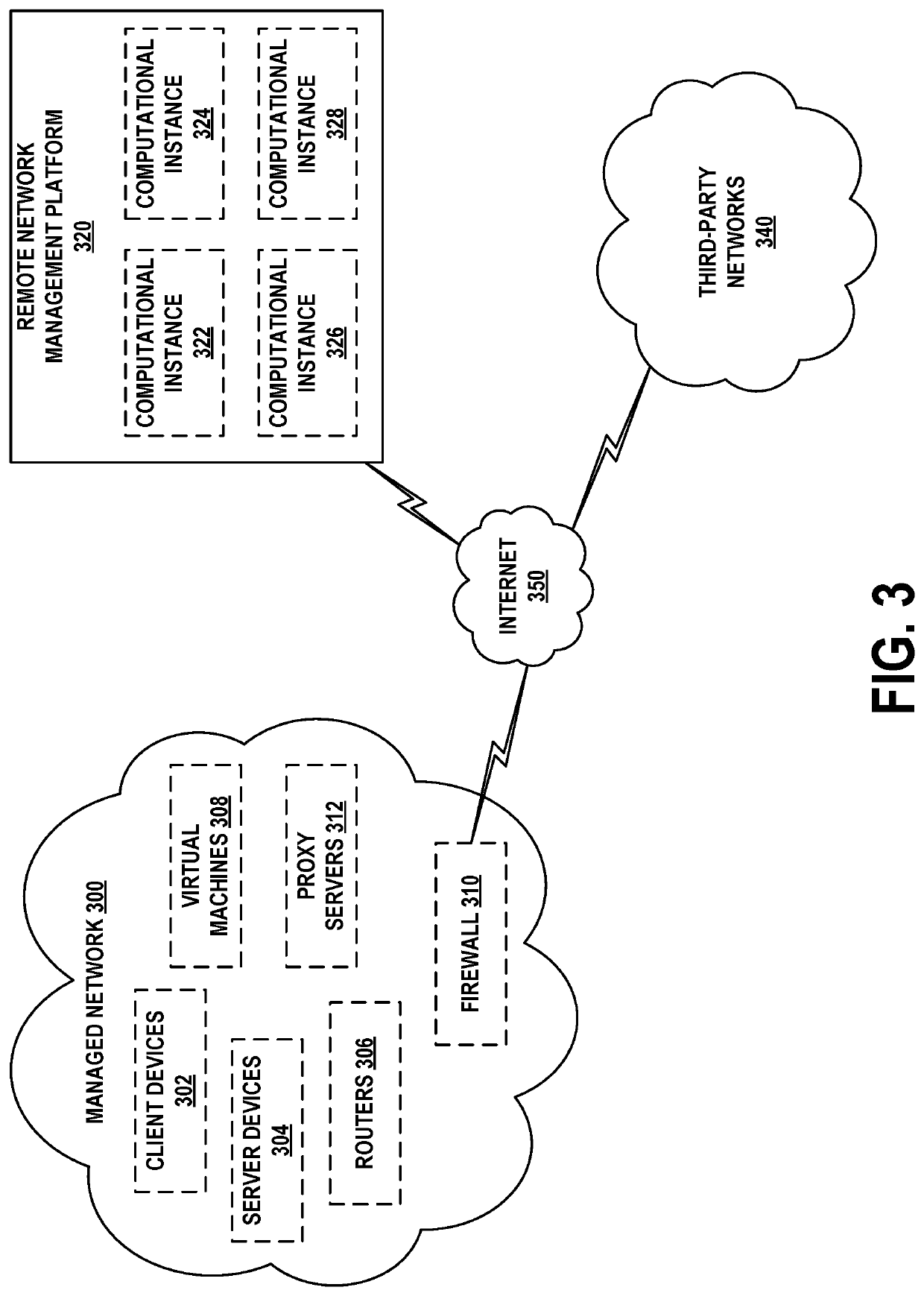

Persistent word vector input to multiple machine learning models

Word vectors are multi-dimensional vectors that represent words in a corpus of text and that are embedded in a semantically-encoded vector space. Word vectors can be used for sentiment analysis, comparison of the topic or content of sentences, paragraphs, or other passages of text or other natural language processing tasks. However, the generation of word vectors can be computationally expensive. Accordingly, when a set of word vectors is needed for a particular corpus of text, a set of word vectors previously generated from a corpus of text that is sufficiently similar to the particular corpus of text, with respect to some criteria, may be re-used for the particular corpus of text. Such similarity could include the two corpora of text containing the same or similar sets of words or containing incident reports or other time-coded sets of text from overlapping or otherwise similar periods of time.

Owner:SERVICENOW INC
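
The re-use decision described in the abstract above can be sketched as a vocabulary-overlap check. Jaccard similarity and the 0.5 threshold are assumptions chosen for illustration (the abstract only says the corpora must be "sufficiently similar"), and the cache layout and names are hypothetical.

```python
def vocabulary(corpus):
    """Lower-cased set of whitespace-separated words in a list of texts."""
    return {word.lower() for text in corpus for word in text.split()}

def jaccard(a, b):
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def find_reusable_vectors(new_corpus, cache, threshold=0.5):
    """cache maps a name to (vocabulary set, word vectors).

    Returns the cached word vectors of the most similar corpus,
    or None if no cached corpus reaches the threshold.
    """
    new_vocab = vocabulary(new_corpus)
    best_name, best_sim = None, 0.0
    for name, (vocab, _) in cache.items():
        sim = jaccard(new_vocab, vocab)
        if sim > best_sim:
            best_name, best_sim = name, sim
    if best_name is not None and best_sim >= threshold:
        return cache[best_name][1]
    return None

# Hypothetical cache with one previously processed corpus.
cache = {
    "incidents_q1": (
        {"server", "down", "disk", "full", "reboot"},
        {"server": [0.1, 0.2], "disk": [0.3, 0.1]},
    )
}
reused = find_reusable_vectors(["Server down", "disk full again"], cache)
not_reused = find_reusable_vectors(["invoice overdue"], cache)
```

When the function returns None, the caller would fall back to training new word vectors for the corpus.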

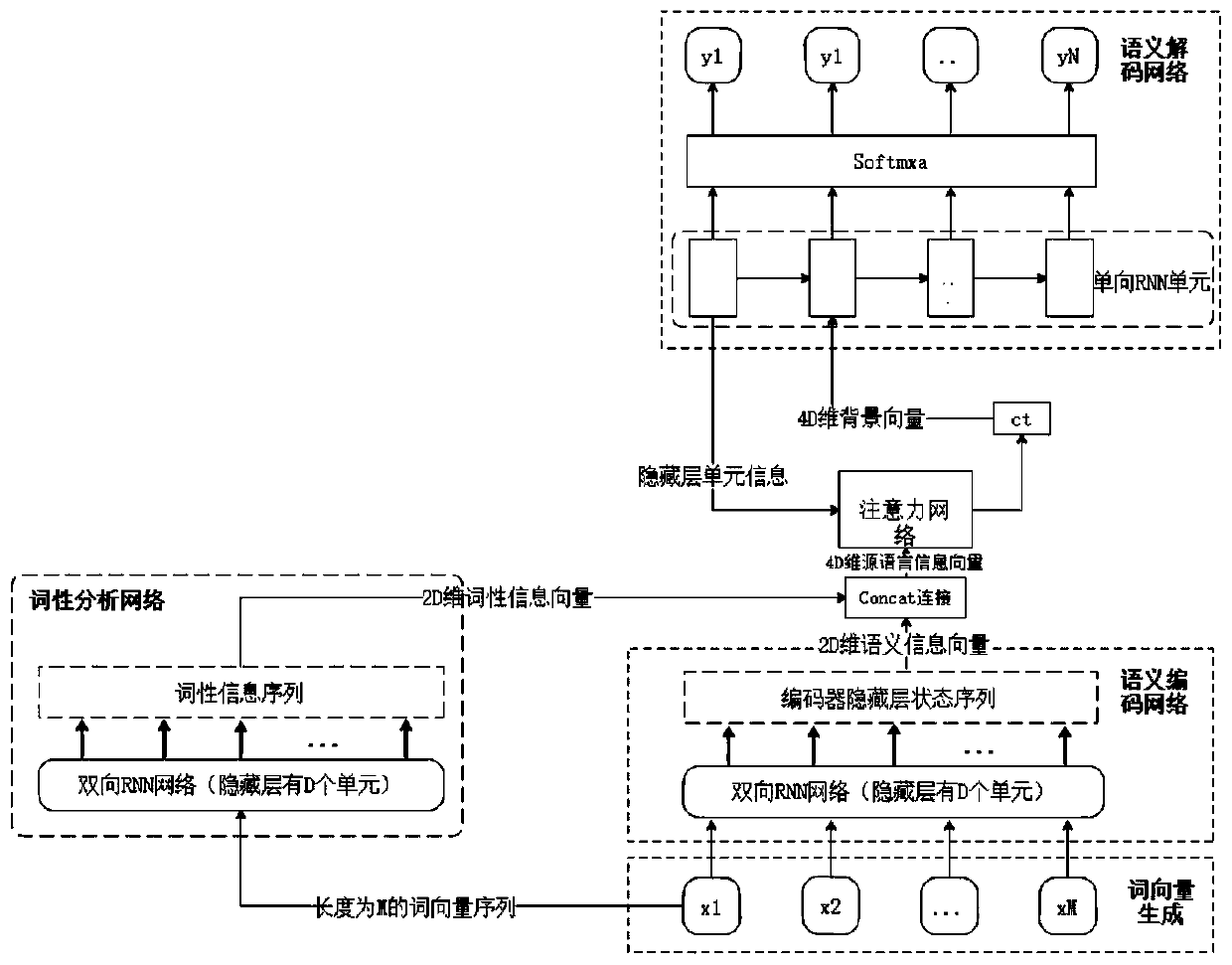

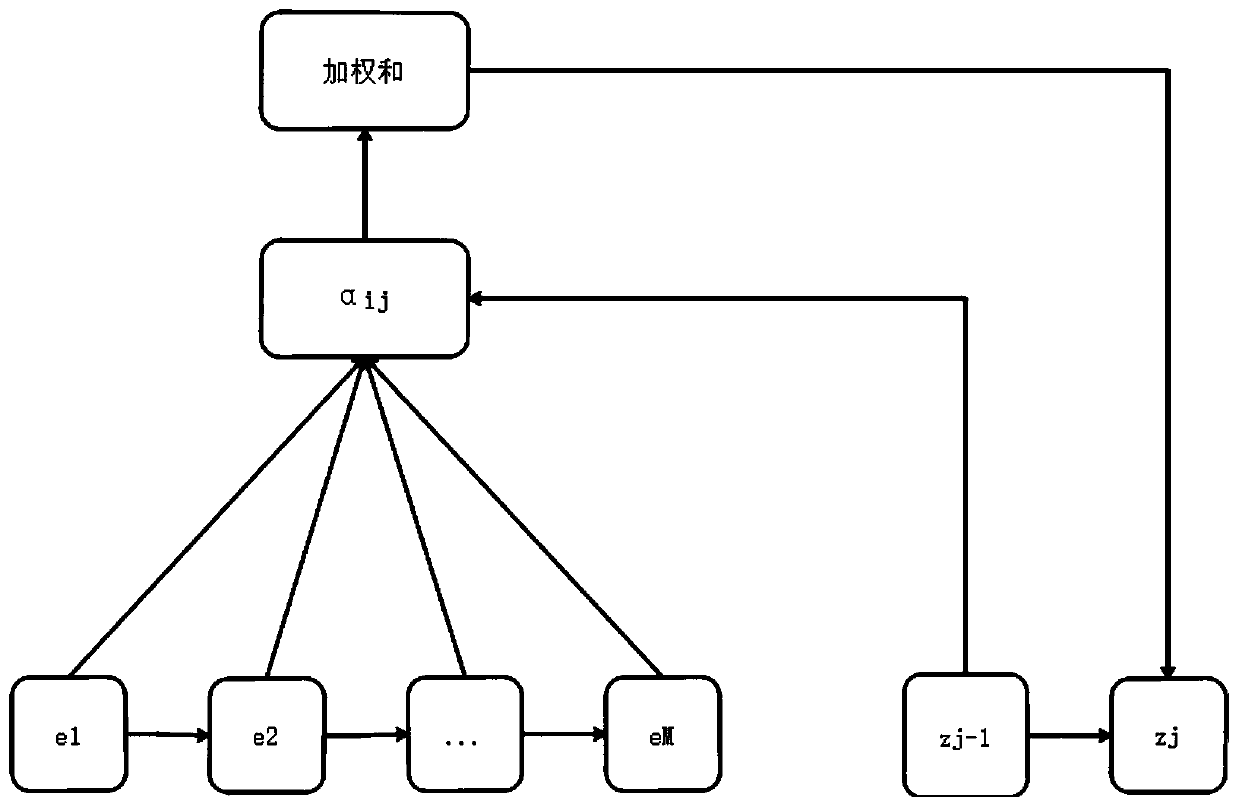

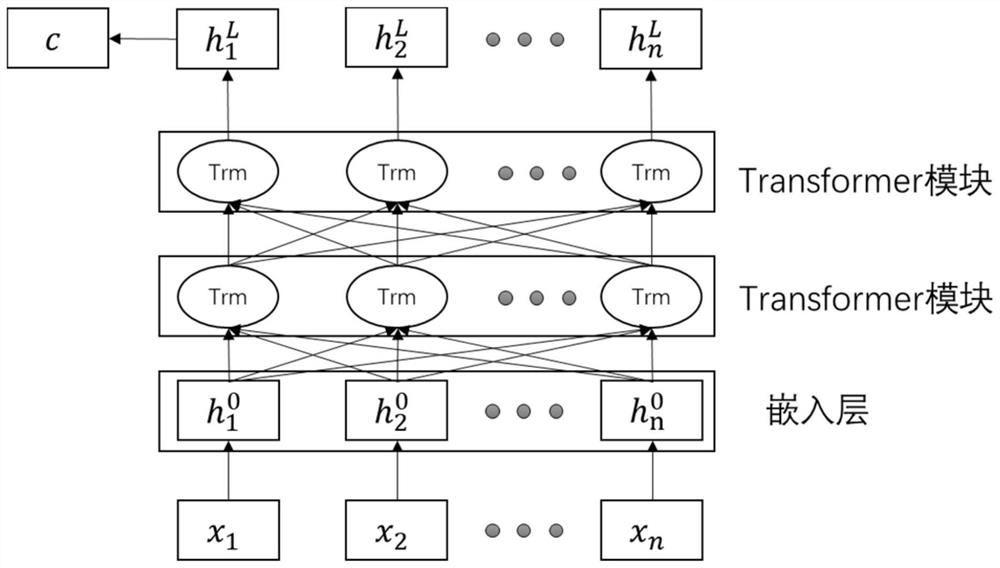

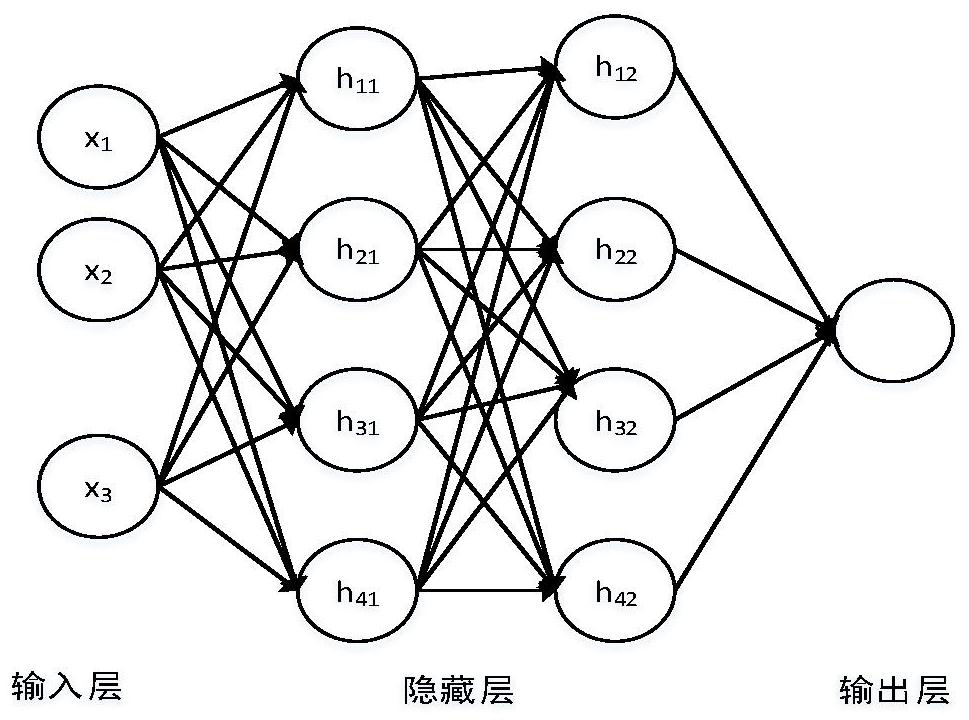

Chinese language processing model and method based on deep neural network

Active | CN110188348A | Improve accuracy | Guarantee strict confrontation | Semantic analysis | Neural architectures | Part of speech | Network output

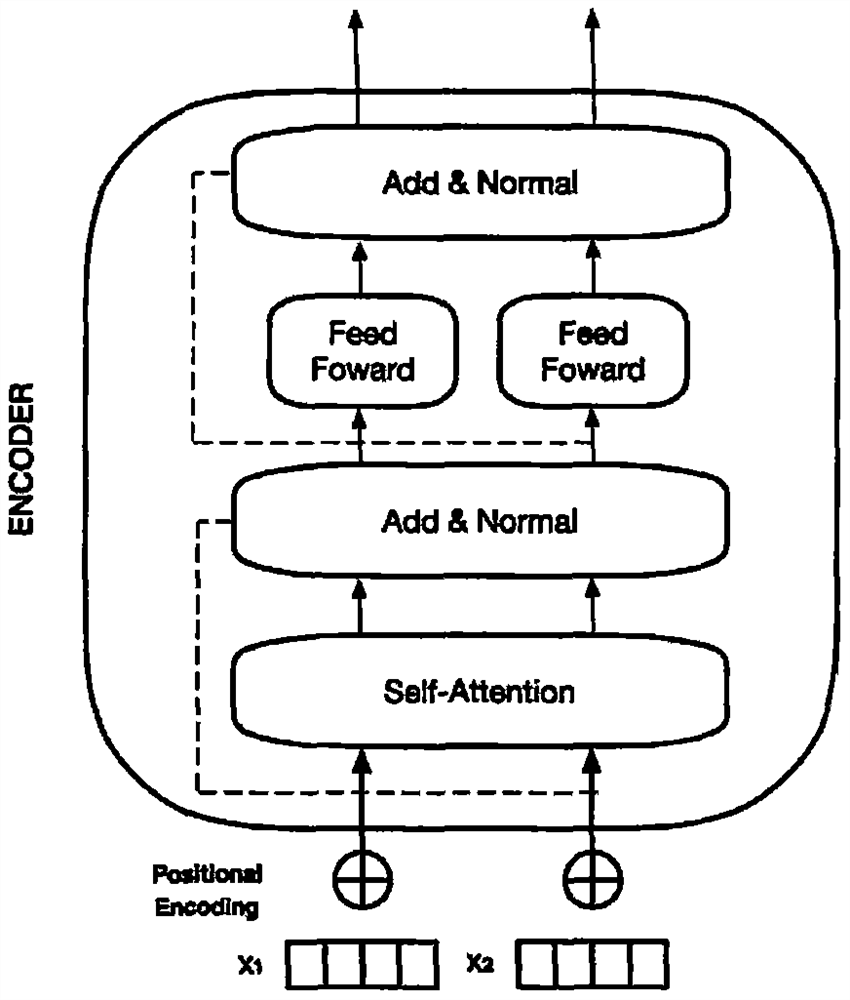

The invention discloses a Chinese language processing model and method based on a deep neural network. The model comprises three parts: a semantic coding network, a part-of-speech analysis network and a semantic decoding network, wherein the semantic coding network and the part-of-speech analysis network are connected to the semantic decoding network through an attention network. The semantic coding network and the part-of-speech analysis network first process the word vectors generated from a source text: the semantic coding network outputs a semantic information vector of the source text, and the part-of-speech analysis network outputs a part-of-speech information vector of the source text. The two vectors are joined by concat() to serve as the input of the attention network, which generates background vectors containing all information of the source text as the input of the semantic decoding network. The semantic decoding network computes the probability distribution over all candidate words from the background vectors and outputs the elements of the target text one by one according to that distribution, so that the text mapping accuracy and the system performance are improved.

Owner:NANJING UNIV OF POSTS & TELECOMM

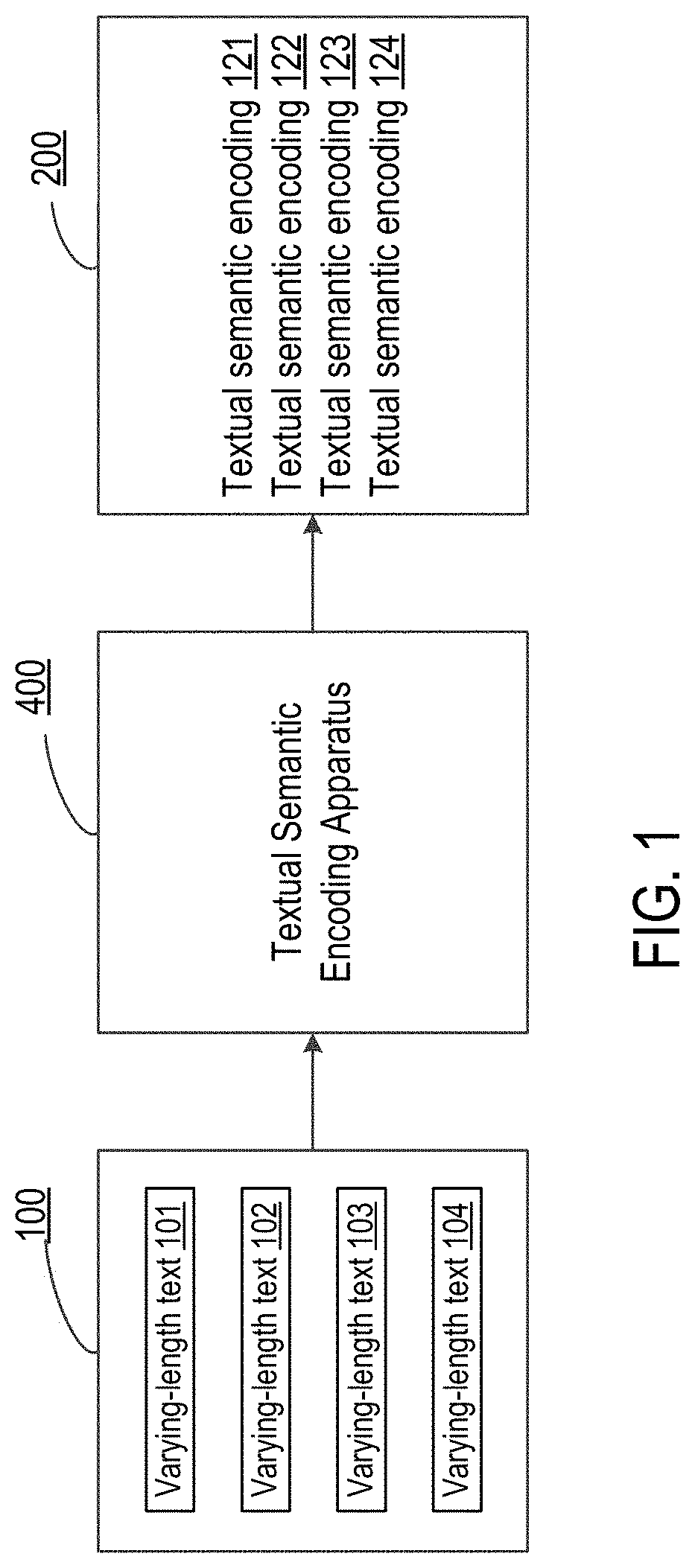

Method and apparatus for textual semantic encoding

Inactive | US20200250379A1 | Fail to accurately | Semantic analysis | Knowledge representation | Algorithm | Theoretical computer science

Owner:ALIBABA GRP HLDG LTD

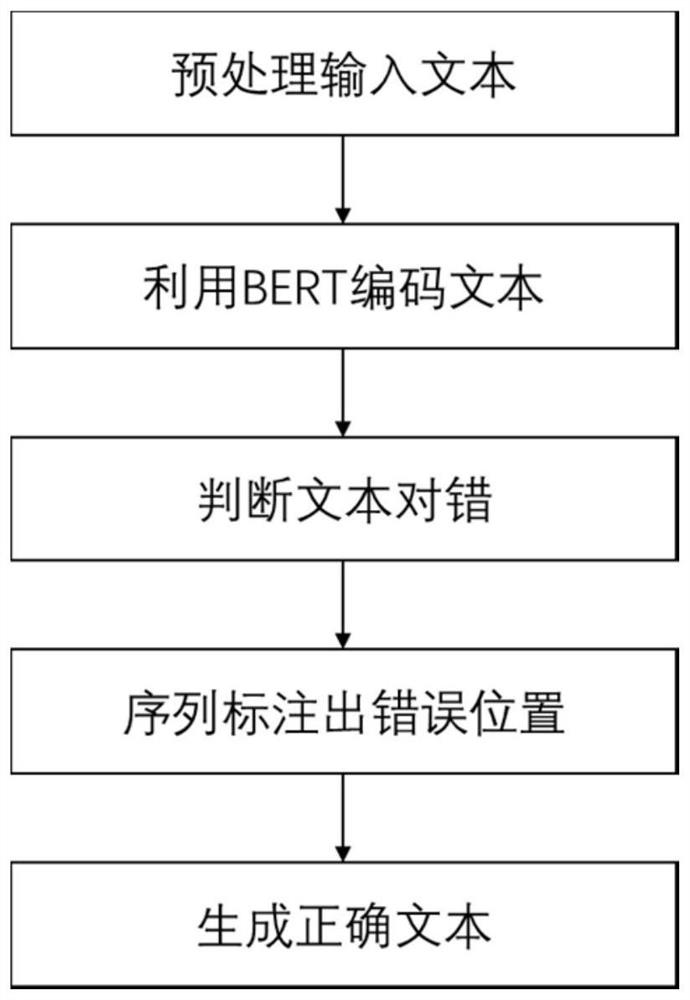

BERT and feedforward neural network-based text error correction method

Pending | CN112836496A | Time-consuming to improve | Accurate error correction | Semantic analysis | Neural architectures | Engineering | Semantics encoding

The invention discloses a BERT and feedforward neural network-based text error correction method, which can be used for quickly and accurately identifying and correcting errors of large-scale corpora. The method comprises the following steps: firstly, preprocessing a text, then carrying out semantic coding on the text by using BERT, then judging whether the text is correct or not by using semantic information of the whole text, then finding out a specific position of an error in the text which is judged to be wrong by using a sequence labeling method, and finally, combining context information of the error to determine whether the error occurs in the text; and generating a corresponding correct text by using the feedforward neural network. The text error correction method constructed by the invention has the characteristics of high reasoning speed and good interpretability.

Owner:ZHEJIANG LAB +1

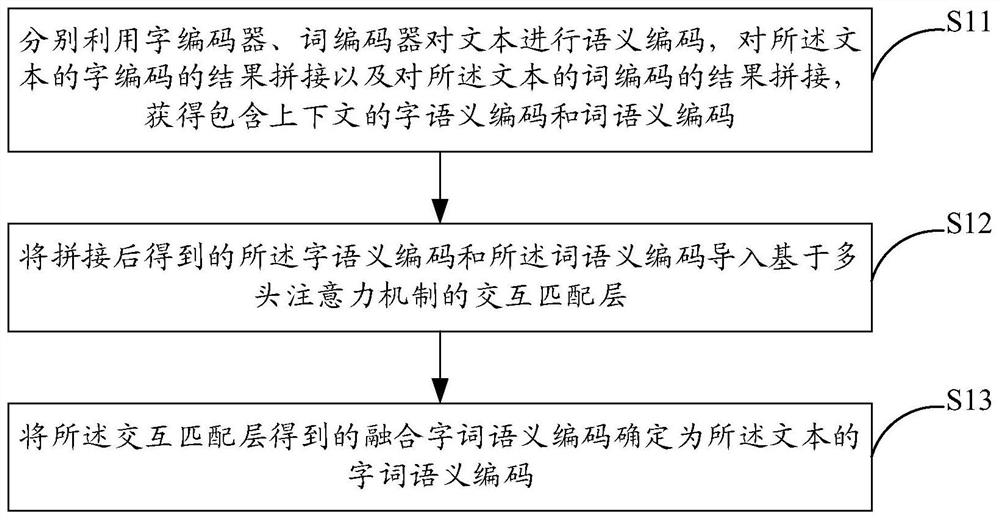

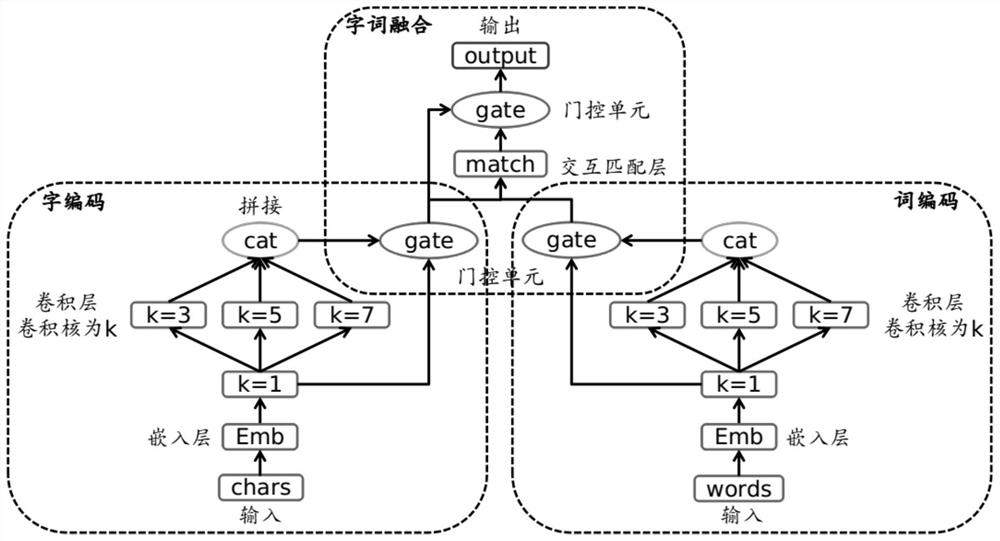

Text semantic coding method and system

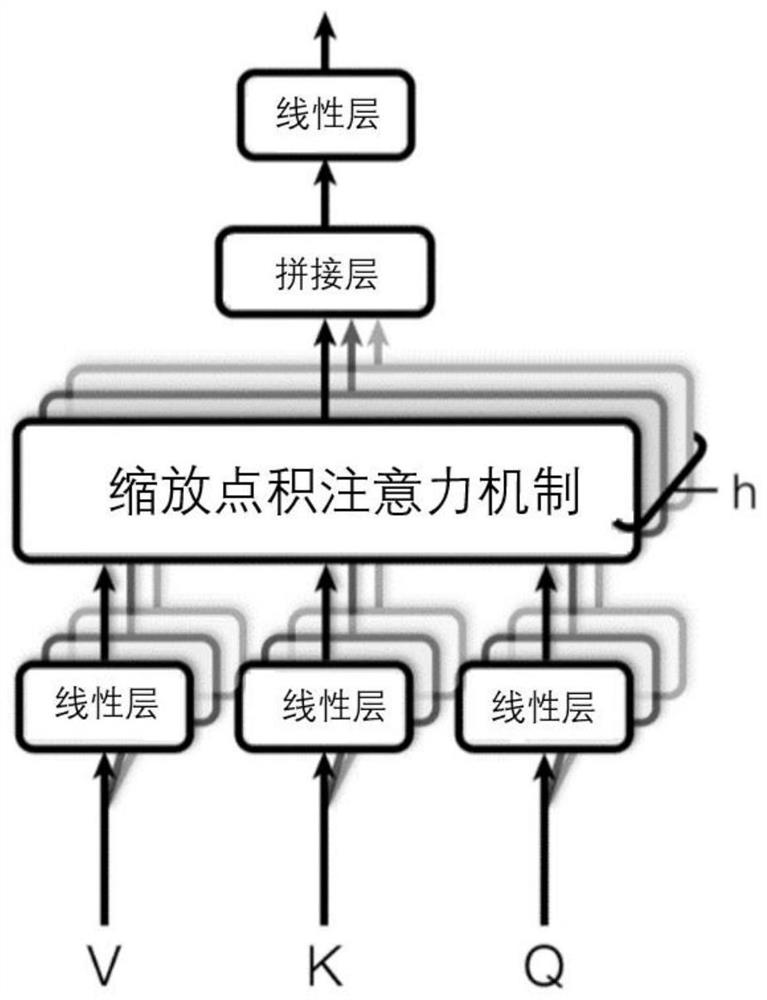

The embodiment of the invention provides a text semantic coding method. The method comprises: performing semantic encoding on a text with a character encoder and a word encoder, splicing the character encoding results of the text, splicing the word encoding results of the text, and obtaining character semantic codes and word semantic codes that contain context; feeding the spliced character semantic codes and word semantic codes into an interactive matching layer based on a multi-head attention mechanism; and taking the fused semantic code produced by the interactive matching layer as the semantic code of the text. The embodiment of the invention further provides a text semantic coding system. The embodiment of the invention provides an encoder based on multilayer character-word fusion. In this encoder, after characters and words are semantically encoded, the resulting character and word semantic codes interact with each other and are then fused by an adaptively trained gating unit, so that the final text semantic representation contains deeper character and word semantic information.

Owner:AISPEECH CO LTD

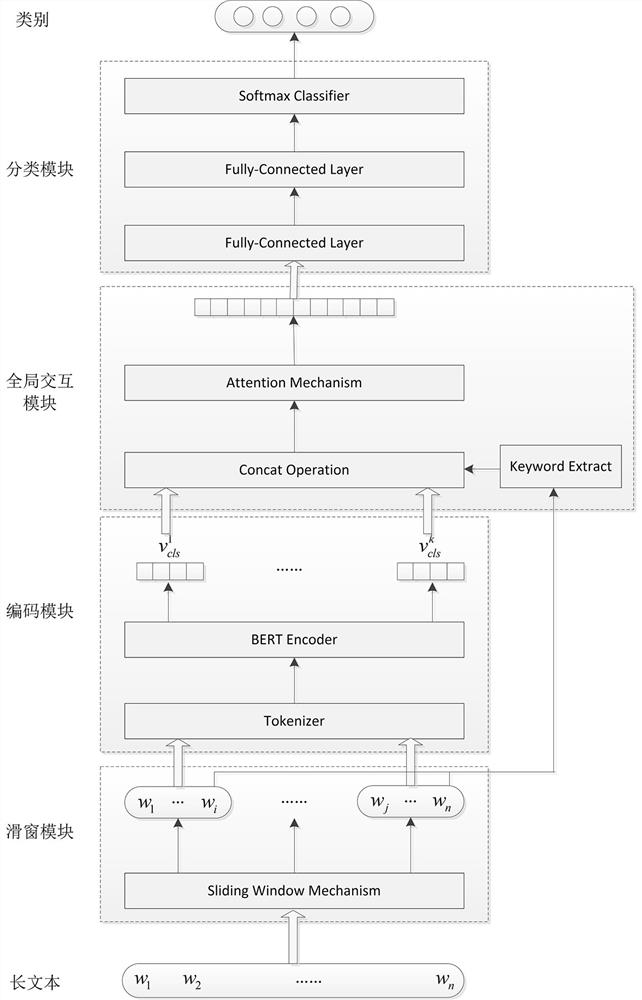

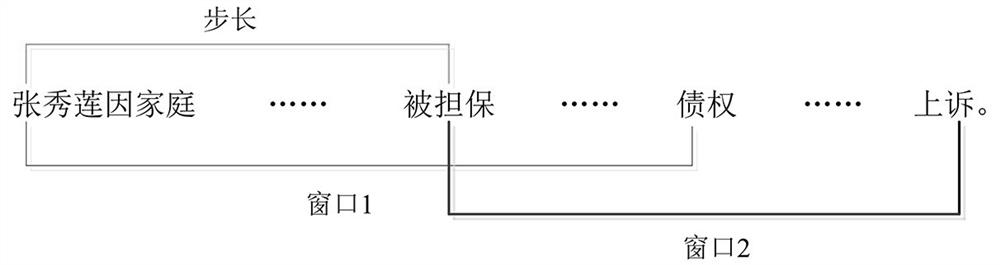

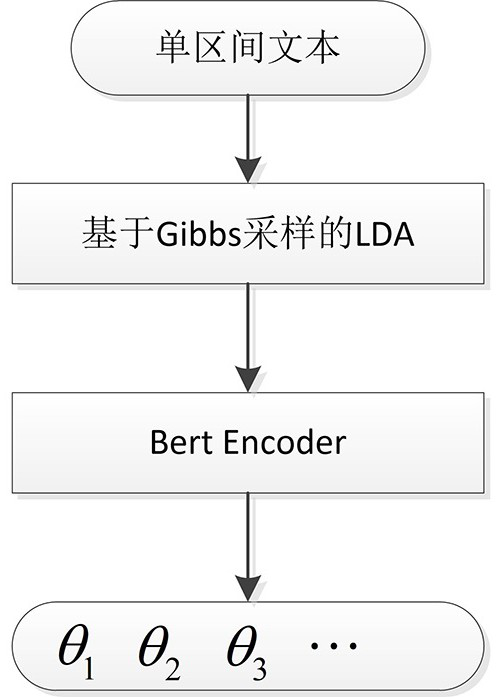

Long text cascade classification method, system and device and storage medium

Pending | CN111930952A | Improve experience | Increase viscosity | Semantic analysis | Special data processing applications | Semantic vector | Dimensionality reduction

The invention relates to a long text cascade classification method which comprises the following steps: S1, preprocessing input long text data with a sliding window mechanism and segmenting it into a plurality of intervals; S2, performing semantic encoding on the text in each interval to obtain a local semantic vector for each interval; S3, extracting keywords from the interval text, encoding them into keyword vectors, and splicing the keyword vectors with the local semantic vectors to obtain an overall semantic vector of the long text; S4, reducing the dimension of the overall semantic vector and using a classifier to calculate the category label probability distribution from the reduced vector; and S5, training a classification model on the long text corpus according to steps S1-S4. The technical scheme provided by the invention can improve the performance of the underlying classification model to promote the development of other intelligent services in the vertical field, thereby improving the user experience and stickiness of intelligent products.

Owner:杭州识度科技有限公司
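
Step S1 of the abstract above, cutting a long text into overlapping intervals with a sliding window, can be sketched as follows. The window size and stride are illustrative values not taken from the patent, and the input is assumed to be an already tokenized list.

```python
def sliding_windows(tokens, size, stride):
    """Split tokens into windows of at most `size` items, advancing by `stride`.

    The last window may be shorter; every token appears in at least one
    window as long as stride <= size.
    """
    if size <= 0 or stride <= 0:
        raise ValueError("size and stride must be positive")
    if not tokens:
        return []
    windows = []
    start = 0
    while True:
        windows.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break
        start += stride
    return windows

# Hypothetical tokenized text of ten tokens, window of 4 with an overlap of 1.
intervals = sliding_windows(list(range(10)), size=4, stride=3)
```

Choosing a stride smaller than the window size makes neighbouring intervals overlap, so a phrase that straddles a boundary is still seen whole by at least one local encoder call.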

Comment text-based deep learning recommendation method

Inactive | CN112131469A | Avoid problems with statements that lack completeness | Avoid Lack of Integrity Issues | Digital data information retrieval | Semantic analysis | Algorithm | Theoretical computer science

The invention discloses a comment text-based deep learning recommendation method. The method comprises: obtaining semantic features and feature vector matrices of users and articles by applying a BERT model; performing convolution, max pooling and fully connected operations on the vector matrices using a BLSTM model combined with a CNN to obtain final representations of the user features and the article features respectively; and splicing the user feature representations and the article feature representations as the input of an MLP fully connected network, and generating a recommendation list by Top-N sorting. Extracting word embedding features with the BERT model avoids mismatches for words with multiple meanings. The BLSTM model avoids the problems that a one-way LSTM cannot acquire semantic information from back to front and that the expression of sentences lacks integrity: sentences are semantically encoded in both the forward and the reverse direction, giving a more accurate sentence vector representation. Local semantic features are then extracted by the CNN to obtain a more accurate implicit representation, and effective recommendation is carried out.

Owner:ANHUI AGRICULTURAL UNIVERSITY

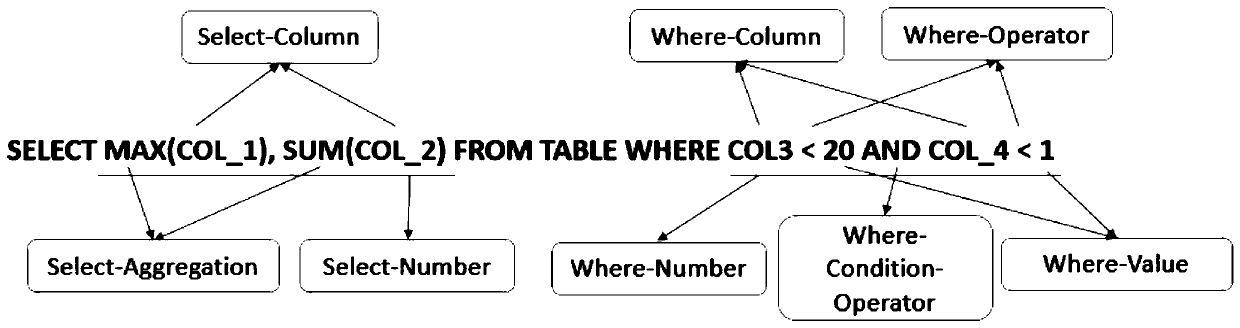

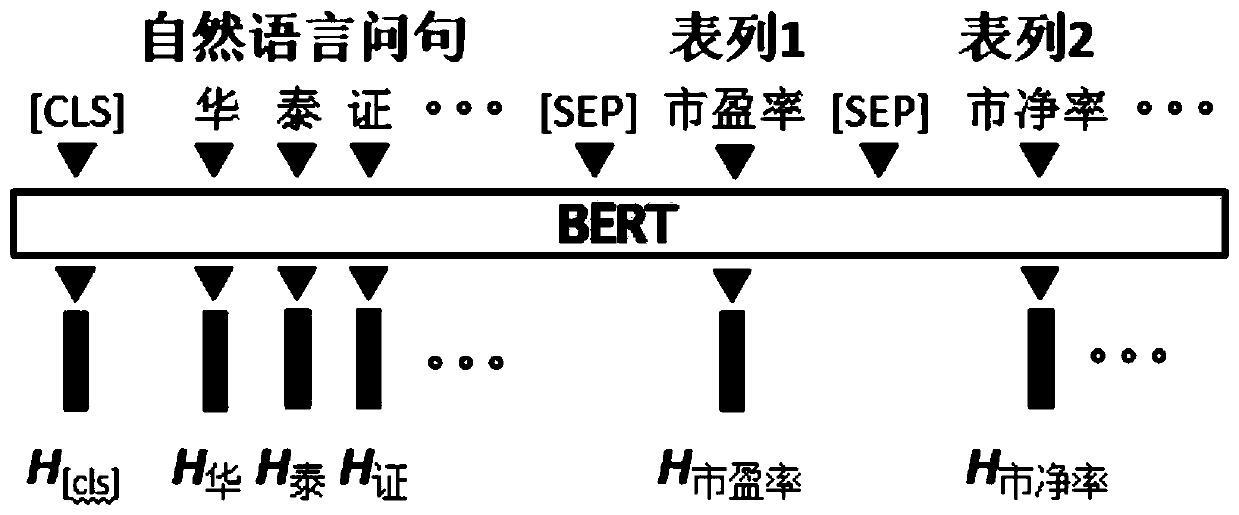

Chinese database SQL statement generation method and device, and storage medium

Active | CN111414380A | Improve scalability | Improve generalization ability | Digital data information retrieval | Semantic analysis | Table (database) | Semantic representation

The invention discloses a Chinese database SQL statement generation method and device, and a storage medium. The method comprises the following steps: decomposing the construction of an SQL statement into a plurality of subtasks according to task type; semantically encoding the natural language query statement and the column names of the table to be queried with a semantic representation model, and then performing sequential and joint prediction for each subtask; and combining the prediction results to generate the SQL statement. The method provided by the embodiment of the invention can effectively solve the technical problems of poor generalization, extensibility and convenience in existing schemes. By combining pre-processing, post-processing and a deep learning model, end-to-end conversion from natural language statements to SQL statements is realized. Decomposing the SQL statement into subtasks also reduces the complexity of model prediction and improves the accuracy of SQL statement generation.

Owner:JIANGSU SECURITIES
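
The last step of the abstract above, combining the subtask predictions into one SQL statement, can be sketched as follows. The `Prediction` structure, its field names and the sample table are hypothetical; the sketch only covers a single selected column, an optional aggregate and AND-ed conditions, and it emits `?` placeholders so that condition values are passed as parameters rather than spliced into the string.

```python
from dataclasses import dataclass, field

ALLOWED_AGGREGATES = {"", "COUNT", "MAX", "MIN", "SUM", "AVG"}
ALLOWED_OPERATORS = {"=", ">", "<", ">=", "<=", "!="}

@dataclass
class Prediction:
    """Outputs of the (hypothetical) subtask models for one query."""
    select_column: str
    aggregate: str = ""
    conditions: list = field(default_factory=list)  # (column, operator, value)

def quote_ident(name):
    """Quote an identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'

def build_sql(table, pred):
    """Assemble a parameterized SELECT statement from subtask predictions."""
    if pred.aggregate not in ALLOWED_AGGREGATES:
        raise ValueError(f"unsupported aggregate: {pred.aggregate}")
    target = quote_ident(pred.select_column)
    if pred.aggregate:
        target = f"{pred.aggregate}({target})"
    sql = f"SELECT {target} FROM {quote_ident(table)}"
    clauses, params = [], []
    for column, operator, value in pred.conditions:
        if operator not in ALLOWED_OPERATORS:
            raise ValueError(f"unsupported operator: {operator}")
        clauses.append(f"{quote_ident(column)} {operator} ?")
        params.append(value)
    if clauses:
        sql += " WHERE " + " AND ".join(clauses)
    return sql, params

pred = Prediction("price", "MAX", [("sector", "=", "bank"), ("year", ">=", 2020)])
sql, params = build_sql("stocks", pred)
```

Restricting aggregates and operators to fixed sets reflects the subtask framing: each slot is a classification over a small vocabulary, so anything outside that vocabulary indicates a bug upstream.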

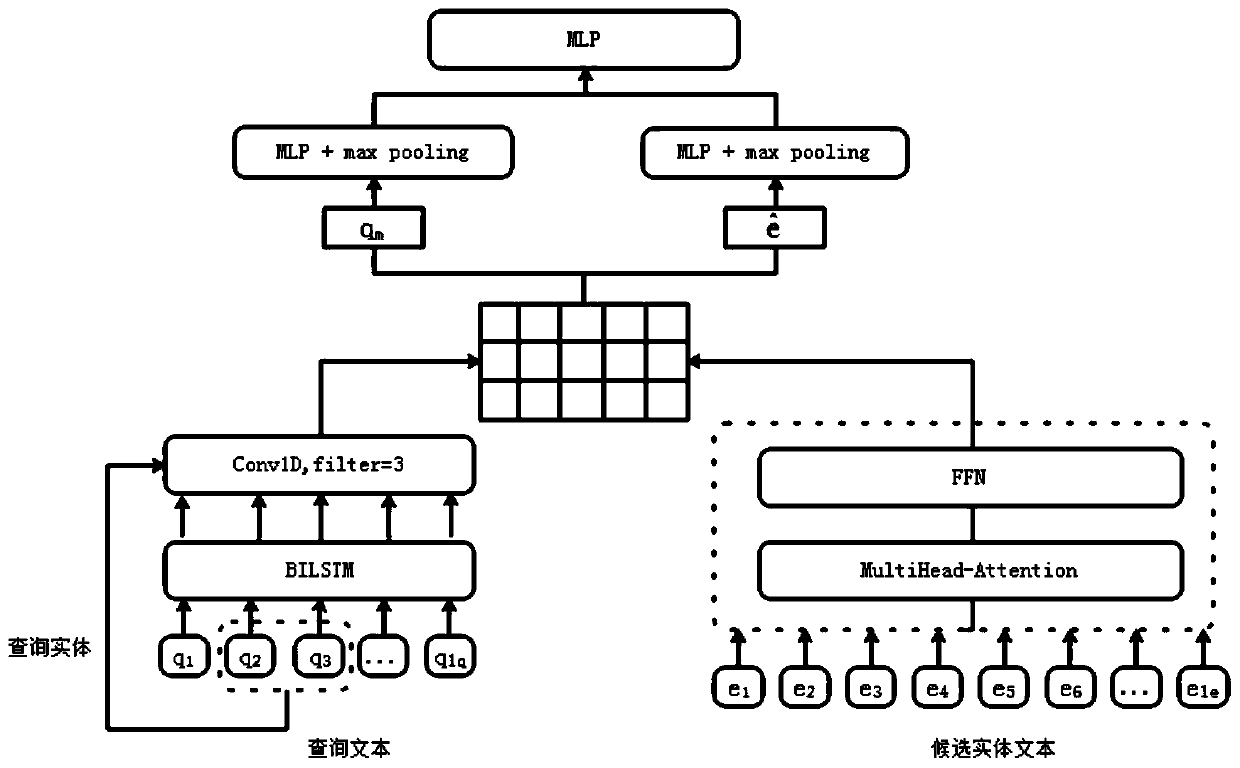

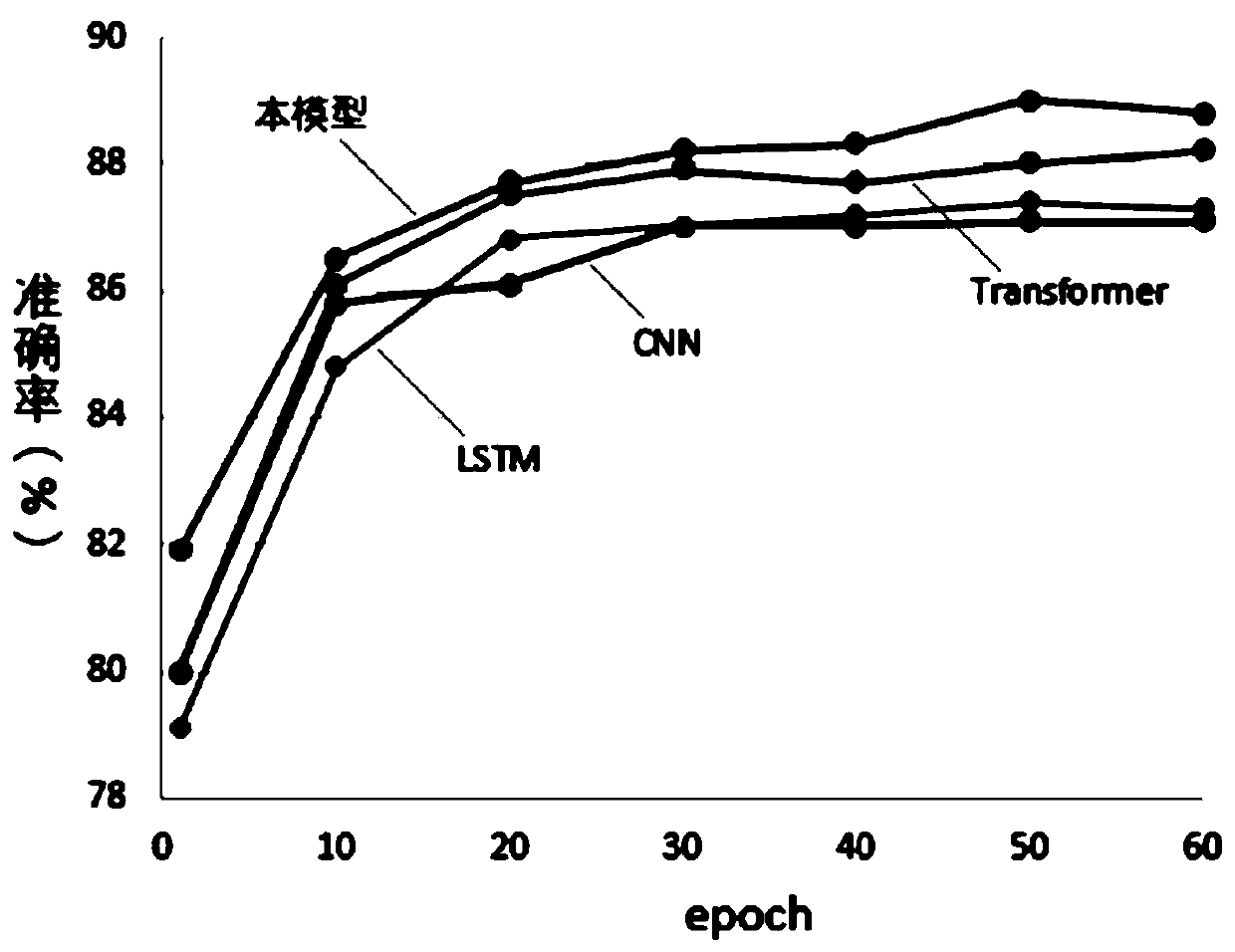

Entity linking method based on entity context semantic interaction

Active | CN111428443A | Rich detailed semantic features | Verify validity | Natural language data processing | Neural architectures | Entity linking | Semantic feature

The invention relates to the technical field of data processing, and discloses an entity linking method based on entity context semantic interaction. The context information of the entity to be linked is combined with the attribute description information of the knowledge base entity; a Transformer structure is adopted to encode the knowledge base entity text, an LSTM network is adopted to encode the query entity text, and fine-grained word-level attention interaction is adopted to capture locally similar information between the semantic encodings of the knowledge base entity text and the query entity text. On top of encoding the two text segments with the LSTM and Transformer networks respectively, the invention adds word-level fine-grained semantic feature interaction, which enriches the detailed semantic features of the text. Accuracy rates of 89.1% and 88.5% are achieved on a verification set and a test set, exceeding the current mainstream entity-linking encoding models, the CNN and LSTM networks, by 2.1% and 1.7% respectively, which shows the effectiveness of the entity linking method.

Owner:CHINA ELECTRONICS TECH CYBER SECURITY CO LTD

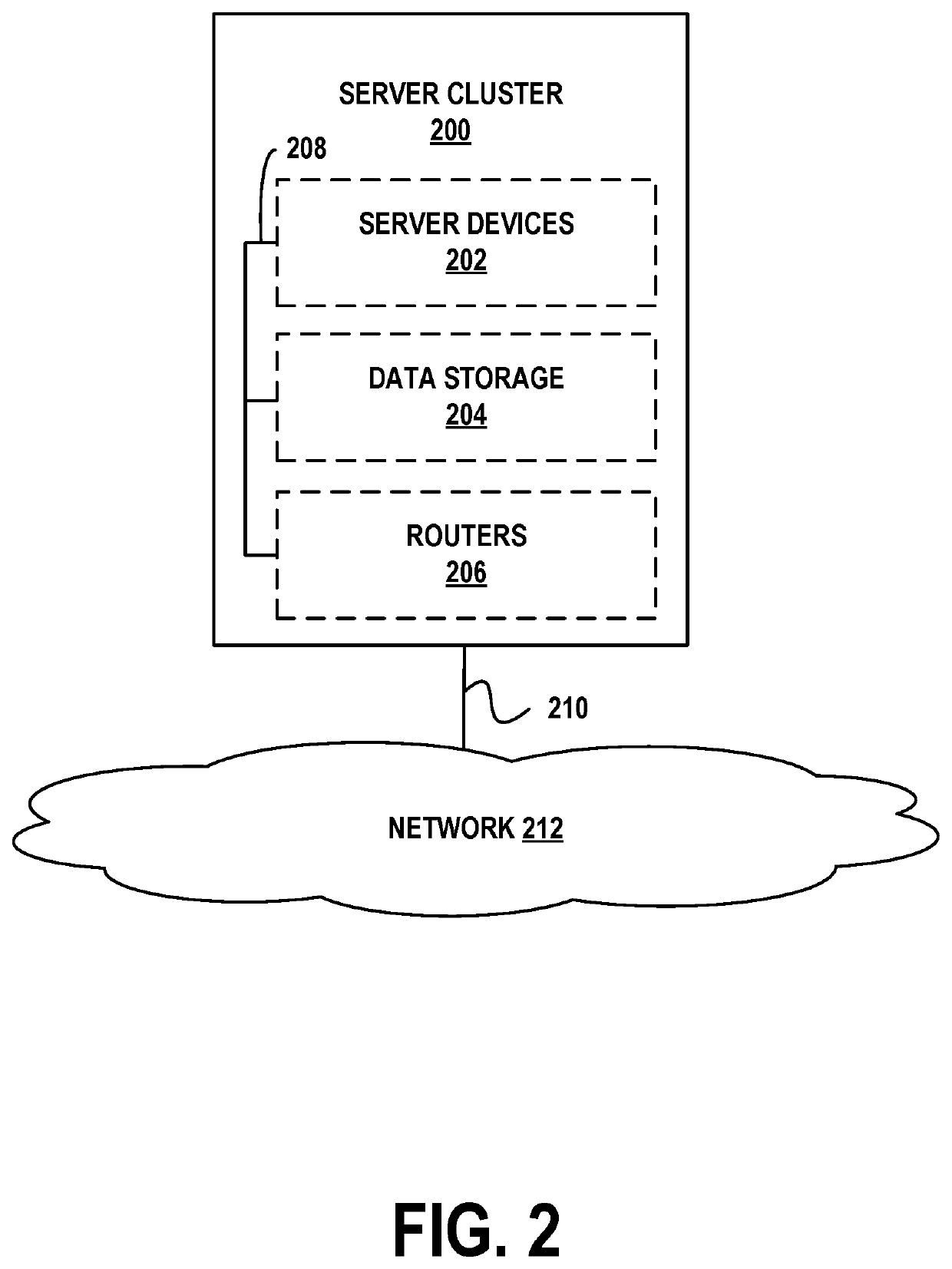

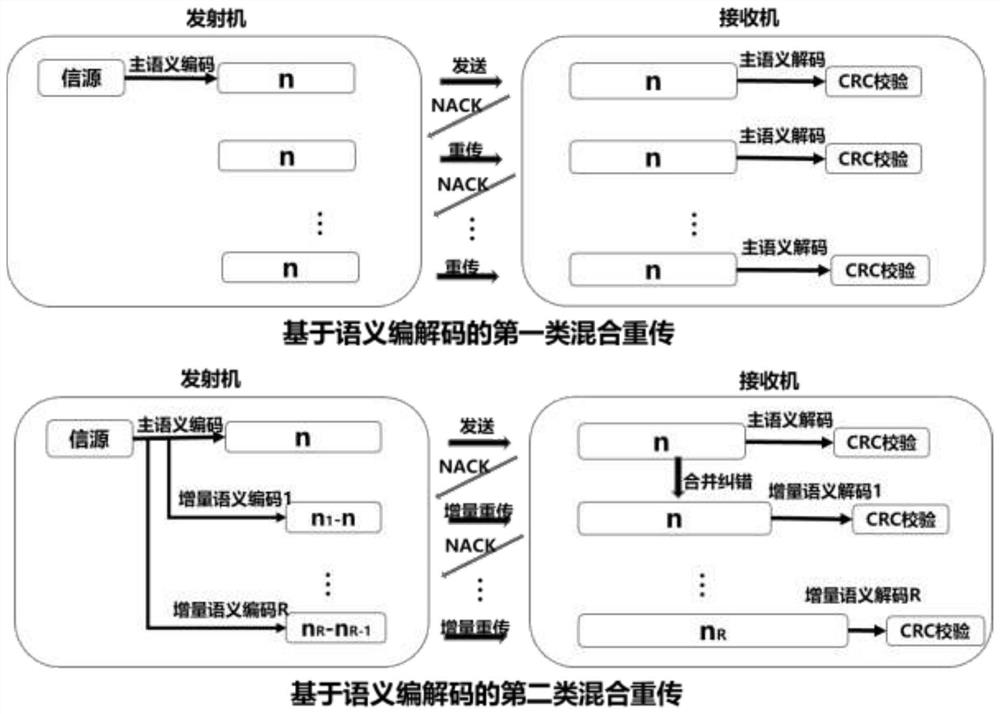

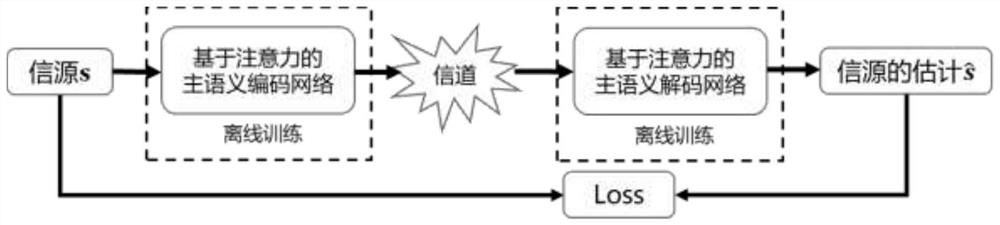

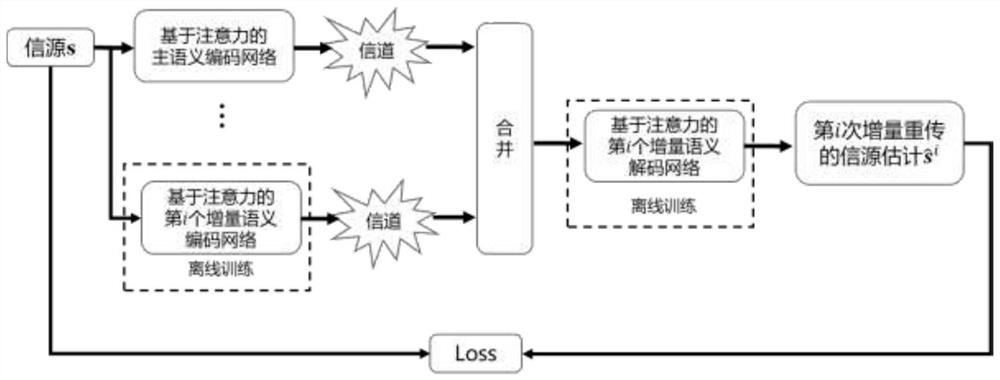

Hybrid retransmission method based on semantic coding

Active | CN113379040A | Improve transmission performance | Reduce transmission consumption | Neural architectures | Redundant data error correction | Computer network | Source encoding

The invention discloses a hybrid retransmission method based on semantic coding. The method comprises the following steps: a main semantic codec and a plurality of incremental redundant semantic codec are trained for content to be transmitted; for a first type hybrid retransmission method, only one main semantic codec is used for replacing an original information source channel for coding and decoding, a sending end performs semantic coding and CRC check coding on an information source and sends the information source, a receiving end performs decoding and CRC check, and if errors exist, code words are discarded, and the sending end is notified to retransmit the same code words; for a second type hybrid retransmission method, the receiving end does not discard error code words after finding errors, the sending end is notified to continue to use an incremental redundancy semantic encoder to encode an information source and send the information source, and the receiving end combines all the received code words every time, uses the corresponding incremental redundancy semantic decoder to complete decoding and carries out CRC check. Compared with a hybrid retransmission method based on traditional forward coding, the invention has the advantages that the sending code length is greatly reduced, and the decoding performance of a hybrid retransmission mechanism in a long-term severe channel environment is improved.

Owner:SOUTHEAST UNIV
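
The first-type retransmission loop in the abstract above (append a CRC, check it at the receiver, discard and request the same code word on error) can be sketched with the standard library. This is a heavily simplified illustration: raw bytes stand in for the output of the semantic encoder, the channel is a deterministic stub that corrupts the first few transmissions, and all function names are hypothetical.

```python
import zlib

def add_crc(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 checksum to the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")

def check_crc(frame: bytes):
    """Return the payload if the checksum matches, otherwise None."""
    payload, received = frame[:-4], frame[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") == received:
        return payload
    return None

def make_flaky_channel(bad_transmissions):
    """Stub channel that flips one bit in the first `bad_transmissions` frames."""
    state = {"count": 0}
    def channel(frame: bytes) -> bytes:
        state["count"] += 1
        if state["count"] <= bad_transmissions:
            return bytes([frame[0] ^ 0x01]) + frame[1:]
        return frame
    return channel

def send_with_retransmission(payload, channel, max_attempts=4):
    """Resend the same frame until the CRC check passes or attempts run out."""
    frame = add_crc(payload)
    for attempt in range(1, max_attempts + 1):
        decoded = check_crc(channel(frame))
        if decoded is not None:
            return decoded, attempt
    return None, max_attempts

decoded, attempts = send_with_retransmission(b"semantic code word", make_flaky_channel(2))
```

The second type described in the abstract differs in that the receiver keeps the erroneous code words and combines them with incremental redundancy sent on each retry; that combining step depends on the trained codecs and is not modelled here.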

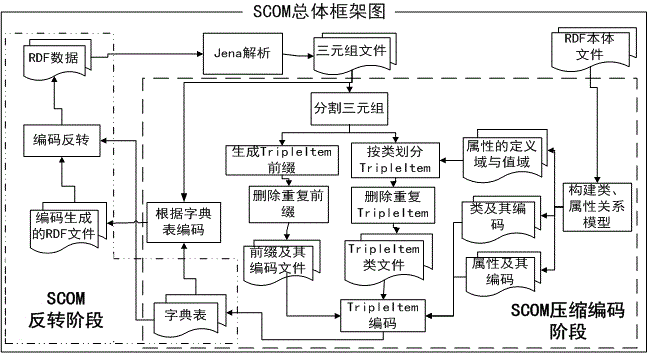

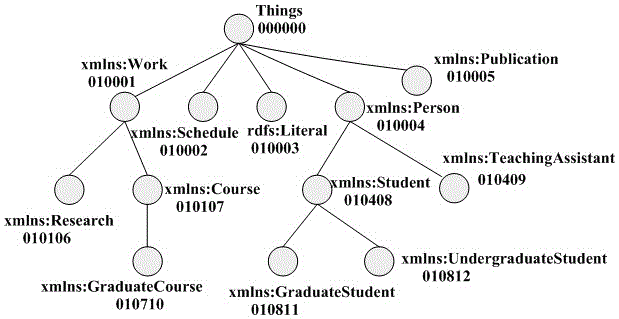

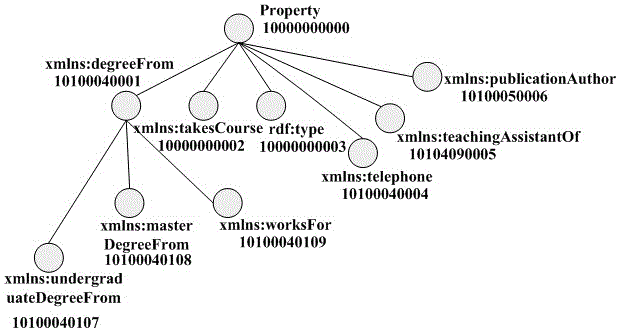

RDF data distributed parallel semantic coding method

Active | CN105930419A | Achieve reversal | Improve reasoning | Semantic analysis | Special data processing applications | Relational model | Data file

The invention relates to an RDF data distributed parallel semantic coding method. The method specifically comprises the following steps of S1: reading an RDF ontology file and constructing a class relation model and an attribute relation model; S2: reading an RDF data file, dividing a triple into triple items, classifying the triple items by class, deleting the repeated triple items, and generating prefix codes; filtering the triple items to ensure the consistency of RDF triple codes and enable the same triple item not to be allocated with different codes; S3: coding the triple items to generate a dictionary table; S4: coding the triple to generate a coded triple file; and S5: taking a result file in the step S4 as an input of the step S5, and performing inversion according to the dictionary table in the step S3 to generate an original RDF data file. According to the method, compressed coding and inversion of large-scale data can be efficiently realized in combination with ontology in a distributed environment.

Owner:FUZHOU UNIV
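
Steps S3 to S5 of the abstract above (build a dictionary table, encode the triples, invert back to the original data) can be sketched on a single machine. This illustration assigns plain sequential integer codes; the class-based prefix codes and the distributed execution described in the abstract are deliberately left out, and the sample triples are invented.

```python
def encode_triples(triples):
    """Assign each distinct term one integer code and encode every triple.

    Returns (dictionary, encoded_triples). The same term always receives the
    same code, which is the consistency requirement stated in step S2.
    """
    dictionary = {}
    encoded = []
    for triple in triples:
        ids = tuple(dictionary.setdefault(term, len(dictionary)) for term in triple)
        encoded.append(ids)
    return dictionary, encoded

def decode_triples(dictionary, encoded):
    """Invert the encoding using the dictionary table."""
    reverse = {code: term for term, code in dictionary.items()}
    return [tuple(reverse[code] for code in ids) for ids in encoded]

# Hypothetical RDF triples (subject, predicate, object).
triples = [
    ("ex:Alice", "rdf:type", "ex:Person"),
    ("ex:Bob", "rdf:type", "ex:Person"),
    ("ex:Alice", "ex:knows", "ex:Bob"),
]
dictionary, encoded = encode_triples(triples)
restored = decode_triples(dictionary, encoded)
```

Because repeated terms such as `rdf:type` are stored once in the dictionary and referenced by a small integer, the encoded file is typically much smaller than the original for large data sets.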

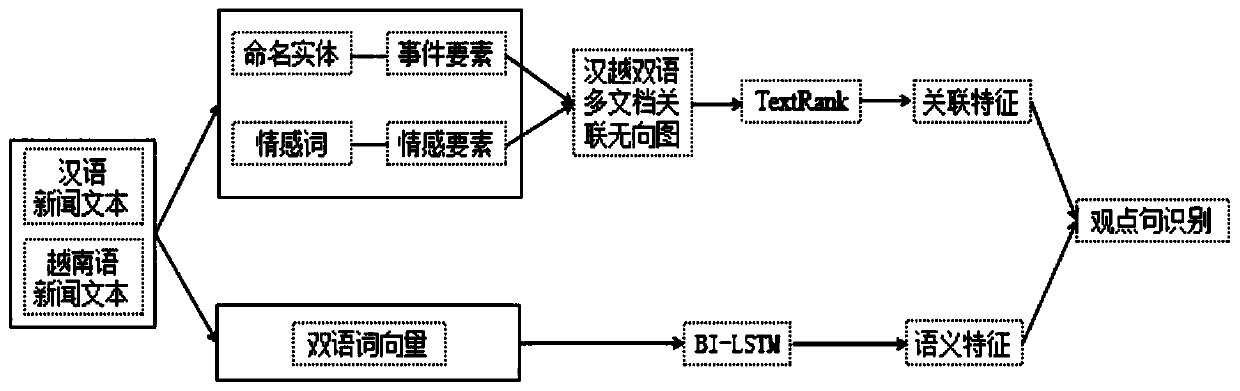

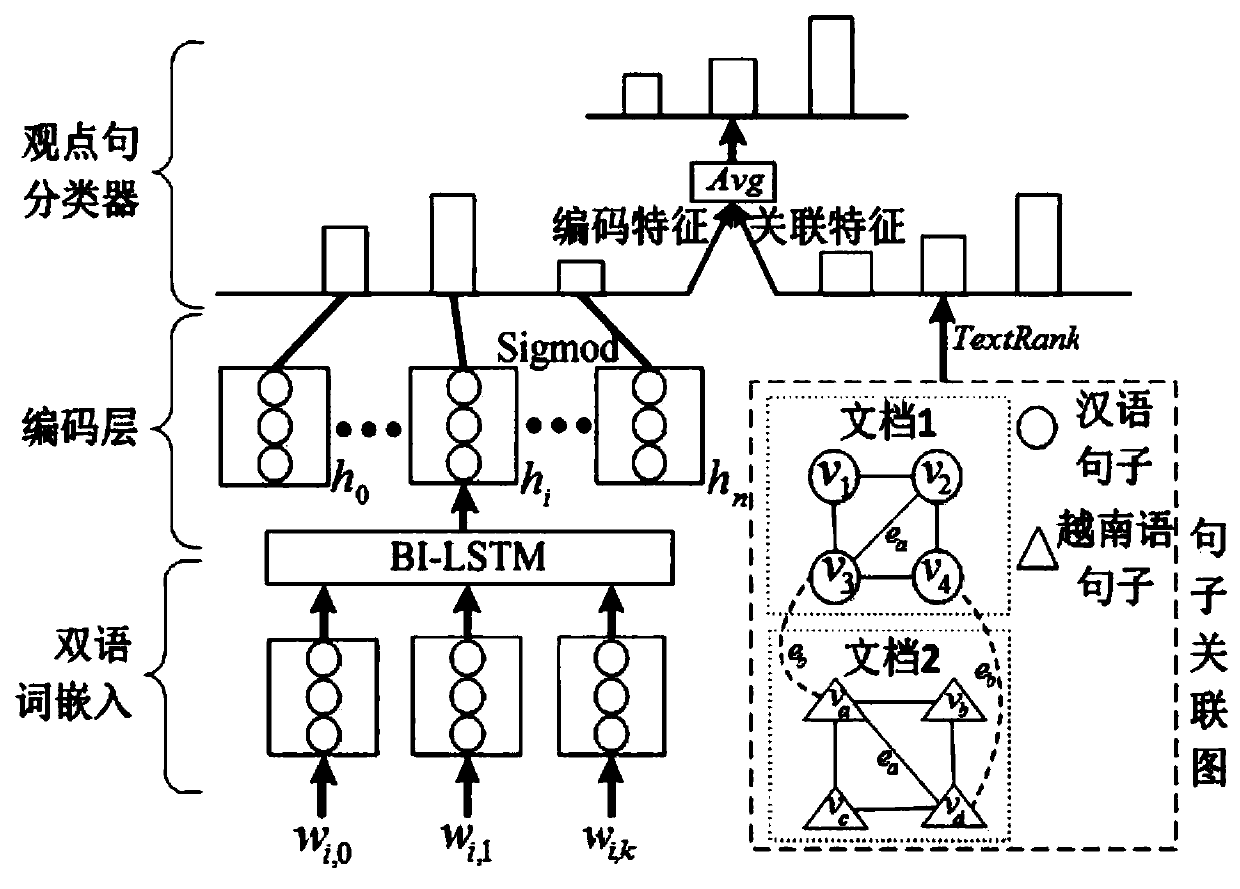

Chinese-Vietnamese bilingual multi-document news viewpoint sentence recognition method based on sentence association graph

Pending | CN111581943A | Easy to identify | Improve accuracy | Web data indexing | Semantic analysis | Pattern recognition | Undirected graph

The invention relates to a Chinese-Vietnamese bilingual multi-document news viewpoint sentence recognition method based on a sentence association graph, and belongs to the technical field of natural language processing. For the task of recognizing viewpoint sentences in Chinese-Vietnamese bilingual multi-document news, the invention provides a recognition model that combines sentence association features and semantic features. The method comprises the following steps: constructing a Chinese-Vietnamese bilingual multi-document undirected association graph that fuses event elements and emotion elements; obtaining sentence association features of the Chinese-Vietnamese bilingual text; obtaining semantic code representations of the sentences; reducing the dimensionality of the semantic codes to obtain sentence semantic features of the Chinese-Vietnamese bilingual text; and jointly computing viewpoint sentence recognition features from the sentence association features and the sentence semantic features, classifying these features with a classifier, optimizing the classifier with a binary cross-entropy loss function, and recognizing viewpoint sentences with the optimized classifier. The method can effectively improve the accuracy of Chinese-Vietnamese bilingual multi-document news viewpoint sentence recognition.

Owner:KUNMING UNIV OF SCI & TECH

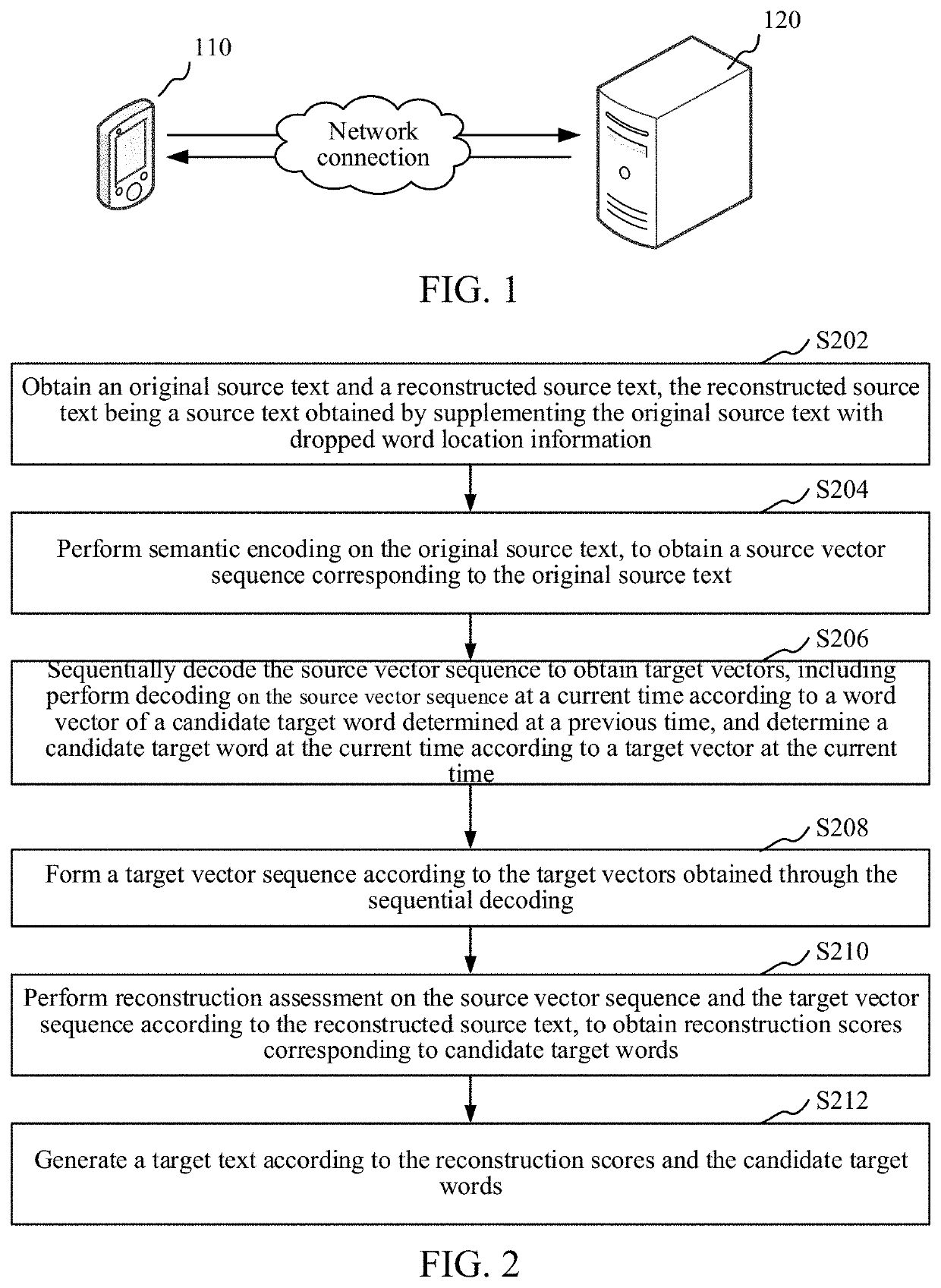

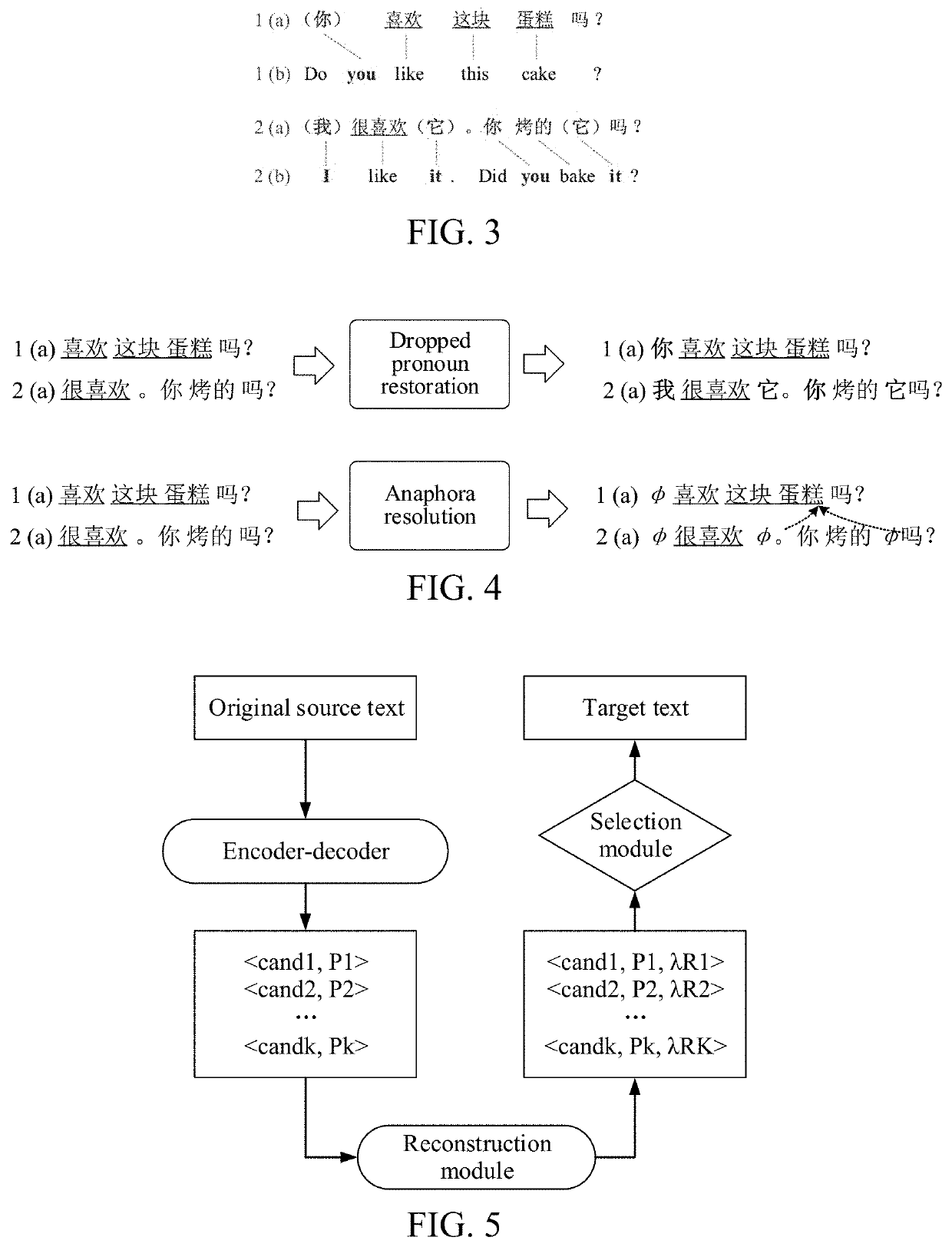

Text translation method and apparatus, storage medium, and computer device

Pending | US20210019479A1 | The result is accurate | Redundant information | Natural language translation | Semantic analysis | Algorithm | Theoretical computer science

This application relates to a machine translation method performed at a computer device. The method includes: obtaining an original source text and a reconstructed source text; performing semantic encoding on the original source text, to obtain a source vector sequence; sequentially decoding the source vector sequence to obtain target vectors by performing decoding on the source vector sequence at a current time according to a word vector of a candidate target word determined at a previous time, determining a candidate target word at the current time according to a target vector at the current time, and forming a target vector sequence accordingly; performing reconstruction assessment on the source vector sequence and the target vector sequence using the reconstructed source text, to obtain reconstruction scores corresponding to the candidate target words; and generating a target text according to the reconstruction scores and the candidate target words.

Owner:TENCENT TECH (SHENZHEN) CO LTD

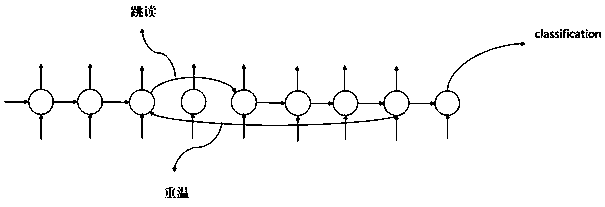

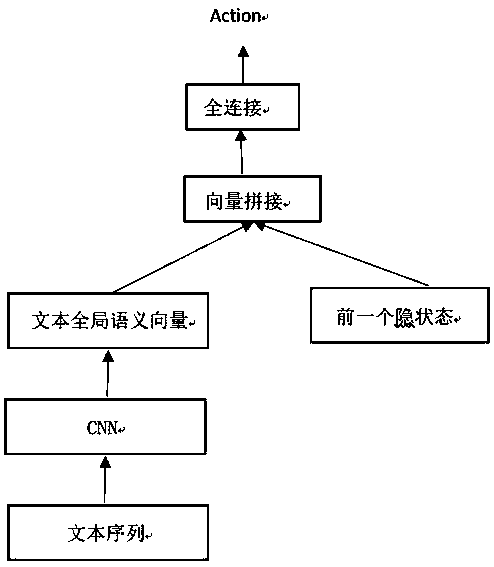

Sentence semantic coding method based on reinforcement learning

Active | CN109359191A | Good text comprehension | Semantic analysis | Special data processing applications | Human behavior | Semantics encoding

The present invention relates to the technical fields of artificial intelligence and natural language processing, and more particularly to a sentence semantic coding method based on reinforcement learning. The method uses reinforcement learning to realize a reading mode similar to human behavior: the role of the reinforcement learning component is to locate the text to be read in the next step. The innovation lies in introducing a reinforcement learning network to learn a reading strategy similar to human reading behavior. When a human reads an article intensively, the text is processed neither at random nor in a fixed order; skipping, rereading and other reading behaviors are involved. The reinforcement learning network gives these behaviors to the LSTM, so that the LSTM encodes text in a way closer to human reading behavior, which gives the model better text understanding ability.

Owner:SUN YAT SEN UNIV

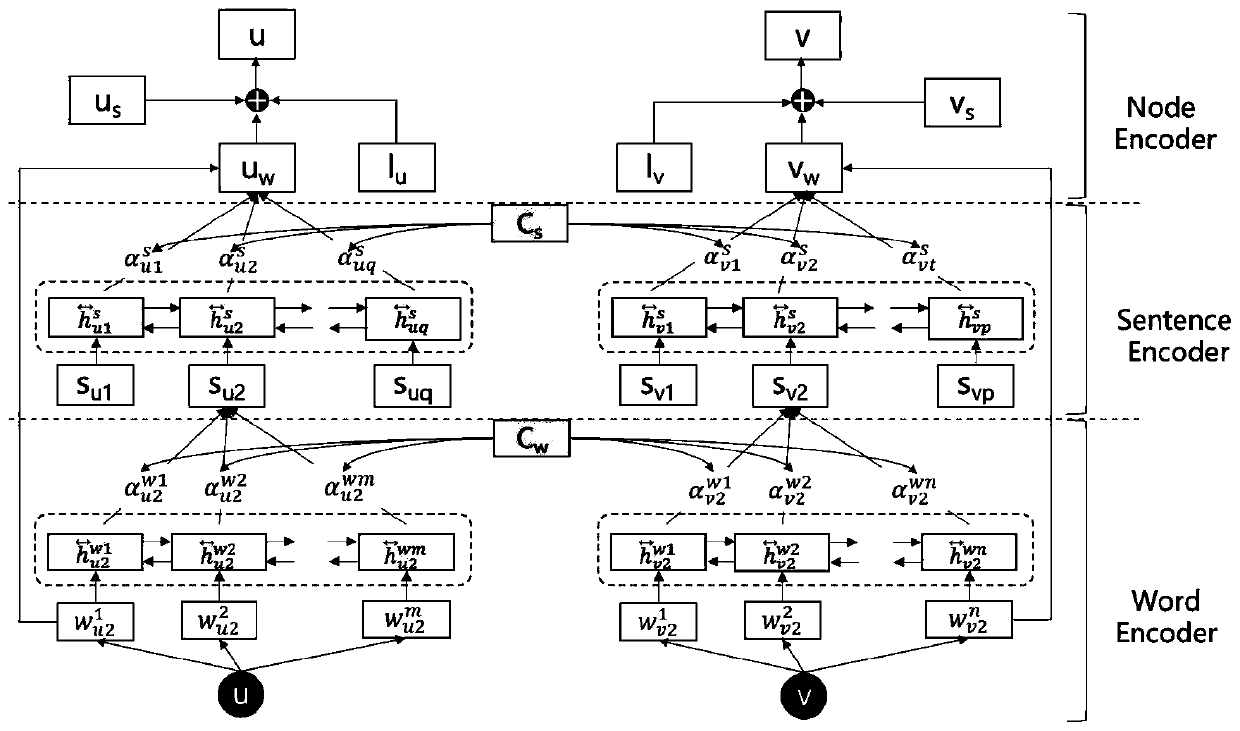

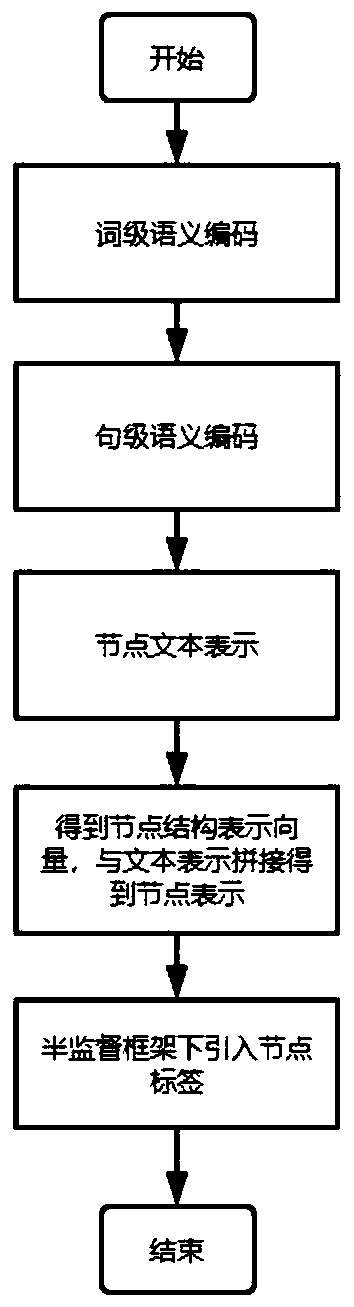

Semi-supervised network representation learning model based on hierarchical attention mechanism

Pending | CN110781271A | Quality improvement | Improve performance | Semantic analysis | Text database querying | Semantics encoding | Learning network

The invention relates to a semi-supervised network representation learning model based on a hierarchical attention mechanism, characterized by comprising the following steps: 1) word-level semantic coding; 2) sentence-level semantic encoding; 3) node text representation; 4) obtaining a node structure representation vector and a node representation vector; and 5) introducing node labels under the semi-supervised framework. Based on the hierarchical attention mechanism, the model learns text representations of network nodes, introduces node label information under the semi-supervised framework, finally obtains high-quality representation vectors of the nodes, and improves the performance of downstream tasks (node classification and link prediction).

Owner:ELECTRIC POWER SCI & RES INST OF STATE GRID TIANJIN ELECTRIC POWER CO +1
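
Steps 1) to 3) of the abstract above (word-level encoding, sentence-level encoding, node text representation) can be illustrated with a minimal sketch. It is not the patented model: the dot-product scoring, the fixed query vectors `word_query` and `sentence_query`, and the function names are all assumptions made for illustration, and a real model would learn these parameters.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attention_pool(vectors, query):
    """Weight each vector by softmax(dot(vector, query)) and sum them."""
    weights = softmax([dot(v, query) for v in vectors])
    dim = len(vectors[0])
    return [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]


def encode_node_text(sentences, word_query, sentence_query):
    """Two-level pooling: words -> sentence vectors -> one node text vector.

    `sentences` is a list of sentences, each a list of word vectors.
    Both query vectors are hypothetical stand-ins for learned parameters.
    """
    sentence_vectors = [attention_pool(words, word_query) for words in sentences]
    return attention_pool(sentence_vectors, sentence_query)
```

The same pooling function is reused at both levels, which is the core of the hierarchical idea: attention first selects informative words within each sentence, then informative sentences within the node's text.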

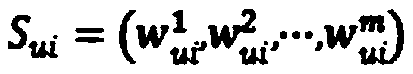

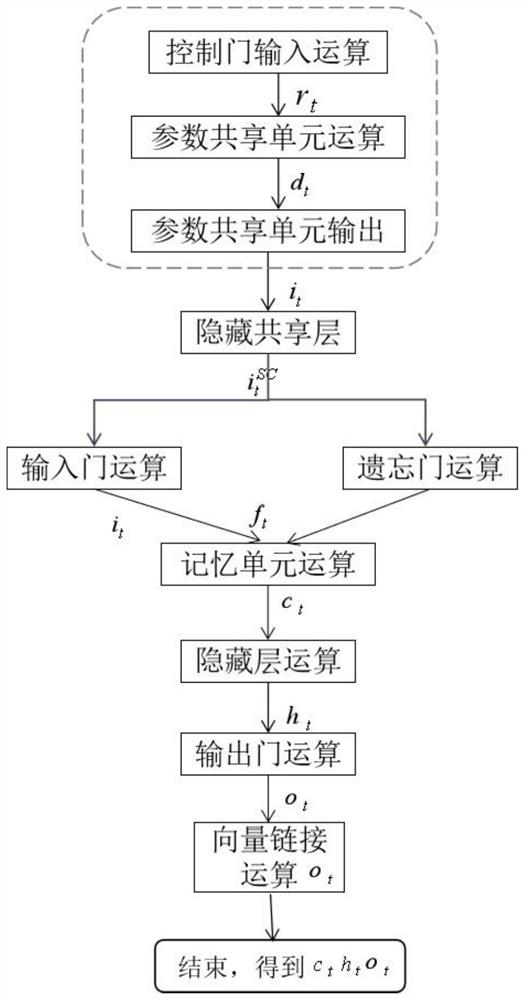

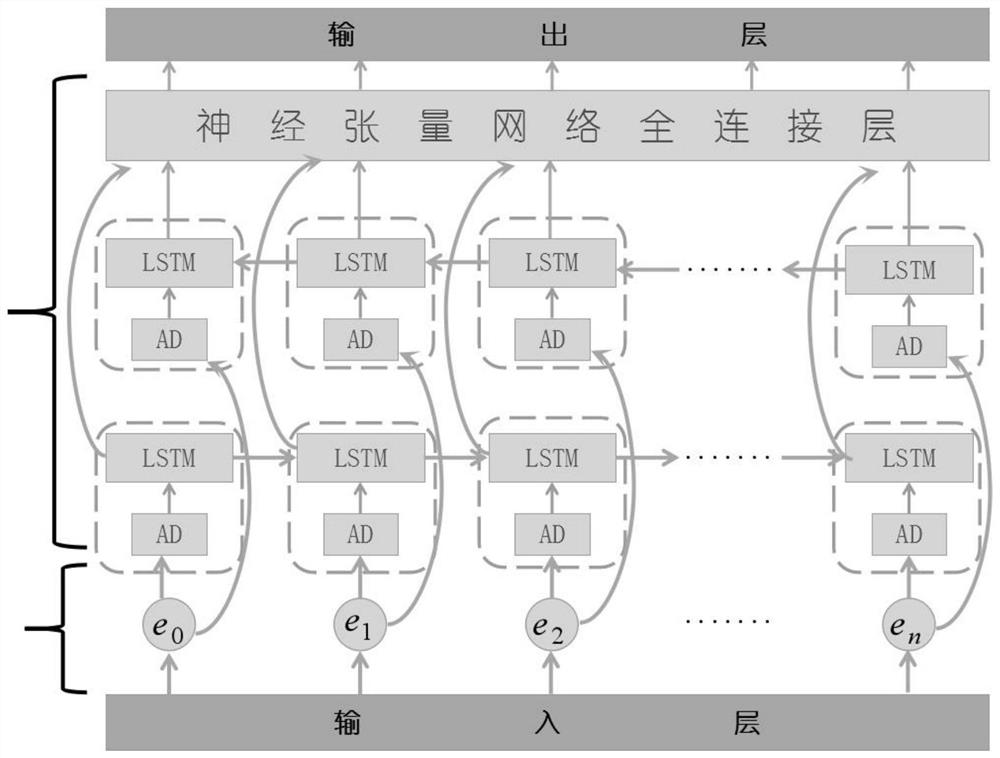

Semantic coding method of long-short-term memory network based on attention distraction

ActiveCN113033189AValid reservationImprove integritySemantic analysisNeural architecturesDistractionActivation function

The invention discloses a semantic coding method of a long short-term memory network based on attention distraction, and belongs to the field of natural language processing and generation. Aiming at the problems of semantic deviation, vanishing gradients, exploding gradients, incomplete contextual information fusion and the like in the prior art, the neural network used by the method adds a parameter sharing unit on the basis of BiLSTM, which enhances the model's capability of obtaining and fusing bidirectional feature information; an activation function from an improved deep learning model is adopted, so that the probability of gradient problems is reduced; for the input layer and the hidden layer, the model is constructed in an interactive space and extended LSTM mode, which enhances the model's capability of fusing context information; and an attention distraction mechanism based on statement structure information variables is introduced to constrain semantic generation, so that semantic accuracy is improved. The method is suitable for natural language generation applications such as automatic news or title writing, robot customer service, conference or diagnosis report generation and the like.

Owner:BEIJING INSTITUTE OF TECHNOLOGY +2

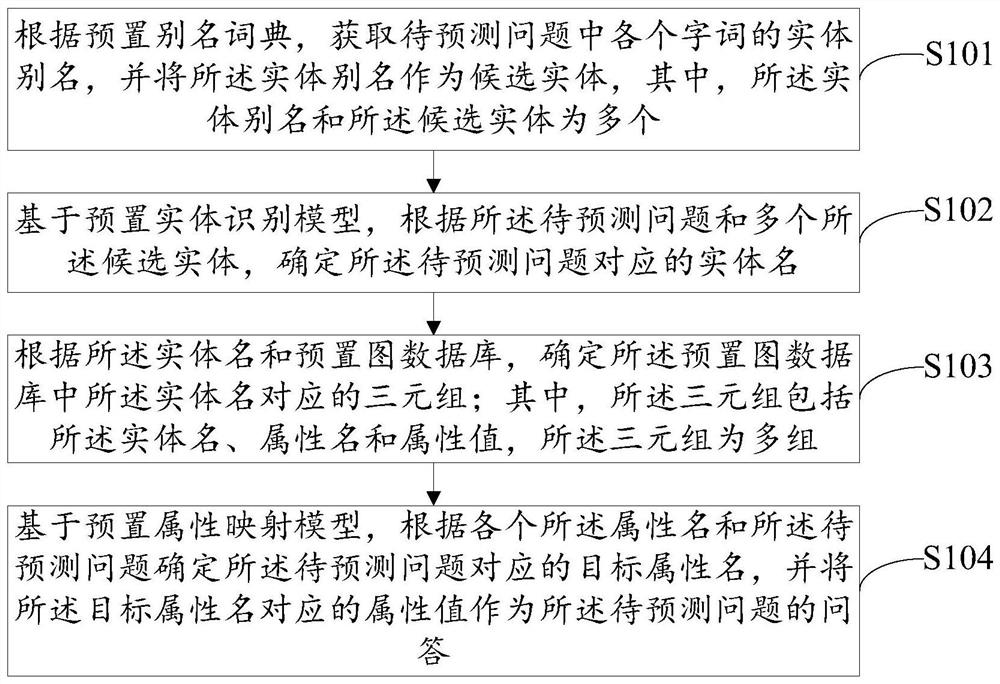

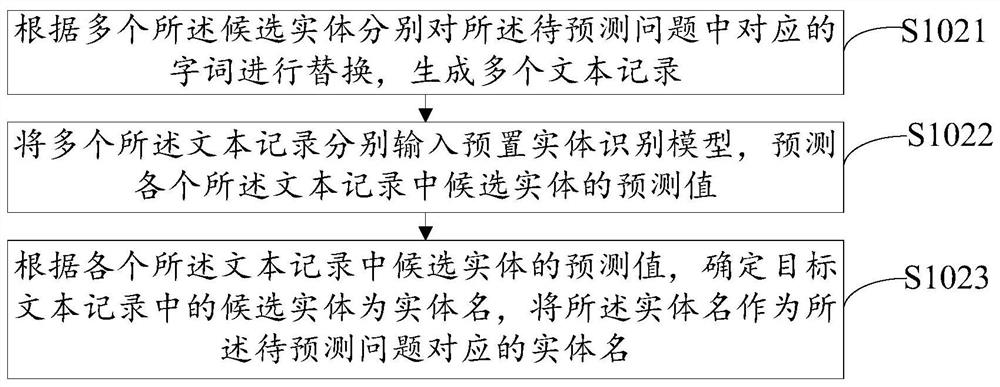

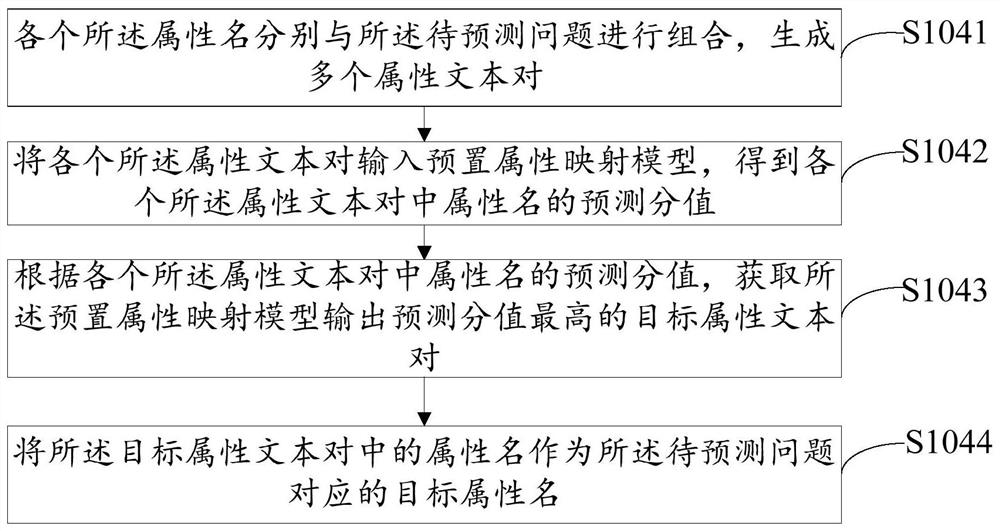

Automatic question and answer method, device and equipment and storage medium

PendingCN112328759AImprove accuracyEnhance expressive abilityDigital data information retrievalNatural language data processingEngineeringQuestions and answers

The invention relates to the technical field of artificial intelligence, and discloses an automatic question and answer method and device, computer equipment and a computer readable storage medium. The method comprises the steps of: acquiring candidate entities for all words in a to-be-predicted question according to a preset alias dictionary; based on a preset entity identification model, determining an entity name corresponding to the to-be-predicted question according to the question and the plurality of candidate entities; determining the triples corresponding to the entity name according to the entity name and a preset graph database; and, based on a preset attribute mapping model, determining a target attribute name corresponding to the to-be-predicted question according to each attribute name and the question, and taking the attribute value corresponding to the target attribute name as the answer to the to-be-predicted question. According to the method, semantic coding is applied both to entity identification of the question by the preset entity identification model and to attribute mapping of the question by the attribute mapping model, which improves the representation capability and generalization capability of machine text reading, so that the accuracy of the preset entity identification model and the attribute mapping model is improved.

Owner:PING AN TECH (SHENZHEN) CO LTD
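
The pipeline in the abstract above (alias dictionary, entity identification, triple lookup, attribute mapping) can be sketched end to end with toy data. Everything below is hypothetical: the entities and triples are invented, an in-memory list stands in for the graph database, and simple token overlap stands in for the two semantic encoding models that the patent describes.

```python
import re

# Hypothetical alias dictionary: surface word -> candidate entity names.
ALIAS_DICT = {
    "acme": ["Acme Corp", "Acme Bakery"],
    "bakery": ["Acme Bakery"],
}

# Hypothetical stand-in for the graph database: (entity, attribute, value).
TRIPLES = [
    ("Acme Corp", "founder", "Jane Doe"),
    ("Acme Corp", "headquarters", "Springfield"),
    ("Acme Bakery", "founder", "John Roe"),
    ("Acme Bakery", "opening hours", "7am to 3pm"),
]


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap(a, b):
    """Token-overlap score; a placeholder for a learned semantic matcher."""
    return len(set(tokenize(a)) & set(tokenize(b)))


def candidate_entities(question):
    """Look up every question word in the alias dictionary."""
    candidates = []
    for word in tokenize(question):
        for entity in ALIAS_DICT.get(word, []):
            if entity not in candidates:
                candidates.append(entity)
    return candidates


def answer(question):
    candidates = candidate_entities(question)
    if not candidates:
        return None
    # Entity identification: pick the candidate that best matches the question.
    entity = max(candidates, key=lambda e: overlap(e, question))
    # Triple lookup: collect the attributes stored for that entity.
    attributes = {attr: value for subj, attr, value in TRIPLES if subj == entity}
    # Attribute mapping: pick the attribute name that best matches the question.
    target = max(attributes, key=lambda a: overlap(a, question))
    return attributes[target]
```

For example, `answer("Who is the founder of the Acme bakery?")` resolves the entity to `Acme Bakery`, maps the question to the `founder` attribute, and returns its value. Replacing `overlap` with learned encoders is where the semantic coding described in the abstract would come in.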

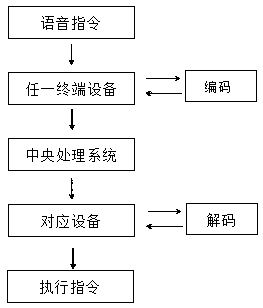

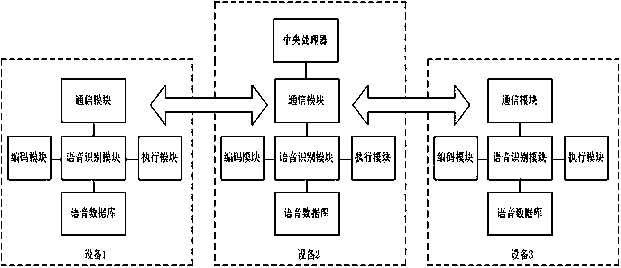

Voice terminal communication method based on natural semantic coding and system

ActiveCN110782897AWide recognitionMeet the needs of simultaneous useSpeech recognitionSpoken languageEngineering

The invention discloses a voice terminal communication method based on natural semantic coding. The method comprises the following steps: S1, collecting natural corpora with clear meanings as command words, and storing the command words in a voice database; S2, setting a uniquely corresponding combined code for the command words with the same meaning; S3, after any piece of equipment receives voice information and identifies the corresponding command word, if the equipment corresponding to the product field is that same equipment, entering S5, and otherwise sending the combined code corresponding to the command word to a central processing unit; S4, sending, by the central processing unit, the combined code to the corresponding equipment according to the product field in the combined code; and S5, receiving the command word code by the equipment and then carrying out the command. The invention also discloses a voice terminal system based on natural semantic coding. With the voice terminal communication method and system provided by the invention, various expressions of the same natural semantics can be widely identified, the user's various everyday spoken expressions are accommodated, and the method and system adapt to the user's individual usage habits.

Owner:成都启英泰伦科技有限公司
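
Steps S1 to S5 of the abstract above can be sketched as a small routing example. This is only an illustration under stated assumptions: speech recognition is replaced by exact text matching, and the `CombinedCode` layout, the sample phrases, and the `Device` and `Hub` classes are hypothetical names that do not come from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CombinedCode:
    product_field: str  # which kind of equipment should act
    command: str        # canonical command shared by all phrasings


# S1/S2: several natural phrasings map to one combined code (sample data).
COMMAND_WORDS = {
    "turn on the light": CombinedCode("lamp", "power_on"),
    "lights on please": CombinedCode("lamp", "power_on"),
    "it is too dark": CombinedCode("lamp", "power_on"),
    "make it cooler": CombinedCode("air_conditioner", "temp_down"),
}


class Hub:
    """Stands in for the central processing unit of step S4."""

    def __init__(self):
        self.devices = {}

    def register(self, device):
        self.devices[device.product_field] = device

    def route(self, code):
        target = self.devices.get(code.product_field)
        if target is not None:
            target.execute(code)


class Device:
    def __init__(self, product_field, hub):
        self.product_field = product_field
        self.hub = hub
        self.executed = []
        hub.register(self)

    def hear(self, utterance):
        """S3: recognize the command word, then act locally or forward it."""
        code = COMMAND_WORDS.get(utterance.strip().lower())
        if code is None:
            return False
        if code.product_field == self.product_field:
            self.execute(code)      # S3 -> S5: this device is the target
        else:
            self.hub.route(code)    # S3 -> S4: forward via the hub
        return True

    def execute(self, code):
        """S5: carry out the command (recorded here for inspection)."""
        self.executed.append(code.command)
```

Because only the combined code travels between devices, any device can hear a command meant for another, and adding a new phrasing is a one-line change to the command word table.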

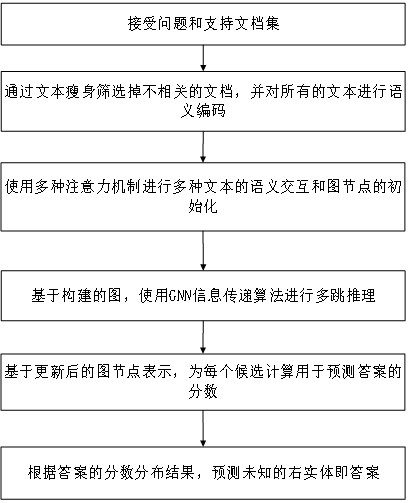

Answer prediction method and device based on graph reasoning model

InactiveCN112732888ASmooth fluidityIncrease profitSemantic analysisText database queryingGraph inferenceInformation transmission

The invention discloses an answer prediction method and device based on a graph reasoning model. The method comprises the following steps of receiving questions and a support document set; screening out irrelevant documents through text slimming, and performing semantic coding on all texts; semantic interaction of various texts and initialization of graph nodes are carried out by using various attention mechanisms; performing multi-hop reasoning by using a GNN information transfer algorithm based on the constructed graph; calculating a score for predicting an answer for each candidate based on the updated graph node representation; and predicting an unknown right entity, namely an answer, according to the score distribution result of the answer. A new graph is provided, multiple types of elements are regarded as graph nodes, and reasoning is more comprehensive. Meanwhile, due to the fact that sentence nodes are adopted, reasoning becomes more accurate and specific, multiple attention mechanisms are fused to carry out multiple semantic representation, the influence of relative correctness between candidates on reasoning is innovatively considered, and answer prediction is more accurate.

Owner:NAT UNIV OF DEFENSE TECH

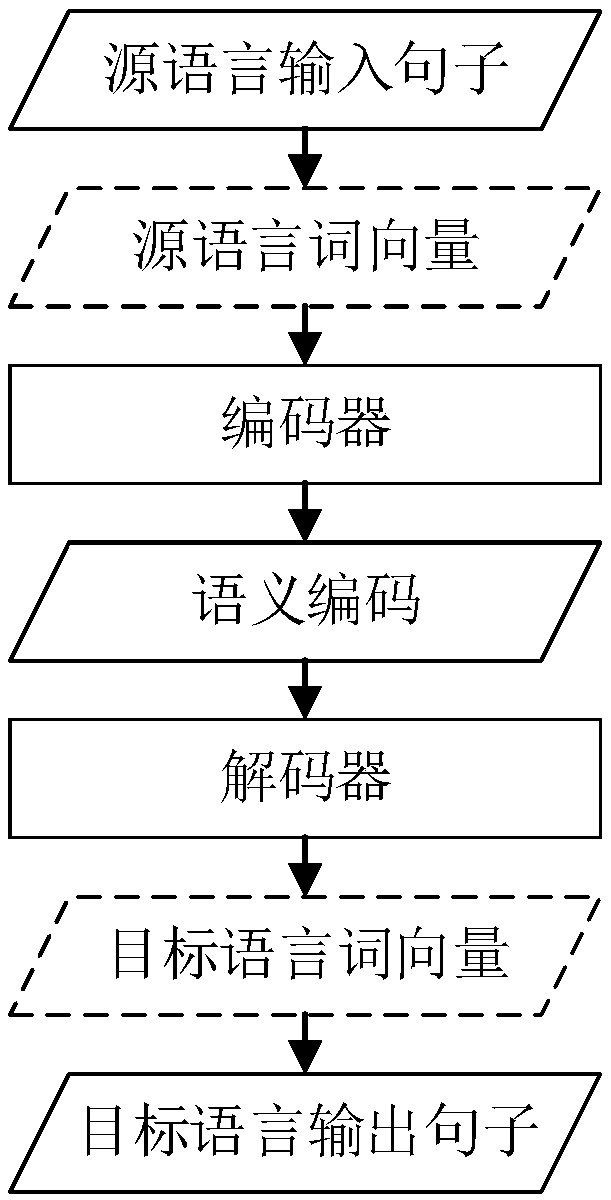

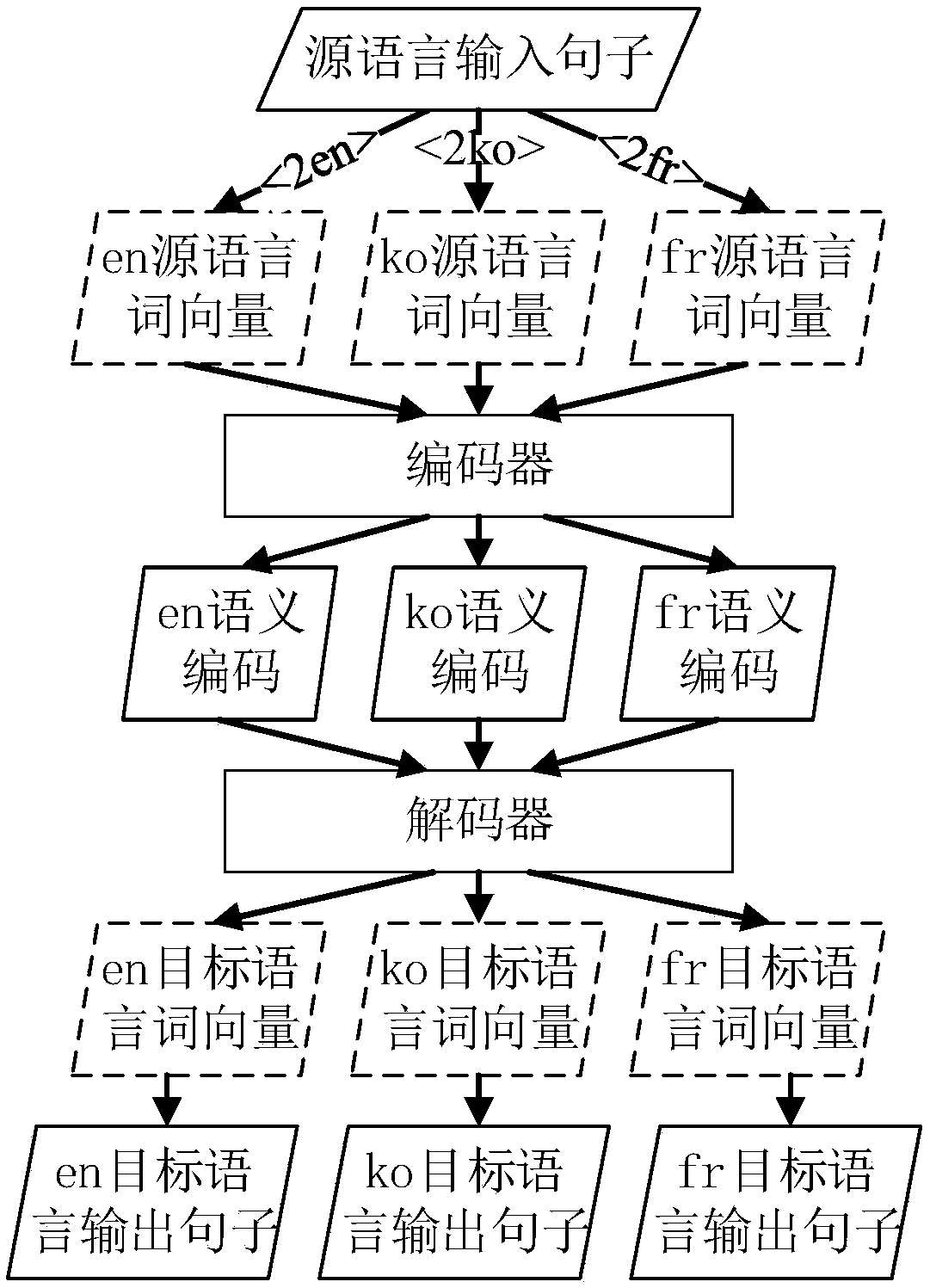

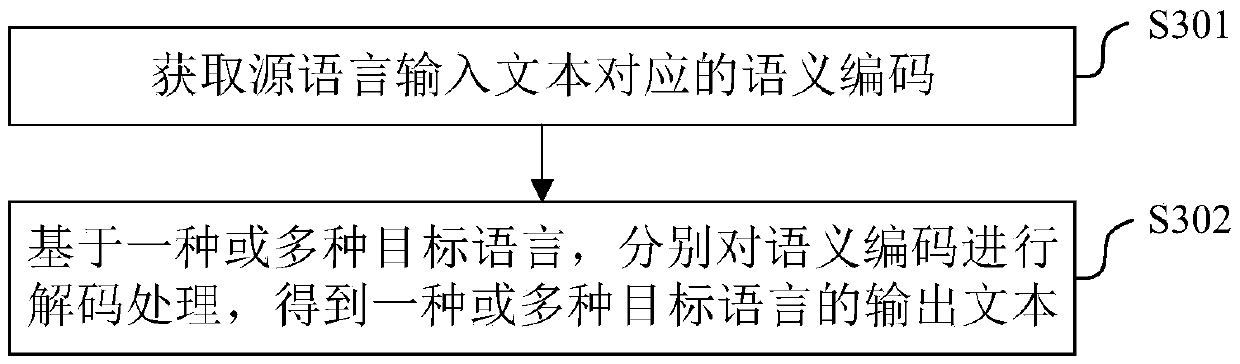

Machine translation method, training method, corresponding device and electronic equipment

InactiveCN110956045AImprove processing efficiencyAvoid multiple calculationsNatural language translationNeural learning methodsTheoretical computer scienceSemantics encoding

The invention provides a machine translation method, a training method, a corresponding device and electronic equipment. The machine translation method comprises the steps of obtaining a semantic code corresponding to a source language input text; and, based on one or more target languages, respectively decoding the semantic code to obtain output texts in the one or more target languages. According to the technical scheme provided by the invention, the semantic code corresponding to the source language input text only needs to be obtained once; the semantic code can therefore be reused, and the output texts in different target languages are obtained by decoding based on the corresponding target languages. Repeated computation in the encoding process is avoided, the overall amount of computation can be greatly reduced, and the processing efficiency of multi-language translation is effectively improved.

Owner:BEIJING SAMSUNG TELECOM R&D CENT +1
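
The encode-once, decode-many idea in the abstract above can be shown with a toy example. The encoder and decoder here are placeholders, not neural models: `ToyEncoder`, `TOY_LEXICONS`, and the word-by-word lookup are assumptions chosen only to make the reuse of the semantic code visible.

```python
class ToyEncoder:
    """Placeholder encoder that counts how often it is invoked."""

    def __init__(self):
        self.calls = 0

    def encode(self, text):
        self.calls += 1
        # The "semantic code" here is just a tuple of lowercased tokens.
        return tuple(text.lower().split())


# Hypothetical per-language lexicons standing in for language-specific decoders.
TOY_LEXICONS = {
    "fr": {"hello": "bonjour", "world": "monde"},
    "de": {"hello": "hallo", "world": "welt"},
}


def decode(semantic_code, target_lang):
    """Decode one shared semantic code into the requested target language."""
    lexicon = TOY_LEXICONS[target_lang]
    return " ".join(lexicon.get(token, token) for token in semantic_code)


def translate_multi(encoder, text, target_langs):
    """Encode the source text once, then decode it for every target language."""
    semantic_code = encoder.encode(text)
    return {lang: decode(semantic_code, lang) for lang in target_langs}
```

Calling `translate_multi(encoder, "Hello world", ["fr", "de"])` runs the encoder a single time regardless of how many target languages are requested, which is the saving the abstract refers to.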

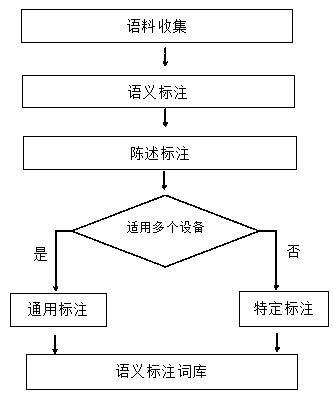

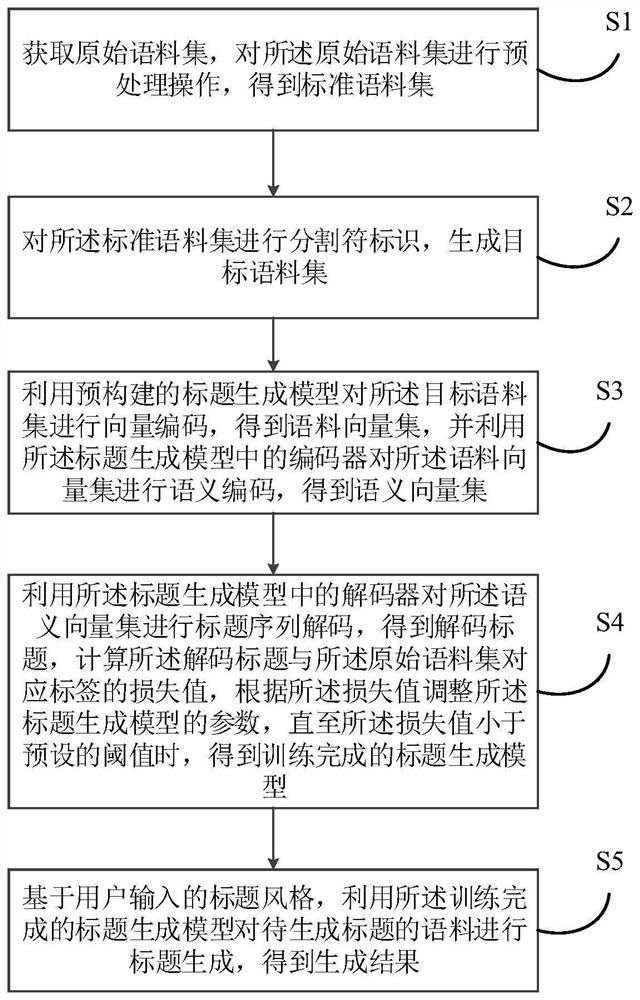

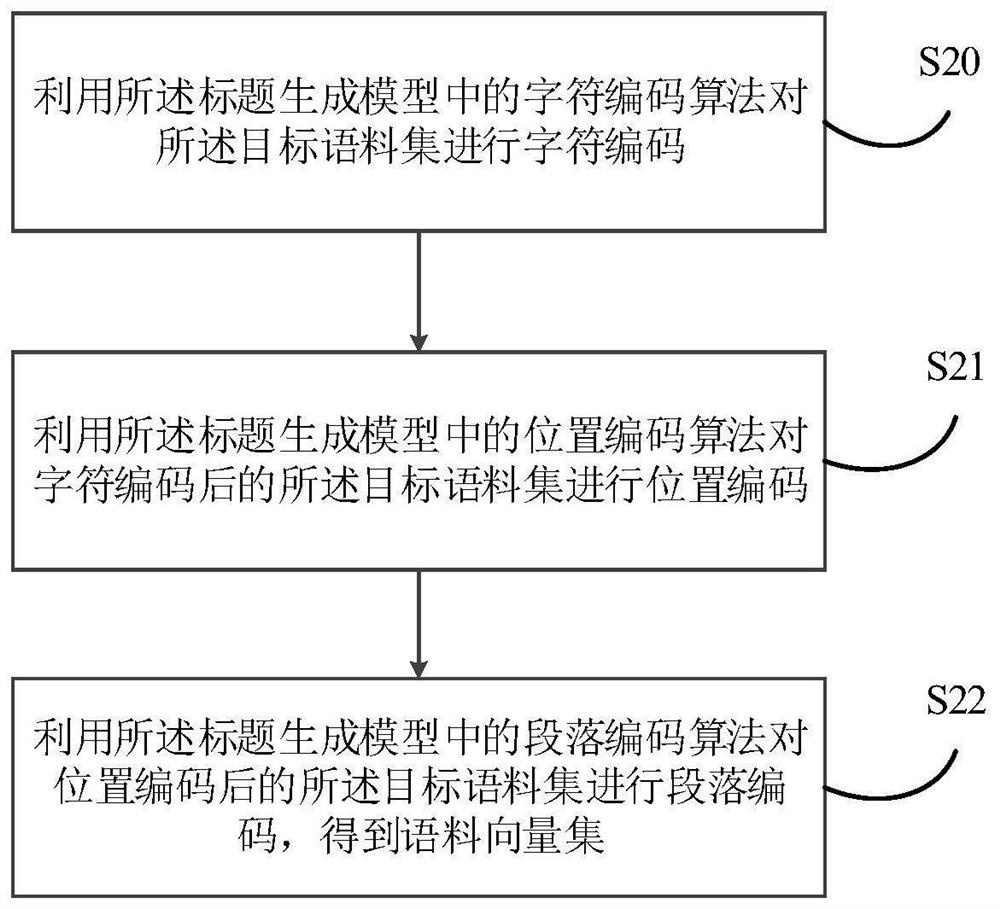

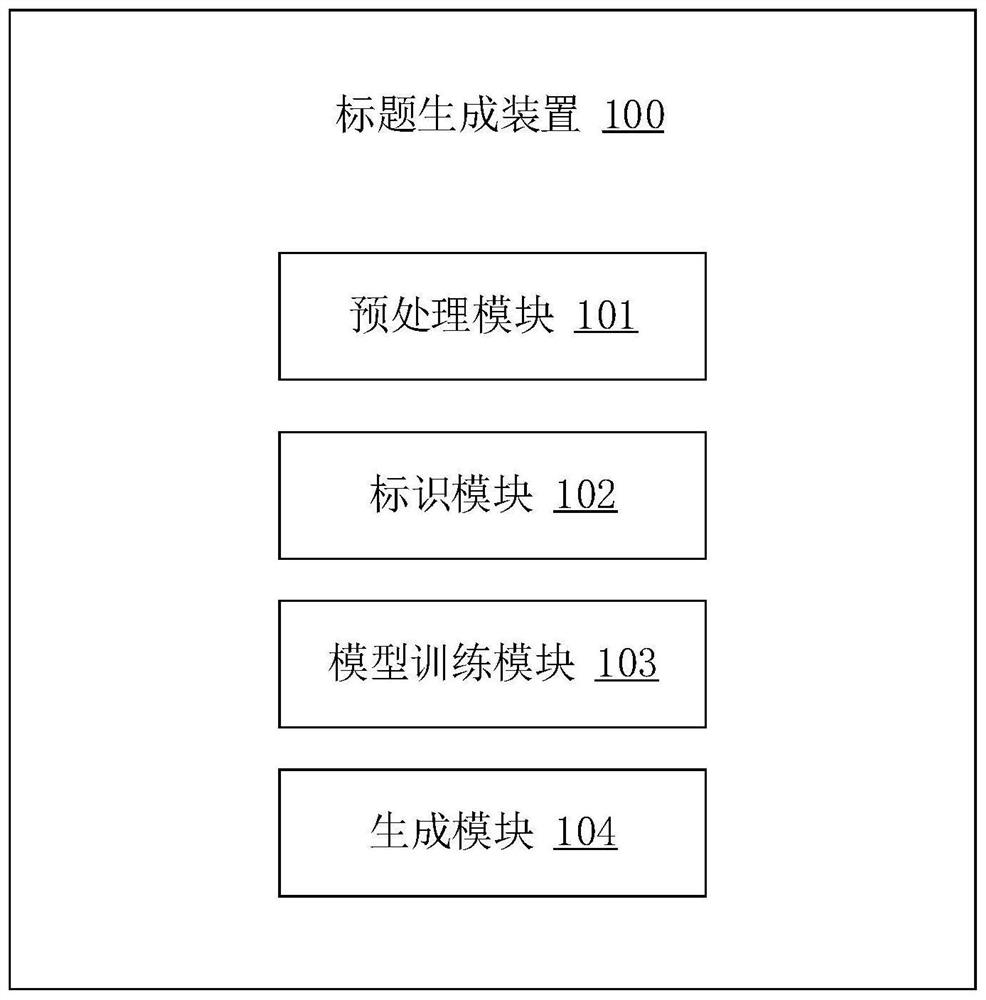

Title generation method and device, electronic equipment and storage medium

The invention relates to the field of intelligent decision making, and discloses a title generation method and device, electronic equipment and a storage medium. The method comprises the following steps: obtaining an original corpus set, and performing a preprocessing operation and divider identification on the original corpus set to generate a target corpus set; performing vector encoding, semantic encoding and title sequence decoding on the target corpus set by using a pre-constructed title generation model to obtain a decoded title, calculating a loss value between the decoded title and the label corresponding to the original corpus set, and adjusting the parameters of the title generation model according to the loss value until the loss value is smaller than a preset threshold value, thereby obtaining a trained title generation model; and, based on the title style input by the user, generating a title for the corpus to be titled by using the trained title generation model to obtain a generation result. In addition, the invention also relates to blockchain technology, and the target corpus set can be stored in a blockchain. According to the invention, fluent titles that are consistent with the semantics of the text and match the user's style can be generated.

Owner:PING AN TECH (SHENZHEN) CO LTD


© 2025 PatSnap. All rights reserved.