Semantic coding method of long-short-term memory network based on attention distraction

The method combines a long short-term memory network with an attention-distraction technique and applies to semantic analysis, neural learning methods, biological neural network models, and related fields. It addresses the lack of a linking mechanism for integrating contextual information, and it improves the accuracy, sentence relevance, completeness, and fluency of the generated text.


Embodiment Construction

[0067] The present invention is described in detail below with reference to the accompanying drawings and examples, but its specific implementation is not limited to them.

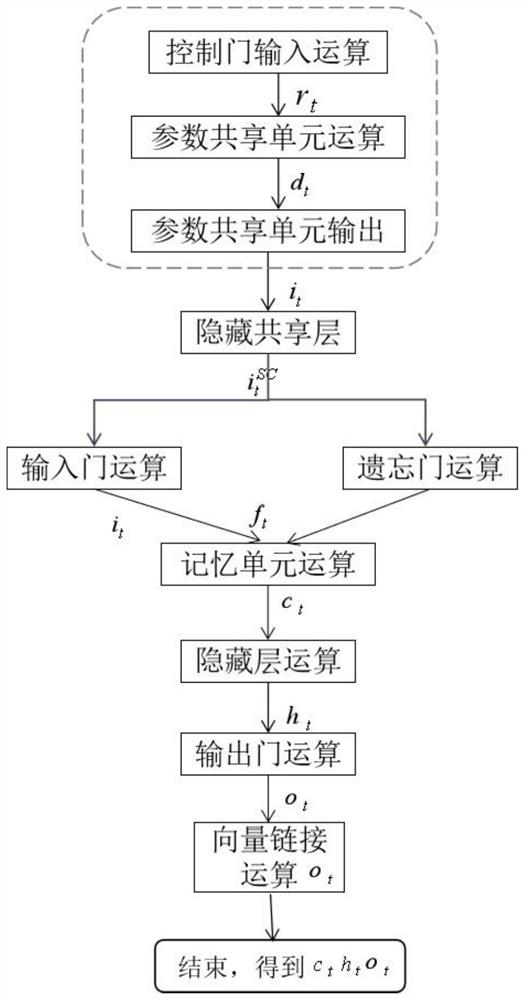

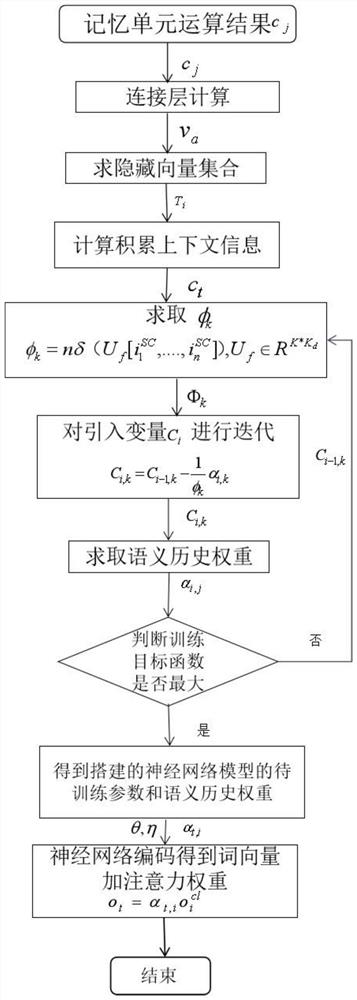

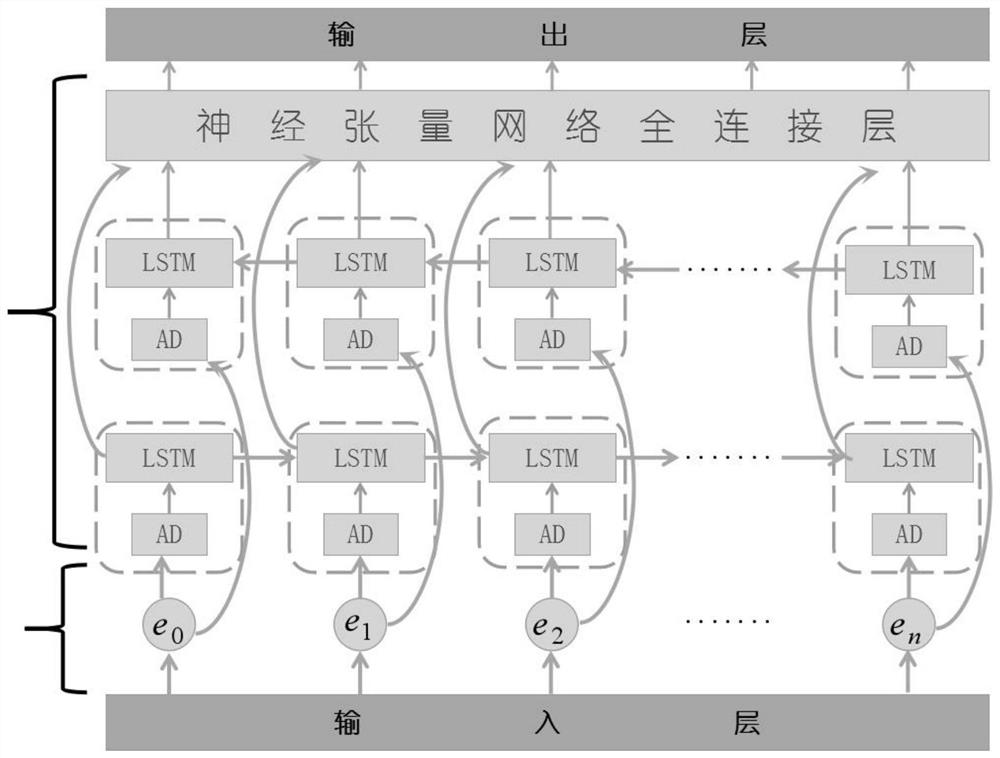

[0068] This embodiment illustrates how the "Semantic Coding Method Based on Distracted Long-Short-Term Memory Network" of the present invention is applied to a natural language generation scenario.

[0069] The present invention trains and tests the model on the public datasets cMedQA and cMedQA1. Both are question-answer matching datasets for Chinese medical consultation and are widely used in Chinese medical question-answering evaluations. The cMedQA data comes from online medical forums and includes 54,000 questions with 100,000 corresponding answers. cMedQA1 is an extension of cMedQA and contains 100,000 medical questions with about 200,000 corresponding answers.

[0070] The method provided in this embodiment is a writing of...
