A temporal behavior segment generation system and method based on global context information

A technique in the field of video analysis that addresses shortcomings of existing methods: they cannot obtain global context information, they encode only past information, and they do not consider the importance of different unit behaviors.

Embodiment Construction

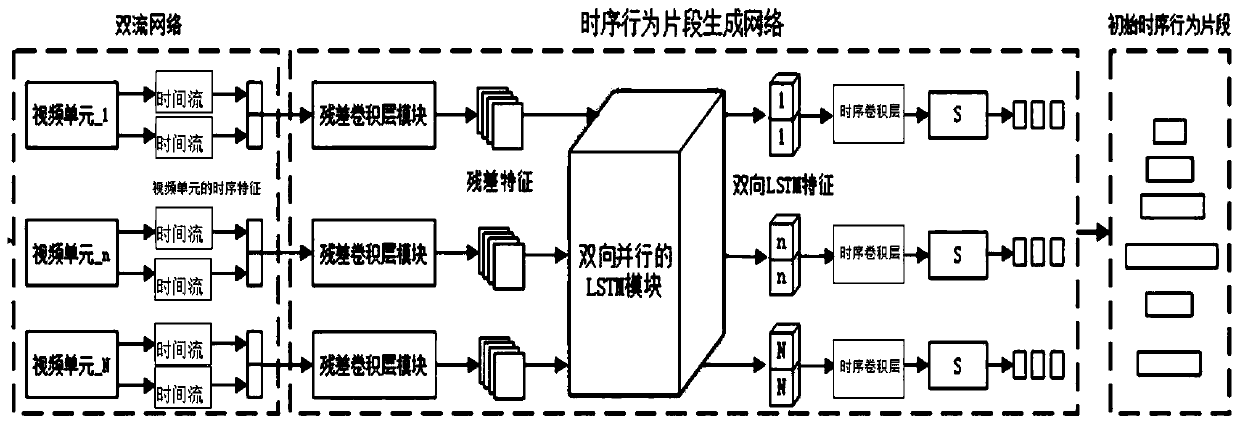

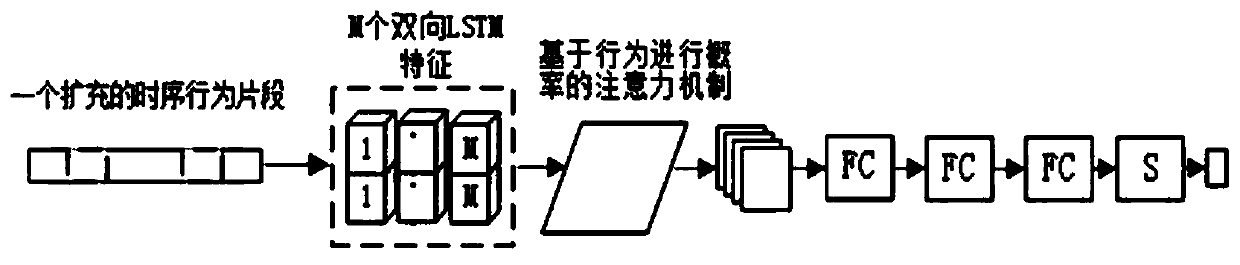

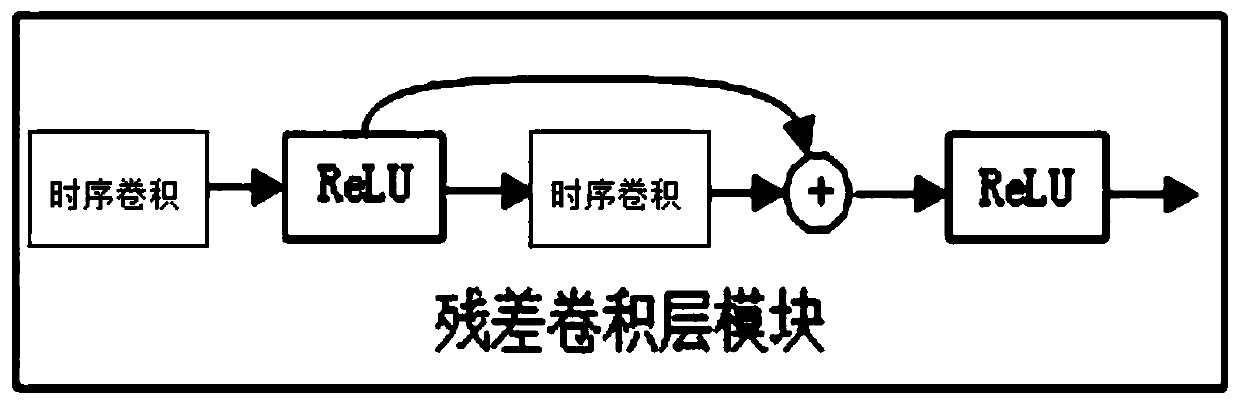

[0051] The present invention proposes a temporal behavior segment generation system and method based on global context information. It addresses the shortcomings of conventional techniques, which cannot obtain global context information, can only encode past information, and apply average pooling directly without considering the importance of the behavior of different units. In the present invention, a bidirectional parallel LSTM module resolves the inability of existing methods to obtain global context information and their restriction to encoding past information. In addition, a temporal behavior segment reordering network based on behavior probability takes into account the importance of the behavior of different video units, which remedies the defect that existing methods ignore the behavioral importance of different units and apply average pooling directly.
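The contrast between direct average pooling and pooling that respects per-unit behavior importance can be illustrated with a minimal sketch. The excerpt does not give the formula used by the reordering network, so the weighting scheme below (normalizing per-unit behavior probabilities and using them as weights) is an assumption made for illustration, and the names `average_pool` and `probability_weighted_pool` are hypothetical.

```python
from typing import List, Sequence


def average_pool(features: Sequence[Sequence[float]]) -> List[float]:
    """Plain mean over the video units of one candidate segment."""
    n = len(features)
    dim = len(features[0])
    return [sum(unit[d] for unit in features) / n for d in range(dim)]


def probability_weighted_pool(
    features: Sequence[Sequence[float]],
    probs: Sequence[float],
) -> List[float]:
    """Weight each unit's feature by its behavior probability.

    Assumption: weights are the probabilities normalized to sum to 1.
    The source does not specify the exact weighting.
    """
    total = sum(probs)
    if total == 0:
        # No unit is considered behavior-relevant: fall back to the mean.
        return average_pool(features)
    dim = len(features[0])
    return [
        sum(p * unit[d] for p, unit in zip(probs, features)) / total
        for d in range(dim)
    ]


# Three video units with 2-dimensional features (illustrative values).
units = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
# Hypothetical per-unit behavior probabilities.
probs = [0.8, 0.1, 0.1]

avg = average_pool(units)
weighted = probability_weighted_pool(units, probs)
print("average pooling:  ", avg)
print("weighted pooling: ", weighted)
```

With average pooling, every unit contributes equally, so the first unit's feature is diluted by the two low-probability units. With probability weighting, the segment feature is dominated by the unit most likely to contain behavior.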

[0052] The temporal behavior segment generation system based on global context information...
