Real-time correction method and system for self-learning multipath image fusion

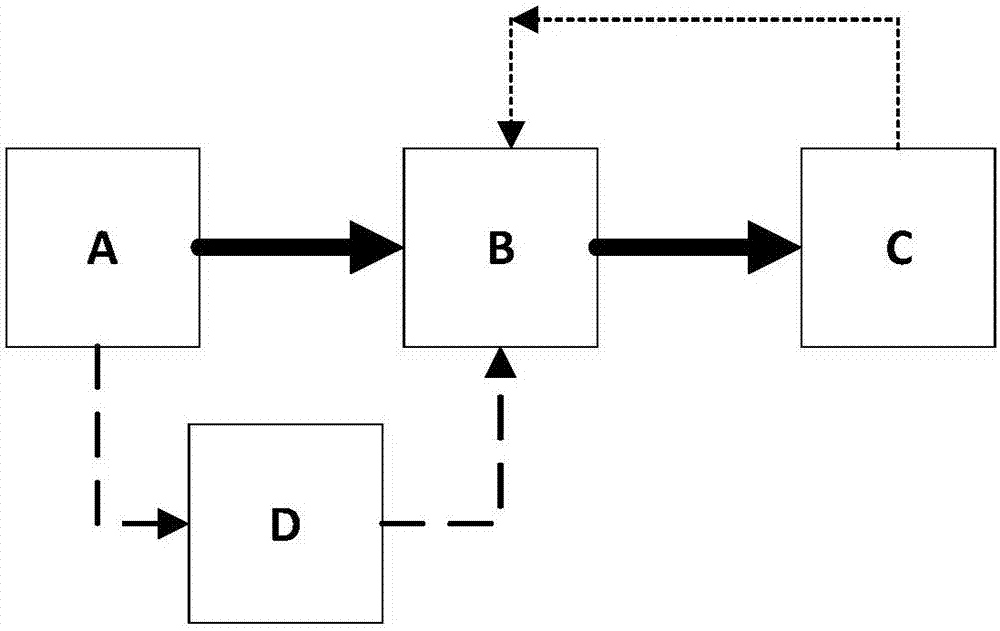

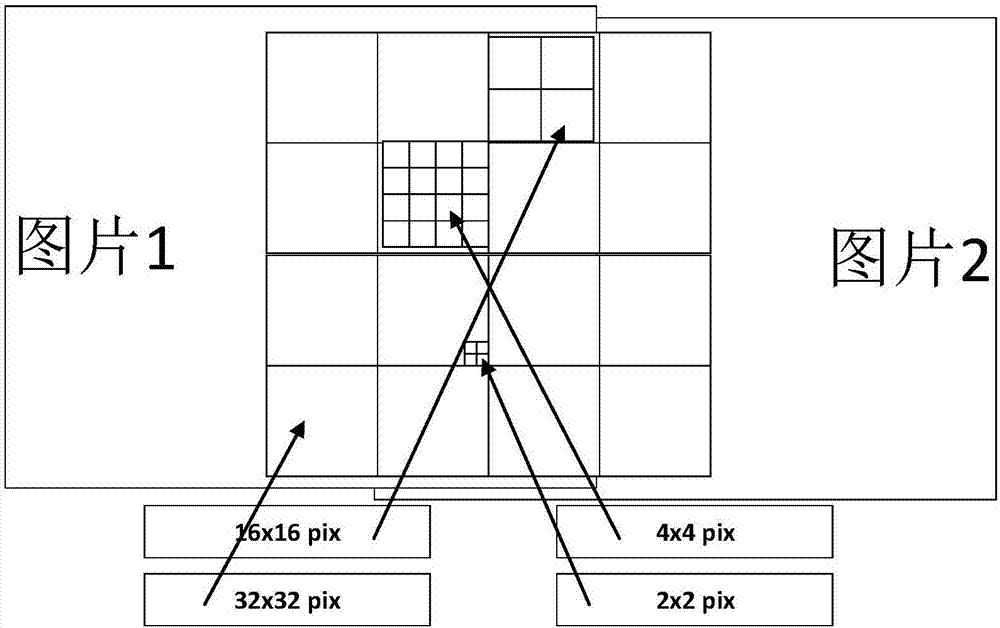

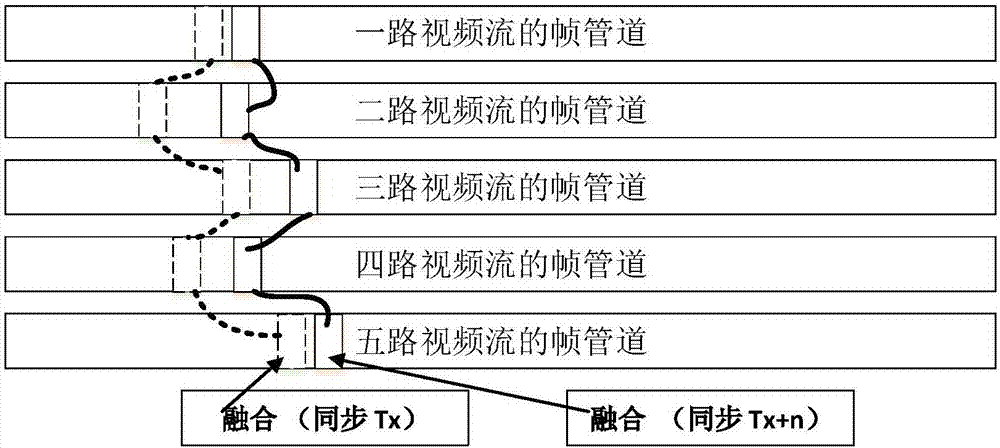

A technology in the field of image processing that combines image fusion with self-learning. It addresses the problem of conventional stitching and fusion being unsuitable and inapplicable, and achieves highly efficient, broadly applicable stitching and fusion of multi-channel images.
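The text here does not describe the self-learning correction step itself. The sketch below shows what stitching and fusing two overlapping channels can look like in its simplest form. It uses plain linear (feather) blending across a known overlap, a standard technique substituted for illustration; it is not the patented method. It assumes both images are already aligned, have the same height, and overlap by a known number of columns (`overlap`). The function name `feather_blend` and the list-of-rows grayscale image format are hypothetical.

```python
from typing import List

Image = List[List[float]]  # hypothetical format: grayscale image as rows of intensities


def feather_blend(left: Image, right: Image, overlap: int) -> Image:
    """Stitch two same-height, pre-aligned images whose last/first `overlap`
    columns show the same scene, cross-fading linearly across that overlap."""
    if len(left) != len(right):
        raise ValueError("images must have the same height")
    width_l = len(left[0])
    if not 0 < overlap <= min(width_l, len(right[0])):
        raise ValueError("overlap must be positive and fit in both images")
    out: Image = []
    for row_l, row_r in zip(left, right):
        # Columns that only the left image covers.
        row = list(row_l[: width_l - overlap])
        for k in range(overlap):
            # Weight of the right image rises from near 0 to near 1 across the seam.
            w = (k + 1) / (overlap + 1)
            row.append((1 - w) * row_l[width_l - overlap + k] + w * row_r[k])
        # Columns that only the right image covers.
        row.extend(row_r[overlap:])
        out.append(row)
    return out
```

For example, blending a 4-column row of zeros with a 4-column row of tens over 2 columns gives a 6-column row `[0, 0, ~3.33, ~6.67, 10, 10]`. The gradual weights hide the seam. A real multi-channel system would also need the alignment and correction that the embodiments describe.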


Examples


[0069] Embodiment 1 (360-degree panoramic live broadcast)

[0070] When multiple aligned image channels are used to capture 360-degree panoramas of sports events or concerts for live broadcast, the self-learning multi-channel image fusion real-time correction method of the present invention can provide real-time, high-quality correction once the multi-channel images have been acquired. The resulting real-time panoramic video stream is then broadcast live to end users who are not on site.


[0071] Embodiment 2 (security monitoring fusion storage)

[0072] In the security video monitoring systems of large enterprises or public places, the self-learning multi-channel image fusion real-time correction method of the present invention can run on a back-end server to continuously stitch, fuse, and store the multiple image streams. Whenever an enterprise or government agency later needs to reconstruct a particular event, it can investigate with high precision and without blind spots. At the same time, the monitoring and storage burden of the multi-channel security system is reduced.
