An FPGA for large-capacity data and an OpenCL-based FPGA algorithm

The invention is applied in the field of data computing. It addresses problems such as heavy time consumption, limited algorithm performance, and complex DDR hardware, and achieves the effect of acceleration.

Embodiment Construction

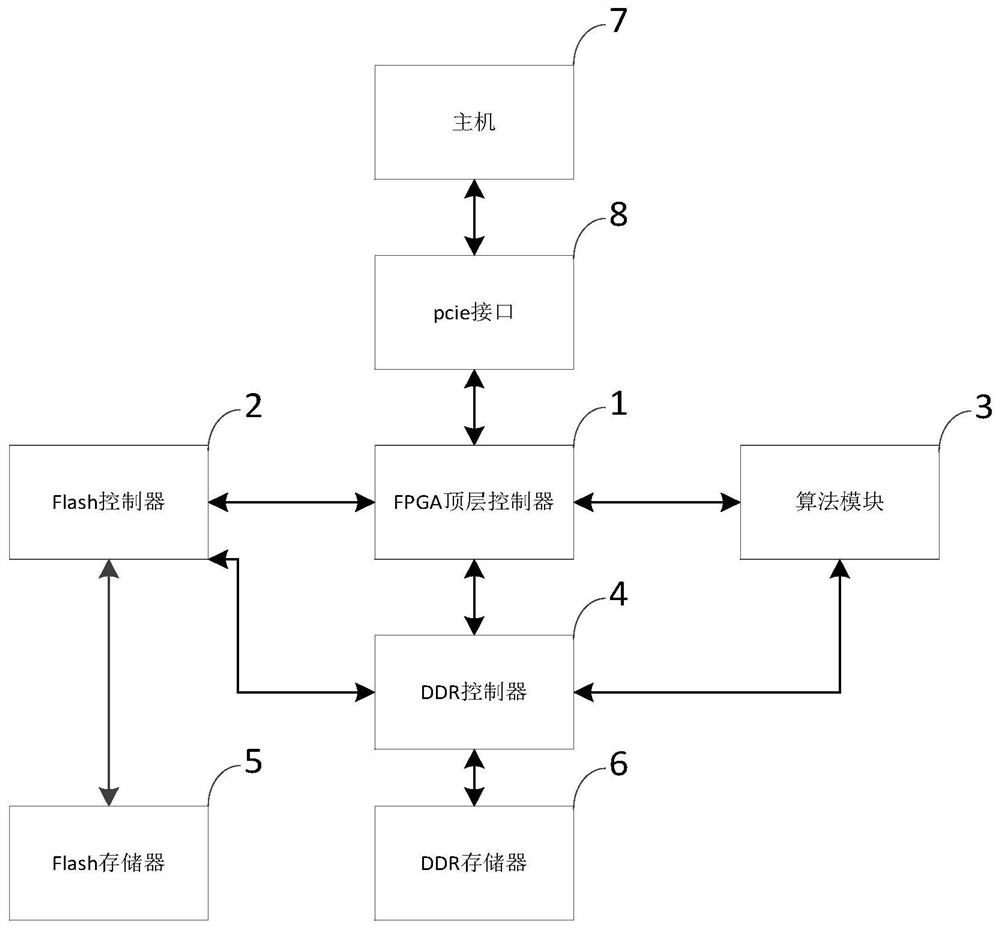

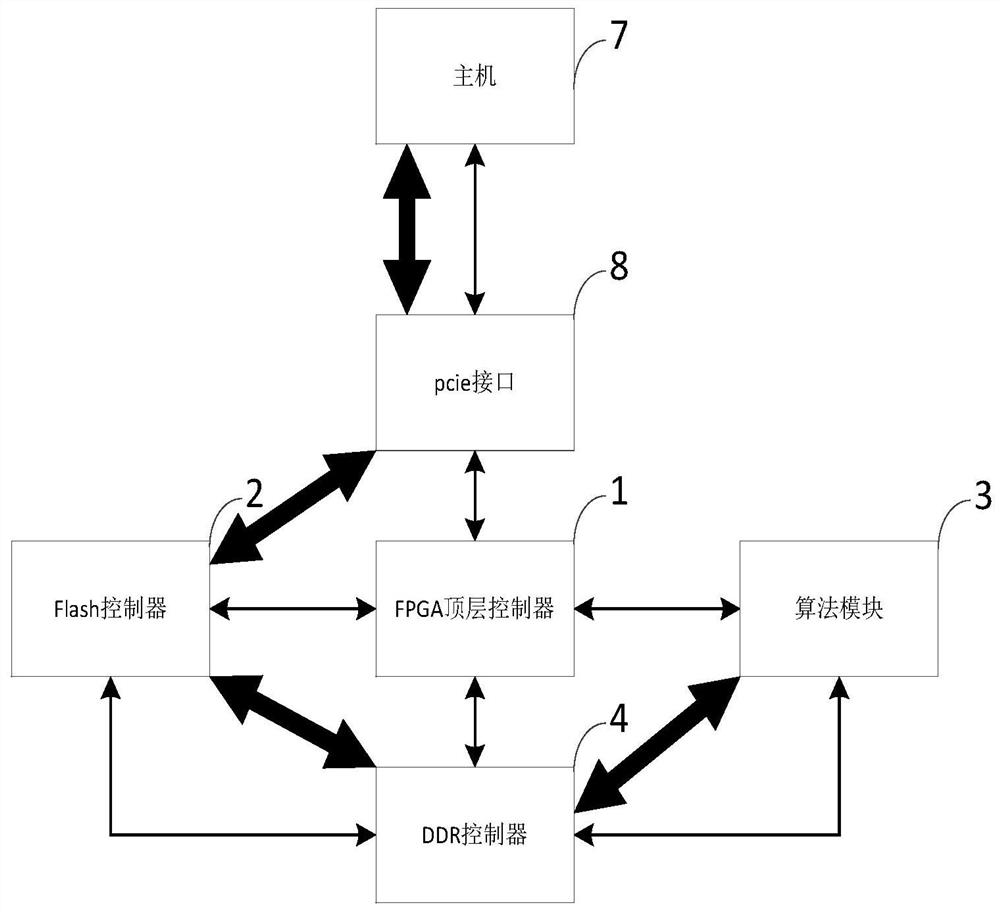

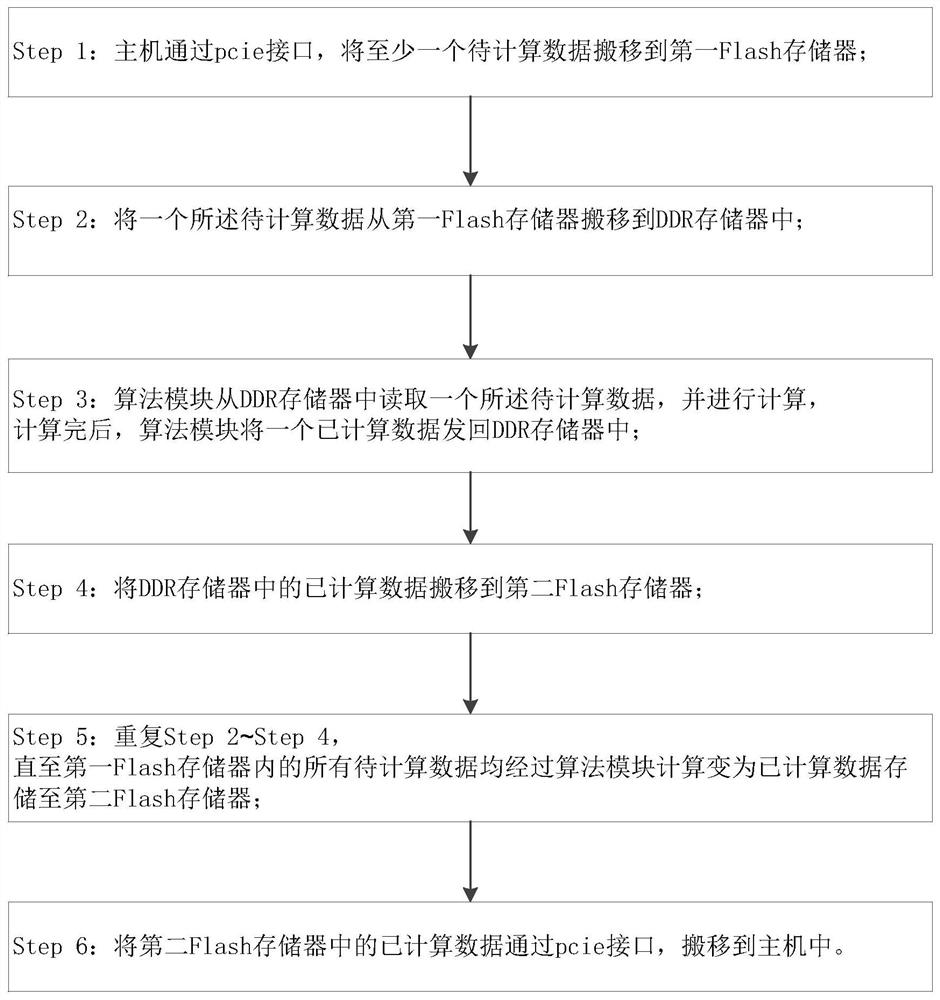

[0039] As shown in Figure 1 and Figure 2, an FPGA for large-capacity data includes an FPGA controller, a PCIe interface in command communication with the FPGA controller, a Flash controller, a DDR controller, and an algorithm module. It also includes Flash memory controlled by the Flash controller and DDR memory controlled by the DDR controller. The Flash controller is in command communication with the DDR controller, and the DDR controller is in command communication with the algorithm module. Data is transmitted between the PCIe interface and the Flash controller, between the Flash controller and the DDR controller, and between the DDR controller and the algorithm module.
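The connections listed in paragraph [0039] can be written down as a small graph to make the data path easier to follow. The following is only an illustrative sketch in Python, not part of the patent: the block labels (`pcie`, `flash_ctrl`, `ddr_ctrl`, `algorithm`, `fpga_ctrl`) and the helper `data_path` are hypothetical names, and treating every link as bidirectional is an assumption based on the phrase "data transmission between".

```python
from collections import deque

# Data links named in paragraph [0039]; the labels are illustrative only.
DATA_LINKS = [
    ("pcie", "flash_ctrl"),
    ("flash_ctrl", "ddr_ctrl"),
    ("ddr_ctrl", "algorithm"),
]

# Command links named in paragraph [0039].
COMMAND_LINKS = [
    ("fpga_ctrl", "pcie"),
    ("flash_ctrl", "ddr_ctrl"),
    ("ddr_ctrl", "algorithm"),
]


def neighbors(links):
    """Build an adjacency map, assuming each link is bidirectional."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, []).append(b)
        adj.setdefault(b, []).append(a)
    return adj


def data_path(src, dst, links=DATA_LINKS):
    """Return the shortest chain of blocks from src to dst, or None."""
    adj = neighbors(links)
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        if path[-1] == dst:
            return path
        for nxt in adj.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


if __name__ == "__main__":
    print(data_path("pcie", "algorithm"))
```

Under these assumptions, data entering over PCIe reaches the algorithm module only by passing through the Flash controller and then the DDR controller, which matches the order of the data links in the paragraph.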

[0040] In this embodiment, the Flash controller 2 includes a Flash array group A controller and a Flash array group B controller; there are 96 Flash memories 5, of which 48 Flash memories 5 are connected to 12 of the Flash array group A controllers F...
