104 results about "Algorithm acceleration" patented technology

Algorithm Acceleration. Algorithm acceleration uses code generation technology to generate fast executable code. Accelerated algorithms must comply with MATLAB® Coder™ code generation requirements and rules.

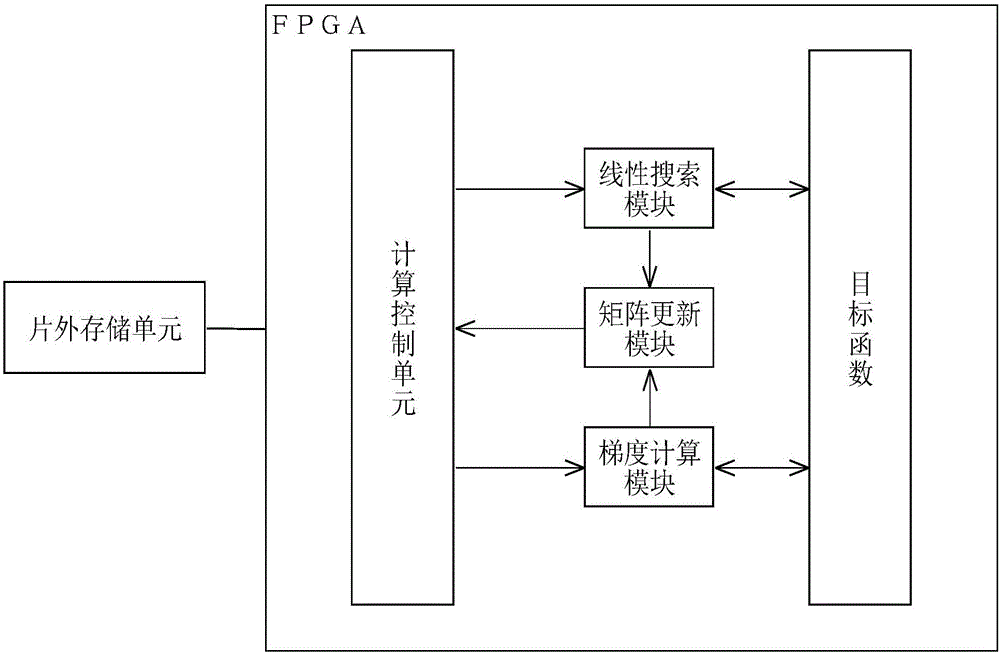

Method and system for deep learning algorithm acceleration on field-programmable gate array platform

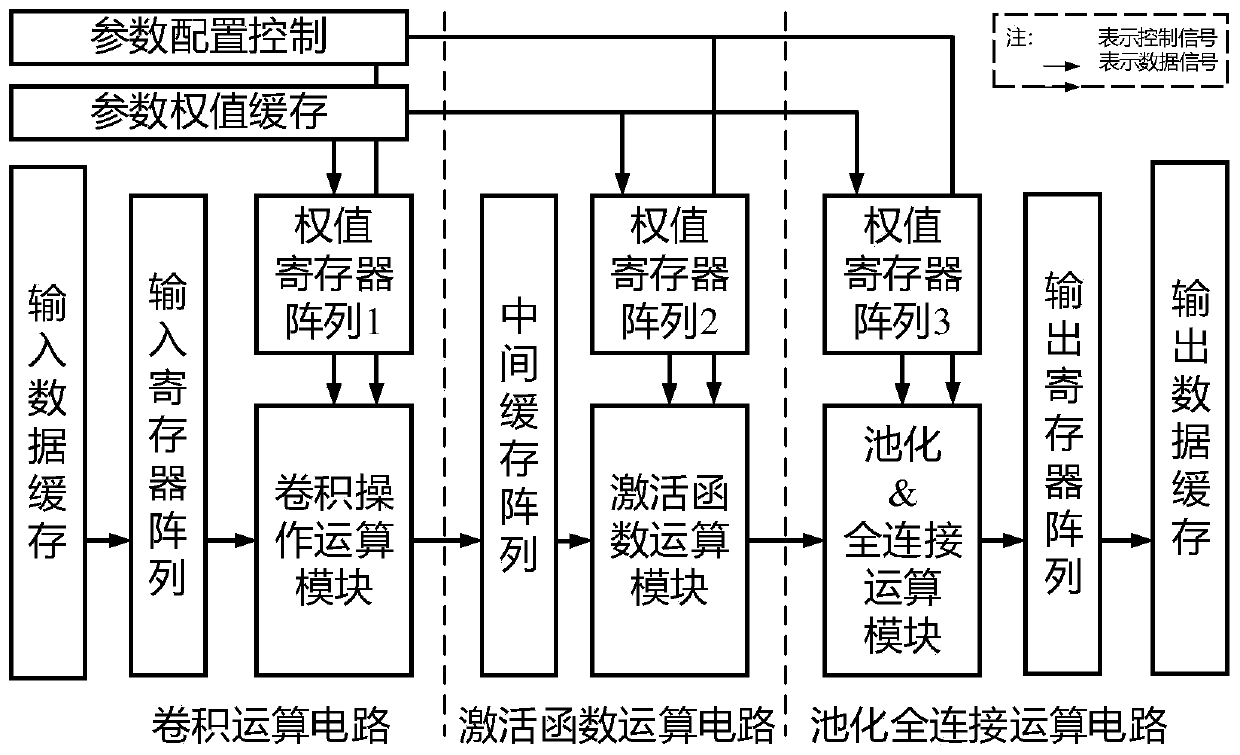

Active · CN106228238A · Effective acceleration · Efficient design · Physical realisation · Neural learning methods · Parallel computing · Computer module

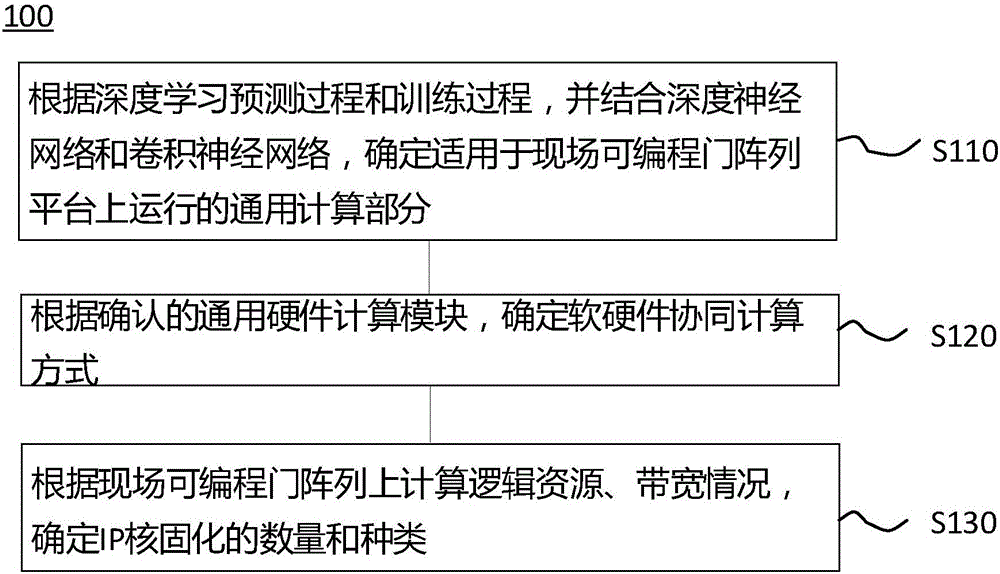

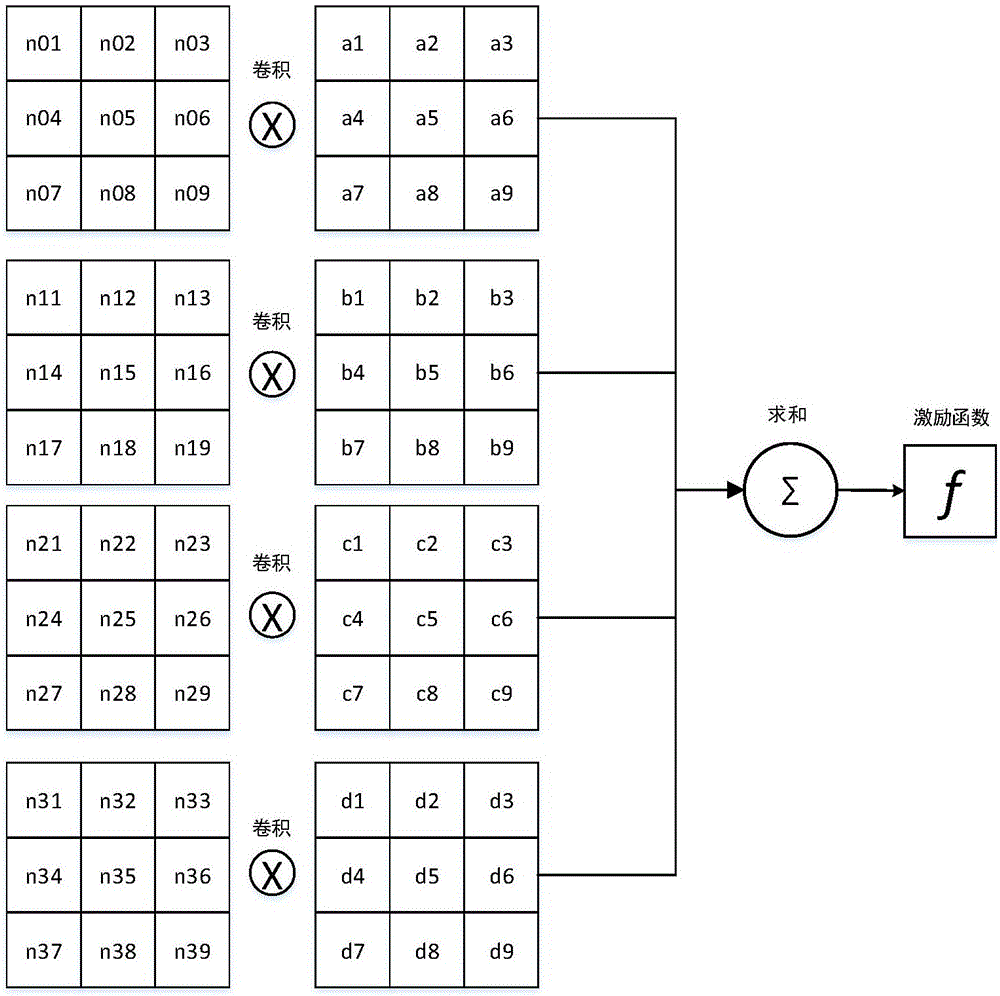

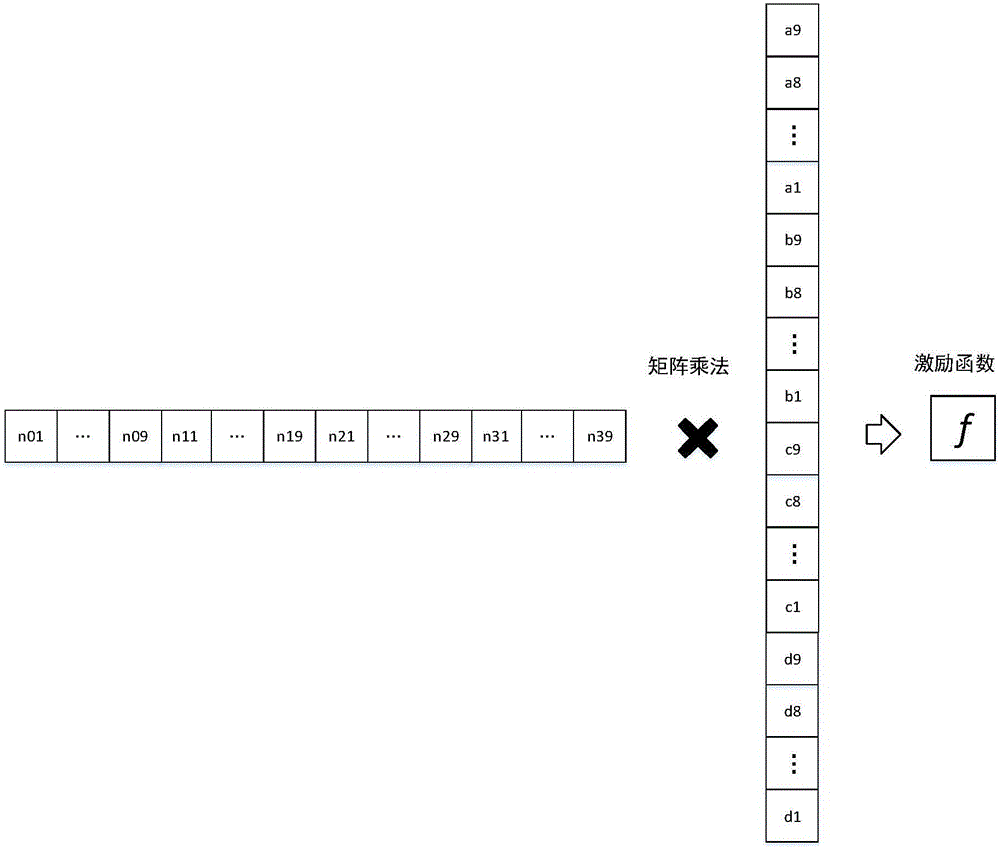

The invention discloses a method and system for deep learning algorithm acceleration on a field-programmable gate array platform. The field-programmable gate array platform is composed of a universal processor, a field-programmable gate array and a storage module. The method comprises: according to a deep learning prediction process and a training process, a general computation part that can be operated on a field-programmable gate array platform is determined by combining a deep neural network and a convolutional neural network; a software and hardware cooperative computing way is determined based on the determined general computation part; and according to computing logic resources and the bandwidth situation of the FPGA, the number and type of IP core solidification are determined, and acceleration is carried out on the field-programmable gate array platform by using a hardware computing unit. Therefore, a hardware processing unit for deep learning algorithm acceleration is designed rapidly based on hardware resources; and compared with the general processor, the processing unit has characteristics of excellent performance and low power consumption.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

Techniques of optical proximity correction using GPU

Active · US8490034B1 · Enhanced Masking · Photomechanical apparatus · CAD circuit design · Fine structure · Graphics processing unit

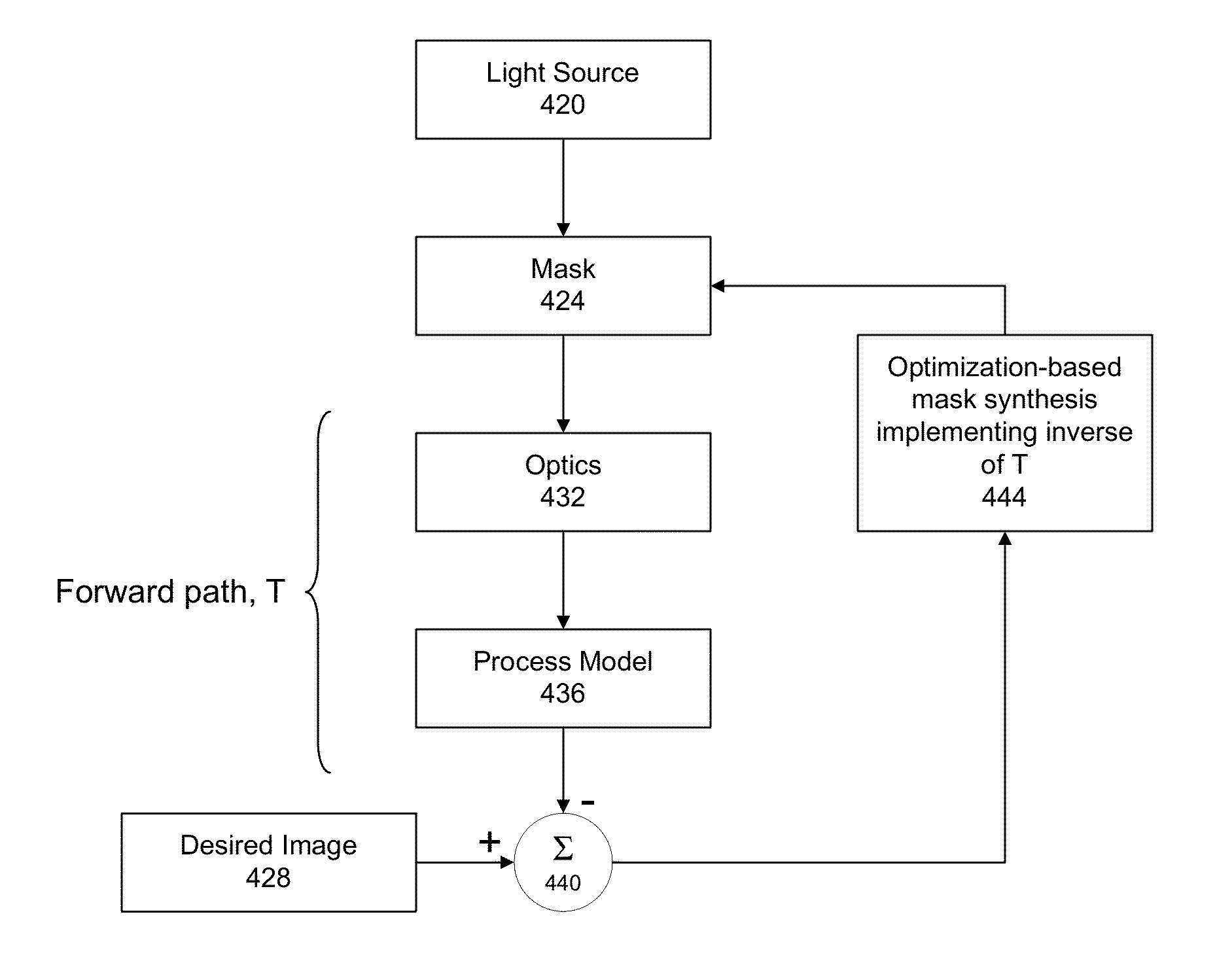

Computationally intensive electronic design automation operations are accelerated with algorithms utilizing one or more graphics processing units. The optical proximity correction (OPC) process calculates, improves, and optimizes one or more features on an exposure mask (used in semiconductor or other processing) so that a resulting structure realized on an integrated circuit or chip meets desired design and performance requirements. When a chip has billions of transistors or more, each with many fine structures, the computational requirements for OPC can be very large. This processing can be accelerated using one or more graphics processing units.

Owner:D2S

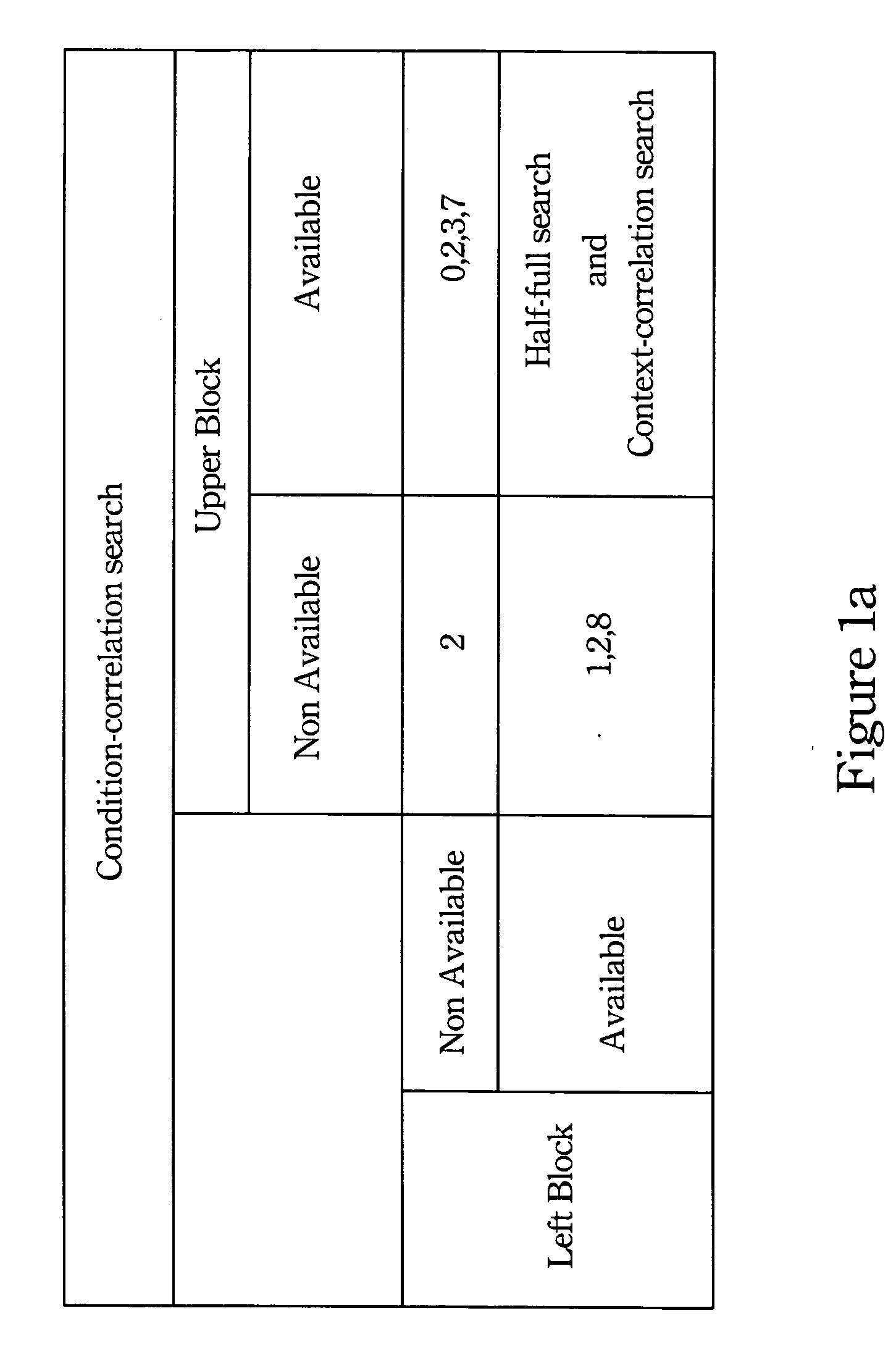

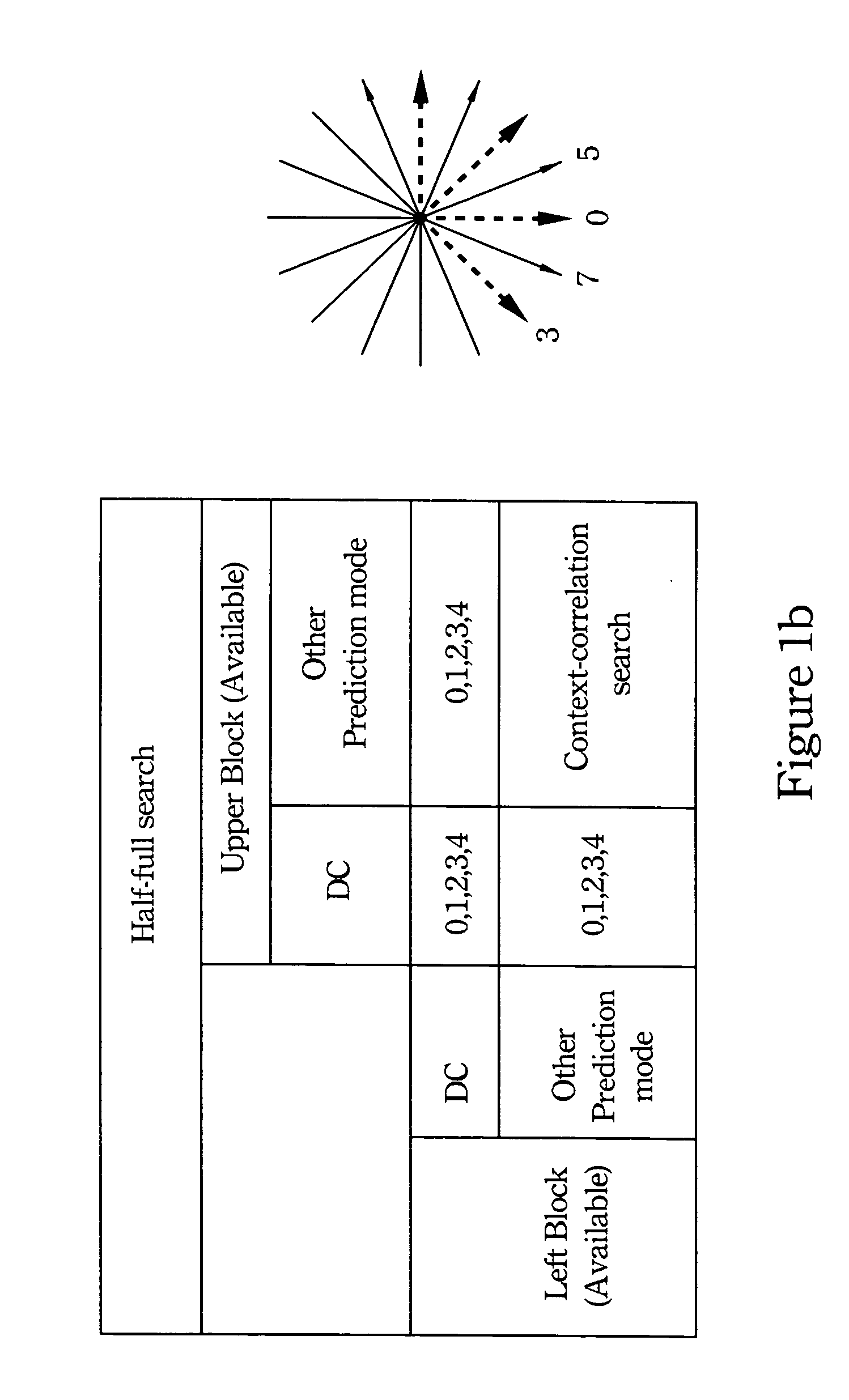

Method for reducing computational complexity of video compression standard

Inactive · US20080056355A1 · Reduce luminance computation · Decrease in luminance · Color television with pulse code modulation · Color television with bandwidth reduction · Computation complexity · Computational complexity theory

A method for reducing computational complexity of video compression standard is provided, and it includes an intra 4×4 macroblock (I4MB) search algorithm, an intra 16×16 macroblock (I16MB) search algorithm and a chroma search algorithm. The I4MB search algorithm and I16MB search algorithm accelerate the prediction process of the luma macroblock, and the chroma search algorithm accelerates the prediction process of chroma macroblock. The above algorithms can greatly reduce the computation of prediction mode of video compression standard.

Owner:NATIONAL CHUNG CHENG UNIV

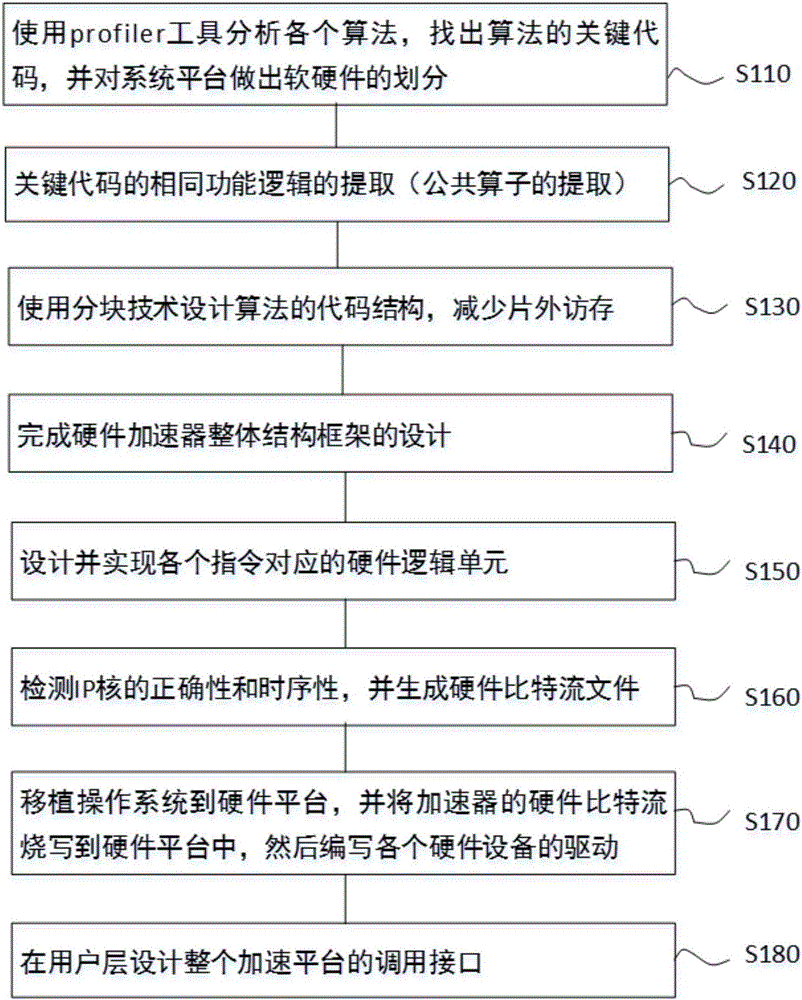

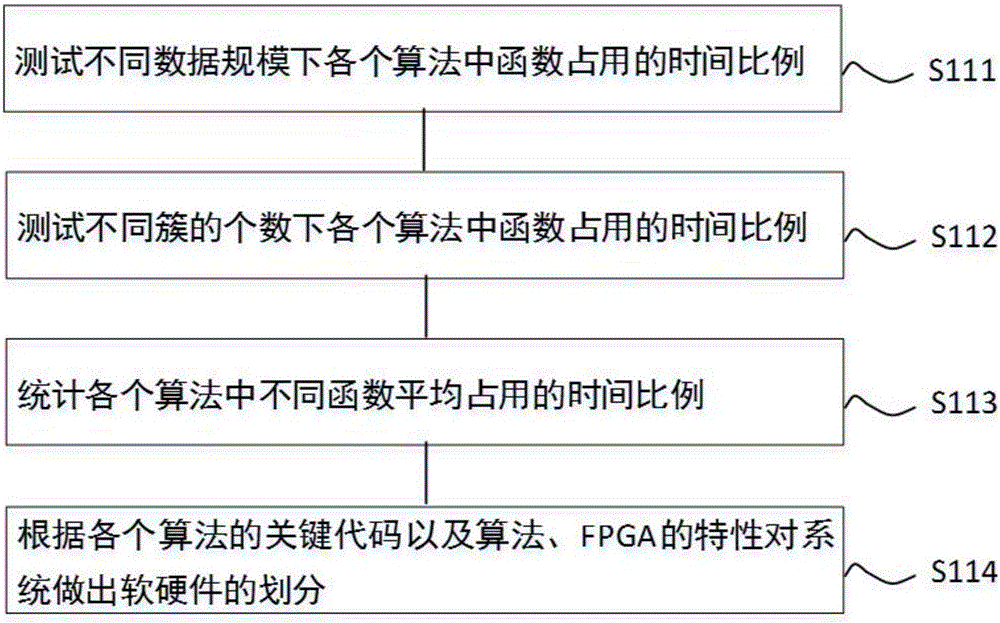

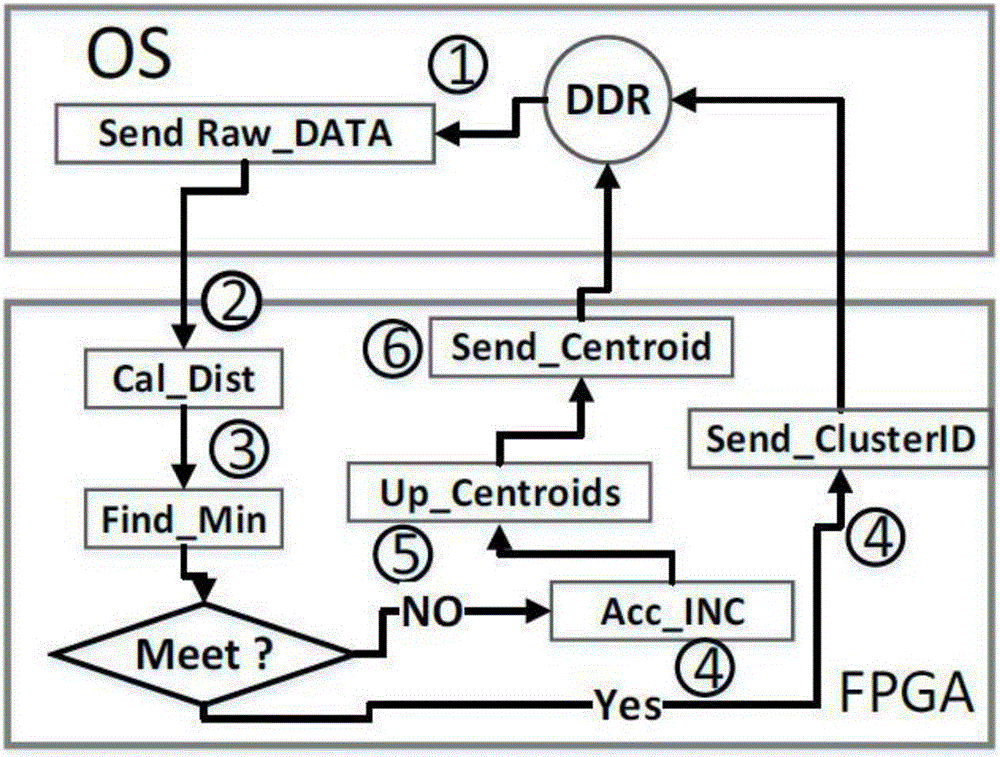

FPGA-based clustering algorithm acceleration system and design method thereof

Active · CN106383695A · Easy to handle · Handles large data transfers well · Concurrent instruction execution · Cluster algorithm · Operational system

The invention discloses an FPGA-based clustering algorithm acceleration system and a design method thereof. The method comprises the steps of: obtaining the key code of each algorithm through profiling; refining the key code of each algorithm and extracting the shared function logic (common operators); redesigning the code structure with a blocking technique to improve data locality and reduce the off-chip access frequency; designing an extended semantic instruction set, implementing the function logic corresponding to the instruction set, and completing the key code function through instruction fetch, decode and execute operations; designing the acceleration framework of the accelerator and generating an IP core; and porting an operating system to a development board and completing the cooperative work of software and hardware under the operating system. Various clustering algorithms can be supported, and the flexibility and universality of the hardware accelerator are improved; the code of each algorithm is restructured with the blocking technique to reduce the off-chip access frequency and thus reduce the influence of off-chip access bandwidth on the accelerator's speedup.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

Method for accelerating RNA secondary structure prediction based on stochastic context-free grammar

Inactive · CN101717817A · Achieve acceleration · Low cost · Microbiological testing/measurement · Concurrent instruction execution · Algorithm · High acceleration

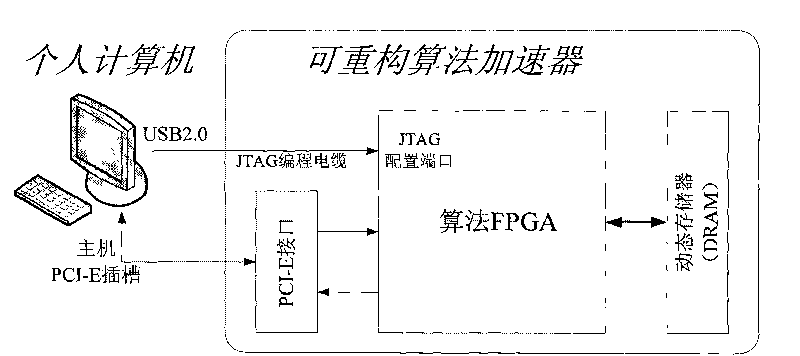

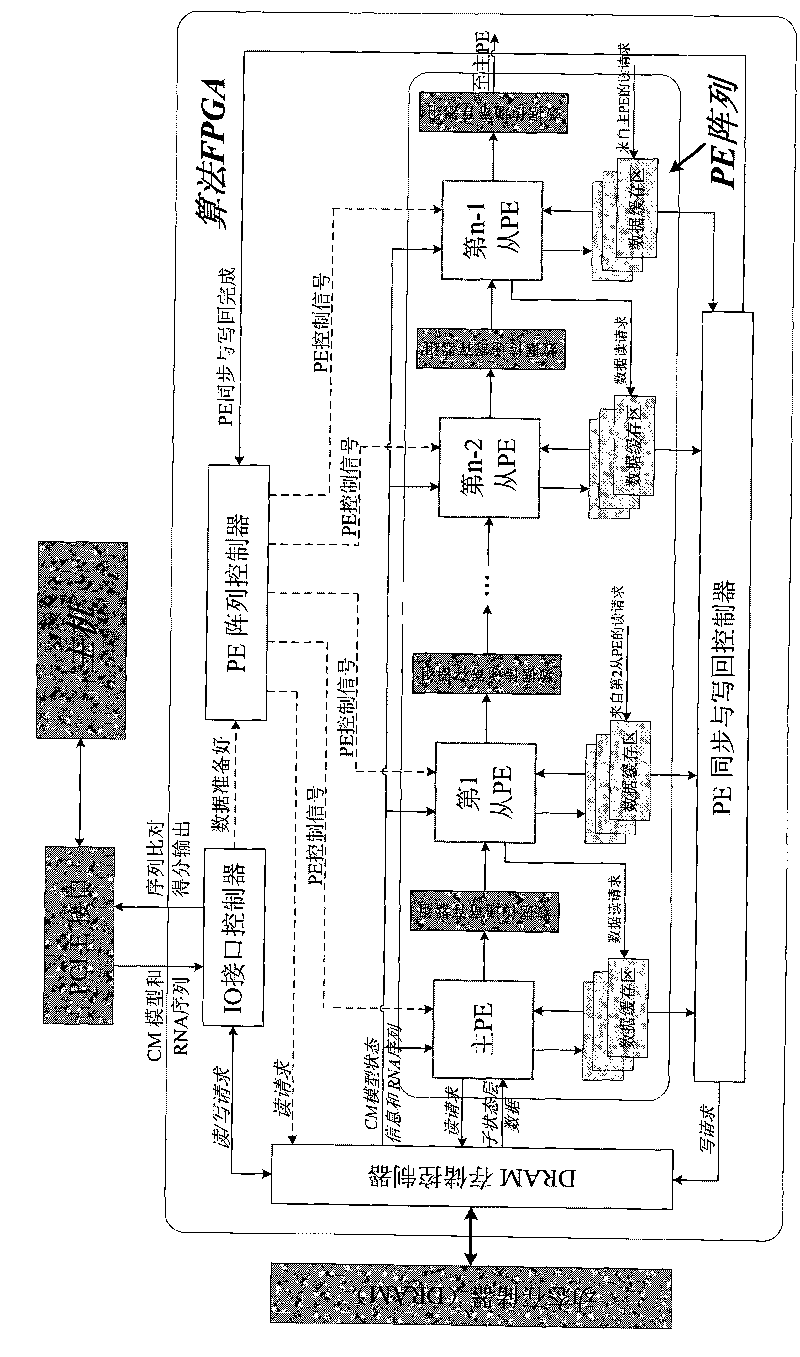

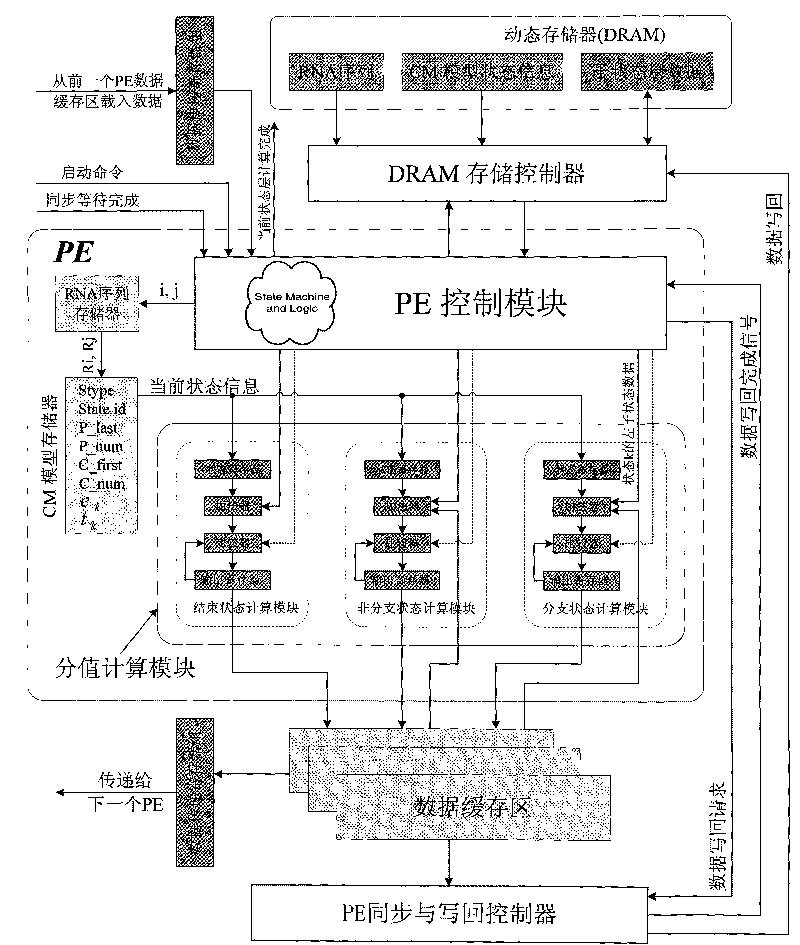

The invention discloses a method for accelerating RNA secondary structure prediction based on stochastic context-free grammar (SCFG), aiming at speeding up RNA secondary structure prediction using the SCFG. The method comprises the following steps: first establishing a heterogeneous computer system comprising a host computer and a reconfigurable algorithm accelerator, then transmitting a formatted CM model and an encoded RNA sequence to the reconfigurable algorithm accelerator through the host computer, and executing a non-backtrace CYK/inside algorithm calculation on a PE array of the reconfigurable algorithm accelerator. Task division strategies of region-dependent segmentation and layered column-dependent parallel processing are adopted in the calculation to realize fine-grained parallelism: n PEs simultaneously calculate n data items located in different columns of a matrix in an SPMD manner, with different calculation orders used for different state types. The invention accelerates RNA sequence secondary structure prediction based on the SCFG model with a high acceleration ratio and low cost.

Owner:NAT UNIV OF DEFENSE TECH

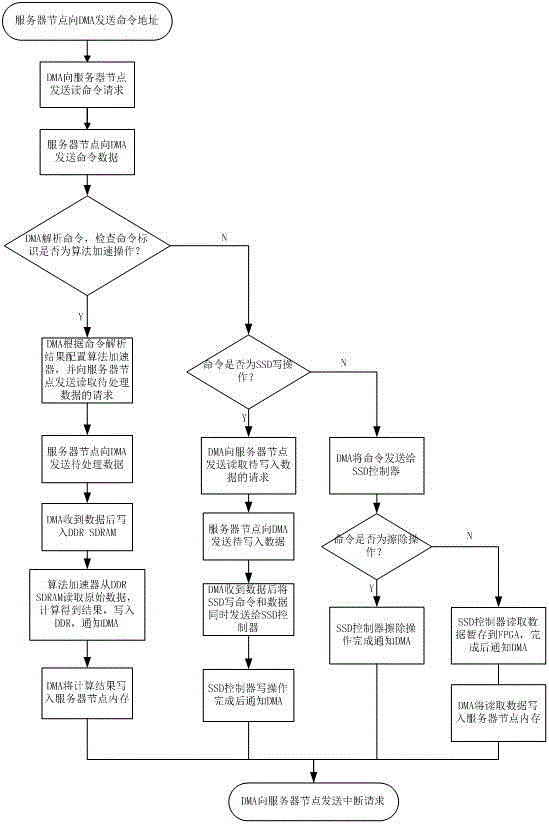

FPGA method achieving computation speedup and PCIE SSD storage simultaneously

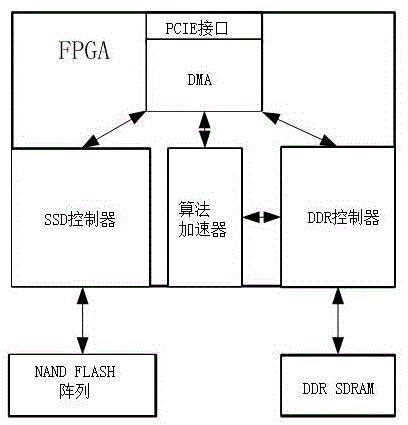

Inactive · CN105677595A · Reduce power consumption · Low cost · Energy efficient computing · Architecture with single central processing unit · Disk controller · Embedded system

The invention discloses an FPGA method achieving computation speedup and PCIE SSD storage simultaneously. An SSD controller and an algorithm accelerator are integrated in an FPGA, which is further provided internally with a DDR controller and a direct memory read (DMA) module; the DMA module is connected with the SSD controller, the DDR controller and the algorithm accelerator respectively. With this method, the two functions of computation speedup and SSD storage are achieved on a single PCIE device, the layout difficulty is reduced, the overall power consumption of server nodes is reduced, and the cost to the enterprise is reduced.

Owner:FASII INFORMATION TECH SHANGHAI

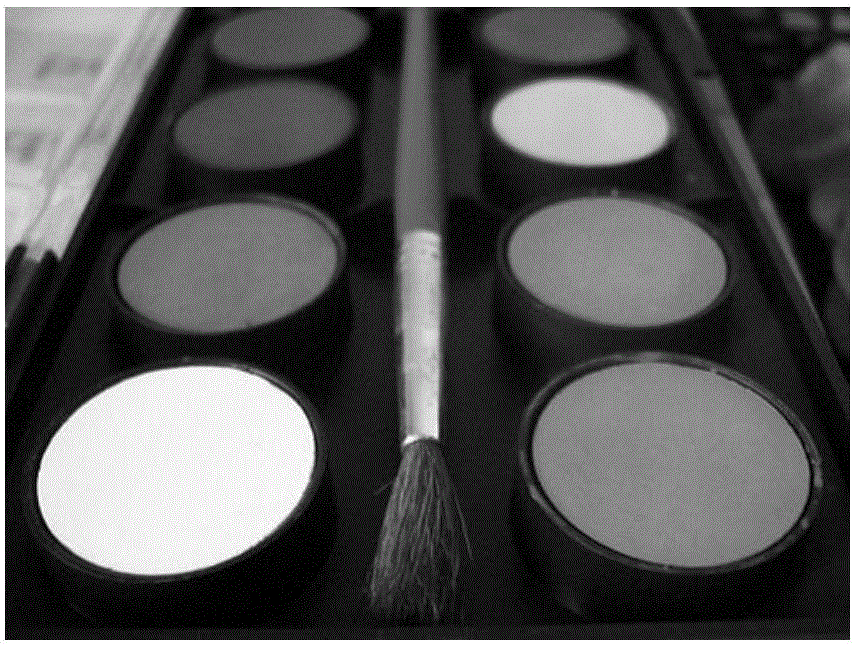

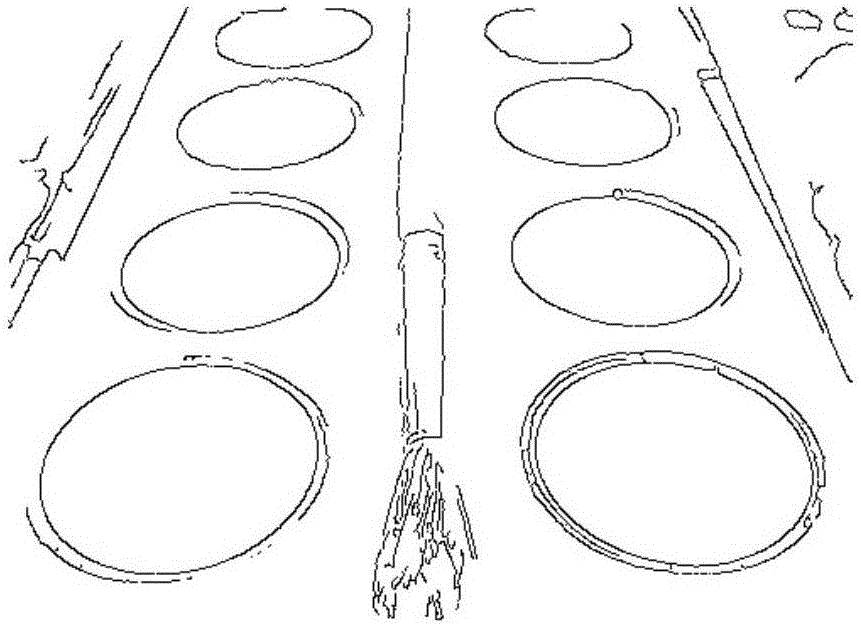

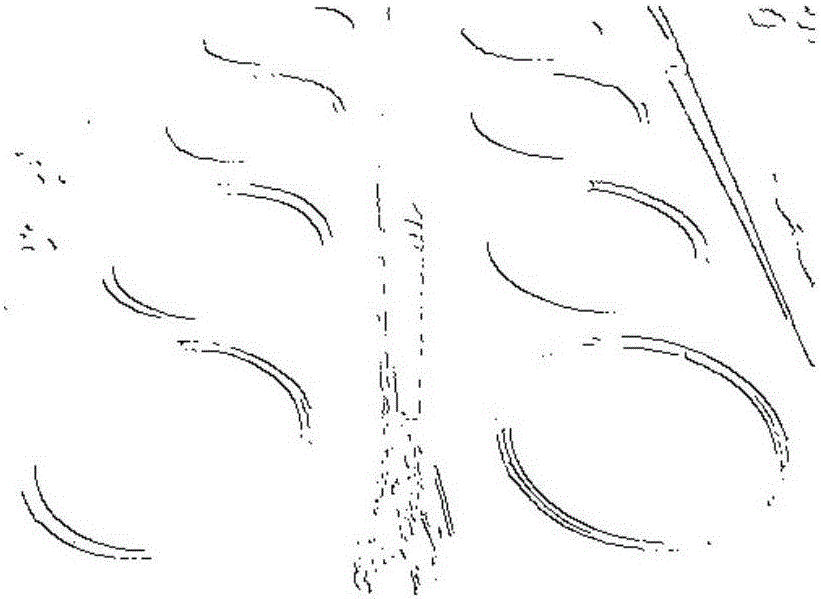

Ellipse rapid detection method based on geometric constraint

Active · CN105931252A · Achieve acceleration · Solve problems that take a relatively long time · Image enhancement · Image analysis · Algorithm · Ellipse

The invention discloses an ellipse rapid detection method based on geometric constraint. The method employs a characteristic number to screen a combination of arcs which definitely do not belong to the same ellipse before the ellipse fitting, reduces the unnecessary ellipse fitting operation, and achieves the algorithm acceleration. The method solves a problem that the consumed time is longer in the prior art, and guarantees the ellipse detection accuracy well.

Owner:DALIAN UNIV OF TECH

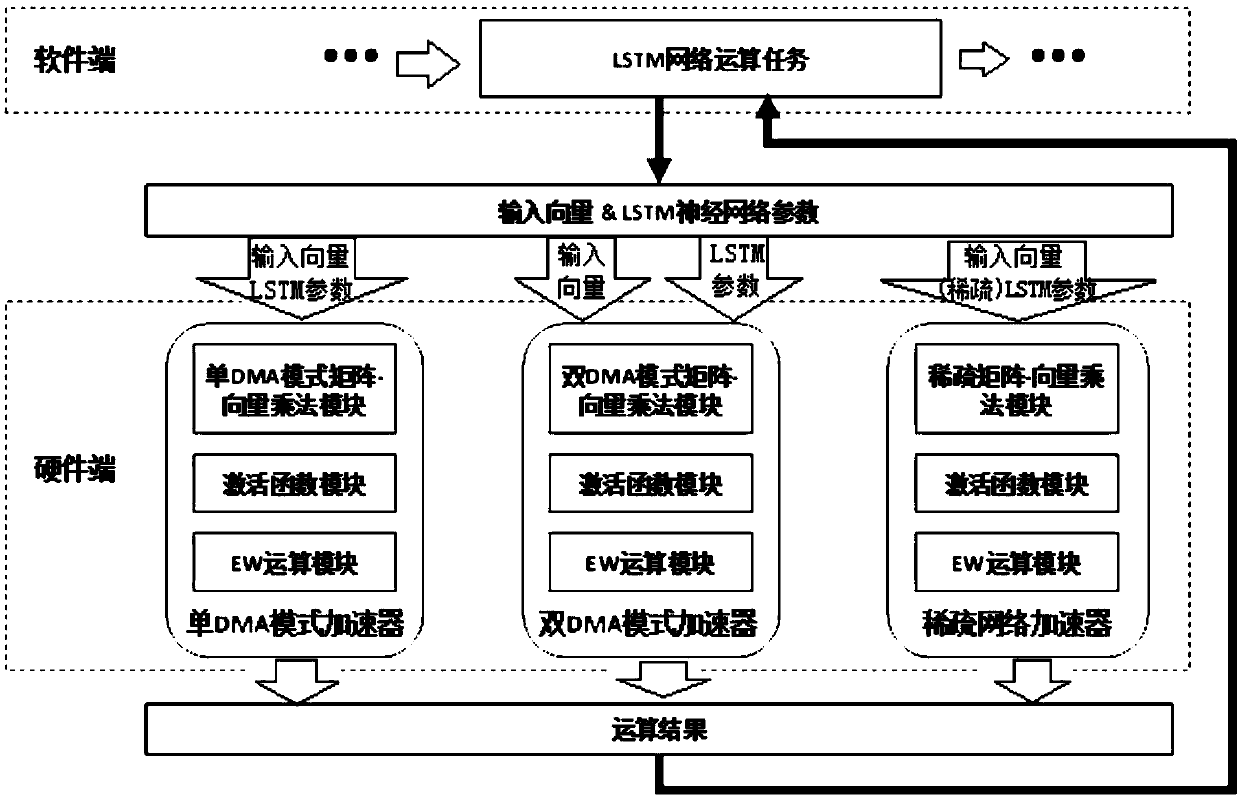

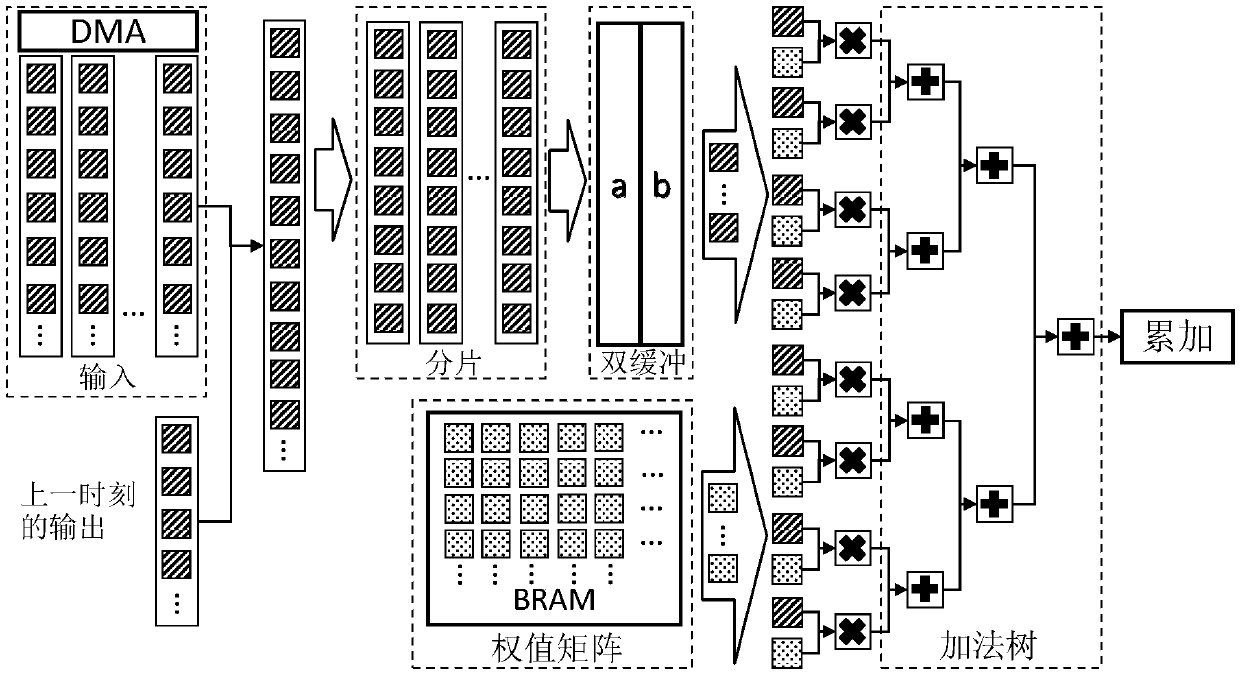

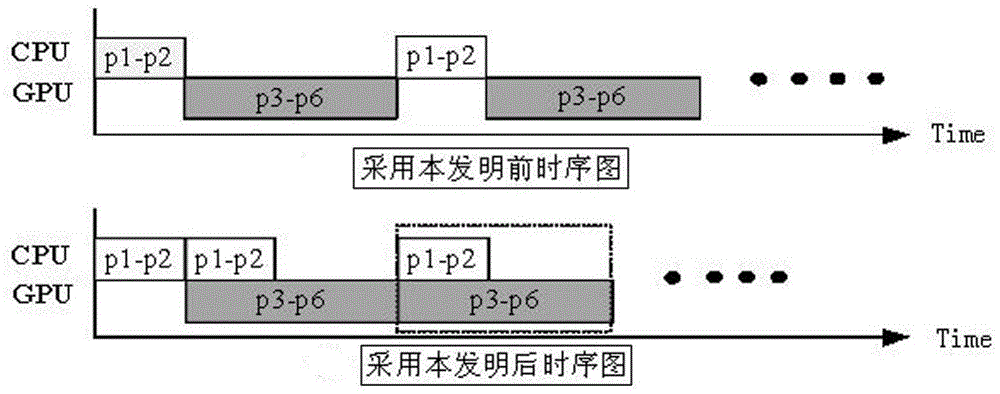

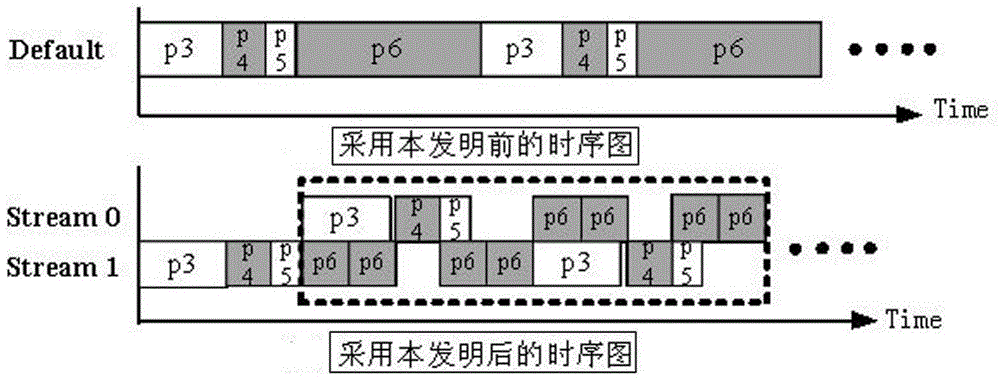

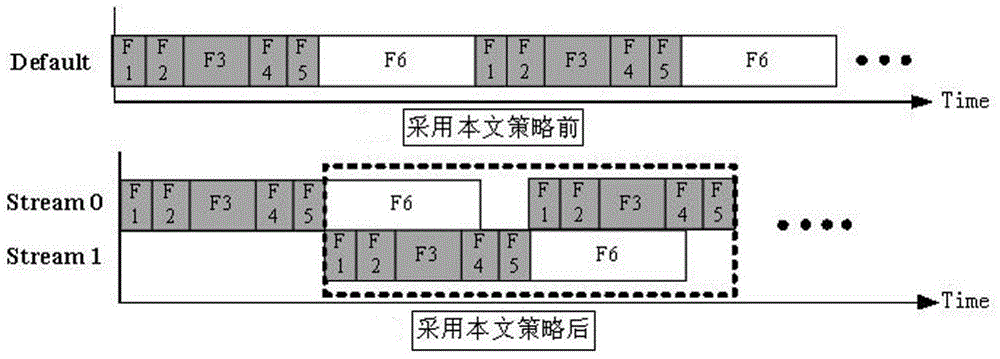

LSTM (Long Short-Term Memory) forward direction operation accelerator based on FPGA (Field Programmable Gate Array)

Inactive · CN108763159A · Improve computing power · Improve energy efficiency ratio · Digital data processing details · Complex mathematical operations · Activation function · Direct memory access

The invention discloses an LSTM (Long Short-Term Memory) forward direction operation accelerator based on an FPGA (Field Programmable Gate Array), which works in a hardware and software coordination pattern. The hardware part contains three types of accelerator designs: a single-DMA (Direct Memory Access) pattern LSTM neural network forward direction algorithm accelerator, a double-DMA pattern LSTM neural network forward direction algorithm accelerator and a sparse LSTM neural network forward direction algorithm accelerator. The accelerator is used for accelerating the forward direction calculation part of an LSTM network and comprises a matrix-vector multiplication module, an element-wise operation module and an activation function module. The single-DMA pattern accelerator performs well in terms of both performance and energy efficiency ratio. The double-DMA pattern accelerator and the sparse network accelerator perform well in terms of energy efficiency ratio and, in addition, save more on-chip storage resources of the FPGA.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC
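The three modules named in the abstract above (matrix-vector multiplication, element-wise operations, activation functions) line up with the textbook LSTM forward step. The following is a minimal pure-Python sketch under that assumption; it is not the patented hardware design, and the names `matvec`, `lstm_step`, `W` and `b` are illustrative only.

```python
import math


def sigmoid(x):
    """Logistic activation (the 'activation function module' role)."""
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Matrix-vector product (the 'matrix-vector multiplication module' role)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def lstm_step(x, h_prev, c_prev, W, b):
    """One textbook LSTM forward step.

    W and b are hypothetical dicts keyed by gate name: 'i' (input),
    'f' (forget), 'o' (output), 'g' (candidate). Each W[name] has one row
    per hidden unit and len(x) + len(h_prev) columns.
    """
    z = list(x) + list(h_prev)  # concatenated input [x; h_prev]
    pre = {
        name: [m + bias for m, bias in zip(matvec(W[name], z), b[name])]
        for name in ("i", "f", "o", "g")
    }
    i_gate = [sigmoid(v) for v in pre["i"]]
    f_gate = [sigmoid(v) for v in pre["f"]]
    o_gate = [sigmoid(v) for v in pre["o"]]
    cand = [math.tanh(v) for v in pre["g"]]
    # Element-wise operations: new cell state and hidden state.
    c = [f * cp + i * g for f, cp, i, g in zip(f_gate, c_prev, i_gate, cand)]
    h = [o * math.tanh(cv) for o, cv in zip(o_gate, c)]
    return h, c
```

In this formulation the four `matvec` calls dominate the arithmetic, which is consistent with the abstract listing matrix-vector multiplication as a dedicated module.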

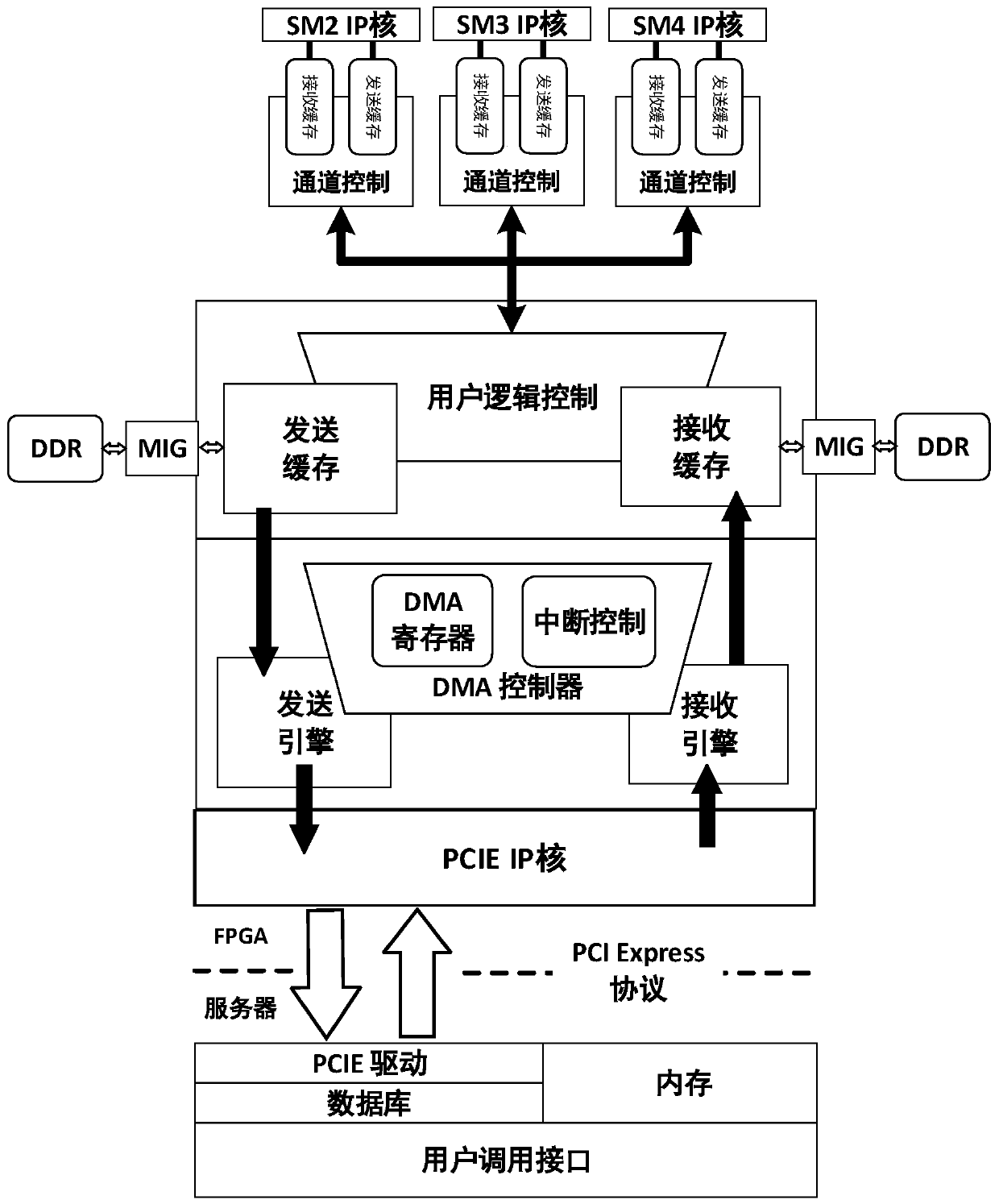

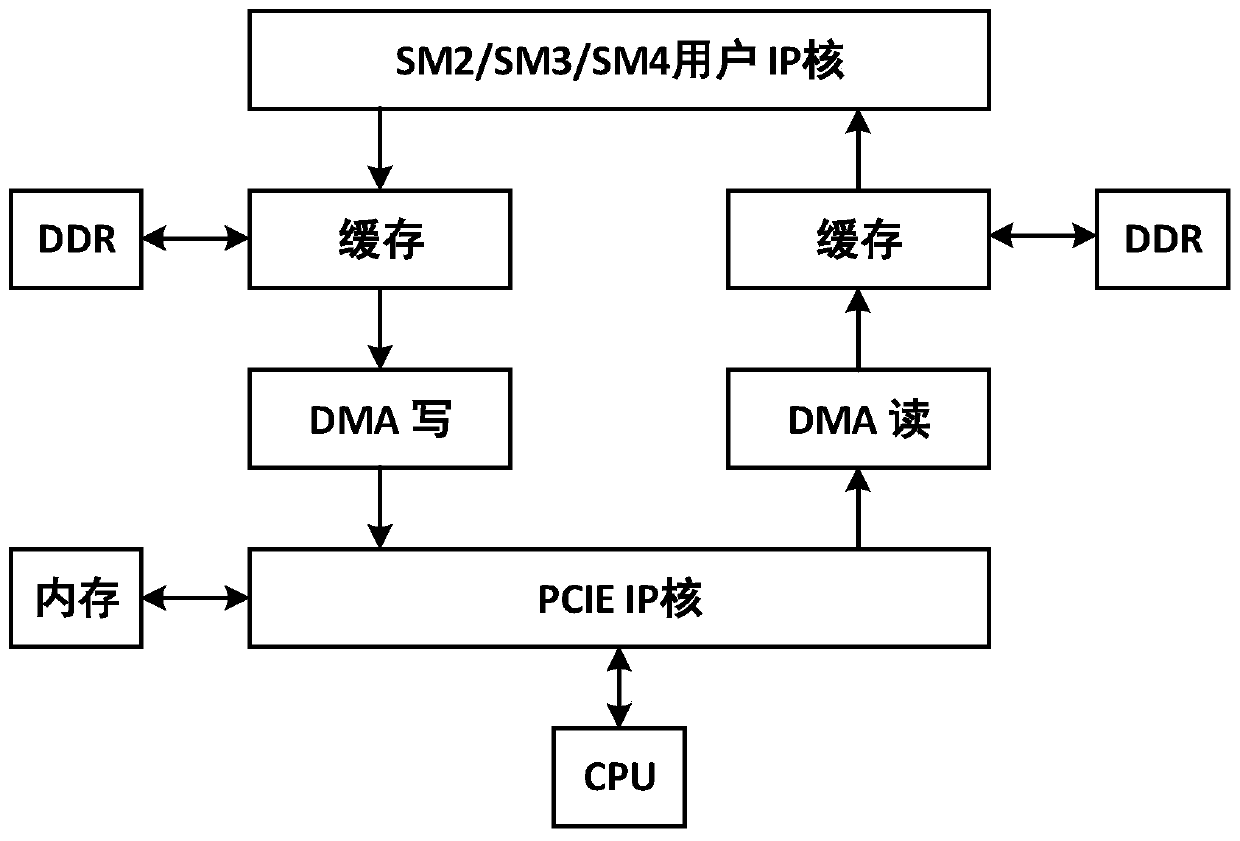

A national cryptographic algorithm acceleration processing system based on an FPGA

The invention discloses a national cryptographic algorithm acceleration processing system based on an FPGA. The system is used for processing a data packet which is sent to a server and needs to be processed by a national cryptographic algorithm. The system comprises an FPGA (Field Programmable Gate Array) connected to the server through a PCIE (Peripheral Component Interconnect Express) core interface. The FPGA transfers the data packet that is stored in the server and needs to be processed by a national cryptographic algorithm to the FPGA's high-capacity DDR cache at high speed through the PCIE core interface by a DMA read operation; the data packet is processed by the corresponding user-defined national cryptographic algorithm IP core, the processed data packet is written to the DDR, and the processed data packet in the DDR is transferred to server-side memory through the PCIE core interface by a DMA write operation. The acceleration processing system disclosed by the invention has good reusability and expandability, and has very good popularization and application value.

Owner:北京中科海网科技有限公司

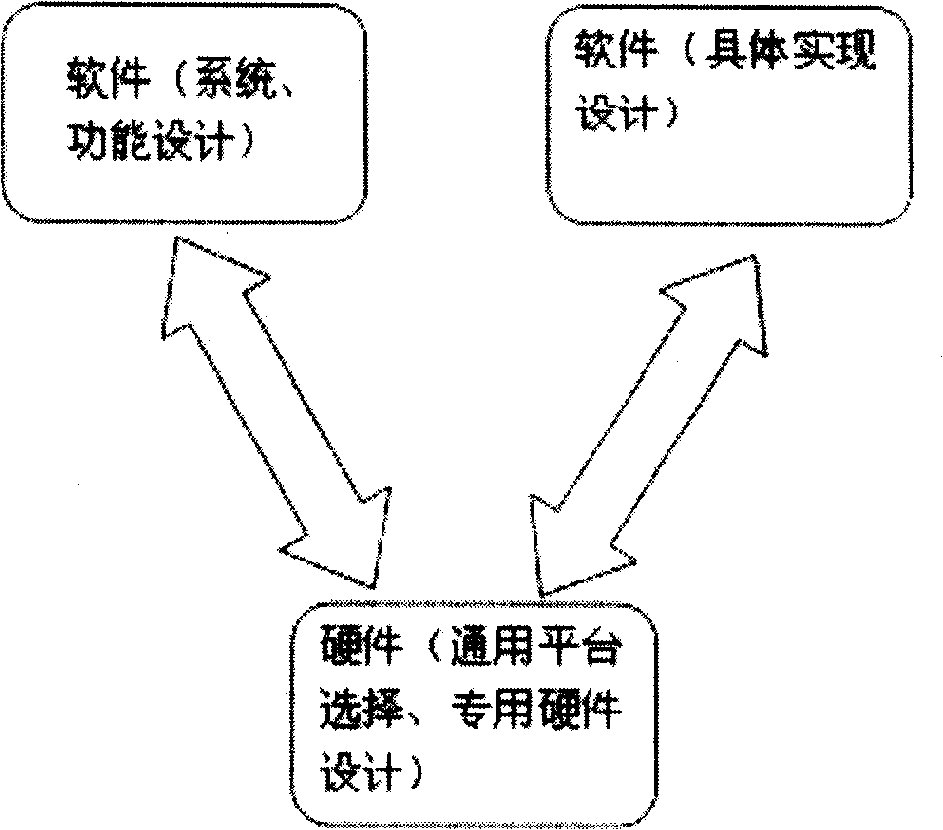

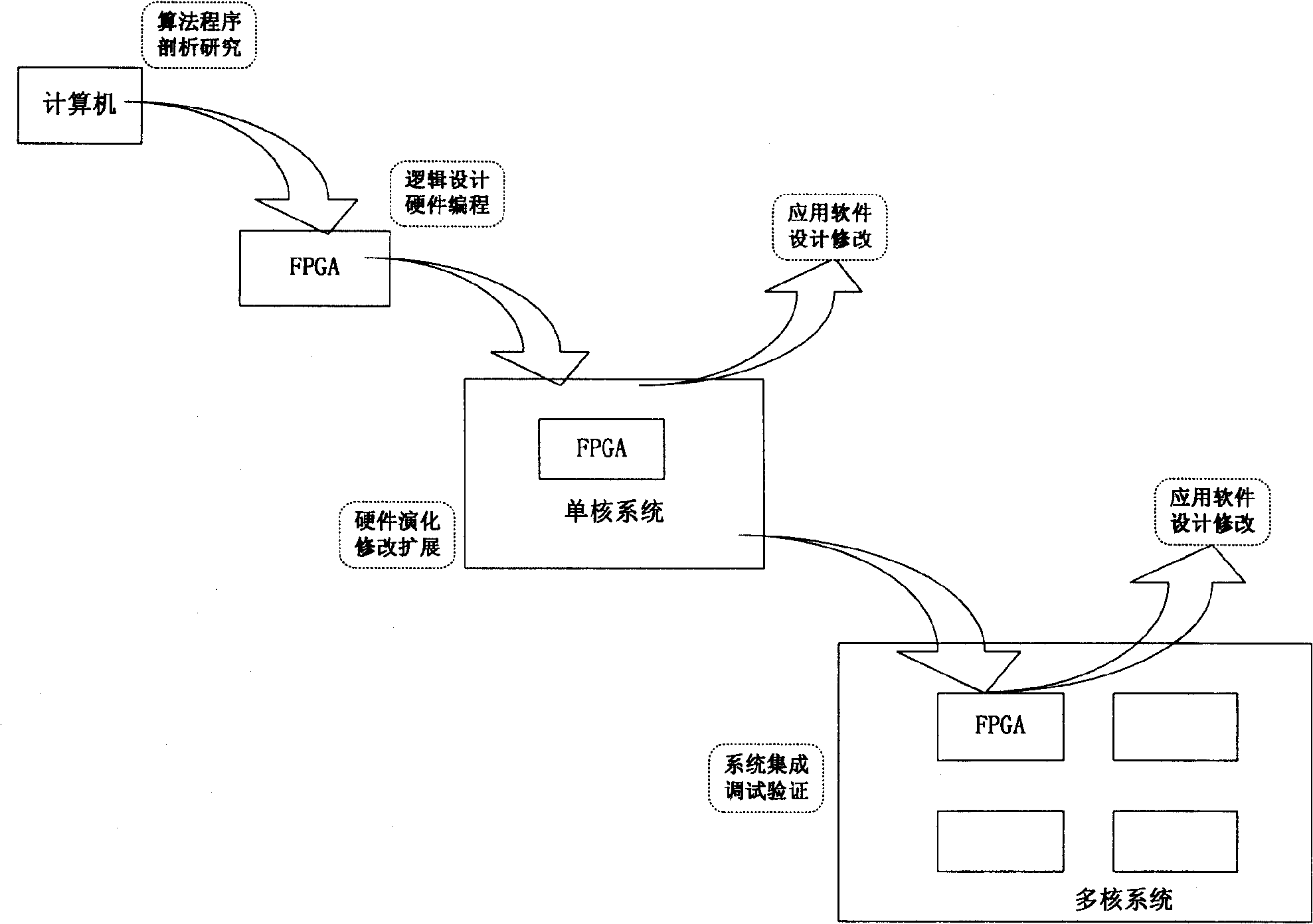

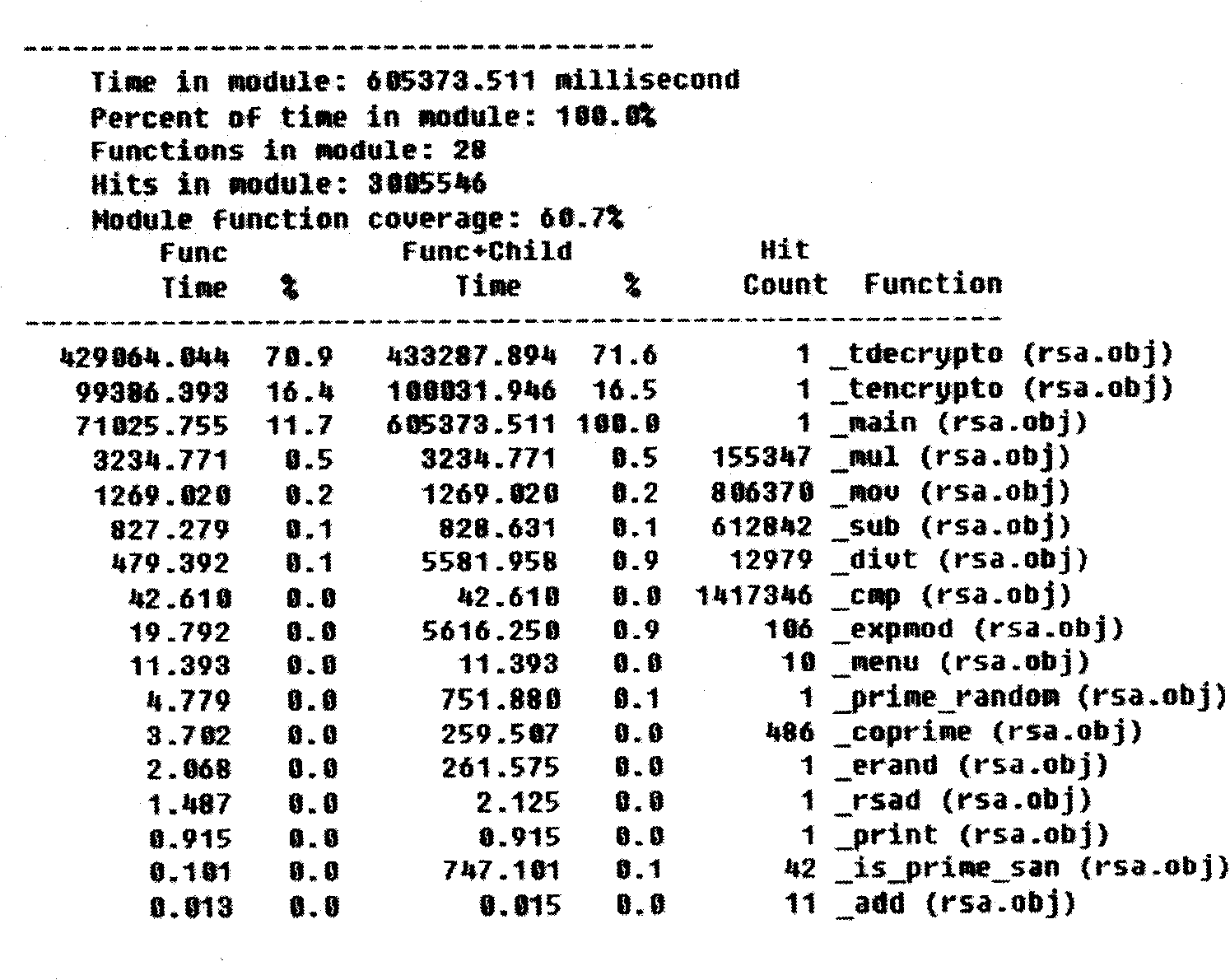

Software and hardware cooperating design method for arithmetic acceleration

Inactive · CN101493862A · Changing the status quo of secular stagnation · Improve compatibility · Special data processing applications · Analysis data · System requirements

The invention discloses a software and hardware collaborative design method for algorithm acceleration. The method has six steps: step 1: static analysis of the algorithm and software; step 2: using software analysis tools to carry out dynamic measurement analysis of the software so as to obtain a basic data chart of software operation; step 3: designing the overall structure and functions of a multi-core hardware system by combining the system requirements, the algorithm analysis and the software measurement data; step 4: using appropriate modeling tools (RML) to describe the whole system; step 5: constructing a function process abstract chart GCG (a function call chart including run-time parameters) on the basis of step 2 and examining the distribution of the software across the multi-core system using the GCG; and step 6: carrying out the software and hardware implementation of a prototype system according to the proposal obtained from step 5 and evaluating the results. The method has good compatibility, addresses the urgent demand for multi-core system-on-chip (SOC) design and promotes the improvement of multi-core design tools. The method has very high utility value and a promising application prospect.

Owner:BEIHANG UNIV

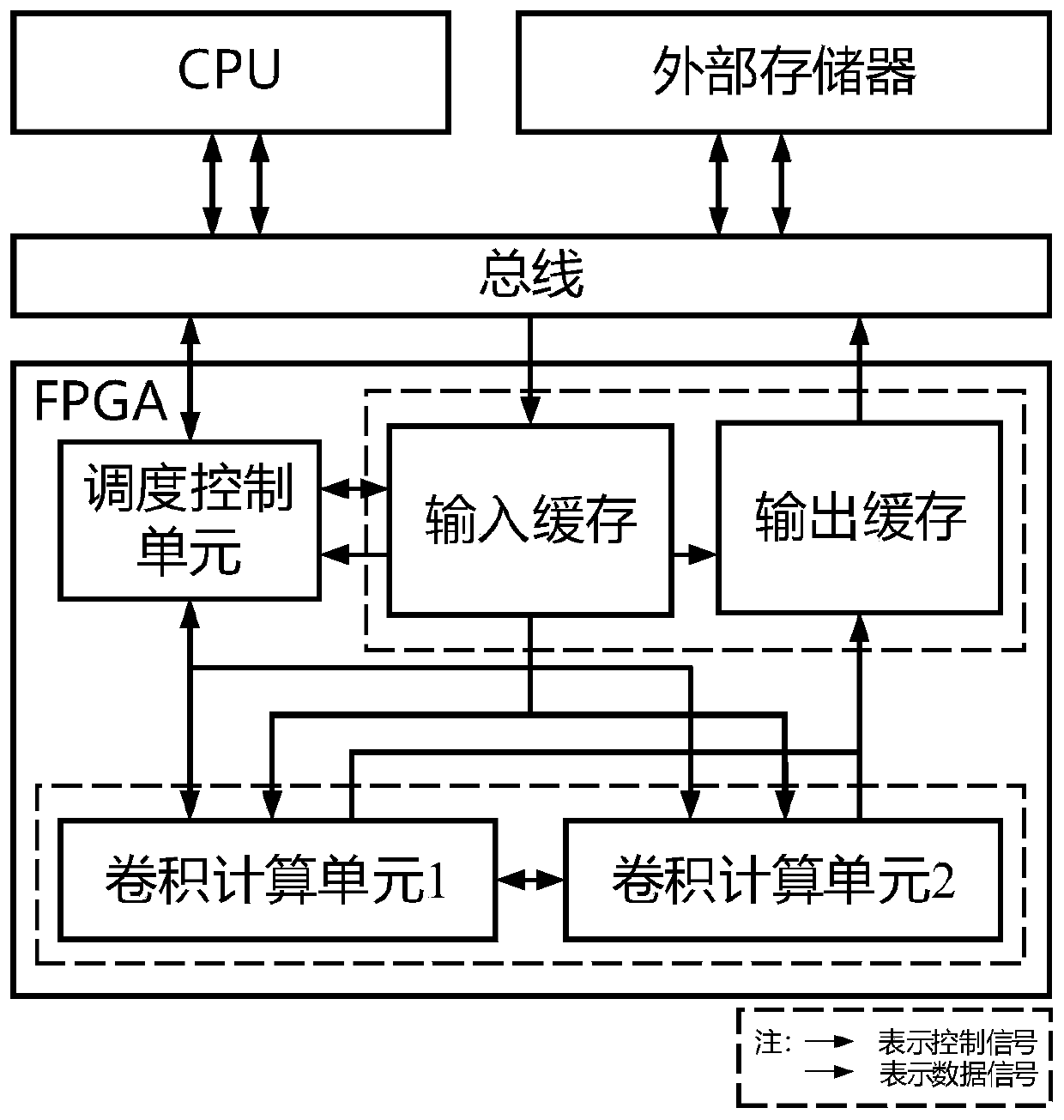

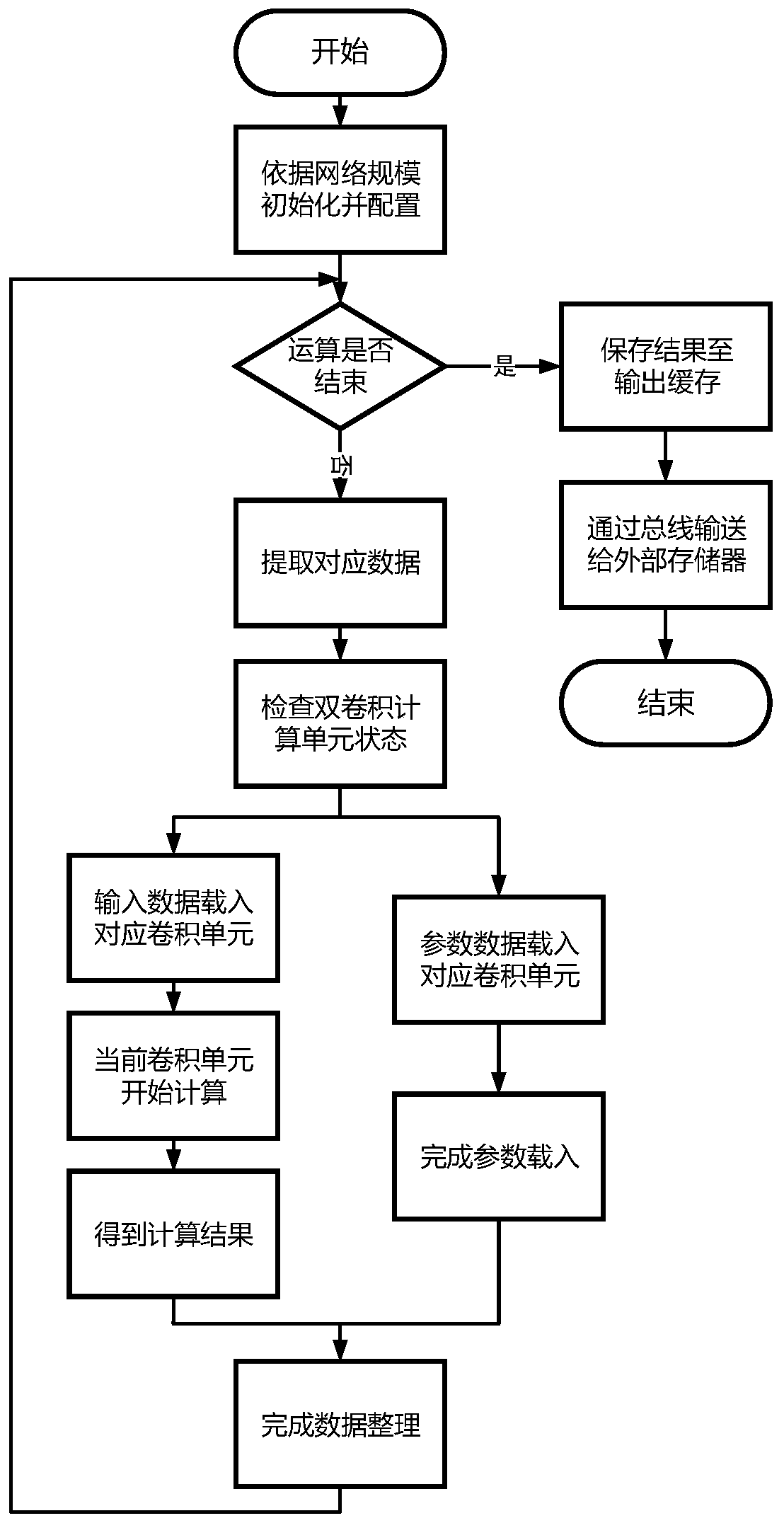

Heterogeneous neural network calculation accelerator design method based on FPGA

Active · CN110991632A · Versatility · Reduce waste · Energy efficient computing · Physical realisation · External storage · Algorithm

The invention belongs to the technical field of computers. The invention provides an FPGA-based heterogeneous neural network calculation accelerator design method, which is suitable for large-scale deep neural network algorithm acceleration. The method comprises the following steps: a CPU reads the relevant parameters of a neural network and dynamically configures an external memory and the convolution calculation units according to the obtained information; the external memory stores the parameters and input data, which are loaded into the corresponding positions of an input cache through a bus; the parameters are loaded alternately into the two convolution calculation units, so that while parameters are being loaded into one unit the other unit performs calculation, and this alternation repeats until all operations of the whole convolutional neural network are completed; and the final output results are stored in an output cache to wait for the external memory to access them. According to the method, the FPGA is used for combining the convolutional neural network calculation units, so the computing rate of the platform can be increased while resources are saved.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
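The alternating load/compute pattern described in the abstract above is commonly called ping-pong (double) buffering. The sketch below only simulates the resulting schedule; it assumes, for illustration, that loading and computing one layer each take one equal time slot, and `ping_pong_schedule` is a hypothetical name.

```python
def ping_pong_schedule(num_layers):
    """Return one (load, compute) pair per time slot for two units (0 and 1).

    Each entry is either None or a (unit, layer) tuple. Assumes loading and
    computing a layer each occupy exactly one slot, which is a simplification.
    """
    schedule = []
    for slot in range(num_layers + 1):
        # Load layer `slot` into the unit that is not currently computing.
        load = (slot % 2, slot) if slot < num_layers else None
        # Compute the layer that was loaded during the previous slot.
        compute = ((slot - 1) % 2, slot - 1) if slot >= 1 else None
        schedule.append((load, compute))
    return schedule


if __name__ == "__main__":
    for slot, (load, compute) in enumerate(ping_pong_schedule(3)):
        print(slot, "load:", load, "compute:", compute)
```

Under these assumptions, N layers finish in N + 1 slots instead of the 2N slots a strictly sequential load-then-compute design would need.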

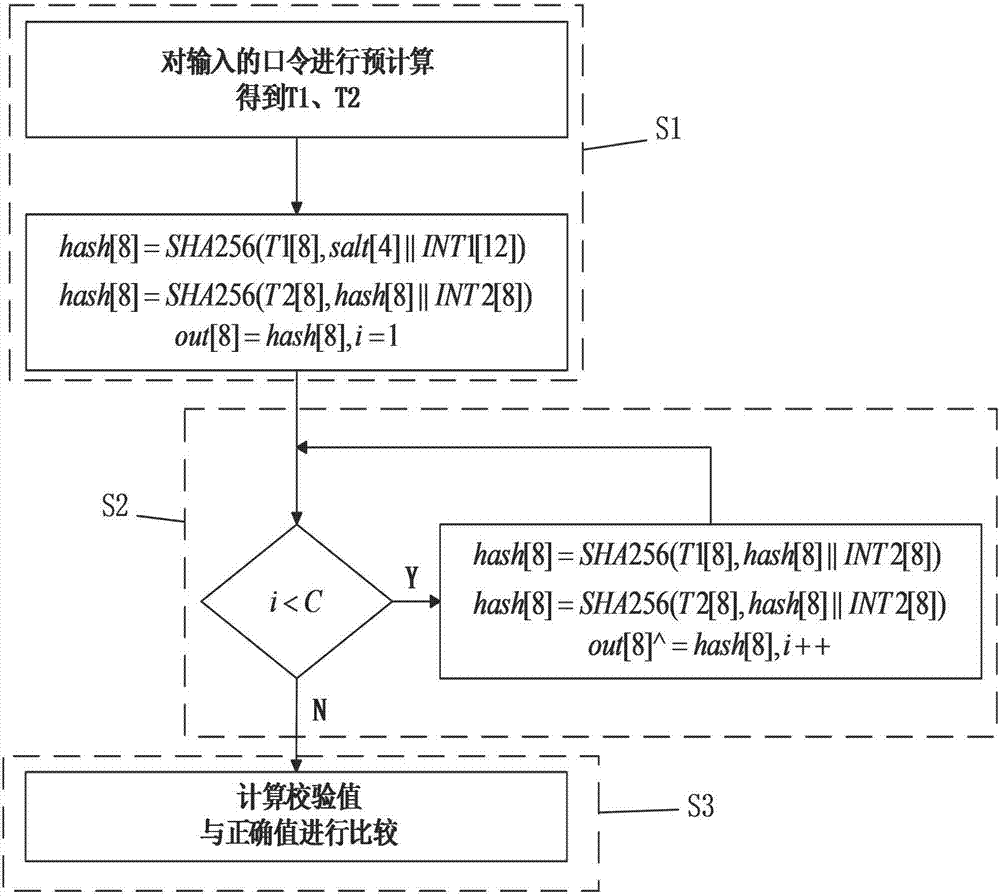

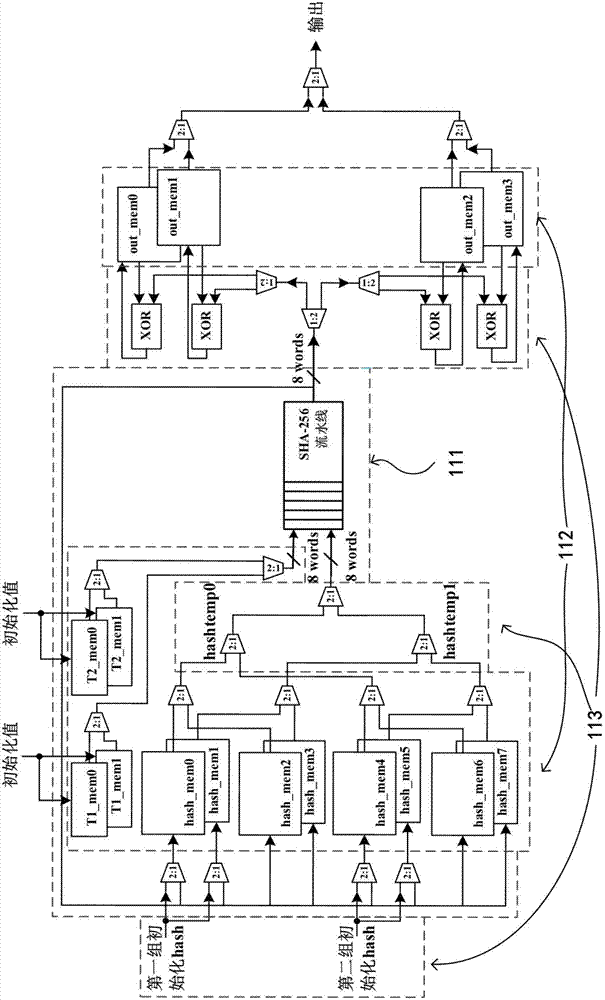

PBKDF2 cryptographic algorithm accelerating method and used device

Active · CN107135078A · Improve abstract ability · Improve reusability · Key distribution for secure communication · User identity/authority verification · Resource utilization · Bus interface

The invention discloses a PBKDF2 cryptographic algorithm accelerating device. The device comprises a CPU+FPGA (Field-Programmable Gate Array) heterogeneous system composed of an FPGA and a general-purpose CPU. The invention further provides a PBKDF2 cryptographic algorithm accelerating method. The method comprises the following steps: 1) initial: the pre-computation part and the part of the PBKDF2 algorithm preceding the loop body are executed on the CPU, and the computed result is transmitted to the FPGA via a bus interface; 2) loop: the compute-intensive loop body of the PBKDF2 algorithm is placed on the FPGA, optimization techniques are used to improve the acceleration effect and resource utilization efficiency on the FPGA, and the computed result is transmitted to the CPU via the bus interface; and 3) check: the result data produced by the FPGA-accelerated computation are read, the results are summarized, and the check value is computed and verified.

Owner:北京骏戎嘉速科技有限公司
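The initial / loop / check phases in the abstract above map naturally onto the structure of PBKDF2 itself (RFC 8018). The sketch below runs all three phases in software for a single output block. It assumes HMAC-SHA256 as the pseudorandom function, which the abstract does not specify, and the function names are illustrative; the comments mark where a CPU/FPGA split could plausibly fall.

```python
import hashlib
import hmac
import struct


def pbkdf2_block(password, salt, iterations, block_index=1):
    """Compute one PBKDF2 output block T_i with HMAC-SHA256 (assumed PRF)."""
    # 1) initial (CPU side in the abstract): key the HMAC once and form U_1.
    keyed = hmac.new(password, digestmod=hashlib.sha256)

    def prf(message):
        mac = keyed.copy()  # reuse the keyed state instead of re-keying
        mac.update(message)
        return mac.digest()

    u = prf(salt + struct.pack(">I", block_index))
    acc = int.from_bytes(u, "big")

    # 2) loop (the compute-intensive part the abstract places on the FPGA):
    #    U_j = PRF(U_{j-1}), T_i = U_1 xor U_2 xor ... xor U_c.
    for _ in range(iterations - 1):
        u = prf(u)
        acc ^= int.from_bytes(u, "big")
    return acc.to_bytes(len(u), "big")


def check(password, salt, iterations, expected):
    """3) check: compare the derived block with an expected value."""
    derived = pbkdf2_block(password, salt, iterations)
    return hmac.compare_digest(derived, expected)
```

For a 32-byte derived key the result can be cross-checked against `hashlib.pbkdf2_hmac("sha256", password, salt, iterations, dklen=32)` from the standard library.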

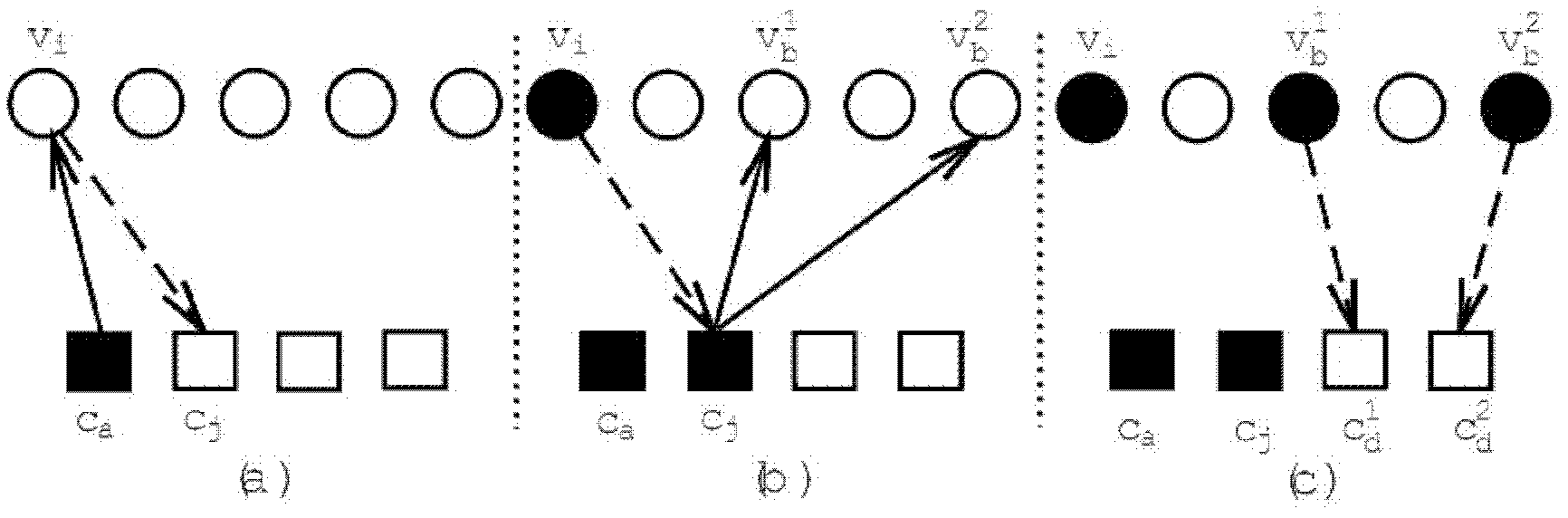

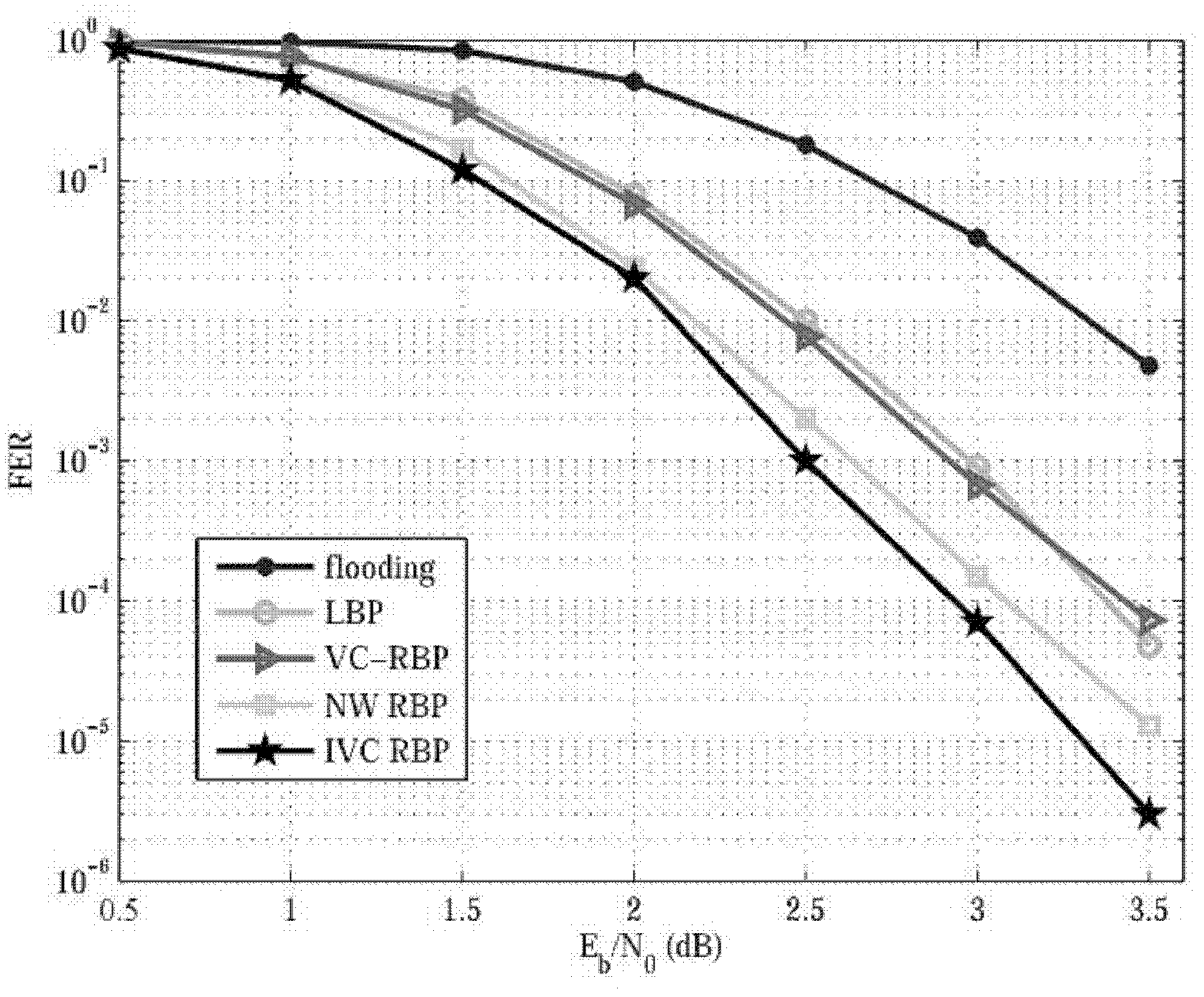

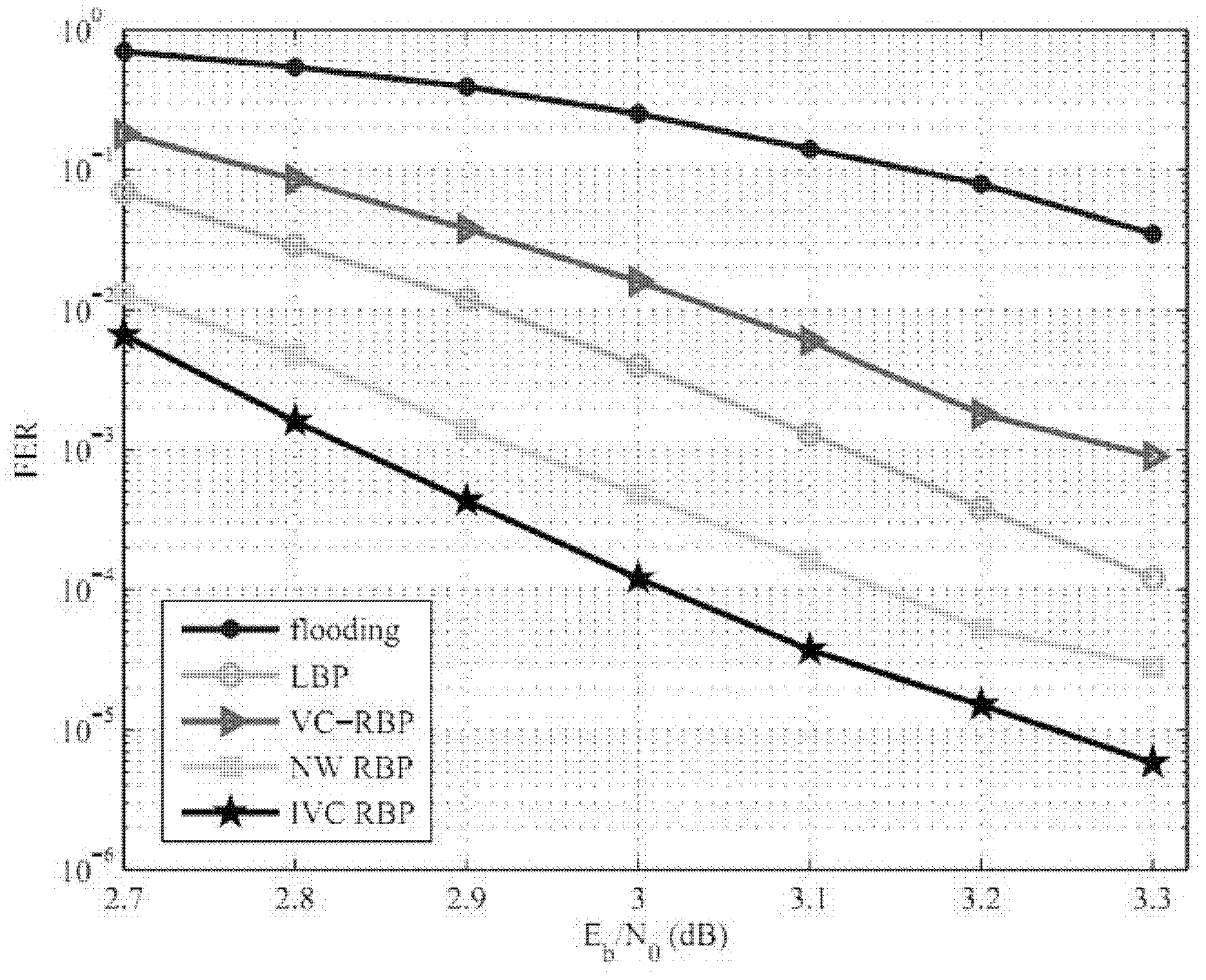

Dynamic asynchronous BP decoding method

Inactive · CN102594365A · Fast convergence · Improve decoding performance · Error correction/detection using multiple parity bits · Decoding methods · Instability

The invention aims at a BP decoding algorithm of a low density parity check (LDPC) code and provides an algorithm for dynamically constructing an asynchronous update sequence judged by the instability of variable nodes, wherein the algorithm is based on the maximum information residual between variable nodes and check nodes which are updated and variable nodes and check nodes not updated. The principle that convergence can be controlled by controlling the difference value between two adjacent calculation results in error estimation through fixed point iterative algorithm is used in the algorithm, message calculation from the variable nodes to the check nodes is selected as an iterated function, and the check function of the check nodes is fully used. The messages required to be optionally updated are better positioned by the algorithm, and a trap set in the LDPC is more quickly overcome, so the iterations required during decoding are reduced, and the aims of quickening convergence and improving the decoding performance through the algorithm are fulfilled.

Owner:SUN YAT SEN UNIV

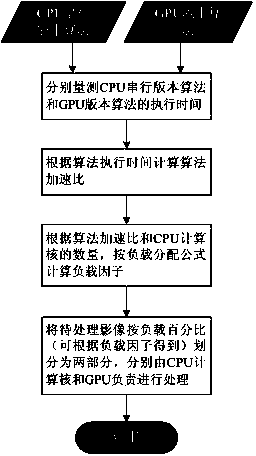

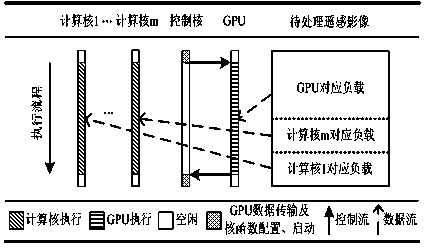

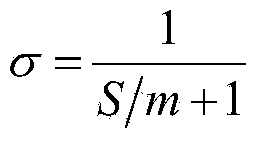

Remote sensing image CPU/GPU (central processing unit/graphics processing unit) co-processing method based on load distribution

Active · CN103632336A · Improve processing efficiency · In line with actual operating conditions · Processor architectures/configuration · Main processing unit · Load distribution

The invention discloses a remote sensing image CPU / GPU (central processing unit / graphics processing unit) co-processing method based on load distribution. The method comprises the steps of measuring the execution time of a CPU serial version algorithm and a GPU version algorithm respectively; calculating the speed-up ratio of the GPU version algorithm relative to the CPU serial version algorithm according to the execution time of the algorithms; calculating load factors according to the algorithm speed-up ratio, the amount of CPU calculation cores and a load distribution formula; acquiring load percentages corresponding to the CPU calculation cores and the GPU according to the load factors, dividing images to be processed into two parts according to the load percentages, and processing the images by the CPU calculation cores and the GPU respectively. By adopting the method, computing resources in a high-performance remote sensing image processing system consisting of a multi-core CPU and the GPU can be fully used, so that the processing efficiency of big data remote sensing images is improved.

Owner:WUHAN UNIV
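The abstract above does not give its load distribution formula, so the sketch below substitutes one simple assumption: each CPU core works at the measured serial speed, and the GPU works `speedup` times faster, so shares are proportional to throughput. The function names and the row-wise image split are illustrative choices, not taken from the patent.

```python
def load_split(t_cpu_serial, t_gpu, cpu_cores):
    """Return (speedup, gpu_share, share_per_cpu_core).

    Assumed model: one CPU core has throughput 1, the GPU has throughput
    equal to the measured speedup, and work is split in proportion.
    """
    speedup = t_cpu_serial / t_gpu
    total_throughput = speedup + cpu_cores
    gpu_share = speedup / total_throughput
    share_per_cpu_core = 1.0 / total_throughput
    return speedup, gpu_share, share_per_cpu_core


def split_rows(image_height, gpu_share):
    """Divide image rows into a GPU part and a CPU part."""
    gpu_rows = round(image_height * gpu_share)
    return gpu_rows, image_height - gpu_rows


if __name__ == "__main__":
    speedup, gpu_share, per_core = load_split(12.0, 2.0, cpu_cores=4)
    print(speedup, gpu_share, per_core)
    print(split_rows(1000, gpu_share))
```

A real system would likely reserve some CPU capacity for driving the GPU and account for transfer time, both of which this model ignores.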

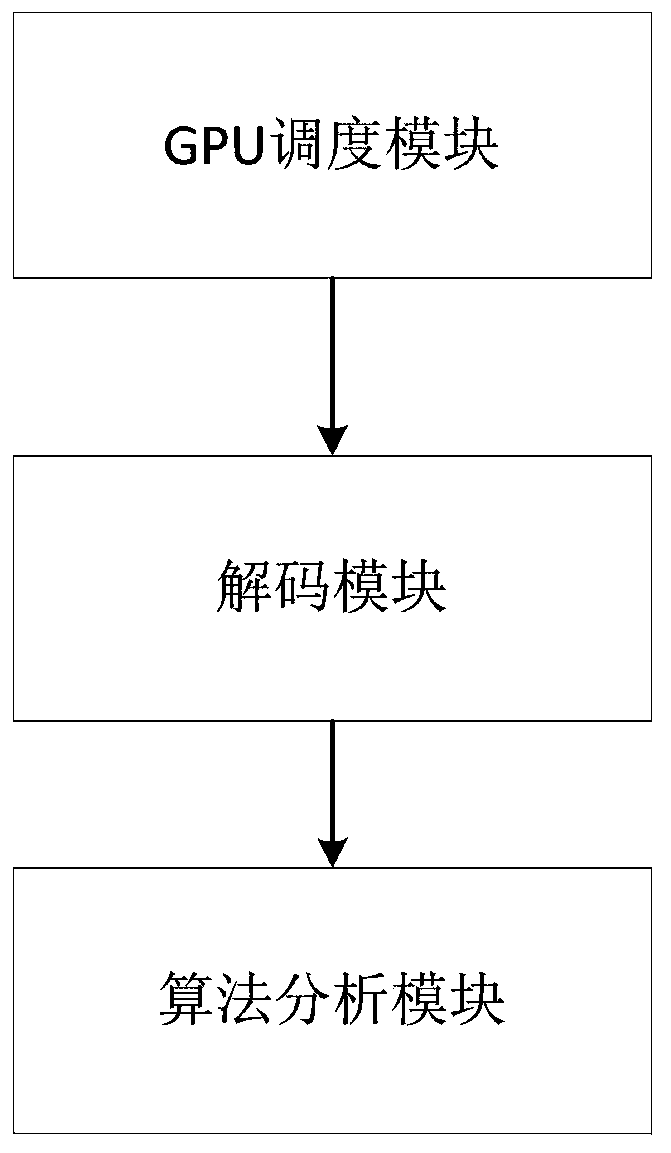

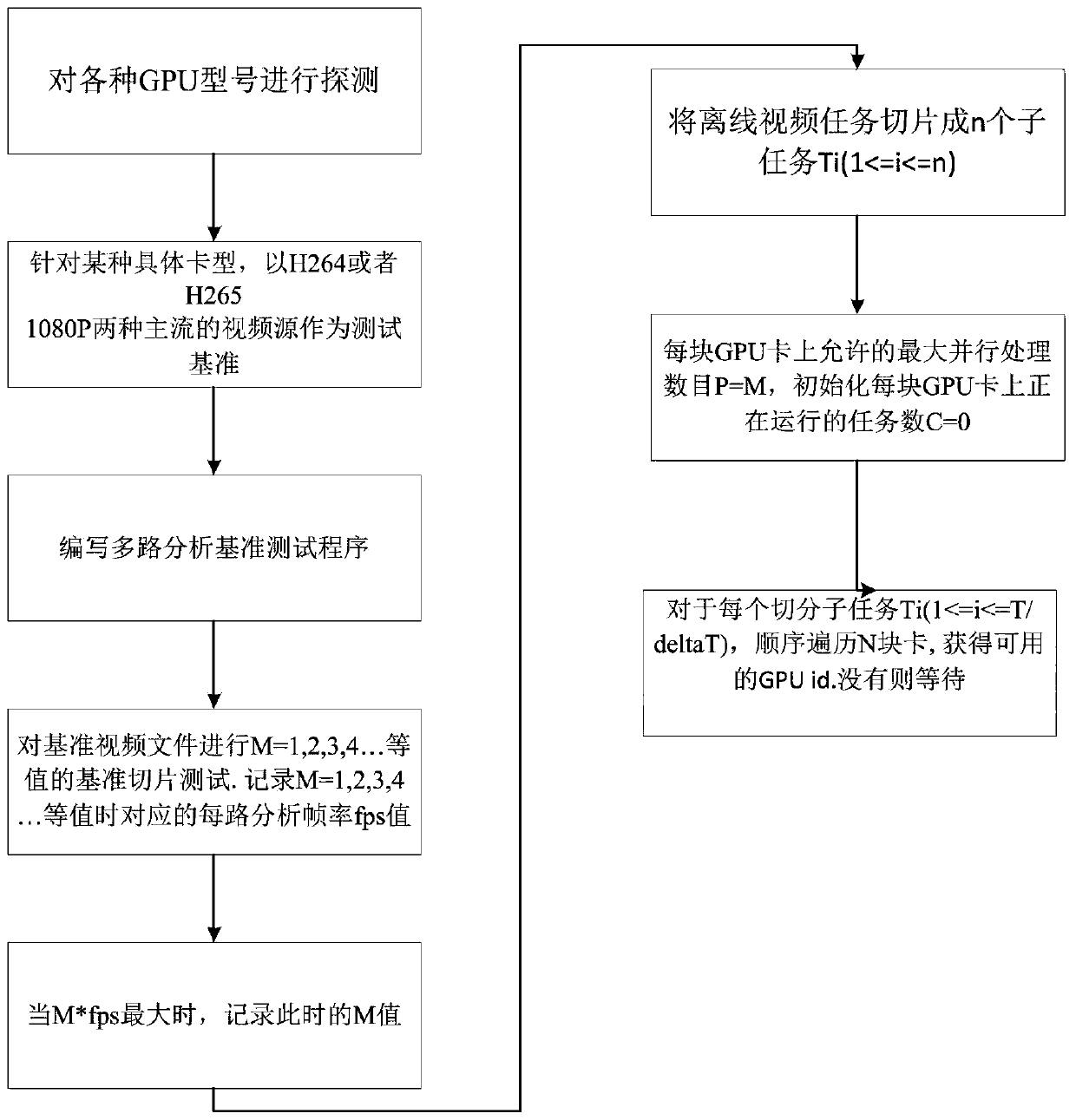

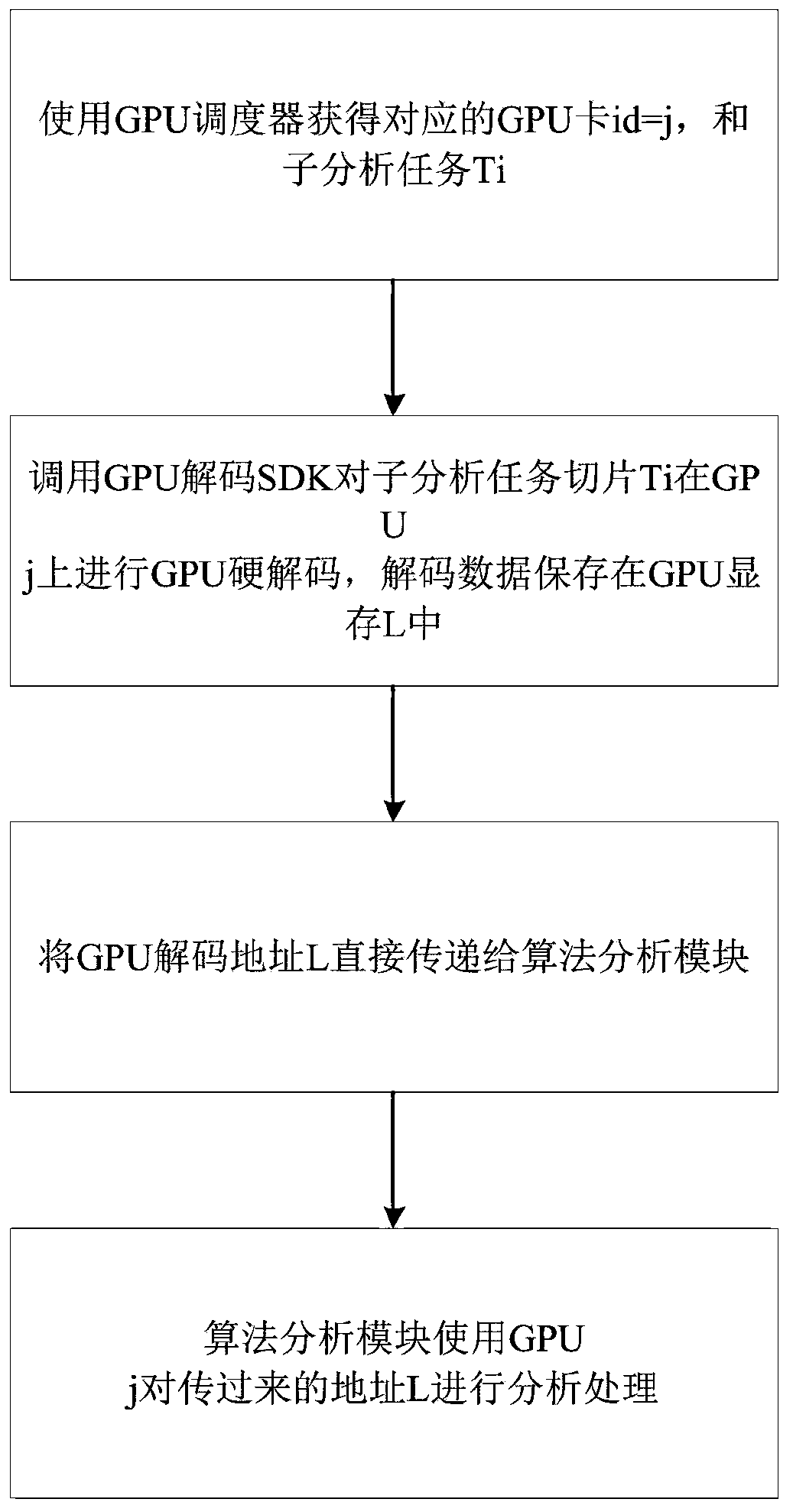

Method, device and equipment for optimizing intelligent video analysis performance

Active · CN109769115A · Lower latency · Improve system performance · Television systems · Digital video signal modification · Video memory · Batch processing

The invention relates to a method, a device and equipment for optimizing intelligent video analysis performance. The method comprises: (1) for acceleration of offline video files, carrying out a baseline pipeline test on a video file and setting an optimal number of file slices; slicing the video file and issuing the slicing tasks to the GPU; calling the GPU to decode the slice files and passing the decoding result back to the algorithm directly through a video memory address, which avoids the performance loss of copying between video memory and main memory, wherein the video analysis algorithm takes the decoded video memory address, calls the GPU for algorithm acceleration and outputs an analysis result; and (2) for real-time video stream analysis, optimizing and expanding the number of streams analyzed: calling the GPU to decode each real-time video stream and passing the decoding result back to the algorithm directly through a video memory address; the algorithm side sets up double caches, stores the decoded data of multiple streams, passes the decoded data to the algorithm for GPU batch processing, and swaps the roles of the two caches after each batch is completed so as to minimize system delay.

Owner:武汉众智数字技术有限公司

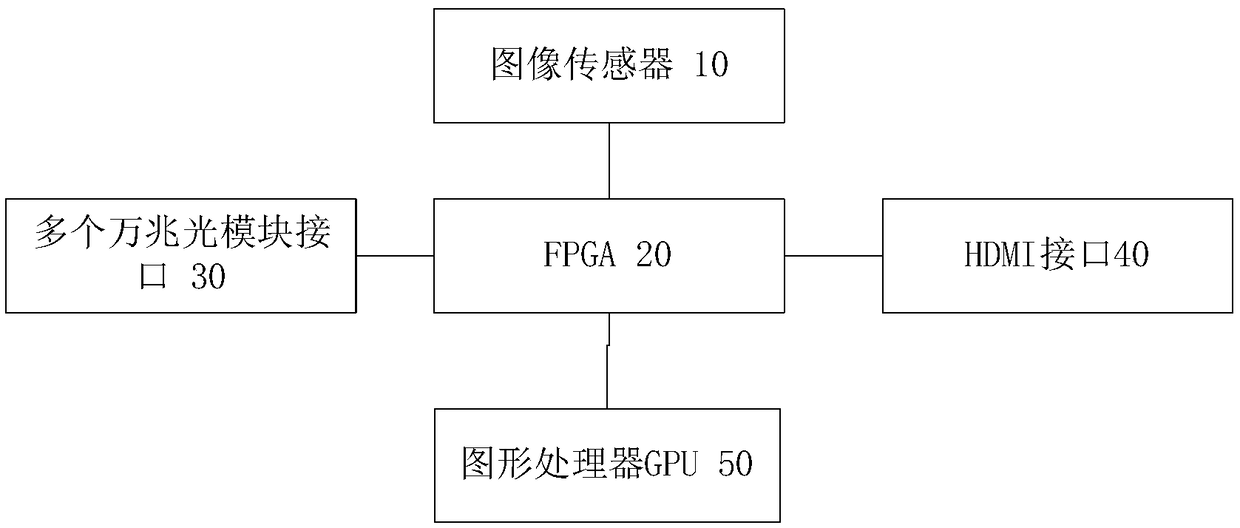

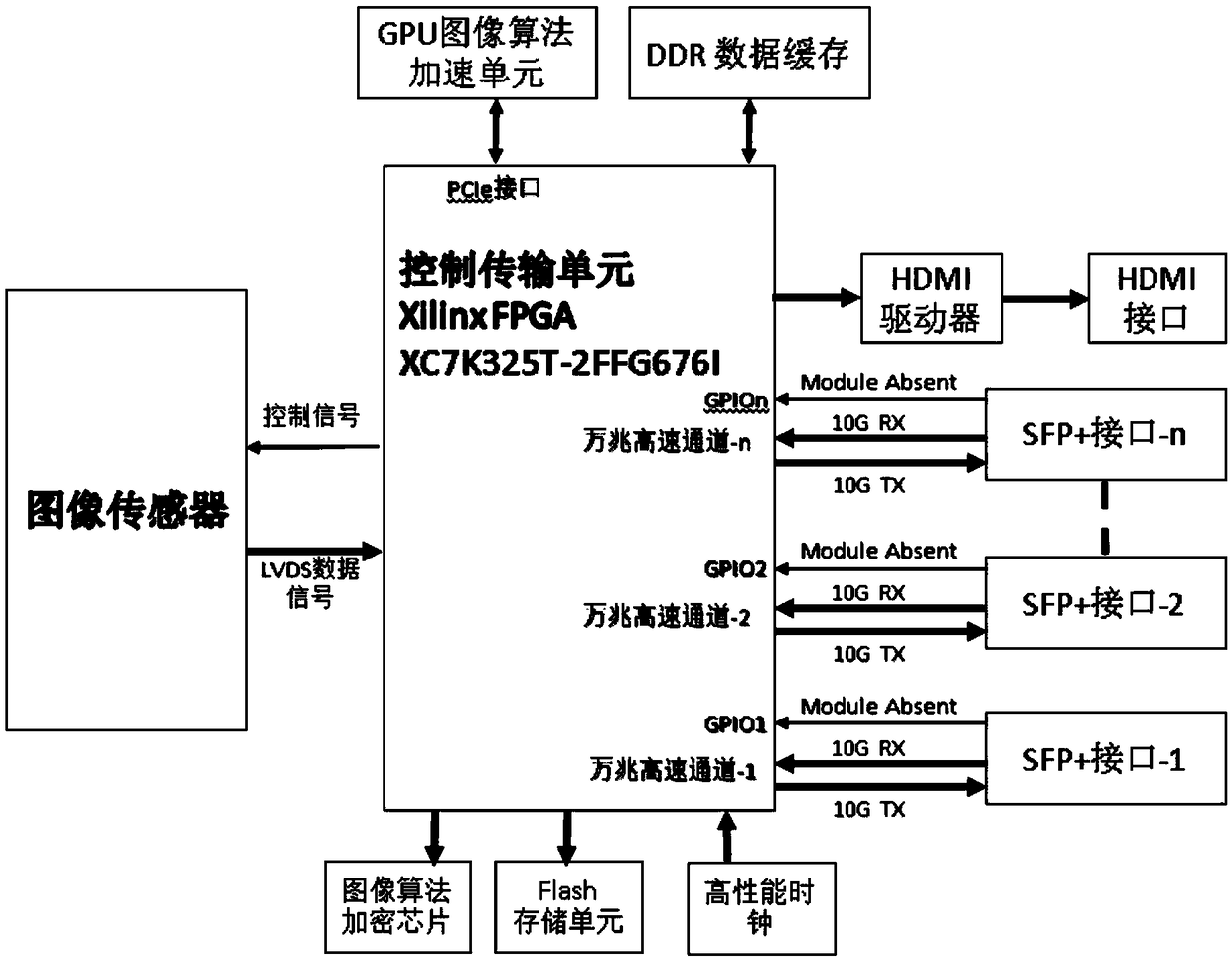

Industrial camera

Inactive · CN108696727A · Independent processing capacity · Easy to achieve smooth expansion · Television system details · Color television details · Gate array · Graphics processing unit

The invention discloses an industrial camera. The industrial camera comprises an image sensor used for acquiring image data; a programmable gate array FPGA used for connecting the image sensor, a plurality of 10 gigabit optical modules, an HDMI display interface and a graphics processing unit GPU, and performing image processing and executing system and data management; a plurality of 10 gigabit optical module interfaces, which are connected with the image sensor through the FPGA and used for transmitting the image data acquired by the image sensor or processed by the FPGA; the graphics processing unit GPU, which is connected with the FPGA and used for performing algorithm acceleration on the image data transmitted by the FPGA; and the HDMI interface which is connected with the FPGA and used for performing image display on the image data processed by the FPGA. According to the industrial camera provided by the invention, the design of 10 gigabit optical interfaces can realize the smooth expansion of multiple interfaces, achieve the requirement of high bandwidth, and use an optical fiber to perform long-distance transmission without relaying, so that the transmission cost is reduced, the connection of multiple hosts to the camera can be met, and high-bandwidth image data can be acquired.

Owner:杭州言曼科技有限公司

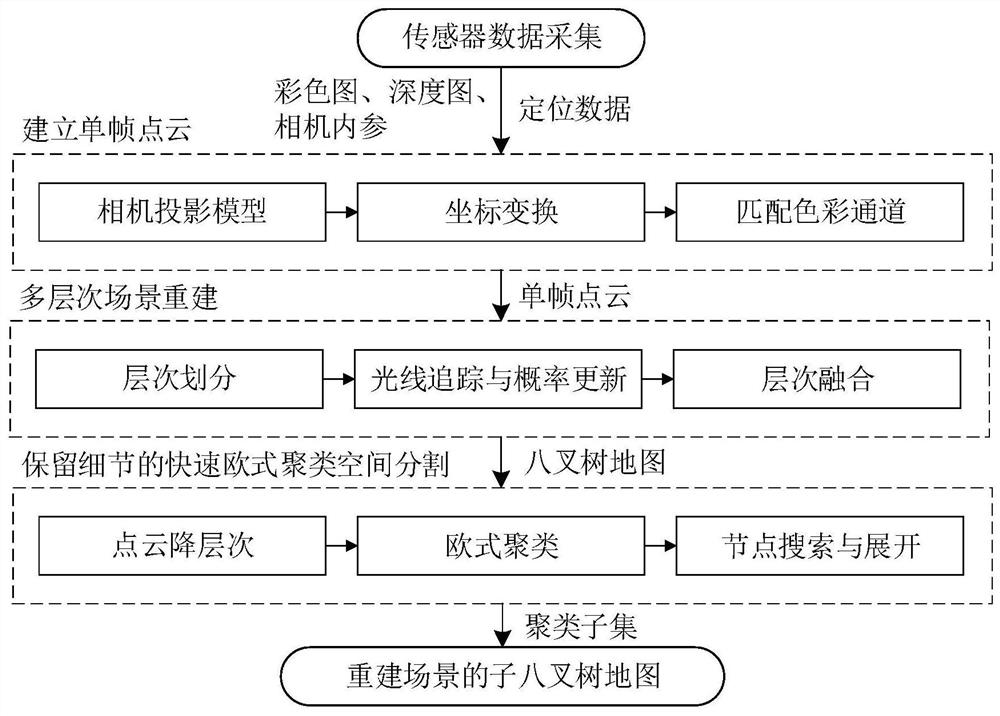

Multi-level scene reconstruction and rapid segmentation method, system and device for narrow space

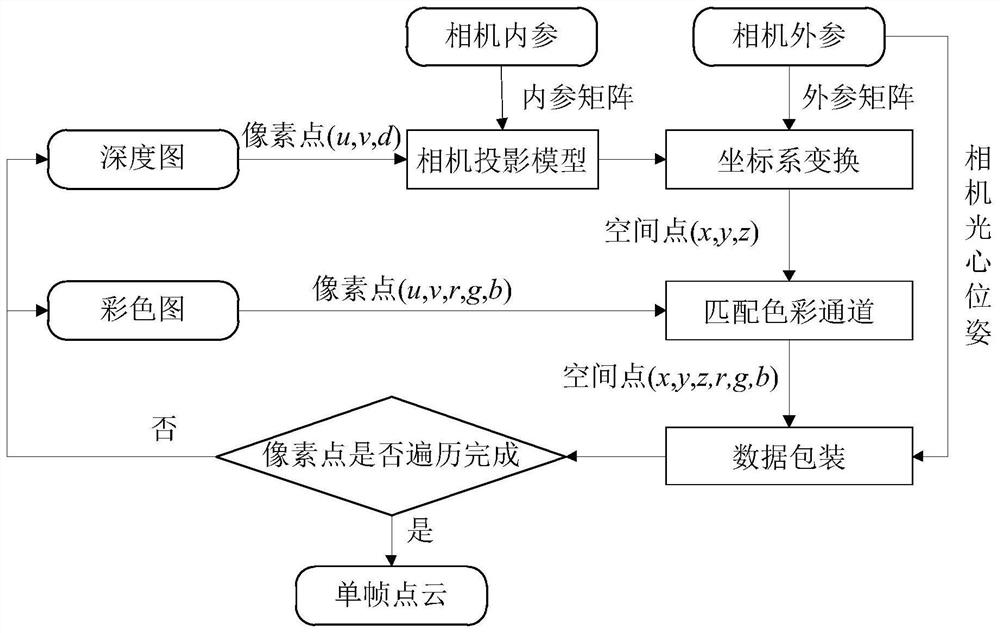

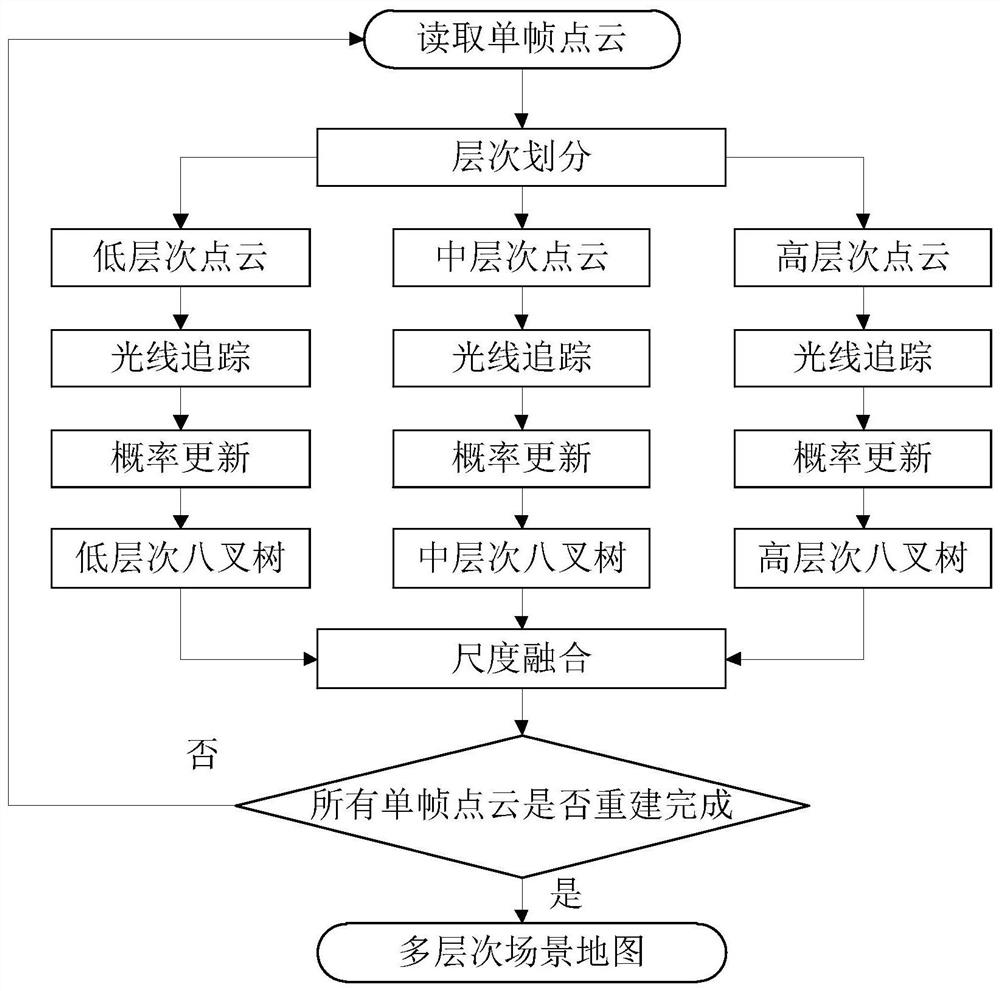

Active · CN112200874A · Improve scene reconstruction accuracy · Improve rebuild speed · Image enhancement · Image analysis · Pattern recognition · Color image

The invention belongs to the field of robot scene reconstruction, particularly relates to a multi-level scene reconstruction and rapid segmentation method, system and device for a narrow space, and aims to solve the problem that reconstruction precision and real-time computation cannot both be achieved in robot scene reconstruction and segmentation in a narrow space. The method comprises the following steps: acquiring a color image, a depth image, camera calibration data and robot spatial position and attitude information; converting the sensor data into a single-frame point cloud through coordinate conversion; dividing the single-frame point cloud by scale, and carrying out ray tracing and probability updating to acquire a multi-level scene map after scale fusion; and performing downsampling twice and upsampling once on the scene map, performing lossless transformation by means of scales, and establishing a plurality of sub-octree maps based on the space segmentation result, thereby realizing multi-level scene reconstruction and rapid segmentation. Dense reconstruction and algorithm acceleration are achieved without losing necessary scene details, which better facilitates application in actual engineering settings.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +2

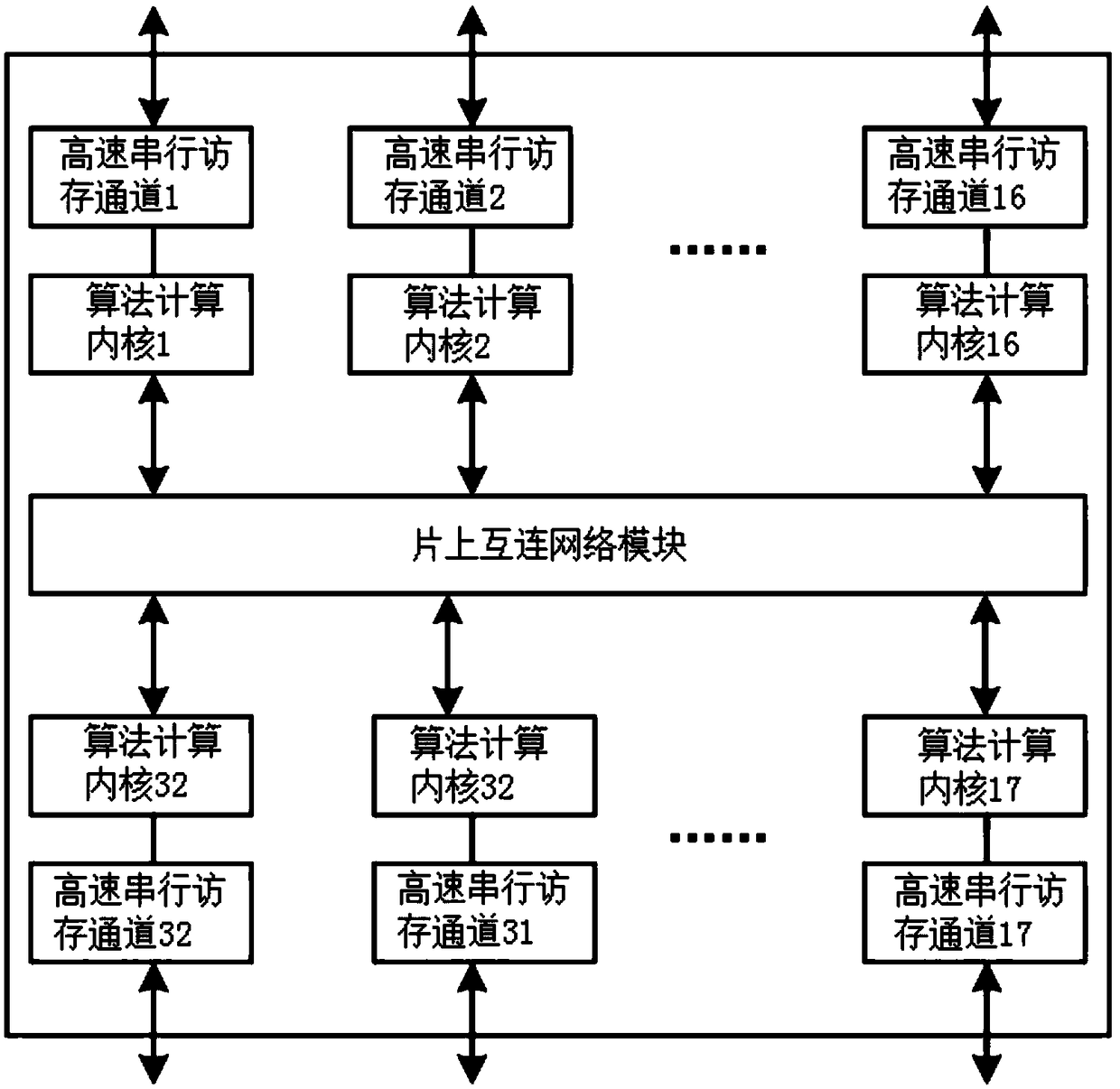

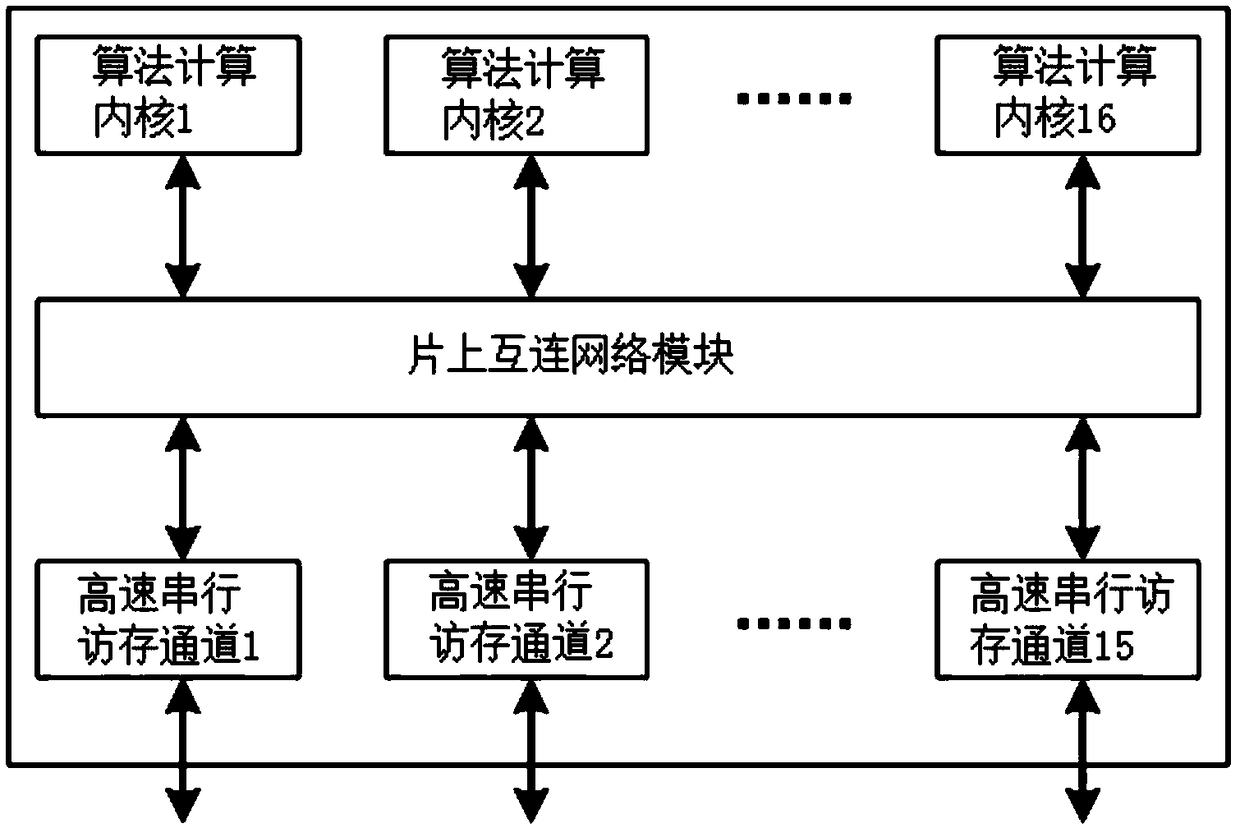

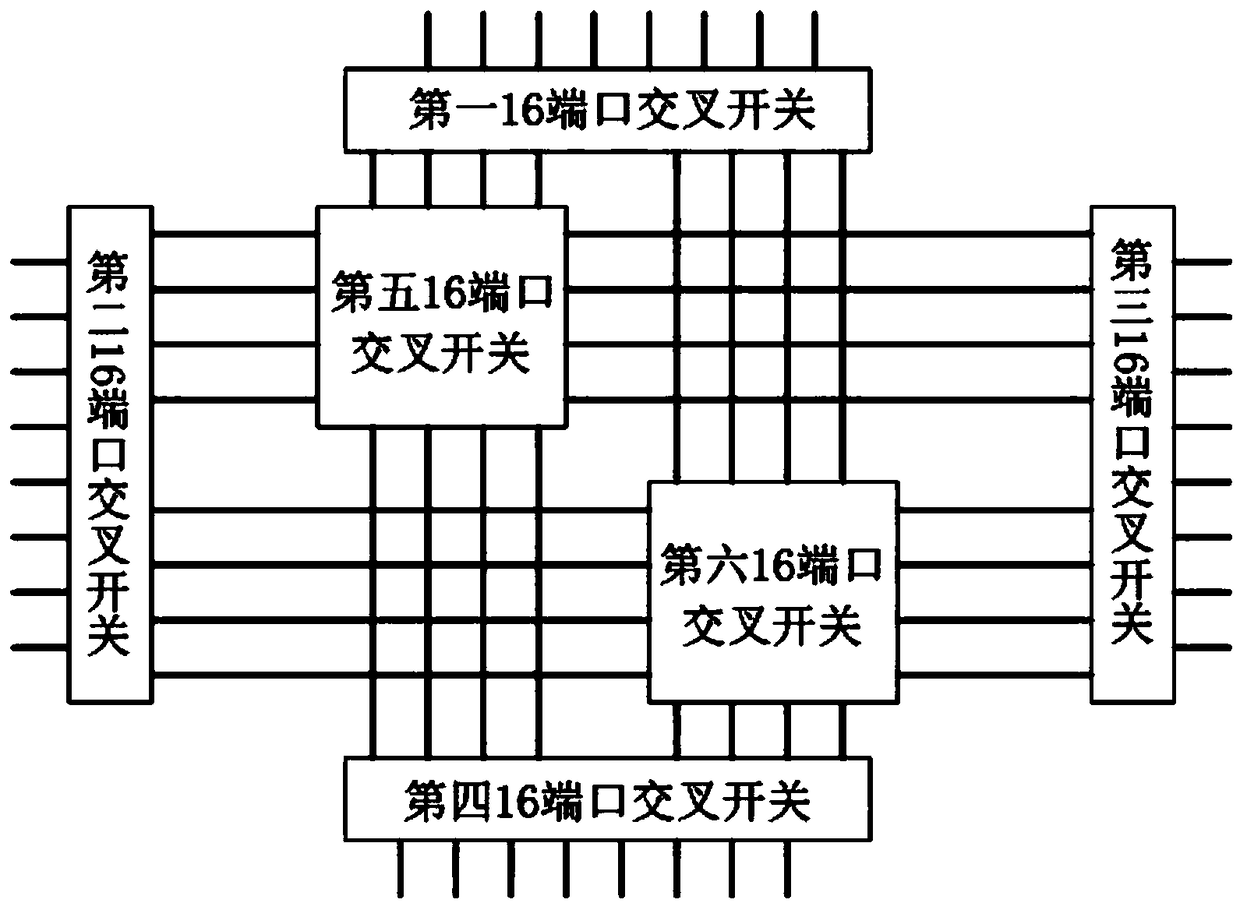

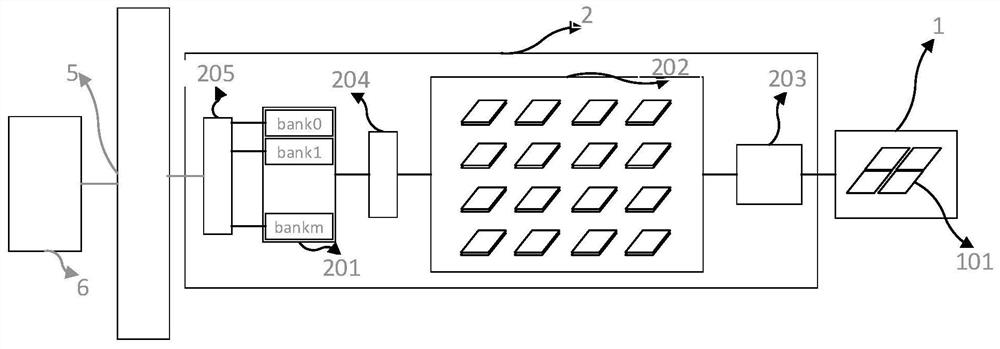

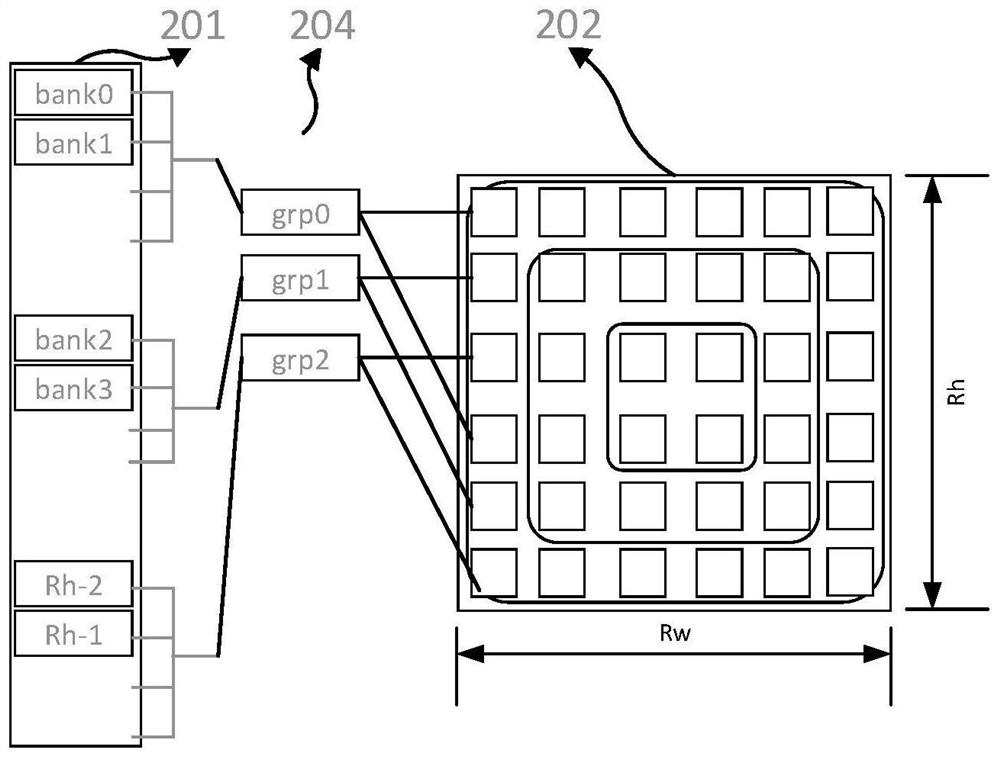

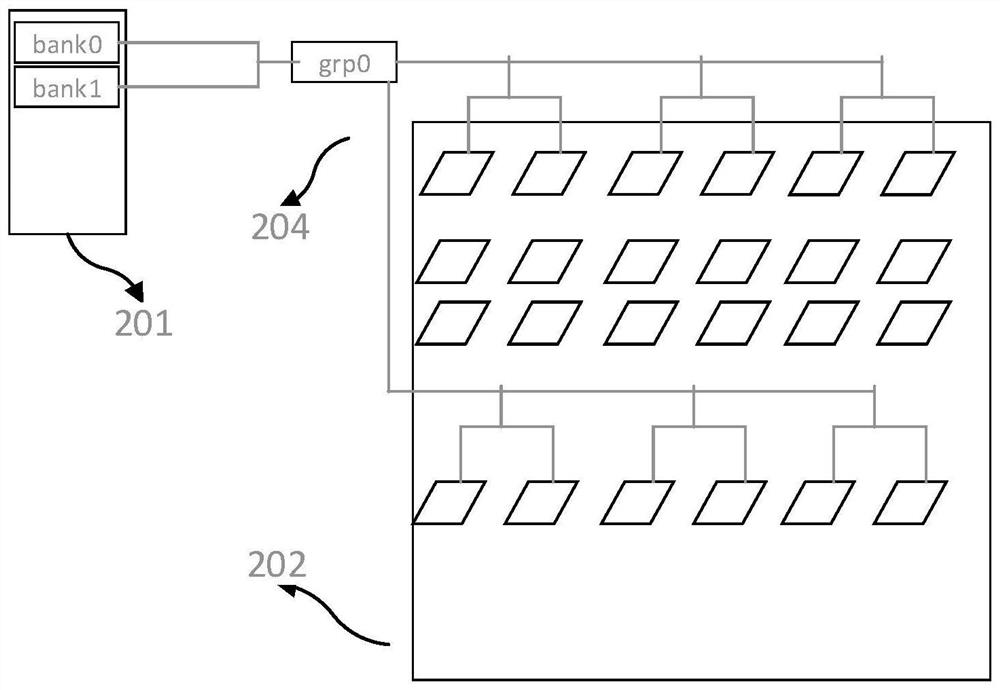

A memory access intensive algorithm acceleration chip with multiple high speed serial memory access channels

Inactive · CN109240980A · Increase the number of · Structural rules · Architecture with single central processing unit · Electric digital data processing · Computer architecture · System architecture design

The invention relates to the field of computer system architecture and integrated circuit design, and discloses a memory-access-intensive algorithm acceleration chip with multiple high-speed serial memory access channels. The chip includes a plurality of algorithm computation cores for performing the data processing operations of the algorithm and a plurality of high-speed serial memory access channels, each connected to an off-chip memory chip. The on-chip interconnection network module can be implemented as a single bus, multiple buses, a ring network, a two-dimensional mesh or a crossbar switch. The number of high-speed serial memory access channels can be flexibly expanded according to the algorithm's processing requirements so as to expand the memory access bandwidth; various address mapping modes are supported, as is direct data transmission between algorithm acceleration chips, which provides better flexibility for whole-system architecture design.

Owner:深圳市安信智控科技有限公司

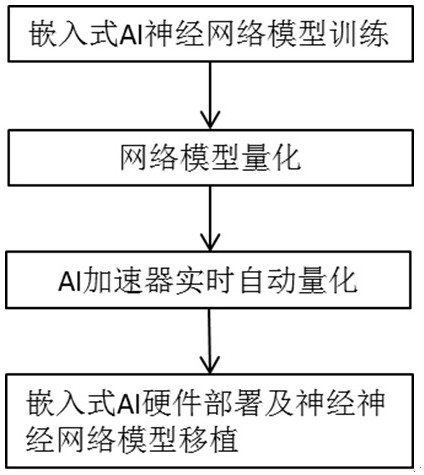

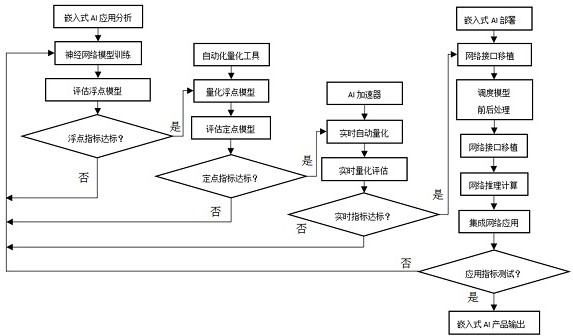

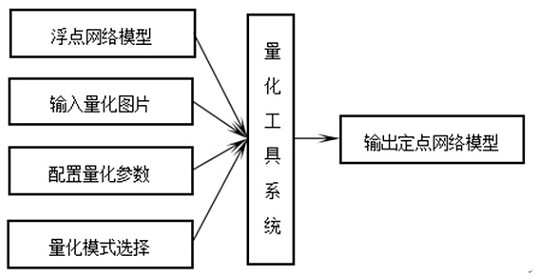

Neural network model real-time automatic quantification method and real-time automatic quantification system

Pending · CN112446491A · Efficient deployment · Fast operation · Digital data processing details · Character and pattern recognition · Embedded technology · Network model

The invention discloses a neural network model real-time automatic quantification method, which is based on an embedded AI accelerator, and comprises the following steps: carrying out embedded AI neural network training at a PC end, establishing a PC end deep learning neural network, and training an input floating point network model of an embedded AI model; quantizing the floating point network model into an embedded end fixed point network model; preprocessing data needing to be quantized, and realizing all acceleration operators of each layer of the model network through a hardware mode; deploying embedded AI hardware of the embedded end and transplanting the neural network model of the embedded end, and transplanting the neural network model of the built AI hardware platform. The invention further discloses a neural network model real-time automatic quantification system. According to the invention, algorithm acceleration is realized based on an embedded AI accelerator hardware mode, the storage occupied space of a neural network model can be reduced, the operation of the neural network model can be accelerated, the computing power of embedded equipment can be improved, the operation power consumption can be reduced, and the effective deployment of the embedded AI technology can be realized.

Owner:SENSLAB INC
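The step of quantizing a floating-point model into a fixed-point one, mentioned in the abstract above, can be illustrated with symmetric per-tensor quantization. This is one common scheme chosen here as an assumption; the abstract does not say which scheme the patent uses, and `quantize` / `dequantize` are illustrative names.

```python
def quantize(weights, bits=8):
    """Symmetric per-tensor quantization of floats to signed integers.

    Returns (quantized_values, scale) so that weight ~= quantized * scale.
    """
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [1.0, -0.37, 0.0, 0.25]
    q, scale = quantize(weights)
    print(q, scale)
    print(dequantize(q, scale))
```

Storing 8-bit integers plus one scale per tensor takes roughly a quarter of the space of 32-bit floats, which is the kind of storage reduction the abstract refers to.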

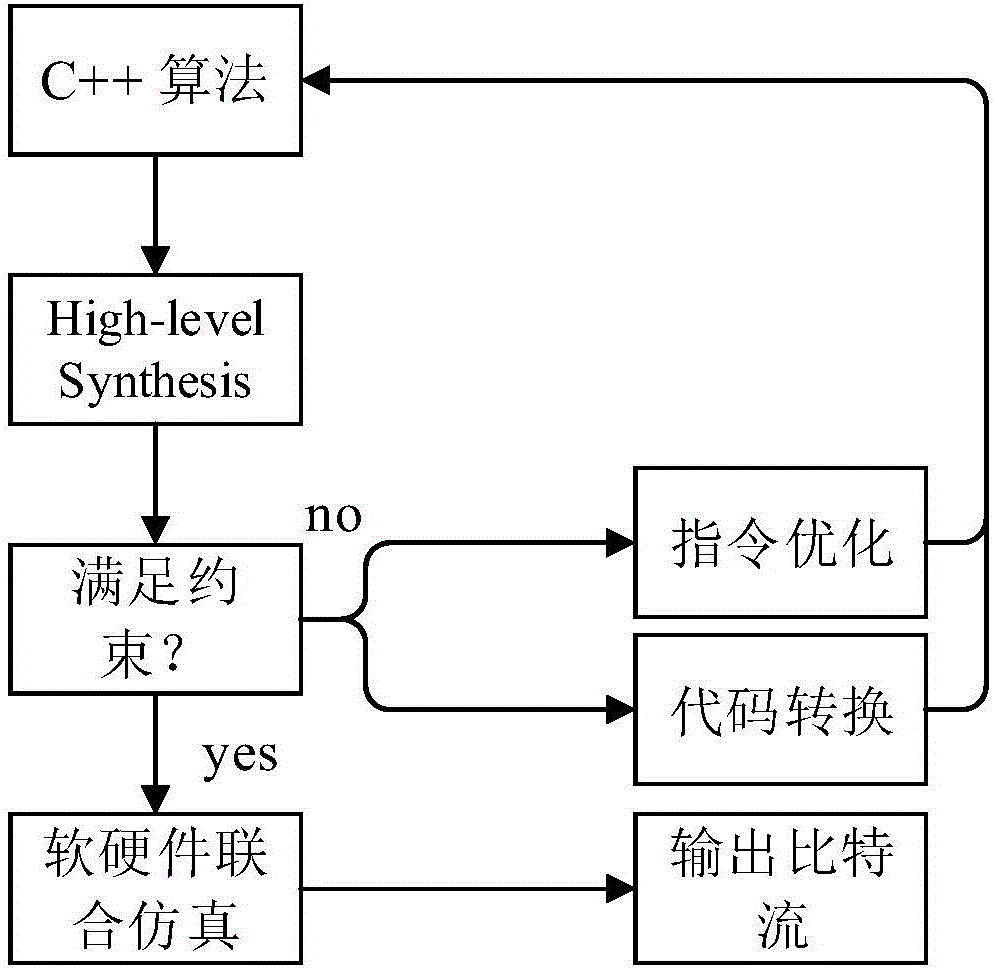

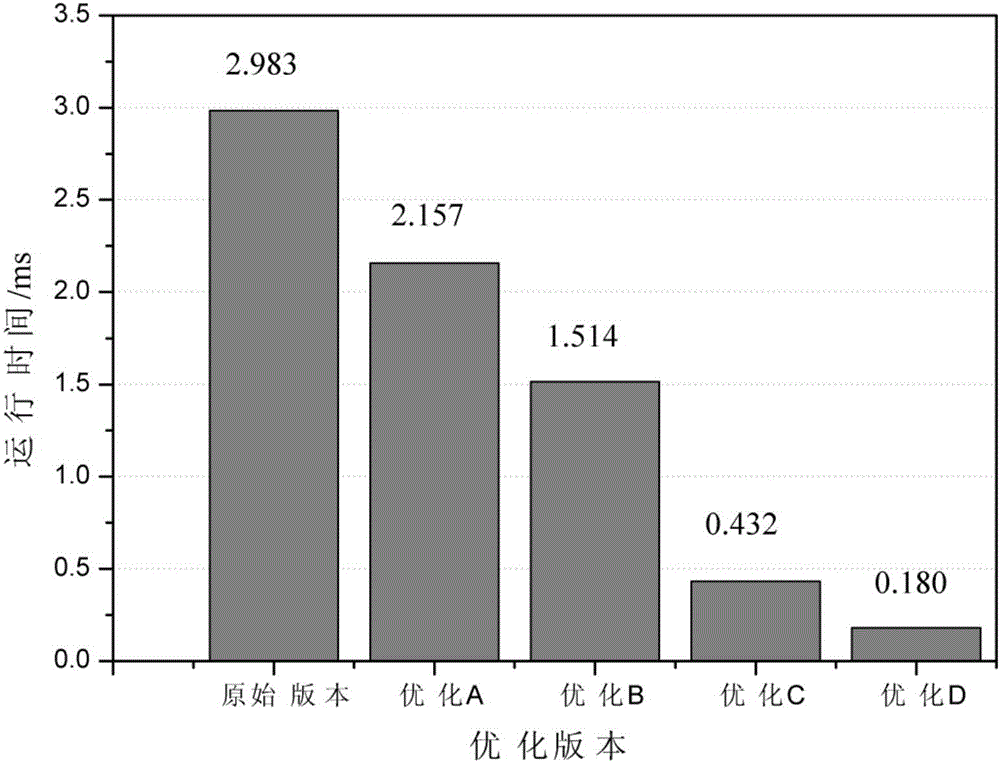

Method for achieving quasi-Newton algorithm acceleration based on high-level synthesis of FPGA

Inactive · CN106775905A · Reduce development difficulty · Improve versatility · Software engineering · Program control · Fpga implementations · Logical part

The invention discloses a method for achieving quasi-Newton algorithm acceleration based on high-level synthesis for an FPGA. The method comprises the following steps: 1, the functions of a quasi-Newton algorithm are analyzed, and the algorithm is divided into its main calculation modules; 2, the high-level languages C and C++ are used to implement the modules from step 1, and the functional correctness of the algorithm is verified; 3, the quasi-Newton algorithm verified in step 2 serves as the input file, a high-level synthesis tool converts the high-level language into RTL, and the generated RTL code is verified; 4, the generated RTL code is built into a bitstream file, and the file is downloaded to the configurable logic of the FPGA. High-level synthesis is used to implement the quasi-Newton algorithm, the algorithm is accelerated on the FPGA, and the difficulty of FPGA development is reduced.

Owner:TIANJIN UNIV

Hardware circuit design and method of data loading device for accelerating calculation of deep convolutional neural network and combining with main memory

Inactive · CN111783933A · Simplify connection complexity · Simplify space complexity · Neural architectures · Physical realisation · Computer hardware · High bandwidth

The invention relates to a hardware circuit design and method of a data loading device combined with a main memory, used to accelerate deep convolutional neural network computation. The device has a specifically designed cache structure comprising: an input cache and its control, in which a macroblock segmentation method is applied to input from the main memory and/or other memories to achieve regional data sharing and tensor data fusion and distribution; a parallel input register array for converting the data segments input into the cache; and a tensor-type data loading unit connected to the output of the input cache and the input of the parallel input register array. The design simplifies the address decoding circuit and saves area and power without reducing data bandwidth. The hardware device and data processing method provided by the invention comprise a transformation method, a macroblock segmentation method and an addressing method for the input data, so that algorithm acceleration can be carried out with limited hardware resources and address management complexity is reduced.

Owner:北京芯启科技有限公司

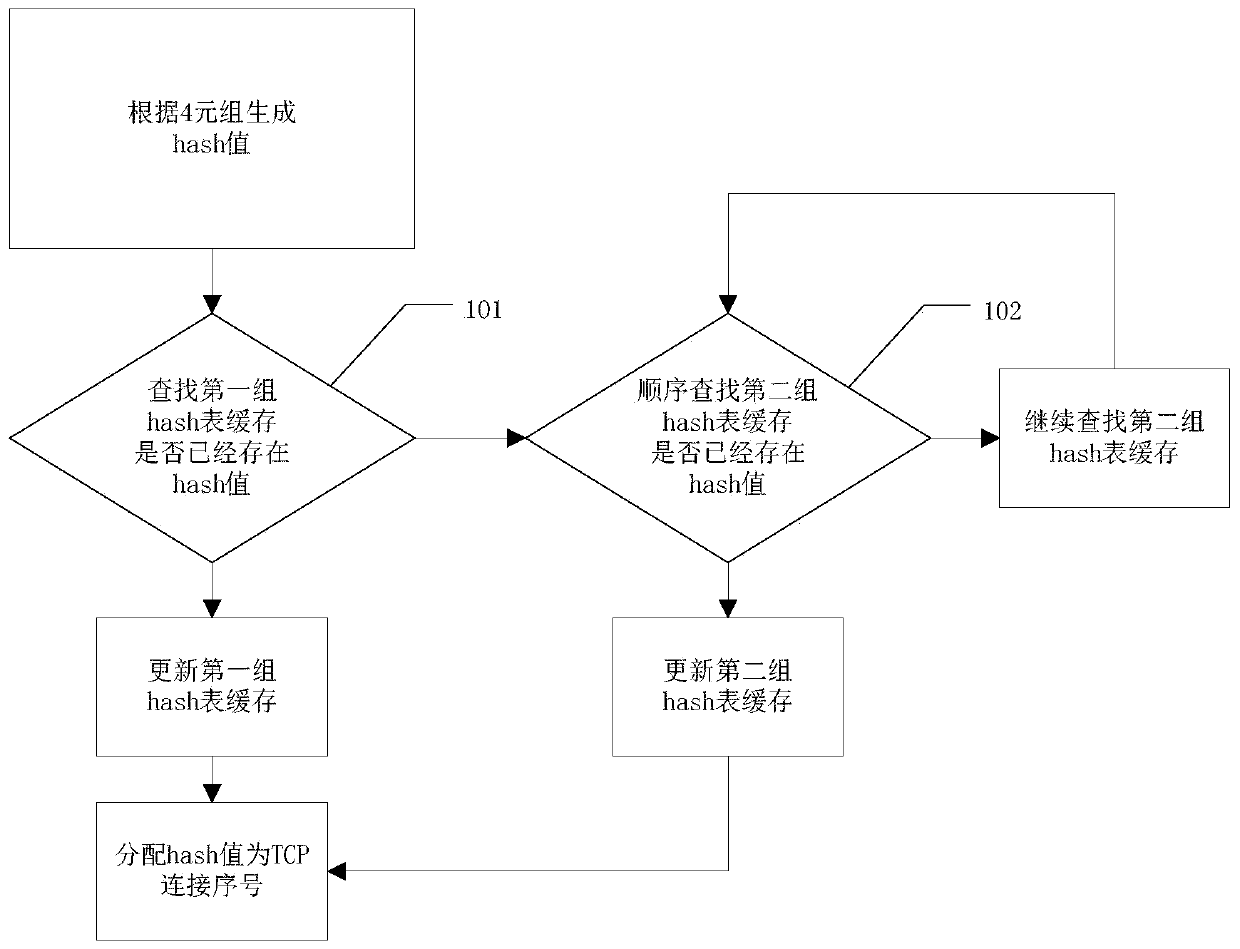

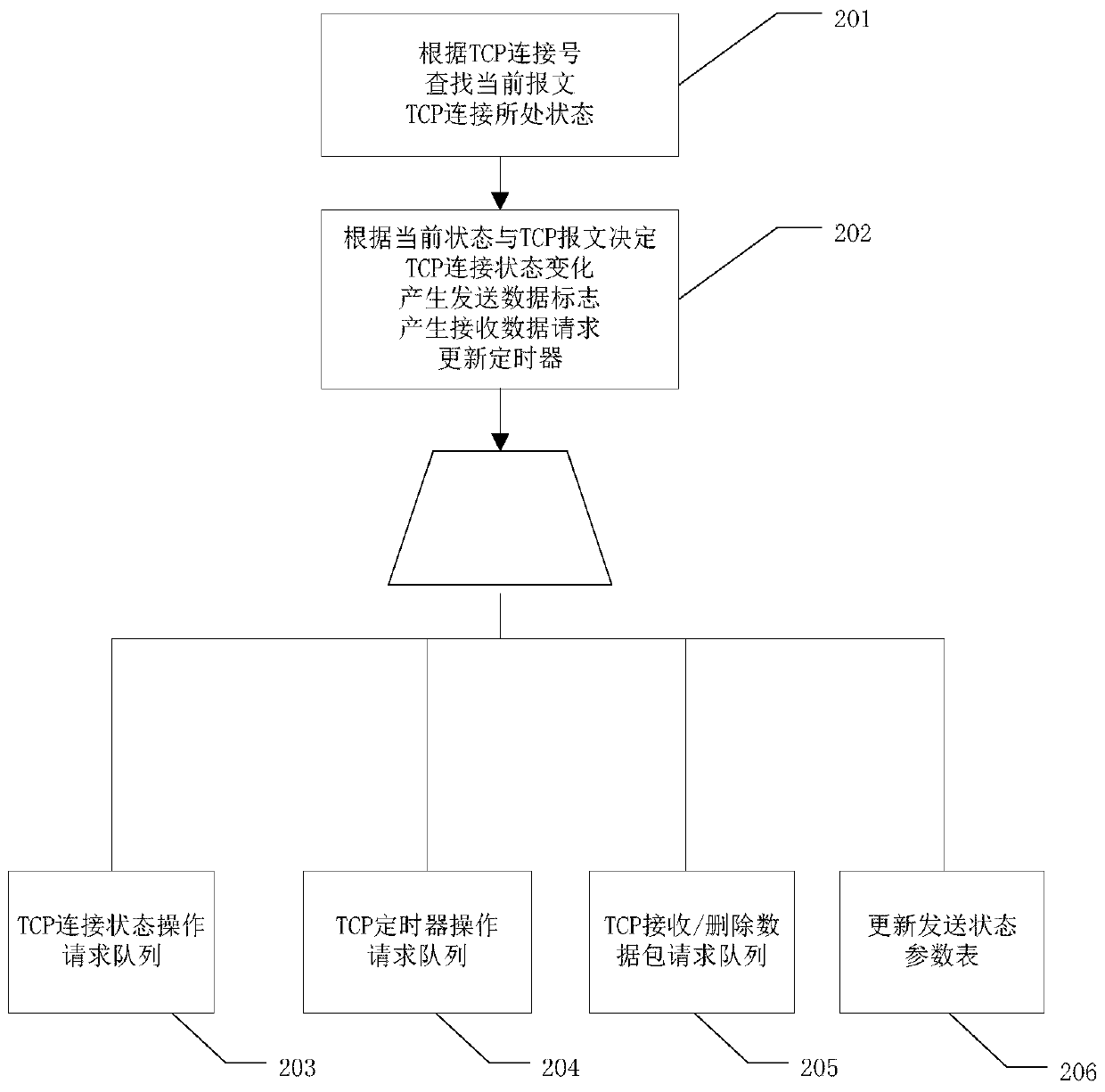

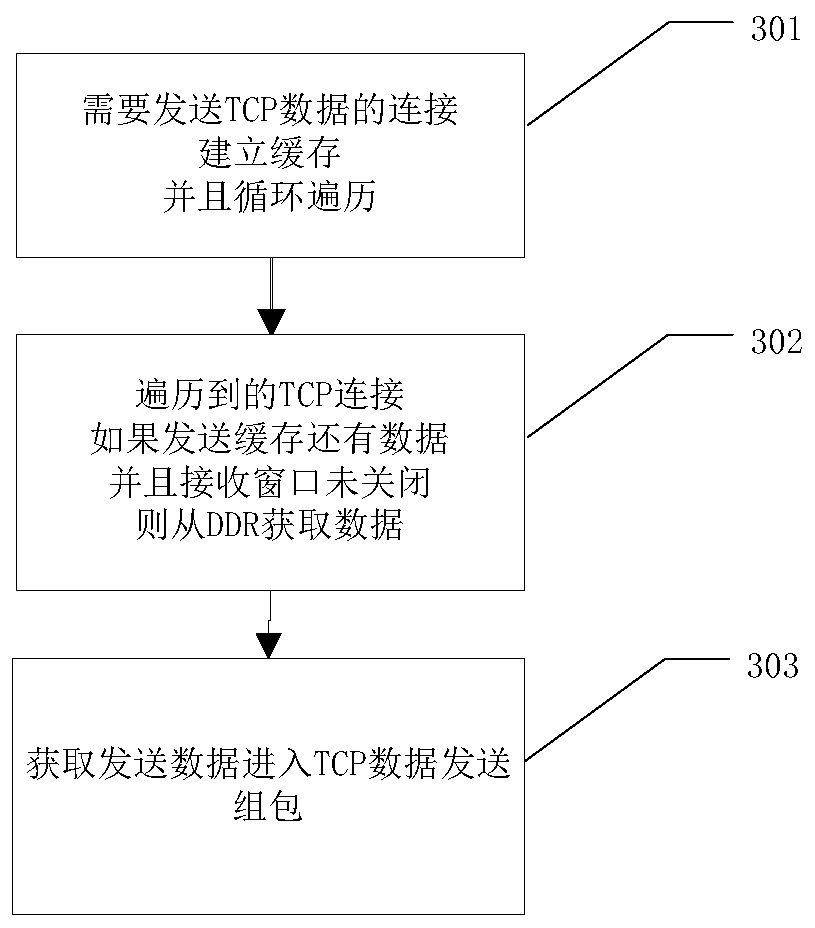

Method and system for realizing TCP (Transmission Control Protocol) full-offload IP (Internet Protocol) cores for multi-connection management

The invention provides a method and a system for realizing TCP (Transmission Control Protocol) full-offload IP (Internet Protocol) cores for multi-connection management. The method comprises a hash value generation step, a connection management step, a sending management step and a timer management step. The IP core realizing TCP offload can be connected to different types of buses to serve as a protocol processor; by means of the IP core, TCP network data can be obtained on FPGA hardware, and network control and data receiving and sending are supported through the data connection algorithm acceleration module, the dynamic reconstruction module and the like.

Owner:EAST CHINA INST OF COMPUTING TECH

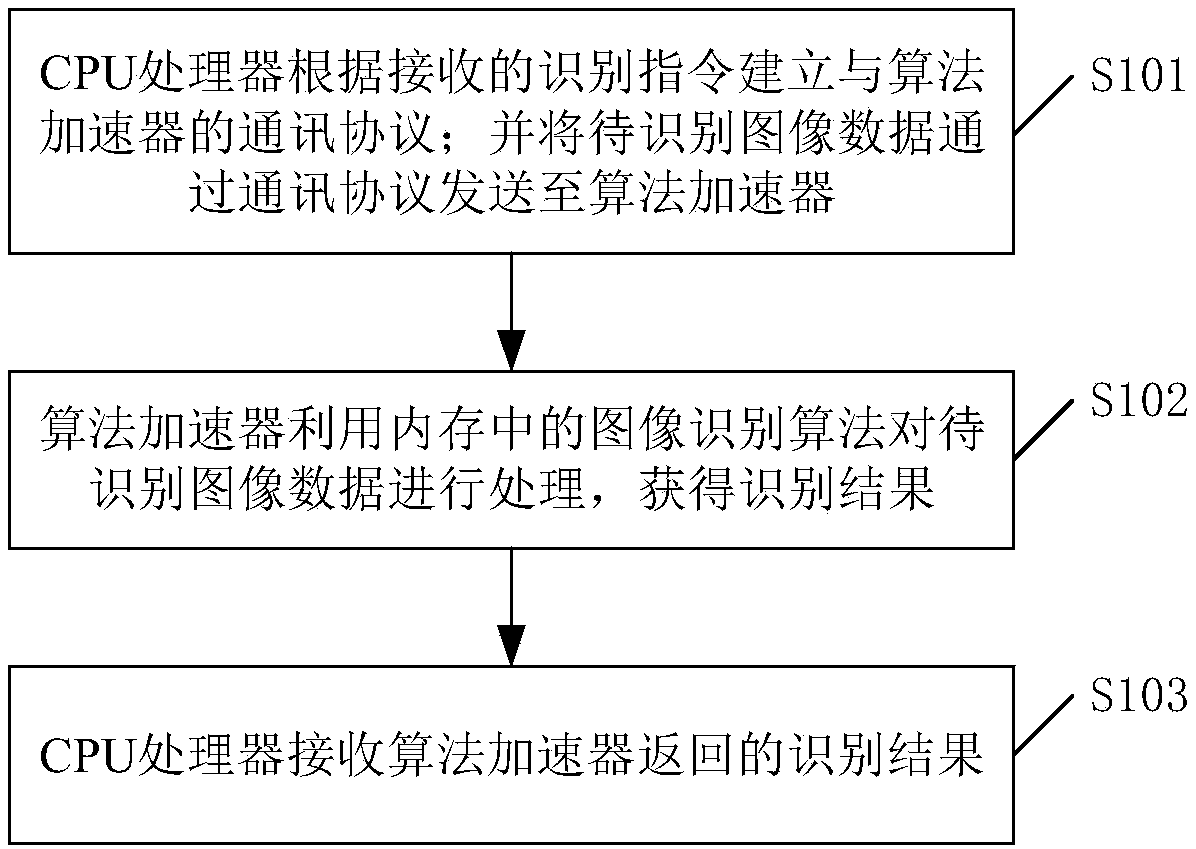

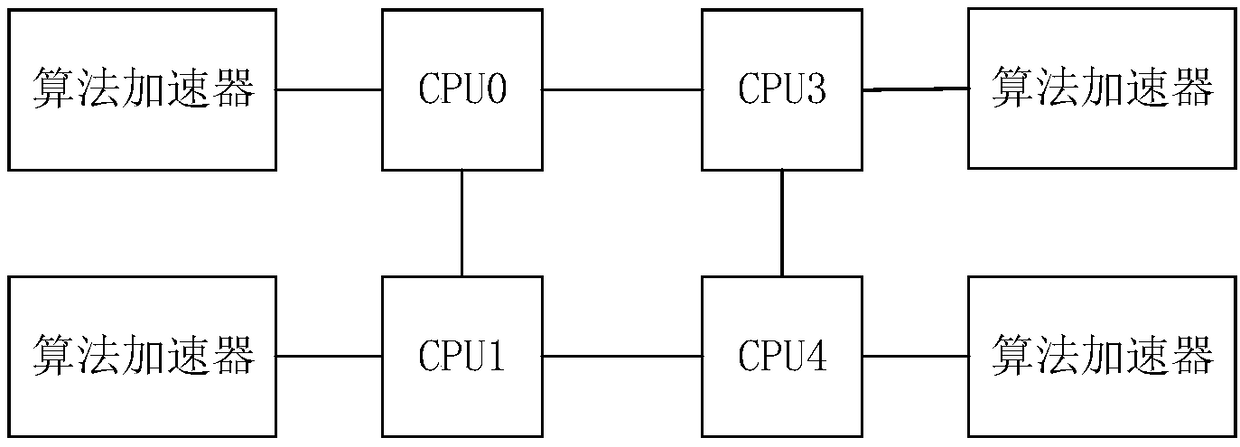

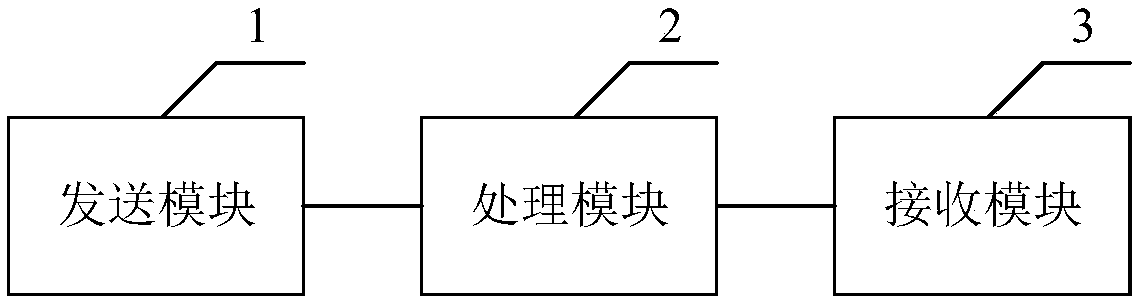

Image identification method, device and system

Inactive · CN108256492A · Easy to handle · Improve performance · Character and pattern recognition · Recognition algorithm · Communications protocol

The invention discloses an image identification method. The method comprises the following steps: CPUs establish communication protocols with algorithm accelerators according to received identification instructions; image data to be identified is sent to the algorithm accelerators according to the communication protocol; the algorithm accelerators use an image identification algorithm in a memory to process the image data to be identified, and an identification result is obtained; the CPUs receive the identification result returned by the algorithm accelerators. According to the method, the CPUs are connected with the algorithm accelerators, and the image identification algorithm is run by the algorithm accelerators to accelerate processing of the image data to be identified, which is equivalent to increasing the number of CPUs; the performance of the system in processing various image identification algorithms is effectively improved, and the overall performance of the system is further improved. The invention further discloses an image identification device and system which have the above advantages.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

Method and system for solving complete risk link sharing group separation path pair

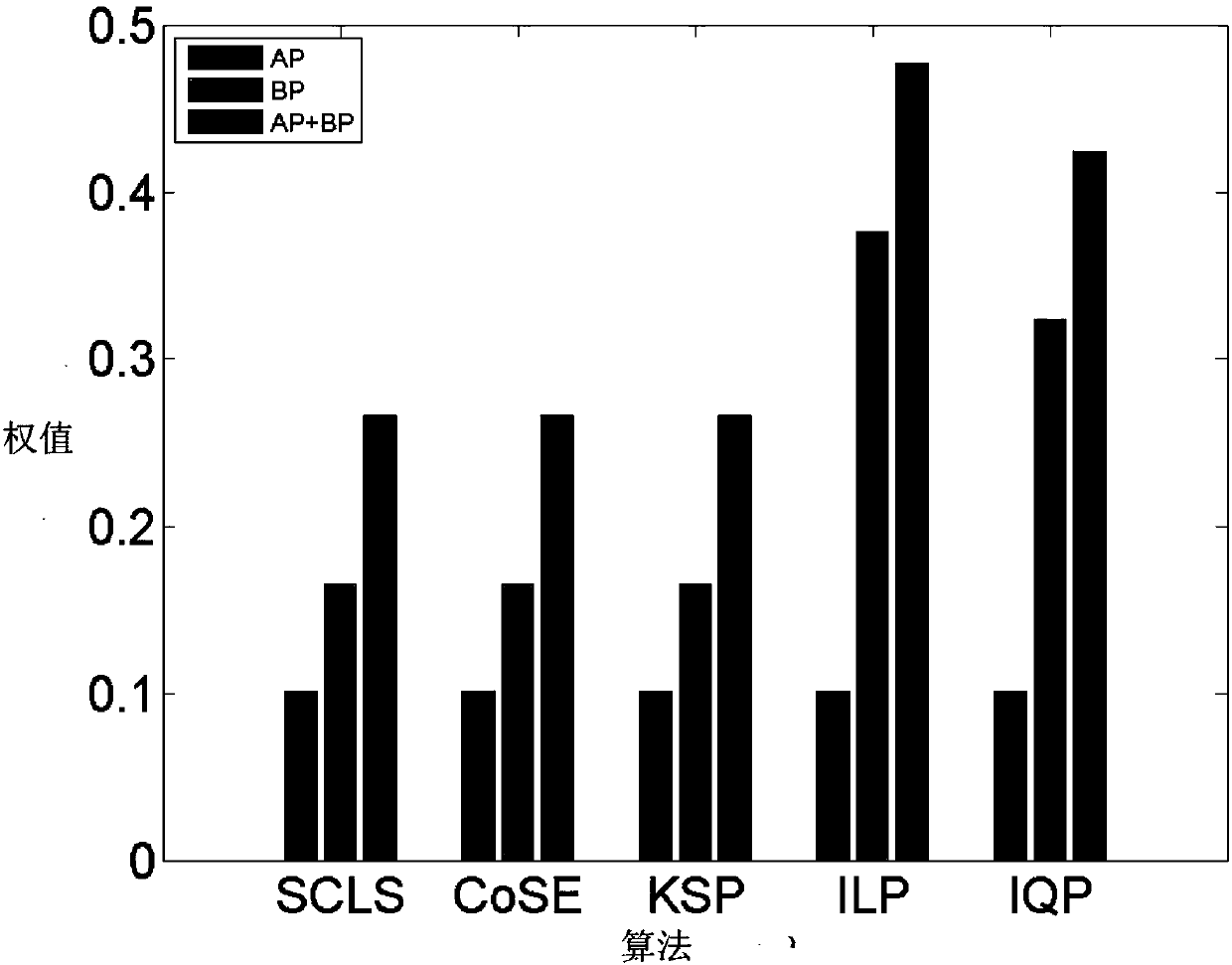

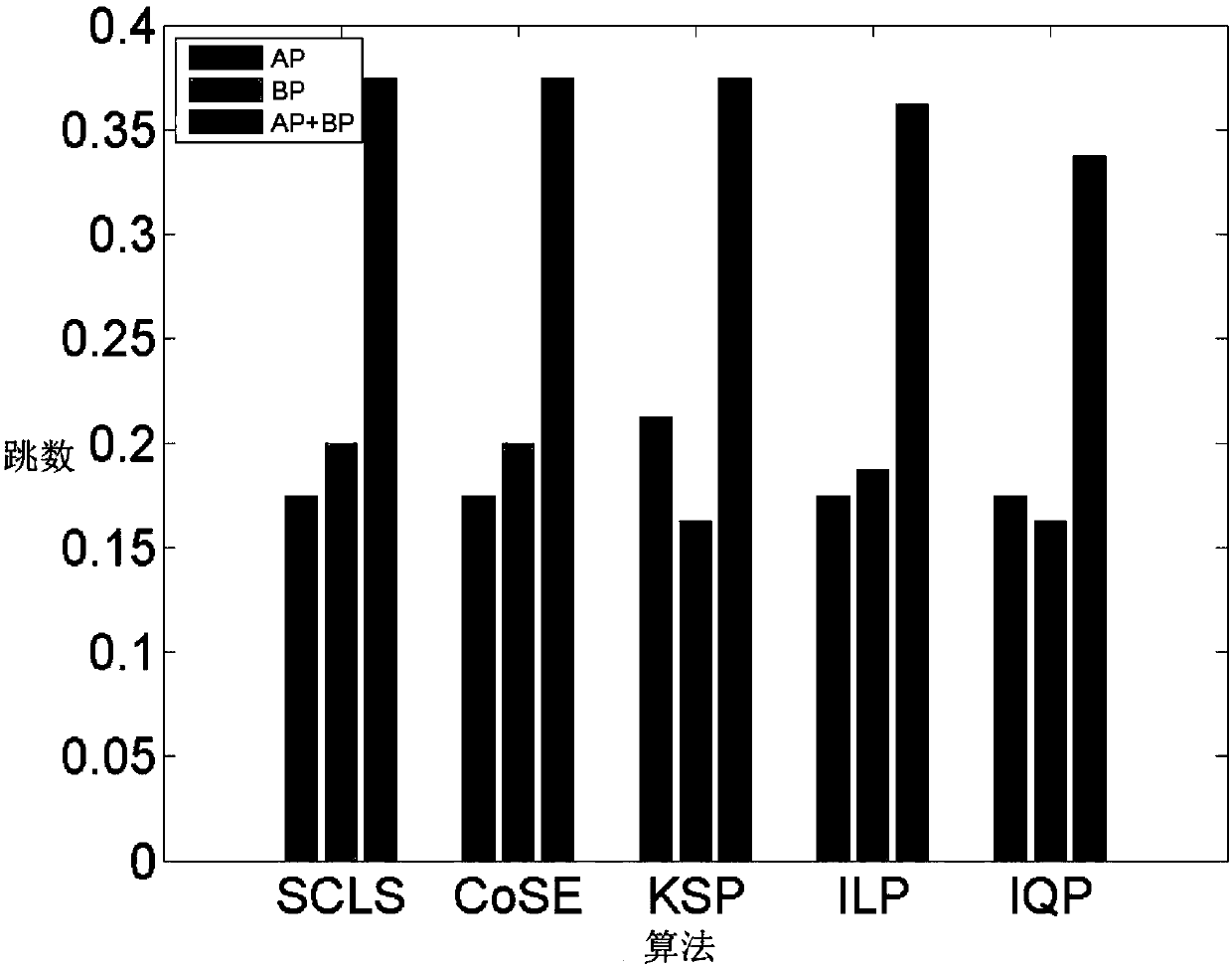

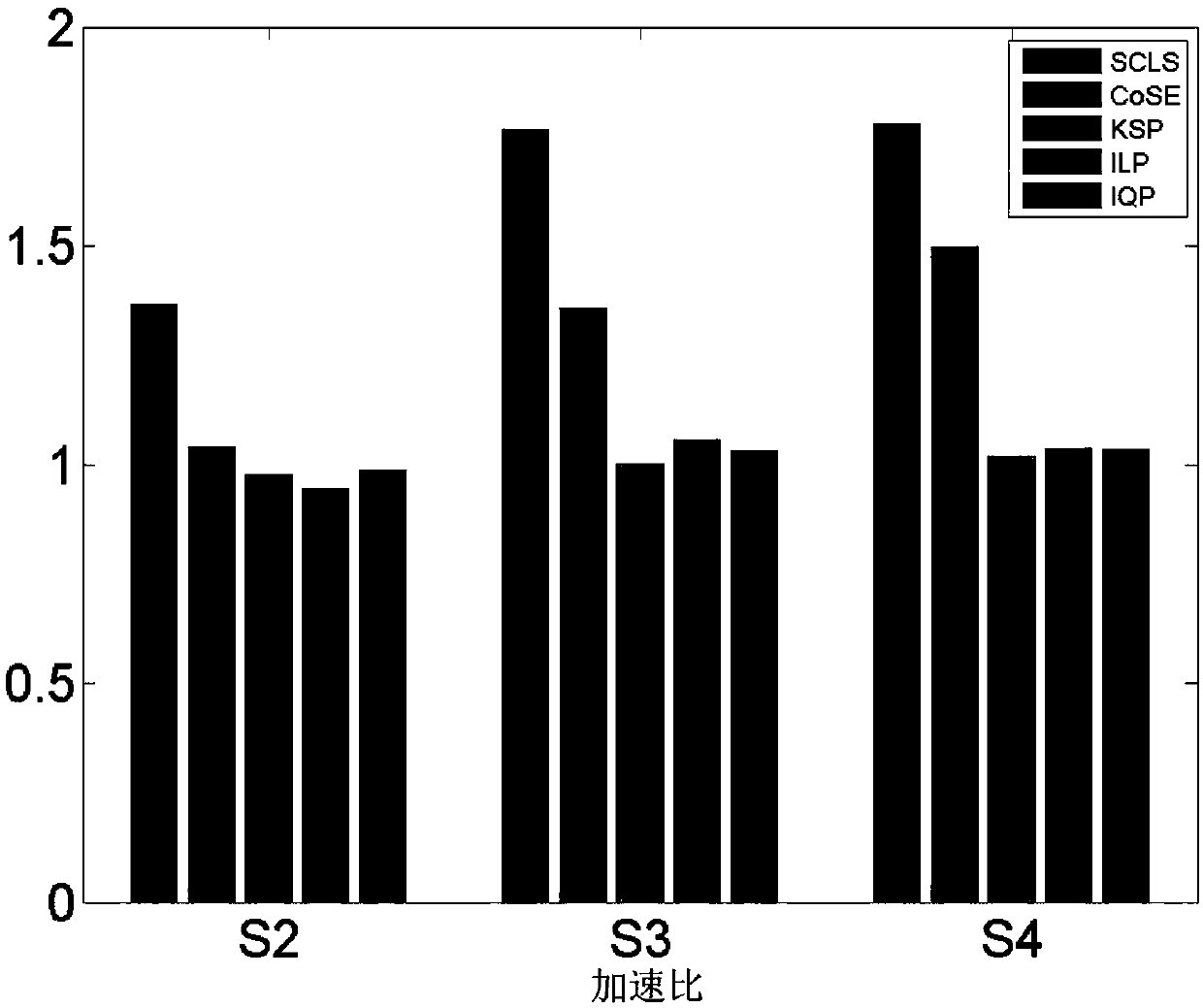

Active | CN107689916A | Reduce complexity | Narrow down the search space | Electromagnetic transmission | Data switching networks | Risk sharing | Parallel processing

The invention discloses a method and system for solving a complete risk link sharing group separation path pair. The method comprises the following steps: when a trap is encountered in the network of a risk sharing link group, first acquiring a risk sharing link group side conflict set T from the information of a first work (main) path AP, and then using the conflict set T to provide an algorithm that applies divide and conquer as well as parallel processing to the original problem. In application fields where fault-tolerant protection must be provided for the work path AP in software-defined network controller layer routing services, the running time of the complete risk sharing link group separation path algorithm is far less than that of other algorithms of the same type, the algorithmic speed-up ratio is 20 times higher, and the algorithm is far superior to other algorithms of the same type in solving speed. The method can adapt to all fields in which complete risk sharing link group separation routes are currently used, and has broader application prospects than current complete risk sharing link group separation route algorithms.

Owner:HUNAN UNIV
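
To make the problem in the abstract above concrete, here is a minimal sketch of the plain two-step baseline: find a working path AP, then search for a backup path that avoids every risk group AP touches. This is not the patented conflict-set algorithm; it is the simple heuristic that can fail in trap topologies. The graph encoding and the names `shortest_path` and `disjoint_pair` are assumptions, and every edge is assumed to belong to at least one risk group.

```python
import heapq
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

Edge = Tuple[str, str]
# Undirected edge -> (cost, ids of the risk groups the edge belongs to).
Graph = Dict[Edge, Tuple[float, FrozenSet[int]]]


def shortest_path(graph: Graph, src: str, dst: str,
                  banned: Set[int]) -> Optional[List[Edge]]:
    """Dijkstra over edges that share no risk group with `banned`."""
    adj: Dict[str, List[Tuple[str, float, Edge]]] = {}
    for (u, v), (cost, groups) in graph.items():
        if groups & banned:
            continue
        adj.setdefault(u, []).append((v, cost, (u, v)))
        adj.setdefault(v, []).append((u, cost, (u, v)))
    dist = {src: 0.0}
    prev: Dict[str, Tuple[str, Edge]] = {}
    heap = [(0.0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nxt, cost, edge in adj.get(node, []):
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = (node, edge)
                heapq.heappush(heap, (nd, nxt))
    if dst not in dist:
        return None
    path: List[Edge] = []
    node = dst
    while node != src:
        node, edge = prev[node]
        path.append(edge)
    return path[::-1]


def disjoint_pair(graph: Graph, src: str, dst: str):
    """Two-step baseline: working path first, then a risk-disjoint backup."""
    ap = shortest_path(graph, src, dst, set())
    if ap is None:
        return None
    ap_groups = set().union(*(graph[e][1] for e in ap))
    bp = shortest_path(graph, src, dst, ap_groups)
    return None if bp is None else (ap, bp)


independent = {
    ("A", "B"): (1.0, frozenset({1})), ("B", "D"): (1.0, frozenset({2})),
    ("A", "C"): (2.0, frozenset({3})), ("C", "D"): (2.0, frozenset({4})),
}
# Same topology, but C-D now shares risk group 2 with B-D.
shared = dict(independent)
shared[("C", "D")] = (2.0, frozenset({2}))
```

When the baseline returns `None`, a valid pair may still exist with a different working path; that gap is where a method based on a conflict set, as the abstract describes, would take over.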

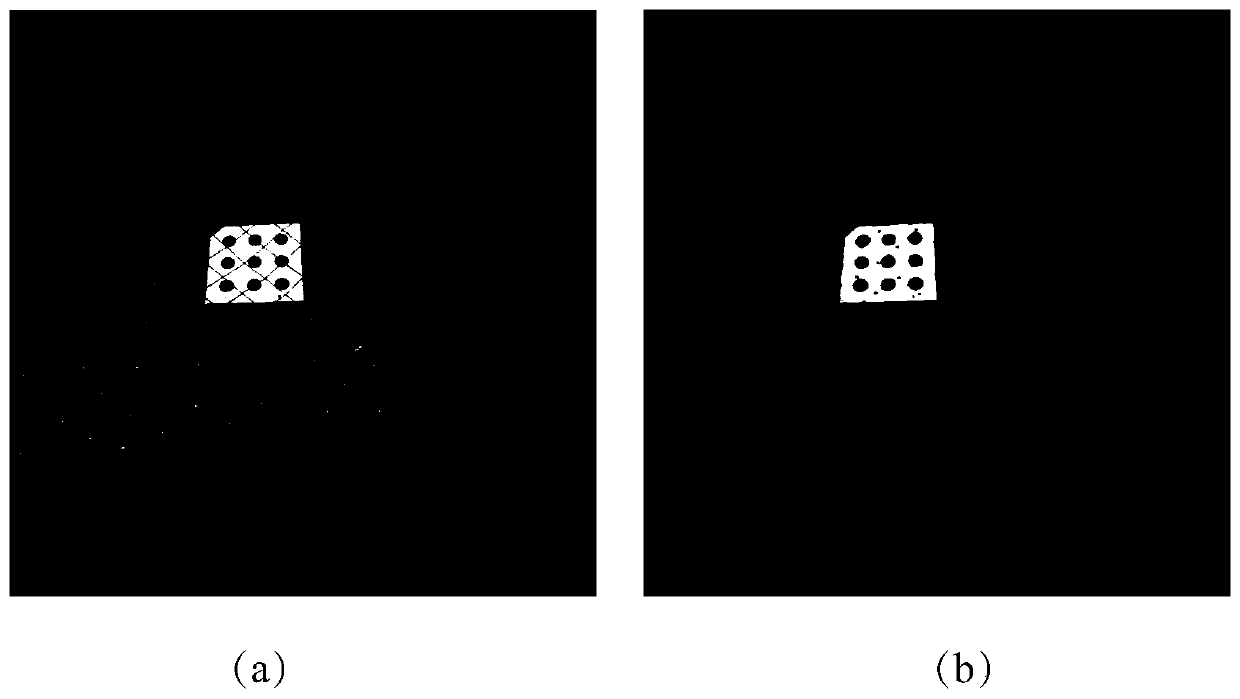

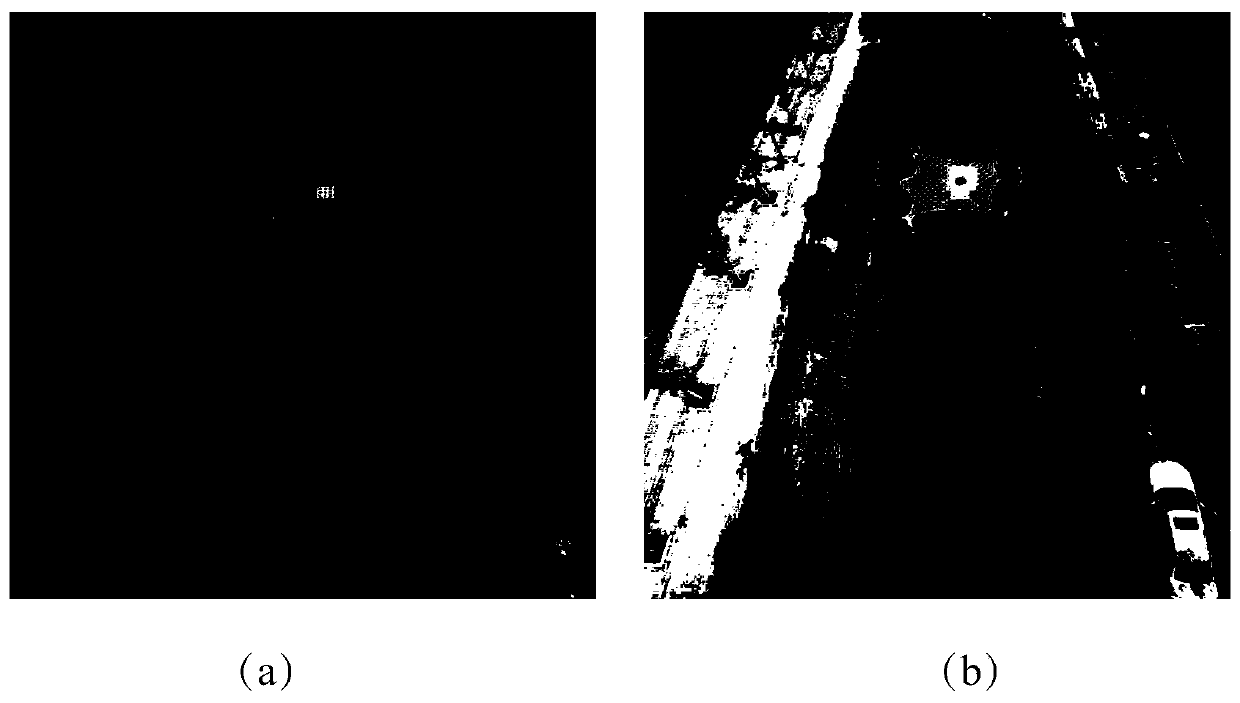

High-precision target identification and detection method under grid background

Active | CN110210295A | Realize the function of self-recycling | Guaranteed High Accuracy Solving | Image analysis | Character and pattern recognition | Imaging processing | Morphological filtering

The invention discloses a high-precision target identification and detection method under a grid background. By using the method, the real-time and high-precision identification of the target under the grid background can be realized, and reliable visual navigation information is provided for the net collision recovery of the unmanned aerial vehicle. The method comprises the following steps: firstly, designing a target; updating an algorithm based on a gradient search threshold value and an optimal threshold value; obtaining an appropriate binarization threshold value; realizing target identification under a netted background by using morphological filtering and a coarse and fine contour screening algorithm. Meanwhile, in order to realize real-time detection, algorithm acceleration is realized by adopting a mode of generating an interested area and downsampling, and position resolving information is provided for navigation by adopting an image processing mode in a net collision recovery process of the unmanned aerial vehicle, so that the unmanned aerial vehicle can realize an autonomous recovery function.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
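
The acceleration idea in the abstract above, restricting work to a region of interest and downsampling it, can be sketched as follows. This is an illustrative assumption-laden example in pure Python: the image is a list of pixel rows, the threshold is fixed rather than found by gradient search, and morphological filtering and contour screening are omitted. The names `crop_roi`, `downsample` and `locate_target` are hypothetical.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale image as rows of pixel values


def crop_roi(img: Image, cx: int, cy: int, half: int) -> Tuple[Image, int, int]:
    """Cut a square window around (cx, cy), clamped to the image bounds."""
    h, w = len(img), len(img[0])
    x0, x1 = max(0, cx - half), min(w, cx + half + 1)
    y0, y1 = max(0, cy - half), min(h, cy + half + 1)
    return [row[x0:x1] for row in img[y0:y1]], x0, y0


def downsample(img: Image, step: int) -> Image:
    """Keep every `step`-th pixel in both directions."""
    return [row[::step] for row in img[::step]]


def locate_target(img: Image, prev_xy: Tuple[int, int], half: int,
                  step: int, threshold: int) -> Optional[Tuple[float, float]]:
    """Estimate the target centre near its previous position."""
    roi, x0, y0 = crop_roi(img, prev_xy[0], prev_xy[1], half)
    small = downsample(roi, step)
    pts = [(x, y) for y, row in enumerate(small)
           for x, v in enumerate(row) if v >= threshold]
    if not pts:
        return None
    mean_x = sum(p[0] for p in pts) / len(pts)
    mean_y = sum(p[1] for p in pts) / len(pts)
    # Map the centroid back to full-resolution image coordinates.
    return (x0 + mean_x * step, y0 + mean_y * step)


# Synthetic 40x40 frame with a bright 4x4 target at x 20..23, y 10..13.
frame = [[0] * 40 for _ in range(40)]
for yy in range(10, 14):
    for xx in range(20, 24):
        frame[yy][xx] = 255
estimate = locate_target(frame, prev_xy=(20, 12), half=8, step=2, threshold=128)
```

With these settings only 81 of the 1600 pixels are thresholded, at the cost of localisation accuracy on the order of one downsampling step.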

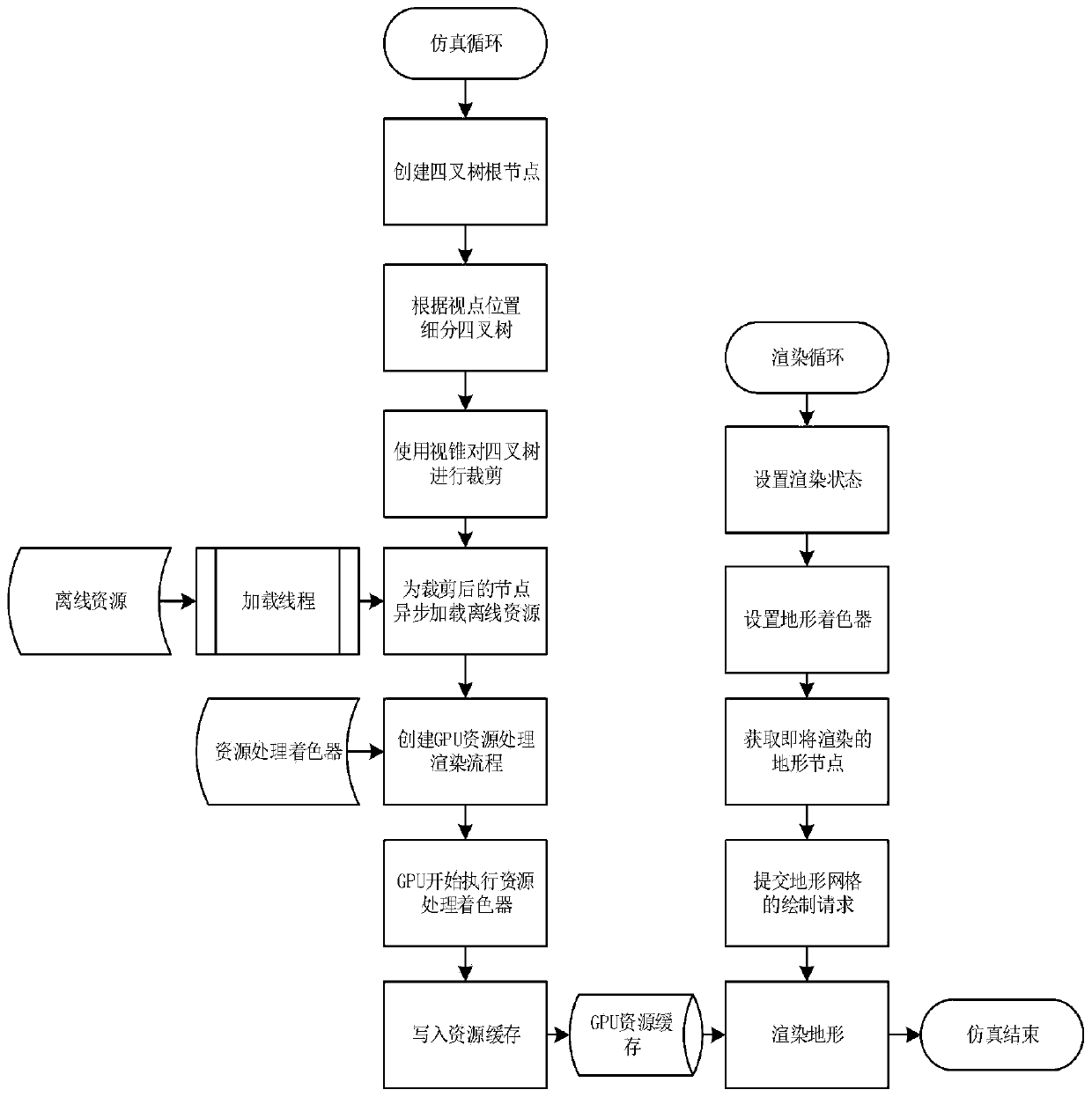

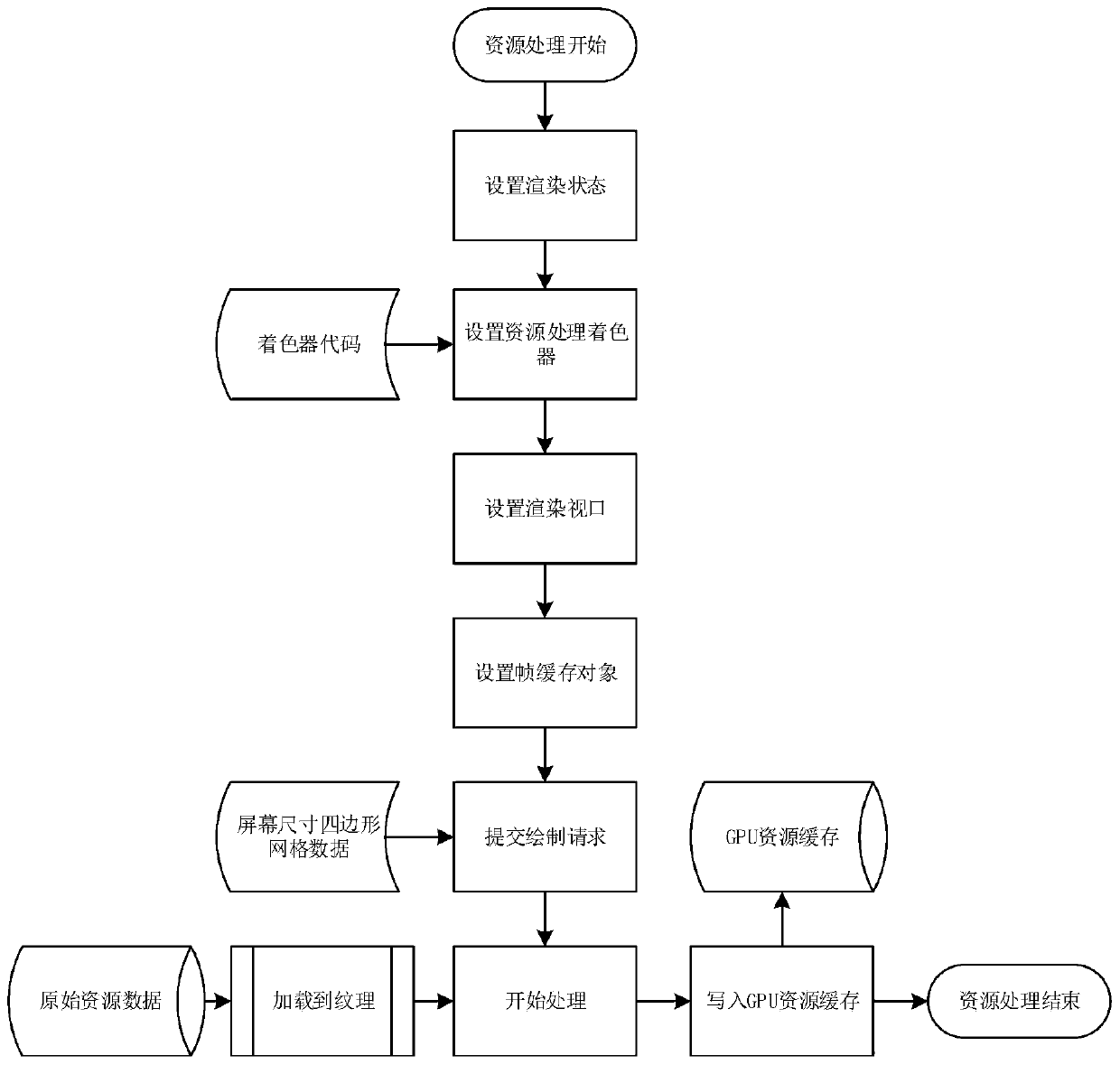

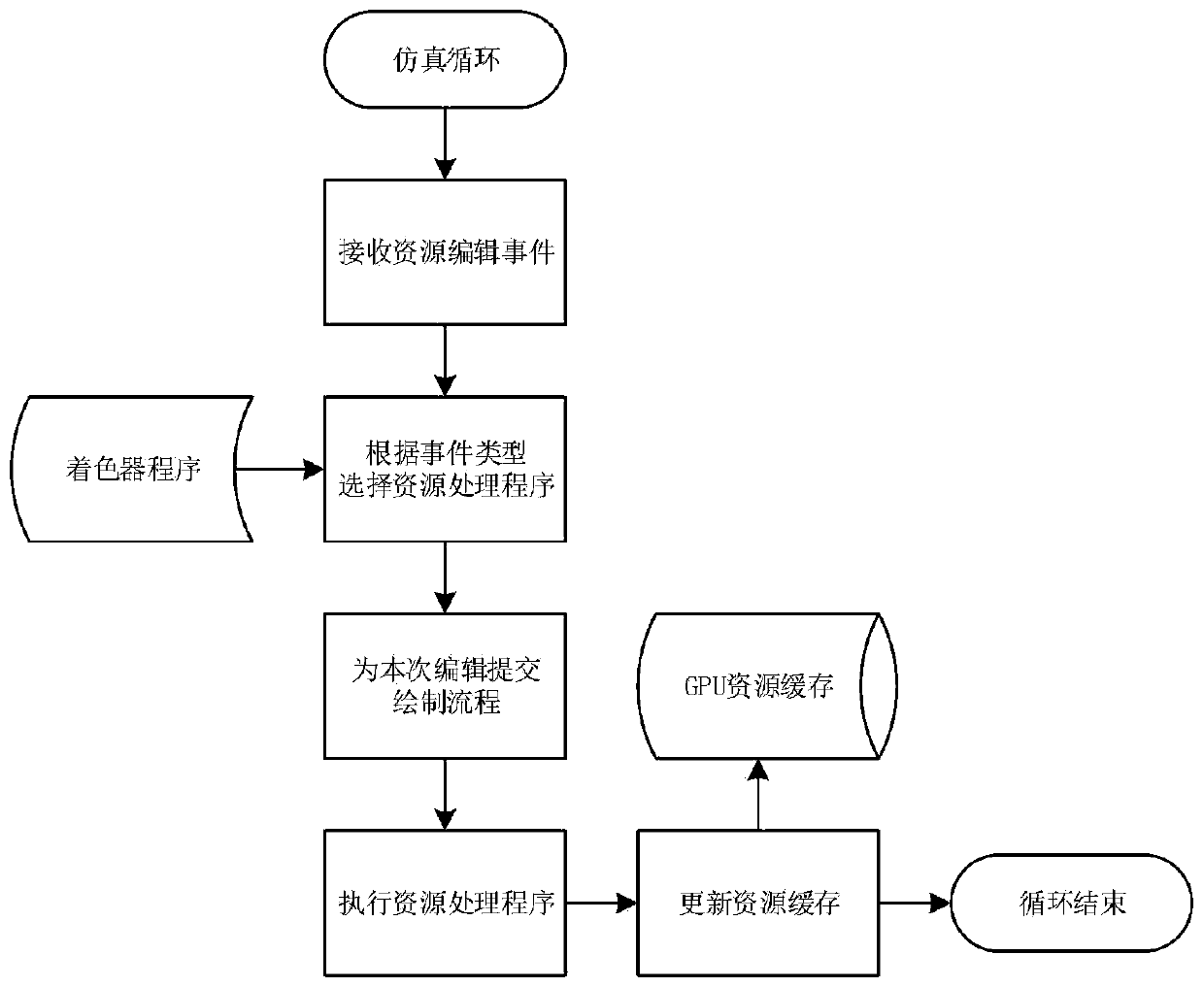

Virtual terrain rendering method for carrying out resource dynamic processing and caching based on GPU

Active | CN111563948A | Improve execution efficiency | Improve performance | 3D-image rendering | Computational science | Parallel computing

The invention discloses a virtual terrain rendering method for dynamically processing and caching resources based on a GPU. The method comprises the following steps: constructing a terrain grid according to a spatial quadtree algorithm; gradually subdividing the terrain and cutting the view cone according to a viewpoint position, and creating GPU end caches for different terrain resources according to the resources positioned by the logic coordinates to realize different shader programs; starting different rendering processes for different resources, and storing the processed resources in a cache opened up by a GPU; writing a shader for terrain rendering to create a drawing instruction for the remaining nodes after terrain cutting, and submitting Draw Call to a GPU to complete drawing. According to the method, the computing power of the GPU is fully utilized, rendering work is submitted to the GPU, processing work of rendering resources is submitted to the GPU to be completed, and therefore resource processing work is greatly accelerated. Meanwhile, a whole set of GPU resource caching and access algorithm is realized, the access performance of resources is accelerated, the rendering performance is further improved, and real-time rendering and flexible editing of super-large-scale virtual terrains become possible.

Owner:航天远景科技(南京)有限公司
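
The first two steps of the abstract above, building a terrain grid with a spatial quadtree and subdividing it according to the viewpoint position, can be sketched on the CPU side as follows. This is only an illustration under stated assumptions: the split rule (distance to the node centre compared with `detail` times the node size) and the names `Node` and `select_leaves` are hypothetical, and view-cone cutting, GPU caches and shaders are not shown.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Node:
    x: float      # minimum corner of the square terrain tile
    y: float
    size: float   # edge length of the tile
    depth: int    # 0 for the root


def select_leaves(root: Node, viewpoint: Tuple[float, float],
                  max_depth: int, detail: float = 1.5) -> List[Node]:
    """Subdivide tiles near the viewpoint; return the tiles to draw."""
    leaves: List[Node] = []
    stack = [root]
    while stack:
        node = stack.pop()
        cx, cy = node.x + node.size / 2, node.y + node.size / 2
        dist = math.hypot(viewpoint[0] - cx, viewpoint[1] - cy)
        if node.depth < max_depth and dist < detail * node.size:
            half = node.size / 2
            for dx in (0.0, half):
                for dy in (0.0, half):
                    stack.append(Node(node.x + dx, node.y + dy,
                                      half, node.depth + 1))
        else:
            leaves.append(node)
    return leaves


root = Node(0.0, 0.0, 1024.0, 0)
tiles = select_leaves(root, viewpoint=(10.0, 10.0), max_depth=4)
```

Tiles close to the viewpoint end up small and numerous while distant ones stay coarse, which is what keeps the number of draw instructions manageable for very large terrains.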

CUDA-based S-BPF reconstruction algorithm acceleration method

Inactive | CN105678820A | The acceleration effect is obvious | Improve rebuild efficiency | Reconstruction from projection | Processor architectures/configuration | Video memory | Internal memory

The present invention discloses a CUDA-based S-BPF reconstruction algorithm acceleration method, which overcomes the problem in the prior art that the conventional CT imaging-based image reconstruction algorithm takes a long time. The method comprises the steps of 1, reading a plurality of projections from a hard disk and calculating a constant C for the limited Hilbert inverse transformation in a CPU; 2, transmitting the plurality of projections from an internal memory to a video memory and deriving a back projection in a GPU to obtain a DBP image; 3, conducting the limited Hilbert inverse transformation on the DBP image obtained in step 2 and transmitting the obtained result from the video memory to the internal memory. According to the technical scheme of the invention, the method solves the problems in the prior art that the reconstruction algorithm-based GPU acceleration is obvious in accelerating effect and the communication delay becomes a bottleneck in limiting the existing acceleration strategy. Experimental results show that the speed-up ratio obtained with the above method is about 2 times that of existing strategies.

Owner:THE PLA INFORMATION ENG UNIV

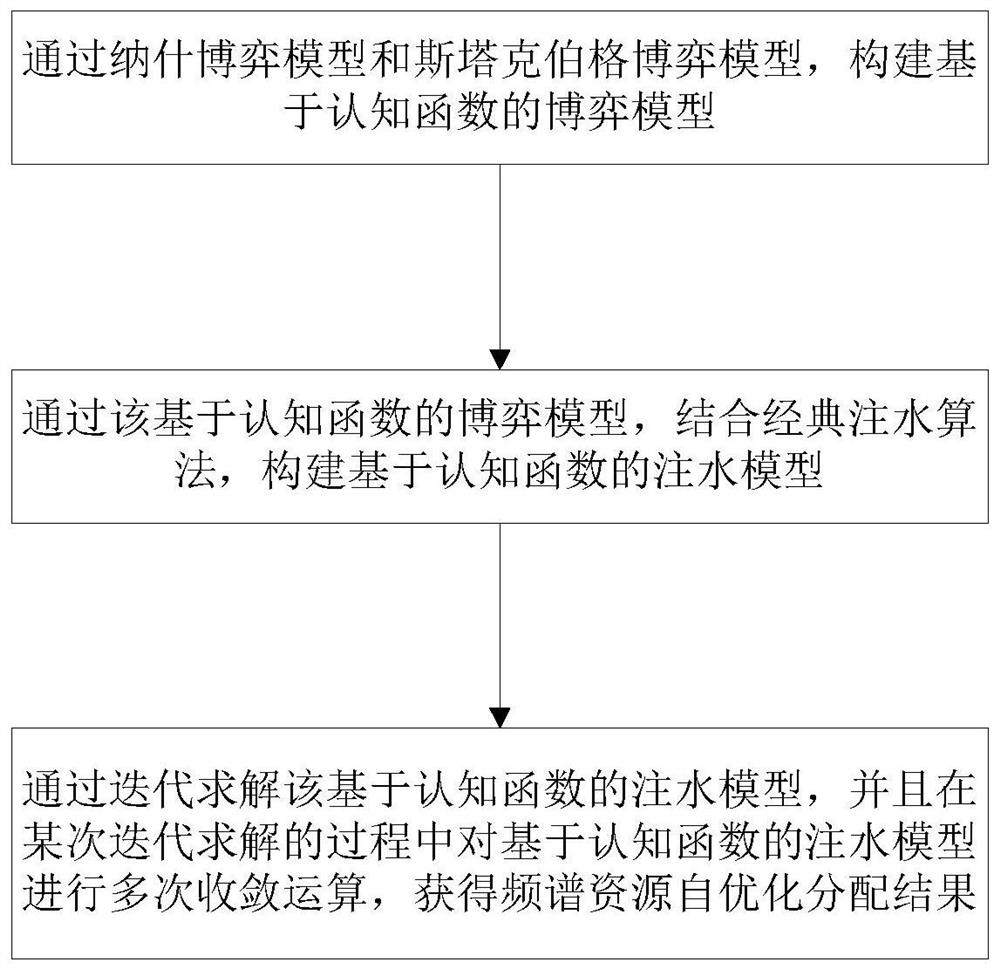

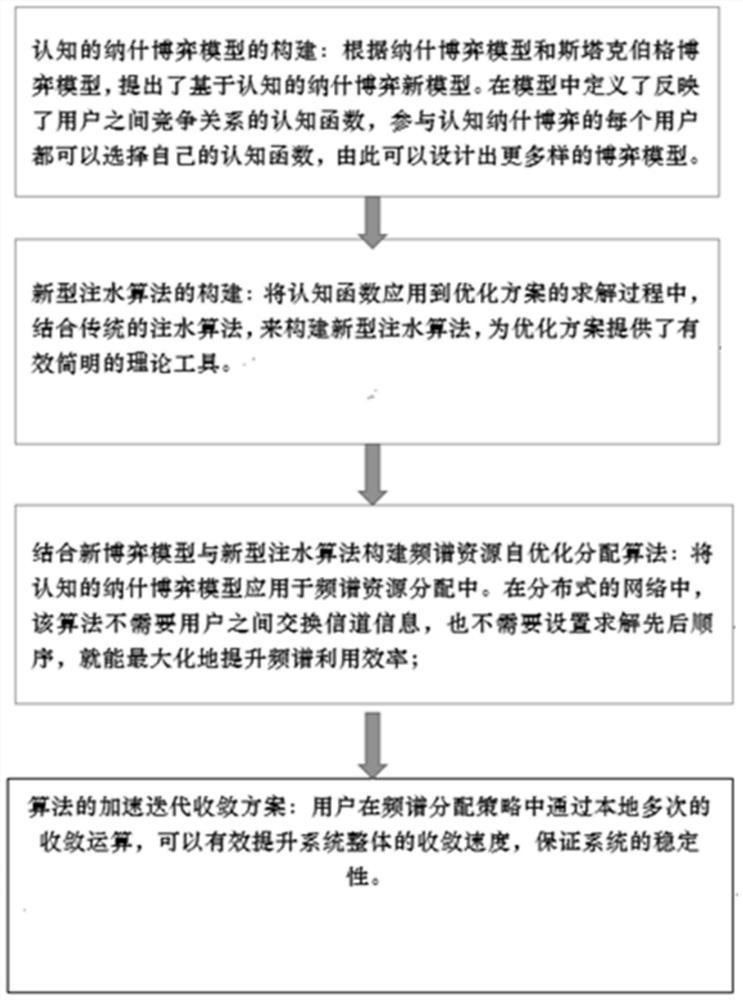

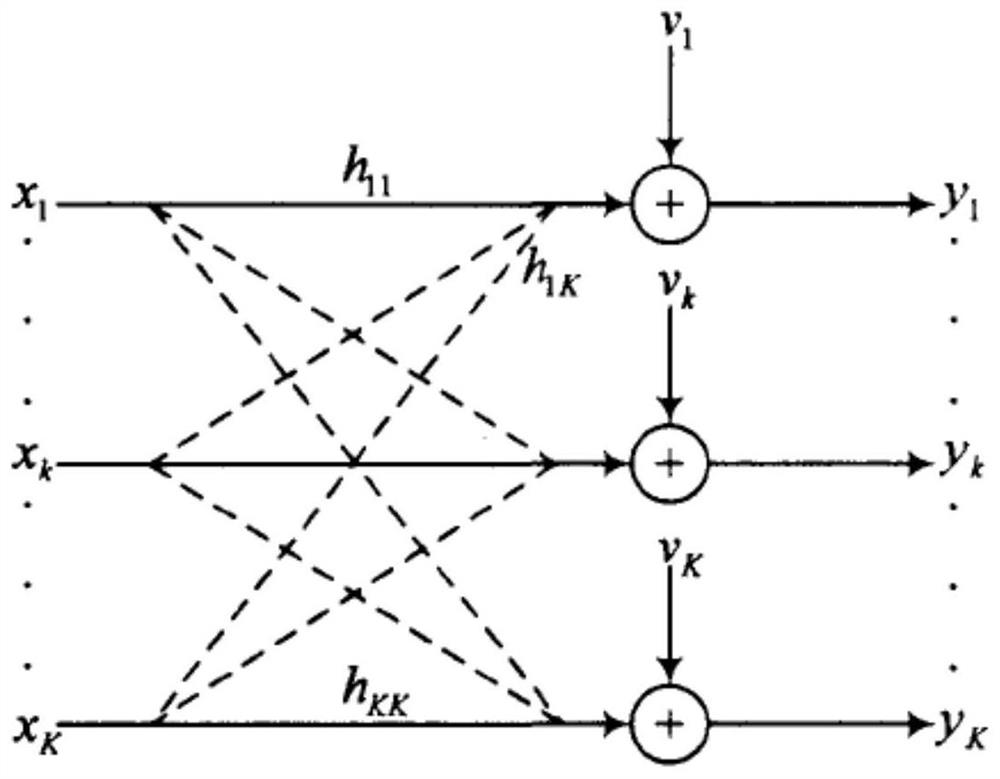

Spectrum resource self-allocation method

Active | CN111682915A | Improve Spectrum Utilization Efficiency | Fast convergence | Transmission monitoring | Frequency spectrum | Simulation

The invention provides a spectrum resource self-allocation method, which comprises the following steps: constructing a cognitive-function-based game model from a Nash game model and a Stackelberg game model; constructing a cognitive-function-based water-filling model by combining that game model with the classical water-filling algorithm; and solving the water-filling model through distributed free iteration to realize self-optimizing allocation of spectrum resources. During iteration, a user can effectively improve the convergence speed of the system by using an acceleration scheme. According to the invention, the utilization efficiency of channel resources is optimized, the channel rate of the scheme can reach the maximum value of the theoretical spectrum utilization rate, and good system performance can be obtained in different scenes. Frequency spectrum resources can be intelligently found and utilized, and the frequency spectrum resource utilization efficiency is improved to the maximum extent. An algorithm acceleration scheme is provided by utilizing the capability of a cognitive function to predict a competition result, so that the operation speed can be greatly improved without reducing performance.

Owner:BEIJING JIAOTONG UNIV
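
The classical algorithm that the abstract above builds on (usually called water-filling in English) has a short closed-form procedure. The sketch below shows only that classical single-user step, which maximizes the sum of log(1 + p_i / n_i) under a total power budget; the cognitive-function game model and the distributed iteration of the invention are not represented, and the function name `water_filling` is an assumption.

```python
from typing import List


def water_filling(noise: List[float], total_power: float) -> List[float]:
    """Allocate power p_i = max(0, level - noise_i) with sum(p_i) = total_power."""
    if not noise or total_power <= 0:
        raise ValueError("need at least one channel and a positive power budget")
    order = sorted(range(len(noise)), key=lambda i: noise[i])
    active = len(order)
    level = 0.0
    while active > 0:
        # Water level if exactly the `active` quietest channels are used.
        level = (total_power + sum(noise[i] for i in order[:active])) / active
        if level > noise[order[active - 1]]:
            break  # every active channel sits below the water level
        active -= 1  # noisiest active channel gets no power; drop it
    return [max(0.0, level - n) for n in noise]


powers = water_filling([1.0, 2.0, 5.0], total_power=3.0)
```

In this example the water level settles at 3.0, so the two quieter channels receive 2.0 and 1.0 units of power and the noisiest channel receives none.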

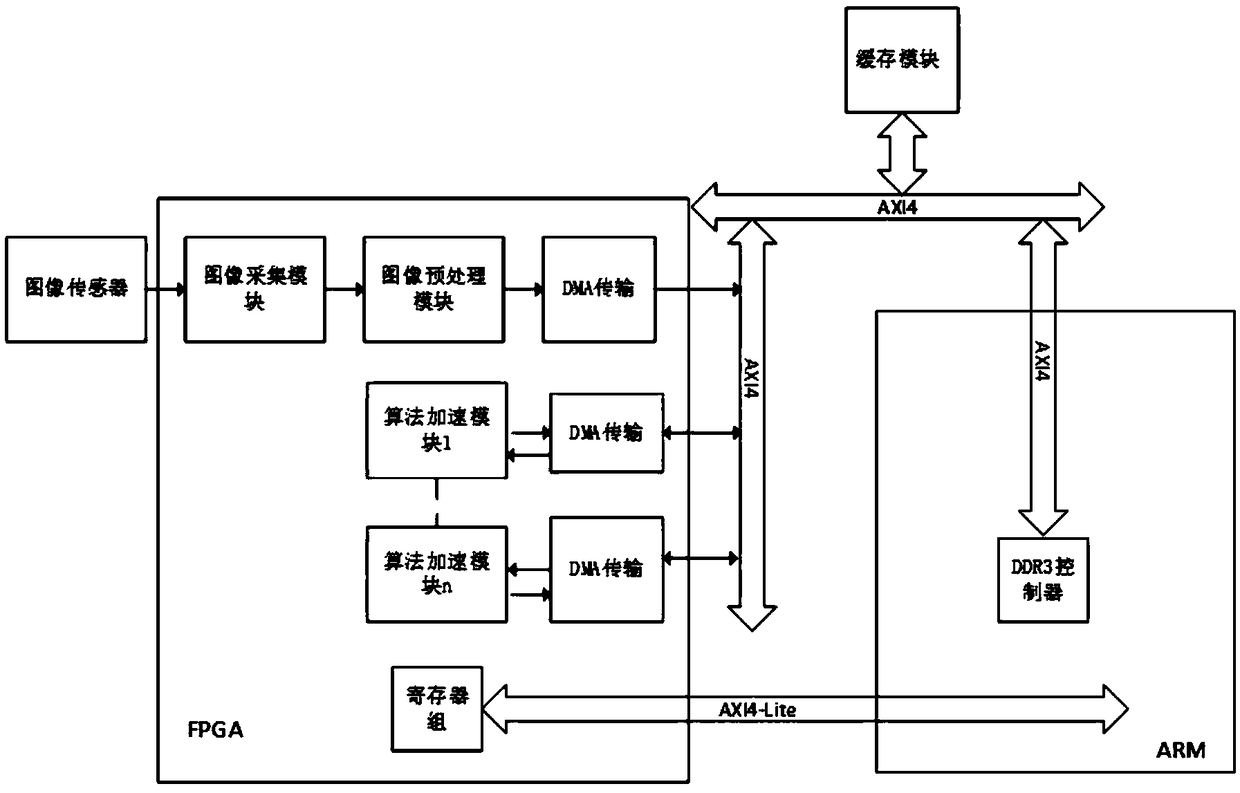

SOC-based leukocyte counting system and method

Inactive | CN108765377A | Quick split | Fast count | Image enhancement | Image analysis | Sequence control | Interaction time

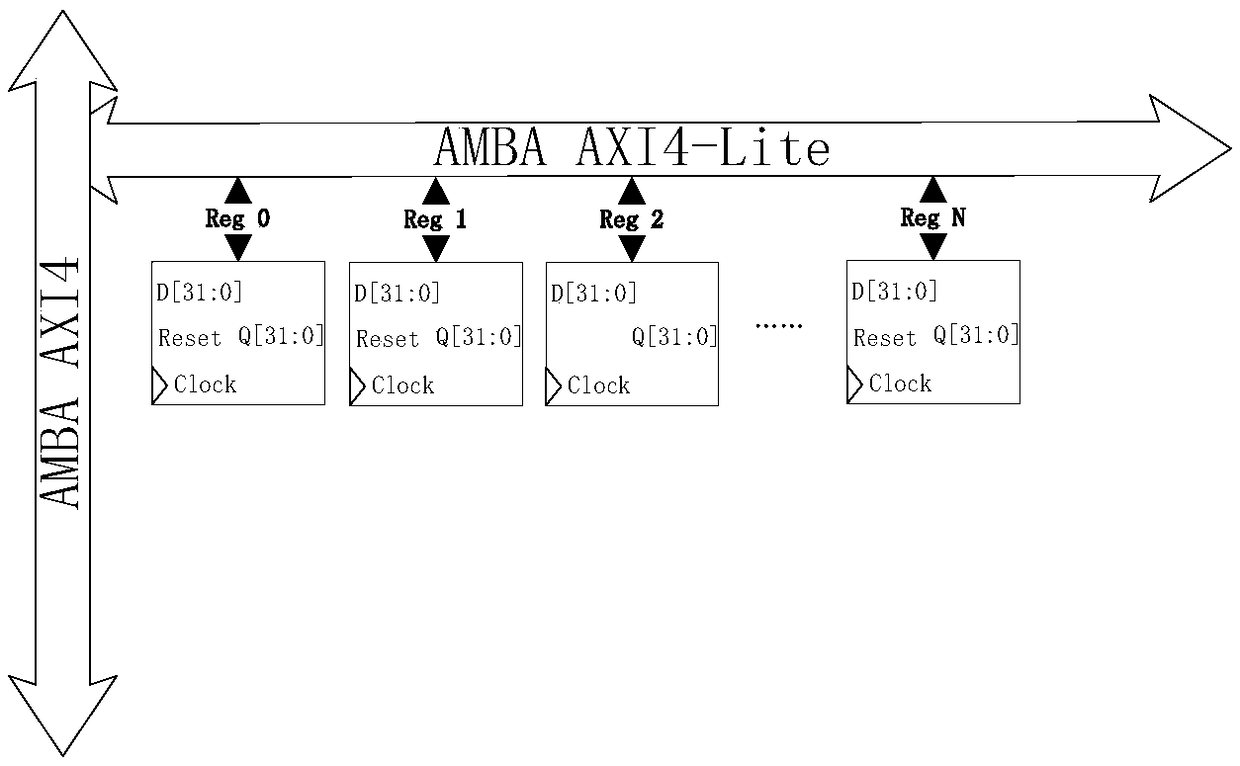

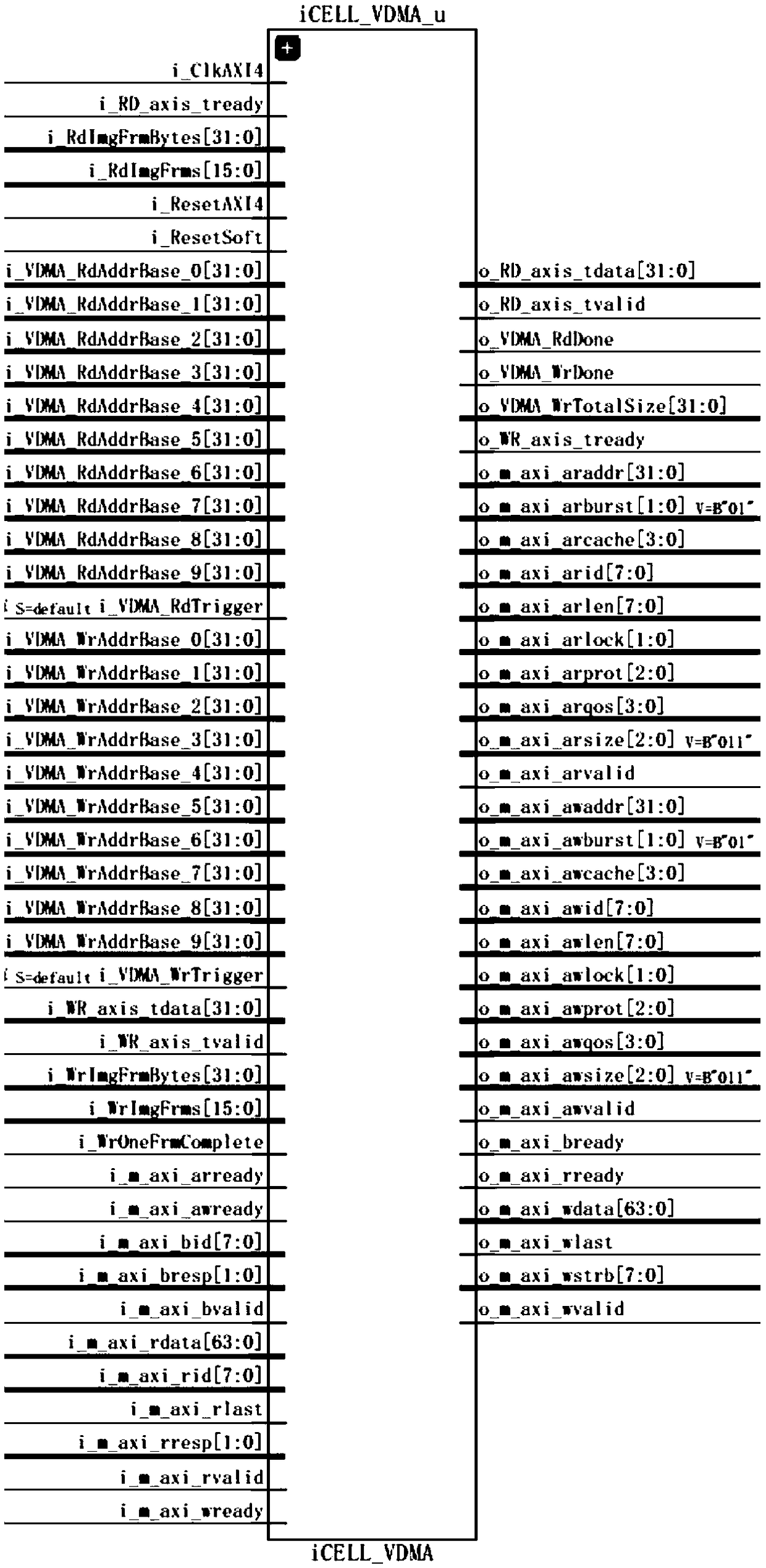

The invention discloses an SOC-based leukocyte counting system. The system comprises an SOC-FPGA, an SOC-ARM and a cache module, wherein an image collection module of the SOC-FPGA collects a to-be-analyzed image from an image sensor in real time and preprocesses the data of the to-be-analyzed image; the SOC-ARM comprises a DDR3 controller, is used for completing initialization configuration and dynamic configuration of the image sensor, data communication, image caching and display, and software and hardware interaction time sequence control, and is connected with the SOC-FPGA through an AMBA AXI4-Lite bus; and the cache module caches the data of the to-be-analyzed image by means of a DMA data transmission IP core and is connected with the DDR3 controller through an AMBA AXI4 bus interface. Through the counting system and method, development cost can be lowered while accelerated image algorithm computing is realized quickly and efficiently.

Owner:JIANGSU KONSUNG BIOMEDICAL TECH

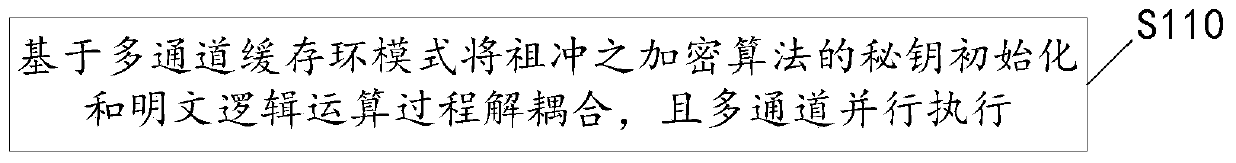

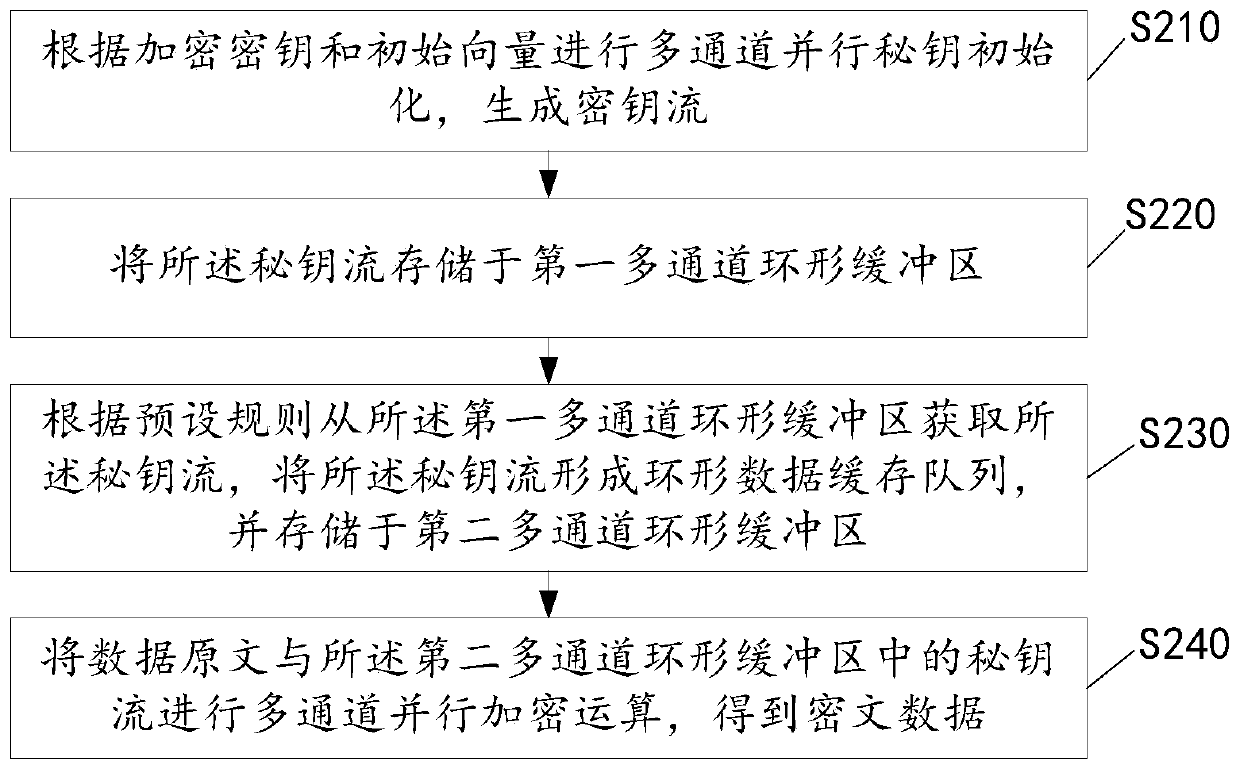

Zu-Chongzhi encryption algorithm acceleration method, system, storage medium and computer equipment

Active | CN110445601A | Improve encryption computing ability | Reduce real-time business application delay | Data stream serial/continuous modification | Computer equipment | Algorithm acceleration

The invention relates to the technical field of information security, and particularly discloses a Zu-Chongzhi encryption algorithm acceleration method. The method comprises the step of executing the key initialization and the plaintext logic operation of the Zu-Chongzhi encryption algorithm in parallel over multiple channels, based on a multi-channel cache ring mode. In this mode the Zu-Chongzhi algorithm is operated in separate parts, which achieves logical separation of the algorithm. Because the key initialization and the plaintext logic operation are executed in parallel in multiple channels, the data encryption operation capability is improved and the real-time service application delay is reduced. The invention further discloses a Zu-Chongzhi encryption algorithm acceleration system, a storage medium and computer equipment.

Owner:BEIJING SANSEC TECH DEV


© 2025 PatSnap. All rights reserved.