Heterogeneous neural network calculation accelerator design method based on FPGA

A neural network design method in the computer field that addresses problems such as reduced efficiency, achieving highly efficient utilization, saving computing time, and requiring little computing power.

Embodiment Construction

[0029] The present invention is described in further detail below in conjunction with the accompanying drawings and a specific embodiment:

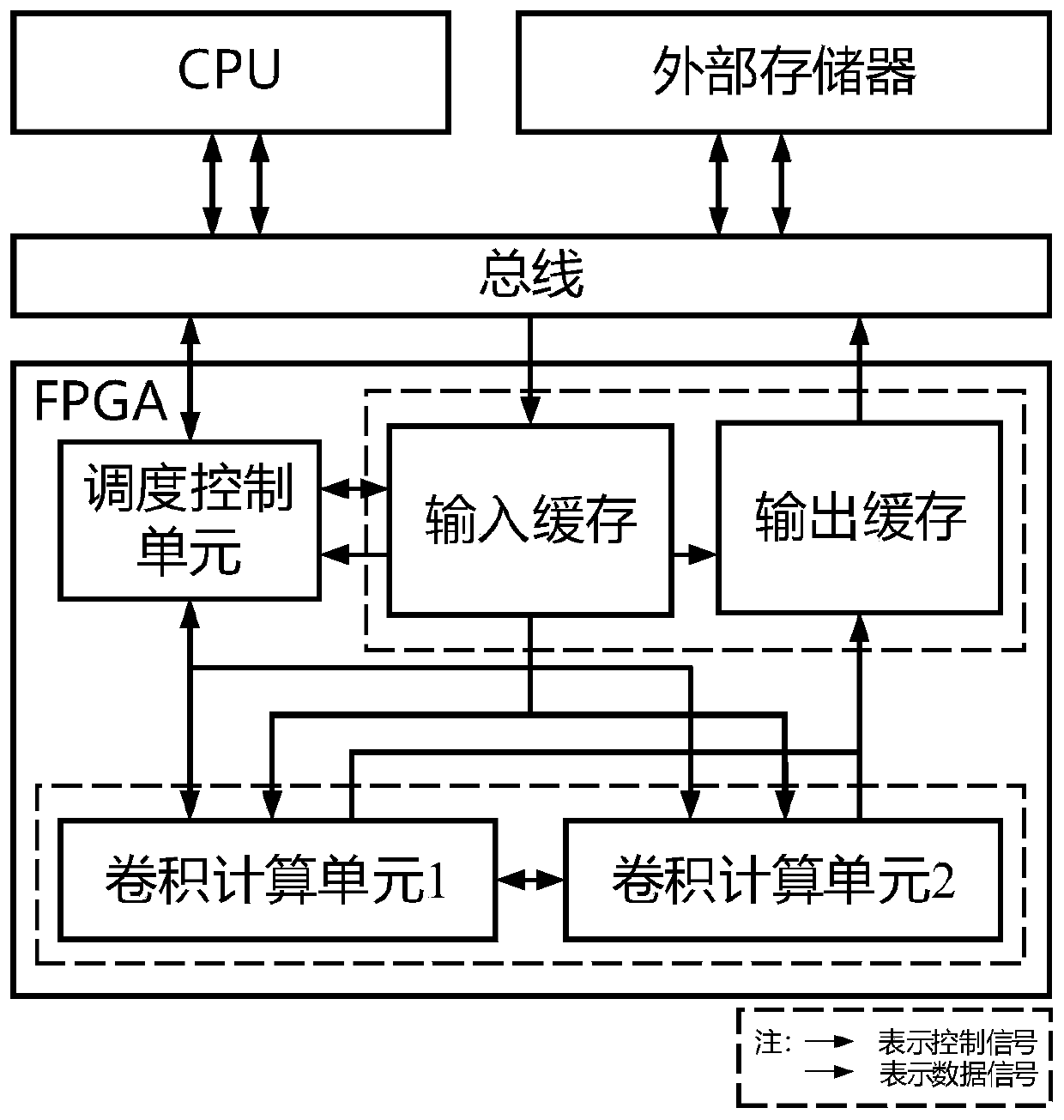

[0030] In this embodiment, the overall structure of the deep learning computing platform built with this design method is shown in Figure 1 and specifically includes the following:

[0031] The CPU accesses the external memory and the FPGA over a bus. The external memory stores the parameters of the neural network, the input data, and the output results. Over the bus, the CPU controls the scheduling control unit on the FPGA, which manages data access and the operation of the convolution calculation unit. Under the direction of the scheduling controller, the convolution calculation unit loads parameters in order, performs the convolution calculation, and outputs the final result.
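The interaction between the CPU, the scheduling control unit, and the convolution calculation unit described above can be sketched in software. This is a minimal Python model under stated assumptions: the names `ExternalMemory`, `SchedulingController`, `ConvolutionUnit`, and `cpu_dispatch` are hypothetical, the bus is modelled as plain method calls, and the convolution is assumed to be single-channel, stride 1, with no padding, because the text does not specify these details. It illustrates only the ordering of parameter loading, computation, and result write-back, not the FPGA hardware itself.

```python
from dataclasses import dataclass, field


# Hypothetical model of the external memory: it holds the network
# parameters, the input data, and the output results, keyed by layer name.
@dataclass
class ExternalMemory:
    weights: dict = field(default_factory=dict)  # layer -> 2D kernel
    inputs: dict = field(default_factory=dict)   # layer -> 2D feature map
    outputs: dict = field(default_factory=dict)  # layer -> 2D result


class ConvolutionUnit:
    """Stand-in for the FPGA convolution calculation unit (assumed behaviour)."""

    def __init__(self):
        self.kernel = None

    def load_parameters(self, kernel):
        # Parameters are loaded before any computation starts.
        self.kernel = kernel

    def compute(self, fmap):
        # Single-channel, stride-1, no-padding 2D convolution in the
        # cross-correlation form commonly used by CNN frameworks.
        kh, kw = len(self.kernel), len(self.kernel[0])
        oh, ow = len(fmap) - kh + 1, len(fmap[0]) - kw + 1
        out = [[0] * ow for _ in range(oh)]
        for i in range(oh):
            for j in range(ow):
                out[i][j] = sum(
                    fmap[i + di][j + dj] * self.kernel[di][dj]
                    for di in range(kh)
                    for dj in range(kw)
                )
        return out


class SchedulingController:
    """Stand-in for the scheduling control unit on the FPGA."""

    def __init__(self, memory, conv_unit):
        self.memory = memory
        self.conv_unit = conv_unit

    def run_layer(self, layer):
        # 1. Ordered parameter loading from external memory.
        self.conv_unit.load_parameters(self.memory.weights[layer])
        # 2. Convolution on the layer's input data.
        result = self.conv_unit.compute(self.memory.inputs[layer])
        # 3. Final result written back to external memory.
        self.memory.outputs[layer] = result
        return result


def cpu_dispatch(controller, layers):
    # The CPU issues one command per layer to the scheduler (bus modelled as a call).
    for layer in layers:
        controller.run_layer(layer)


if __name__ == "__main__":
    mem = ExternalMemory()
    mem.weights["conv1"] = [[1, 0], [0, -1]]
    mem.inputs["conv1"] = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    cpu_dispatch(SchedulingController(mem, ConvolutionUnit()), ["conv1"])
    print(mem.outputs["conv1"])  # [[-4, -4], [-4, -4]]
```

Running the block applies the 2×2 kernel to the 3×3 input and prints `[[-4, -4], [-4, -4]]`. The sketch is strictly sequential; it does not model any pipelining or parallelism that the FPGA design may use.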

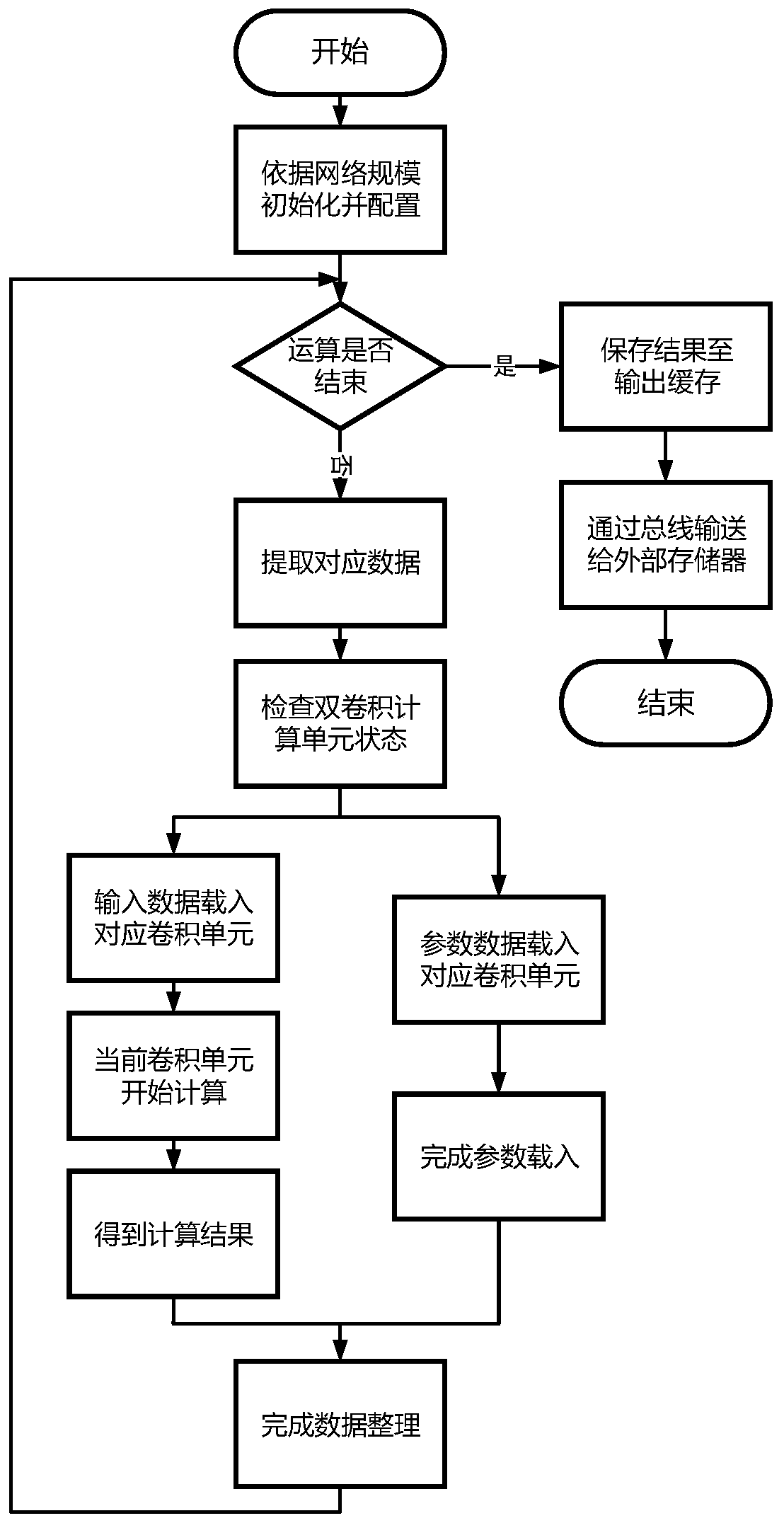

[0032] Figure 3 is a data calculation flowchart of the present invention; the implementation details specifically include the following st...
