A memory-access-intensive algorithm acceleration chip with multiple high-speed serial memory access channels

A high-speed serial memory access technology, applied in the field of memory-intensive algorithm acceleration chips. It addresses the problems of difficult interface implementation, complex system architecture design, and memory access bandwidth and data transmission in acceleration chips. Its effects are good performance, extended memory access bandwidth, and good flexibility.

Examples

Embodiment 1

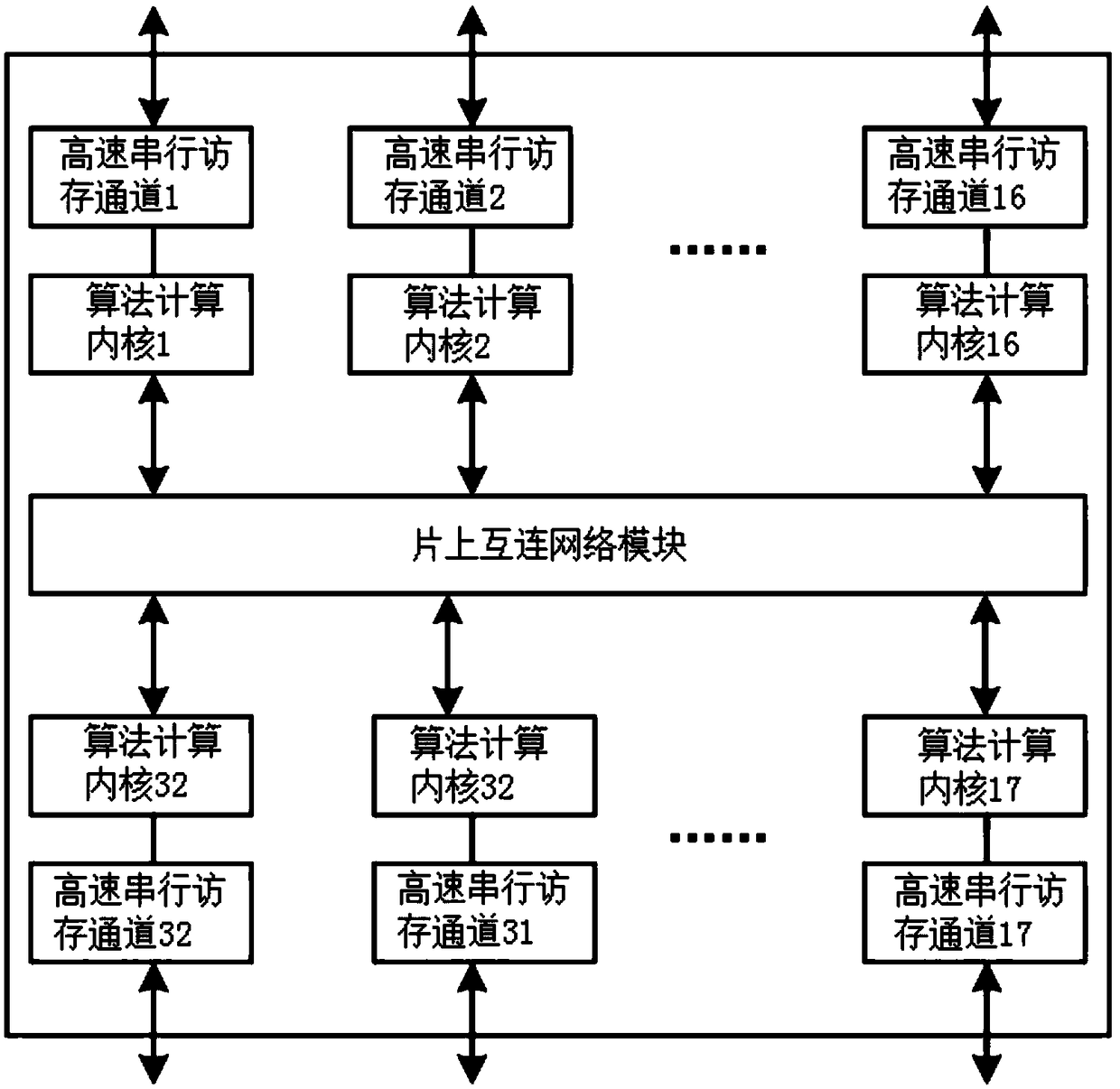

[0032] As shown in Figure 1, a memory-intensive algorithm acceleration chip with multiple high-speed serial memory access channels includes 32 algorithm computing cores, which perform the data processing operations of the algorithm, 32 high-speed serial memory access channels, and an on-chip interconnection network module. The algorithm computing cores and the high-speed serial memory access channels are interconnected through the on-chip interconnection network module, and the high-speed serial memory access channels are connected to off-chip memory chips. In this embodiment, the high-speed serial memory access channels and the algorithm computing cores are tightly coupled and connected one to one, and all 32 algorithm computing cores are connected to the on-chip interconnection network module. Through the on-chip interconnection network module, any algorithm computing core can, through any high-speed serial memory access channel, access memory c...
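The tightly coupled topology of this embodiment can be sketched as a small routing model. This is only an illustrative sketch: the core and channel counts come from the text, while the `Route` type, the `route_access` function, and the rule that a core reaches its own paired channel without crossing the interconnect are assumptions made for the example, not details confirmed by the patent.

```python
from dataclasses import dataclass

NUM_CORES = 32     # from Embodiment 1
NUM_CHANNELS = 32  # from Embodiment 1


@dataclass(frozen=True)
class Route:
    core: int
    channel: int
    via_interconnect: bool  # False when the core uses its own paired channel


def route_access(core: int, channel: int) -> Route:
    """Hypothetical routing rule (an assumption, not the patent's method).

    Core i is paired one to one with channel i. Access to the paired channel
    is modeled as direct; access to any other channel is modeled as passing
    through the on-chip interconnection network.
    """
    if not 0 <= core < NUM_CORES:
        raise ValueError(f"core index out of range: {core}")
    if not 0 <= channel < NUM_CHANNELS:
        raise ValueError(f"channel index out of range: {channel}")
    return Route(core, channel, via_interconnect=(channel != core))


def reachable_pairs() -> int:
    """Count (core, channel) pairs; every core can reach every channel."""
    return sum(1 for c in range(NUM_CORES) for ch in range(NUM_CHANNELS)
               if route_access(c, ch) is not None)
```

In this model `route_access(3, 3)` stays on the paired link, while `route_access(3, 7)` crosses the interconnect, which mirrors the statement that any core can use any channel.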

Embodiment 2

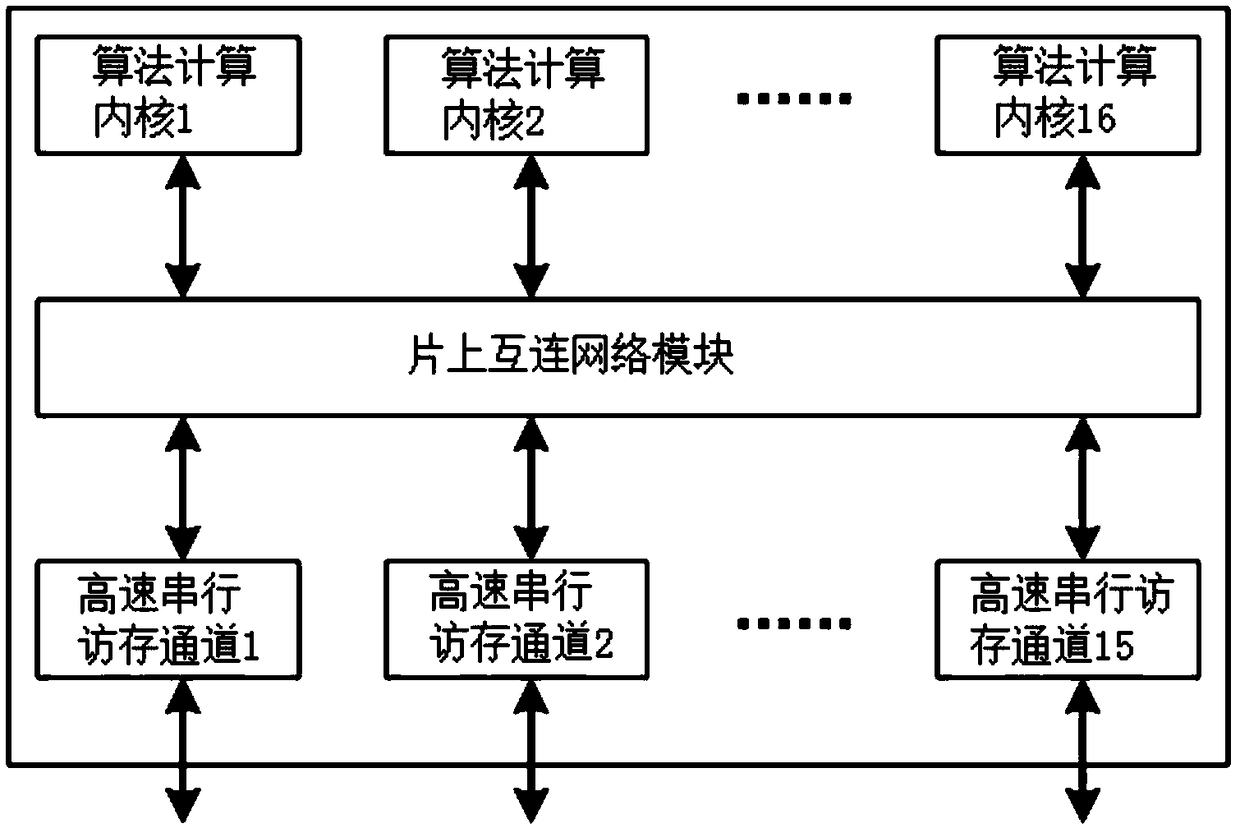

[0038] As shown in Figure 2, a memory-intensive algorithm acceleration chip with multiple high-speed serial memory access channels includes 16 algorithm computing cores, which perform the data processing operations of the algorithm, 15 high-speed serial memory access channels, and an on-chip interconnection network module. The algorithm computing cores and the high-speed serial memory access channels are interconnected through the on-chip interconnection network module, and the high-speed serial memory access channels are connected to off-chip memory chips. In this embodiment, the high-speed serial memory access channels are loosely coupled with the algorithm computing cores: all 16 algorithm computing cores are connected to the on-chip interconnection network module, and all 15 high-speed serial memory access channels are connected to the on-chip interconnection network module. Through the on-chip interconnection network module, any algorithm co...
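Because the 15 channels in this embodiment are not paired with particular cores, some rule has to decide which channel serves a given request. The sketch below assumes simple address interleaving for that purpose. The interleaving scheme, the 64-byte granularity, and the names `channel_for_address` and `channel_load` are hypothetical choices for illustration; the source text does not state how channels are selected.

```python
from typing import Iterable, List

NUM_CORES = 16         # from Embodiment 2
NUM_CHANNELS = 15      # from Embodiment 2
INTERLEAVE_BYTES = 64  # hypothetical interleaving granularity (assumption)


def channel_for_address(addr: int) -> int:
    """Map a byte address to a channel by block interleaving (assumed scheme)."""
    if addr < 0:
        raise ValueError("address must be non-negative")
    return (addr // INTERLEAVE_BYTES) % NUM_CHANNELS


def channel_load(addresses: Iterable[int]) -> List[int]:
    """Count how many requests land on each channel for a stream of addresses."""
    counts = [0] * NUM_CHANNELS
    for addr in addresses:
        counts[channel_for_address(addr)] += 1
    return counts


# A sequential sweep over 30 consecutive blocks spreads evenly over 15 channels.
sweep = range(0, INTERLEAVE_BYTES * NUM_CHANNELS * 2, INTERLEAVE_BYTES)
loads = channel_load(sweep)
```

Under this assumed mapping the requesting core plays no part in channel selection, which is one way to read "loosely coupled": any of the 16 cores issuing the same address would be served by the same channel.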
