Video fine structuring method based on multi-feature fusion

A video fine-structuring method based on multi-feature fusion, applied in the fields of instruments, character and pattern recognition, and computer components. It addresses the difficulty of fully taking video frame information into account, with the effects of improving computing efficiency, reducing computational complexity, and increasing reliability.

Embodiment

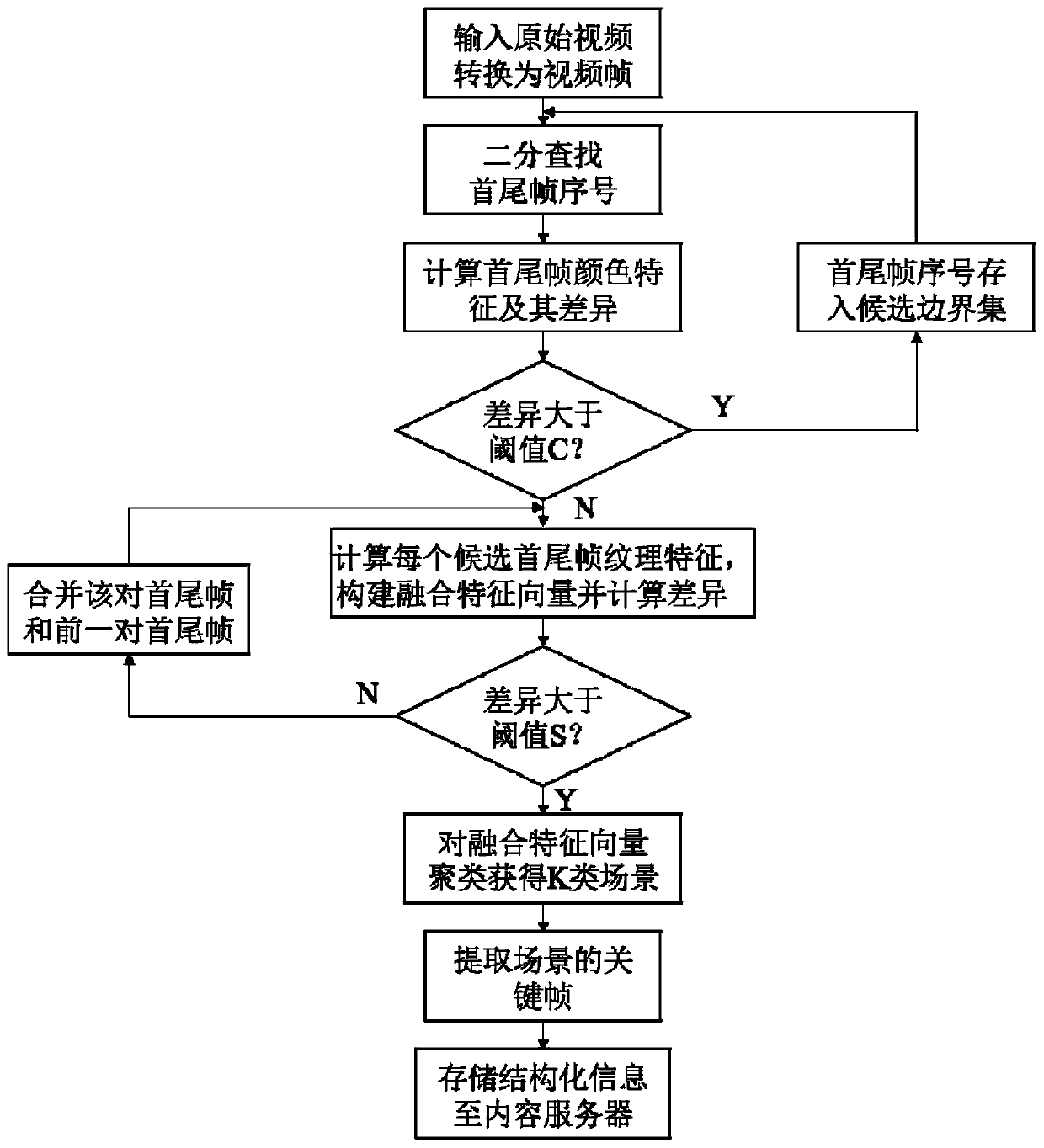

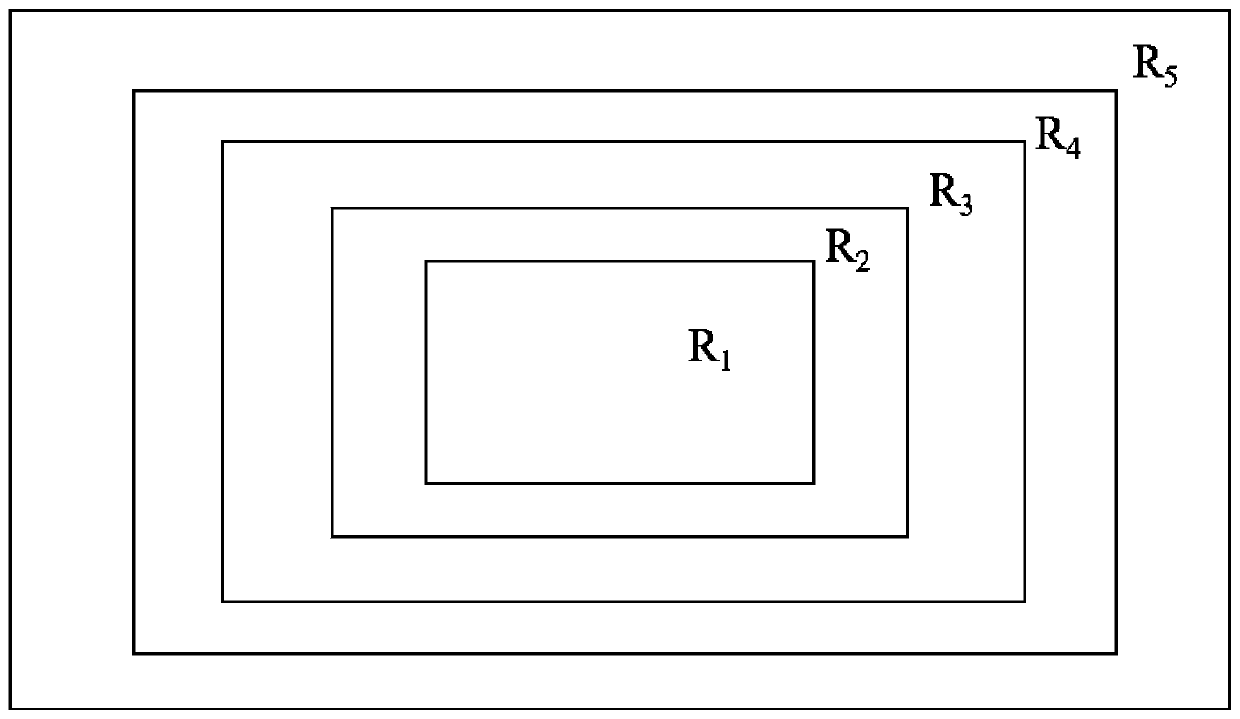

[0061] (1) Preliminary detection of video shot boundaries: HSV color space information, which closely matches the human visual system, is used to perform preliminary shot boundary detection on the frames and to obtain the set of frames at the start and end of each boundary, referred to as the set of first and last frames. A search method is used to select the first and last frames of a boundary, which reduces the computational complexity of boundary selection and shortens the running time. The color features of the first and last frames of the boundary are calculated; if the difference between them is greater than the threshold, the search continues, otherwise it stops;
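The threshold test in step (1) can be sketched as follows. This is only an illustration under stated assumptions: the text here does not name the search method or the distance measure, so the bisection strategy, the L1 histogram distance, and the names `hist_difference` and `locate_boundary` are all hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def hist_difference(h1: Sequence[float], h2: Sequence[float]) -> float:
    """L1 distance between two color histograms (assumed metric)."""
    return sum(abs(a - b) for a, b in zip(h1, h2))


def locate_boundary(
    features: List[Sequence[float]], lo: int, hi: int, threshold: float
) -> Optional[Tuple[int, int]]:
    """Narrow the frame range [lo, hi] down to two adjacent frames.

    Assumption: a bisection search. If the first and last frames of the
    range differ by more than the threshold, keep searching inside the
    range; otherwise stop and report that no boundary was found.
    """
    if hist_difference(features[lo], features[hi]) <= threshold:
        return None  # first and last frames are similar: stop
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if hist_difference(features[lo], features[mid]) > threshold:
            hi = mid  # the change happens in the first half
        else:
            lo = mid  # otherwise look in the second half
    return lo, hi
```

With per-frame histograms in `features`, a call such as `locate_boundary(features, 0, len(features) - 1, threshold)` compares only a logarithmic number of frame pairs instead of every adjacent pair, which is one way the reduced complexity described above could be obtained.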

[0062] The HSV features are calculated as follows:

[0063] (1.1) The frame image is converted from RGB color information to HSV color information;

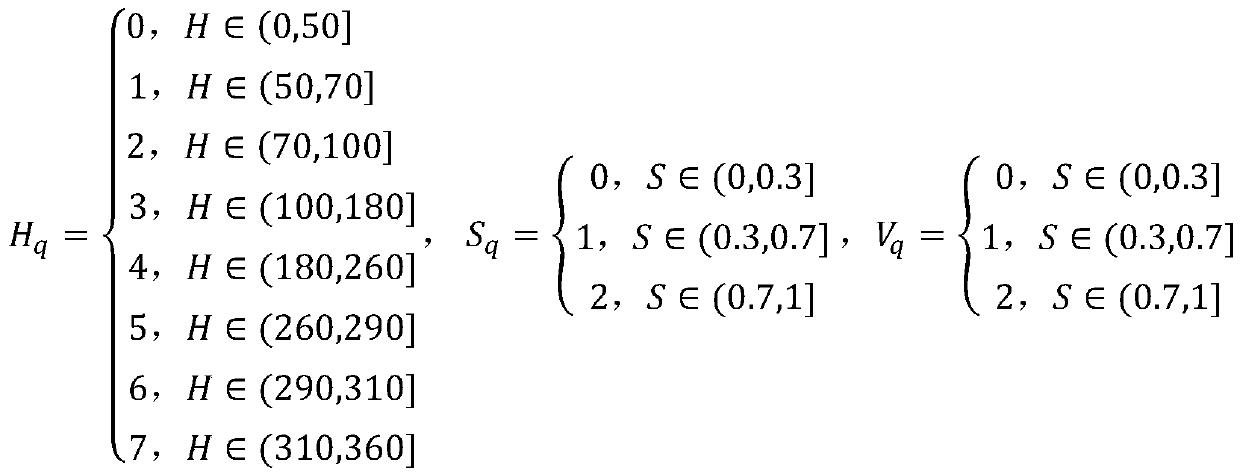
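As a rough illustration of step (1.1), the conversion for a single pixel can be sketched with Python's standard `colorsys` module. This uses the standard RGB-to-HSV conversion and is not taken from the patent's own formula, which appears only in the figure; the function name `rgb_to_hsv_pixel` and the choice of degrees for hue are assumptions.

```python
import colorsys
from typing import Tuple


def rgb_to_hsv_pixel(r: int, g: int, b: int) -> Tuple[float, float, float]:
    """Convert one 8-bit RGB pixel to HSV.

    Returns hue in degrees [0, 360), saturation in [0, 1] and value in
    [0, 1]. The scaling to degrees is an assumption made for readability.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s, v
```

For whole frames, an array-based conversion from an image library would normally be preferred; the per-pixel form is shown only to make the mapping explicit.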

[0064] (1.2) The three HSV components are quantized non-uniformly into 8, 3 and 3 levels, respectively, where:

[00...
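The quantization intervals are not visible in this excerpt, so the following is only a sketch of what an 8/3/3-level non-uniform quantization could look like. The bin edges in `H_EDGES` and `SV_EDGES` are assumed values from a commonly used scheme, not the patent's, and `quantize_hsv` is a hypothetical name.

```python
from bisect import bisect_right
from typing import Tuple

# ASSUMED bin edges (not from the source text). Hue is in degrees; hues of
# 316 and above wrap around into level 0 together with hues below 20.
H_EDGES = [20, 40, 75, 155, 190, 270, 295, 316]
# ASSUMED edges for saturation and value, both in [0, 1].
SV_EDGES = [0.2, 0.7]


def quantize_hsv(h: float, s: float, v: float) -> Tuple[int, int, int]:
    """Map (H, S, V) to quantization levels in 0..7, 0..2 and 0..2."""
    h_level = bisect_right(H_EDGES, h % 360.0) % 8  # 8 hue levels, wrapping
    s_level = bisect_right(SV_EDGES, s)             # 3 saturation levels
    v_level = bisect_right(SV_EDGES, v)             # 3 value levels
    return h_level, s_level, v_level
```

With 8, 3 and 3 levels there are 8 × 3 × 3 = 72 possible level combinations, so a per-frame color histogram built on these levels stays small regardless of the intervals actually chosen.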
