Visual positioning method based on ground texture, chip and mobile robot

A mobile robot and visual positioning technology, applied in processor architecture/configuration, instrumentation, image data processing, etc., can solve the problems of fewer feature points, lower reliability, difficulty in feature selection and extraction, etc., to improve matching operation speed, Reduce calculation time and calculation amount, suppress the effect of natural background

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

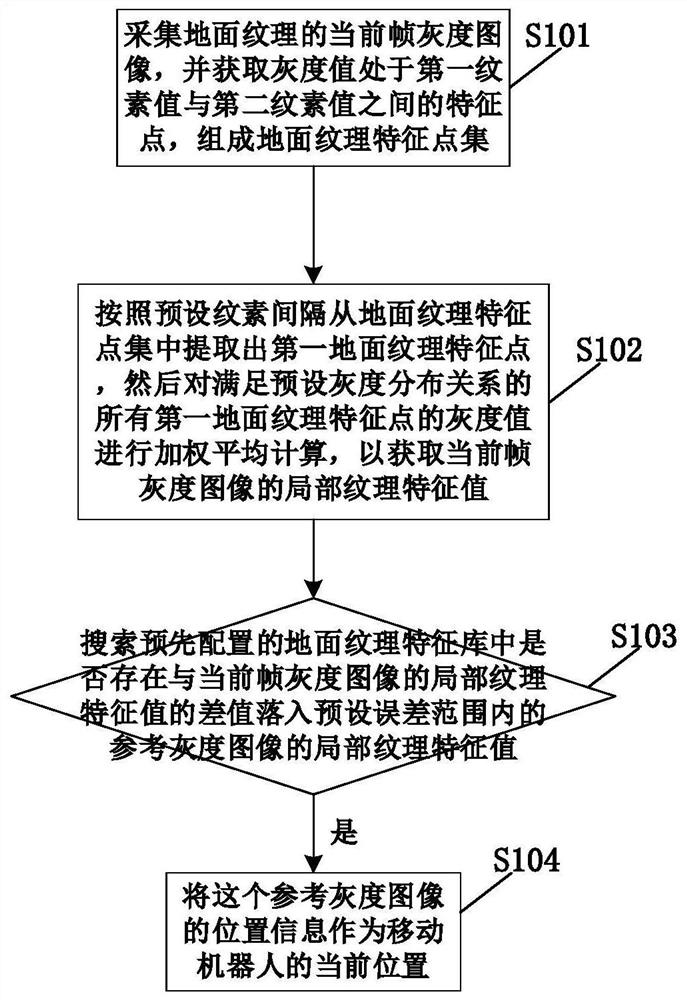

[0016] The technical solutions in the embodiments of the present invention will be described in detail below with reference to the drawings in the embodiments of the present invention.

[0017] It should be noted that relative terms such as the terms "first" and "second" are only used to distinguish one entity or operation from another entity or operation, and do not necessarily require or imply any relationship between these entities or operations. There is no such actual relationship or order between them. Furthermore, the term "comprises", "comprises" or any other variation thereof is intended to cover a non-exclusive inclusion such that a process, method, article, or apparatus comprising a set of elements includes not only those elements, but also includes elements not expressly listed. other elements of or also include elements inherent in such a process, method, article, or device. Without further limitations, an element defined by the phrase "comprising a ..." does not...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com