Human body three-dimensional joint point prediction method based on grouping regression model

A regression model and prediction method, applied in the fields of character and pattern recognition, instruments, and computing. It addresses problems such as high monetary and time costs, predictions that do not conform to the movement characteristics of the limbs, and final results that are unrealistic and unreliable. Its reported effects are avoiding internal confusion, deepening influence, and improving robustness.


Examples

Embodiment 1

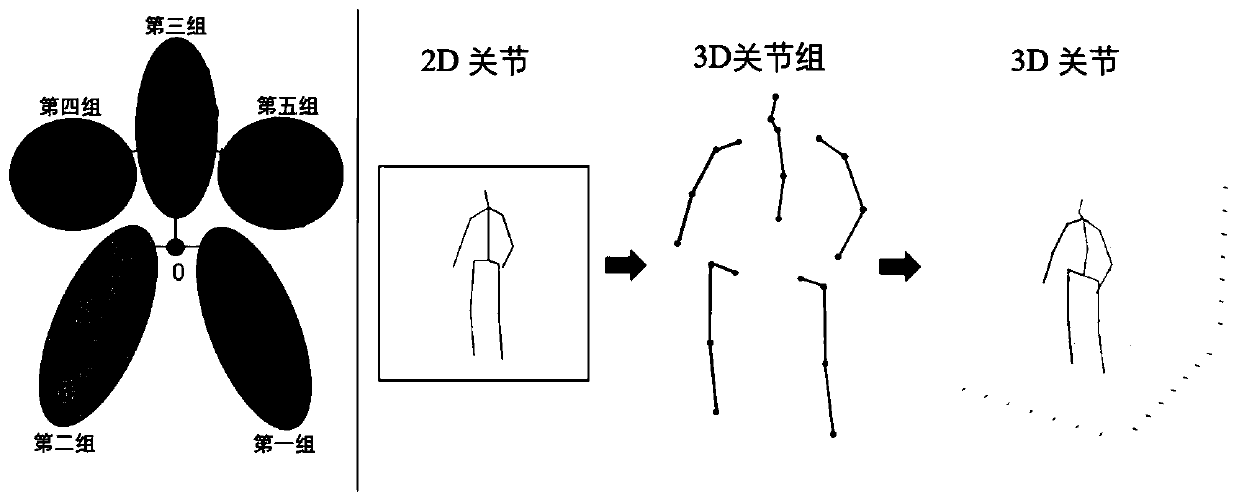

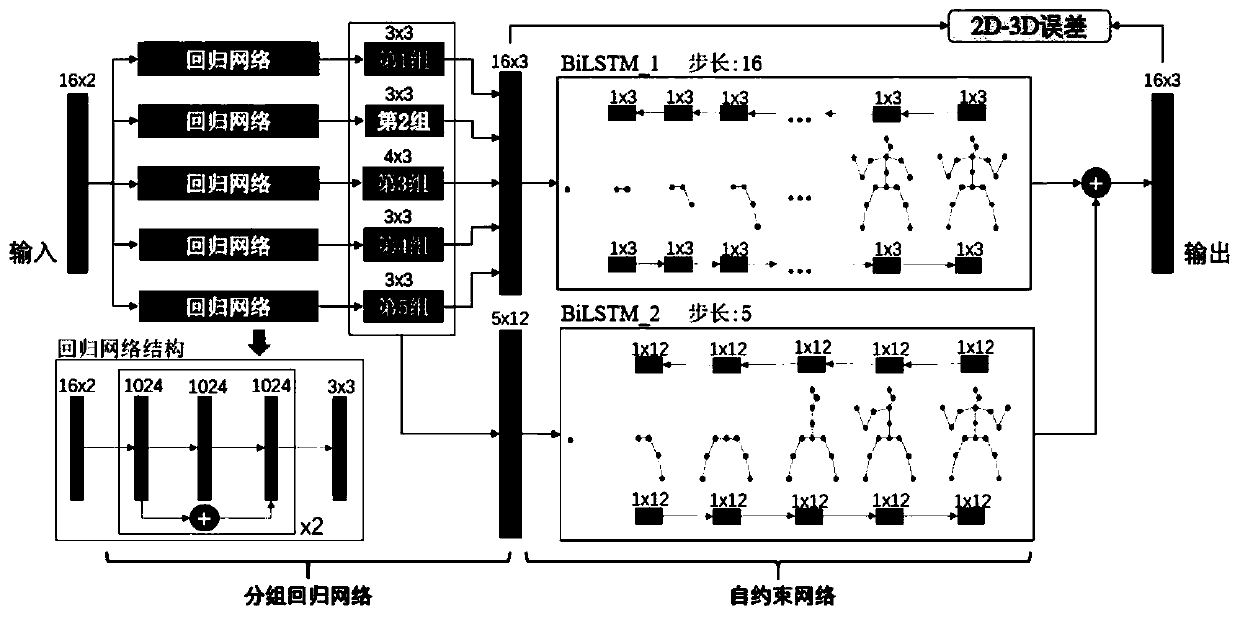

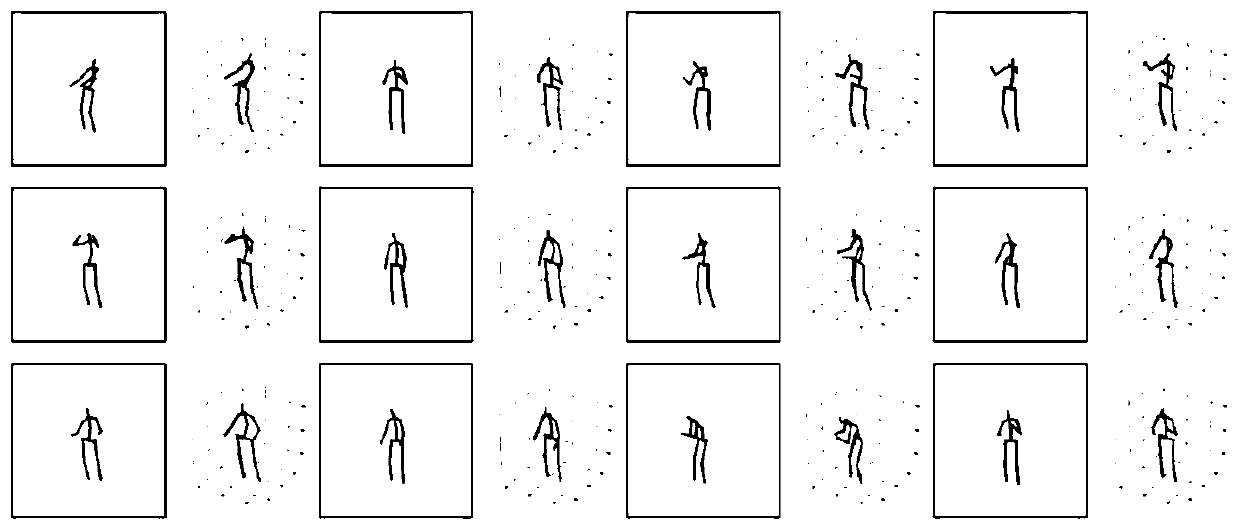

[0075] In this embodiment, a 2D joint detector is first used to locate the main joints of the human body in the image, and the two-dimensional joint positions are then used to estimate the three-dimensional pose of the human body. The process is shown in Figure 1. This embodiment adopts a more sophisticated 2D-to-3D regression model, implemented in TensorFlow. A single forward and backward pass takes 45 ms on a GTX 1080 graphics card. The model is evaluated on two large human pose datasets, Human3.6M and MPII.
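The two-stage idea above (detect 2D joints, then regress 3D joints from them) can be illustrated with a minimal sketch. This is not the patent's model: the 2D detector is out of scope, and the lifting step is assumed here to be a plain least-squares linear map rather than the grouped regression network, whose architecture is not given in this excerpt. Root-centering the 2D input is likewise an assumption, and the names `root_center_2d`, `fit_linear_lifter`, `predict`, and `make_toy_data` are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[Vector]


def root_center_2d(joints_2d: Sequence[Tuple[float, float]], root: int = 0) -> Vector:
    """Express 2D joints relative to the root joint and flatten them to [x, y, x, y, ...].

    The root joint itself is dropped because its relative position is always (0, 0).
    """
    rx, ry = joints_2d[root]
    flat: Vector = []
    for i, (x, y) in enumerate(joints_2d):
        if i != root:
            flat.extend([x - rx, y - ry])
    return flat


def solve(a: Matrix, b: Matrix) -> Matrix:
    """Solve A @ W = B for W by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + rhs[:] for row, rhs in zip(a, b)]  # augmented matrix [A | B]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]


def fit_linear_lifter(inputs: Matrix, targets: Matrix) -> Matrix:
    """Least-squares fit of W minimising ||[X 1] W - Y||^2 via the normal equations."""
    xs = [x + [1.0] for x in inputs]  # append a constant bias feature
    d, k = len(xs[0]), len(targets[0])
    xtx = [[sum(x[i] * x[j] for x in xs) for j in range(d)] for i in range(d)]
    xty = [[sum(x[i] * y[c] for x, y in zip(xs, targets)) for c in range(k)] for i in range(d)]
    return solve(xtx, xty)


def predict(weights: Matrix, x: Vector) -> Vector:
    """Map one flattened 2D pose to a flattened 3D pose [X, Y, Z, X, Y, Z, ...]."""
    xb = x + [1.0]
    return [sum(xb[i] * weights[i][c] for i in range(len(xb))) for c in range(len(weights[0]))]


def make_toy_data(n_samples: int, n_joints: int, seed: int = 0):
    """Hypothetical synthetic data: random 2D poses whose 3D targets follow a hidden linear rule."""
    rng = random.Random(seed)
    d = 2 * (n_joints - 1) + 1  # root-centred 2D features plus bias
    true_w = [[rng.uniform(-1.0, 1.0) for _ in range(3 * n_joints)] for _ in range(d)]
    inputs, targets = [], []
    for _ in range(n_samples):
        pose = [(rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)) for _ in range(n_joints)]
        x = root_center_2d(pose)
        inputs.append(x)
        targets.append(predict(true_w, x))
    return inputs, targets, true_w


if __name__ == "__main__":
    X, Y, _ = make_toy_data(n_samples=50, n_joints=3)
    W = fit_linear_lifter(X, Y)
    print(predict(W, root_center_2d([(0.1, 0.2), (0.5, -0.3), (-0.4, 0.7)])))
```

A learned lifting network, such as the TensorFlow model in this embodiment, would replace the single linear map with trainable nonlinear layers; the linear version is used here only because it can be fitted in closed form and checked exactly on synthetic data.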

[0076] Human3.6M is currently the largest public dataset for 3D human pose estimation. It consists of 3.6 million images of professional actors performing 15 everyday activities, such as walking, eating, sitting, making phone calls, and taking part in discussions, and it provides 2D and 3D ground-truth positions of the human joints.
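This excerpt does not state which error measure is reported on Human3.6M. A widely used one for this dataset is the mean per-joint position error (MPJPE): the average Euclidean distance between predicted and ground-truth 3D joints after both poses are aligned at a root joint. The sketch below assumes that root-aligned variant (some evaluation protocols additionally apply a Procrustes alignment, which is not shown), and the function name `mpjpe` is hypothetical.

```python
import math
from typing import Sequence, Tuple

Joint3D = Tuple[float, float, float]


def mpjpe(pred: Sequence[Joint3D], gt: Sequence[Joint3D], root: int = 0) -> float:
    """Mean per-joint position error after aligning both poses at the root joint.

    The result is in the units of the input; Human3.6M errors are usually reported in millimetres.
    """
    if len(pred) != len(gt) or not pred:
        raise ValueError("poses must be non-empty and have the same number of joints")
    pr, gr = pred[root], gt[root]
    total = 0.0
    for p, g in zip(pred, gt):
        p_rel = [pc - rc for pc, rc in zip(p, pr)]  # predicted joint relative to predicted root
        g_rel = [gc - rc for gc, rc in zip(g, gr)]  # true joint relative to true root
        total += math.dist(p_rel, g_rel)
    return total / len(pred)
```

Because both poses are expressed relative to their own root, a prediction that is correct up to a global translation scores zero.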

...
