Cross-modal retrieval method based on adversarial learning and asymmetric hashing

A cross-modal retrieval technique using asymmetric hashing, applied in the field of cross-modal retrieval. It addresses two problems: the learned hash codes are not optimal, and the features of each modality's data cannot be fully extracted.

Embodiment Construction

[0051] To better illustrate the cross-modal retrieval method based on adversarial learning and asymmetric hashing proposed in the present invention, the invention is further described below with reference to the accompanying drawings and specific embodiments, taking a 224×224-pixel image and its corresponding text description as an example.

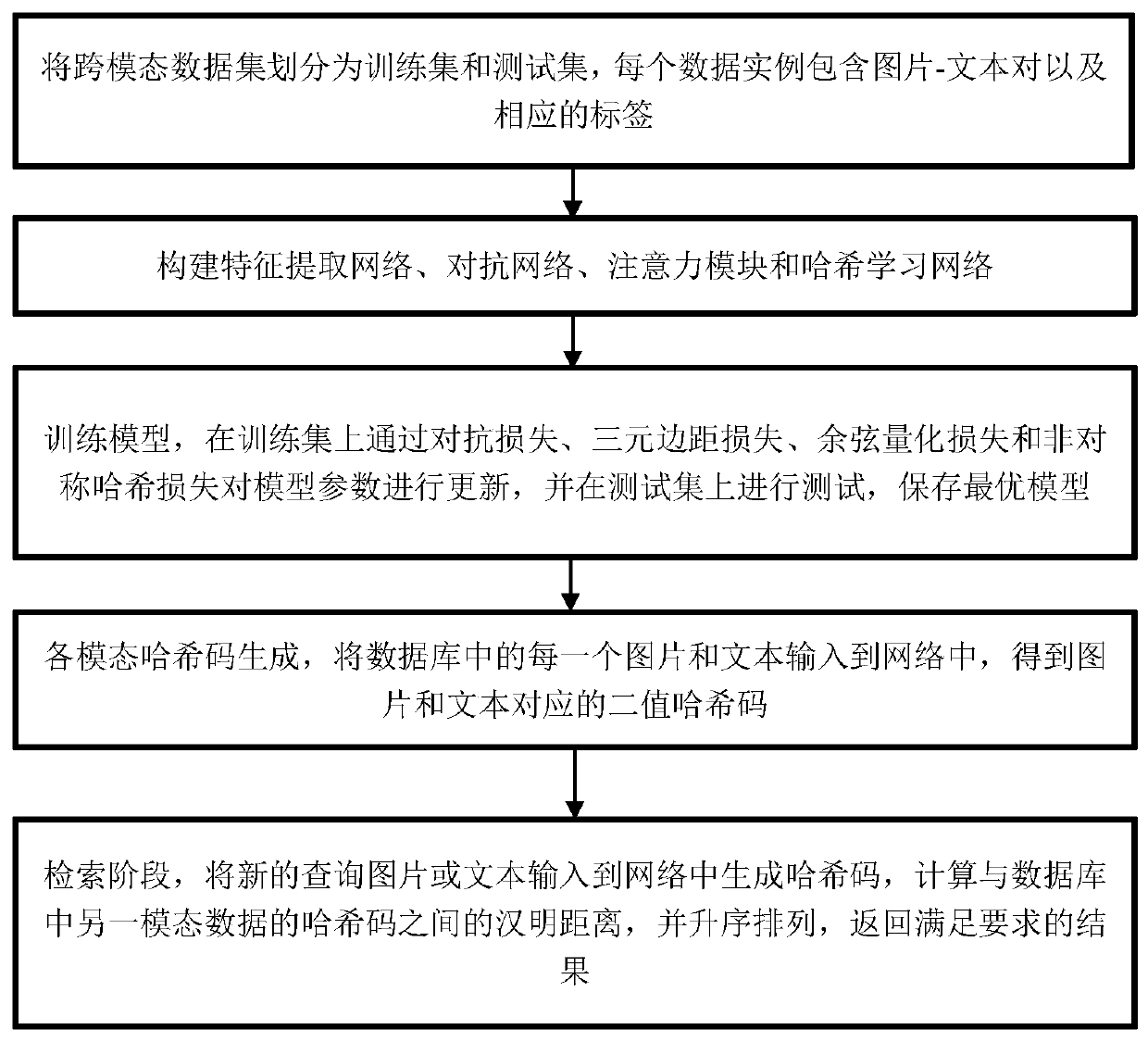

[0052] Figure 1 is the overall flowchart of the present invention, which comprises five parts: data preprocessing, model framework initialization, model training, hash code generation for the data of each modality, and retrieval.
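The retrieval stage at the end of this flow can be illustrated with a minimal sketch. It assumes that retrieval ranks database items by Hamming distance between binary hash codes, which is the common convention for hashing-based retrieval but is not stated in the text above. The function names and the 4-bit codes are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence, Tuple


def hamming_distance(a: Sequence[int], b: Sequence[int]) -> int:
    """Count the positions at which two equal-length binary codes differ."""
    if len(a) != len(b):
        raise ValueError("hash codes must have the same length")
    return sum(x != y for x, y in zip(a, b))


def rank_by_hamming(
    query_code: Sequence[int],
    database_codes: Sequence[Sequence[int]],
) -> List[Tuple[int, int]]:
    """Return (database index, distance) pairs, nearest first.

    Ties are broken by database index so the ordering is deterministic.
    """
    scored = [
        (hamming_distance(query_code, code), idx)
        for idx, code in enumerate(database_codes)
    ]
    scored.sort()
    return [(idx, dist) for dist, idx in scored]


# Hypothetical example: a text query code ranked against three image codes.
query = [1, 0, 1, 1]
database = [
    [1, 0, 1, 1],  # identical to the query
    [0, 1, 0, 0],  # every bit differs
    [1, 0, 0, 1],  # one bit differs
]
ranking = rank_by_hamming(query, database)
```

In a cross-modal setting the query code and the database codes would come from different modalities (for example, a text query against image codes); the ranking step itself does not depend on which modality produced each code.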

[0053] Step 1. Data preprocessing. Divide the cross-modal dataset into a training set and a test set. Each data instance contains an image-text pair and its corresponding labels.
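The split described in Step 1 can be sketched as follows. This is only an illustration under stated assumptions: the `Instance` fields, the `split_dataset` helper, the random shuffling, the seed, and the test-set size are all hypothetical, since the text does not specify how the split is performed.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Instance:
    """One data instance: an image-text pair plus its labels (hypothetical layout)."""
    image_path: str
    text: str
    labels: List[int]


def split_dataset(
    instances: Sequence[Instance],
    test_size: int,
    seed: int = 0,
) -> Tuple[List[Instance], List[Instance]]:
    """Shuffle a copy of the instances and return (training set, test set)."""
    if not 0 <= test_size <= len(instances):
        raise ValueError("test_size must be between 0 and the dataset size")
    shuffled = list(instances)
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    return shuffled[test_size:], shuffled[:test_size]


# Hypothetical toy dataset of ten image-text pairs with one label each.
data = [Instance(f"img_{i}.jpg", f"caption {i}", [i % 2]) for i in range(10)]
train_set, test_set = split_dataset(data, test_size=2)
```

Each instance keeps its image, text, and labels together, so the pairing between modalities survives the shuffle.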

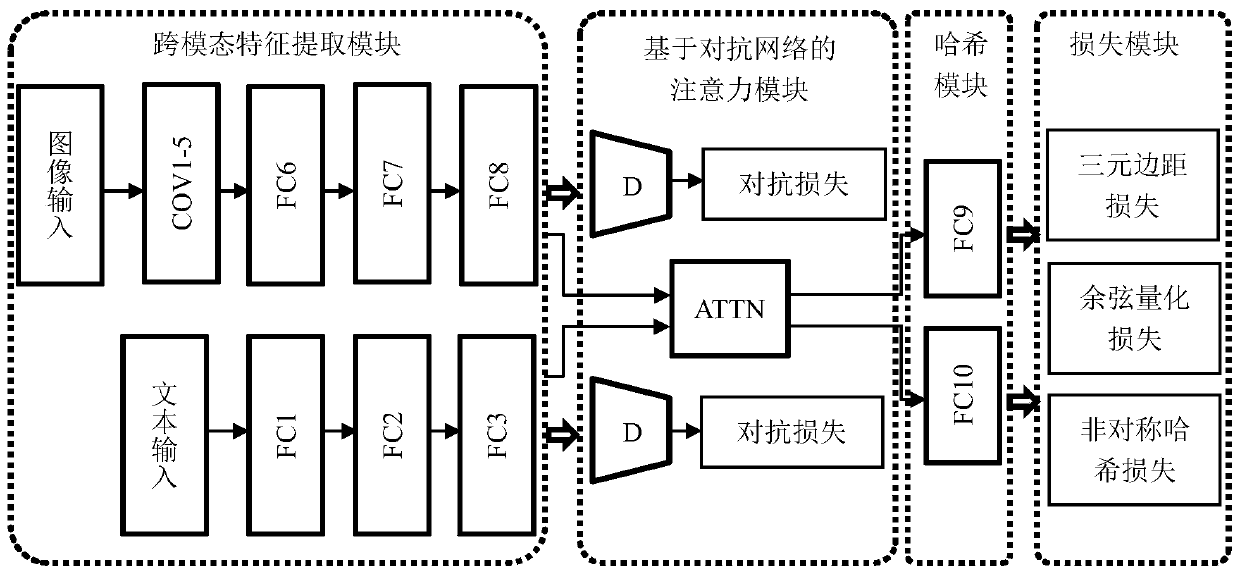

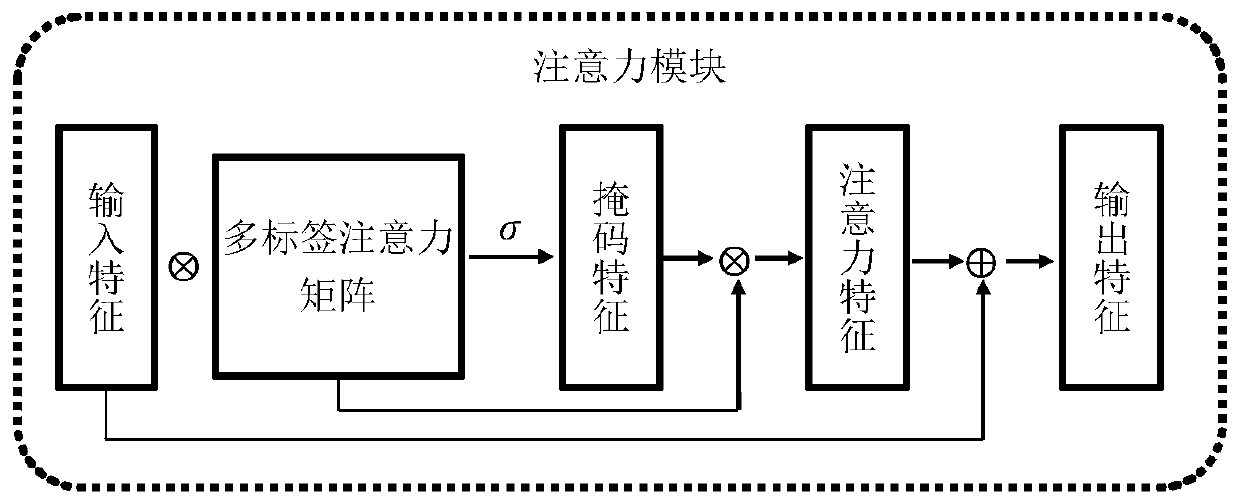

[0054] Step 2. Initialize the model framework. Figure 2 shows the model framework designed in the present invention, which includes a cross-modal feature extraction module, an attent...
