Multi-camera multi-target tracking method

A multi-camera multi-target tracking technique that addresses the problems of large cumulative error, low fusion accuracy, and low camera resolution, so as to improve efficiency and effectiveness, ensure real-time performance, and improve optimization speed.

Examples

Embodiment 1

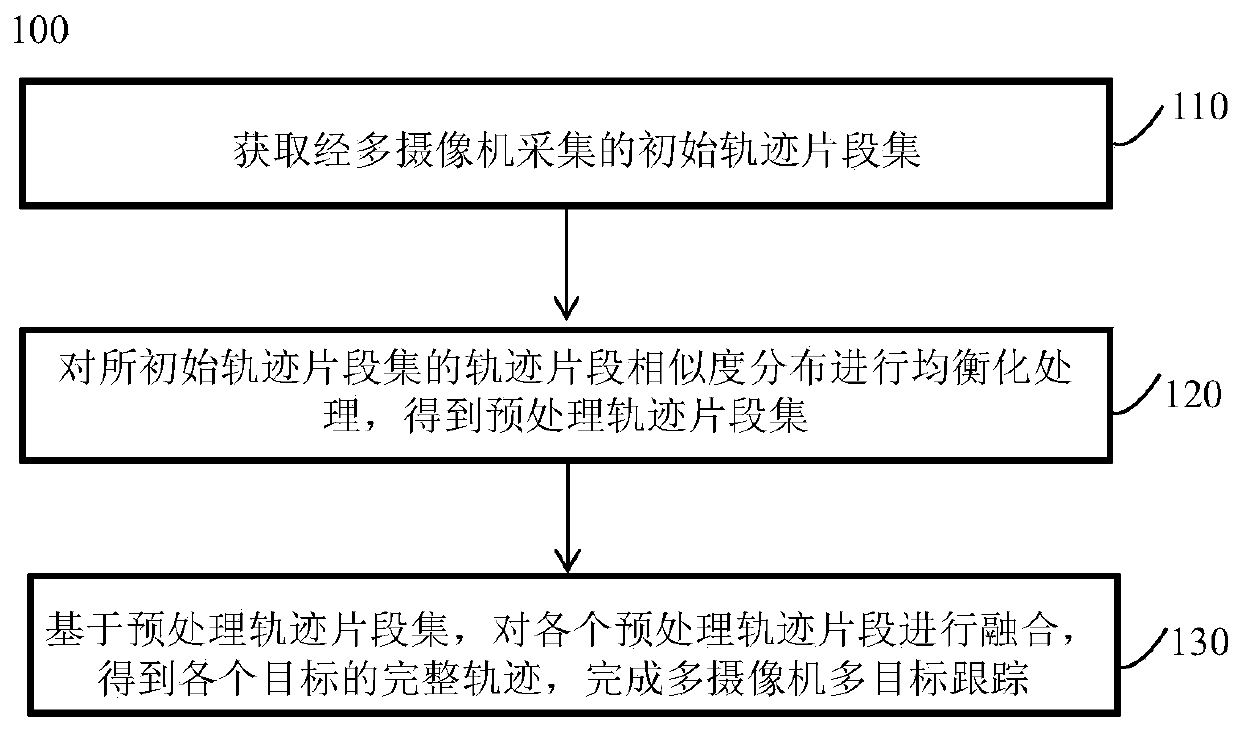

[0042] A multi-camera multi-target tracking method 100, as shown in Figure 1, includes:

[0043] Step 110, obtaining the initial trajectory segment set collected by multiple cameras;

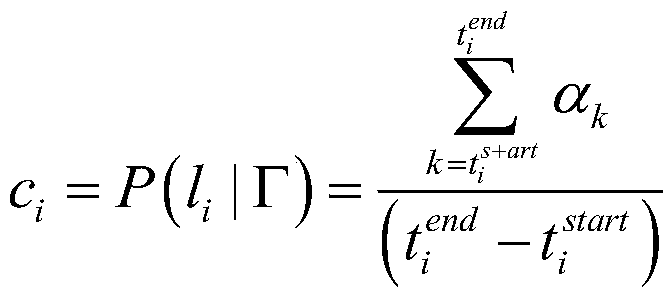

[0044] Step 120, performing equalization processing on the trajectory segment similarity distribution of the initial trajectory segment set to obtain a preprocessed trajectory segment set;

[0045] Step 130, based on the preprocessed trajectory segment set, fusing the preprocessed trajectory segments to obtain the complete trajectory of each target, thereby completing multi-camera multi-target tracking.
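
Steps 110 to 130 can be illustrated with a minimal sketch. The source text available here does not specify how trajectory segments are represented, how similarity is measured, how the equalization is carried out, or which fusion algorithm is used, so everything below is an assumption made for illustration: each segment is a dict with a hypothetical `feature` vector, similarity is cosine similarity, equalization is a rank-based (empirical CDF) remapping of the pairwise similarities, and fusion is a simple threshold merge with union-find. It is not the patented procedure.

```python
from bisect import bisect_right
from itertools import combinations
from math import sqrt


def cosine(a, b):
    """Cosine similarity of two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = sqrt(sum(x * x for x in a))
    nb = sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def pairwise_similarity(segments):
    """Similarity for every pair of segments, keyed by index pair (i, j), i < j."""
    return {
        (i, j): cosine(segments[i]["feature"], segments[j]["feature"])
        for i, j in combinations(range(len(segments)), 2)
    }


def equalize(sims):
    """Assumed stand-in for Step 120: map each similarity to its empirical
    CDF value, so the remapped scores are spread evenly over (0, 1]."""
    ordered = sorted(sims.values())
    n = len(ordered)
    return {pair: bisect_right(ordered, s) / n for pair, s in sims.items()}


def fuse(num_segments, eq_sims, threshold):
    """Assumed stand-in for Step 130: merge every pair whose equalized
    similarity reaches the threshold, using union-find."""
    parent = list(range(num_segments))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path compression
            x = parent[x]
        return x

    for (i, j), s in eq_sims.items():
        if s >= threshold:
            parent[find(i)] = find(j)

    groups = {}
    for i in range(num_segments):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


def track(segments, threshold=0.8):
    """Step 110 input -> Step 120 equalization -> Step 130 fusion."""
    sims = pairwise_similarity(segments)
    return fuse(len(segments), equalize(sims), threshold)


# Hypothetical initial trajectory segment set: two targets seen by two cameras.
segments = [
    {"camera": 1, "feature": [1.0, 0.1]},
    {"camera": 2, "feature": [0.9, 0.2]},
    {"camera": 1, "feature": [0.1, 1.0]},
    {"camera": 2, "feature": [0.2, 0.9]},
]
groups = track(segments, threshold=0.8)
```

Because the equalized scores are quantiles, the threshold in this sketch behaves like "merge the top 20% most similar pairs" rather than a cut on raw similarity. The sketch also ignores constraints a real system would need, such as forbidding a merge of two segments that overlap in time on the same camera.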

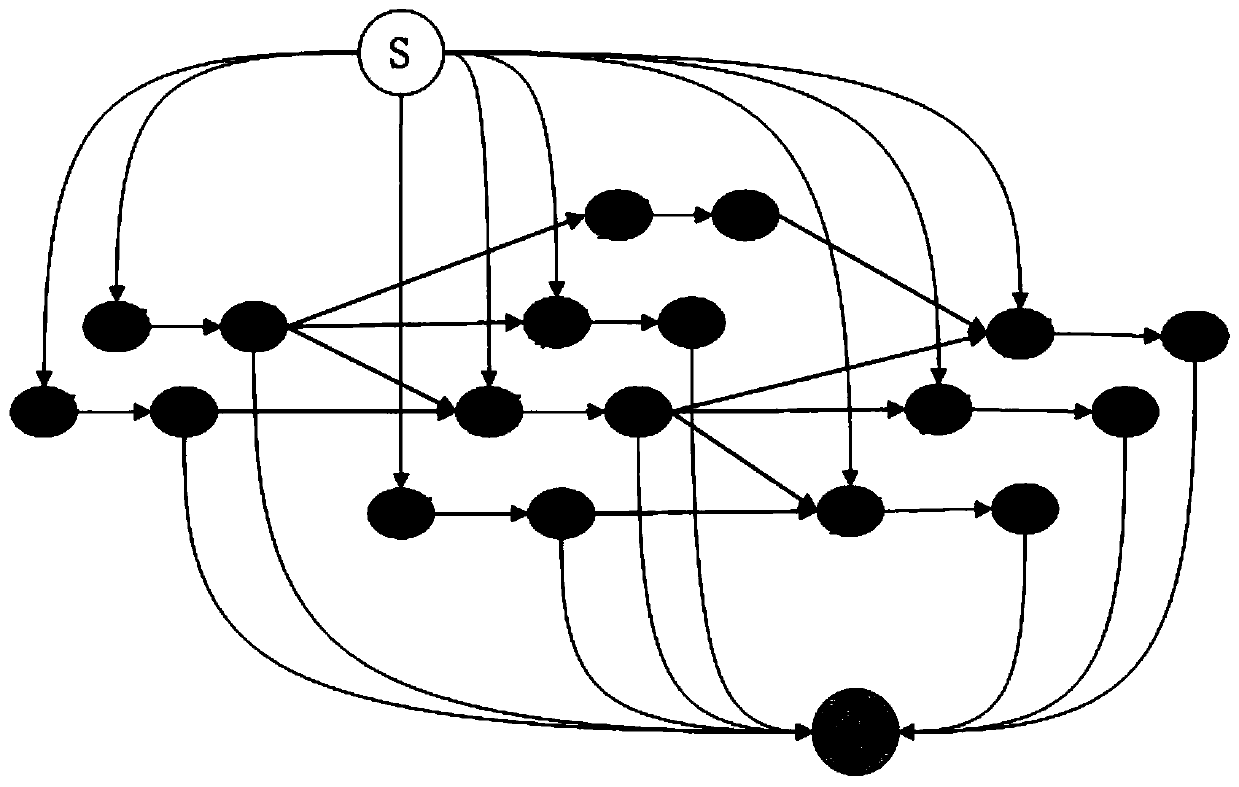

[0046] This embodiment adopts a one-step approach, but it differs from existing methods that fuse directly on image data. The present invention pools the trajectory segments from all cameras and fuses them together, so that the fusion is optimized globally. If there is an error in single-camera multi-target tracking, the error will be further amplified in the subseque...

Embodiment 2

[0101] A storage medium storing instructions which, when read by a computer, cause the computer to execute any one of the multi-camera multi-target tracking methods described above.

[0102] The relevant technical solutions are the same as those in Embodiment 1, and will not be repeated here.
