Video loop filter based on deep convolutional network

A loop filtering technology based on deep convolution, applied in the field of computer vision, that achieves high reconstruction quality, improves accuracy, and reduces the number of bits.

Embodiment Construction

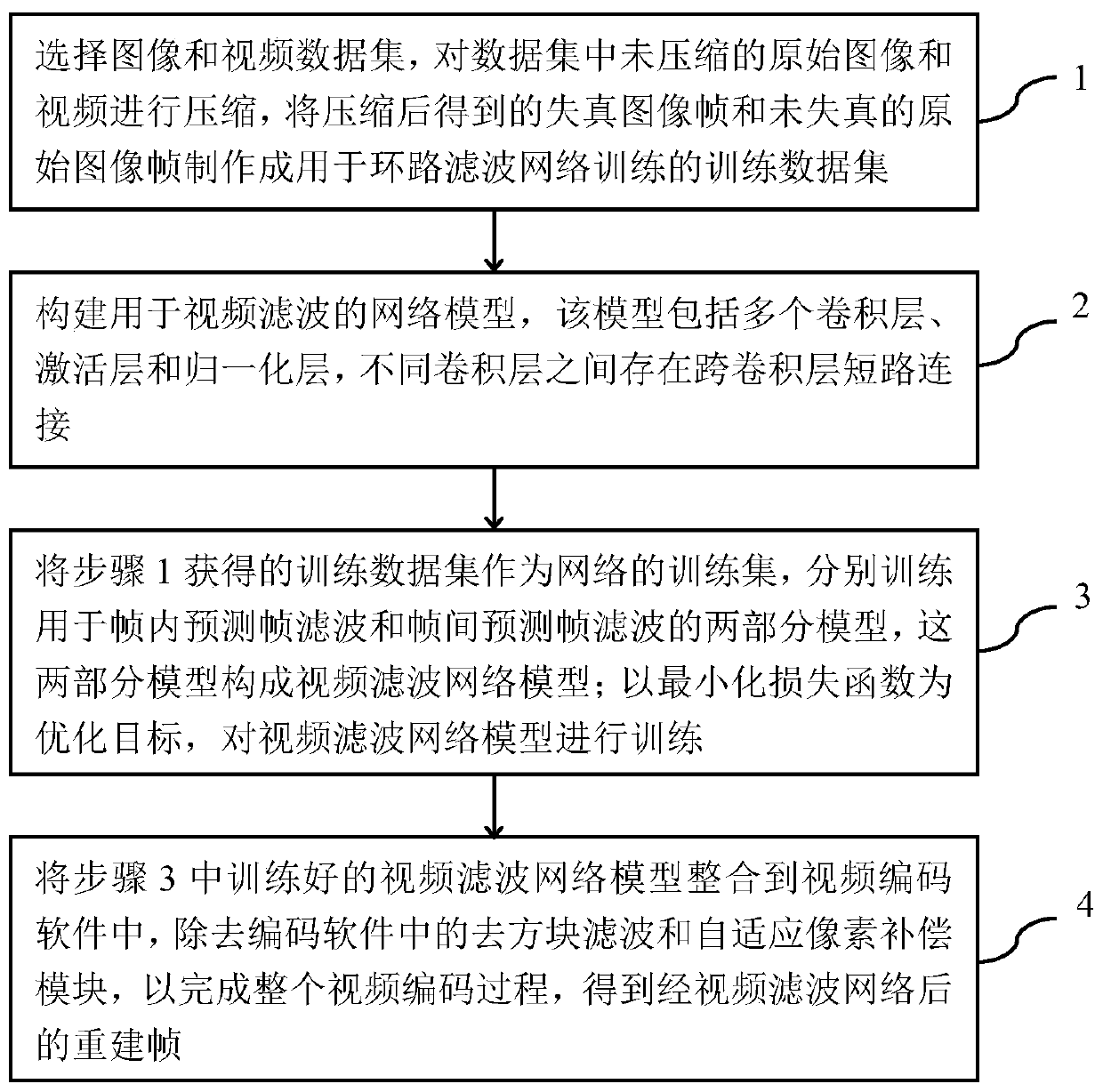

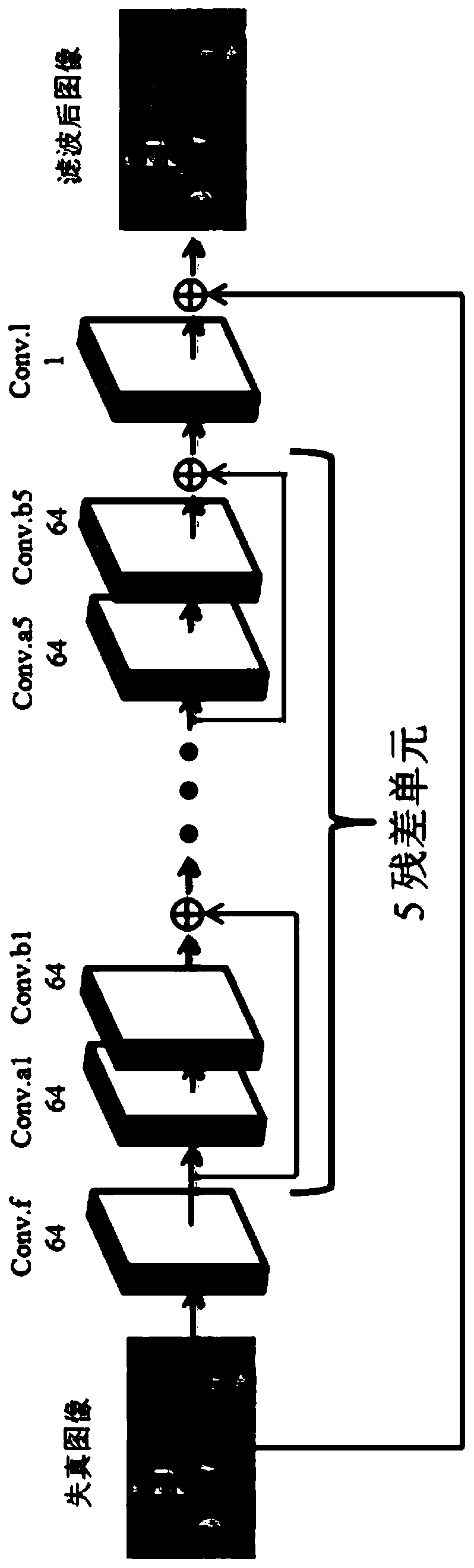

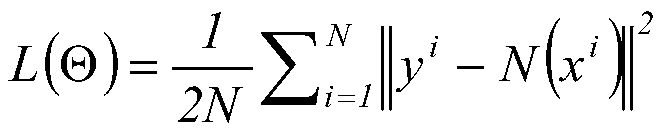

[0017] The technical solution of the present invention will be described in detail below in conjunction with the accompanying drawings and embodiments.

[0018] Step 1. Because intra-predicted frames and inter-predicted frames have different distortion characteristics, this embodiment uses two different data sets to build separate training sets for the intra-frame prediction network and the inter-frame prediction network.
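
Training two separate networks presumably means that, at filtering time, each frame is routed to the network trained for its prediction type. The sketch below illustrates only that dispatch step, under that assumption. `FrameType`, `filter_frame`, and the `filters` table are hypothetical names, and the lambdas are dummy stand-ins for the trained intra-frame and inter-frame networks, whose architecture is not given in this section.

```python
from enum import Enum
from typing import Callable, Dict, List

Image = List[List[int]]            # 2-D array of luma samples, row-major
Filter = Callable[[Image], Image]  # a trained loop-filter network, viewed as a function

class FrameType(Enum):
    INTRA = "intra"  # intra-predicted frame (I-frame)
    INTER = "inter"  # inter-predicted frame (P- or B-frame)

def filter_frame(frame: Image, frame_type: FrameType,
                 filters: Dict[FrameType, Filter]) -> Image:
    """Apply the network trained for this frame's prediction type (hypothetical helper)."""
    if frame_type not in filters:
        raise KeyError(f"no network trained for {frame_type.name} frames")
    return filters[frame_type](frame)

# Dummy stand-ins for the two trained networks (NOT the models described in the patent).
filters: Dict[FrameType, Filter] = {
    FrameType.INTRA: lambda img: [row[:] for row in img],                         # identity
    FrameType.INTER: lambda img: [[min(255, v + 1) for v in row] for row in img],  # +1, clipped
}
```

Keeping the networks in a table keyed by frame type makes the intra/inter split explicit: adding a third specialised network would only require a new enum member and a new table entry.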

[0019] For intra-predicted frames, this embodiment uses the UCID (Uncompressed Colour Image Database) image data set, which contains 1338 natural images. Each image in the data set is compressed with the HEVC reference software under the All Intra (AllIntra) configuration, with the deblocking filter and sample adaptive offset (SAO) disabled. The compressed image is then divided into 35x35 pixel blocks, which, together with the original image, are made into the training set for the intra-frame prediction f...
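
The pairing of 35x35 compressed blocks with the original image can be sketched as below. This is a minimal illustration, not the embodiment's actual tooling: it assumes non-overlapping blocks scanned in raster order, a single luma plane per image, and that border regions smaller than a full block are discarded. The description does not state the stride or the edge handling, and `extract_patches` and `make_pairs` are hypothetical names.

```python
from typing import List, Tuple

Image = List[List[int]]  # 2-D array of luma samples, row-major

PATCH = 35  # block size stated in the description

def extract_patches(img: Image, size: int = PATCH) -> List[Image]:
    """Cut img into non-overlapping size x size blocks in raster order.

    Assumption: rows/columns left over at the right and bottom edges
    (narrower than one block) are dropped.
    """
    h, w = len(img), len(img[0])
    patches = []
    for y in range(0, h - size + 1, size):
        for x in range(0, w - size + 1, size):
            patches.append([row[x:x + size] for row in img[y:y + size]])
    return patches

def make_pairs(compressed: Image, original: Image,
               size: int = PATCH) -> List[Tuple[Image, Image]]:
    """Pair each compressed block (network input) with the co-located original block (target)."""
    if len(compressed) != len(original) or len(compressed[0]) != len(original[0]):
        raise ValueError("compressed and original images must have the same dimensions")
    return list(zip(extract_patches(compressed, size), extract_patches(original, size)))
```

Because both images are cut with the same grid, the i-th compressed block and the i-th original block always cover the same pixels, which is what a supervised restoration target requires.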
