Object recognition and real-time translation method and device

An object recognition and real-time translation method and device, applicable to neural learning methods, character and pattern recognition, and electrically operated teaching aids. The technology has the effect of improving the learning experience.


Examples

Embodiment 1

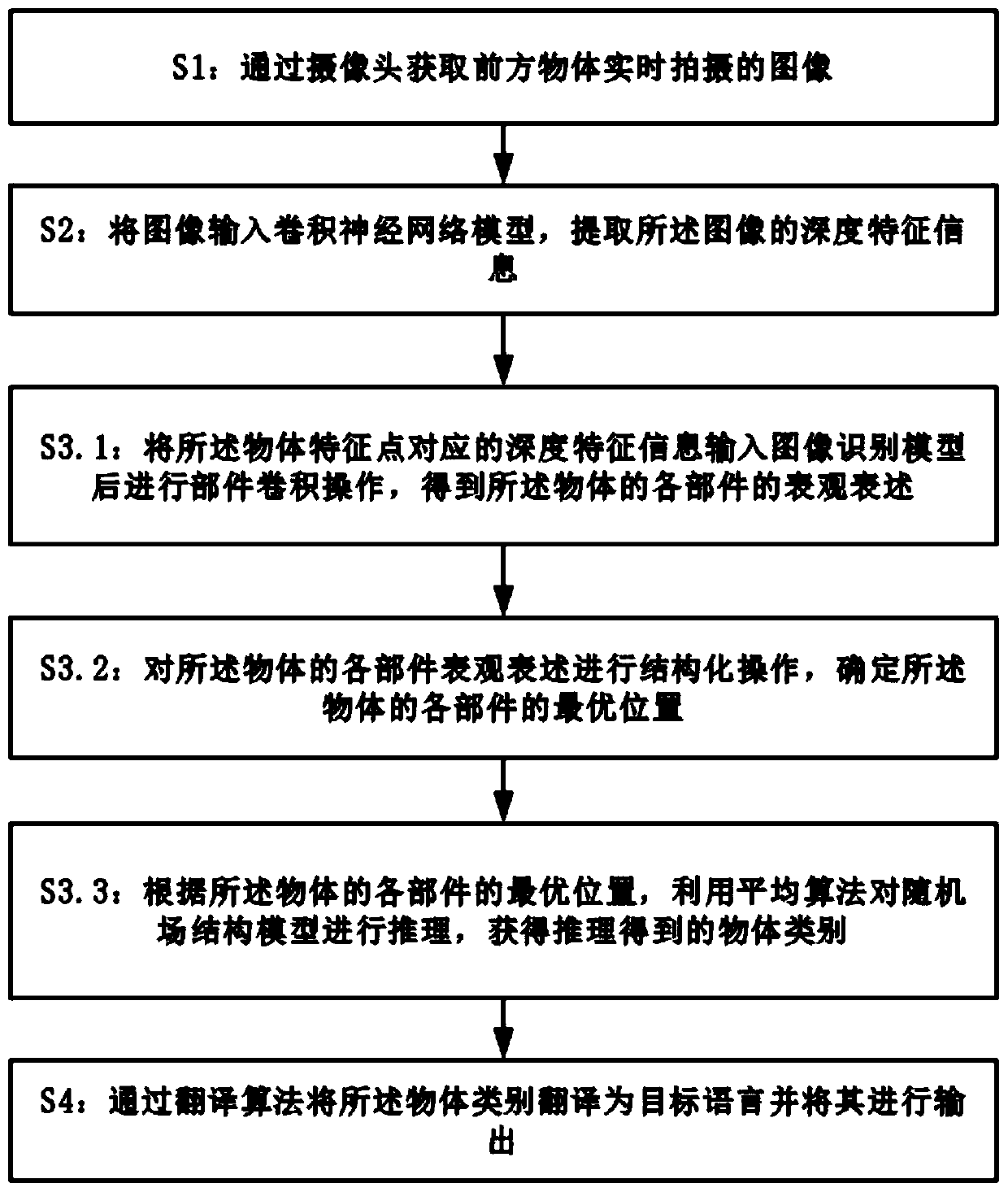

[0039] Figure 1 shows a flow chart of the method for object recognition and real-time translation in this embodiment.

[0040] This embodiment proposes a method for object recognition and real-time translation, including the following steps:

[0041] S1: Obtain real-time images of the objects in front of the camera.

[0042] S2: Input the image into a convolutional neural network model to extract deep feature information from the image.

[0043] In this step, the convolutional neural network model includes a convolutional layer and a pooling layer, which together extract the deep feature information of the input image.
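The convolution-plus-pooling feature extraction described in S2 can be illustrated with a minimal sketch. It is not the patent's model: it assumes a single-channel image, one hand-written kernel, a ReLU activation, and 2x2 max pooling, and the names `conv2d`, `relu`, `max_pool`, and `extract_features` are hypothetical. A real model would use learned kernels and many stacked layers.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise ReLU activation (an assumption; the source names no activation)."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(fmap) // size
    out_w = len(fmap[0]) // size
    return [
        [
            max(fmap[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def extract_features(image: Matrix, kernels: List[Matrix]) -> List[float]:
    """Apply conv -> ReLU -> max-pool per kernel and flatten into one feature vector."""
    features: List[float] = []
    for kernel in kernels:
        pooled = max_pool(relu(conv2d(image, kernel)))
        features.extend(v for row in pooled for v in row)
    return features


if __name__ == "__main__":
    # Toy 5x5 image with a vertical edge between columns 1 and 2.
    toy_image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
    edge_kernel = [[-1.0, 1.0]]  # hypothetical hand-written horizontal-gradient kernel
    print(extract_features(toy_image, [edge_kernel]))
```

The pooling step shrinks each feature map while keeping the strongest response in every window, which is why the resulting vector is shorter than the input image yet still records where the edge lies.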

[0044] S3: Input the extracted deep feature information into the image recognition model to recognize the category of the object, and output the recognized category. The specific steps are as follows:

[0045] S3.1: Input the deep feature information corresponding to the feature points of the object into the image recognition model and perfo...
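At a high level, step S3 maps a feature vector to an object category. The text above is cut off before it describes the recognition model, so the following is only an illustrative sketch under stated assumptions: a single linear layer followed by softmax, with hand-picked weights and hypothetical labels (`"cup"`, `"book"`). The function names `softmax` and `classify` are not taken from the source.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max score before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(
    features: Sequence[float],
    weights: Sequence[Sequence[float]],  # one weight row per category (assumed, untrained)
    biases: Sequence[float],
    labels: Sequence[str],
) -> Tuple[str, float]:
    """Return the most probable category label and its probability."""
    scores = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


if __name__ == "__main__":
    # Hypothetical feature vector and hand-picked weights, for illustration only.
    features = [1.0, 0.0, 1.0, 0.0]
    weights = [[2.0, 0.0, 2.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
    biases = [0.0, 0.0]
    label, prob = classify(features, weights, biases, ["cup", "book"])
    print(label, round(prob, 3))
```

The recognized label returned here is what a later translation step would take as its input; the probability can serve as a confidence threshold for rejecting uncertain recognitions.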

Embodiment 2

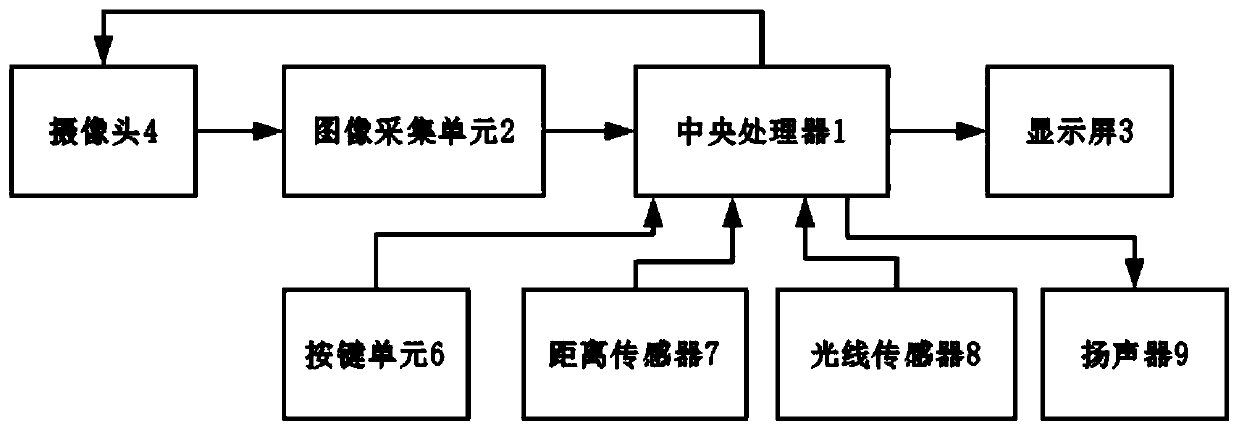

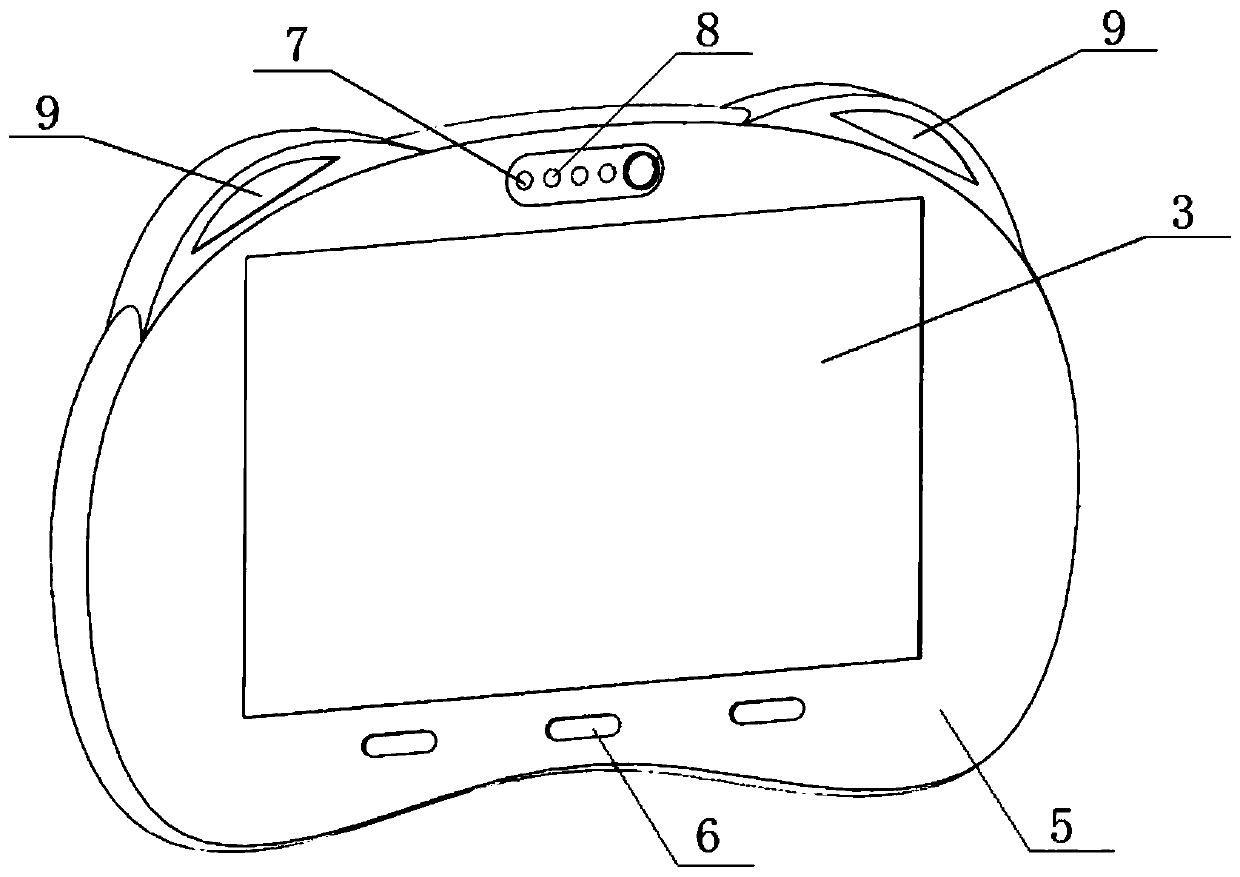

[0054] This embodiment proposes an object recognition and real-time translation device, which applies the object recognition and real-time translation method of the above-mentioned embodiments. Figures 2-4 show schematic diagrams of the object recognition and real-time translation device of this embodiment.

[0055] The object recognition and real-time translation device of this embodiment includes a central processing unit 1, an image acquisition unit 2, a display screen 3, a camera 4, a device housing 5, a key unit 6, a distance sensor 7, a light sensor 8, and a speaker 9. The camera 4 is arranged on one side of the device housing 5, and the display screen 3 is arranged on the other side of the device housing 5. The central processing unit 1 and the image acquisition unit 2 are integrated inside the device housing 5. The key unit 6, the distance sensor 7, the light sensor 8, and the speaker 9 are each arranged on the device housing 5. Specifical...
