Target-assisted action recognition method based on graph neural network

An action recognition technology based on graph neural networks, applied in the field of target-assisted action recognition. It addresses the low accuracy of video action recognition and improves recognition accuracy.

Embodiment

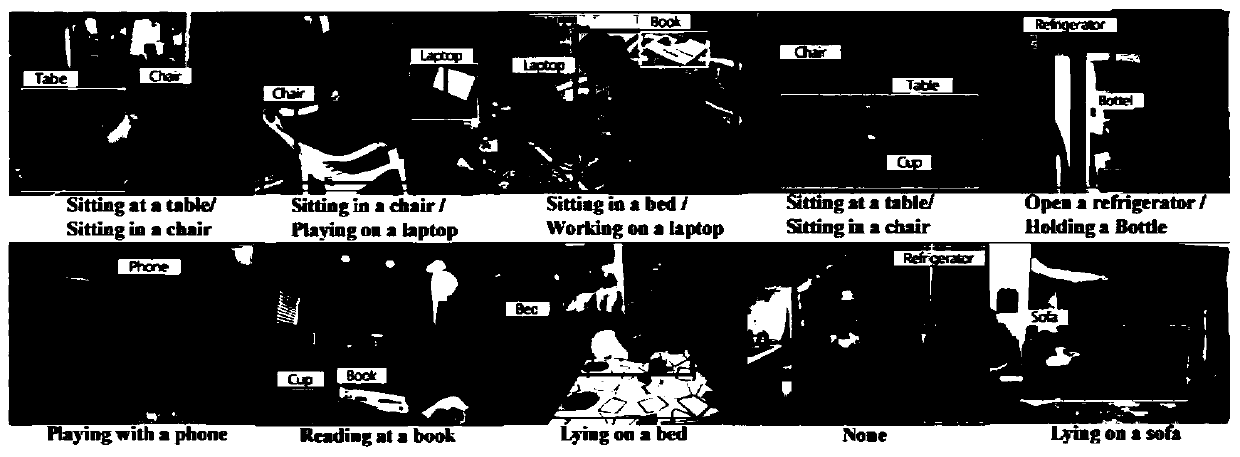

[0054] Referring to Figure 1, the public dataset Object-Charades is used to verify the feasibility of the method of the present invention. It is a large-scale multi-label video dataset whose actions involve human-object interaction. The ground-truth information for each video includes the action labels of the video and, for each frame, the bounding boxes of the people and the interacting objects, which were detected with a pretrained object detector. The dataset contains 52 action classes and more than 7,000 videos. The average length of each video is about 30 seconds, and all actions take place in indoor scenes. As shown in Figure 1, each image represents a video and contains the bounding boxes of people and interacting objects; the action label of the video is shown below the image.
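To make the annotation layout described above concrete, the following is a minimal Python sketch of how one sample of such a dataset might be held in memory. It is not the dataset's actual file format or loader: the class names (`BoundingBox`, `FrameAnnotation`, `VideoSample`), the `(x1, y1, x2, y2)` box layout, and the integer action ids are all assumptions made for illustration. Only the number of action classes (52) and the presence of per-frame person and object boxes come from the description.

```python
from dataclasses import dataclass, field
from typing import List

NUM_ACTION_CLASSES = 52  # number of action classes stated in the description


@dataclass
class BoundingBox:
    # Corner coordinates in pixels; this (x1, y1, x2, y2) layout is an assumption.
    x1: float
    y1: float
    x2: float
    y2: float


@dataclass
class FrameAnnotation:
    # Boxes produced by a pretrained detector for one frame (hypothetical structure).
    people: List[BoundingBox] = field(default_factory=list)
    objects: List[BoundingBox] = field(default_factory=list)


@dataclass
class VideoSample:
    video_id: str
    action_ids: List[int]  # a video may carry several action labels (multi-label)
    frames: List[FrameAnnotation] = field(default_factory=list)

    def multi_hot_label(self) -> List[int]:
        """Encode the video's action ids as a 52-dimensional multi-hot vector."""
        label = [0] * NUM_ACTION_CLASSES
        for action_id in self.action_ids:
            if not 0 <= action_id < NUM_ACTION_CLASSES:
                raise ValueError(f"action id out of range: {action_id}")
            label[action_id] = 1
        return label


# Usage: one video with two action labels and a single annotated frame.
sample = VideoSample(
    video_id="example_video",  # hypothetical identifier
    action_ids=[3, 17],
    frames=[
        FrameAnnotation(
            people=[BoundingBox(40, 30, 180, 300)],
            objects=[BoundingBox(150, 200, 220, 260)],
        )
    ],
)
label = sample.multi_hot_label()
```

A multi-hot vector is used here because a multi-label video can contain more than one action, so a single class index would not be enough to represent its label.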

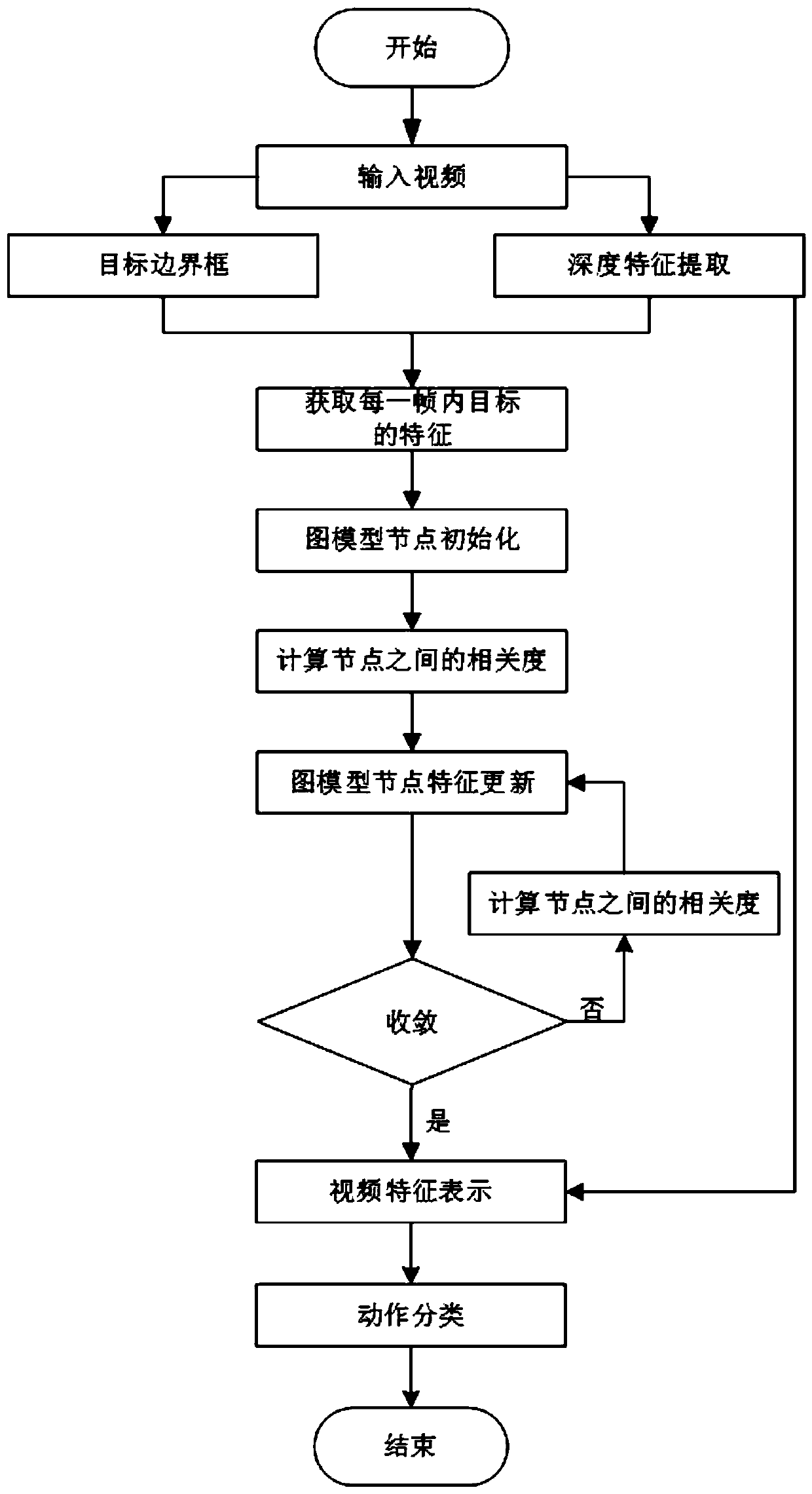

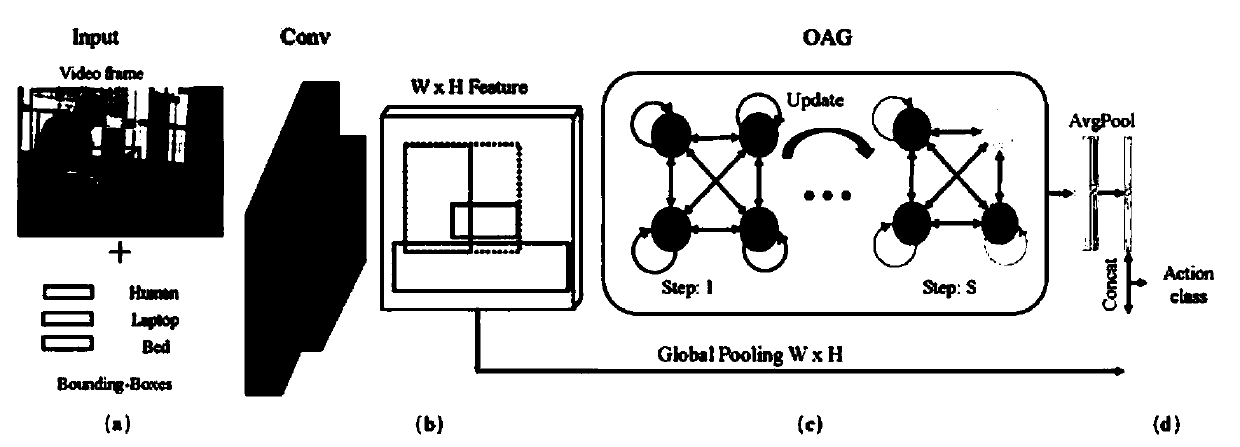

[0055] Referring to Figure 2, a target-assisted action recognition method based on a graph neural network in an embodiment of the present invention specifically com...
