Bionic visual image target recognition method fusing dot-line memory information

A visual image target recognition technology applied to recognizing zoomed and occluded targets. It addresses the low recognition rate of occluded targets, handles occlusion, and provides size and position invariance.


Embodiment Construction

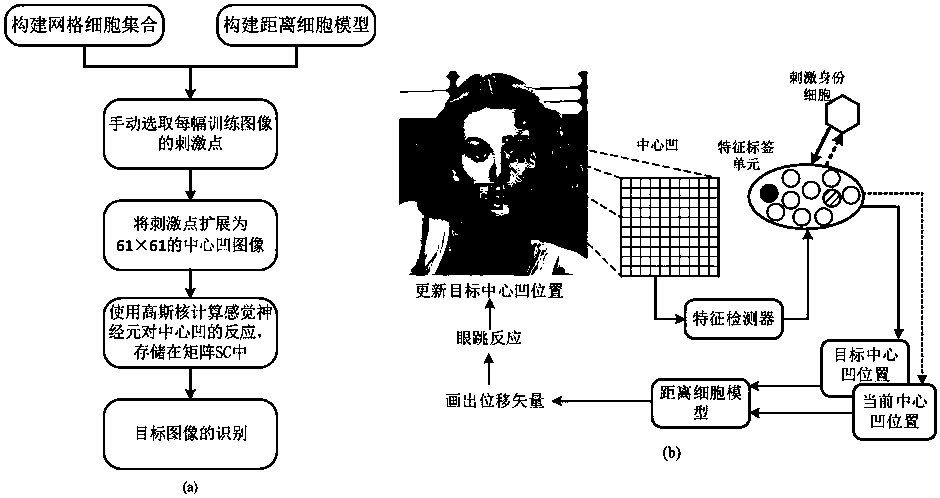

[0029] The overall framework of the recognition process of the present invention is shown in Figure 1. It specifically includes the following steps:

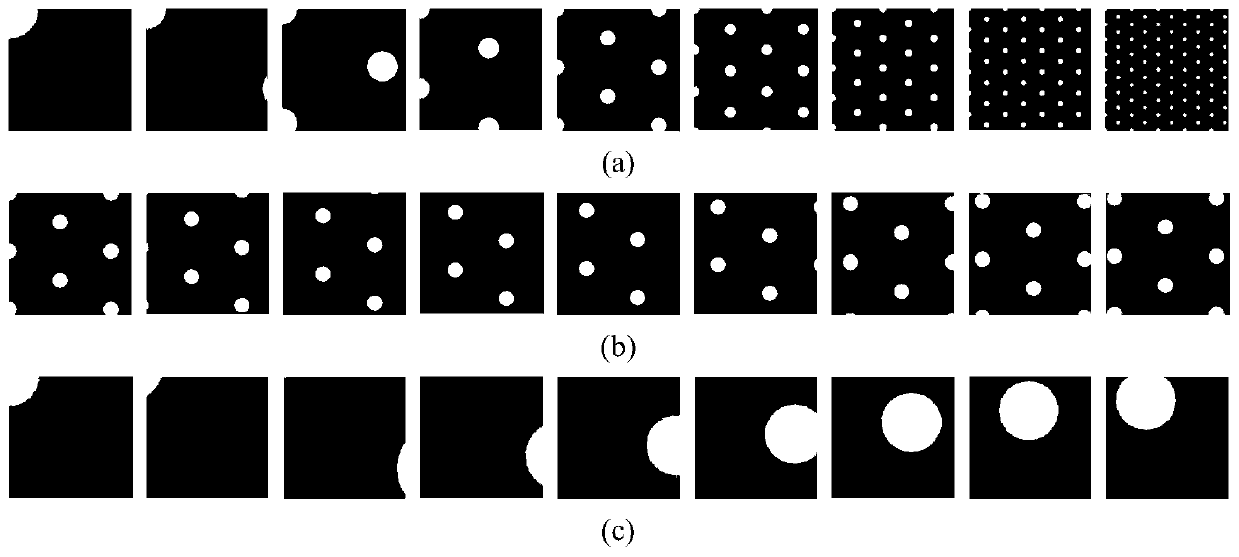

[0030] Step 1: Construct a vision-driven grid cell set and perform memory-based recognition by encoding the movement vectors between features. Each layer of the grid cell map is a matrix of the same size (440×440 pixels), and 9 modules combined with 100 offsets form a 440×440×100×9 four-dimensional grid cell set. Figure 2 shows the grid cell set of the 9 modules for one offset, the grid cell set of 10 offsets for the first module, and the grid cell set of 10 offsets for the fifth module;
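The grid cell set of Step 1 can be sketched as a four-dimensional array indexed by (row, column, offset, module). This is a minimal sketch under stated assumptions, not the patented construction: the source does not specify the firing model, so the code uses the common idealized grid cell model (a sum of three plane waves 60° apart). The module scales (`base_scale`, `scale_ratio`), the fixed `orientation`, and the arrangement of the 100 offsets as a 10×10 lattice of spatial phases are all hypothetical choices.

```python
import numpy as np

def grid_cell_response(x, y, scale, orientation, phase):
    """Idealized grid cell map: sum of three cosine waves 60 degrees apart (assumed model)."""
    k = 4 * np.pi / (np.sqrt(3) * scale)  # wave number giving a grid period of `scale` pixels
    total = np.zeros_like(x, dtype=float)
    for i in range(3):
        theta = orientation + i * np.pi / 3
        kx, ky = k * np.cos(theta), k * np.sin(theta)
        total += np.cos(kx * (x - phase[0]) + ky * (y - phase[1]))
    # The three-cosine sum lies in [-1.5, 3]; rescale it to [0, 1].
    return (total + 1.5) / 4.5

def build_grid_cell_set(size=440, n_modules=9, offsets_per_axis=10,
                        base_scale=20.0, scale_ratio=1.42, orientation=0.0):
    """Return a (size, size, offsets_per_axis**2, n_modules) grid cell array.

    base_scale, scale_ratio, orientation and the square phase lattice are
    hypothetical parameters; the source only fixes the array dimensions.
    """
    ys, xs = np.mgrid[0:size, 0:size].astype(float)
    n_offsets = offsets_per_axis ** 2
    cells = np.empty((size, size, n_offsets, n_modules), dtype=np.float32)
    for m in range(n_modules):
        scale = base_scale * scale_ratio ** m  # modules grow geometrically (assumption)
        for o in range(n_offsets):
            # Spread phase offsets evenly over one grid period on a square lattice (assumption).
            phase = ((o % offsets_per_axis) * scale / offsets_per_axis,
                     (o // offsets_per_axis) * scale / offsets_per_axis)
            cells[:, :, o, m] = grid_cell_response(xs, ys, scale, orientation, phase)
    return cells
```

With the defaults, `build_grid_cell_set()` yields the 440×440×100×9 shape from the text; stored as `float32` that is roughly 697 MB, so smaller `size` values are convenient for experimentation.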

[0031] Step 2: Construct the distance cell model and calculate the displacement vector between the positions encoded by the grid cell population vectors;
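One simple way to obtain the displacement between two grid-encoded positions is to decode each population vector back to a pixel location and subtract. The sketch below does that with a best-match (maximum cosine similarity) decoder over a grid cell array shaped as in Step 1. This decoder is a swapped-in illustration, not the distance cell model of the source, and the function names are hypothetical.

```python
import numpy as np

def population_vector(cells, x, y):
    """Grid cell population vector at pixel (x, y): all offsets x modules, flattened."""
    return cells[y, x].ravel()

def decode_position(cells, pv):
    """Return the (x, y) pixel whose population vector best matches `pv` (cosine similarity)."""
    h, w = cells.shape[:2]
    flat = cells.reshape(h * w, -1)
    norms = np.linalg.norm(flat, axis=1) * np.linalg.norm(pv) + 1e-12  # avoid divide-by-zero
    best = int(np.argmax(flat @ pv / norms))
    return best % w, best // w  # flat index = y * w + x

def displacement_vector(cells, pv_a, pv_b):
    """Displacement (dx, dy) from the position encoded by pv_a to the one encoded by pv_b."""
    xa, ya = decode_position(cells, pv_a)
    xb, yb = decode_position(cells, pv_b)
    return xb - xa, yb - ya
```

Brute-force matching scans every pixel, which is simple but costly at 440×440; a neural distance cell model would compute the same vector without an explicit search.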

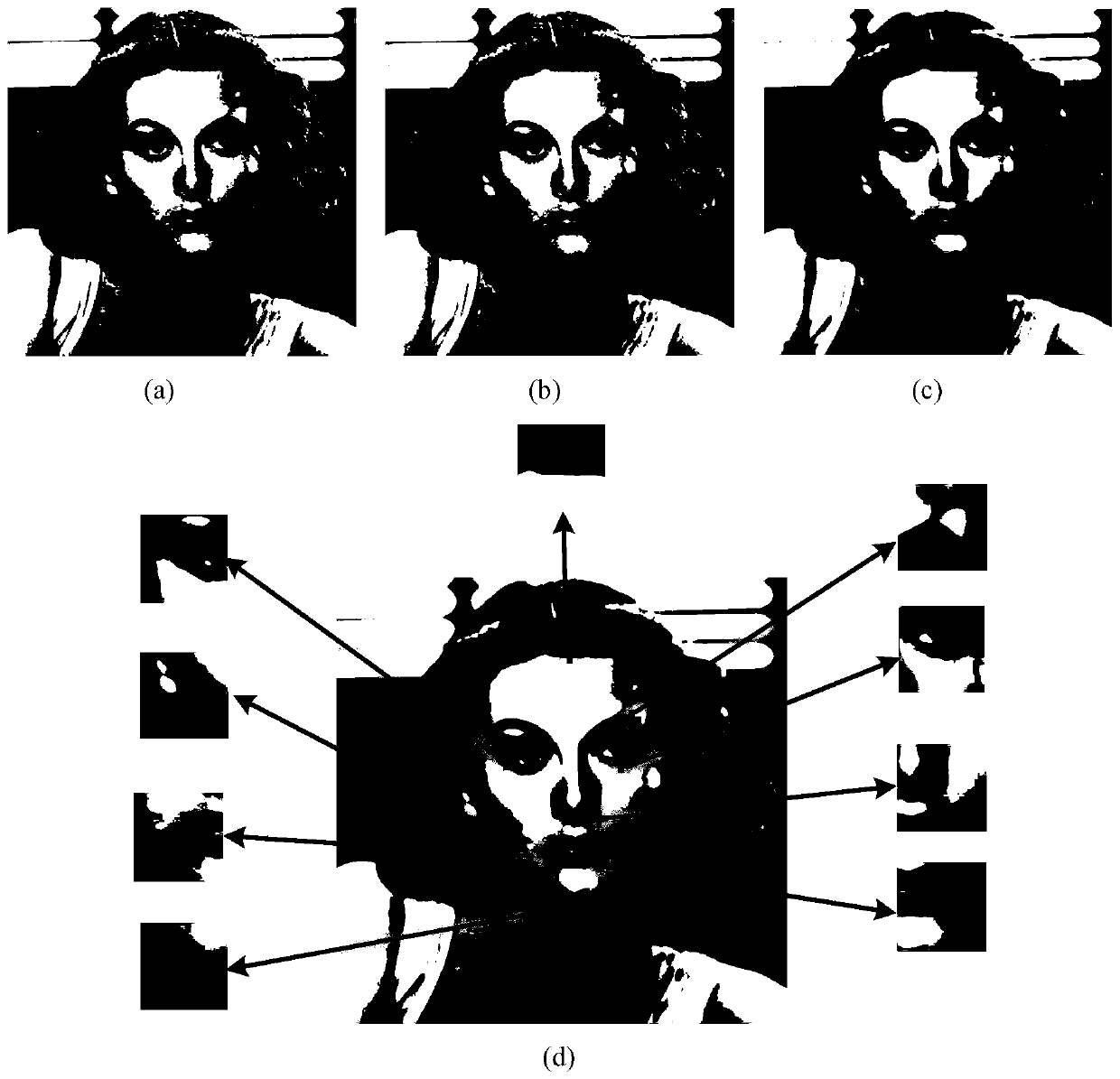

[0032] Step 3: After the grid cell and distance cell models are constructed, image training is performed...
