High-parallelism computing system and instruction scheduling method thereof

A high-parallelism computing system, an instruction scheduling method for it, and the corresponding compilation techniques. The technology addresses the problem that, as neural network models grow in computational scale and complexity, existing approaches fail to meet practical requirements.

Embodiment Construction

[0036] Hereinafter, preferred embodiments of the present disclosure will be described in more detail with reference to the accompanying drawings. Although the drawings show preferred embodiments of the present disclosure, it should be understood that the present disclosure can be implemented in various forms and should not be limited by the embodiments set forth herein. On the contrary, these embodiments are provided to make the present disclosure more thorough and complete, and to fully convey the scope of the present disclosure to those skilled in the art.

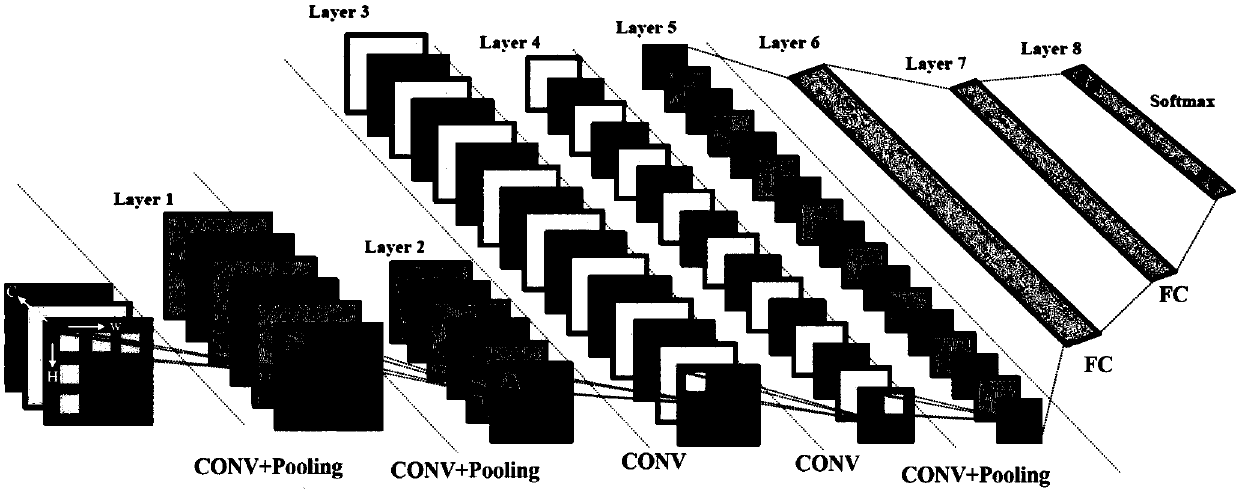

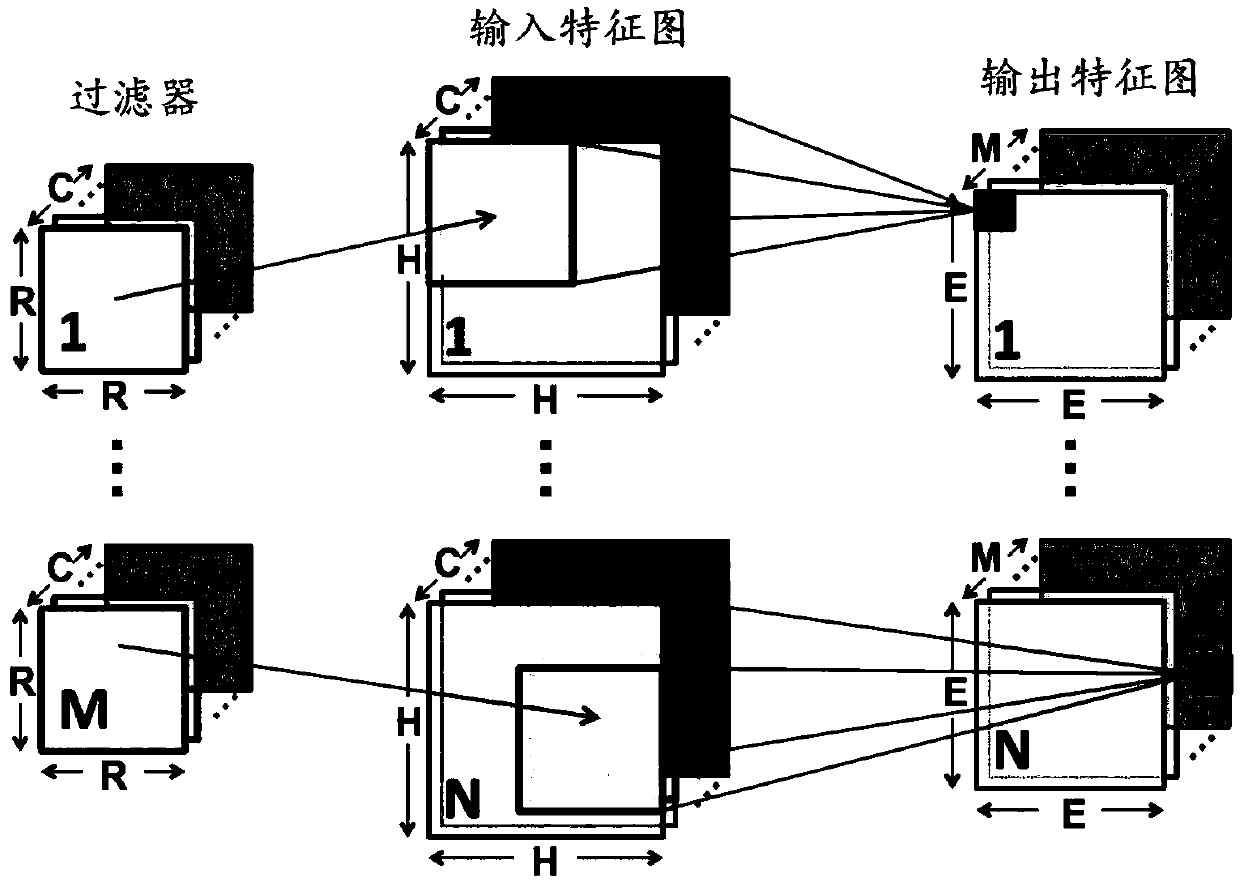

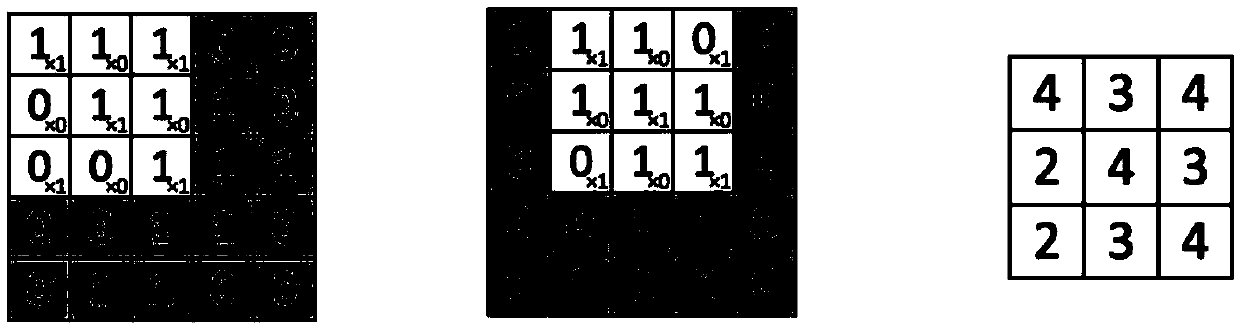

[0037] Artificial intelligence has developed rapidly in recent years. It has been applied with good results in image classification, detection, and video and voice processing, and still has considerable room for development. Neural networks are the core of artificial intelligence applications, and deep learning neural network algorithms are among the most common neural network models. The workload characteristics of n...
