A deep learning-based emotional speech synthesis method and device

This technology relates to speech synthesis and deep learning and is applied in speech synthesis, speech analysis, instruments, and related fields. It enriches the emotion of conversational speech, improves naturalness, and improves the experience of human-computer communication.

Examples

Embodiment 1

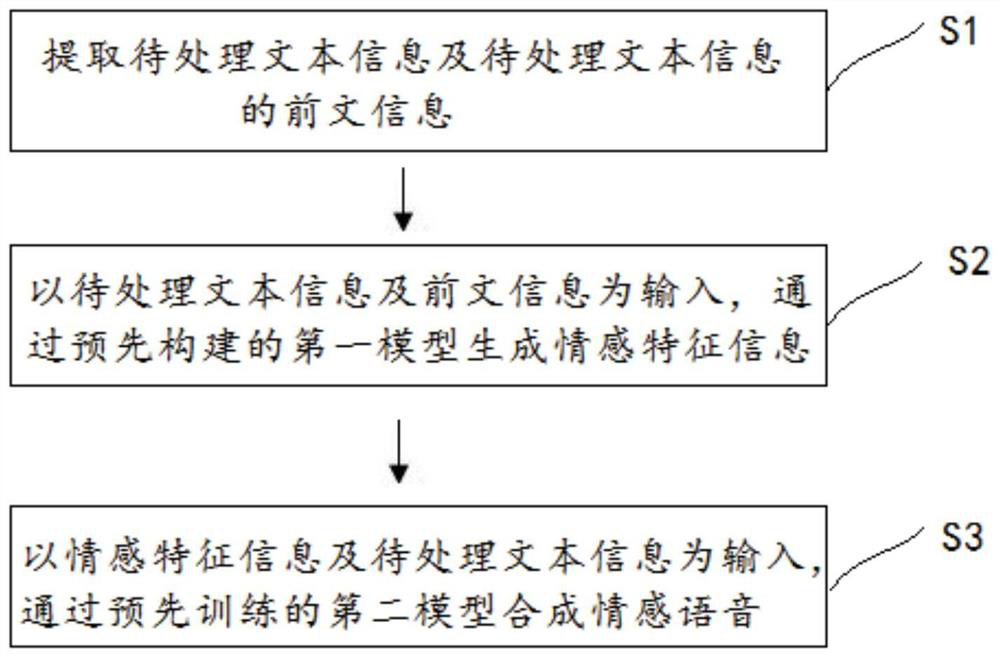

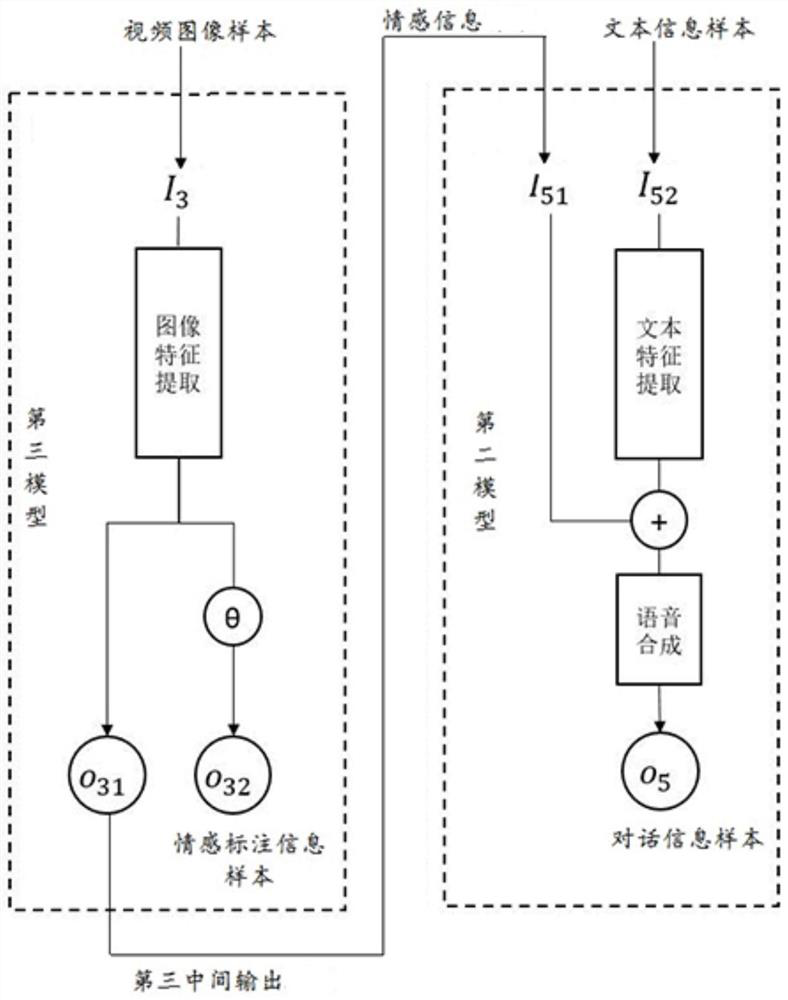

[0078] As shown in Figure 1, this embodiment provides a deep learning-based emotional speech synthesis method in the field of speech synthesis. With this method, emotional speech can be synthesized without manually labeling emotions, and the emotional naturalness of the synthesized speech is effectively improved.

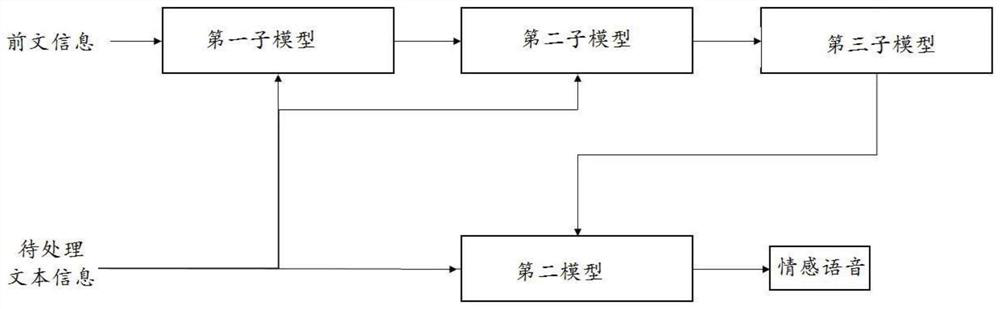

[0079] As shown in Figures 1 and 2, the method includes the following steps:

[0080] S1. Extract the text information to be processed and the preceding information of the text information to be processed.

[0081] Specifically, when the object being processed is a text object, the preceding information includes preceding text information;

[0082] when the object being processed is a speech object or a video object, the preceding information includes both preceding text information and preceding speech information.
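Step S1 can be sketched as a small data-assembly function. This is a minimal illustration, not the patented implementation: the names `ObjectType`, `Utterance`, `PrecedingInfo`, and `extract_text_and_preceding` are hypothetical, and the sketch assumes the text of every utterance has already been obtained (for speech or video objects, the source does not say how at this point). It only shows the rule above: a text object yields preceding text, while a speech or video object yields preceding text plus preceding speech.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Tuple


class ObjectType(Enum):
    TEXT = "text"
    SPEECH = "speech"
    VIDEO = "video"


@dataclass
class Utterance:
    # Hypothetical container for one conversational turn.
    text: str
    audio: Optional[List[float]] = None  # raw samples; None for text-only objects


@dataclass
class PrecedingInfo:
    texts: List[str]
    speech: List[List[float]] = field(default_factory=list)


def extract_text_and_preceding(
    obj_type: ObjectType, history: List[Utterance], current: Utterance
) -> Tuple[str, PrecedingInfo]:
    """Return (text to be processed, preceding information) following step S1."""
    preceding_texts = [u.text for u in history]
    if obj_type is ObjectType.TEXT:
        # Text object: the preceding information is preceding text only.
        return current.text, PrecedingInfo(texts=preceding_texts)
    # Speech or video object: preceding text plus preceding speech.
    preceding_speech = [u.audio for u in history if u.audio is not None]
    return current.text, PrecedingInfo(texts=preceding_texts, speech=preceding_speech)
```

Keeping the two kinds of context in one `PrecedingInfo` record lets the downstream model accept the same structure for every object type, with `speech` simply left empty for text objects.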

[0083] It should be noted that, in this step, extracting text information from text objects, extracting text information and vo...

Embodiment 2

[0127] To implement the deep learning-based emotional speech synthesis method of Embodiment 1, this embodiment provides a deep learning-based emotional speech synthesis apparatus 100.

[0128] Figure 5 is a schematic structural diagram of the deep learning-based emotional speech synthesis device 100. As shown in Figure 5, the device 100 includes at least:

[0129] Extraction module 1: used to extract the text information to be processed and the preceding information of the text information to be processed, and the preceding information includes the preceding text information;

[0130] Emotional feature information generation module 2: used to generate emotional feature information through a pre-built first model, taking the text information to be processed and the preceding information as input;

[0131] Emotional speech synthesis module 3: used for synthesizing emotional speech through the pre-trained second model using the emotional feature informa...
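The module structure of device 100 can be sketched as three composable components. This is a hedged sketch only: the patent does not specify the model architectures, so `first_model` and `second_model` are hypothetical callables, and all class names are illustrative. Because paragraph [0131] is truncated, the sketch additionally assumes that the second model receives the text to be processed along with the emotional feature information.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Hypothetical interfaces; the source does not define these signatures.
FirstModel = Callable[[str, Sequence[str]], List[float]]  # (text, preceding text) -> emotional features
SecondModel = Callable[[str, List[float]], List[float]]   # (text, emotional features) -> waveform (assumed)


class ExtractionModule:
    """Module 1: split a dialogue into the text to be processed and its preceding text."""

    def __call__(self, dialogue: Sequence[str]) -> Tuple[str, List[str]]:
        if not dialogue:
            raise ValueError("dialogue must contain at least one utterance")
        return dialogue[-1], list(dialogue[:-1])


class EmotionFeatureModule:
    """Module 2: feed the text and its preceding information into the pre-built first model."""

    def __init__(self, first_model: FirstModel) -> None:
        self.first_model = first_model

    def __call__(self, text: str, preceding: Sequence[str]) -> List[float]:
        return self.first_model(text, preceding)


class SynthesisModule:
    """Module 3: synthesize speech with the pre-trained second model."""

    def __init__(self, second_model: SecondModel) -> None:
        self.second_model = second_model

    def __call__(self, text: str, features: List[float]) -> List[float]:
        return self.second_model(text, features)


@dataclass
class EmotionalSpeechSynthesisDevice:
    extraction: ExtractionModule
    emotion_features: EmotionFeatureModule
    synthesis: SynthesisModule

    def synthesize(self, dialogue: Sequence[str]) -> List[float]:
        # Module 1 -> Module 2 -> Module 3, mirroring the order listed above.
        text, preceding = self.extraction(dialogue)
        features = self.emotion_features(text, preceding)
        return self.synthesis(text, features)
```

Injecting the models as callables keeps each module testable on its own; in practice the toy stand-ins would be replaced by trained networks.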
