Automatic matting method based on semantic segmentation and saliency analysis

A matting technique based on semantic segmentation and saliency detection, applicable to image analysis, image enhancement, image data processing and related fields. It addresses the problems that saliency computed over a whole image can be erroneous and that existing methods fail to generalize, with the effect of saving manual labor and producing accurate matting results.



Examples

Embodiment 1

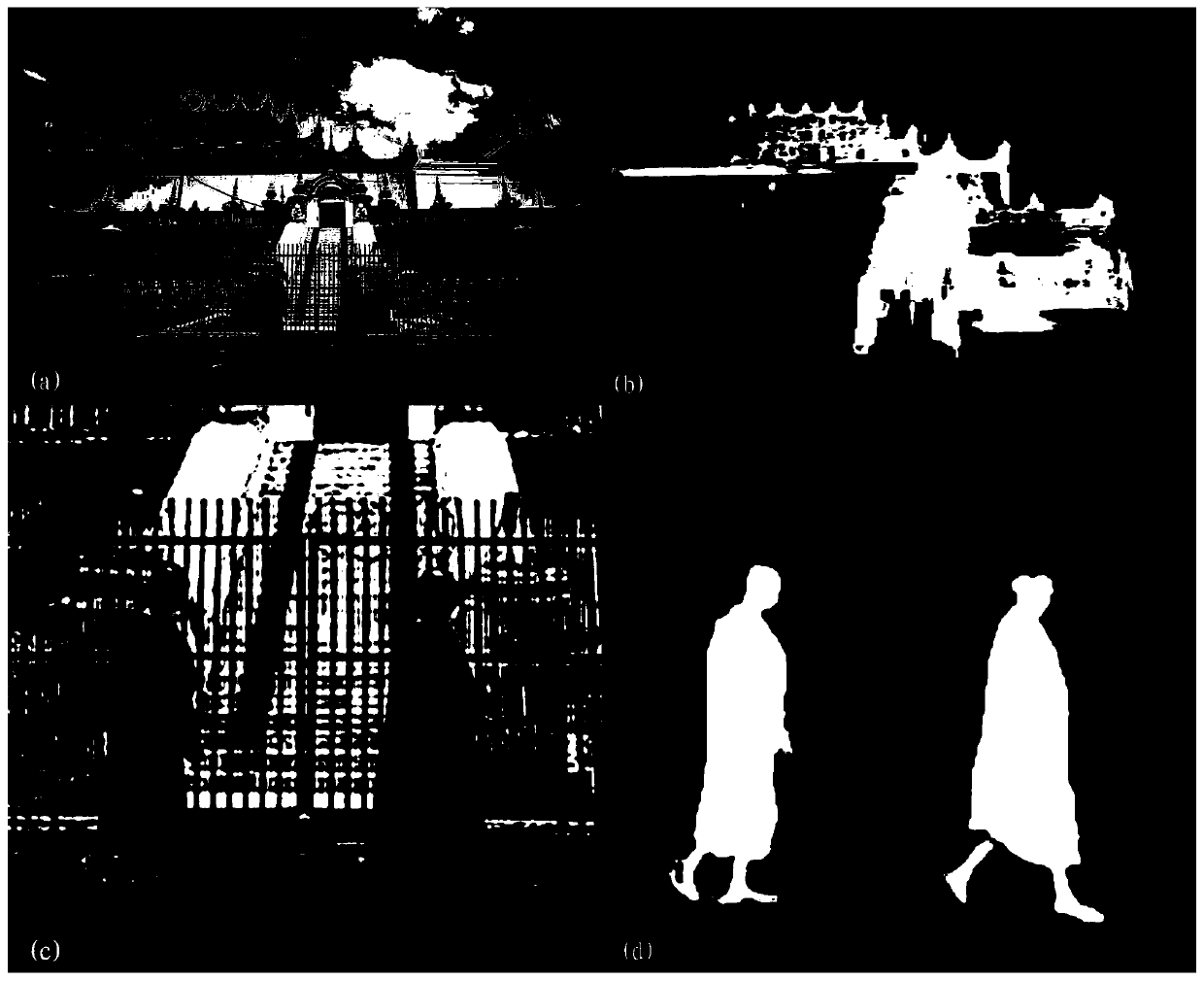

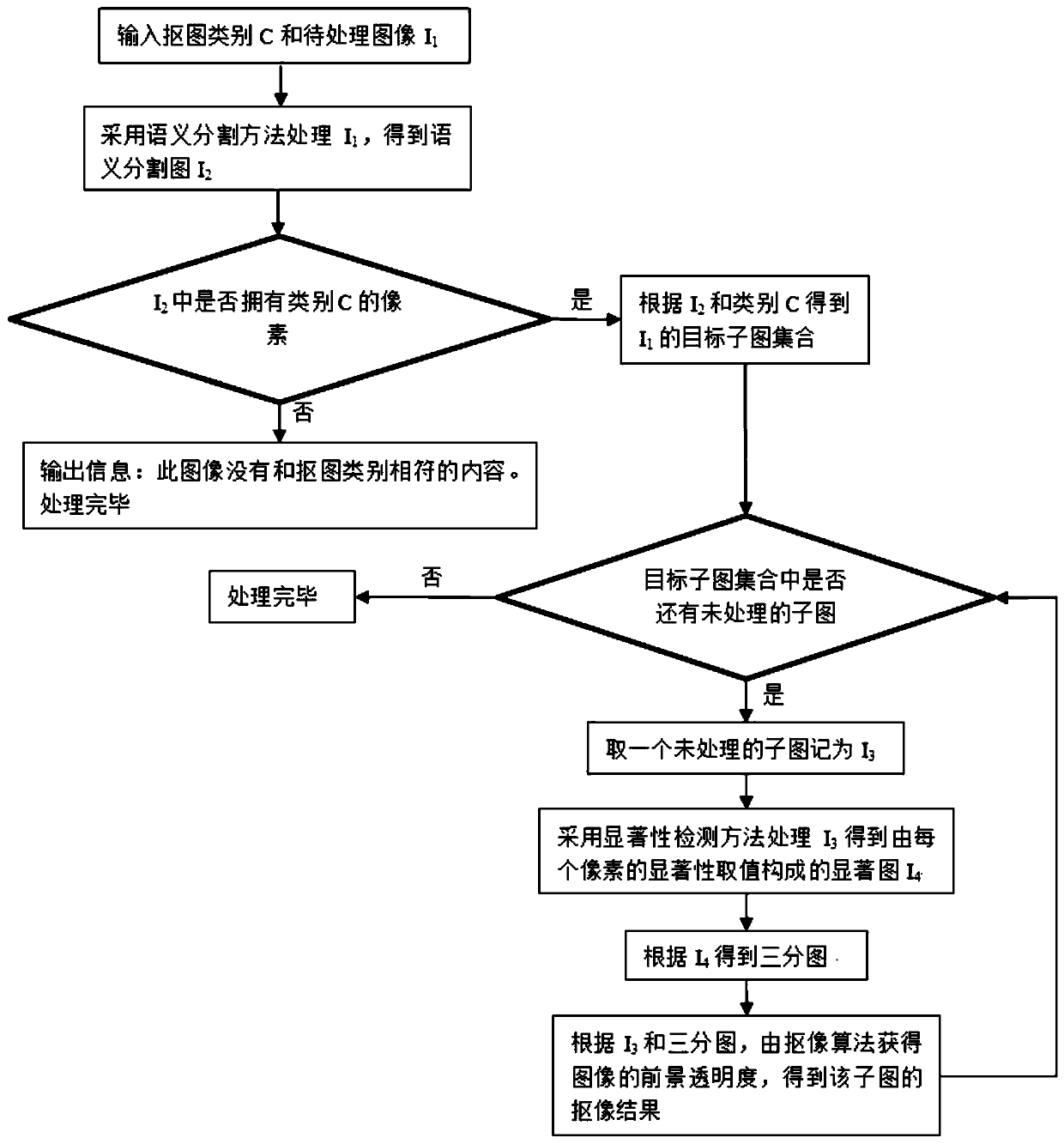

[0059] The automatic matting method of the present invention is used to process the image shown in Figure 6, with "person" as the object to be extracted. The specific steps are as follows:

[0060] Step 1): input "person" as the object to be extracted, together with the image to be processed;

[0061] Step 2): process Figure 6 with a semantic segmentation method, preferably the method of Document 1, to obtain a semantic segmentation map, as shown in Figure 7;
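The network of Document 1 is not reproduced here. As a minimal sketch, assuming only that the segmentation model outputs one score per class for every pixel (an array of shape (C, H, W), a common convention but an assumption in this context), the segmentation map of Step 2) is obtained by assigning each pixel the class with the highest score. The function name is hypothetical.

```python
import numpy as np


def scores_to_label_map(scores: np.ndarray) -> np.ndarray:
    """Convert per-pixel class scores of shape (C, H, W) into an (H, W) label map.

    Assumption: axis 0 indexes classes; this is not taken from the source.
    """
    if scores.ndim != 3:
        raise ValueError("expected scores with shape (C, H, W)")
    # Each pixel takes the index of its highest-scoring class
    # (ties resolve to the lowest class index, per np.argmax).
    return np.argmax(scores, axis=0)
```

The resulting integer map plays the role of Figure 7: every pixel holds a class index rather than a color.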

[0062] Step 3): determine whether Figure 7 contains pixels of the category "person"; since it does, go to Step 4);
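The check in Step 3) reduces to testing whether any pixel of the segmentation map carries the class index of the requested category. The sketch below assumes a hypothetical name-to-index table; index 15 for "person" follows the PASCAL VOC label order, but the real indices depend on whichever segmentation model is used. Step 4) is reached only when the function returns True.

```python
import numpy as np

# Hypothetical label table; actual class indices depend on the segmentation model.
LABELS = {"background": 0, "person": 15}


def contains_category(label_map: np.ndarray, category: str, labels: dict = LABELS) -> bool:
    """Return True if any pixel of label_map belongs to the named category."""
    if category not in labels:
        # An unknown category name cannot appear in the map.
        return False
    return bool(np.any(label_map == labels[category]))
```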

[0063] Step 4): obtain from Figure 7 the target subregions containing the category "person", and crop the corresponding regions from Figure 6 to obtain the target subimage set. The set contains two subimages, shown in Figure 9 and Figure 10;
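The text does not specify how the target subregions are delimited. One plausible reading, sketched here purely as an assumption, treats each connected region of "person" pixels as one subregion and crops its bounding box from the original image, using `scipy.ndimage.label` and `scipy.ndimage.find_objects`. The helper name `crop_target_subimages` and the `min_pixels` filter are hypothetical.

```python
import numpy as np
from scipy import ndimage


def crop_target_subimages(image: np.ndarray, label_map: np.ndarray,
                          class_id: int, min_pixels: int = 1) -> list:
    """Crop one subimage per connected region of class_id.

    Assumption: a 'target subregion' is the bounding box of a 4-connected
    component of the class mask (ndimage.label's default 2-D connectivity).
    """
    mask = label_map == class_id
    components, _ = ndimage.label(mask)
    crops = []
    # find_objects returns one (row_slice, col_slice) tuple per label, in label order.
    for index, slices in enumerate(ndimage.find_objects(components), start=1):
        if slices is None:
            continue
        # Skip tiny regions, which are often segmentation noise.
        if np.count_nonzero(components[slices] == index) < min_pixels:
            continue
        # Cropping with the 2-D slices keeps all color channels.
        crops.append(image[slices].copy())
    return crops
```

With two separated "person" regions in the label map, this returns two crops, analogous to the two subimages of Figure 9 and Figure 10; raising `min_pixels` would discard small spurious regions.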

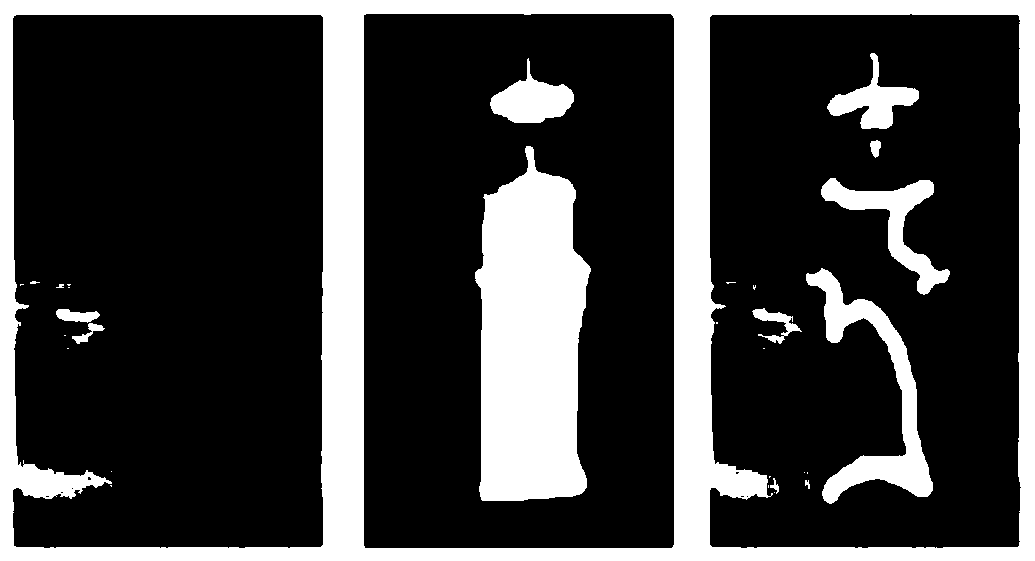

[0064] Step 5): process Figure 9 with a saliency detection method to obtain a saliency map, shown as the leftmost image in Figure 11; t...
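The saliency detection method used in Step 5) is not identified in this excerpt. As a stand-in only, the sketch below implements frequency-tuned saliency (Achanta et al., CVPR 2009), which scores each pixel by the distance between its slightly blurred color and the mean color of the subimage. For brevity it works in RGB rather than the CIELAB space of the original formulation; the function name and the `sigma` default are assumptions.

```python
import numpy as np
from scipy import ndimage


def frequency_tuned_saliency(image: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Return a saliency map in [0, 1] for an (H, W, 3) image.

    Stand-in technique: frequency-tuned saliency computed in RGB
    (the published method uses CIELAB).
    """
    img = image.astype(np.float64)
    # Mean color over all pixels of the (sub)image.
    mean_color = img.reshape(-1, img.shape[-1]).mean(axis=0)
    # Blur spatially only: sigma 0 along the channel axis leaves channels unmixed.
    blurred = ndimage.gaussian_filter(img, sigma=(sigma, sigma, 0))
    # Per-pixel Euclidean distance from the mean color.
    sal = np.linalg.norm(blurred - mean_color, axis=-1)
    peak = sal.max()
    if peak <= 1e-9:
        # Near-uniform image: nothing stands out.
        return np.zeros_like(sal)
    return sal / peak
```

Applied to a crop such as Figure 9, pixels whose color departs most from the crop's average receive values near 1; the cropping in Step 4) matters here because the mean color is taken over the subimage rather than the whole image.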
