FPGA-based neural network acceleration method and accelerator

The invention relates to neural network accelerator technology in the field of neural networks. It addresses problems such as the high computational complexity of convolutional neural networks (CNNs), the inability of existing platforms to meet CNN acceleration requirements, and their unsatisfactory cost and power consumption. It improves computing efficiency and reduces off-chip storage access.

Embodiment Construction

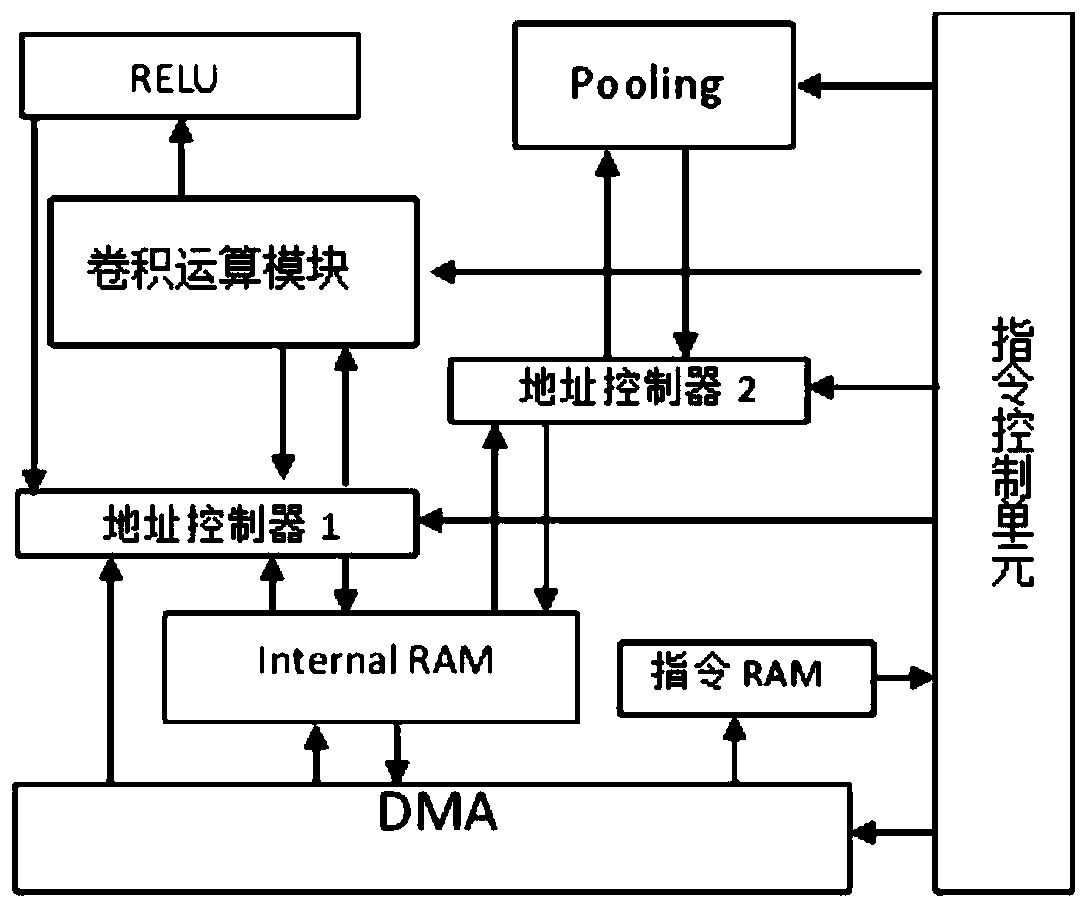

[0024] The present invention provides an FPGA-based convolutional neural network (CNN) accelerator comprising a convolution operation module, a pooling module, a DMA module, an instruction control module, an address control module, an internal RAM module and an instruction RAM module. The proposed design parallelizes the convolution operation so that 512 multiply-accumulate (MAC) operations complete in a single clock cycle. The on-chip storage structure is designed to reuse data effectively and thereby reduce off-chip memory access. Pipelining is used to execute the complete operation of a single CNN layer, improving computational efficiency.
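The 512-MACs-per-cycle figure can be made concrete with a small software model. The Python sketch below assumes a hypothetical organization of the MAC array as 16 output channels by 32 input channels (`TM`, `TN`); the source does not state how the 512 multipliers are arranged. In each simulated cycle, one input pixel from each of up to `TN` input channels is broadcast to up to `TM` output channels, which is one way an on-chip buffer can reuse the same data across many multipliers. The model counts cycles and checks its result against a naive convolution.

```python
from itertools import product

# Hypothetical tile sizes (not from the source): 16 x 32 = 512 MACs per cycle.
TM, TN = 16, 32
MACS_PER_CYCLE = TM * TN


def _dims(x, w):
    """Return (N, M, K, OH, OW) for a stride-1, unpadded convolution.
    x is [N][H][W]; w is [M][N][K][K]."""
    n_in, h, wd = len(x), len(x[0]), len(x[0][0])
    m_out, k = len(w), len(w[0][0])
    return n_in, m_out, k, h - k + 1, wd - k + 1


def _zeros(m_out, oh, ow):
    return [[[0] * ow for _ in range(oh)] for _ in range(m_out)]


def conv_reference(x, w):
    """Naive convolution used as the correctness baseline."""
    n_in, m_out, k, oh, ow = _dims(x, w)
    y = _zeros(m_out, oh, ow)
    for m, r, c in product(range(m_out), range(oh), range(ow)):
        y[m][r][c] = sum(x[n][r + i][c + j] * w[m][n][i][j]
                         for n, i, j in product(range(n_in), range(k), range(k)))
    return y


def conv_tiled(x, w):
    """Same convolution, grouped so each simulated cycle performs up to
    TM * TN MACs: one output pixel, one kernel tap, one TM x TN channel tile.
    Returns (output, cycle_count)."""
    n_in, m_out, k, oh, ow = _dims(x, w)
    y = _zeros(m_out, oh, ow)
    cycles = 0
    for m0 in range(0, m_out, TM):
        for n0 in range(0, n_in, TN):
            for r, c, i, j in product(range(oh), range(ow), range(k), range(k)):
                # One cycle: each of the TM adder trees reduces TN products.
                # Partial tiles leave some multipliers idle but still cost a cycle.
                for m in range(m0, min(m0 + TM, m_out)):
                    y[m][r][c] += sum(x[n][r + i][c + j] * w[m][n][i][j]
                                      for n in range(n0, min(n0 + TN, n_in)))
                cycles += 1
    return y, cycles


if __name__ == "__main__":
    # 32 input channels of 4x4, 16 output channels, 3x3 kernels.
    x = [[[(n + 3 * r + 7 * c) % 5 - 2 for c in range(4)] for r in range(4)]
         for n in range(32)]
    w = [[[[(m * n + i - j) % 3 - 1 for j in range(3)] for i in range(3)]
          for n in range(32)] for m in range(16)]
    y, cycles = conv_tiled(x, w)
    assert y == conv_reference(x, w)
    print(cycles, cycles * MACS_PER_CYCLE)  # 36 18432
```

With one full 16 x 32 tile, a 2x2 output and a 3x3 kernel, the model needs 2 x 2 x 9 = 36 cycles for 18,432 MACs. Layers whose channel counts are not multiples of `TM` and `TN` leave part of the array idle, which is why tile sizes are usually chosen to match typical layer shapes.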

[0025] The following technical solution is provided:

[0026] The accelerator includes a convolution operation module, a pooling module, a DMA module, an instruction control module, an address control module, an internal RAM module and an instruction RAM module.
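One way these modules could be pipelined for a single layer is sketched below as an idealized Python schedule. The stage names (`dma_load`, `convolution`, `pooling`, `dma_store`), the tile-by-tile granularity and the assumption that every stage takes one equal time step are all hypothetical; the source does not specify how the instruction control and address control modules sequence the work.

```python
# Hypothetical stage split; not taken from the source.
STAGES = ("dma_load", "convolution", "pooling", "dma_store")


def pipeline_schedule(num_tiles, stages=STAGES):
    """Return one dict per time step mapping each busy stage to its tile index.

    Stage s works on tile t - s at step t, so a new tile enters every step
    and all stages run concurrently once the pipeline is full.
    """
    steps = []
    for t in range(num_tiles + len(stages) - 1):
        busy = {}
        for s, name in enumerate(stages):
            tile = t - s
            if 0 <= tile < num_tiles:
                busy[name] = tile
        steps.append(busy)
    return steps


if __name__ == "__main__":
    sched = pipeline_schedule(3)
    for t, busy in enumerate(sched):
        print(t, busy)
    print("pipelined steps:", len(sched), "sequential steps:", 3 * len(STAGES))
```

Under these assumptions, T tiles through S stages take S + T - 1 steps instead of S x T, so for many tiles the throughput approaches one tile per step.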

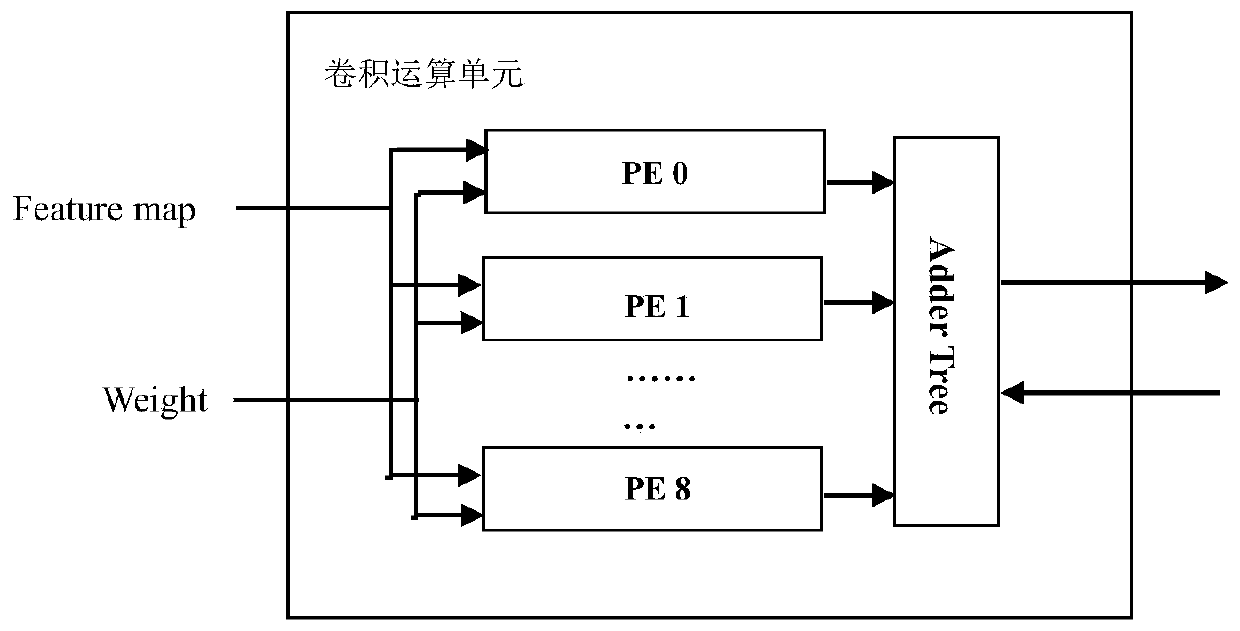

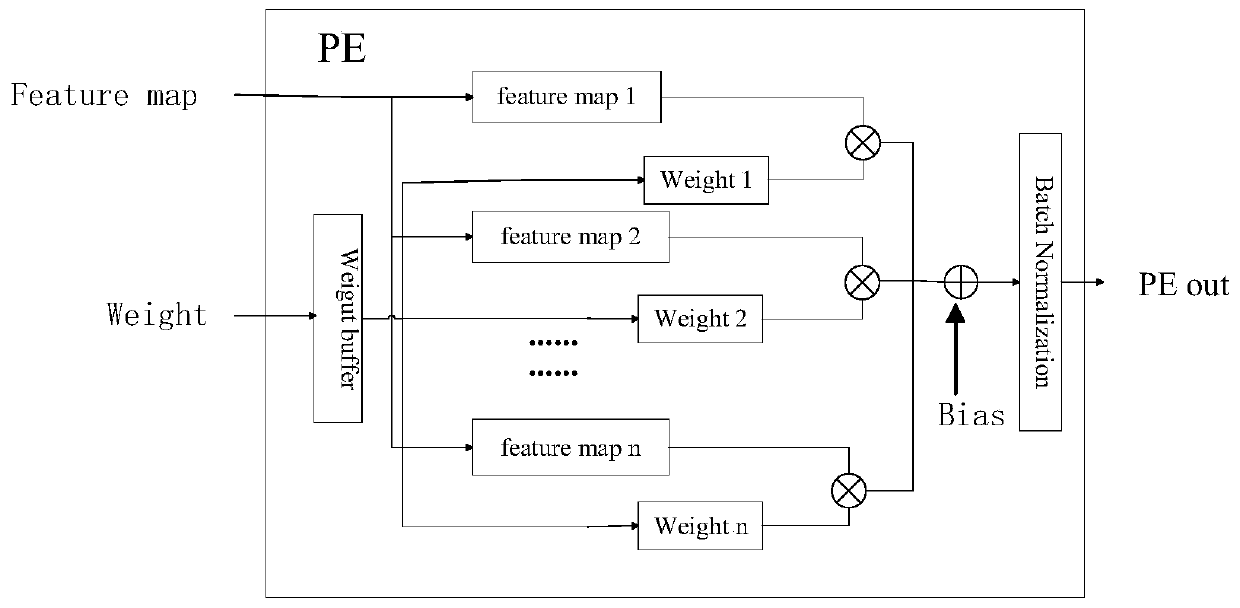

[0027] The convolution...
