Multi-entry data caching method and storage medium

A multi-entry data caching technology, applied in electrical digital data processing, special-purpose data processing applications, and digital data information retrieval. Its stated effects include reduced code intrusion, improved efficiency, and reduced access pressure.


Detailed Description of the Embodiments

[0020] To explain in detail the technical content, structural features, objectives, and effects of the technical solution, the solution is described below with reference to specific embodiments and the accompanying drawings.

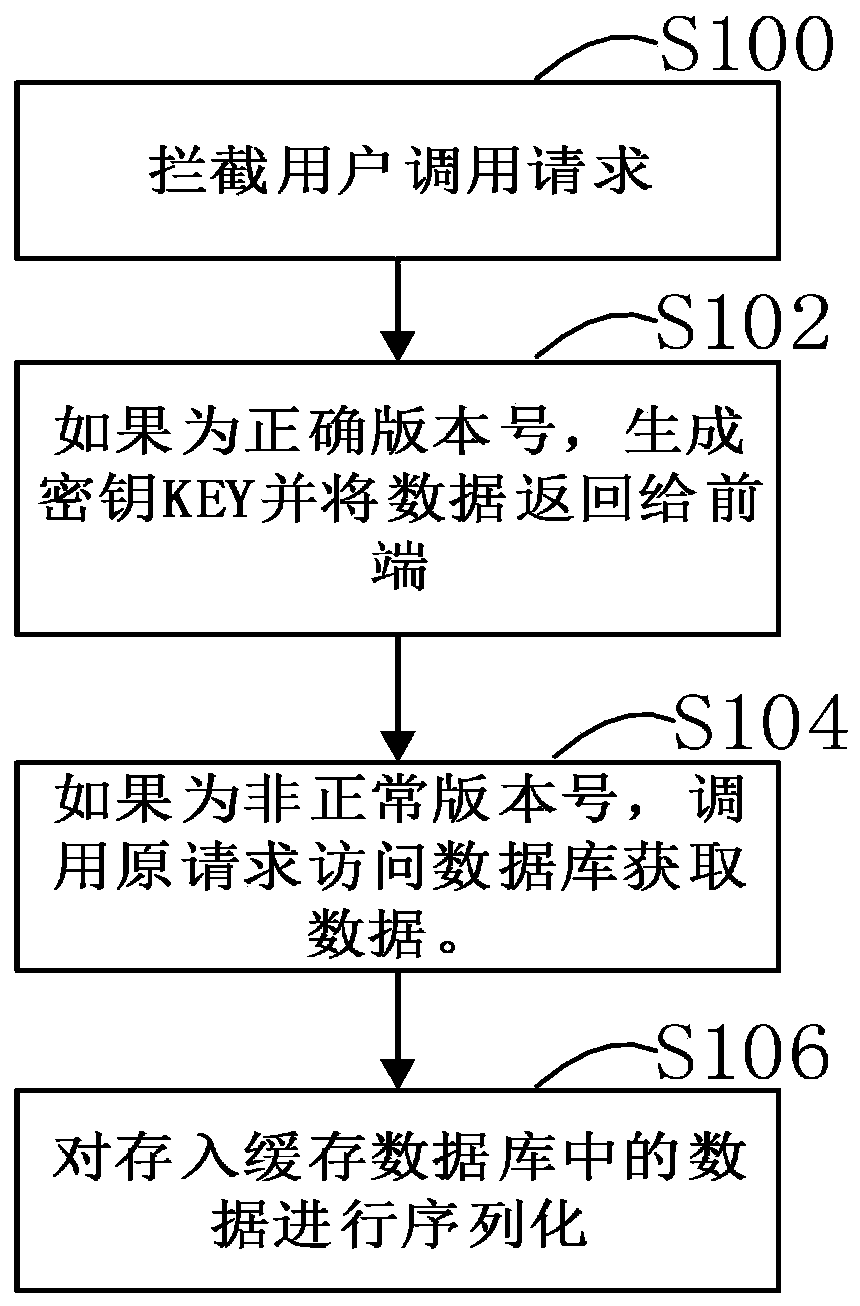

[0021] Referring to Figure 1, which is a flowchart of the scheme of the present invention, the working principle is as follows:

[0022] A multi-entry data caching method comprises the steps of intercepting an original cache call request and generating a key KEY from the cache call request.

[0023] The key KEY is generated as: entry platform code + namespace + class name + namespace version number + identification code.
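The key rule can be sketched as a small Python function. This is an illustrative sketch, not the patent's implementation: the function name `build_cache_key`, the `:` separator, and the example values are assumptions, since the source only says the five parts are concatenated in this order.

```python
KEY_SEPARATOR = ":"  # assumption: the source only says the parts are concatenated


def build_cache_key(platform_code: str, namespace: str, class_name: str,
                    namespace_version: int, identifier: str) -> str:
    """Join the five parts in the order of the generation rule:
    entry platform code + namespace + class name + namespace version number
    + identification code (hypothetical helper)."""
    parts = [platform_code, namespace, class_name, str(namespace_version), identifier]
    for part in parts:
        # Refuse parts that contain the separator so that two different
        # part combinations cannot collapse into the same key string.
        if KEY_SEPARATOR in part:
            raise ValueError(f"key part {part!r} contains {KEY_SEPARATOR!r}")
    return KEY_SEPARATOR.join(parts)


print(build_cache_key("web", "user", "UserService", 3, "42"))  # web:user:UserService:3:42
```

The separator and the check guard against collisions such as `ab` + `c` versus `a` + `bc`; whether the original method uses a separator is not stated. Because the entry platform code is part of the key, the same call arriving from two different entry platforms yields two distinct keys.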

[0024] The key is then used to query the cache server. If the data is found (a cache hit), it is returned. If the data is not found (a cache miss), the original cache call request is executed directly to obtain the data from the database, and the data and its corresponding key KEY are stored in the cache server...
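The intercept, look up, and fall-back flow can be sketched as a read-through cache implemented with a Python decorator. Everything here is hypothetical and for illustration only: `InMemoryCacheServer` stands in for the real cache server, `cached` plays the role of the interceptor, `UserService.get_user` stands in for the original call that hits the database, and the identification code is assumed to be the method name plus its positional arguments. The `bump_namespace_version` method is also an assumption: the source does not say how the namespace version number is used, but one common reason to put a version in a key is to invalidate every entry in a namespace at once.

```python
import functools
from typing import Any, Callable, Dict, List, Optional


class InMemoryCacheServer:
    """Stand-in for the cache server (hypothetical; a networked cache in practice)."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}
        self._namespace_versions: Dict[str, int] = {}

    def get(self, key: str) -> Optional[Any]:
        return self._data.get(key)

    def set(self, key: str, value: Any) -> None:
        self._data[key] = value

    def namespace_version(self, namespace: str) -> int:
        return self._namespace_versions.get(namespace, 1)

    def bump_namespace_version(self, namespace: str) -> None:
        # Assumption: a new version makes all older keys in the namespace unreachable.
        self._namespace_versions[namespace] = self.namespace_version(namespace) + 1


def cached(cache: InMemoryCacheServer, platform_code: str, namespace: str) -> Callable:
    """Intercept a method call, try the cache first, and fall back to the original call."""
    def decorator(func: Callable) -> Callable:
        # For a method, __qualname__ is "ClassName.method"; keep the class part.
        class_name = func.__qualname__.rsplit(".", 1)[0]

        @functools.wraps(func)
        def wrapper(*args: Any) -> Any:  # positional arguments only in this sketch
            # args[0] is `self`; the remaining arguments form the identification code.
            identifier = func.__name__ + "(" + ",".join(map(repr, args[1:])) + ")"
            key = ":".join([platform_code, namespace, class_name,
                            str(cache.namespace_version(namespace)), identifier])
            value = cache.get(key)
            if value is not None:      # cache hit: return the cached data
                return value
            value = func(*args)        # cache miss: run the original call (database)
            cache.set(key, value)      # store the data under its key
            return value
        return wrapper
    return decorator


cache = InMemoryCacheServer()
db_calls: List[int] = []


class UserService:
    @cached(cache, platform_code="web", namespace="user")
    def get_user(self, user_id: int) -> dict:
        db_calls.append(user_id)  # records each real "database" query
        return {"id": user_id, "name": f"user-{user_id}"}


svc = UserService()
svc.get_user(42)                      # miss: queries the database
svc.get_user(42)                      # hit: served from the cache
cache.bump_namespace_version("user")
svc.get_user(42)                      # miss again: the version in the key changed
print(db_calls)                       # [42, 42]
```

Placing the caching logic in a decorator means the service method body contains no cache code at all, which is one way to read the "reduced code intrusion" effect. In this sketch a `None` result is never cached, so such calls always reach the database.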
