Neural network pruning method based on combination of sparse learning and genetic algorithm

This neural network and genetic algorithm technique, classified under neural learning methods, biological neural network models, and genetic rules, addresses three problems: unordered weight removal, low neural network compression rates, and damage to the neural network's data structure. It reduces precision loss and improves the compression ratio.


Embodiment Construction

[0031] The embodiments and effects of the present invention will be further described in detail below in conjunction with the accompanying drawings.

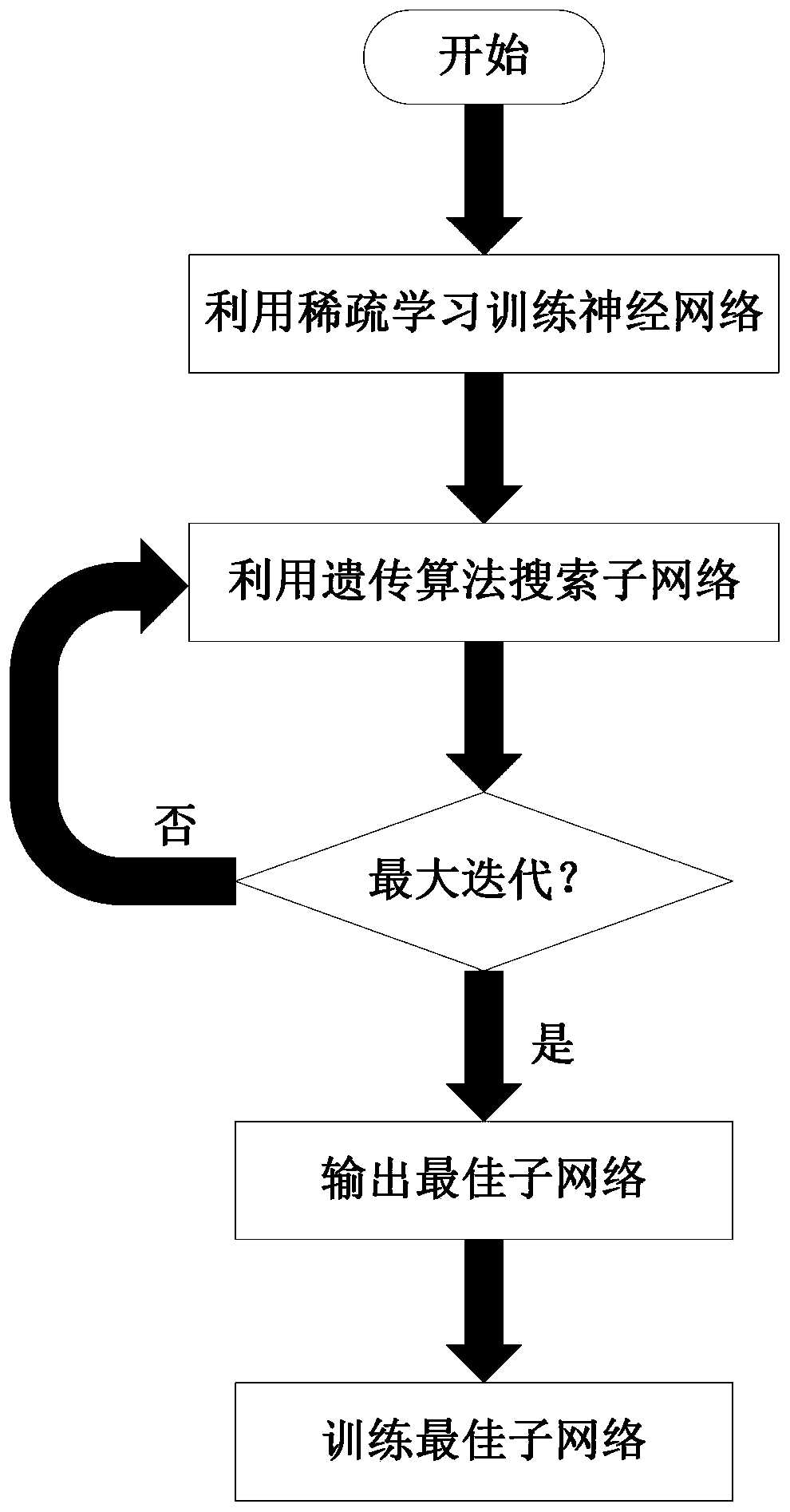

[0032] Referring to Figure 1, the implementation steps of this example are as follows:

[0033] Step 1, train the neural network using sparse learning.

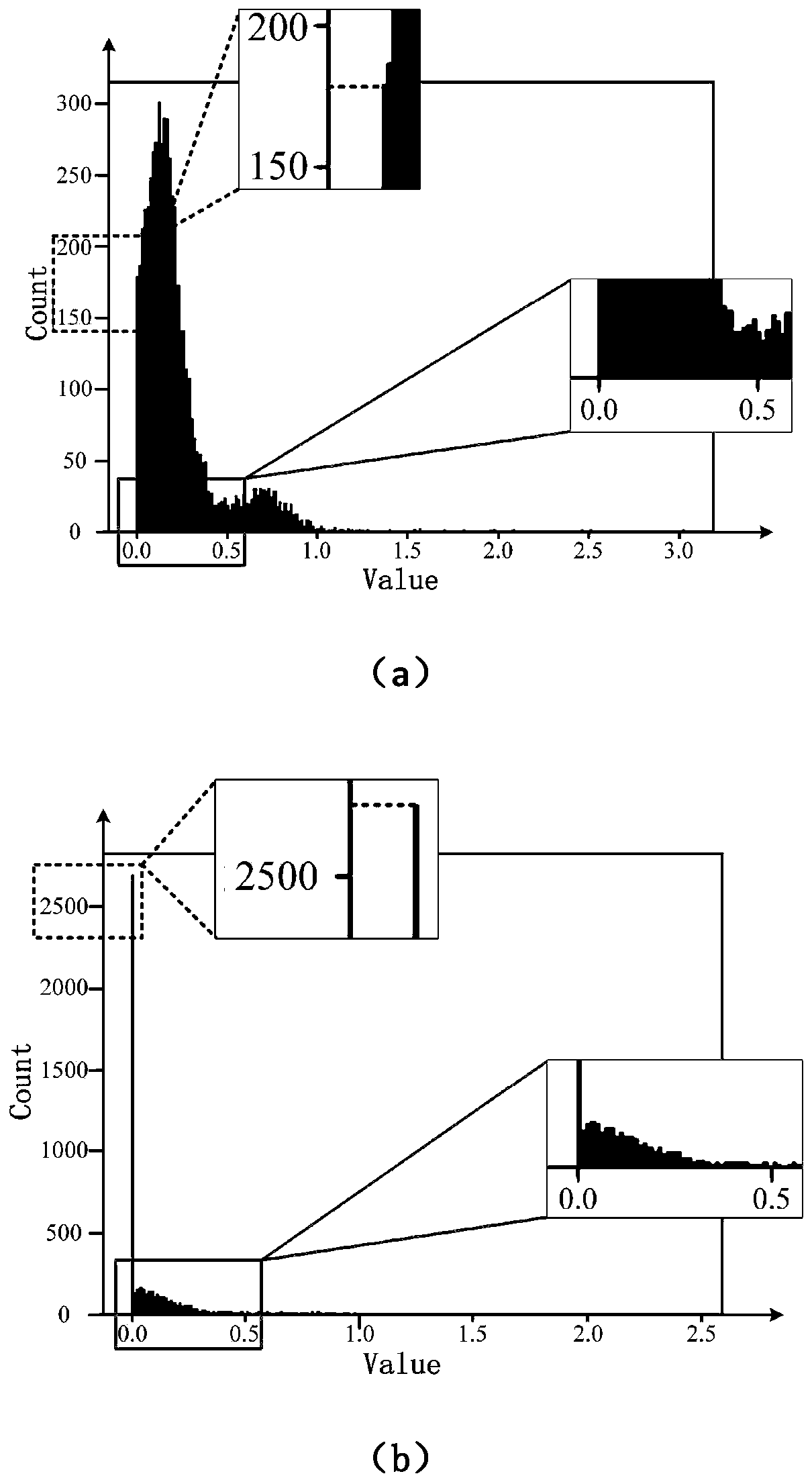

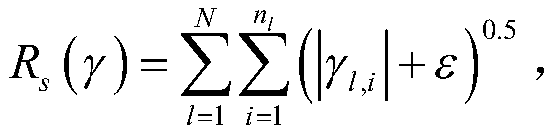

[0034] (1.1) Construct a penalty term from the scaling factors of all channels in the neural network, using the following formula:

[0035]

[0036] where $R_s(\gamma)$ denotes the penalty term, $N$ the total number of layers in the neural network, $n_l$ the total number of channels in the $l$-th layer, $\gamma_{l,i}$ the scaling factor of the $i$-th channel of the $l$-th layer, $|\gamma_{l,i}|$ the absolute value of $\gamma_{l,i}$, and $\varepsilon$ a constant constraint term;
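The structure of the penalty described in step (1.1), a sum over all layers and all channels of a term built from $|\gamma_{l,i}|$ and $\varepsilon$, can be sketched in plain Python. The formula itself does not appear in this text, so the per-channel term used here is only a placeholder: the hypothetical helper `smoothed_abs_ratio` computes $|\gamma| / (|\gamma| + \varepsilon)$, which is an assumption and not necessarily the patent's expression. The function and parameter names (`sparsity_penalty`, `channel_term`, `gammas`) are likewise illustrative.

```python
from typing import Callable, Sequence


def smoothed_abs_ratio(gamma: float, eps: float) -> float:
    """Placeholder per-channel term |gamma| / (|gamma| + eps).

    ASSUMPTION: the source omits the actual formula; this form is
    only a stand-in that uses the same symbols (|gamma| and eps).
    """
    a = abs(gamma)
    return a / (a + eps)


def sparsity_penalty(
    gammas: Sequence[Sequence[float]],
    eps: float = 1e-3,
    channel_term: Callable[[float, float], float] = smoothed_abs_ratio,
) -> float:
    """Sum a per-channel term over every channel of every layer.

    gammas[l][i] is the scaling factor of channel i in layer l, so the
    outer loop runs over the N layers and the inner loop over the n_l
    channels of layer l.
    """
    if eps <= 0:
        raise ValueError("eps must be positive")
    total = 0.0
    for layer in gammas:          # l = 1 .. N
        for gamma in layer:       # i = 1 .. n_l
            total += channel_term(gamma, eps)
    return total


if __name__ == "__main__":
    # Two layers: the first has two channels, the second has one.
    example = [[0.0, 1.0], [3.0]]
    print(sparsity_penalty(example, eps=1.0))
```

Passing a different `channel_term` (for example `lambda g, eps: abs(g)` for a plain L1 penalty) swaps the per-channel expression without touching the double sum, which is the only part the surrounding definitions fix unambiguously.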

[0037] (1.2) On the basis of the original cross-entropy loss function $f_{old}(x)$ of the neural network, the penalty term $R_s(\gamma)$ is added to t...
