Human body motion posture capturing method and system

A human motion and posture capture technology, applied in the field of motion capture, that addresses the problems of requiring a large number of sensors and the high cost of capture.

Examples

Embodiment 1

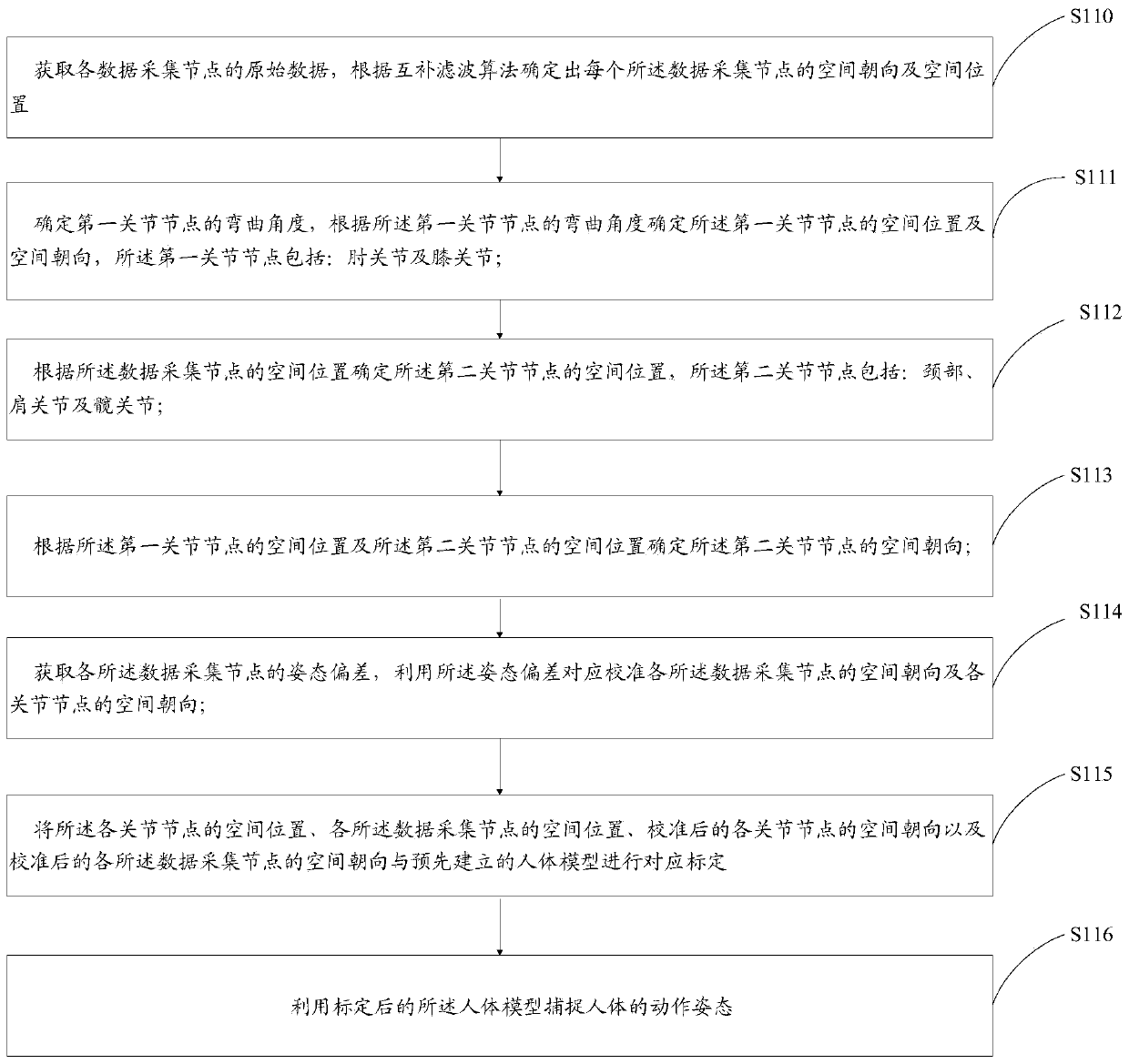

[0059] The present embodiment provides a method for capturing human body movements and postures, characterized in that the method includes:

[0060] S110. Obtain the raw data of each data acquisition node, and determine the spatial orientation and spatial position of each data acquisition node using a complementary filtering algorithm;
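The excerpt does not spell out the complementary filtering algorithm used in S110. As a rough illustration only, the sketch below shows a common textbook form of a complementary filter for roll and pitch, assuming each node supplies gyroscope and accelerometer readings. The function name `complementary_filter_step`, the blend weight `alpha`, and the axis conventions are assumptions made for this example and are not taken from the patent. Yaw and spatial position are not covered here.

```python
import math


def complementary_filter_step(pitch, roll, gyro, accel, dt, alpha=0.98):
    """One update of a basic complementary filter (angles in radians).

    gyro  = (gx, gy, gz): angular rates in rad/s
    accel = (ax, ay, az): accelerometer reading in any consistent unit
    alpha: hypothetical blend weight; closer to 1 trusts the gyroscope more
    """
    gx, gy, _ = gyro
    ax, ay, az = accel

    # Tilt angles implied by the measured gravity direction
    # (noisy under motion, but free of long-term drift).
    accel_roll = math.atan2(ay, az)
    accel_pitch = math.atan2(-ax, math.hypot(ay, az))

    # Integrate the gyroscope rates (smooth, but drifting) and blend them
    # with the accelerometer estimate. Treating gx and gy directly as roll
    # and pitch rates is a small-angle simplification.
    roll = alpha * (roll + gx * dt) + (1.0 - alpha) * accel_roll
    pitch = alpha * (pitch + gy * dt) + (1.0 - alpha) * accel_pitch
    return pitch, roll
```

With a stationary sensor, repeated calls pull an arbitrary initial estimate toward the tilt implied by the accelerometer, which is the drift-correcting behavior that motivates this kind of filter.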

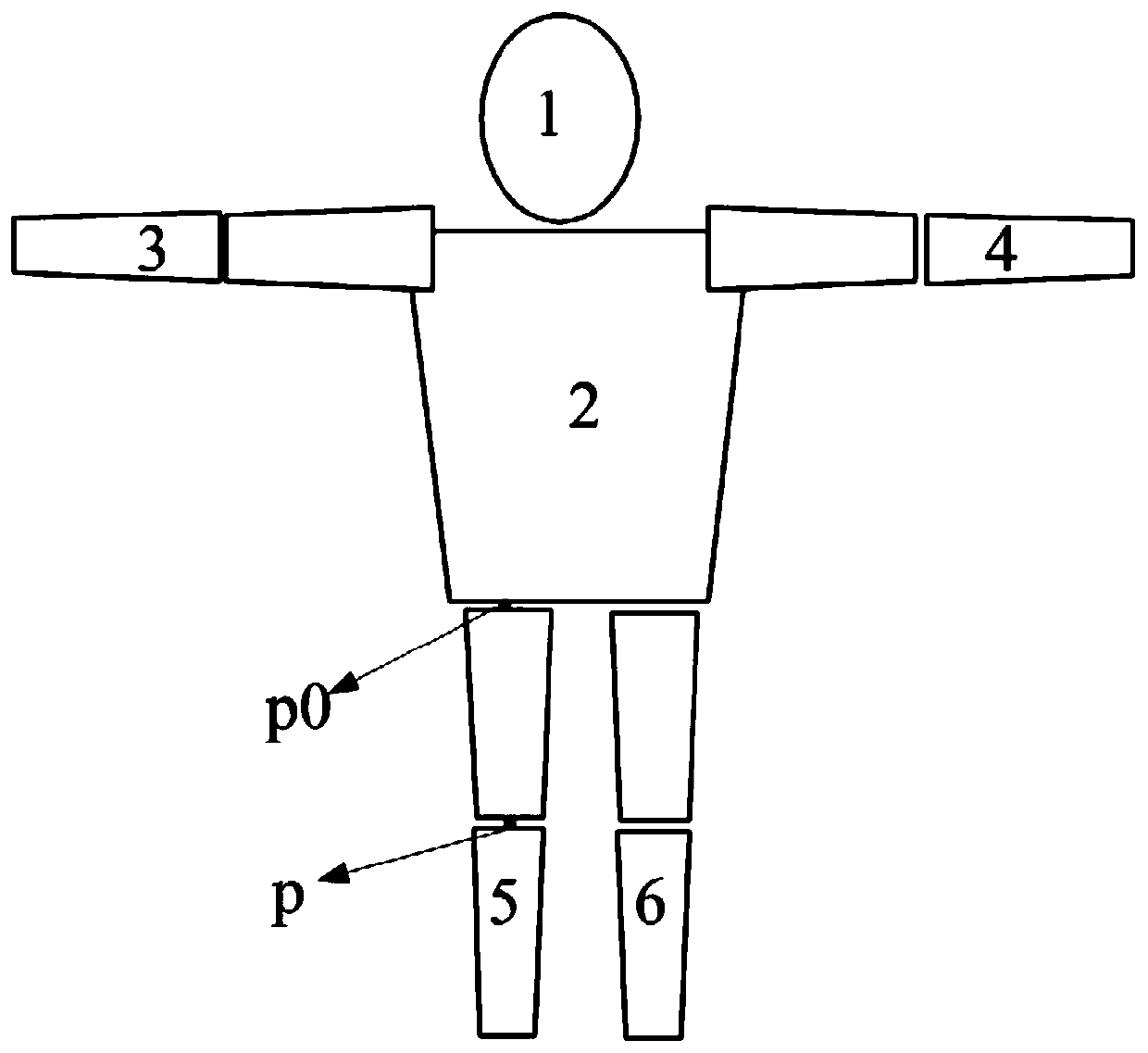

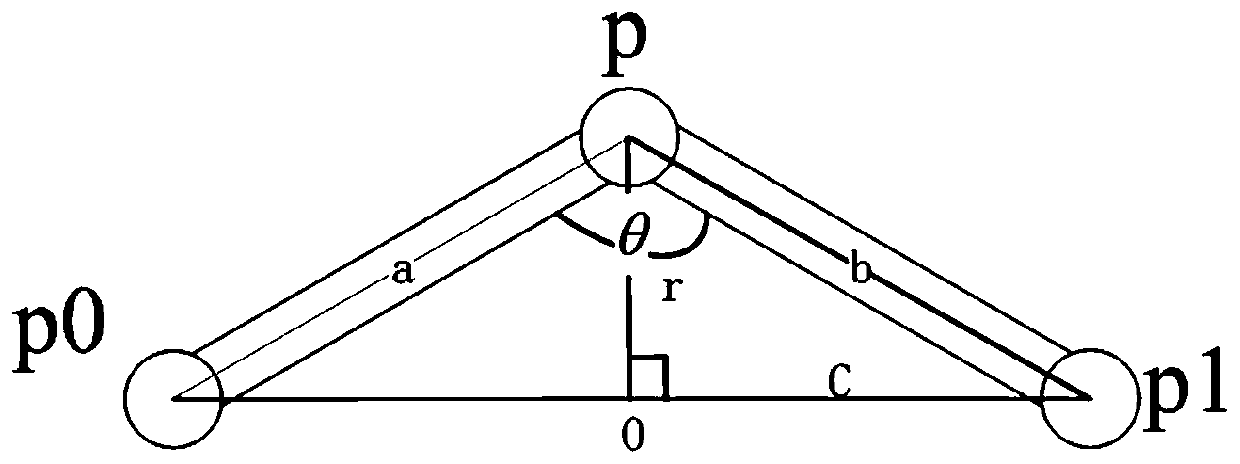

[0061] Before the raw data of each data acquisition node can be obtained, the data acquisition nodes must be determined. To reduce the number of sensors used, the application sets up 6 data acquisition nodes: head node 1, trunk node 2, first forearm node 3, second forearm node 4, first leg node 5 and second leg node 6. Each data acquisition node is indicated by a mark in Figure 2.
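For software that consumes the node data, the six nodes can be represented as a small enumeration. The sketch below is only an illustration: the class name `Node` and the member names are hypothetical, while the numeric values follow the reference numerals 1 to 6 given above.

```python
from enum import IntEnum


class Node(IntEnum):
    """The six data acquisition nodes, numbered as in paragraph [0061]."""
    HEAD = 1
    TRUNK = 2
    FIRST_FOREARM = 3
    SECOND_FOREARM = 4
    FIRST_LEG = 5
    SECOND_LEG = 6


# Example: one slot of per-node state, keyed by node.
latest_raw_data = {node: None for node in Node}
```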

[0062] After determining the data acquisition nodes, bind the data acquisition modules to each data acquisition node. Each data acquisition module includes a six-axis I...

Embodiment 2

[0116] The present embodiment provides a system for capturing human body movements and postures. As shown in Figure 6, the system includes: multiple data acquisition modules, a data collection module 51 and a terminal 52.

[0117] Before the data acquisition modules of the present application obtain the raw data of each data acquisition node, the data acquisition nodes must be determined. To reduce the number of sensors used, the application sets up 6 data acquisition nodes: head node 1, torso node 2, first forearm node 3, second forearm node 4, first lower leg node 5 and second lower leg node 6. Each data acquisition node is indicated by a circled mark in Figure 2.

[0118] After determining the data acquisition nodes, bind the data acquisition modules to each data acquisition node. Each data acquisition module includes a six-axis IMU and a three-axis magnetic sensor. Each IMU includes: a three-axis accelerometer and a t...
