Text-level neural machine translation method based on context memory network

A context-aware machine translation technique in the field of neural machine translation. It addresses the problems of low computational efficiency and insufficient context information, and achieves an efficient structure.

Embodiment Construction

[0039] The present invention will be further elaborated below in conjunction with the accompanying drawings of the description.

[0040] The present invention is a text-level neural machine translation method based on a context memory network, specifically comprising the following steps:

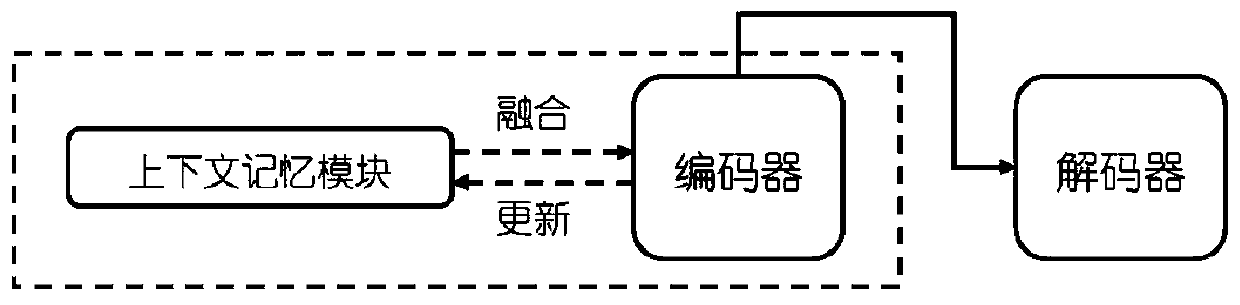

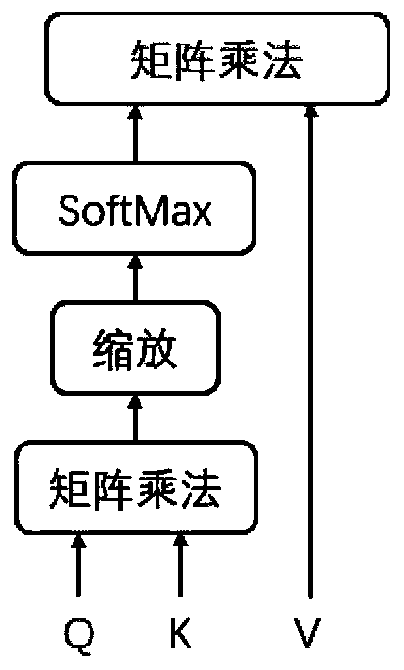

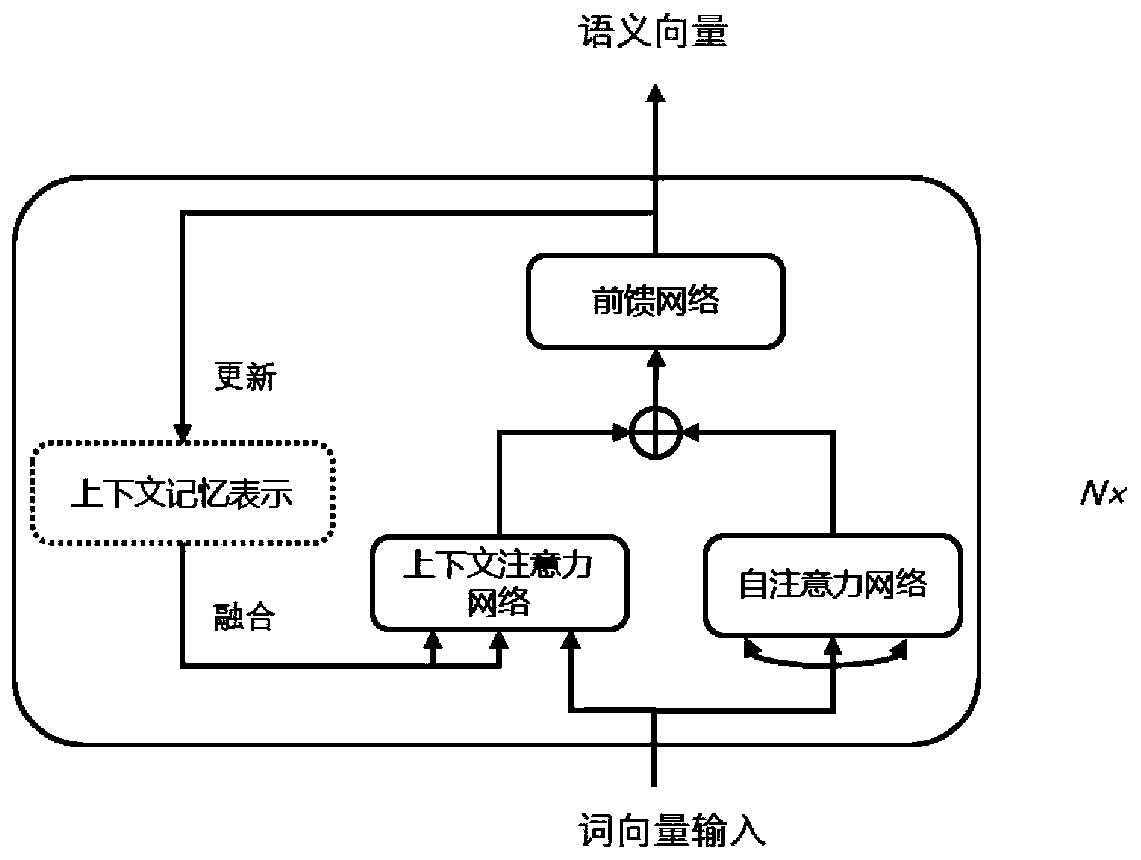

[0041] 1) Adopt the self-attention-based Transformer model and add a context memory module on the encoder side that dynamically maintains the context memory, forming a Transformer model based on the context memory network;

[0042] 2) Construct a parallel corpus, segment the source and target sentences into words, and convert the resulting word sequences into the corresponding word vector representations;

[0043] 3) On the encoder side, perform layer-by-layer feature extraction on the word embeddings of the source-language input, and introduce the corresponding context information through the context memory module, which is fused into the current coded repre...
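The three steps above can be illustrated with a minimal NumPy sketch. The text available here does not say how the context memory is read, written, or fused, so everything below is an assumption for illustration only: the names `ContextMemory` and `MemoryEncoderLayer` are hypothetical, the memory stores one mean-pooled vector per previous sentence with first-in-first-out eviction, tokens read it with single-head attention, and a sigmoid gate mixes the result into the encoder states. Whitespace splitting stands in for real word segmentation, LayerNorm and feed-forward sublayers are omitted, and all weights are random and untrained.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(x, axis=-1):
    # Numerically stable softmax.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v):
    # Single-head scaled dot-product attention: q (n, d), k/v (m, d) -> (n, d).
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


class ContextMemory:
    """Hypothetical memory: one summary vector per previous sentence, at most `slots`."""

    def __init__(self, slots, d_model):
        self.slots = slots
        self.mem = np.zeros((0, d_model))

    def read(self, h):
        # Each token attends over the stored context; an empty memory contributes zeros.
        if self.mem.shape[0] == 0:
            return np.zeros_like(h)
        return attention(h, self.mem, self.mem)

    def write(self, sent_vec):
        # Append the newest sentence summary and keep only the most recent `slots`.
        self.mem = np.vstack([self.mem, sent_vec[None, :]])[-self.slots:]


class MemoryEncoderLayer:
    """Hypothetical simplified encoder layer (no LayerNorm/FFN) with gated memory fusion."""

    def __init__(self, d_model, rng):
        s = 1.0 / np.sqrt(d_model)
        self.Wq, self.Wk, self.Wv = (rng.normal(0.0, s, (d_model, d_model)) for _ in range(3))
        self.Wg = rng.normal(0.0, s, (2 * d_model, d_model))

    def __call__(self, x, memory):
        # Self-attention over the current sentence with a residual connection.
        h = x + attention(x @ self.Wq, x @ self.Wk, x @ self.Wv)
        c = memory.read(h)  # per-token context vector read from the memory
        # Sigmoid gate decides, per dimension, how much context to mix in.
        g = 1.0 / (1.0 + np.exp(-(np.concatenate([h, c], axis=-1) @ self.Wg)))
        return g * h + (1.0 - g) * c


# Toy "document": consecutive source sentences, segmented on whitespace (assumption).
doc = ["the cat sat", "it was tired", "then it slept"]
vocab = {w: i for i, w in enumerate(sorted({w for s in doc for w in s.split()}))}
d_model = 8
emb = rng.normal(0.0, 1.0, (len(vocab), d_model))  # word-vector table (untrained)
layers = [MemoryEncoderLayer(d_model, rng) for _ in range(2)]
memory = ContextMemory(slots=2, d_model=d_model)

outputs = []
for sent in doc:
    x = emb[[vocab[w] for w in sent.split()]]  # words -> word vectors
    for layer in layers:
        x = layer(x, memory)  # layer-by-layer extraction with context fusion
    outputs.append(x)
    memory.write(x.mean(axis=0))  # mean-pooled sentence summary updates the memory
```

In this sketch the gate lets each layer fall back to the sentence-local representation when the stored context is unhelpful; in a trained system these weights would be learned from the parallel corpus rather than drawn at random.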

