Video target segmentation method based on motion attention

A target segmentation and attention technology, applied in the fields of image processing and computer vision. It addresses the problems that the precise position of the target object cannot be obtained, that segmentation results are limited to certain motion patterns, and that the target object drifts, so as to reduce useless features, improve robustness, and achieve precise segmentation.

Embodiment Construction

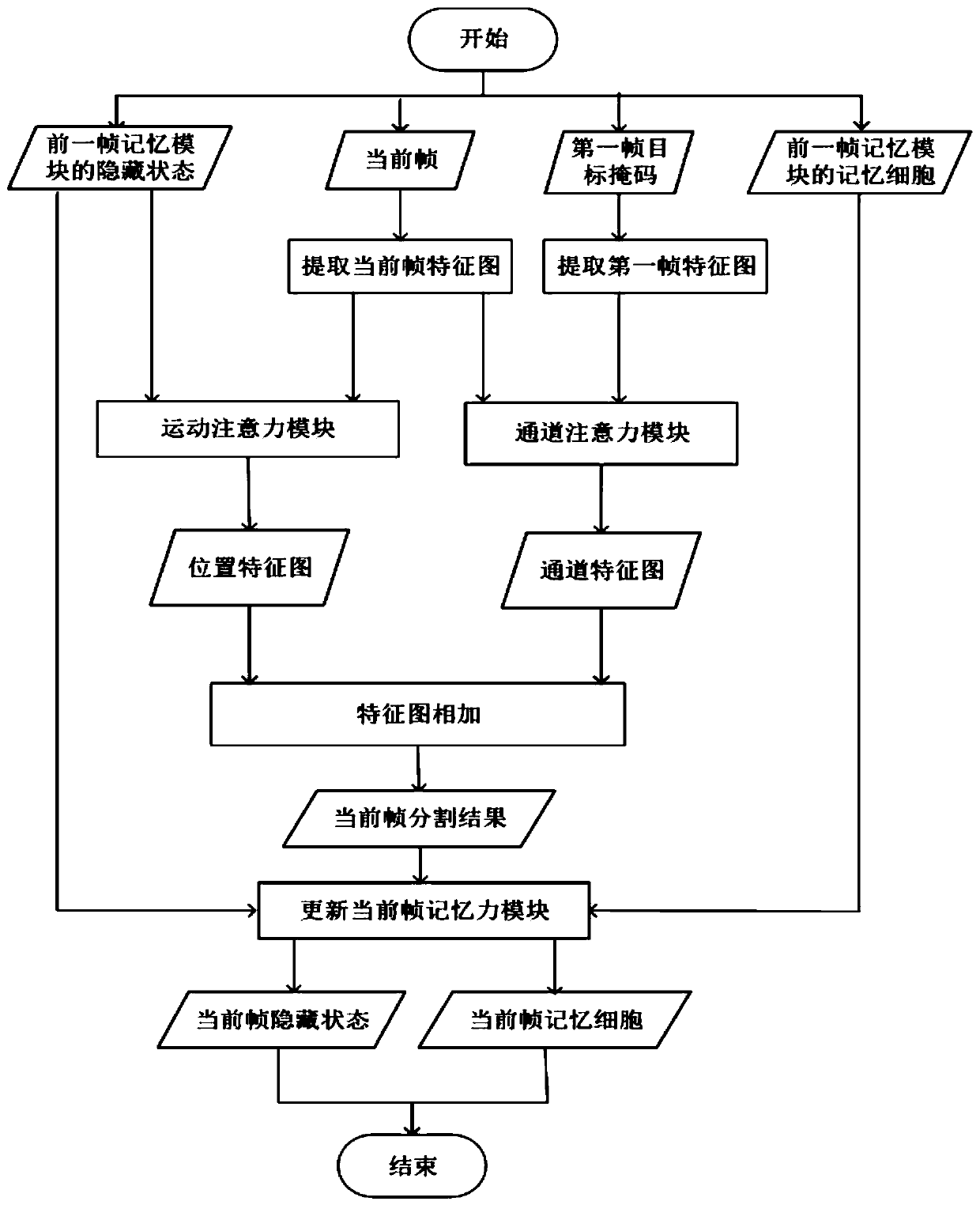

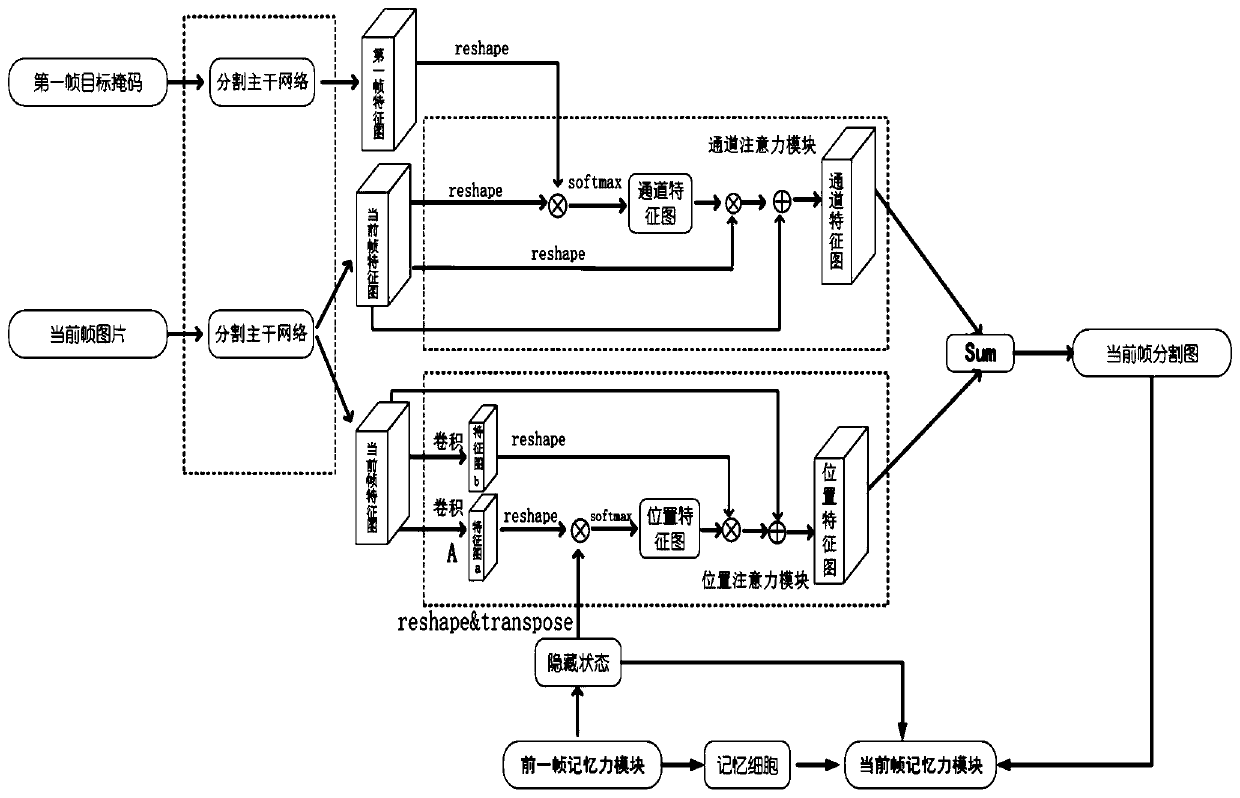

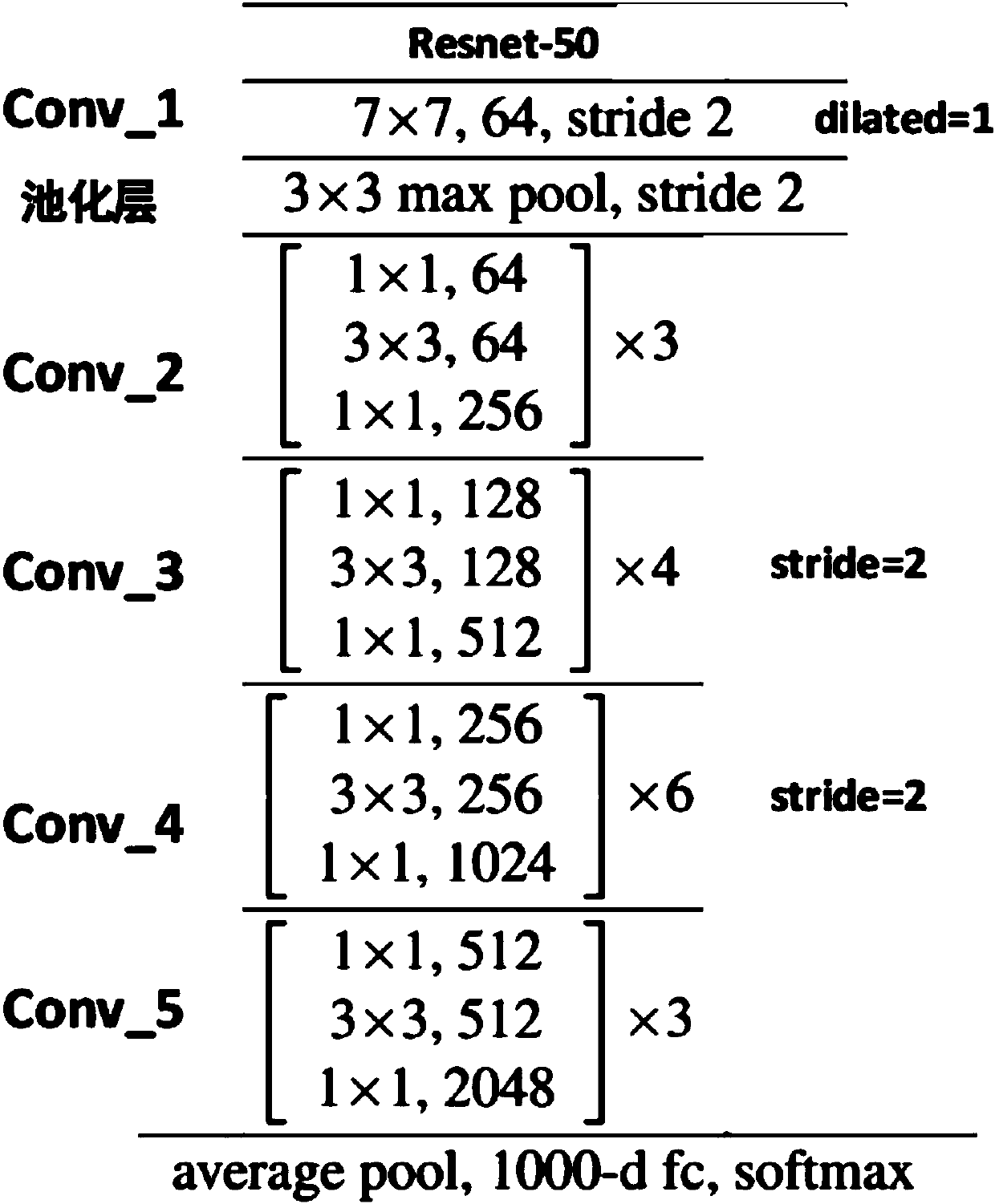

[0031] The present invention proposes a video target segmentation method based on motion attention. The method first obtains the feature maps of the first frame and the current frame, and then inputs the first-frame feature map, the current-frame feature map, and the target-object position information predicted by the memory module of the motion attention network at the previous frame into the motion attention network to obtain the segmentation result of the current frame. The invention is suitable for video target segmentation, is robust, and produces accurate segmentation results.
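The per-frame flow described in [0031] (first-frame features, current-frame features, and a position estimate carried over from the previous frame's memory module) can be sketched as below. This is a minimal illustration under stated assumptions, not the patented network: a strided average pool stands in for the feature extractor, the memory module is a hypothetical constant-velocity centroid tracker, the "position information" is encoded as a Gaussian spatial prior, and segmentation is a cosine-similarity match against a first-frame target prototype. All names (`STRIDE`, `pool`, `extract_features`, `MemoryModule`, `motion_attention`, `segment_video`, `sigma`, `thresh`) are hypothetical.

```python
import numpy as np

STRIDE = 4  # hypothetical downsampling factor of the stand-in backbone


def pool(x):
    """Average-pool an (H, W, C) array by STRIDE (crops any remainder)."""
    h, w, c = x.shape
    h, w = h - h % STRIDE, w - w % STRIDE
    return x[:h, :w].reshape(h // STRIDE, STRIDE, w // STRIDE, STRIDE, c).mean(axis=(1, 3))


def extract_features(frame):
    """Stand-in feature extractor (assumption): pooled RGB values."""
    return pool(frame.astype(float))


def position_prior(shape, center, sigma):
    """Gaussian map centred on the predicted target position (hypothetical encoding)."""
    ys, xs = np.mgrid[:shape[0], :shape[1]]
    d2 = (ys - center[0]) ** 2 + (xs - center[1]) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))


class MemoryModule:
    """Hypothetical memory: stores past target centroids and predicts the
    next one with a constant-velocity motion model."""

    def __init__(self, first_center):
        self.history = [np.asarray(first_center, dtype=float)]

    def predict(self):
        if len(self.history) < 2:
            return self.history[-1]
        # last position plus last displacement
        return 2 * self.history[-1] - self.history[-2]

    def update(self, mask):
        # Fall back to the motion prediction if the target vanished (assumption).
        center = np.argwhere(mask).mean(axis=0) if mask.any() else self.predict()
        self.history.append(center)


def motion_attention(first_feat, first_mask, cur_feat, memory, sigma, thresh):
    """One step: match current features to the first-frame target, weighted
    by the position predicted from the previous frame's memory."""
    proto = first_feat[first_mask].mean(axis=0)  # first-frame target prototype
    den = np.linalg.norm(cur_feat, axis=-1) * np.linalg.norm(proto) + 1e-8
    sim = (cur_feat @ proto) / den  # cosine similarity per feature cell
    prior = position_prior(sim.shape, memory.predict(), sigma)
    mask = sim * prior > thresh  # suppress features far from the predicted position
    memory.update(mask)  # memory now holds this frame's position for the next frame
    return mask


def segment_video(frames, first_mask, sigma=4.0, thresh=0.5):
    """Segment frames[1:] given a pixel-level mask of the first frame."""
    first_feat = extract_features(frames[0])
    mask0 = pool(first_mask[..., None].astype(float))[..., 0] > 0.5
    memory = MemoryModule(np.argwhere(mask0).mean(axis=0))
    masks = [mask0]
    for frame in frames[1:]:
        cur_feat = extract_features(frame)
        masks.append(motion_attention(first_feat, mask0, cur_feat, memory, sigma, thresh))
    return masks
```

The returned masks are at feature resolution (1/`STRIDE` of the input); a real system would upsample them and would learn the features, memory, and attention weighting rather than hand-coding them as done here.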

[0032] The present invention will be described in more detail below in conjunction with specific examples and drawings.

[0033] The present invention includes the following steps:

[0034] 1) Obtain the YouTube and DAVIS datasets as the training set and test set of the model, respectively;

[0035] 2) Preprocess the training data. Crop each training sample (video frame) and the first fram...
