Neural machine translation decoding acceleration method based on discrete attention mechanism

This neural machine translation technique, applied to decoding acceleration for attention-based models, addresses the inability of existing systems to take advantage of low-precision numerical calculations. Its stated effects are faster real-time response, lower hardware cost, and reduced computational complexity.

Embodiment Construction

[0039] The present invention is described in further detail below with reference to the accompanying drawings.

[0040] The present invention optimizes the decoding speed of an attention-based neural machine translation system from the perspective of low-precision numerical operations. The aim is to greatly increase the decoding speed of the translation system at the cost of a small loss in translation performance, striking a balance between performance and speed.
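To make the idea of low-precision numerical operations in attention more concrete, the following is a minimal sketch only. It assumes symmetric 8-bit linear quantization of the query and key vectors, which is a common generic scheme and is not confirmed by this excerpt as the discretization used in the invention. All function names and the toy vectors are hypothetical.

```python
import math

def quantize(vec, num_bits=8):
    """Symmetric linear quantization of a float vector to signed integers.

    Assumption: a generic int8-style scheme, not the patent's own method.
    Returns the integer vector and the scale needed to map it back to floats.
    """
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(x) for x in vec)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(x / scale))) for x in vec]
    return q, scale

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights_float(query, keys):
    """Reference scaled dot-product attention weights in floating point."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)

def attention_weights_int8(query, keys):
    """Same weights, but each dot product is accumulated over integers only."""
    d = len(query)
    q_query, s_q = quantize(query)
    scores = []
    for key in keys:
        q_key, s_k = quantize(key)
        int_score = sum(a * b for a, b in zip(q_query, q_key))  # integer arithmetic
        scores.append(int_score * s_q * s_k / math.sqrt(d))     # rescale once
    return softmax(scores)

# Hypothetical toy inputs: one query vector and three key vectors.
query = [0.5, -1.0, 0.25, 0.75]
keys = [
    [1.0, 0.0, -0.5, 0.25],
    [-0.25, 0.5, 1.0, -1.0],
    [0.75, -0.75, 0.5, 0.5],
]

w_float = attention_weights_float(query, keys)
w_int8 = attention_weights_int8(query, keys)
max_err = max(abs(a - b) for a, b in zip(w_float, w_int8))
```

In this sketch, `max_err` measures how far the quantized attention weights drift from the floating-point reference, which is one simple way to examine the trade-off between speed and translation performance that the paragraph above describes.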

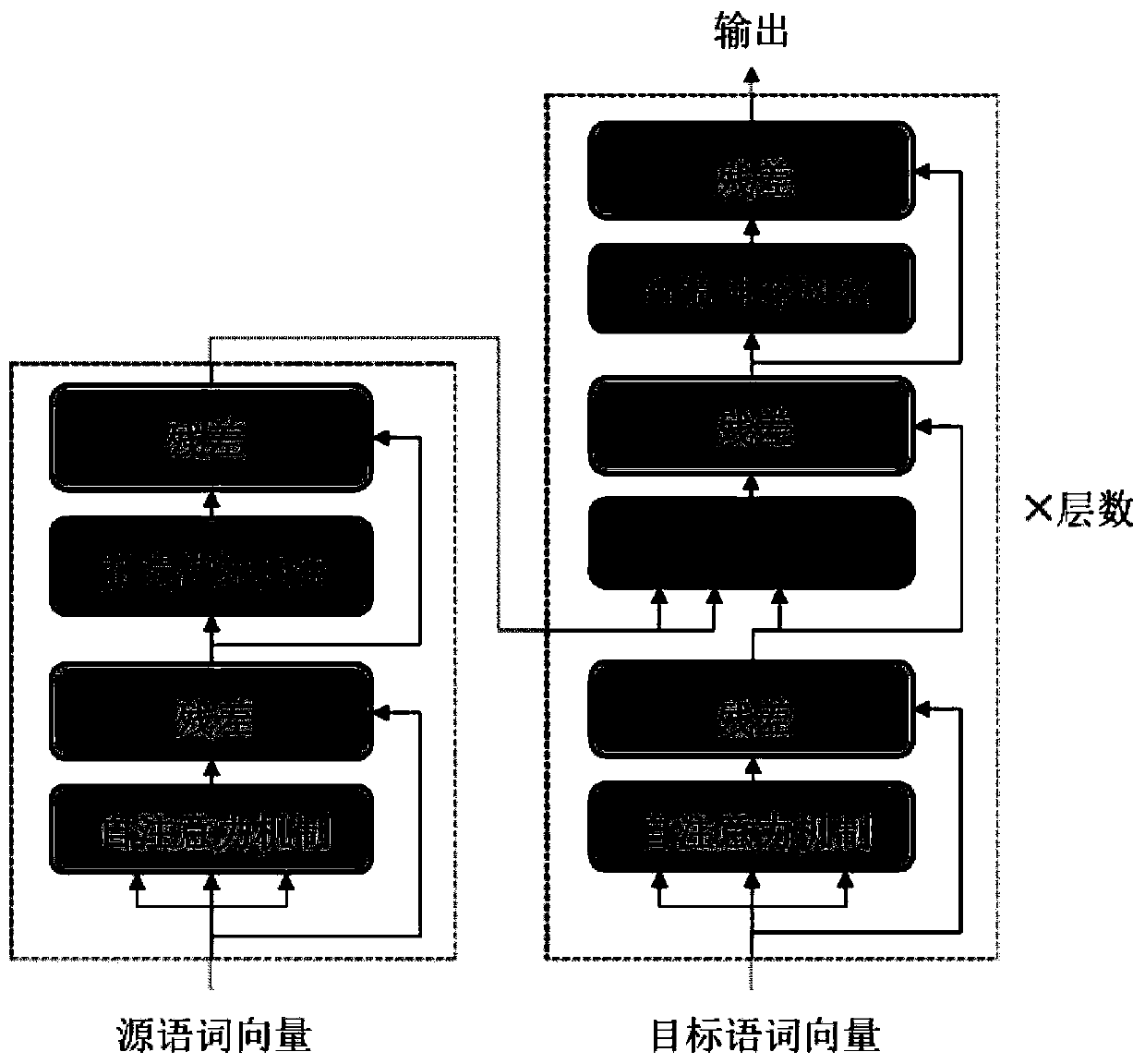

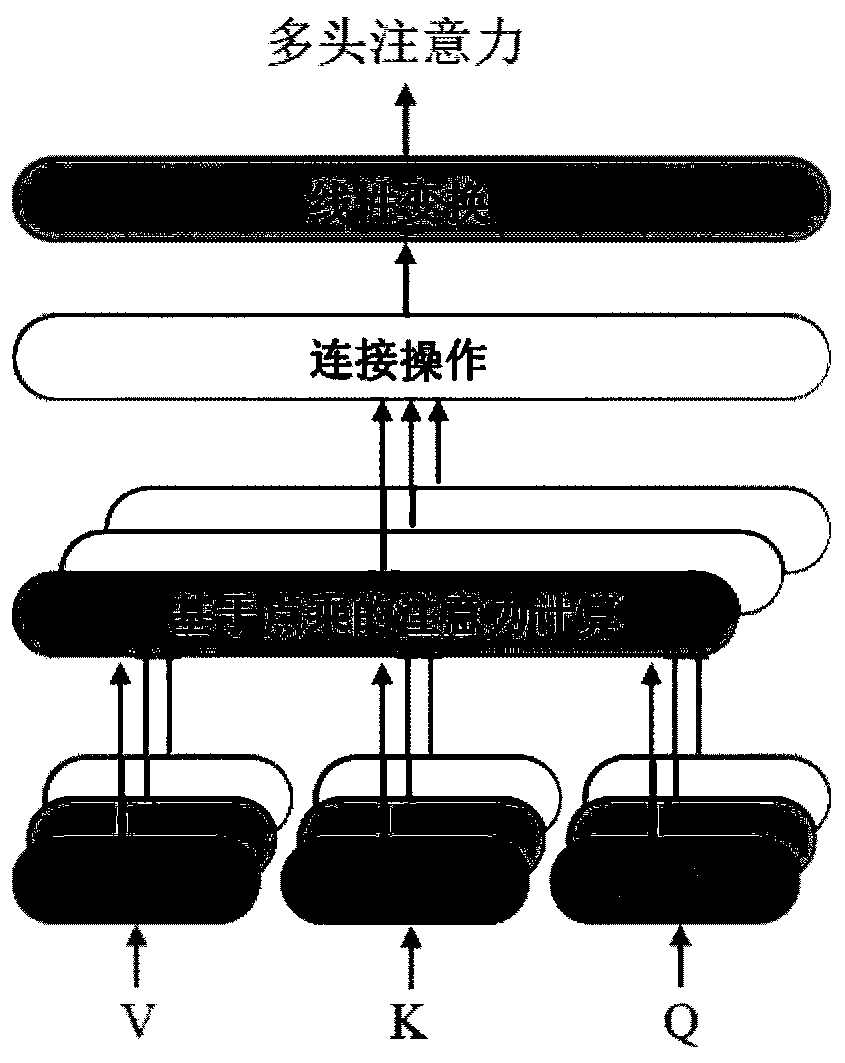

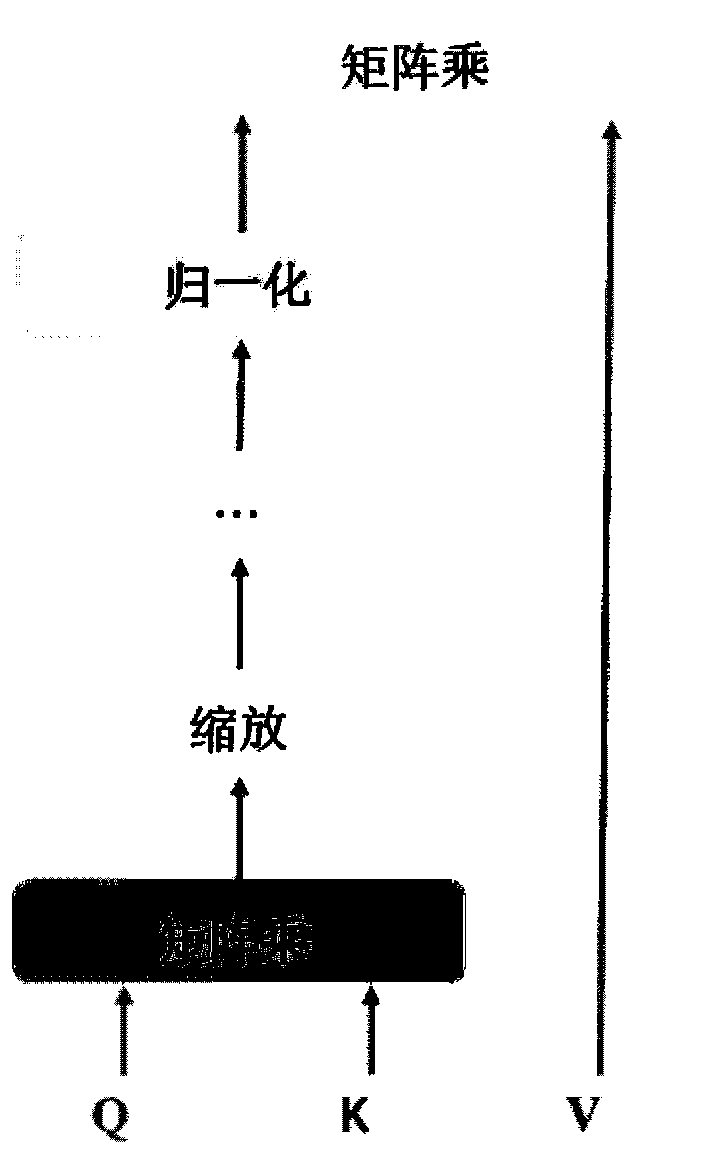

[0041] The neural machine translation decoding acceleration method based on a discrete attention mechanism according to the present invention comprises the following steps:

[0042] 1) Construct a parallel training corpus and an attention-based neural machine translation model, generate the machine translation vocabulary from the parallel corpus, and train the model until convergence; the resulting model parameters serve as the baseline system;

[0043] 2) Convert some parameters of the...
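Step 1) above mentions generating a machine translation vocabulary from the parallel corpus. As a rough illustration only, the sketch below builds frequency-ranked source and target vocabularies from whitespace-tokenized sentence pairs. The special tokens, the function name `build_vocab`, and the toy sentence pairs are assumptions for the example and do not come from the patent text.

```python
from collections import Counter

# Hypothetical special tokens; the patent excerpt does not specify any.
SPECIAL_TOKENS = ["<pad>", "<unk>", "<s>", "</s>"]

def build_vocab(sentences, max_size=None, min_freq=1):
    """Map each token to an integer id, most frequent tokens first.

    Assumes whitespace tokenization; ties are broken alphabetically so the
    result is deterministic.
    """
    counts = Counter(tok for sent in sentences for tok in sent.split())
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    words = [w for w, c in ranked if c >= min_freq]
    if max_size is not None:
        words = words[: max(0, max_size - len(SPECIAL_TOKENS))]
    return {tok: i for i, tok in enumerate(SPECIAL_TOKENS + words)}

# Toy parallel corpus of (source, target) sentence pairs, for illustration.
parallel_corpus = [
    ("ich mag tee", "i like tea"),
    ("ich mag kaffee", "i like coffee"),
]

src_vocab = build_vocab(src for src, _ in parallel_corpus)
tgt_vocab = build_vocab(tgt for _, tgt in parallel_corpus)
```

Real systems often use subword segmentation rather than whole words; the whole-word version here is chosen only to keep the example short.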
