Multi-mechanism attention merging multi-path neural machine translation method

An attention-based machine translation technique, applied in neural learning methods, neural architectures, and natural language translation. It addresses problems such as inconsistent attention calculation results across translation mechanisms and achieves good translation results.

Examples

Embodiment 1

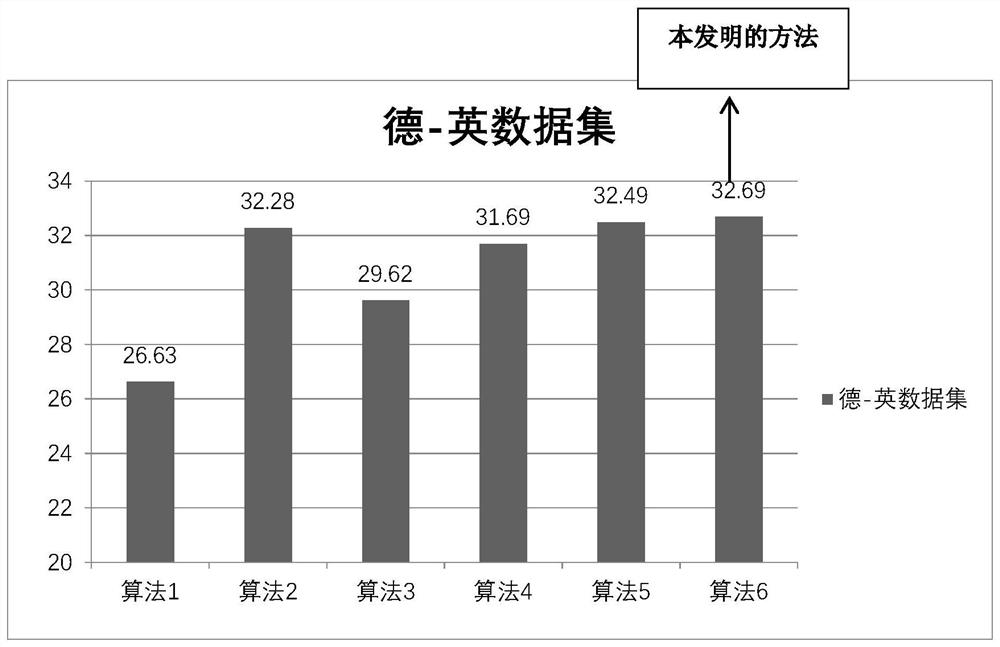

[0032] Embodiment 1: In this example, a German-English corpus is used as the translation corpus, and the multiple translation mechanisms selected are the CNN translation mechanism, the Transformer translation mechanism, and the Tree-Transformer translation mechanism.

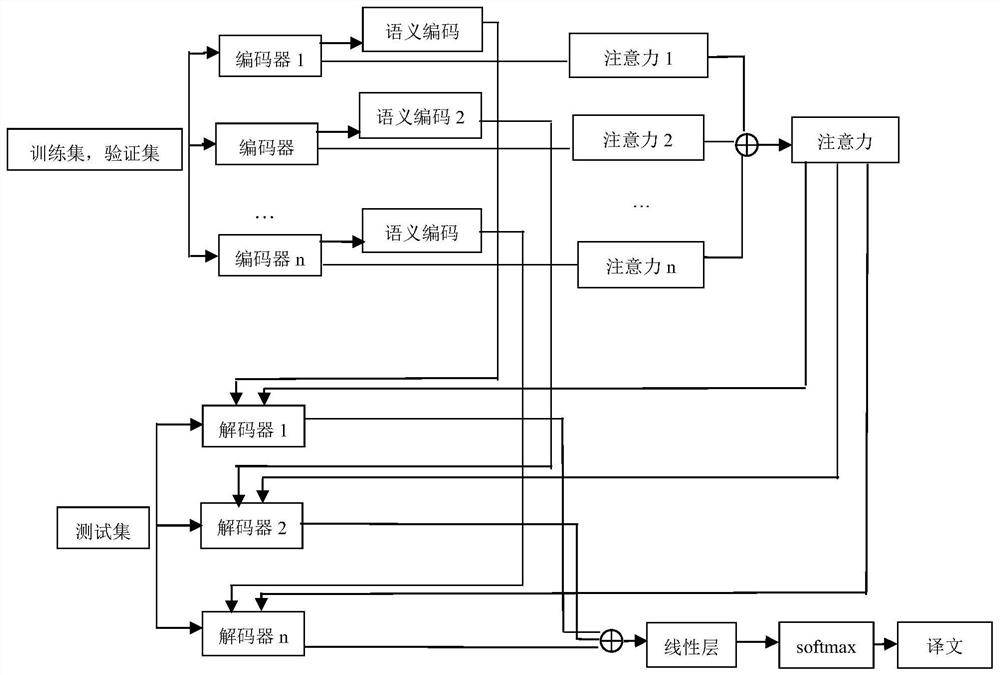

[0033] As shown in Figures 1-2, the specific steps of the multi-path neural machine translation method with multi-mechanism combined attention are as follows:

[0034] Model building process:

[0035] Step1. Download the German and English corpus from the website, and determine which translation mechanisms to use;

[0036] Step2. Preprocess the training corpus: use MOSES to tokenize, lowercase, and clean the bilingual corpus, keeping only sentence pairs with a length of less than 175; then use the BPE algorithm to segment all the preprocessed data into subword units;

[0037] Step3. Generate the training set, validation set, and test set: randomly ext...
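
The preprocessing in Step2 can be illustrated with a minimal sketch. It is not the patent's implementation: whitespace splitting stands in for the MOSES tokenizer, the length limit of 175 is assumed to be counted in tokens, and the function names (`clean_pairs`, `learn_bpe`, `merge_pair`, `apply_bpe`) are hypothetical. The BPE part follows the commonly described scheme of repeatedly merging the most frequent adjacent symbol pair.

```python
from collections import Counter

MAX_LEN = 175  # Step2 keeps sentence pairs with a length of less than 175


def clean_pairs(pairs, max_len=MAX_LEN):
    """Lowercase, tokenize, and length-filter (source, target) sentence pairs.

    Assumption: whitespace splitting is a stand-in for MOSES tokenization,
    and length is measured in tokens.
    """
    kept = []
    for src, tgt in pairs:
        src_tok = src.lower().split()
        tgt_tok = tgt.lower().split()
        if not src_tok or not tgt_tok:
            continue  # data cleaning: drop pairs with an empty side
        if len(src_tok) < max_len and len(tgt_tok) < max_len:
            kept.append((src_tok, tgt_tok))
    return kept


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(tokens, num_merges):
    """Learn up to `num_merges` BPE merge operations from a list of tokens."""
    # Each word starts as a sequence of characters plus an end-of-word marker.
    vocab = Counter(tuple(tok) + ("</w>",) for tok in tokens)
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pair_counts[(a, b)] += freq
        if not pair_counts:
            break
        # Most frequent pair; ties are broken by the pair itself for determinism.
        best = max(pair_counts, key=lambda p: (pair_counts[p], p))
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def apply_bpe(token, merges):
    """Segment one token by applying the learned merges in order."""
    symbols = tuple(token) + ("</w>",)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


if __name__ == "__main__":
    pairs = [("Das ist ein Haus", "This is a house"), ("", "empty source")]
    cleaned = clean_pairs(pairs)
    target_tokens = [tok for _, tgt in cleaned for tok in tgt]
    merges = learn_bpe(target_tokens, num_merges=10)
    print([apply_bpe(tok, merges) for tok in cleaned[0][1]])
```

In practice the merges would be learned once on the training data and then applied unchanged to the validation and test data, so that all three sets share the same subword vocabulary.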
