Language model training method and system, mobile terminal and storage medium

A language model training technology applied in natural language data processing, speech analysis, speech recognition, and related fields. It addresses the low training efficiency and poor scalability of language models, and improves training efficiency and accuracy as well as recognition efficiency.


Examples

Embodiment 1

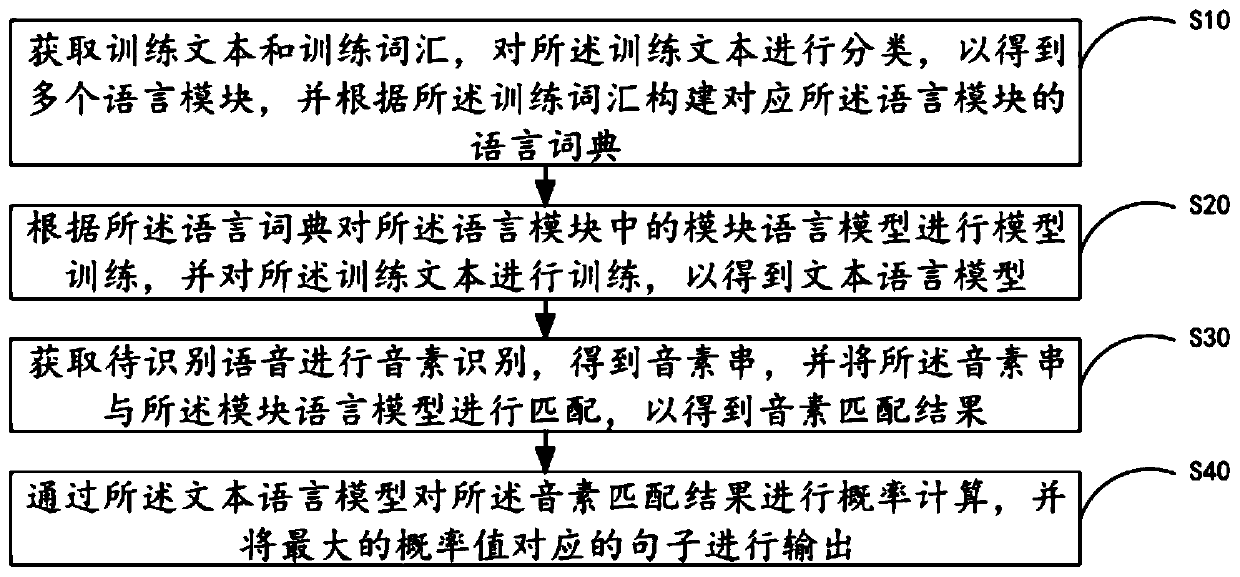

[0045] Referring to Figure 1, which is a flow chart of the language model training method provided by the first embodiment of the present invention, the method includes the following steps:

[0046] Step S10: obtain training text and a training vocabulary, classify the training text to obtain a plurality of language modules, and construct a language dictionary corresponding to each language module according to the training vocabulary;

[0047] The language of the training text can be set as required; for example, it can be Chinese, English, Korean, or Japanese. The training vocabulary and the training text can be obtained from a database, and the training vocabulary includes noun, verb, adjective, and adverb vocabularies, etc.;

[0048] Specifically, in this step, a classifier can be used to classify the training text according to the different word attributes of the text it contains, so ...
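The classification in step S10 can be illustrated with a minimal sketch. It assumes whitespace-tokenized text and substitutes a lookup in a hypothetical word-attribute lexicon (`TRAINING_VOCABULARY`) for the trained classifier the text mentions; the function name `classify_training_text` and the `"other"` bucket are likewise illustrative, not taken from the patent.

```python
from collections import defaultdict

# Hypothetical training vocabulary mapping each word to its word attribute.
# The patent obtains the vocabulary from a database; this dict is illustrative.
TRAINING_VOCABULARY = {
    "model": "noun", "speech": "noun", "text": "noun",
    "train": "verb", "recognize": "verb",
    "accurate": "adjective", "fast": "adjective",
    "quickly": "adverb",
}


def classify_training_text(sentences, vocabulary):
    """Group the tokens of the training text into language modules by word attribute.

    A lexicon lookup stands in for the classifier described in the text;
    tokens with no known attribute are collected in an "other" bucket.
    """
    modules = defaultdict(list)
    for sentence in sentences:
        for token in sentence.lower().split():
            modules[vocabulary.get(token, "other")].append(token)
    return dict(modules)


if __name__ == "__main__":
    text = ["Train the model quickly", "Recognize speech text"]
    for name, words in classify_training_text(text, TRAINING_VOCABULARY).items():
        print(name, words)
```

A real system would replace the lexicon lookup with a part-of-speech tagger or trained classifier, which can assign attributes from context rather than from the word alone.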

Embodiment 2

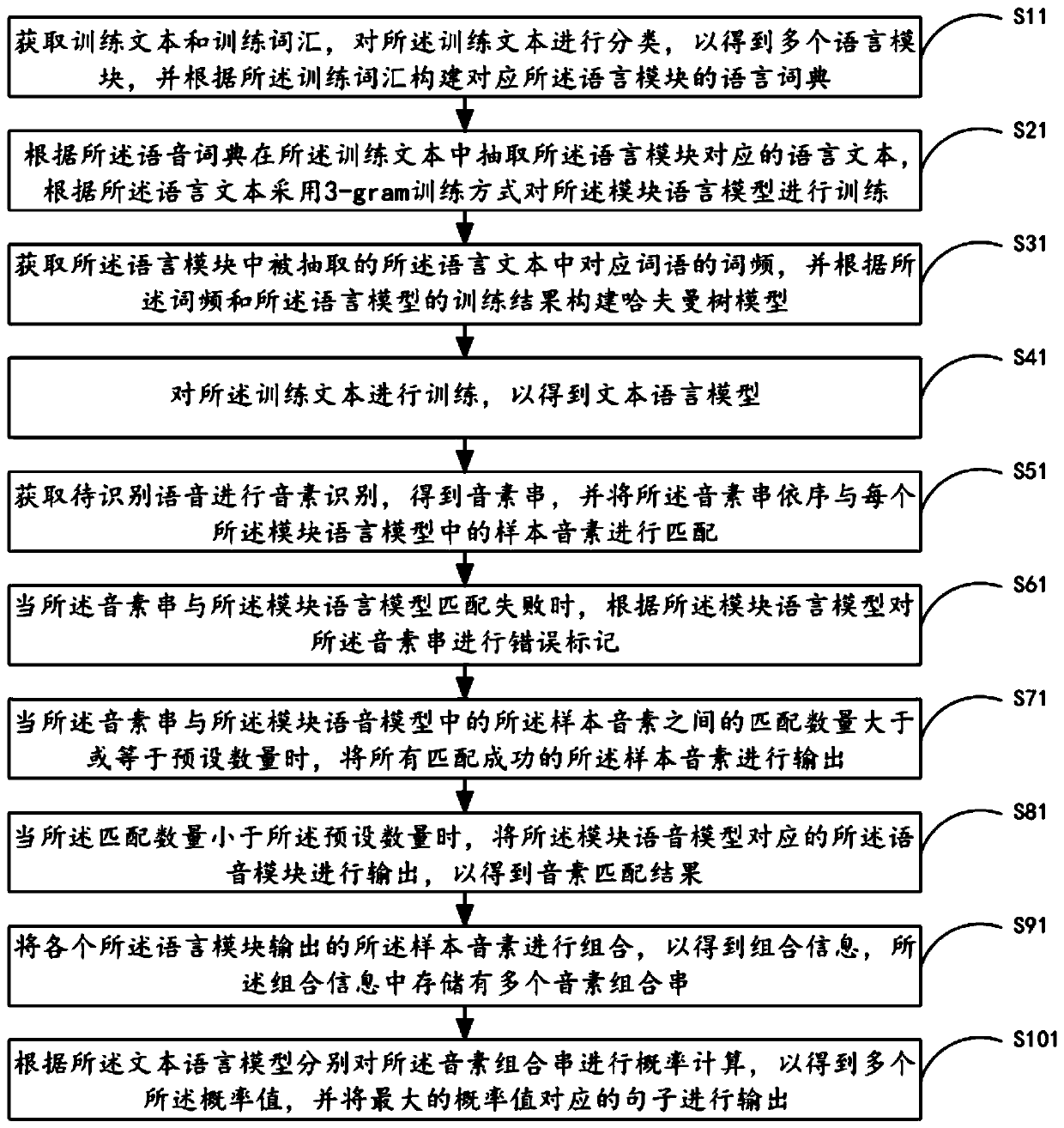

[0063] Referring to Figure 2, which is a flow chart of the language model training method provided by the second embodiment of the present invention, the method includes the following steps:

[0064] Step S11: obtain training text and a training vocabulary, classify the training text to obtain a plurality of language modules, and construct a language dictionary corresponding to each language module according to the training vocabulary;

[0065] Classifying the training text yields a noun module, a verb module, an adjective module, and an adverb module. In other embodiments, the language modules can also include a state-word module, etc., according to the different text attributes in the training text;

[0066] Specifically, in this step, the language modules and the language dictionaries are in one-to-one correspondence; therefore, by constructing dictionaries from the training vocabulary, a noun dictionary, a verb dictionary, a dictionary of adj...
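The one-to-one correspondence between language modules and language dictionaries can be sketched as follows. This is a minimal illustration, assuming the training vocabulary arrives as hypothetical `(word, attribute)` pairs and that each dictionary maps a word to an index local to its module; `build_language_dictionaries` and `LANGUAGE_MODULES` are illustrative names, not the patent's.

```python
# Hypothetical training vocabulary as (word, word attribute) pairs.
TRAINING_VOCABULARY = [
    ("model", "noun"), ("speech", "noun"),
    ("train", "verb"), ("recognize", "verb"),
    ("accurate", "adjective"),
    ("quickly", "adverb"),
]

# Assumed module set, following the noun/verb/adjective/adverb modules in the text.
LANGUAGE_MODULES = ("noun", "verb", "adjective", "adverb")


def build_language_dictionaries(vocabulary, modules=LANGUAGE_MODULES):
    """Construct exactly one language dictionary per language module.

    Each dictionary maps a word to an integer index local to that module,
    so modules and dictionaries stay in one-to-one correspondence.
    Words whose attribute has no module are ignored; repeated words keep
    their first index.
    """
    dictionaries = {module: {} for module in modules}
    for word, attribute in vocabulary:
        if attribute in dictionaries:
            entries = dictionaries[attribute]
            entries.setdefault(word, len(entries))
    return dictionaries


if __name__ == "__main__":
    for module, entries in build_language_dictionaries(TRAINING_VOCABULARY).items():
        print(module, entries)
```

Keeping indices local to each module keeps every dictionary small, which is one plausible reading of why a per-module split could speed up training; the patent text shown here does not state this reason.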

Embodiment 3

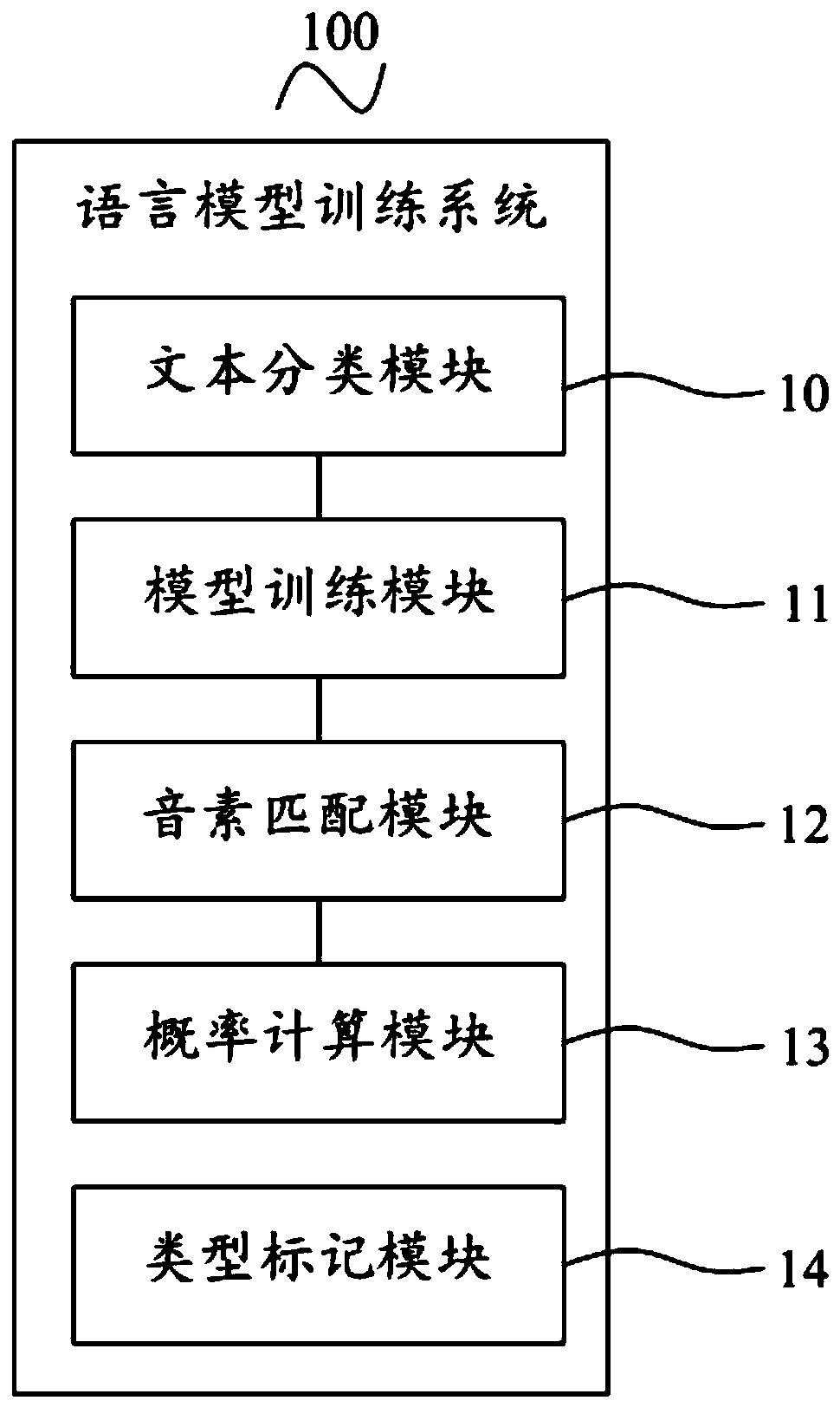

[0102] Referring to Figure 3, which is a schematic structural diagram of the language model training system 100 provided by the third embodiment of the present invention, the system includes a text classification module 10, a model training module 11, a phoneme matching module 12, and a probability calculation module 13, wherein:

[0103] The text classification module 10 is configured to obtain training text and a training vocabulary, classify the training text to obtain a plurality of language modules, and construct the language dictionary corresponding to each language module according to the training vocabulary;

[0104] The model training module 11 is configured to train the module language model of each language module according to its language dictionary, and to train on the training text to obtain a text language model.

[0105] The model training module 11 is further configured to extract the language text corresponding to the language module in the training t...
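The work of the model training module 11 (extracting each module's language text, training one module language model per module, and training a text language model on the whole training text) can be sketched as below. The patent text shown here does not specify the model type, so a bigram model with add-one (Laplace) smoothing is swapped in purely as an illustration; "language text corresponding to the module" is assumed to mean the words of each sentence that appear in that module's dictionary. `BigramModel`, `extract_module_text`, and `train_models` are hypothetical names.

```python
from collections import Counter


class BigramModel:
    """Bigram language model with add-one (Laplace) smoothing (illustrative choice)."""

    def __init__(self, sequences):
        self.unigrams = Counter()   # counts of each token used as a history
        self.bigrams = Counter()    # counts of (history, next) pairs
        self.vocab = {"</s>"}       # every token that can follow a history
        for seq in sequences:
            tokens = ["<s>"] + list(seq) + ["</s>"]
            self.vocab.update(seq)
            self.unigrams.update(tokens[:-1])
            self.bigrams.update(zip(tokens[:-1], tokens[1:]))

    def prob(self, prev, word):
        """Smoothed P(word | prev); sums to 1 over self.vocab for any prev."""
        return (self.bigrams[(prev, word)] + 1) / (self.unigrams[prev] + len(self.vocab))


def extract_module_text(sentences, dictionary):
    """Keep, for each sentence, only the words found in one module's dictionary."""
    extracted = []
    for sentence in sentences:
        words = [w for w in sentence.lower().split() if w in dictionary]
        if words:
            extracted.append(words)
    return extracted


def train_models(sentences, dictionaries):
    """Train one module language model per dictionary plus a whole-text model."""
    module_models = {
        name: BigramModel(extract_module_text(sentences, words))
        for name, words in dictionaries.items()
    }
    text_model = BigramModel([s.lower().split() for s in sentences])
    return module_models, text_model


if __name__ == "__main__":
    corpus = ["train the model", "train the speech model"]
    modules, text = train_models(corpus, {"noun": {"model", "speech"}, "verb": {"train"}})
    print(text.prob("the", "model"), modules["noun"].prob("speech", "model"))
```

Because each module model only sees its own words, the module models are small and can be trained independently; how the patent combines them with the text language model during recognition (via the phoneme matching module 12 and probability calculation module 13) is not described in this excerpt.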

