Stacked object 6D pose estimation method and device based on deep learning

A deep-learning-based pose estimation technology, applied in computing, computer parts, and character and pattern recognition, that addresses the heavy consumption of computing time and memory and the reliance on manual debugging. It achieves accurate pose estimation, fast training and running speed, and strong algorithm generalization ability.


Embodiment Construction

[0038] Embodiments of the present invention will be described in detail below. It should be emphasized that the following description is only exemplary and not intended to limit the scope of the invention and its application.

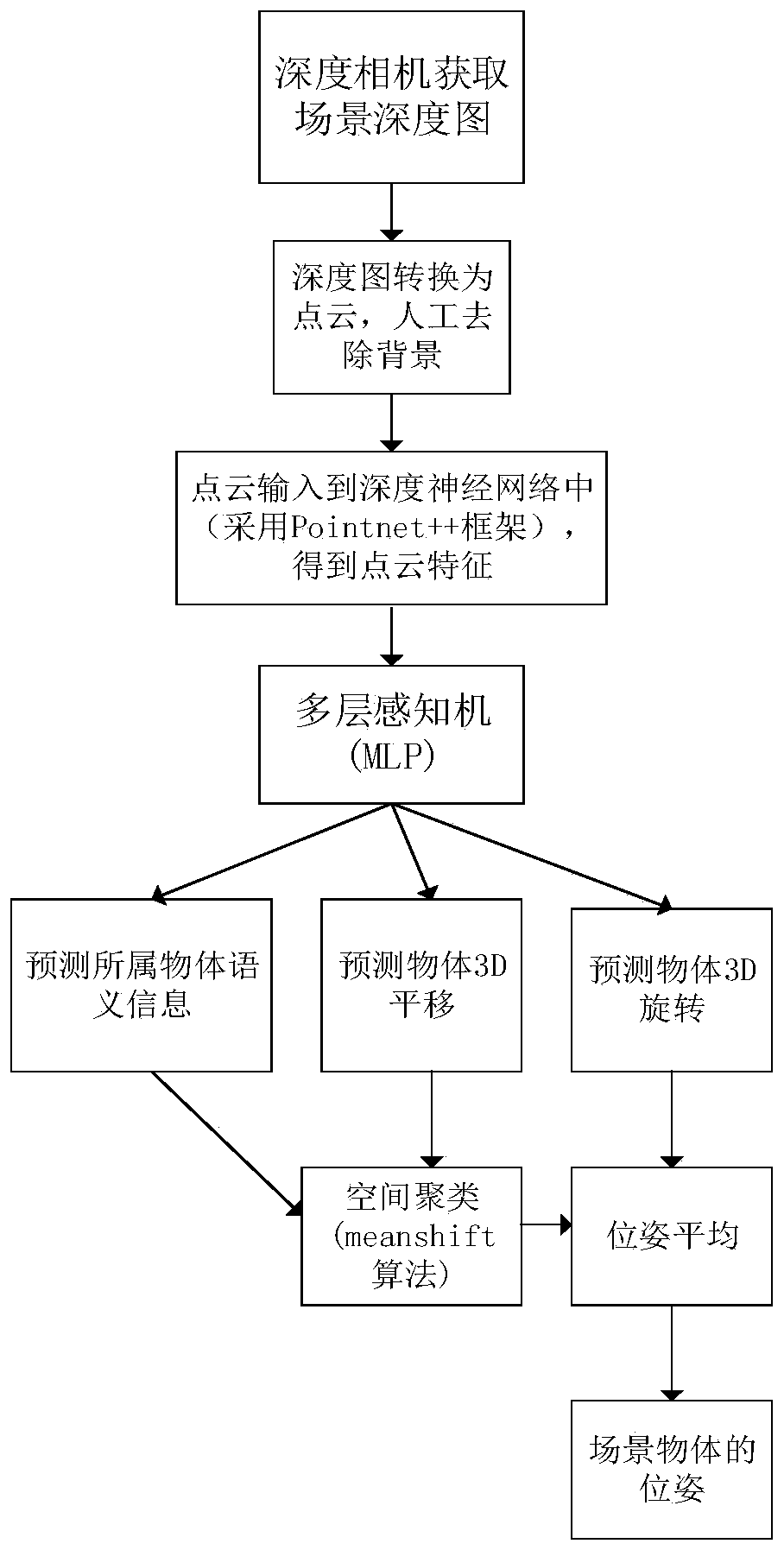

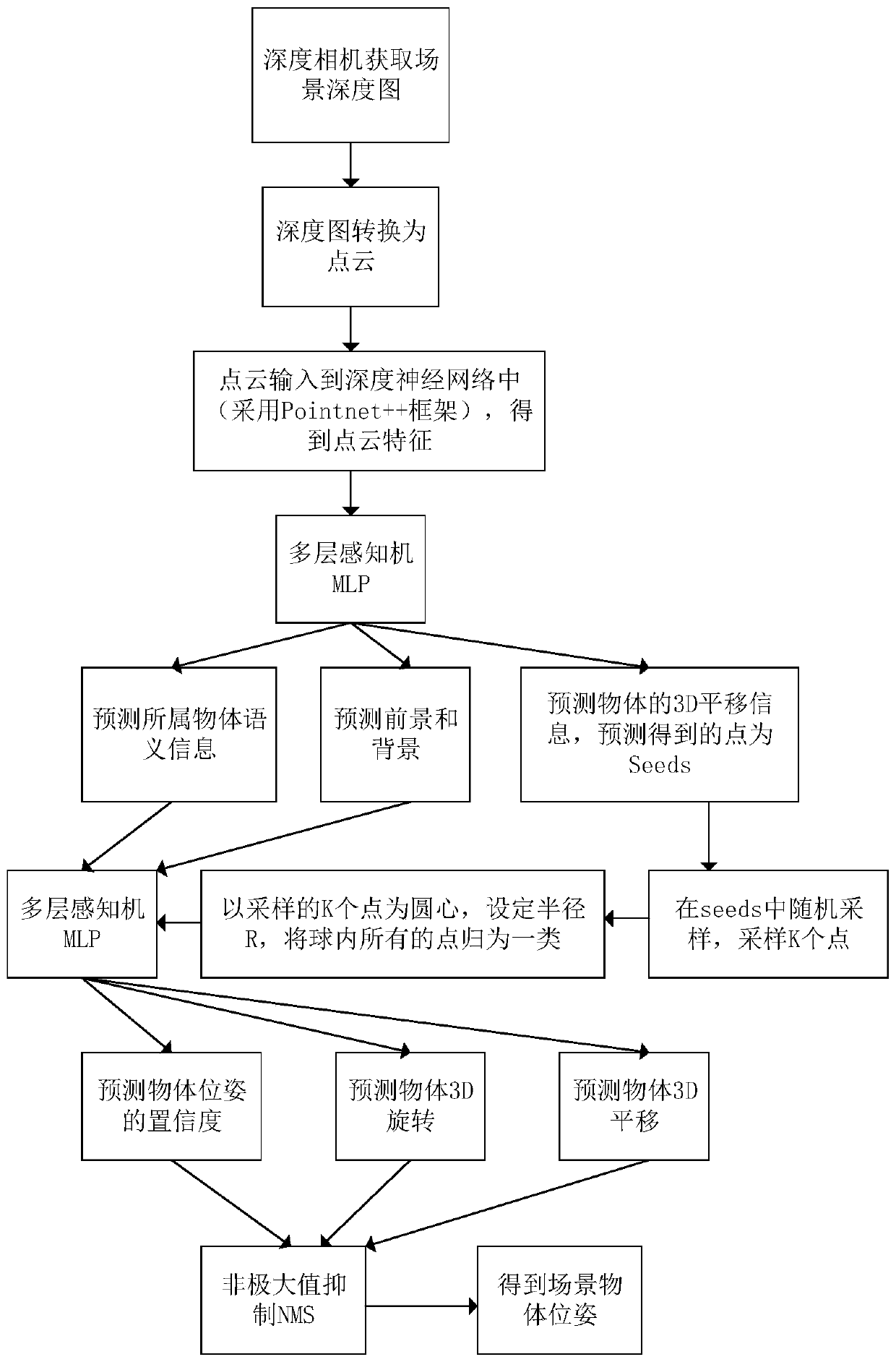

[0039] Figure 3 is a flowchart of a deep-learning-based 6D pose estimation method for stacked objects according to an embodiment of the present invention. Referring to Figure 3, the embodiment of the present invention proposes a deep-learning-based method for estimating the 6D pose of stacked objects, including the following steps:

[0040] S1. Input the point cloud derived from the scene depth information captured by the depth camera into a point cloud deep learning network to extract point cloud features;
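Step S1 starts from a point cloud built from depth-camera data. The source does not say how that point cloud is produced, so the following is only a minimal sketch under the assumption of a standard pinhole camera model. The function name `depth_to_point_cloud` and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical and do not come from the patent text.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image into a list of (x, y, z) points.

    Assumption (not from the source): a pinhole camera with focal
    lengths fx, fy and principal point (cx, cy), all in pixels.
    `depth` is a list of rows; each value is a depth in metres,
    and 0 marks a pixel with no valid measurement.
    """
    points = []
    for v, row in enumerate(depth):        # v: pixel row index
        for u, z in enumerate(row):        # u: pixel column index
            if z <= 0:
                continue                   # skip invalid depth readings
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth image with two valid pixels.
cloud = depth_to_point_cloud([[0.0, 2.0], [1.0, 0.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0)
```

The resulting list of 3D points is the kind of input a point cloud network would consume; the network architecture itself is not specified in the visible text and is not sketched here.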

[0041] S2. Using a multi-layer perceptron (MLP), regress from the extracted point cloud features the semantic information of the object, the foreground and background of the scene, and the 3D translation information of the object. The points obt...
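The three outputs named in S2 can be pictured as three small heads on top of a shared per-point MLP. The sketch below is an illustration only, not the patent's network: the class name `PointHeads`, the layer sizes, the single shared hidden layer, and the random untrained weights are all assumptions, and it uses plain Python so that it runs without any deep learning library.

```python
import math
import random


def linear(x, weights, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def init_layer(n_in, n_out, rng):
    """Random (untrained) weights and zero biases, for illustration only."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


class PointHeads:
    """Hypothetical per-point MLP with the three outputs named in S2."""

    def __init__(self, feat_dim, hidden_dim, num_classes, seed=0):
        rng = random.Random(seed)
        self.shared = init_layer(feat_dim, hidden_dim, rng)
        self.semantic = init_layer(hidden_dim, num_classes, rng)   # object class
        self.foreground = init_layer(hidden_dim, 1, rng)           # fg vs. bg
        self.translation = init_layer(hidden_dim, 3, rng)          # 3D translation

    def forward_point(self, feature):
        h = relu(linear(feature, *self.shared))
        class_logits = linear(h, *self.semantic)
        fg_logit = linear(h, *self.foreground)[0]
        fg_prob = 1.0 / (1.0 + math.exp(-fg_logit))   # sigmoid
        translation = linear(h, *self.translation)
        return class_logits, fg_prob, translation

    def forward(self, features):
        # The same weights are applied to every point independently.
        return [self.forward_point(f) for f in features]


heads = PointHeads(feat_dim=4, hidden_dim=8, num_classes=3)
outputs = heads.forward([[0.1, 0.2, 0.3, 0.4], [1.0, 0.0, 0.0, 0.0]])
```

Each entry of `outputs` holds class logits, a foreground probability, and a 3-vector for one point. How these per-point predictions are used afterwards is cut off in the text above and is deliberately not reconstructed here.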
