Video compression method based on deep learning

A deep-learning-based video compression technique that addresses the problem of large storage requirements, achieving a smaller storage size together with good video restoration quality.

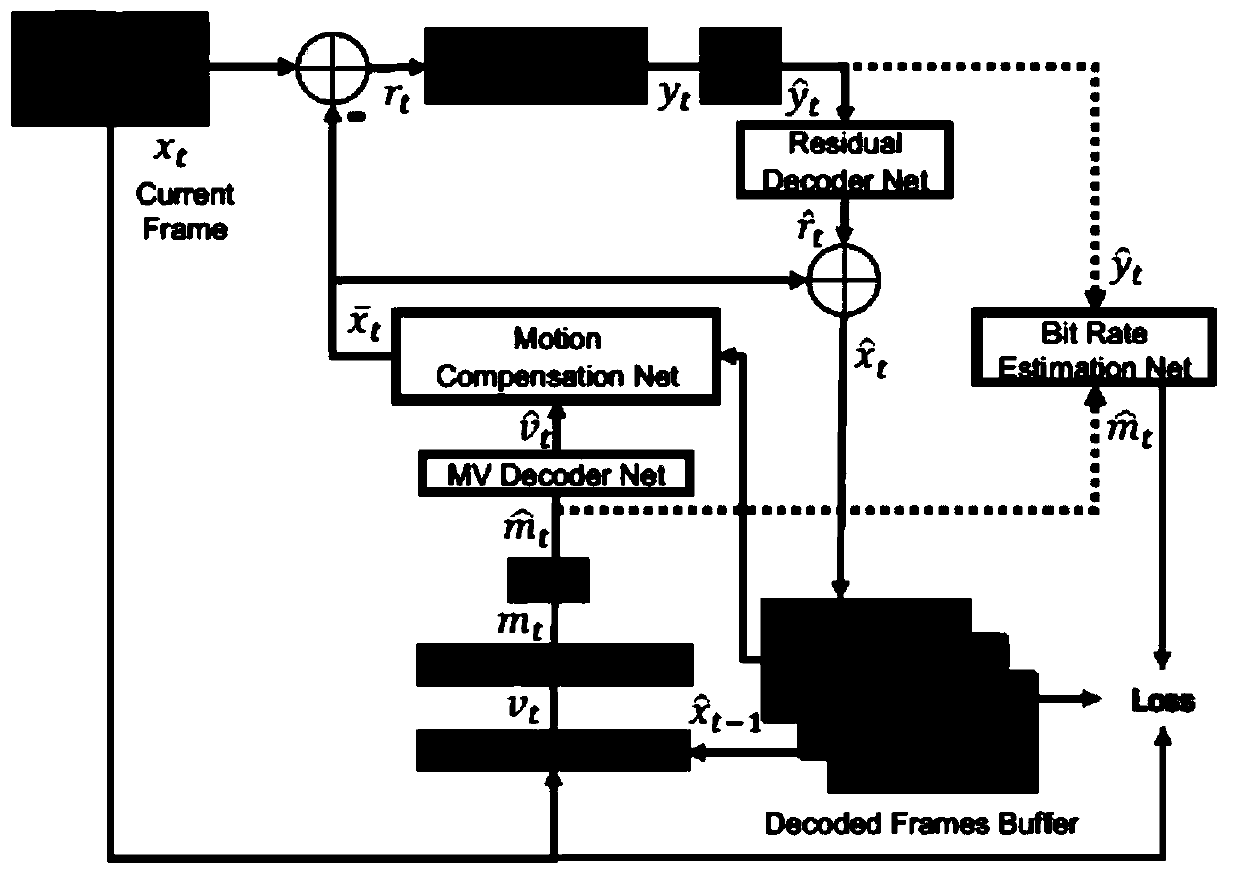

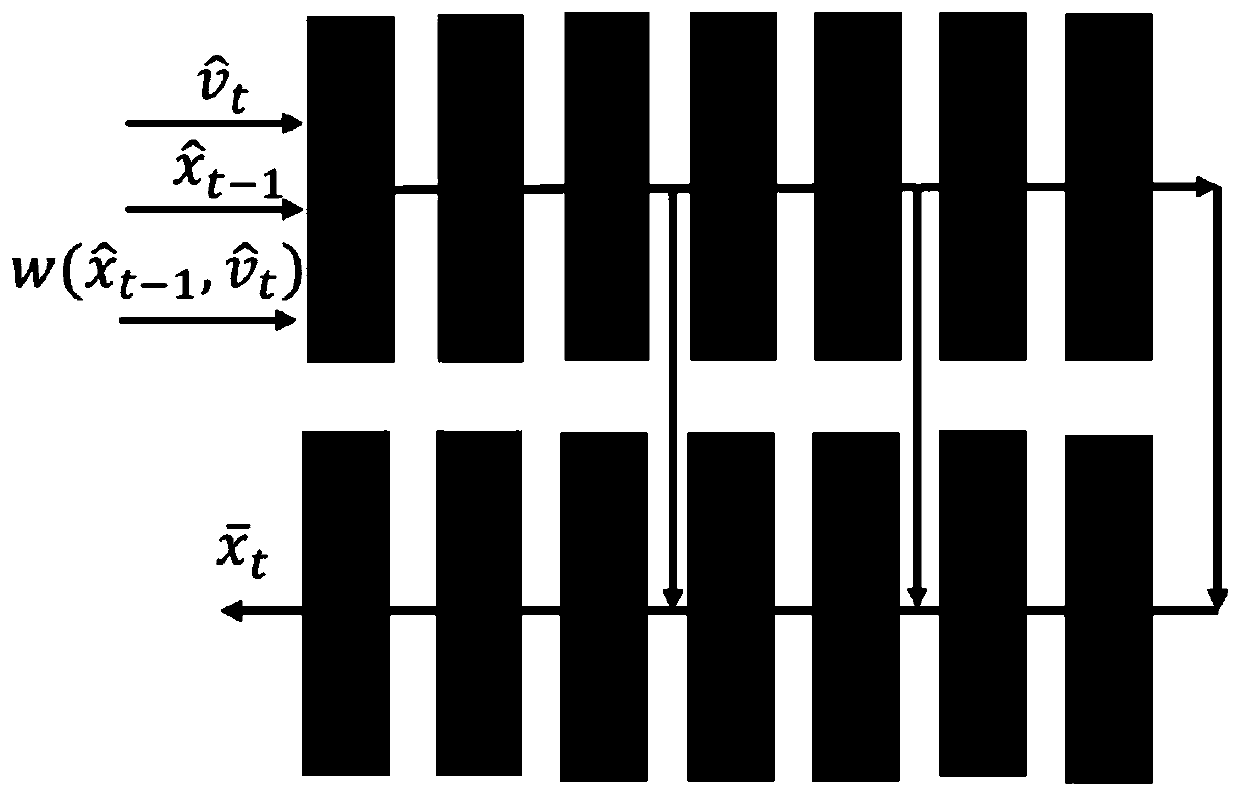

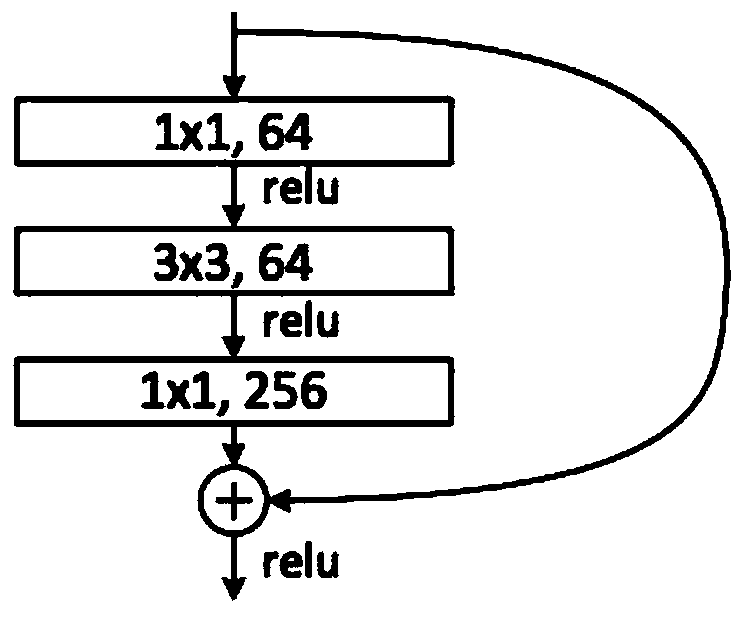

[0051] The deep-learning-based video compression method of the present invention uses a SpyNet motion estimation network, composed of an optical flow network (Optical Flow Net), a motion vector encoding network (MV Encoder Net), and a motion vector decoding network (MV Decoder Net), to perform motion estimation and motion compensation, achieving better motion estimation and compensation results. It then applies a residual network which, as shown in Figure 3, includes two ResBlock modules so that a deeper network can be trained. Finally, it uses arithmetic entropy coding to complete the encoding and stores the result as a Pickle file, so that the video is compressed and stored at a smaller size while a better video restoration result is obtained. As shown in Figure 1, the details are as follows:
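The final stage described above (arithmetic entropy coding followed by storage as a Pickle file) can be illustrated with a minimal sketch. It is not the patented implementation: it assumes the quantized values of one frame are available as a list of small integers, uses a static frequency table instead of a learned probability model, and represents the code as an exact `Fraction` rather than a bitstream. The names `build_intervals`, `arithmetic_encode`, `arithmetic_decode`, `save_frame`, and `load_frame` are hypothetical and chosen for illustration only.

```python
import io
import pickle
from collections import Counter
from fractions import Fraction


def build_intervals(symbols):
    """Assign each distinct symbol a [low, high) slice of [0, 1) by frequency."""
    counts = Counter(symbols)
    total = len(symbols)
    intervals, low = {}, Fraction(0)
    for sym in sorted(counts):
        high = low + Fraction(counts[sym], total)
        intervals[sym] = (low, high)
        low = high
    return intervals


def arithmetic_encode(symbols, intervals):
    """Narrow [low, high) once per symbol and return a point inside the result."""
    low, high = Fraction(0), Fraction(1)
    for sym in symbols:
        span = high - low
        sym_low, sym_high = intervals[sym]
        low, high = low + span * sym_low, low + span * sym_high
    return (low + high) / 2


def arithmetic_decode(code, intervals, length):
    """Replay the interval narrowing to recover `length` symbols from `code`."""
    out = []
    low, high = Fraction(0), Fraction(1)
    for _ in range(length):
        span = high - low
        position = (code - low) / span
        for sym, (sym_low, sym_high) in intervals.items():
            if sym_low <= position < sym_high:
                out.append(sym)
                low, high = low + span * sym_low, low + span * sym_high
                break
    return out


def save_frame(stream, symbols):
    """Entropy-code one frame's symbols and pickle the result to `stream`."""
    intervals = build_intervals(symbols)
    payload = {
        "code": arithmetic_encode(symbols, intervals),
        "intervals": intervals,
        "length": len(symbols),
    }
    pickle.dump(payload, stream)


def load_frame(stream):
    """Unpickle one frame's payload and decode it back to symbols."""
    payload = pickle.load(stream)
    return arithmetic_decode(payload["code"], payload["intervals"], payload["length"])


# Hypothetical quantized values for one frame (assumption, not from the patent).
quantized = [0, 0, 1, -1, 0, 2, 0, 0, -1, 0]
buffer = io.BytesIO()  # stands in for a file opened with open(path, "wb")
save_frame(buffer, quantized)
buffer.seek(0)
restored = load_frame(buffer)
```

Exact fractions keep the sketch short and lossless; a practical coder would instead use fixed-precision integer ranges and emit bits incrementally, with symbol probabilities supplied by the trained network.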

[0052] S1. The video is split into individual frames, and the current frame x_t and the reconstructed image ...
