Real-time man-machine interaction system in virtual scene

An interactive system and behavioral technology applied in the field of human-computer interaction that addresses problems such as visual attention model error and neglect of influencing factors, achieving high accuracy and fast speed.

Embodiment Construction

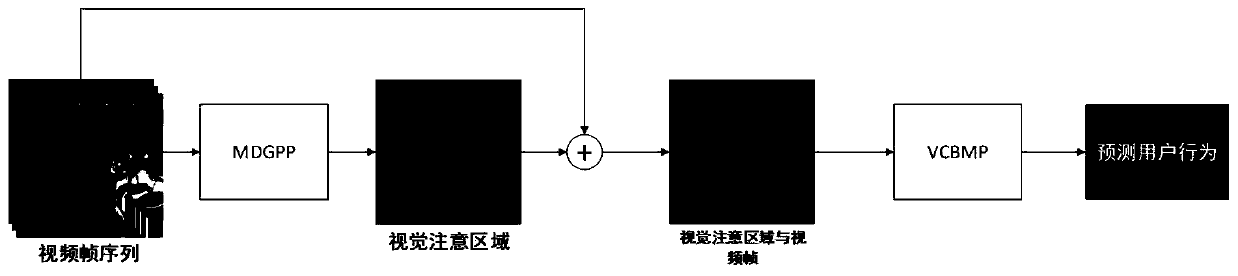

[0018] As shown in Figure 1, the input to this system is a sequence of RGB video frames. The visual attention area prediction module calculates the position of the user's viewpoint, which is superimposed on the current video frame; the combined frame is then input to the behavior prediction module to predict user behavior.
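The data flow in [0018] can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the names `superimpose_viewpoint` and `run_pipeline`, the nested-list frame representation, and the square red marker are all assumptions made for the example. The source says only that the viewpoint is superimposed on the current frame and does not specify how. The two predictor callables stand in for the visual attention area prediction module and the behavior prediction module.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0..255
Frame = List[List[Pixel]]      # frame[row][col], assumed non-empty
Viewpoint = Tuple[int, int]    # (row, col) of the predicted viewpoint


def superimpose_viewpoint(frame: Frame, viewpoint: Viewpoint,
                          radius: int = 1,
                          marker: Pixel = (255, 0, 0)) -> Frame:
    """Return a copy of `frame` with a square marker drawn at `viewpoint`.

    The marker shape and color are illustrative assumptions.
    """
    height, width = len(frame), len(frame[0])
    row0, col0 = viewpoint
    out = [list(row) for row in frame]  # copy rows so the input is untouched
    for r in range(max(0, row0 - radius), min(height, row0 + radius + 1)):
        for c in range(max(0, col0 - radius), min(width, col0 + radius + 1)):
            out[r][c] = marker
    return out


def run_pipeline(frames: Iterable[Frame],
                 predict_viewpoint: Callable[[Frame], Viewpoint],
                 predict_behavior: Callable[[Frame], str]) -> Iterator[str]:
    """Yield one behavior label per input frame.

    `predict_viewpoint` and `predict_behavior` are hypothetical stand-ins
    for the two modules named in the text.
    """
    for frame in frames:
        viewpoint = predict_viewpoint(frame)
        combined = superimpose_viewpoint(frame, viewpoint)
        yield predict_behavior(combined)
```

Passing the predictors in as callables keeps the sketch independent of any particular network; a real system would substitute trained models for both.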

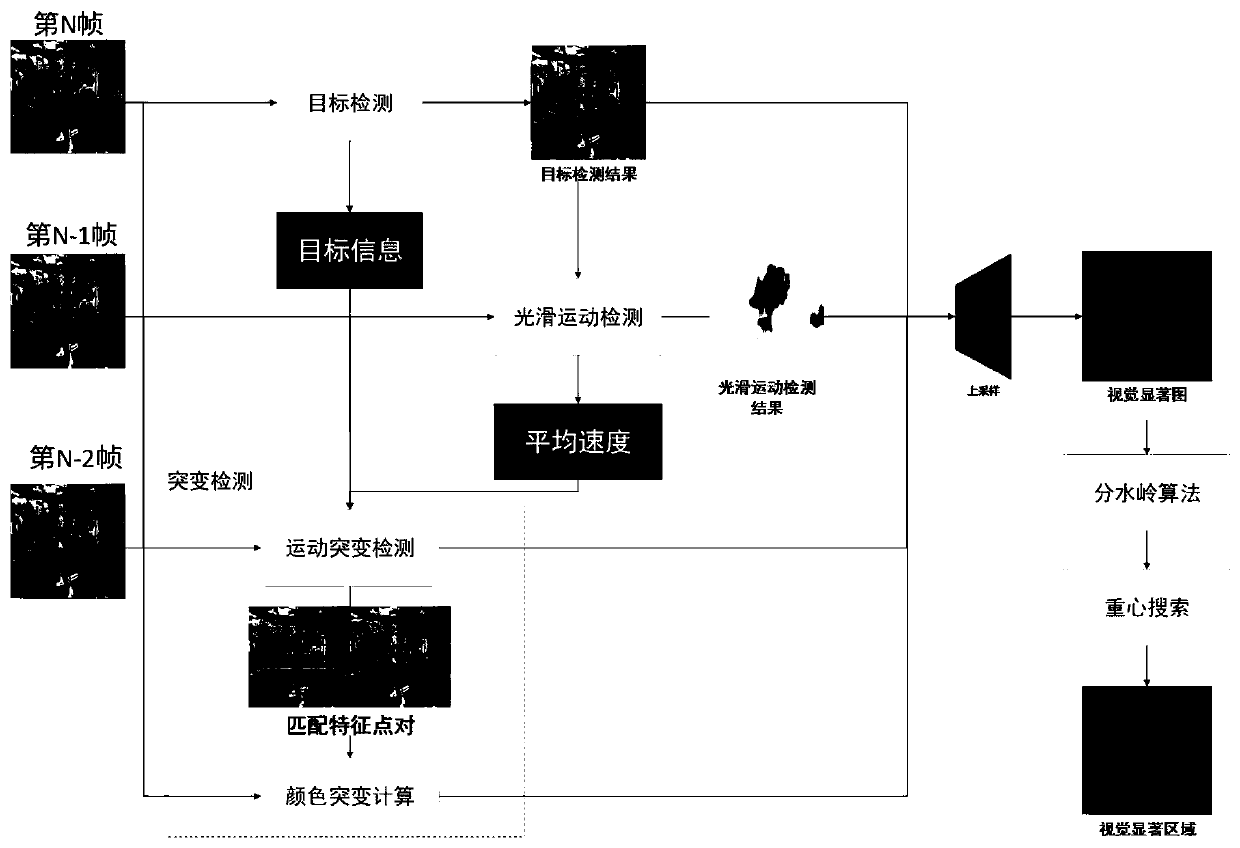

[0019] As shown in Figure 2, the visual attention area prediction module comprises a target detection unit, a smooth motion detection unit, a mutation (abrupt-change) detection unit, a saliency map generation unit, and a feature extraction unit. The smooth motion detection unit uses FlowNet as its computing network and uses the bounding box information provided by the target detection unit to modify the motion optical flow calculation; the target detection unit uses YOLO v3 as its computing network. The mutation detection unit includes a motion mutation detector and a color mutation detector, and the motion mutation detector accepts smooth motion detecti...
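The text does not say how the bounding boxes modify the optical flow calculation. One plausible reading, assumed here purely for illustration, is that flow vectors outside the detected boxes are down-weighted so that object motion dominates. The function name `mask_flow_with_boxes`, the `background_weight` parameter, and the box layout are hypothetical; in practice the flow field would come from FlowNet and the boxes from YOLO v3.

```python
from typing import List, Tuple

Flow = List[List[Tuple[float, float]]]  # flow[row][col] = (dx, dy)
Box = Tuple[int, int, int, int]         # (top, left, bottom, right), end-exclusive


def mask_flow_with_boxes(flow: Flow, boxes: List[Box],
                         background_weight: float = 0.0) -> Flow:
    """Scale flow vectors outside every box by `background_weight`.

    This weighting scheme is an assumption, not the method in the source.
    """
    height, width = len(flow), len(flow[0])
    inside = [[False] * width for _ in range(height)]
    for top, left, bottom, right in boxes:
        # Clamp each box to the frame before marking its pixels.
        for r in range(max(0, top), min(height, bottom)):
            for c in range(max(0, left), min(width, right)):
                inside[r][c] = True
    return [
        [
            flow[r][c] if inside[r][c]
            else (flow[r][c][0] * background_weight,
                  flow[r][c][1] * background_weight)
            for c in range(width)
        ]
        for r in range(height)
    ]
```

With the default `background_weight` of 0.0, only motion inside detected objects survives; a value between 0 and 1 would retain attenuated background motion.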
