FPGA configuration file arithmetic compression and decompression method based on neural network model

A method in the field of neural network models and configuration file technology, applied to the arithmetic compression and decompression of FPGA configuration files using a neural network model. It addresses the long duration of the FPGA configuration process, shortening configuration time and improving accuracy.


Embodiment

[0104] As an example, take the v5_crossbar.bit test data, implemented on a Xilinx Virtex-V development board, from the standard test set of the Department of Computer Science at the University of Erlangen-Nuremberg, Germany. The configuration file is 8179 bytes, specifically:

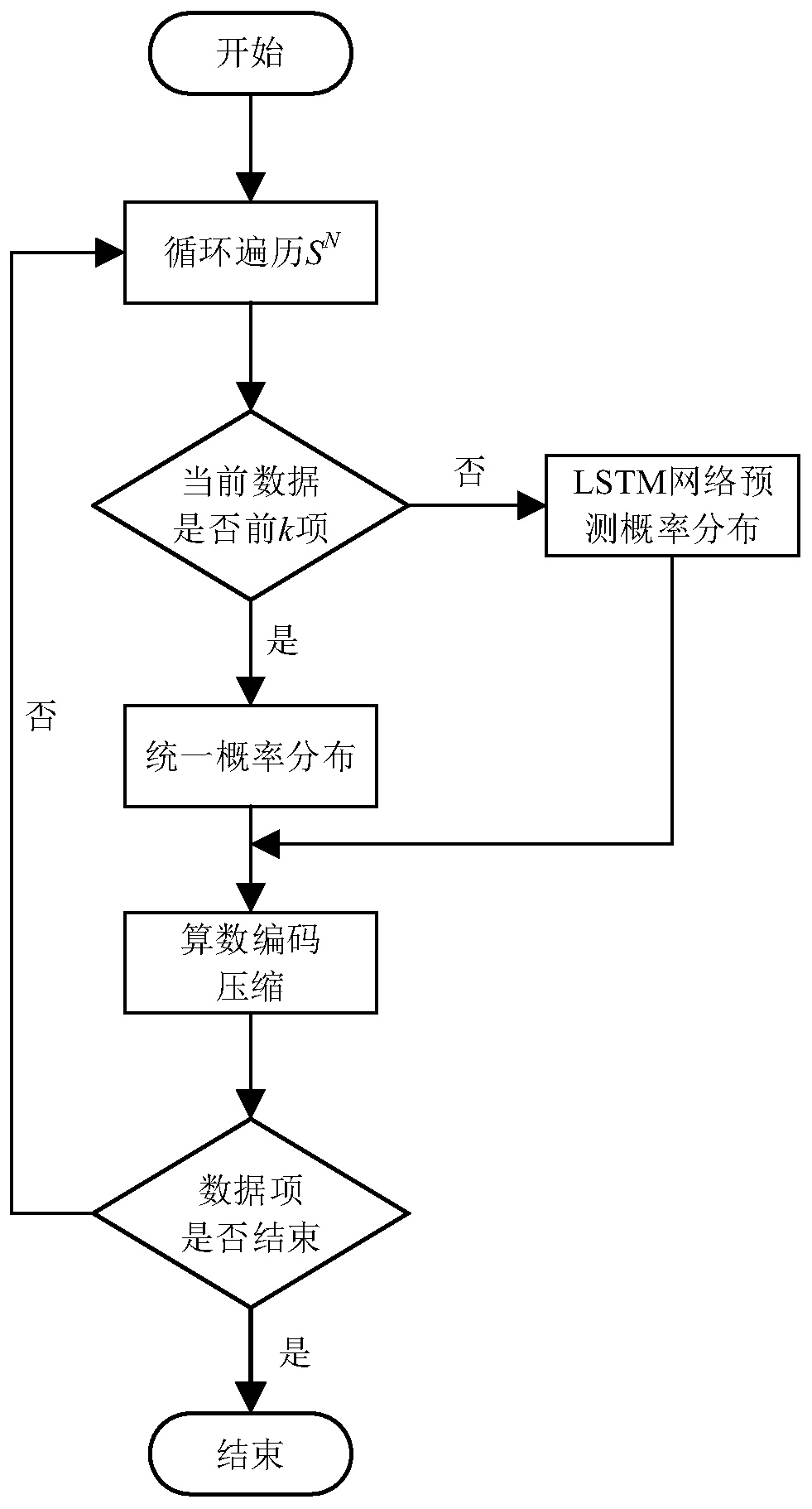

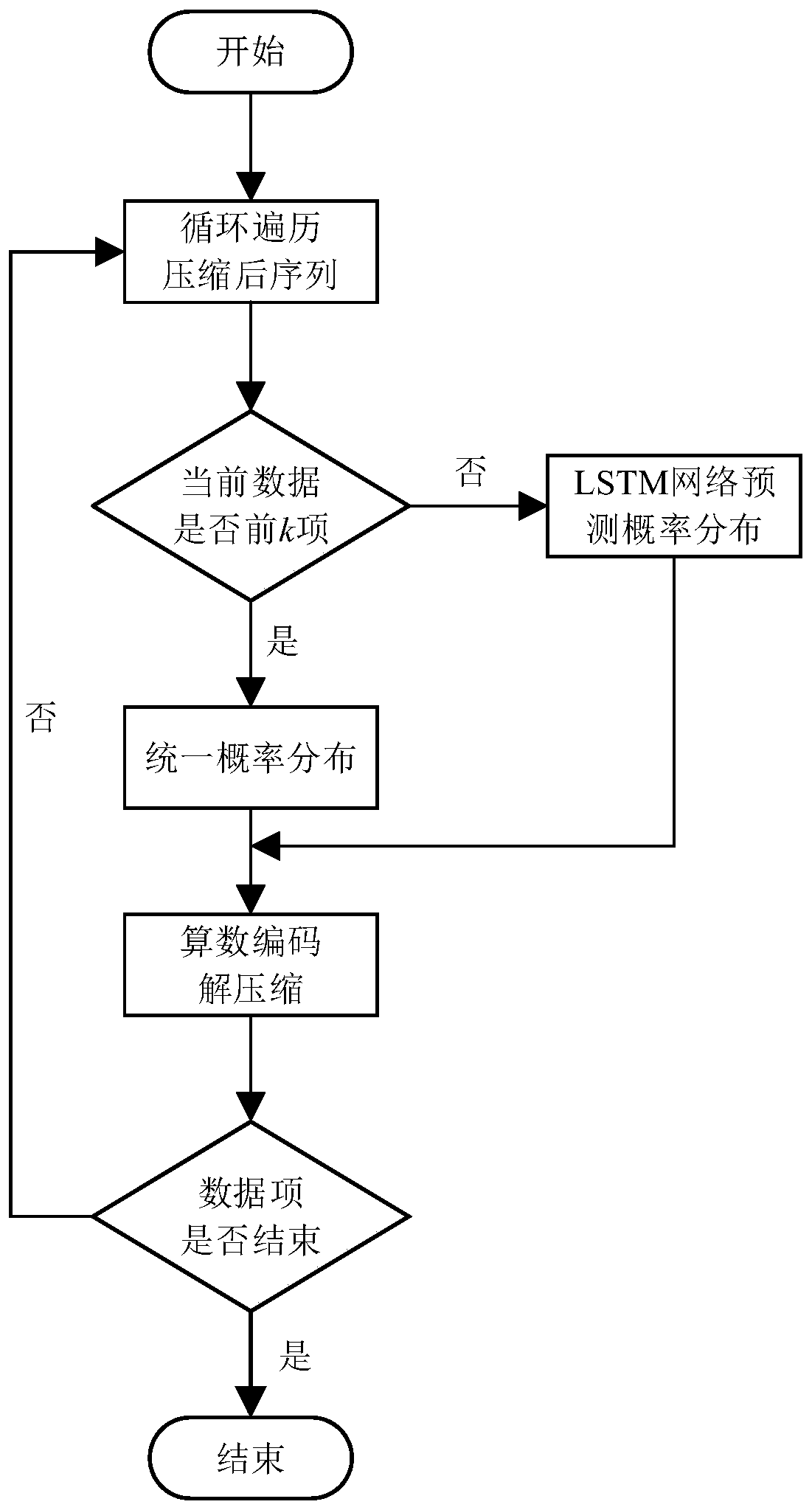
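Because the symbols are single bits, the 8179-byte file corresponds to 8179 × 8 = 65,432 binary symbols. The following is a minimal sketch of that byte-to-bit expansion and its inverse. The most-significant-bit-first order and the helper names `bytes_to_bits` and `bits_to_bytes` are assumptions for illustration; the source does not state how bytes are serialized into the binary code stream.

```python
def bytes_to_bits(data: bytes) -> list[int]:
    """Expand bytes into a binary symbol stream, MSB first (assumed order)."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def bits_to_bytes(bits: list[int]) -> bytes:
    """Inverse of bytes_to_bits; the bit count must be a multiple of 8."""
    if len(bits) % 8:
        raise ValueError("bit count must be a multiple of 8")
    out = bytearray()
    for i in range(0, len(bits), 8):
        value = 0
        for b in bits[i:i + 8]:
            value = (value << 1) | b  # shift in one bit, MSB first
        out.append(value)
    return bytes(out)
```

Reading v5_crossbar.bit in binary mode and passing its contents to `bytes_to_bits` gives a list of 8179 × 8 = 65,432 symbols; `bits_to_bytes` restores the original file after decompression.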

[0105] S1. Adopt an arithmetic-coding compression strategy for the FPGA configuration file and define each symbol as one bit, so the symbol alphabet is $D_s = \{0, 1\}$; $S_N$ is the binary code stream of the configuration file, and $k$ is set to 64;

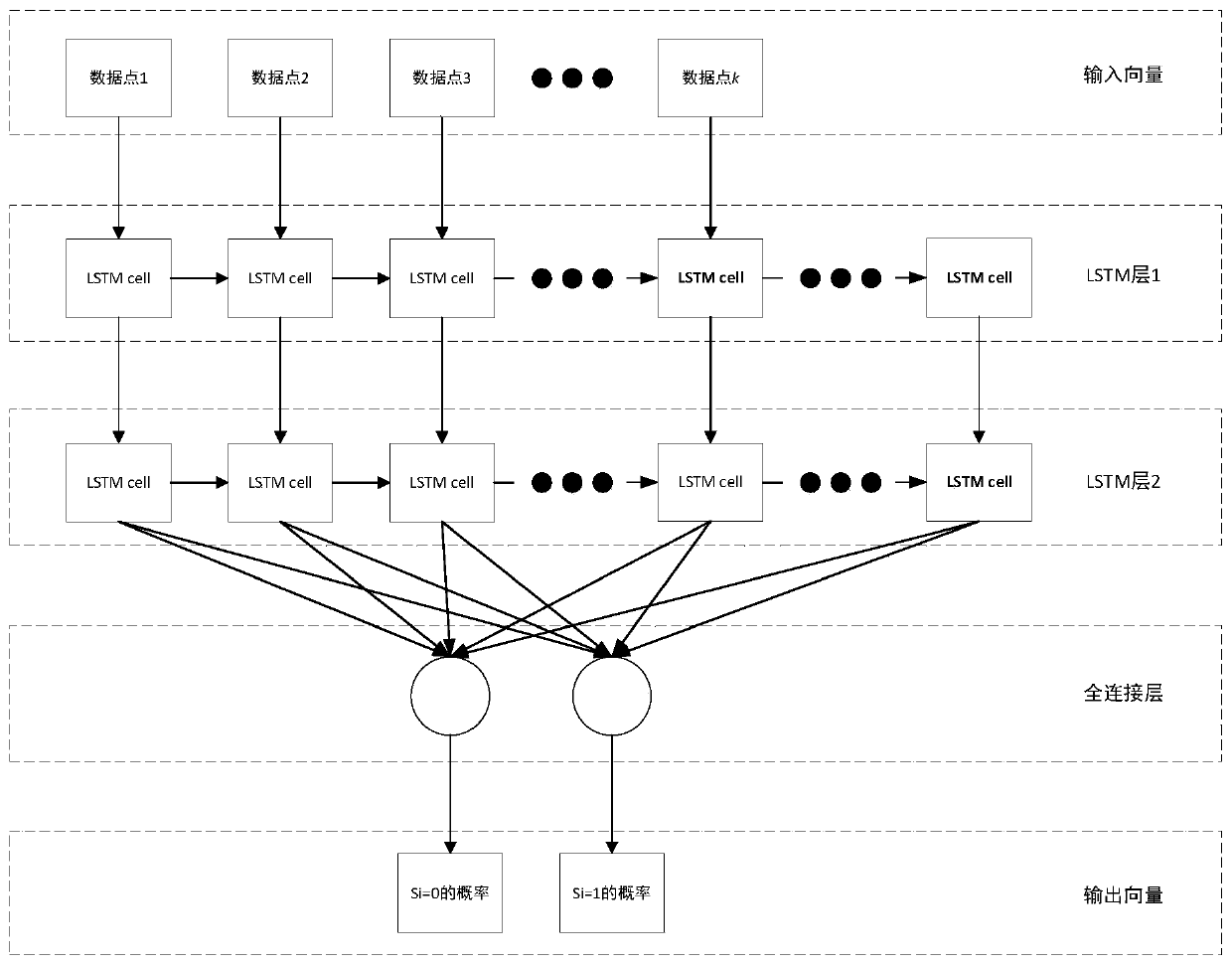
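S1 names arithmetic coding over binary symbols but does not specify the coder itself. The following is a minimal sketch of a conventional integer binary arithmetic coder (the Witten-Neal-Cleary scheme with 32-bit registers), not the patent's implementation. Assumptions: `k` is read as the number of preceding bits passed to the probability model; `predict(history)` is a hypothetical callback returning P(symbol = 0); the 16-bit probability quantization is a design choice of this sketch; and `laplace_predict` is a simple counting stand-in for the neural model.

```python
# Integer binary arithmetic coder (Witten-Neal-Cleary style) driven by a
# per-bit probability model. Register width and frequency scale are assumptions.
PRECISION = 32
FULL = (1 << PRECISION) - 1
HALF = 1 << (PRECISION - 1)
QUARTER = 1 << (PRECISION - 2)
FREQ_TOTAL = 1 << 16  # probabilities are quantized to 16 bits


def quantize(p0):
    """Map P(bit = 0) to an integer count, keeping both symbols codable."""
    return min(max(int(p0 * FREQ_TOTAL), 1), FREQ_TOTAL - 1)


def laplace_predict(history):
    """Hypothetical stand-in model: Laplace estimate of P(0) over the history."""
    return (history.count(0) + 1) / (len(history) + 2)


def _split(low, high, p0):
    """Last code value of the sub-interval assigned to symbol 0."""
    return low + (high - low + 1) * quantize(p0) // FREQ_TOTAL - 1


def encode(bits, predict, k=64):
    bits = list(bits)
    low, high, pending, out = 0, FULL, 0, []

    def emit(b):
        nonlocal pending
        out.append(b)
        out.extend([1 - b] * pending)  # resolve deferred straddle bits
        pending = 0

    for i, bit in enumerate(bits):
        split = _split(low, high, predict(bits[max(0, i - k):i]))
        if bit == 0:
            high = split
        else:
            low = split + 1
        while True:  # renormalize once the leading bit is settled
            if high < HALF:
                emit(0)
            elif low >= HALF:
                emit(1)
                low, high = low - HALF, high - HALF
            elif low >= QUARTER and high < HALF + QUARTER:
                pending += 1  # interval straddles the midpoint
                low, high = low - QUARTER, high - QUARTER
            else:
                break
            low, high = 2 * low, 2 * high + 1
    pending += 1  # two final bits pin a value inside [low, high]
    emit(0 if low < QUARTER else 1)
    return out


def decode(code, n, predict, k=64):
    stream = iter(code)

    def read():
        return next(stream, 0)  # pad with zeros past the end

    low, high, value = 0, FULL, 0
    for _ in range(PRECISION):
        value = (value << 1) | read()
    out = []
    for i in range(n):
        split = _split(low, high, predict(out[max(0, i - k):i]))
        if value <= split:
            out.append(0)
            high = split
        else:
            out.append(1)
            low = split + 1
        while True:  # mirror the encoder's renormalization
            if high < HALF:
                pass
            elif low >= HALF:
                low, high, value = low - HALF, high - HALF, value - HALF
            elif low >= QUARTER and high < HALF + QUARTER:
                low, high, value = low - QUARTER, high - QUARTER, value - QUARTER
            else:
                break
            low, high = 2 * low, 2 * high + 1
            value = (value << 1) | read()
    return out
```

Because the model only ever sees already-coded bits, the decoder can reproduce every probability the encoder used. Swapping `laplace_predict` for a trained predictor changes the compression ratio, not the coding logic.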

[0106] S2. Use the neural network model to estimate the probability of each symbol in the FPGA configuration file. Because the value of a symbol is correlated with the values of previous symbols, the model is built from LSTM layers: it consists of two LSTM layers and one fully connected layer. The model structure is shown in Figure 1, where LSTM layer 1 and LSTM layer 2 each have 128 neurons; the fully connected layer has 2...
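The layer stack in S2 can be illustrated with an untrained, pure-Python forward pass: two LSTM layers of 128 units followed by a fully connected layer and a softmax. This is a sketch under stated assumptions, not the patent's model. The source text is truncated at the fully connected layer's size, so the two-output softmax over {0, 1} is an assumption (`n_out`); feeding each bit as a one-dimensional input, feeding a single zero step when the history is empty, the gate ordering, and the random initialization are likewise choices of this sketch. `BitPredictor` and `LSTMLayer` are hypothetical names, and training is not shown.

```python
import math
import random


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


class LSTMLayer:
    def __init__(self, n_in, n_hidden, rng):
        self.n_hidden = n_hidden
        scale = 1.0 / math.sqrt(n_in + n_hidden)
        # 4 gates (input, forget, candidate, output) x n_hidden rows,
        # each row acting on the concatenation [x_t, h_{t-1}].
        self.w = [[rng.uniform(-scale, scale) for _ in range(n_in + n_hidden)]
                  for _ in range(4 * n_hidden)]
        self.b = [0.0] * (4 * n_hidden)

    def run(self, xs):
        H = self.n_hidden
        h, c, outputs = [0.0] * H, [0.0] * H, []
        for x in xs:
            z = x + h  # list concatenation [x_t, h_{t-1}]
            pre = [sum(wi * zi for wi, zi in zip(row, z)) + bi
                   for row, bi in zip(self.w, self.b)]
            i_g = [sigmoid(v) for v in pre[0:H]]
            f_g = [sigmoid(v) for v in pre[H:2 * H]]
            g_g = [math.tanh(v) for v in pre[2 * H:3 * H]]
            o_g = [sigmoid(v) for v in pre[3 * H:4 * H]]
            c = [f * cc + i * g for f, cc, i, g in zip(f_g, c, i_g, g_g)]
            h = [o * math.tanh(cc) for o, cc in zip(o_g, c)]
            outputs.append(h)
        return outputs


class BitPredictor:
    """Hypothetical LSTM(128) -> LSTM(128) -> dense(n_out) -> softmax."""

    def __init__(self, hidden=128, n_out=2, seed=0):  # n_out=2 is assumed
        rng = random.Random(seed)
        self.lstm1 = LSTMLayer(1, hidden, rng)
        self.lstm2 = LSTMLayer(hidden, hidden, rng)
        scale = 1.0 / math.sqrt(hidden)
        self.w_fc = [[rng.uniform(-scale, scale) for _ in range(hidden)]
                     for _ in range(n_out)]
        self.b_fc = [0.0] * n_out

    def predict_proba(self, history):
        # history: previous bits (e.g. up to k = 64); empty -> one zero step.
        xs = [[float(b)] for b in history] or [[0.0]]
        last = self.lstm2.run(self.lstm1.run(xs))[-1]
        logits = [sum(w * v for w, v in zip(row, last)) + b
                  for row, b in zip(self.w_fc, self.b_fc)]
        m = max(logits)
        exps = [math.exp(v - m) for v in logits]
        total = sum(exps)
        return [e / total for e in exps]
```

With trained weights, `predict_proba(history)[0]` would serve as P(symbol = 0) for an arithmetic coder. Pure Python is used only to keep the sketch dependency-free; a real model would be trained with a deep learning framework.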

