3349 results about "Estimation result" patented technology


As a result, we often refer to the estimation results or the current estimation results or the most recent estimation results or the last estimation results or the estimation results in memory. With estimates store and estimates restore, you can have many estimation results in memory.

Radio communication system, terminal apparatus and base station apparatus

Inactive · US20050232135A1 · Transmission path division · Time-division multiplex · Communications system · Transmission channel

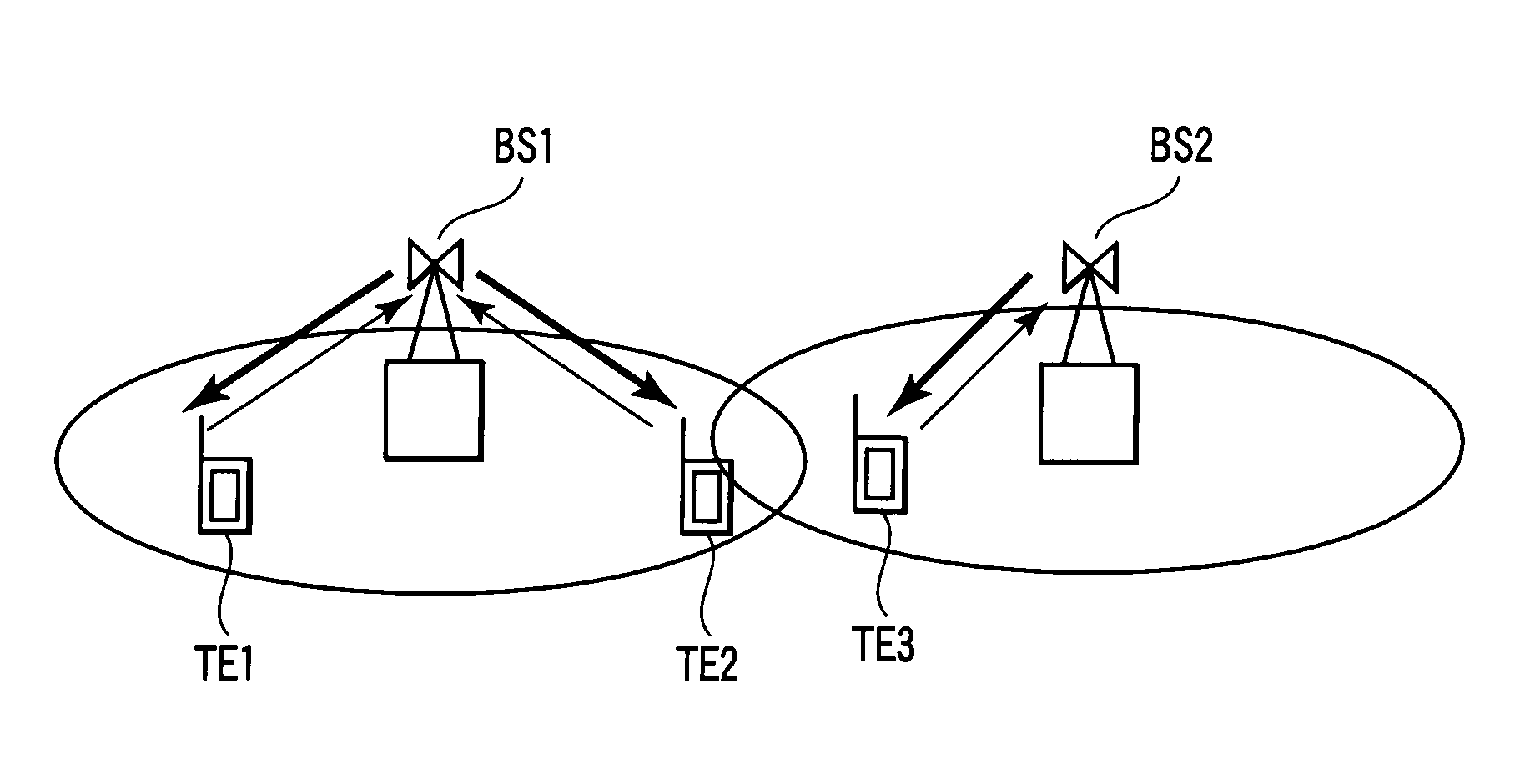

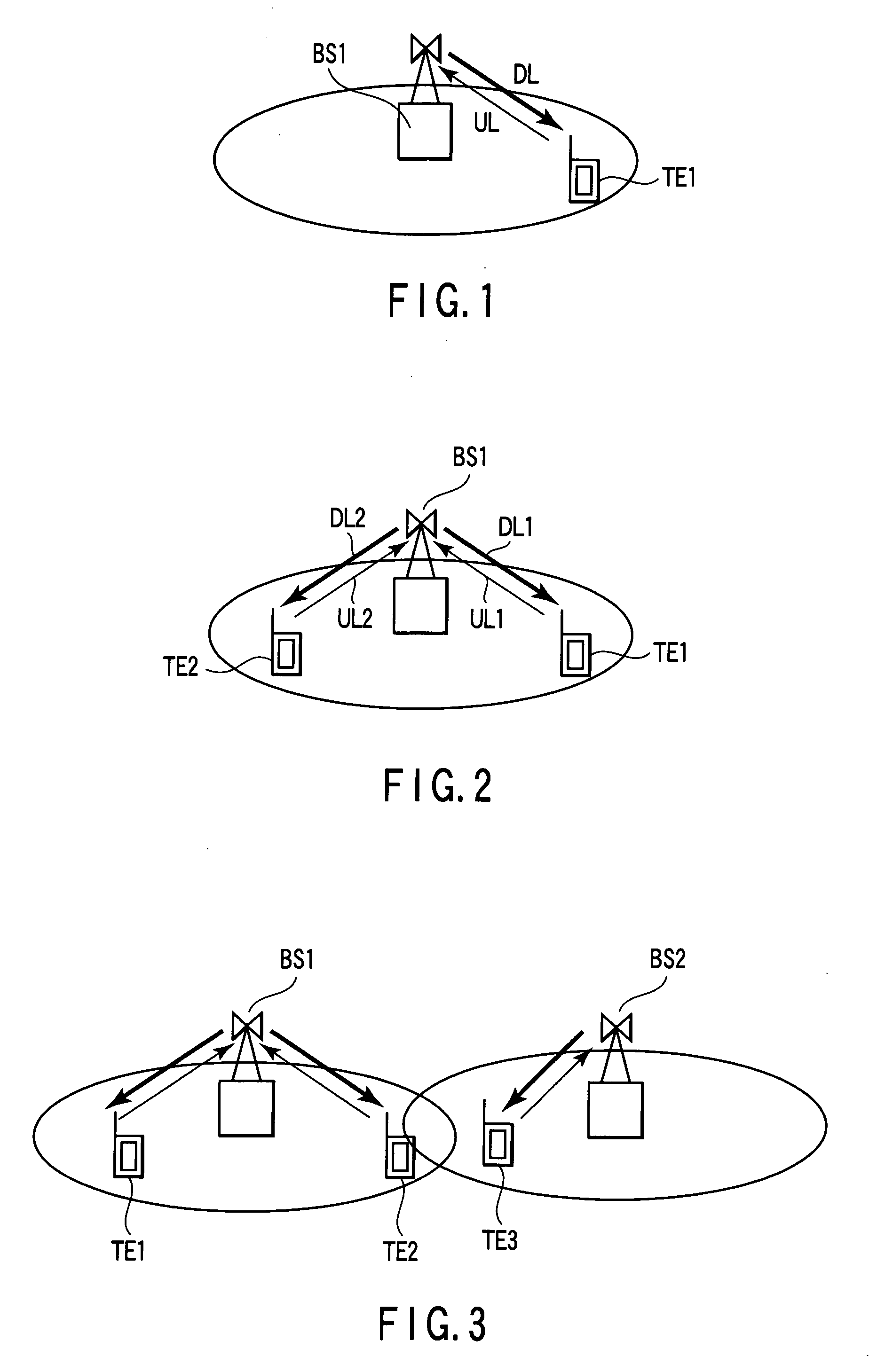

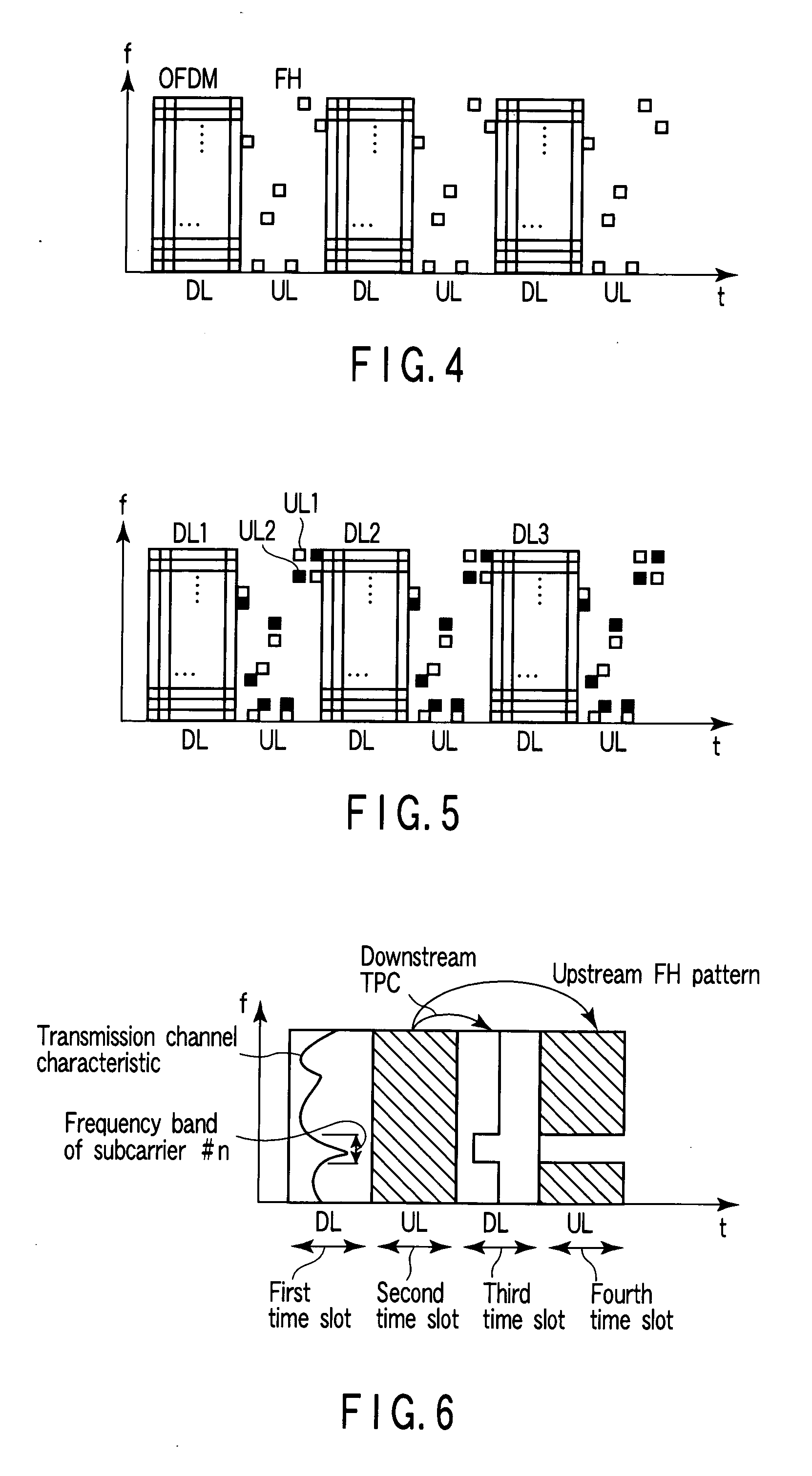

In a radio communication system that includes a base station apparatus and terminal apparatuses and performs TDD two-way communication, using an OFDM signal made up of subcarriers for downstream communication from the base station apparatus to each terminal apparatus and an FH signal in the same frequency band as the subcarriers for upstream communication from each terminal apparatus to the base station apparatus, each terminal apparatus estimates the transmission channel characteristics of the subcarriers from the received OFDM signal and transmits the estimation result to the base station apparatus. Based on the estimation result transmitted from each terminal apparatus, the base station apparatus assigns to that terminal apparatus at least one of the subcarriers to be used in downstream communication and a hopping pattern to be used in upstream communication.

Owner:KK TOSHIBA
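The base-station assignment step can be pictured with a minimal sketch. It assumes each terminal reports one channel-gain estimate per downstream subcarrier and that the base station greedily gives each subcarrier to the terminal with the strongest reported gain. The greedy rule and the function name `assign_subcarriers` are illustrative assumptions, not the patent's assignment method, and hopping-pattern selection is not shown.

```python
from typing import Dict, List


def assign_subcarriers(gains: Dict[str, List[float]]) -> Dict[str, List[int]]:
    """Hypothetical greedy rule: each subcarrier goes to the terminal reporting the highest gain.

    gains maps a terminal ID to its estimated channel gain per subcarrier;
    every list must have the same length.
    """
    n_sub = len(next(iter(gains.values())))
    assignment: Dict[str, List[int]] = {term: [] for term in gains}
    for k in range(n_sub):
        # Pick the terminal whose estimate on subcarrier k is the strongest.
        best = max(gains, key=lambda term: gains[term][k])
        assignment[best].append(k)
    return assignment
```

For example, `assign_subcarriers({"A": [0.9, 0.2, 0.5], "B": [0.3, 0.8, 0.6]})` gives subcarrier 0 to terminal A and subcarriers 1 and 2 to terminal B.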

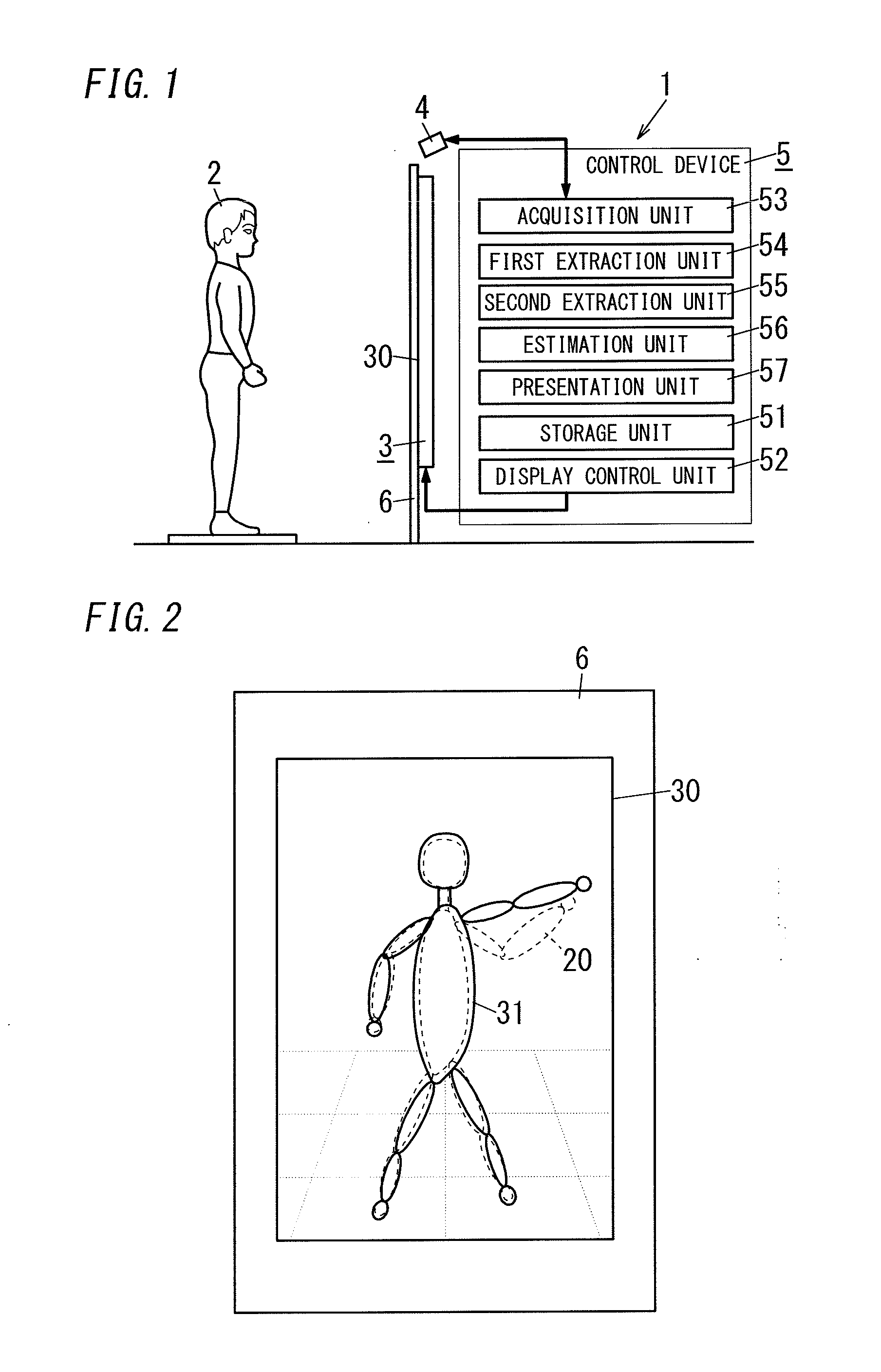

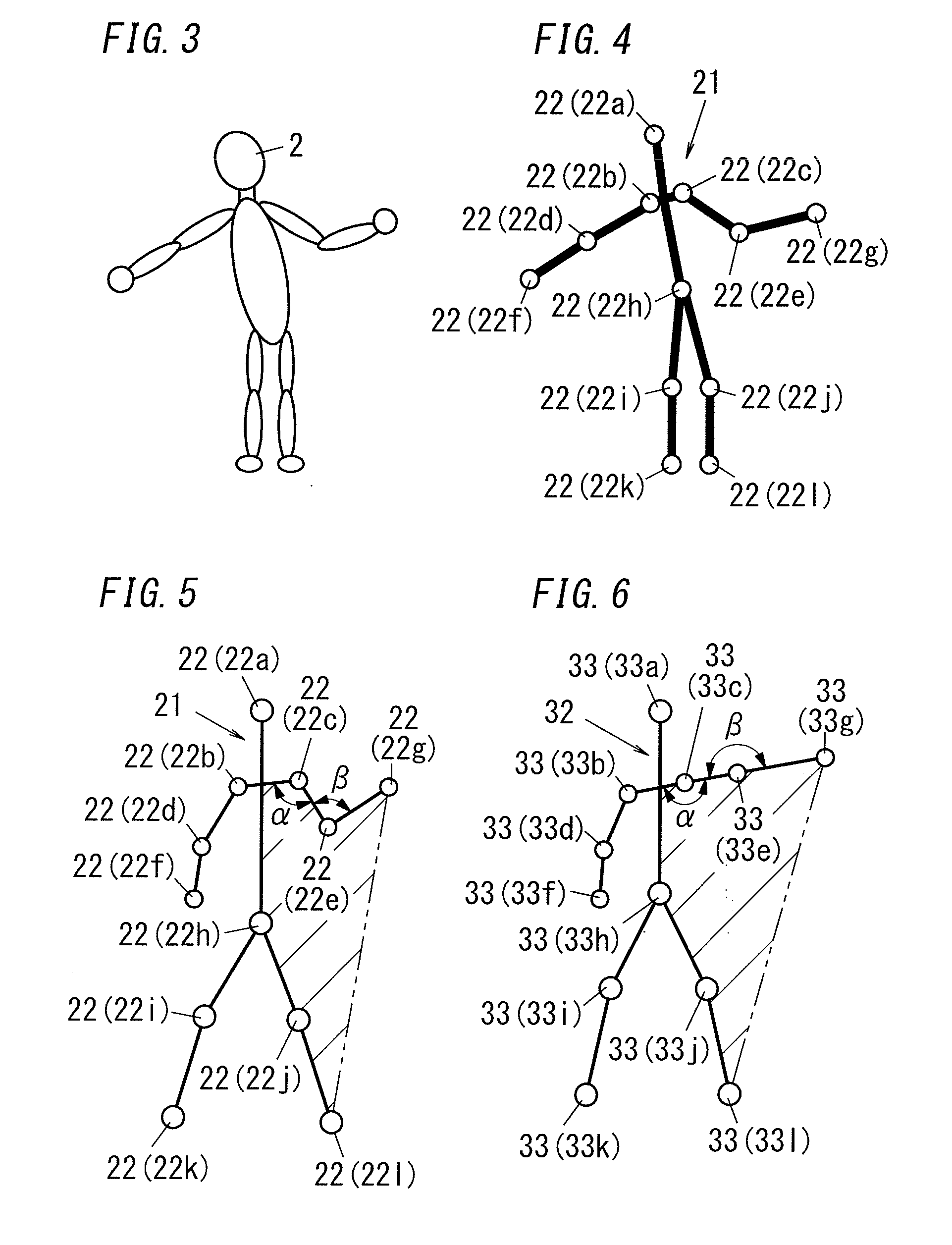

Exercise assisting system

Inactive · US20130171601A1 · Physical therapies and activities · Diagnostics using light · Computer graphics (images) · Display device

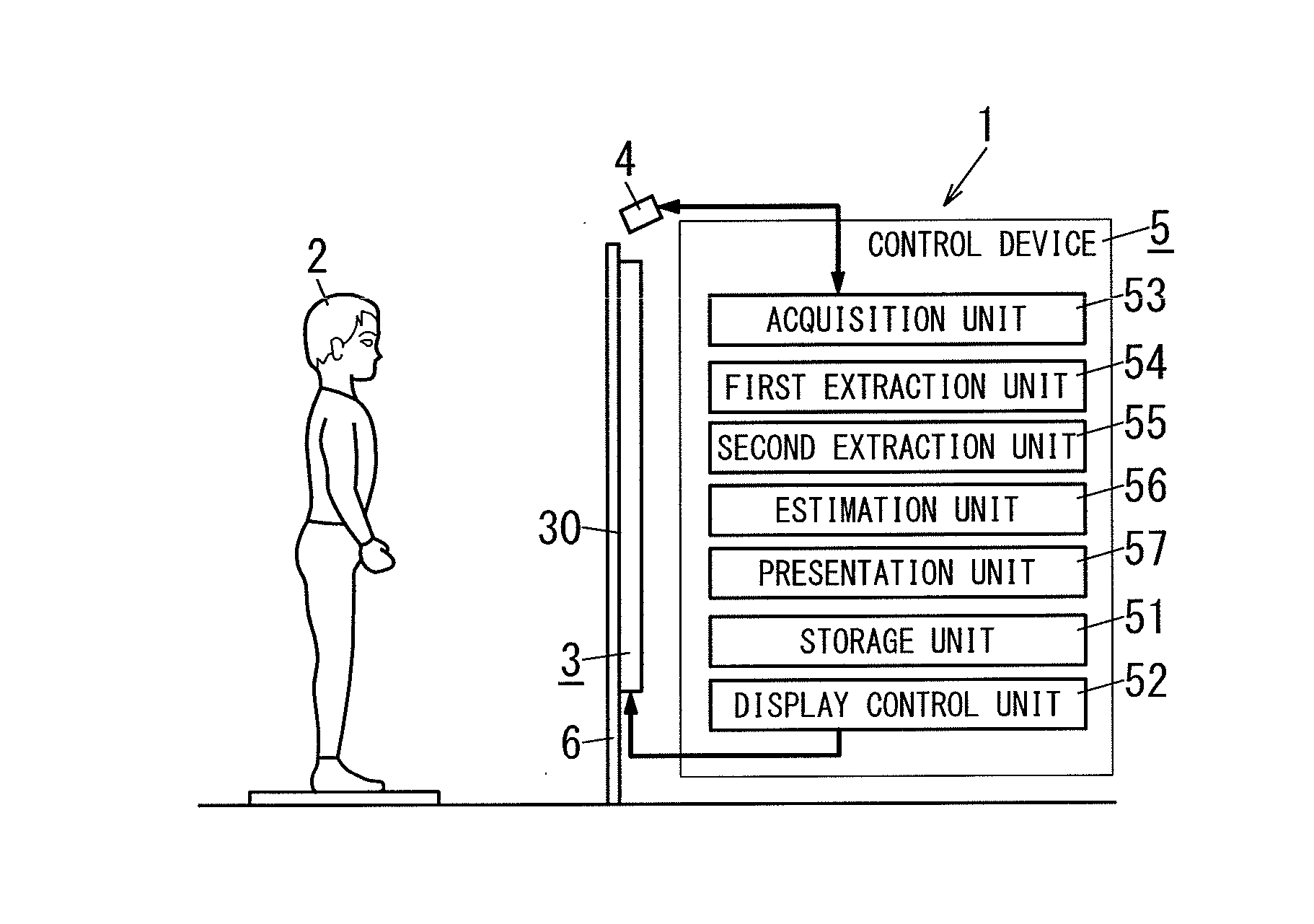

The exercise assisting system includes: a display device including a display screen that displays an image to a user; a comparison image storing unit storing a comparison image of an exerciser performing a predetermined exercise; a comparison image display unit displaying the stored comparison image on the screen; a mirror image displaying means displaying a mirror image of the user so that it overlaps the comparison image; a characteristic amount extraction unit detecting the positions of sampling points on the user's body and calculating, from those positions, a characteristic amount representing the user's posture; a posture estimation unit comparing the characteristic amount from the extraction unit with a criterion amount representing the exerciser's posture and estimating the deviation between the postures of the user and the exerciser; and a presentation unit presenting the estimation result of the estimation unit.

Owner:PANASONIC INTELLECTUAL PROPERTY MANAGEMENT CO LTD
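As a rough illustration of the characteristic-amount and deviation steps, the sketch below assumes that the characteristic amount is a set of 2D joint angles computed from three sampling points each, and that the deviation is the mean absolute angle difference from the exerciser's criterion angles. Both choices, and the function names, are hypothetical; the patent does not specify them.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at joint b between the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def posture_deviation(user: Dict[str, float], criterion: Dict[str, float]) -> float:
    """Hypothetical deviation: mean absolute joint-angle difference in degrees."""
    return sum(abs(user[j] - criterion[j]) for j in criterion) / len(criterion)
```

An elbow angle, for instance, would be `joint_angle(shoulder, elbow, wrist)`; the presentation unit could then show the deviation per joint or as a single score.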

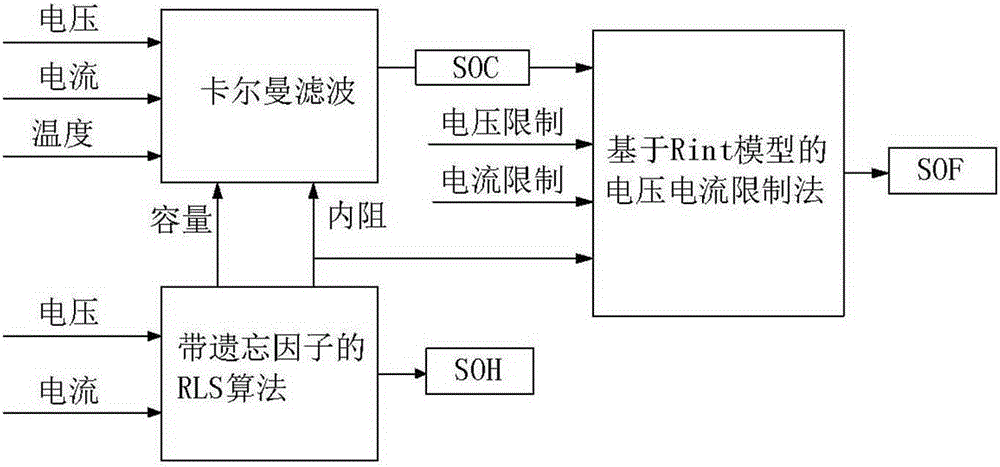

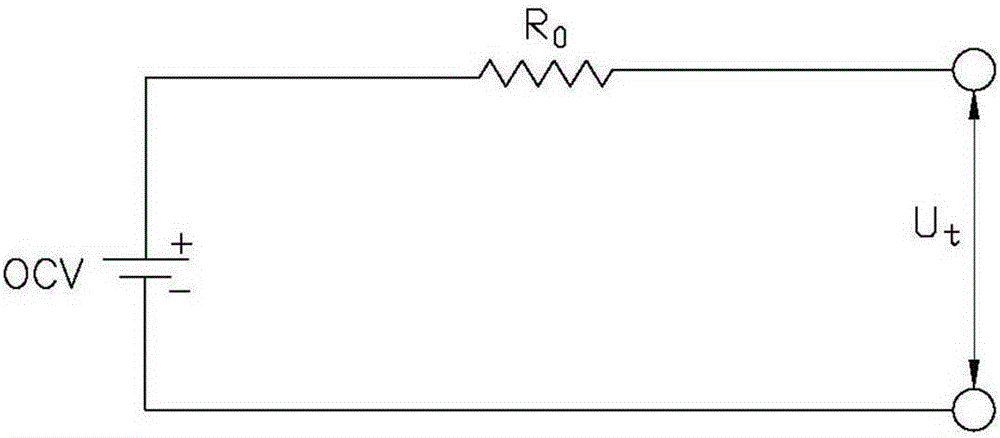

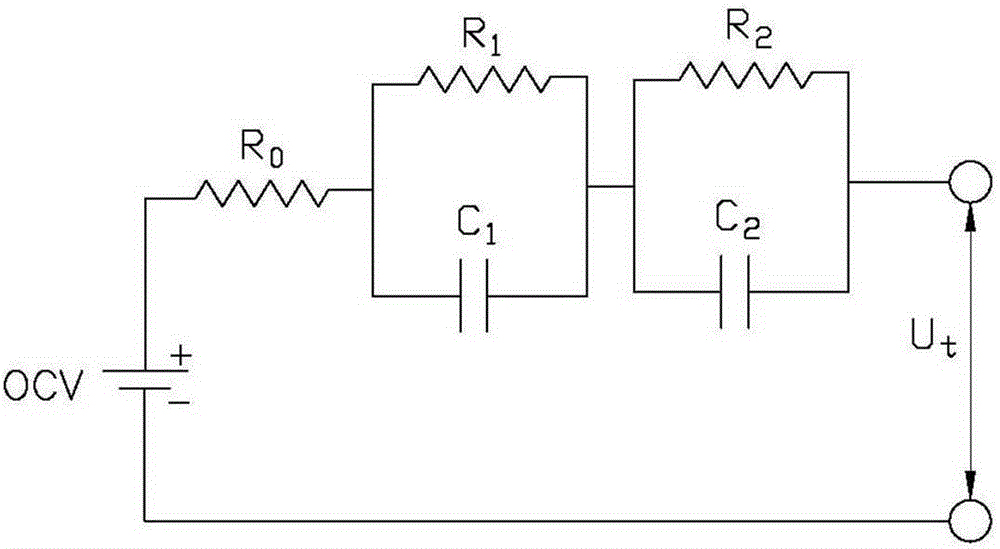

Combined estimation method for lithium ion battery state of charge, state of health and state of function

Active · CN105301509A · Guaranteed estimation accuracy · Improve state estimation performance · Electrical testing · Internal resistance · State of health

The invention provides a combined estimation method for lithium ion battery state of charge, state of health and state of function. The method comprises the following steps. The state of health of the battery is estimated online: the open circuit voltage and internal resistance are identified online by a recursive least squares method with a forgetting factor, the state of charge is obtained indirectly from a pre-established OCV-SOC relationship, and the battery capacity is then estimated from the cumulative charge and discharge between two SOC points. The state of charge of the battery is estimated online: the state of charge is estimated with a Kalman filter algorithm based on a second-order RC equivalent circuit model, and the battery capacity parameter in the Kalman filter is updated with the capacity estimation result. The state of function of the battery is estimated online: the maximum charge and discharge currents are calculated from the voltage limit and current limit of the battery using the internal resistance obtained by online identification, and the maximum charge and discharge power is then obtained by further calculation.

Owner:TSINGHUA UNIV
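The online identification step can be sketched with recursive least squares using a forgetting factor. This sketch assumes a simplified internal-resistance model, terminal voltage `V = OCV - R0 * I` with discharge current positive, rather than the second-order RC model the abstract uses for the Kalman filter; the forgetting factor, initial covariance, and helper names are illustrative assumptions. The capacity helper applies the "charge moved between two SOC points" idea directly.

```python
import numpy as np


class ForgettingRLS:
    """Recursive least squares with a forgetting factor lam (0 < lam <= 1)."""

    def __init__(self, n_params: int, lam: float = 0.98, p0: float = 1e3):
        self.theta = np.zeros(n_params)
        self.P = np.eye(n_params) * p0  # large initial covariance: little trust in theta = 0
        self.lam = lam

    def update(self, phi: np.ndarray, y: float) -> np.ndarray:
        Pphi = self.P @ phi
        gain = Pphi / (self.lam + phi @ Pphi)
        self.theta = self.theta + gain * (y - phi @ self.theta)
        # P is symmetric, so gain * (phi^T P) equals np.outer(gain, Pphi).
        self.P = (self.P - np.outer(gain, Pphi)) / self.lam
        return self.theta


def capacity_from_soc(delta_charge_ah: float, soc_a: float, soc_b: float) -> float:
    """Capacity in Ah from the charge moved between two SOC points."""
    return abs(delta_charge_ah / (soc_b - soc_a))
```

With the regressor `phi = [1, -I]` and measurement `y = V`, `theta` converges to `[OCV, R0]`. The OCV estimate would then be mapped to SOC through the OCV-SOC table before the capacity calculation.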

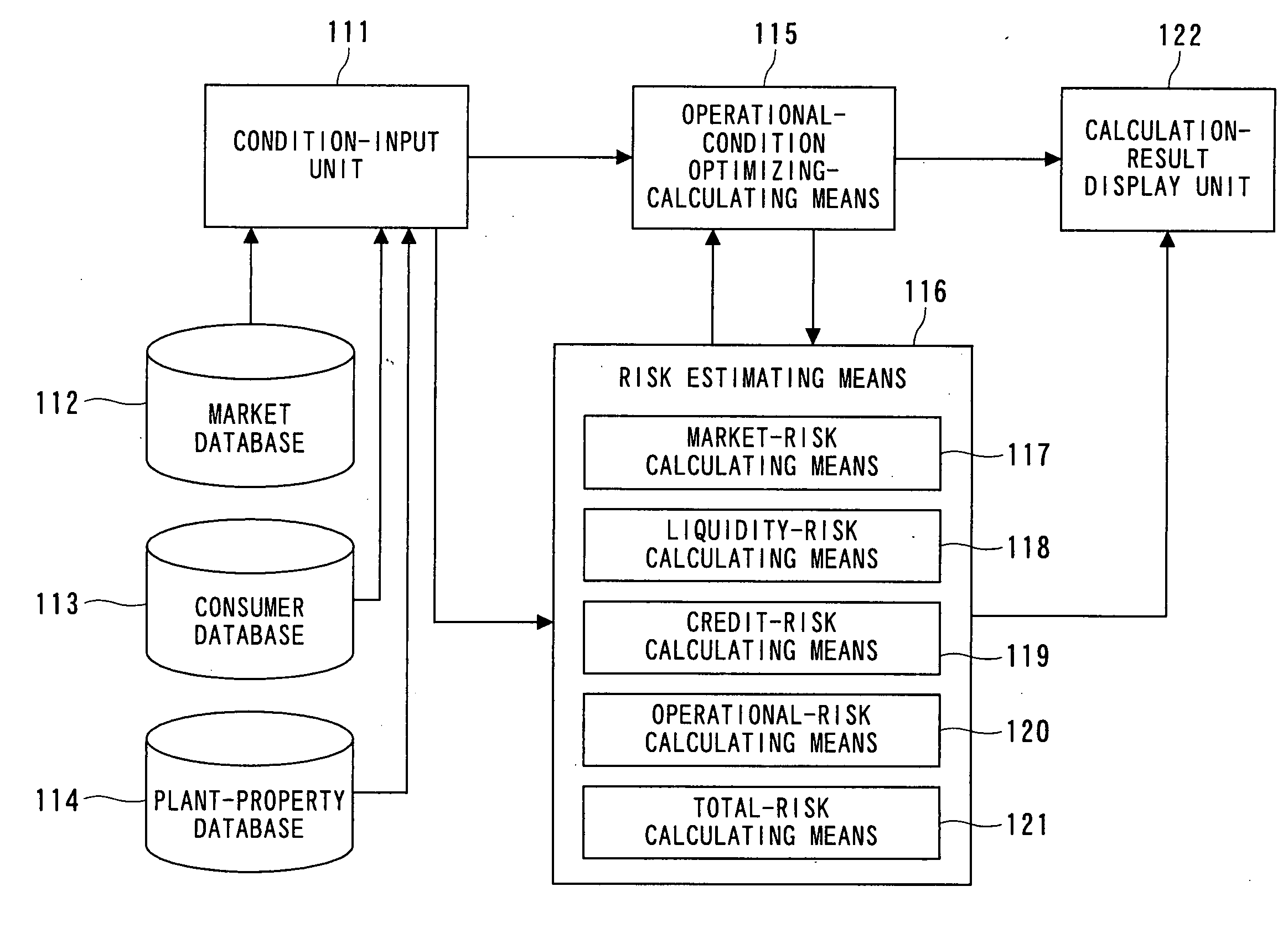

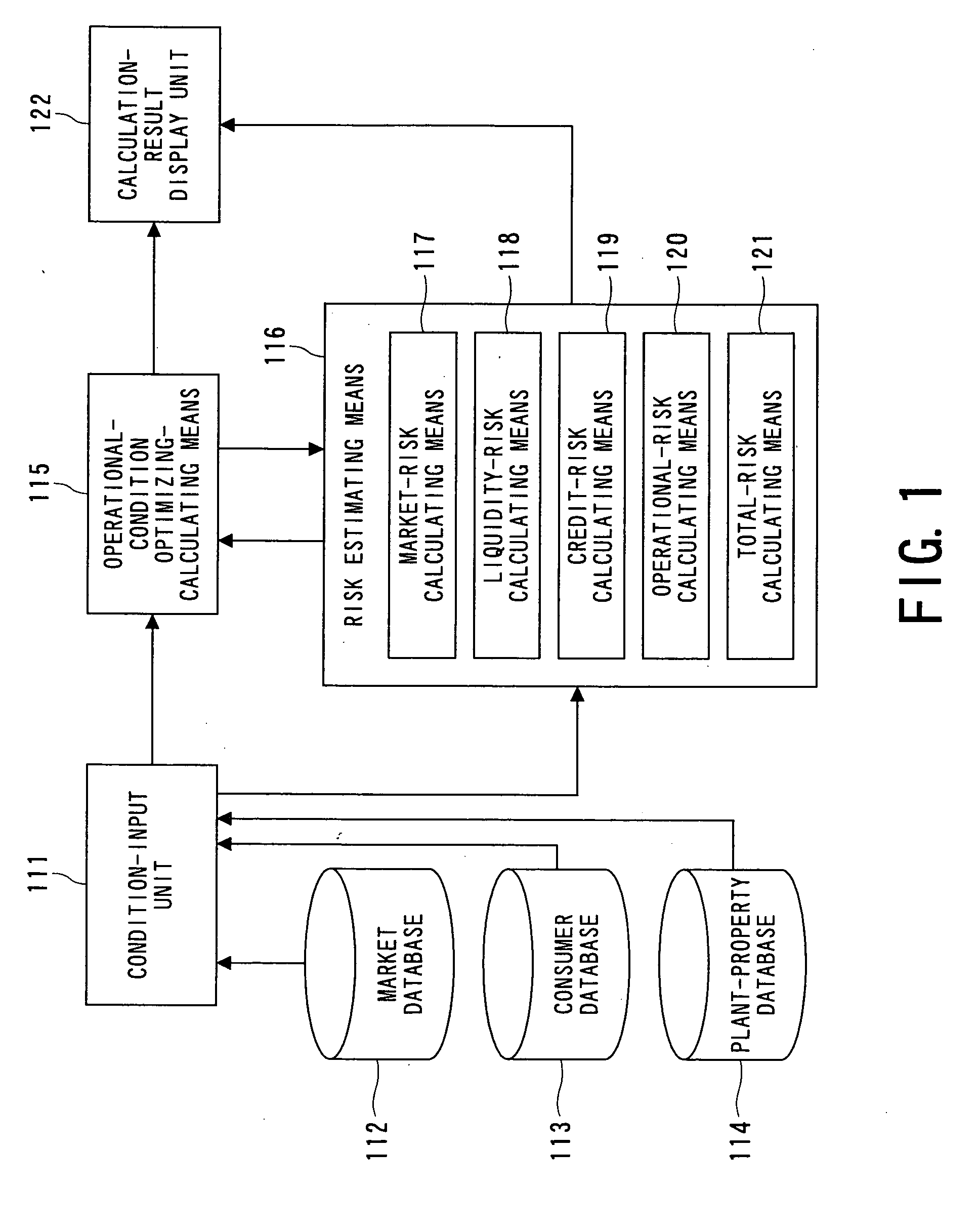

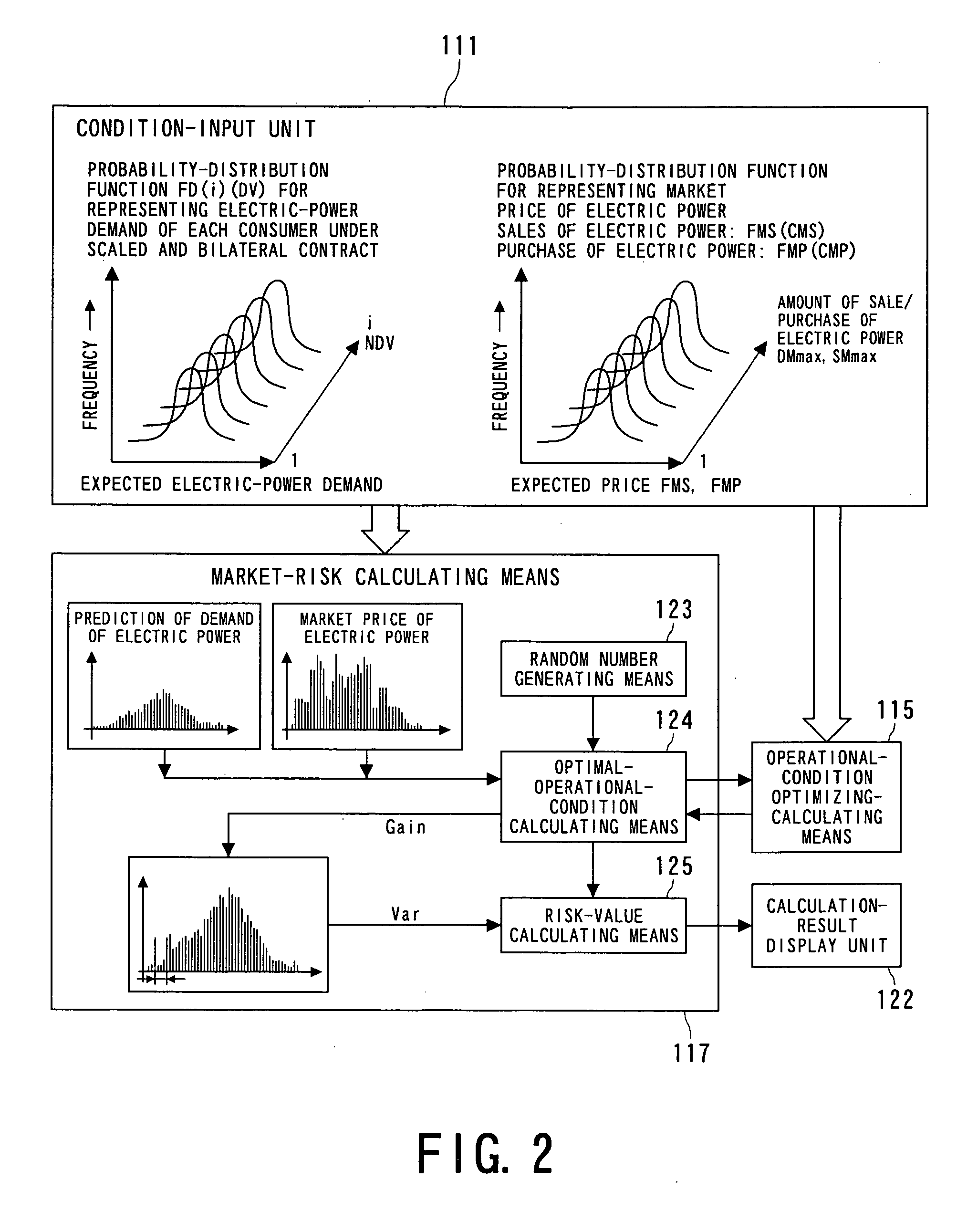

Electric-power-generating-facility operation management support system, operation management support method, and program for executing the operation management support method on a computer

Inactive · US20050015283A1 · Improve operating conditions · Guaranteed uptime · Generation forecast in ac network · Market predictions · Management support systems · Management support

An electric-power-generating-facility operation management support system includes a condition-input unit that inputs the costs of electric power generation in the electric power generating facilities, the probability distribution of predicted values of the demand for electric power, and the probability distribution of predicted values of the transaction price of power on the market. An optimal-operational-condition calculating unit calculates the power generation performance of the power generating facilities from the costs of power generation, the probability distribution of predicted demand and the probability distribution of predicted market transaction prices input from the condition-input unit, so as to obtain the optimal operational conditions that give the maximum power generation performance. A risk estimating unit calculates and estimates a risk value of damage for operation under the optimal operational conditions. A calculation-result display unit displays the optimal operational conditions and the risk-estimation results.

Owner:KK TOSHIBA

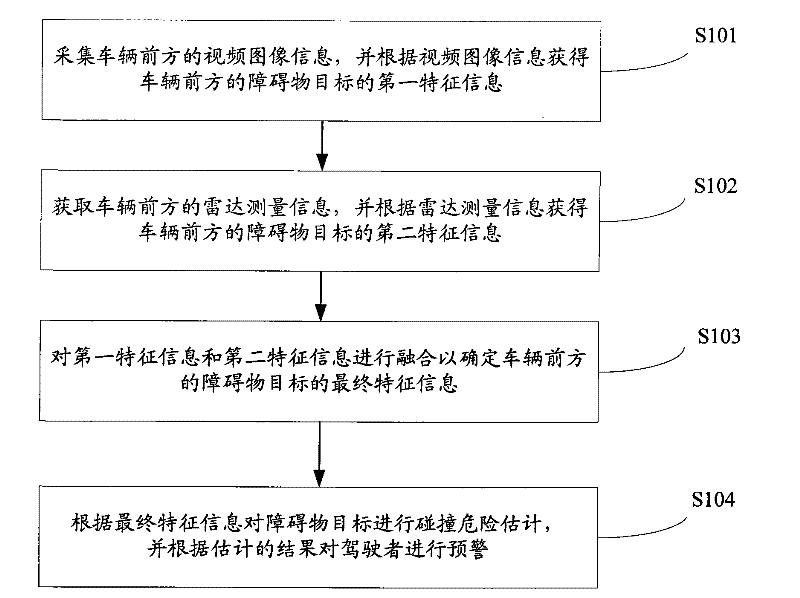

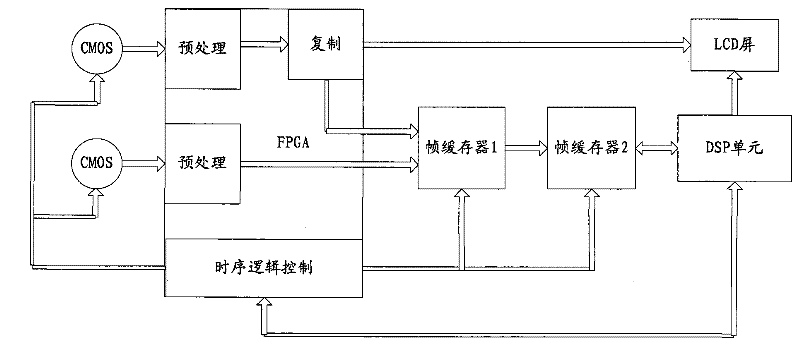

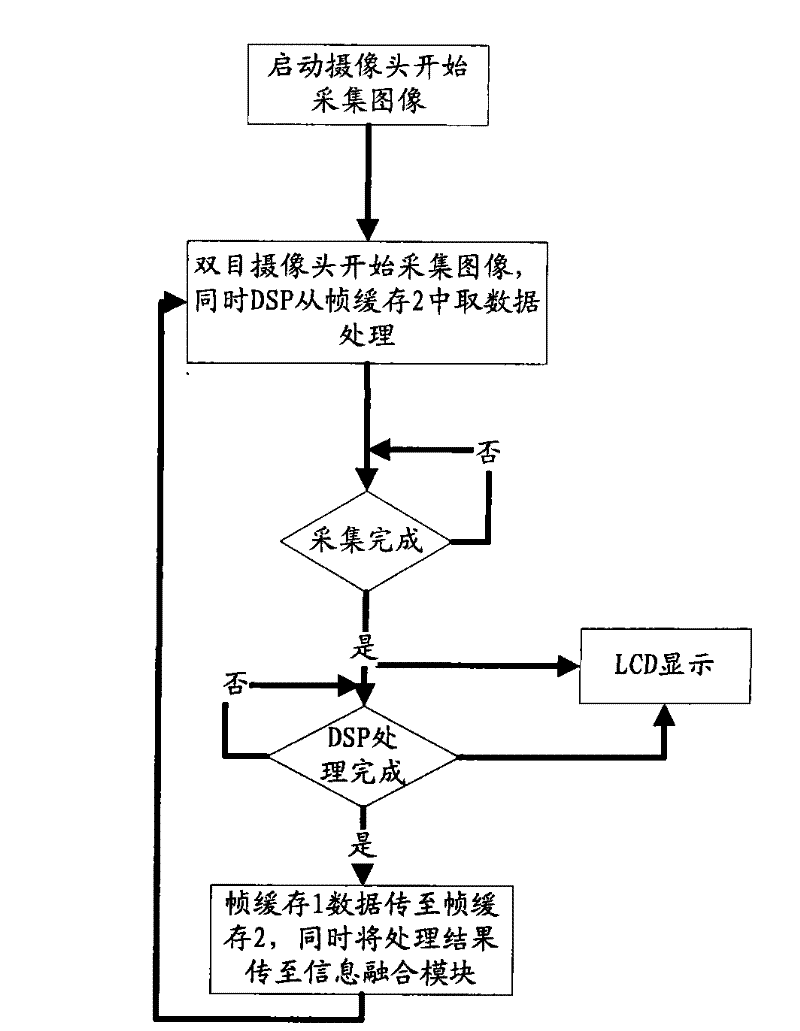

Early warning method and device for preventing vehicle collision

Inactive · CN102542843A · Realize anti-collision alarm · High precision · Pedestrian/occupant safety arrangement · Anti-collision systems · Goal recognition · Simulation

The invention provides an early warning method and device for preventing vehicle collision. The method includes the steps of: acquiring video image information of the area in front of the vehicle and obtaining first characteristic information of an obstacle target in front of the vehicle from the video image information; acquiring radar measurement information of the area in front of the vehicle and determining second characteristic information of the obstacle target from the radar measurement information; fusing the first and second characteristic information to determine final characteristic information of the obstacle target; and estimating the collision risk with the obstacle target from the final characteristic information and warning the driver according to the collision risk estimation result. The obstacle target in front of the vehicle and its state information are identified by fusing the information acquired by dual cameras and a radar device, which improves target identification precision and allows the vehicle's collision-avoidance alarm function to be realized well.

Owner:BYD CO LTD
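The fusion and risk-estimation steps can be illustrated with one common textbook choice. The sketch fuses camera and radar range estimates by inverse-variance weighting and grades risk by time-to-collision (TTC). Inverse-variance fusion, TTC, and the threshold values are all assumptions here, not the method claimed by the patent.

```python
from typing import Tuple


def fuse(camera: float, var_camera: float, radar: float, var_radar: float) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of two measurements of the same quantity.

    Returns the fused value and its variance.
    """
    w_c, w_r = 1.0 / var_camera, 1.0 / var_radar
    value = (w_c * camera + w_r * radar) / (w_c + w_r)
    return value, 1.0 / (w_c + w_r)


def collision_warning(distance_m: float, closing_speed_mps: float,
                      warn_ttc_s: float = 2.5, brake_ttc_s: float = 1.2) -> str:
    """Map time-to-collision to a warning level; the thresholds are illustrative only."""
    if closing_speed_mps <= 0:  # the target is not getting closer
        return "none"
    ttc = distance_m / closing_speed_mps
    if ttc < brake_ttc_s:
        return "critical"
    if ttc < warn_ttc_s:
        return "warning"
    return "none"
```

Because the radar range is usually less noisy, it dominates the fused value. With a camera reading of 20 m (variance 4) and a radar reading of 18 m (variance 1), the fused distance is 18.4 m.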

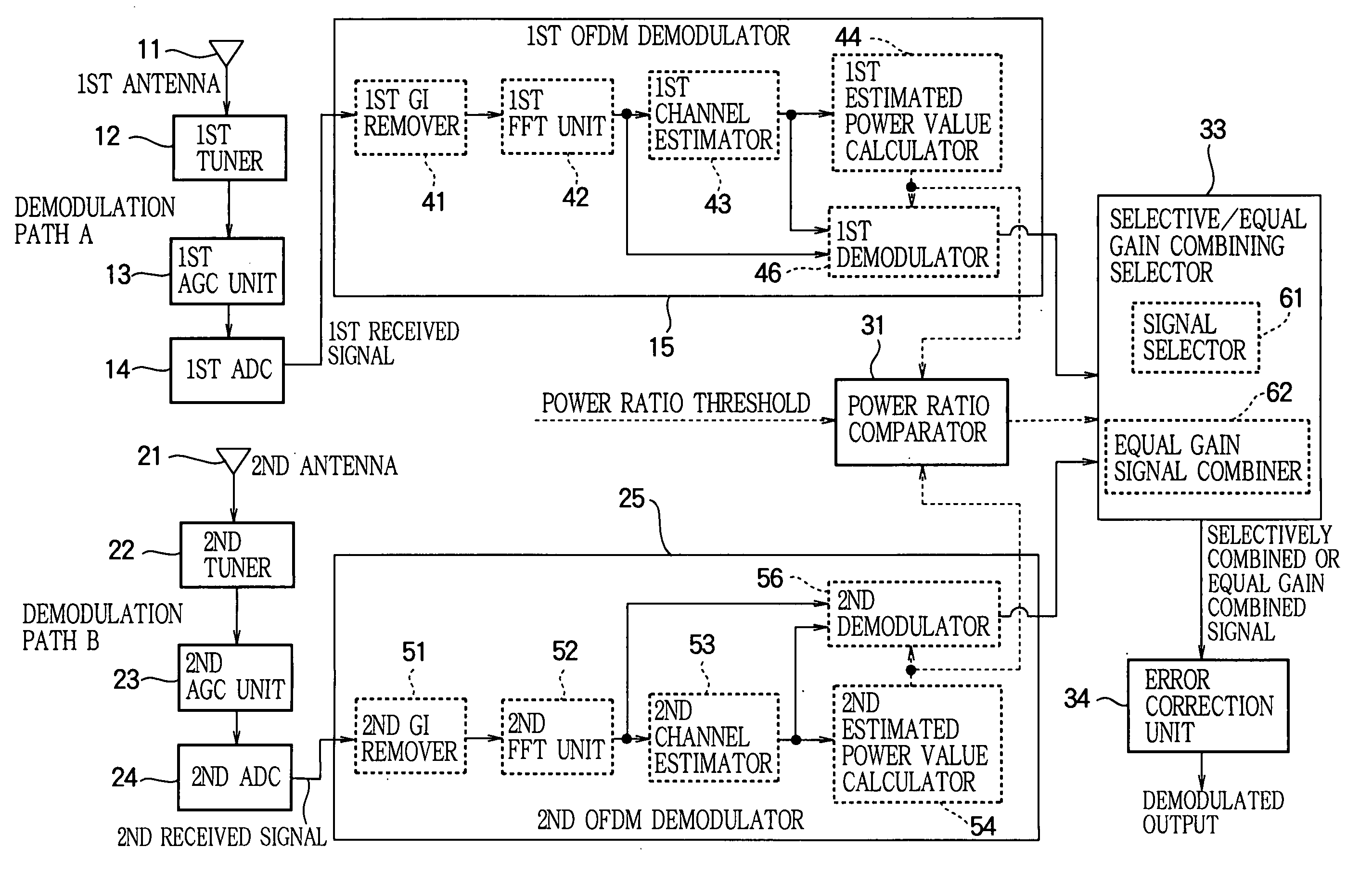

Diversity reception device and diversity reception method

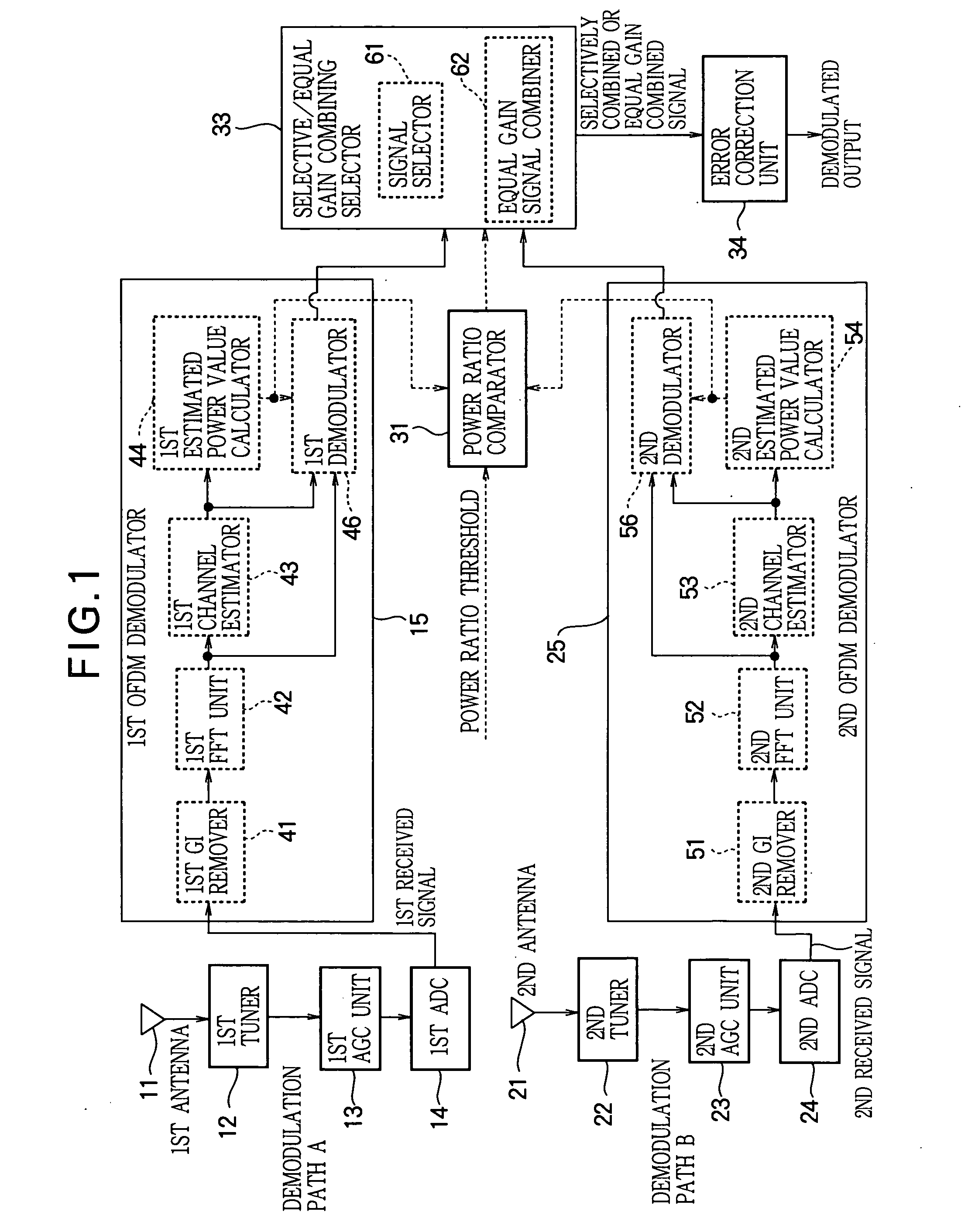

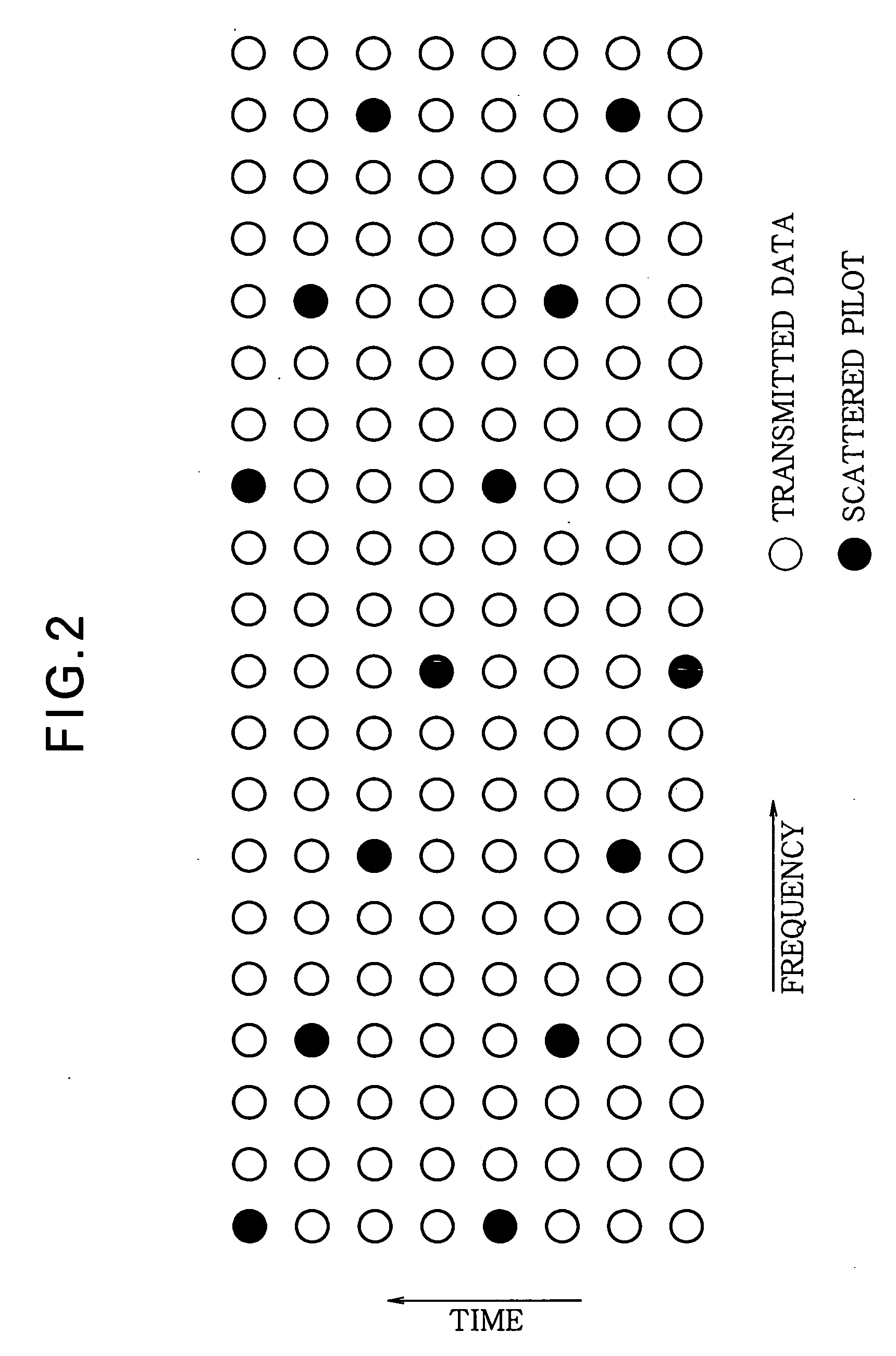

Inactive · US20060166634A1 · Large diversity effect · Improve reception performance · Spatial transmit diversity · Error detection/prevention using signal quality detector · Carrier signal · Engineering

The circuit size of a diversity receiver for an orthogonal frequency division multiplexing signal is reduced, and the diversity effect is increased, by providing a power ratio comparator that calculates a difference value as a ratio of powers derived from channel estimation results for subcarrier components received from two antennas (11), (21) and compares the calculated difference value with a predetermined threshold, and a selective / equal gain combining selector (33) that outputs one of the received demodulated signals when the comparison result indicates that the calculated difference value is greater than the threshold value.

Owner:MITSUBISHI ELECTRIC CORP
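The per-subcarrier choice between selection and equal-gain combining can be sketched with NumPy. The sketch assumes complex channel estimates `h1`, `h2` and received samples `r1`, `r2` for each subcarrier, measures the power ratio in dB, and selects the stronger branch when the ratio exceeds a threshold. The 6 dB default and the function name are hypothetical.

```python
import numpy as np


def diversity_combine(r1, r2, h1, h2, threshold_db=6.0):
    """Per-subcarrier selection / equal-gain combining of two antenna branches.

    r1, r2: received subcarrier samples; h1, h2: complex channel estimates.
    Returns equalized symbol estimates.
    """
    p1, p2 = np.abs(h1) ** 2, np.abs(h2) ** 2
    diff_db = np.abs(10.0 * np.log10(p1 / p2))  # power ratio expressed in dB
    # Equal-gain combining: co-phase each branch, add with unit weights, then equalize.
    egc = (np.conj(h1) / np.abs(h1) * r1 + np.conj(h2) / np.abs(h2) * r2) / (np.abs(h1) + np.abs(h2))
    # Selection: equalize using only the stronger branch.
    sel = np.where(p1 >= p2, r1 / h1, r2 / h2)
    return np.where(diff_db > threshold_db, sel, egc)
```

When one branch is deeply faded, combining it with equal weight would add mostly noise. That is why the comparator falls back to selection above the threshold.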

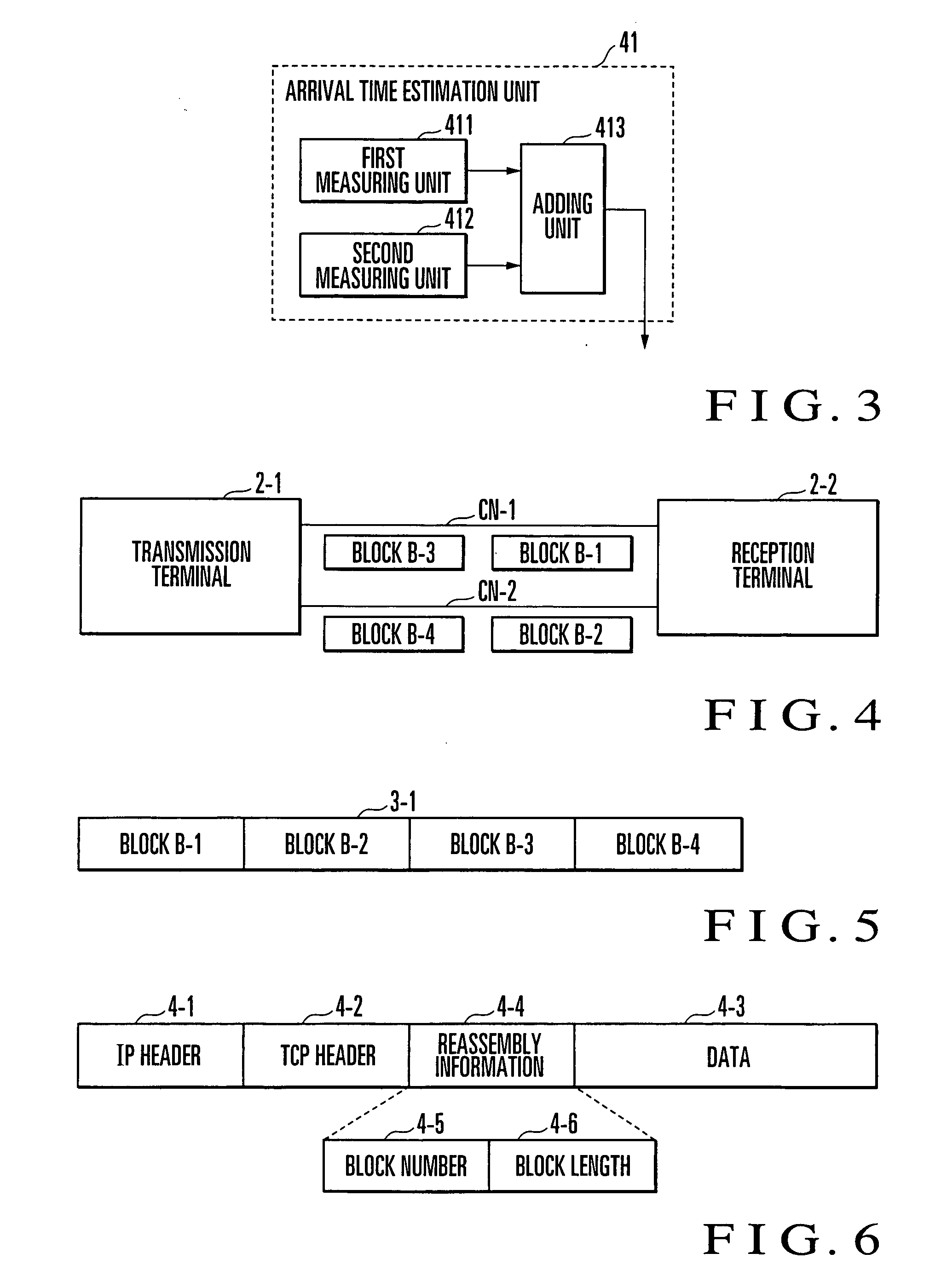

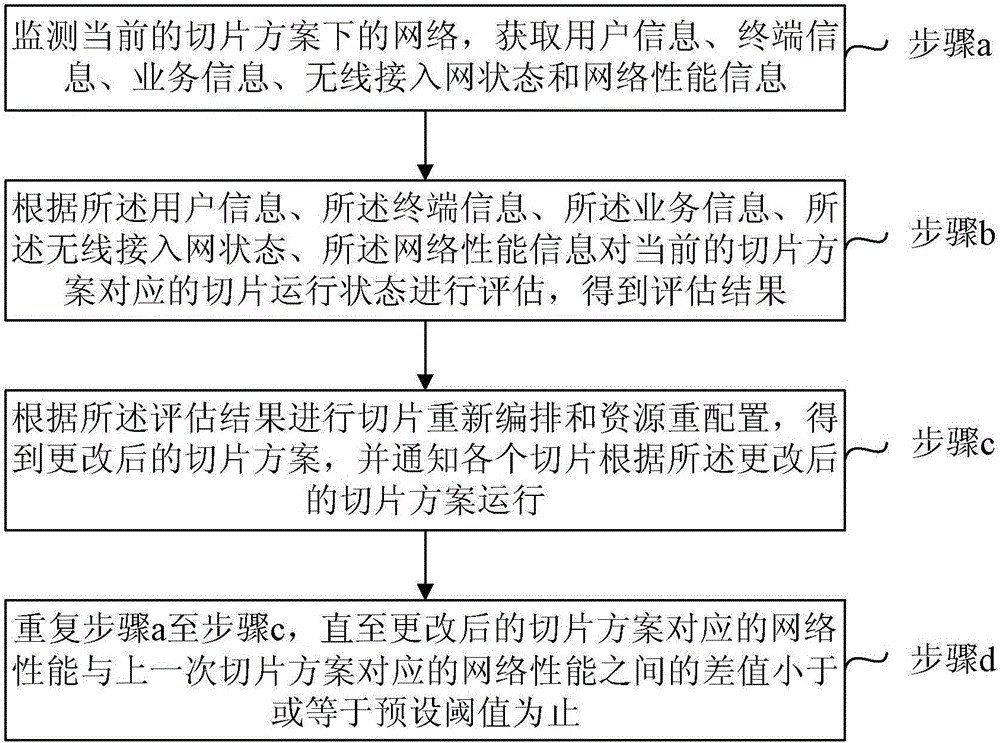

Communication apparatus and data communication method

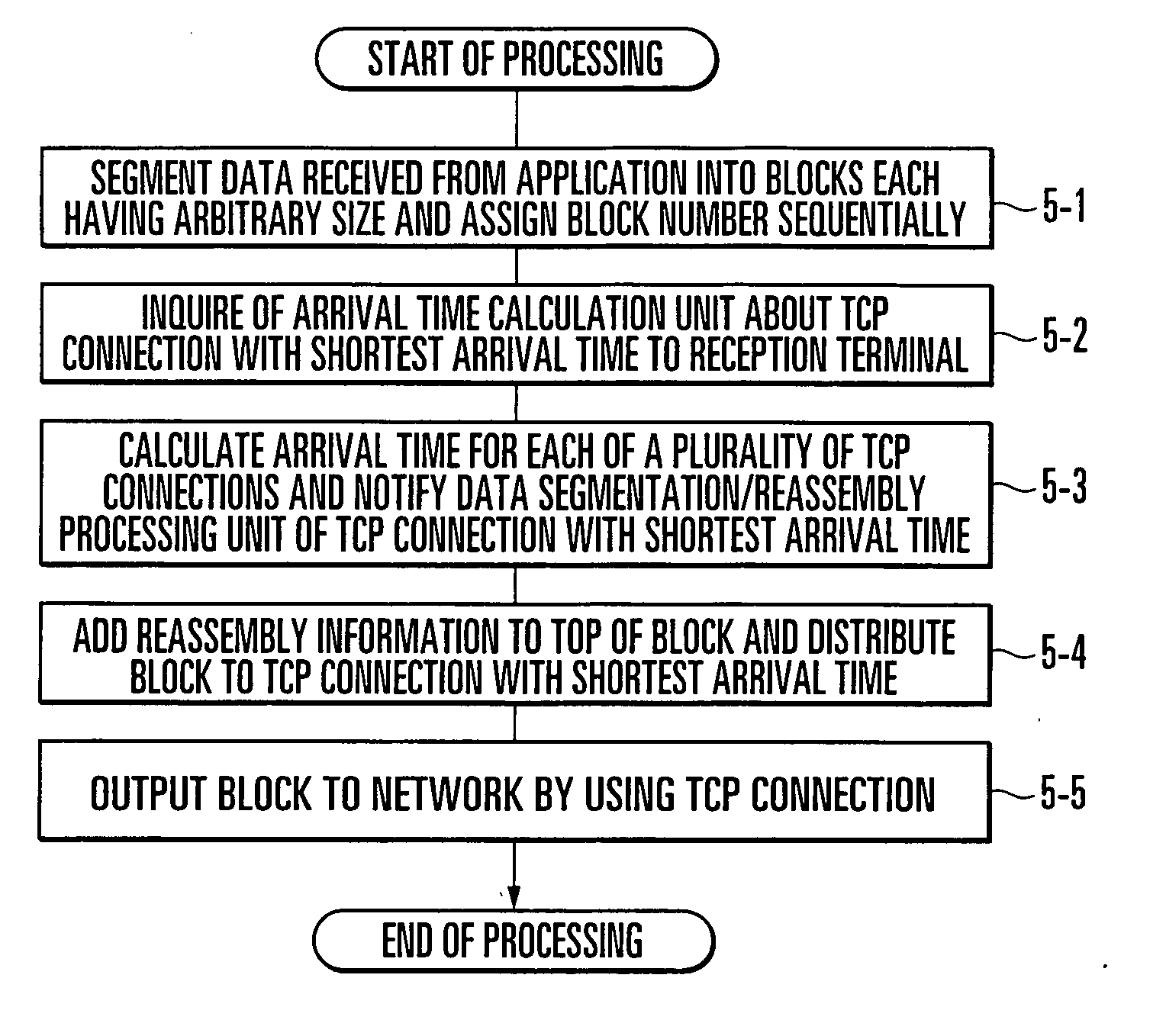

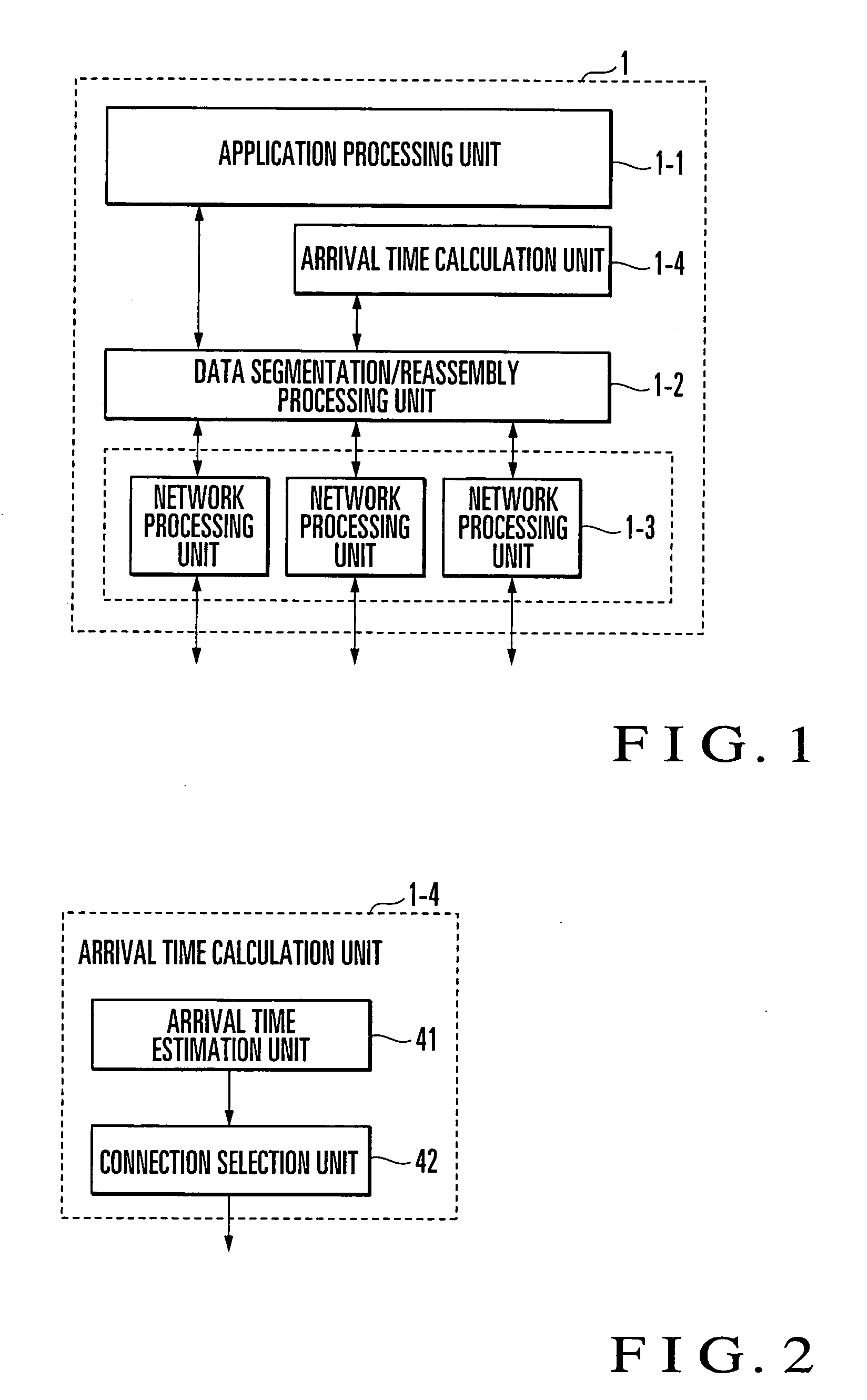

Active · US20060039287A1 · Efficient implementation · Error prevention · Transmission systems · Network processing unit · Arrival time

A communication apparatus includes an arrival time estimation unit, connection selection unit, and network processing unit. The arrival time estimation unit estimates, for each block and each of a plurality of connections, an arrival time until a block generated by segmenting transmission data arrives from the apparatus at a final reception terminal or a merging apparatus through a network. The connection selection unit selects, for each block, a connection with the shortest arrival time from the plurality of connections on the basis of the estimation result. The network processing unit outputs each block to the network by using the selected connection. A data communication method and a data communication program are also disclosed.

Owner:NEC CORP
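The arrival-time estimation and connection selection can be sketched as follows. The sketch assumes that each connection's arrival time is its queued bits plus the block, divided by estimated bandwidth, plus a propagation delay. This model, the `Connection` fields, and the never-draining queue are simplifying assumptions, not the patent's estimator.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Connection:
    name: str
    latency_s: float          # estimated one-way propagation delay
    bandwidth_bps: float      # estimated throughput
    queued_bits: float = 0.0  # bits already waiting on this connection

    def estimated_arrival(self, block_bits: float) -> float:
        # Time to send the queued data and this block, plus propagation delay.
        return (self.queued_bits + block_bits) / self.bandwidth_bps + self.latency_s


def schedule(blocks_bits: List[float], conns: List[Connection]) -> List[str]:
    """Send each block on the connection with the shortest estimated arrival time."""
    chosen = []
    for bits in blocks_bits:
        best = min(conns, key=lambda c: c.estimated_arrival(bits))
        best.queued_bits += bits  # simplification: queues are never drained
        chosen.append(best.name)
    return chosen
```

With a high-bandwidth, high-latency link and a low-latency, low-bandwidth link, blocks alternate once the faster link's queue makes it the slower choice.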

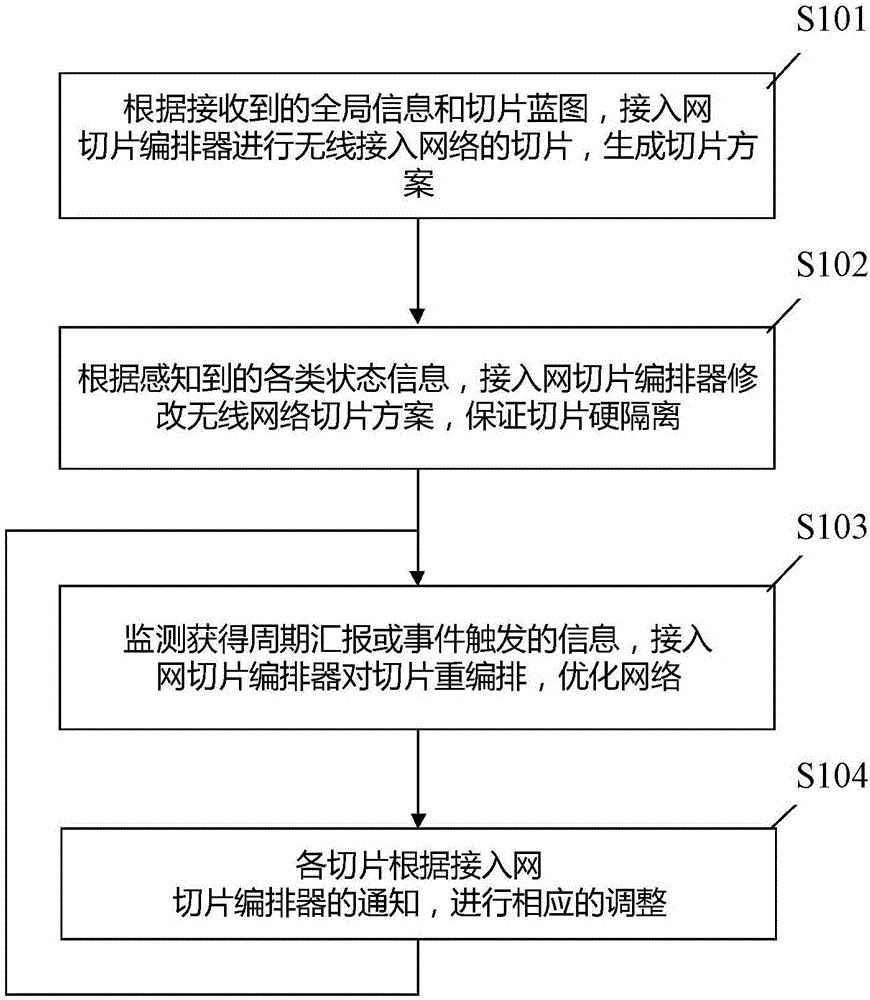

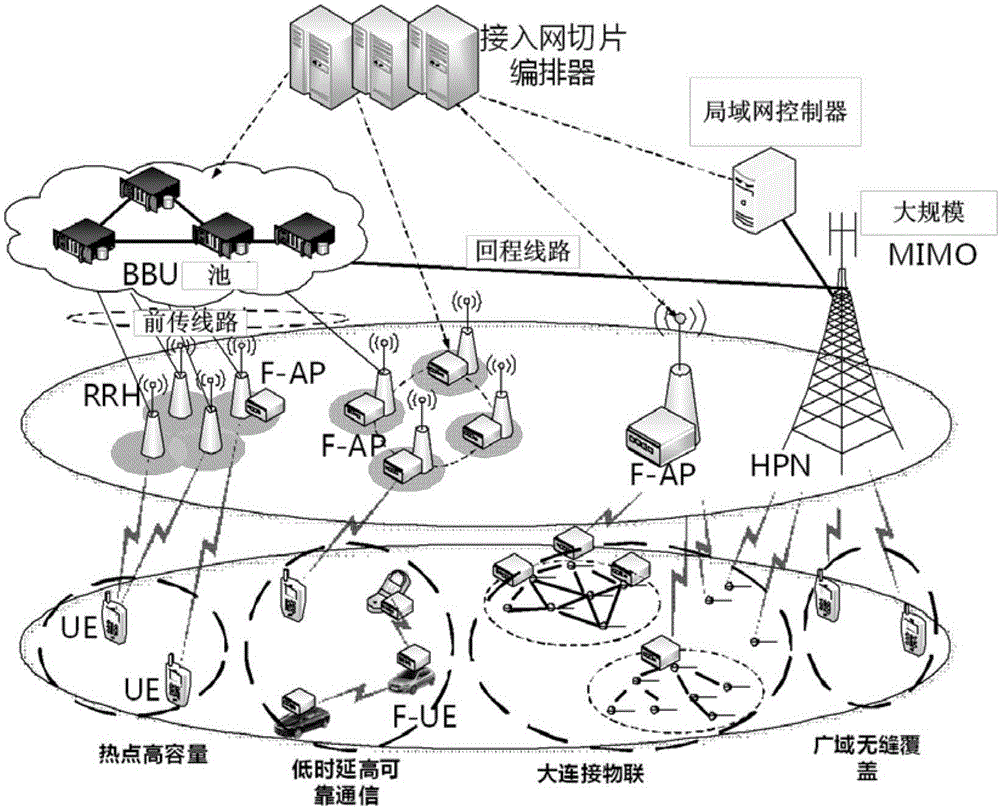

Network slice method and device and equipment

Active · CN106792739A · Implement reconfiguration · Flexible and convenient networking · Data switching networks · Network planning · Access network · High performance networking

The embodiment of the invention provides a network slicing method, device and equipment. The method comprises the steps of: monitoring the network under the current slice scheme and obtaining user information, terminal information, service information, the radio access network state and network performance information; estimating the slice operation state of the current slice scheme from this information to obtain an estimation result; performing slice rearrangement and resource reconfiguration according to the estimation result to obtain a modified slice scheme, and instructing each slice to operate according to the modified slice scheme; and repeating the process until the difference between the network performance of the modified slice scheme and that of the previous slice scheme is smaller than or equal to a preset threshold. This realizes network reconfiguration based on information sensing, adapts the network to various service applications, and supports flexible, convenient and high-performance networking.

Owner:BEIJING UNIV OF POSTS & TELECOMM

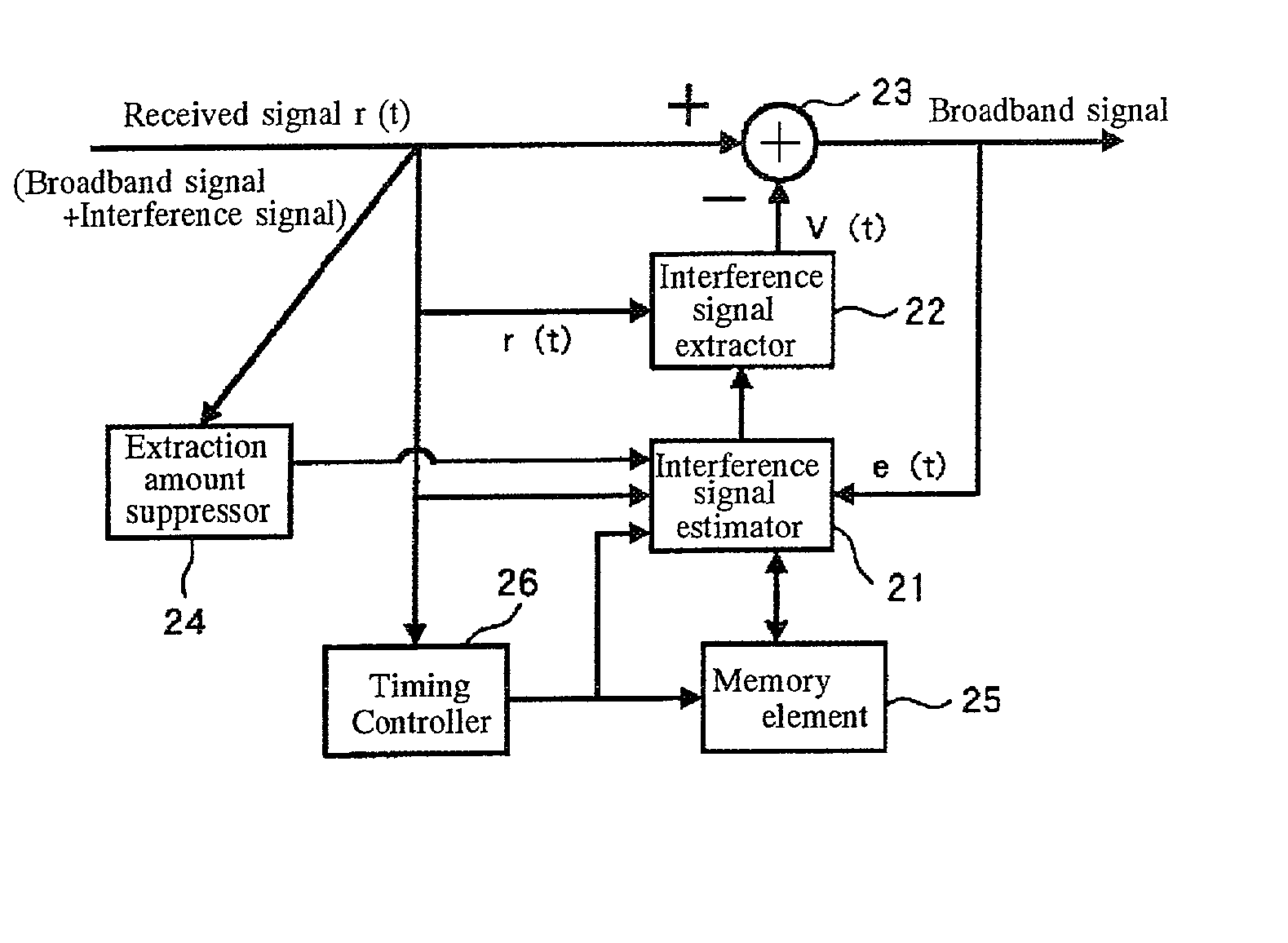

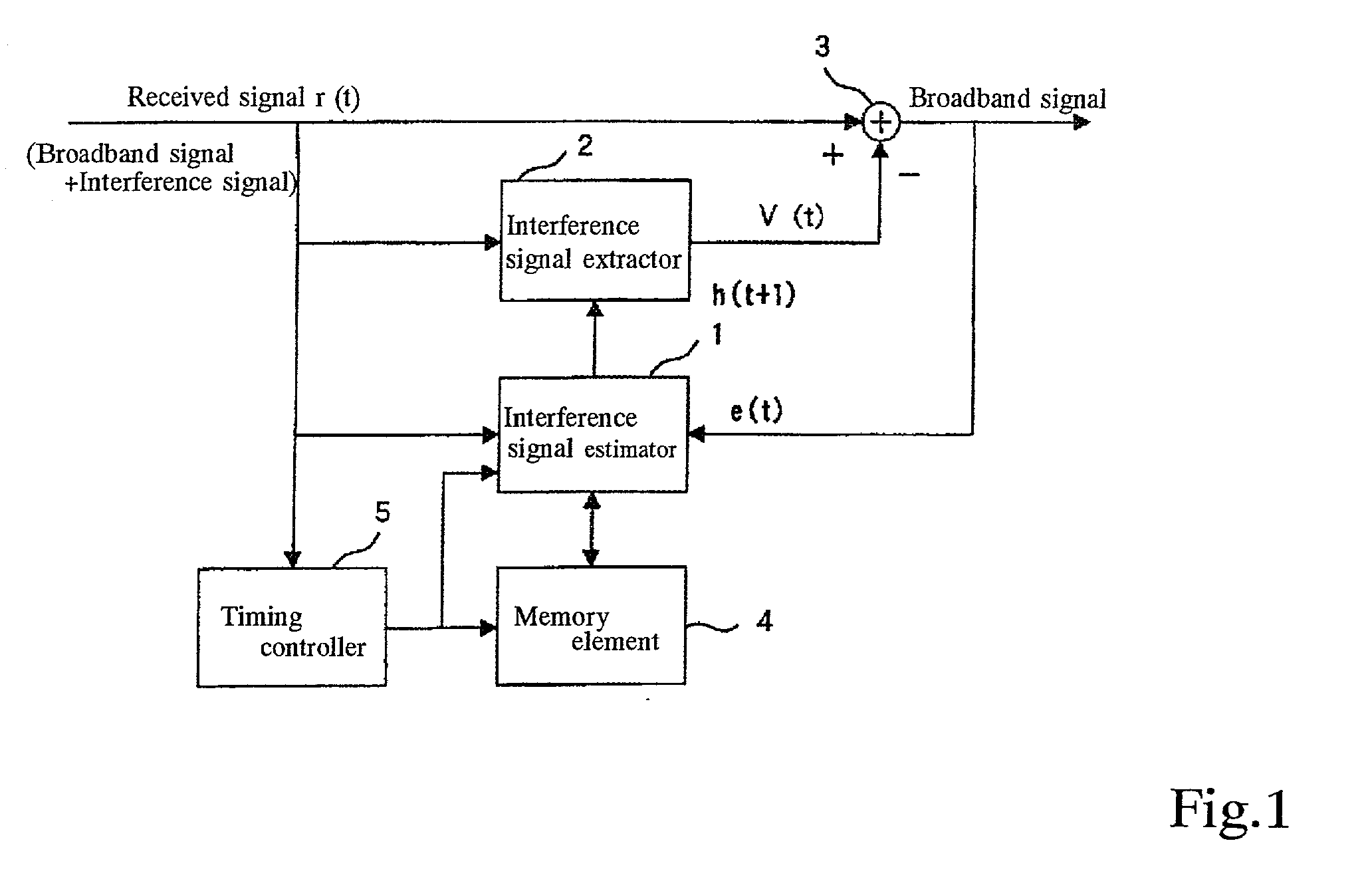

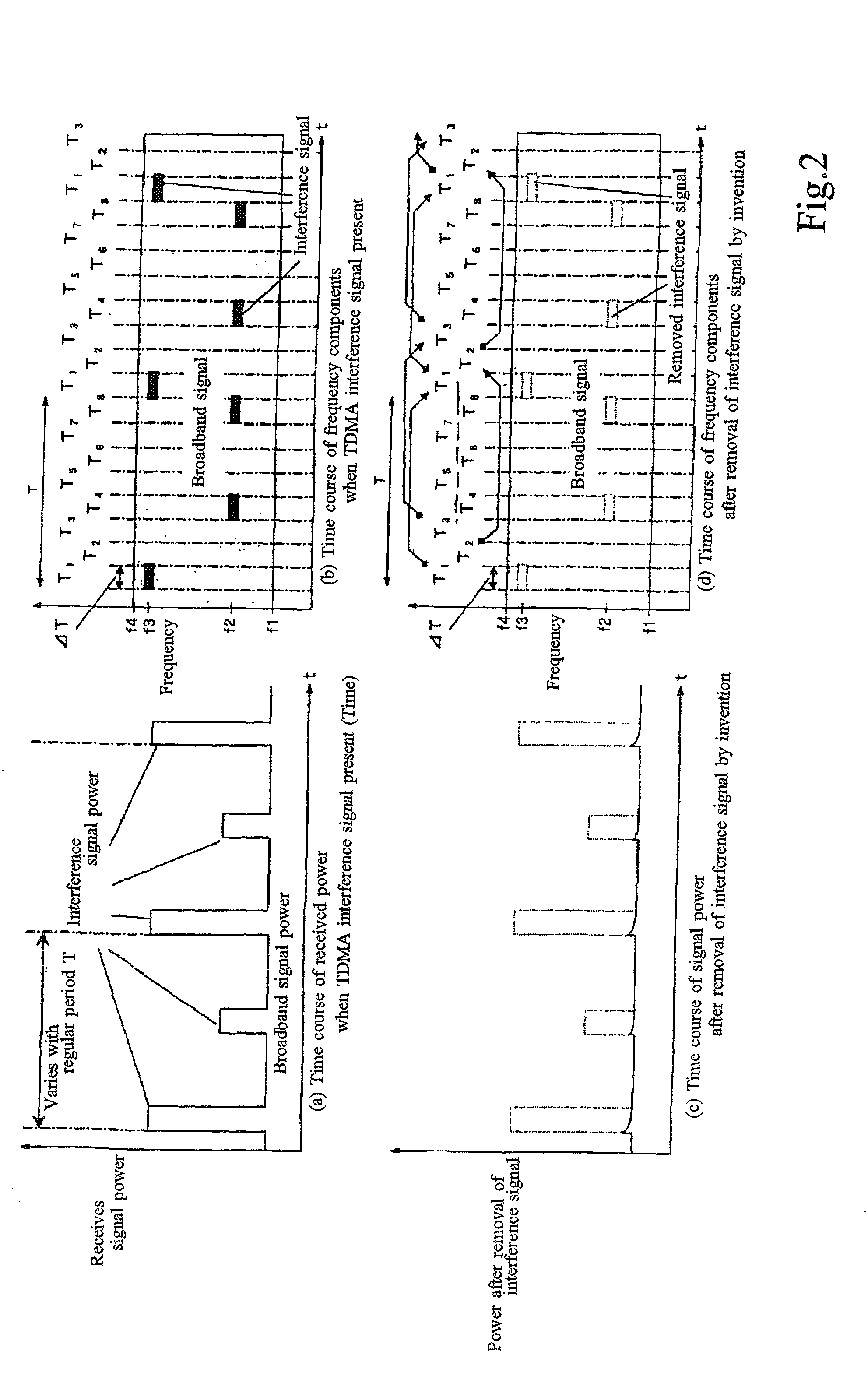

Interference signal removal system

Inactive · US20020196876A1 · Polarisation/directional diversity · Line-faults/interference reduction · Estimation result · Electrical and Electronics engineering

An interference signal removal system includes interference signal estimating means for estimating an interference signal contained in a received signal based on the received signal and a result obtained by removing an interference signal from the received signal, interference signal removing means for removing from the received signal the interference signal estimated by the interference signal estimating means; and interference signal estimation controlling means for storing the interference signal estimation result of the interference signal estimating means in memory and controlling the interference signal estimation by the interference signal estimating means so as to estimate an interference signal contained in the received signal based on a past interference signal estimation result stored in memory.

Owner:KOKUSAI ELECTRIC CO LTD

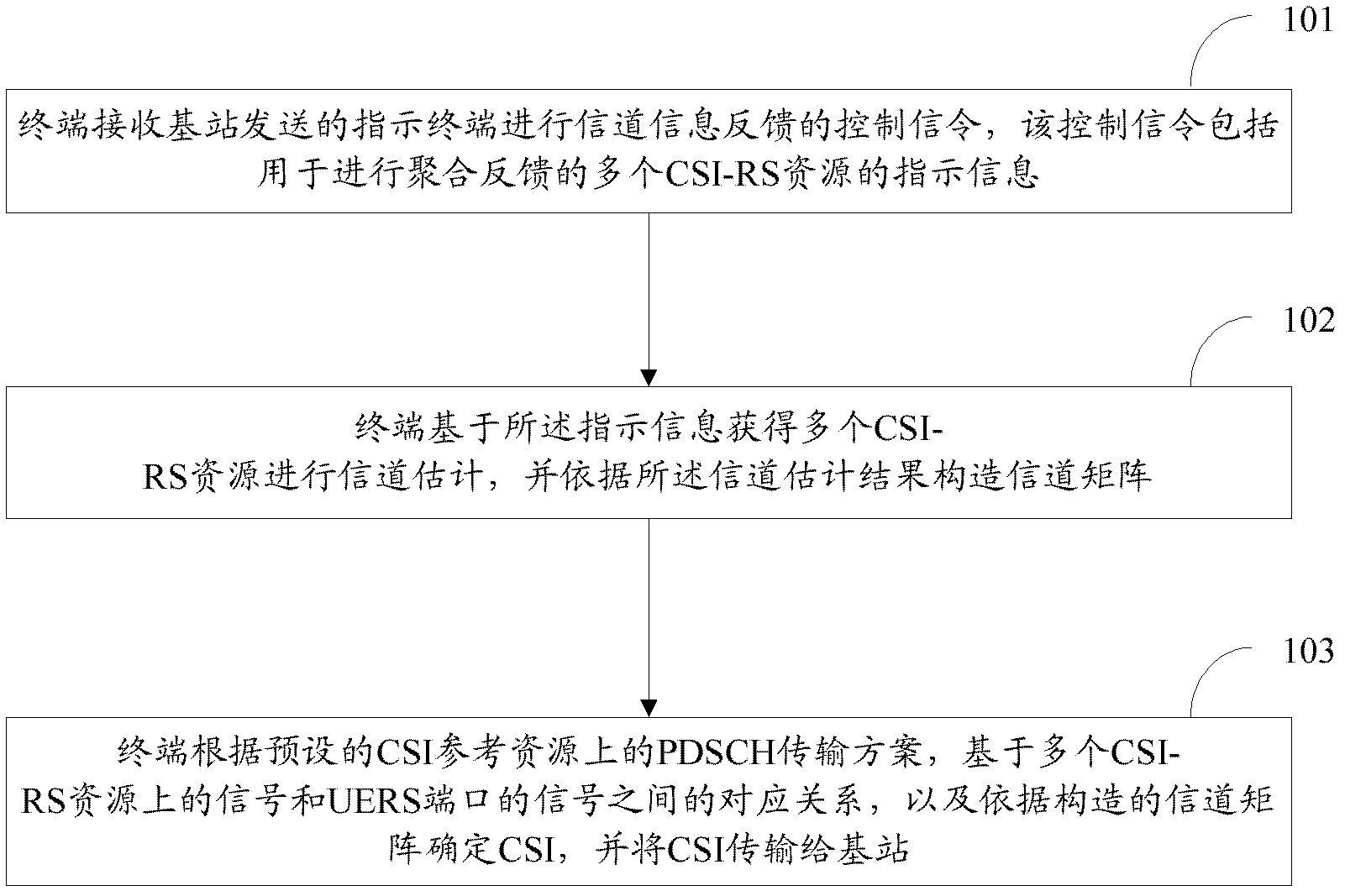

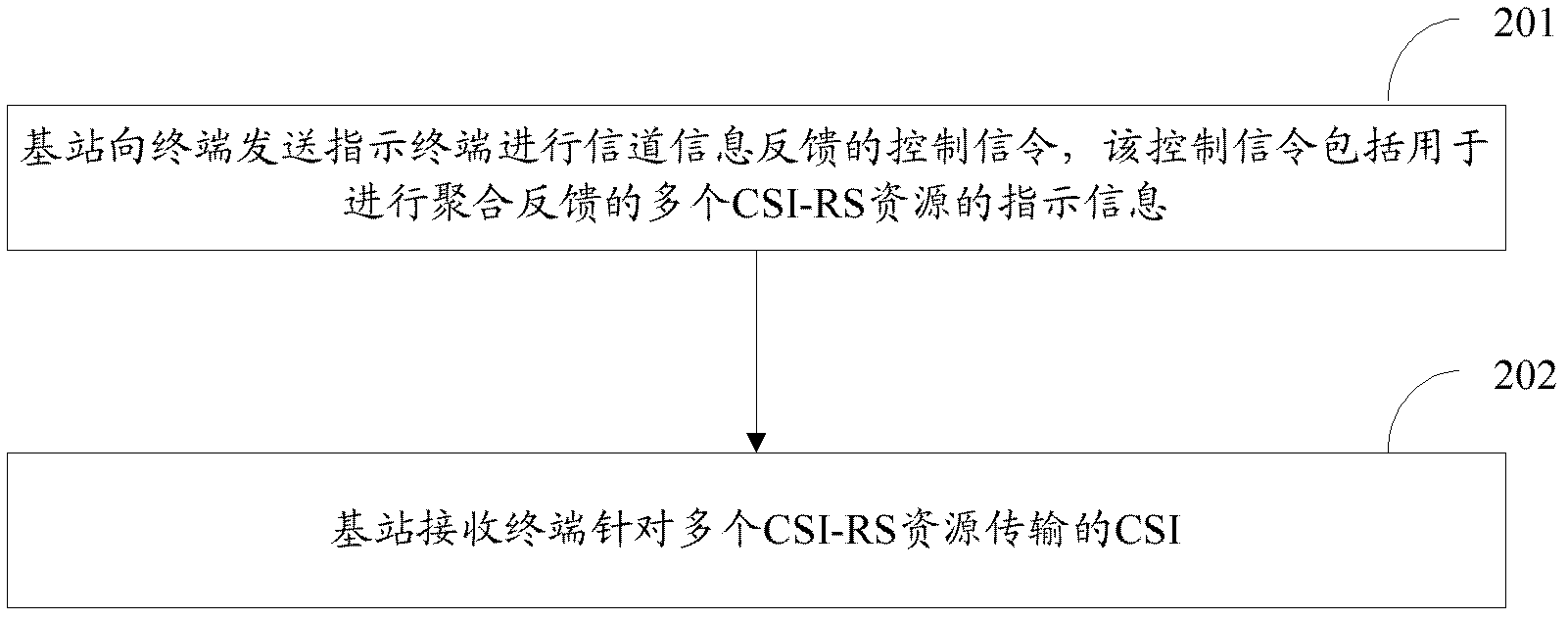

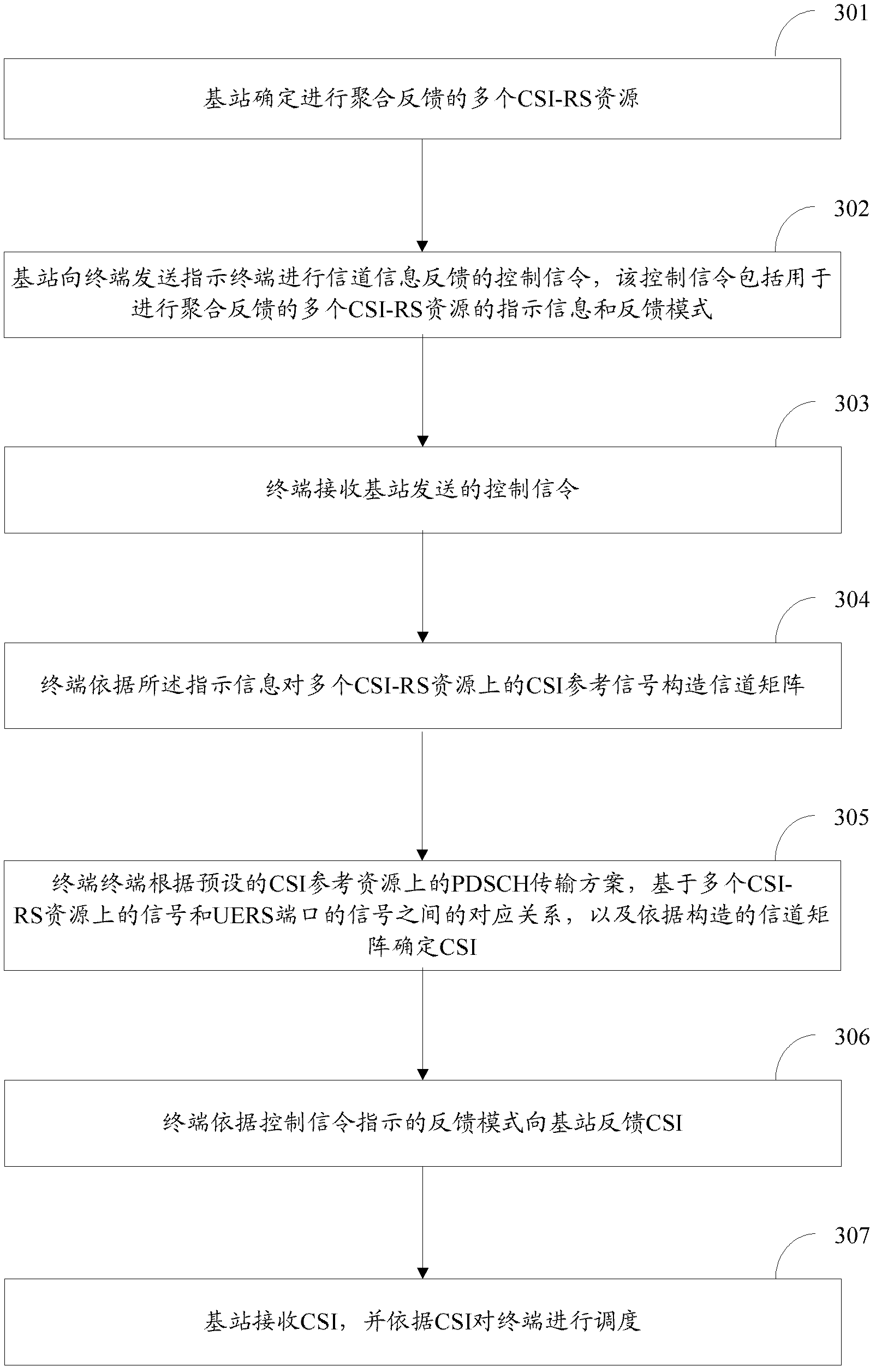

Channel state information transmission method and device

Inactive · CN102546110A · Site diversity · Spatial transmit diversity · Channel state information · Referral service

The invention discloses a channel state information transmission method for transmitting channel information that supports CoMP (coordinated multi-point) transmission. The method comprises the following steps: a terminal receives a control signal sent by a base station instructing the terminal to feed back channel information, the control signal including indication information for a plurality of CSI-RS (Channel State Information Reference Signal) resources used for aggregated feedback; the terminal obtains the plurality of CSI-RS resources from the indication information, performs channel estimation on them, and constructs a channel matrix from the channel estimation result; and the terminal determines the CSI from the correspondence between the signal on each of the CSI-RS resources and the signal of a UE-RS (UE-specific reference signal) port, a preset PDSCH (Physical Downlink Shared Channel) transmission scheme on a CSI reference resource, and the constructed channel matrix, and transmits the CSI to the base station. The invention also discloses a device for implementing the method.

Owner:CHINA ACAD OF TELECOMM TECH

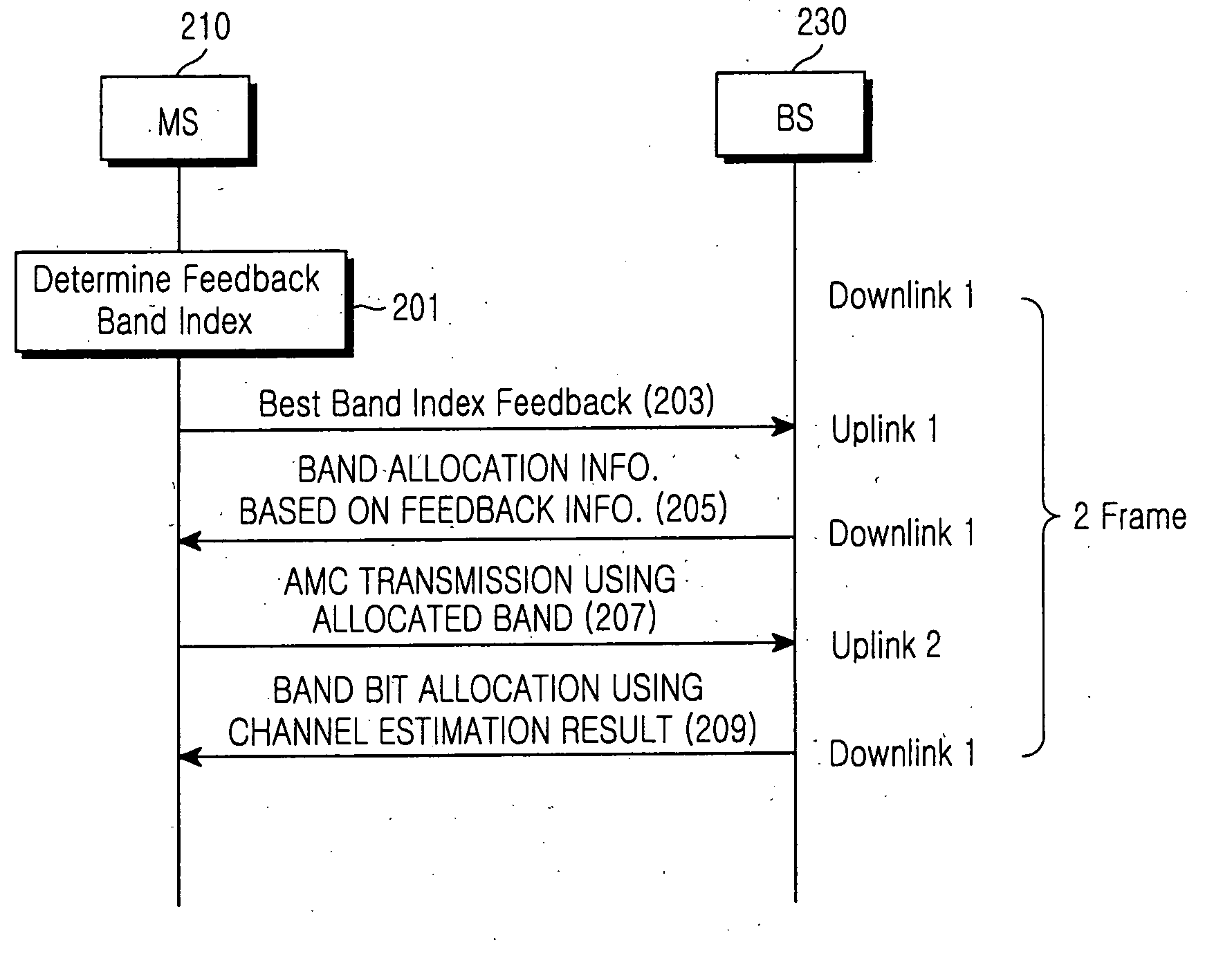

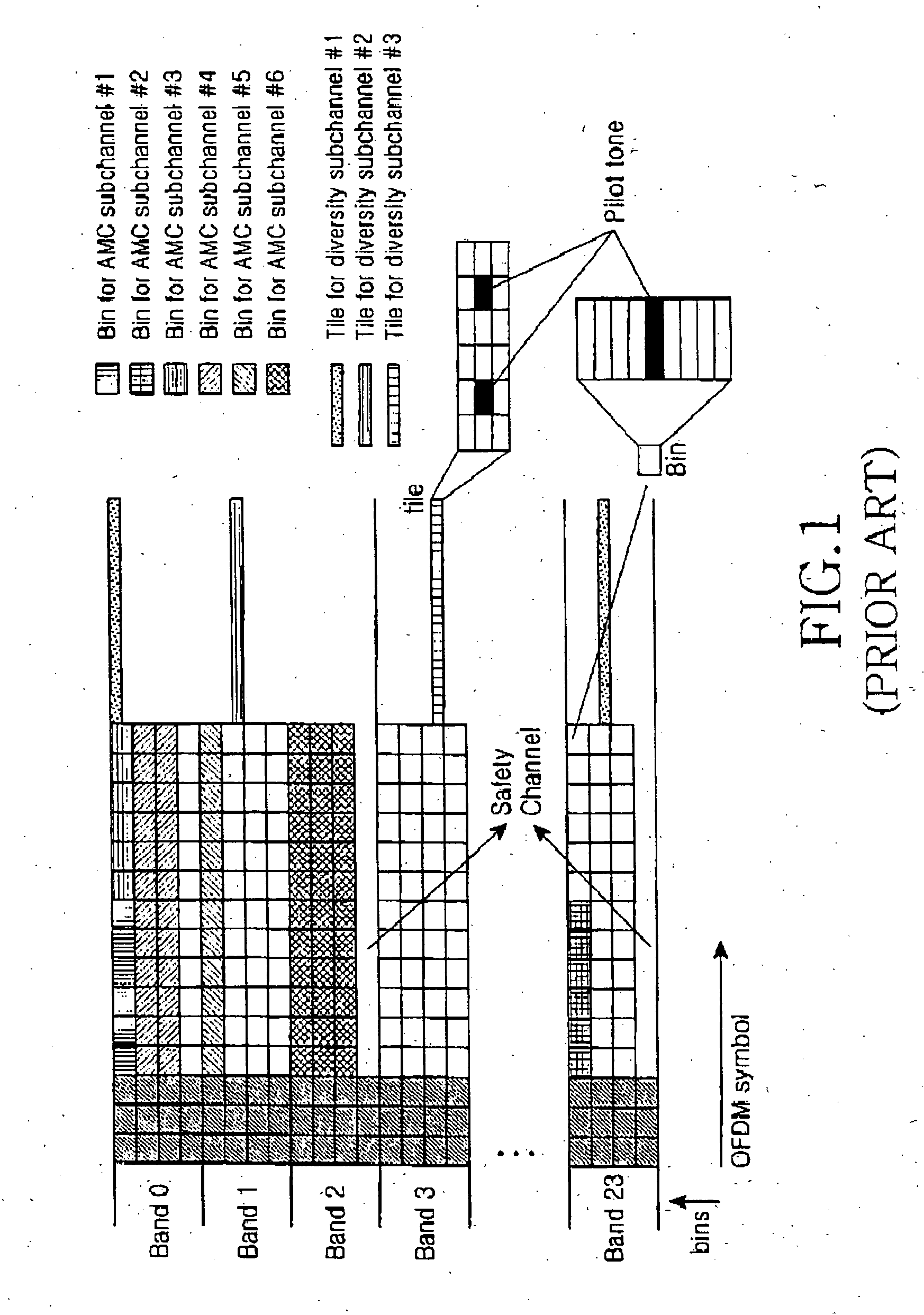

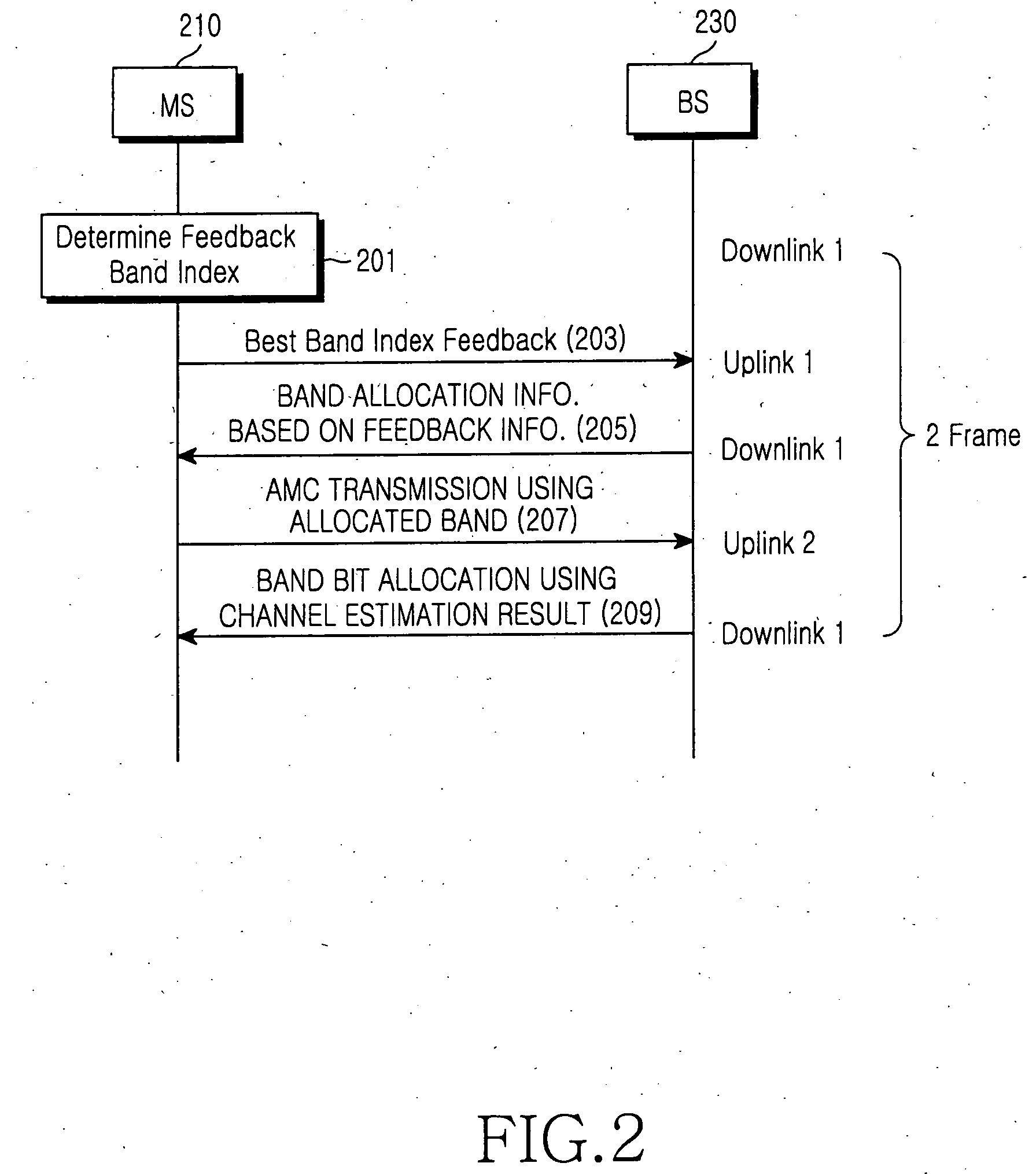

Adaptive subchannel and bit allocation method using partial channel information feedback in an orthogonal frequency division multiple access communication system

Active · US20060146856A1 · Improve transmission efficiency · High data rate · Network traffic/resource management · Transmission path division · Communications system · Bit allocation

An adaptive subchannel and bit allocation method in a wireless communication system. A mobile station analyzes channel quality information of a subchannel at a predetermined period and determines a feedback band index with a maximum decision criterion. The mobile station feeds back the determined feedback band index with the maximum decision criterion to a base station. The base station generates band allocation information using the feedback information from the mobile station, and transmits the band allocation information to the mobile station. The mobile station transmits AMC information using the band allocation information received from the base station. The base station estimates a channel using the AMC information transmitted from the mobile station and allocates bits to the allocated band according to the channel estimation result.

Owner:SAMSUNG ELECTRONICS CO LTD
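The two decisions in this scheme can be illustrated in a short sketch: the mobile station choosing a feedback band, and the base station allocating bits from its channel estimate. The sketch assumes that the "maximum decision criterion" is the highest mean SNR over a band. It also assumes that bits per subcarrier follow the SNR-gap approximation `floor(log2(1 + SNR / gap))`, capped at a maximum. Both are illustrative assumptions, as are the gap and cap values.

```python
import math
from typing import List, Sequence


def best_band(snr_db_per_subcarrier: Sequence[float], band_size: int) -> int:
    """Index of the band (group of band_size subcarriers) with the highest mean SNR."""
    n_bands = len(snr_db_per_subcarrier) // band_size
    means = [sum(snr_db_per_subcarrier[b * band_size:(b + 1) * band_size]) / band_size
             for b in range(n_bands)]
    return max(range(n_bands), key=means.__getitem__)


def allocate_bits(snr_db: Sequence[float], gap_db: float = 3.0, max_bits: int = 6) -> List[int]:
    """Bits per subcarrier from the estimated SNR via the SNR-gap approximation."""
    bits = []
    for s in snr_db:
        snr_lin = 10 ** ((s - gap_db) / 10)  # SNR after subtracting the gap, linear scale
        bits.append(min(max_bits, int(math.floor(math.log2(1 + snr_lin)))))
    return bits
```

Only the band index is fed back, which is the point of the partial-feedback design. Full per-subcarrier SNR is needed only at the base station after it estimates the channel from the AMC transmission.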

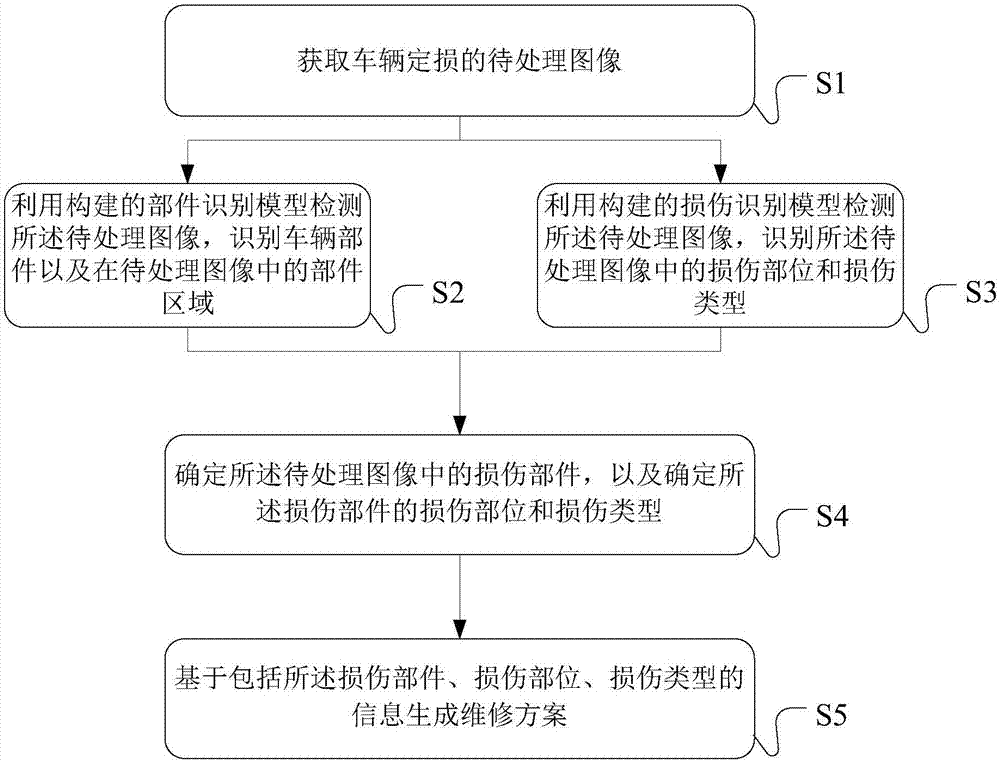

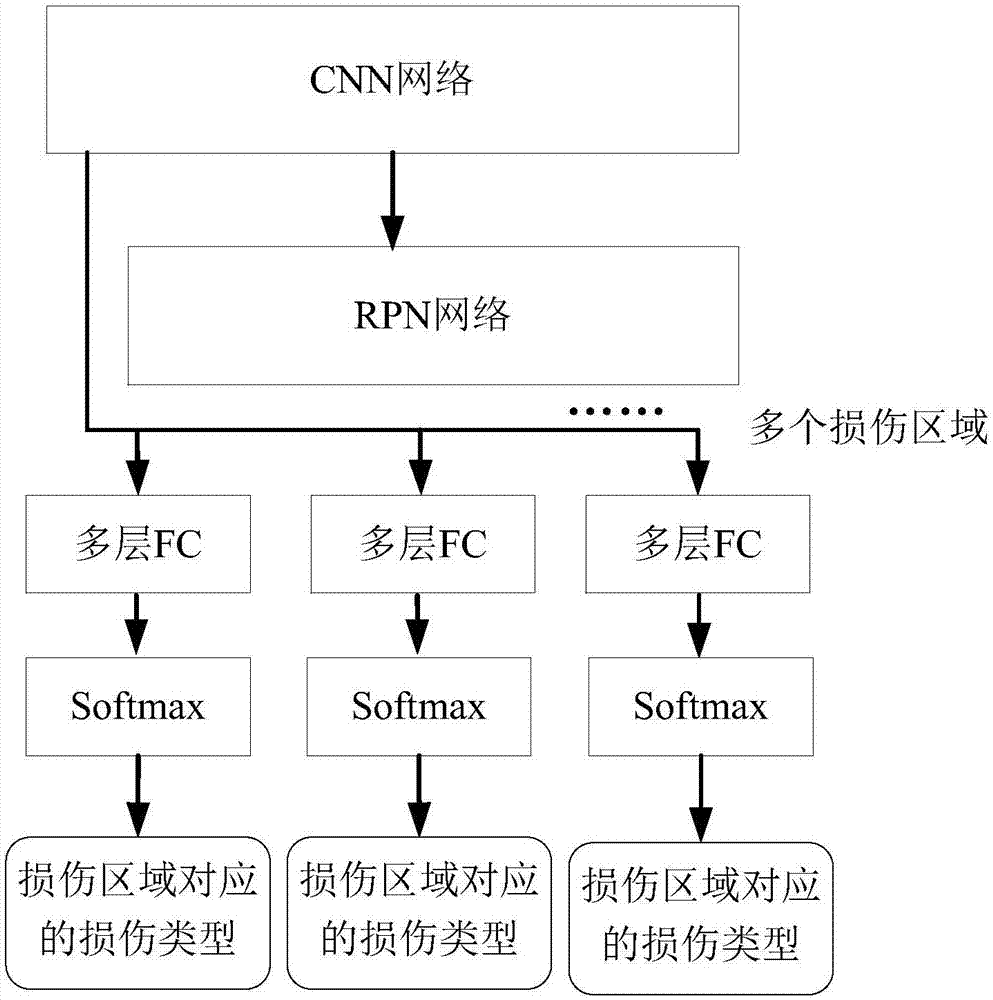

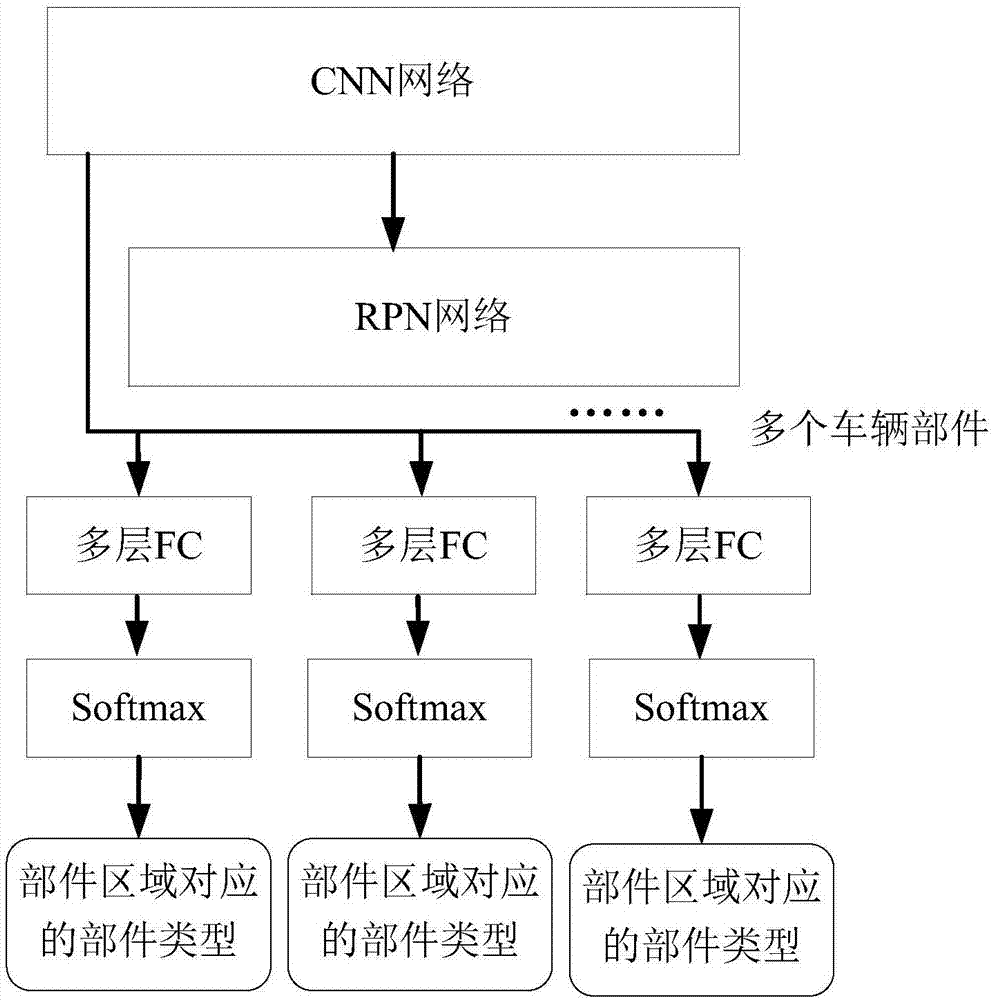

Image-based vehicle damage estimation method and device and electronic equipment

Active · CN107403424A · Improve service experience · Improve accuracy · Image enhancement · Image analysis · Estimation methods · Estimation result

Embodiments of the invention disclose an image-based vehicle damage estimation method and device and electronic equipment. The method comprises the following steps: obtaining a to-be-processed image for vehicle damage estimation; identifying a vehicle component in the image and determining the component area of that component in the image; recognizing damage parts and damage types in the image; determining the damaged component in the image and the corresponding damage part and damage type from the damage parts and the component area; and generating a maintenance scheme from information including the damaged component, the damage parts and the damage types. With the method, device and electronic equipment, specific information such as the damage parts and damage degrees of vehicle components can be detected quickly, accurately and reliably, making the damage estimation result more accurate and reliable, and maintenance scheme information is provided so that users can carry out vehicle damage estimation quickly and efficiently, greatly improving the user service experience.

Owner:ADVANCED NEW TECH CO LTD

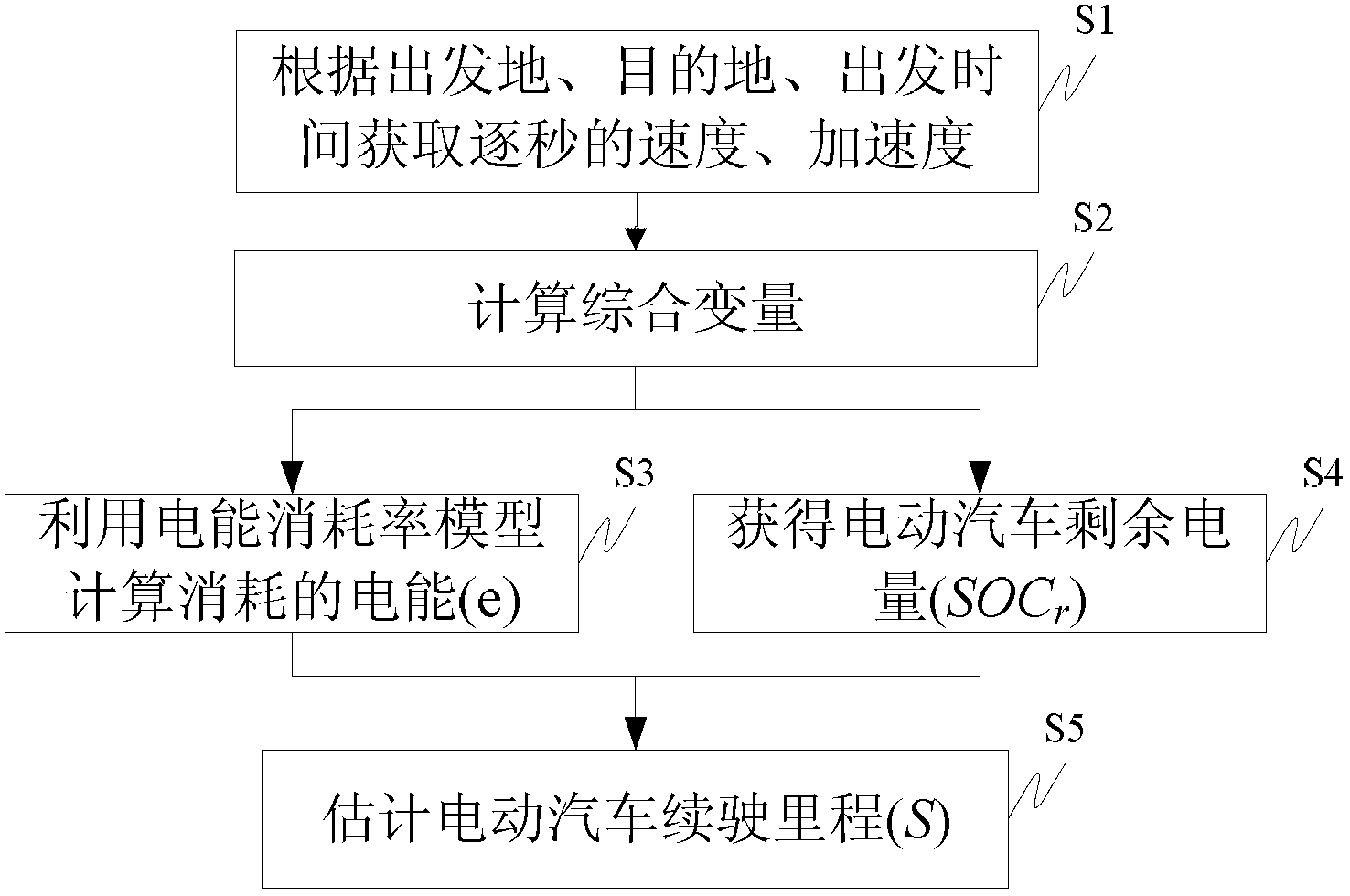

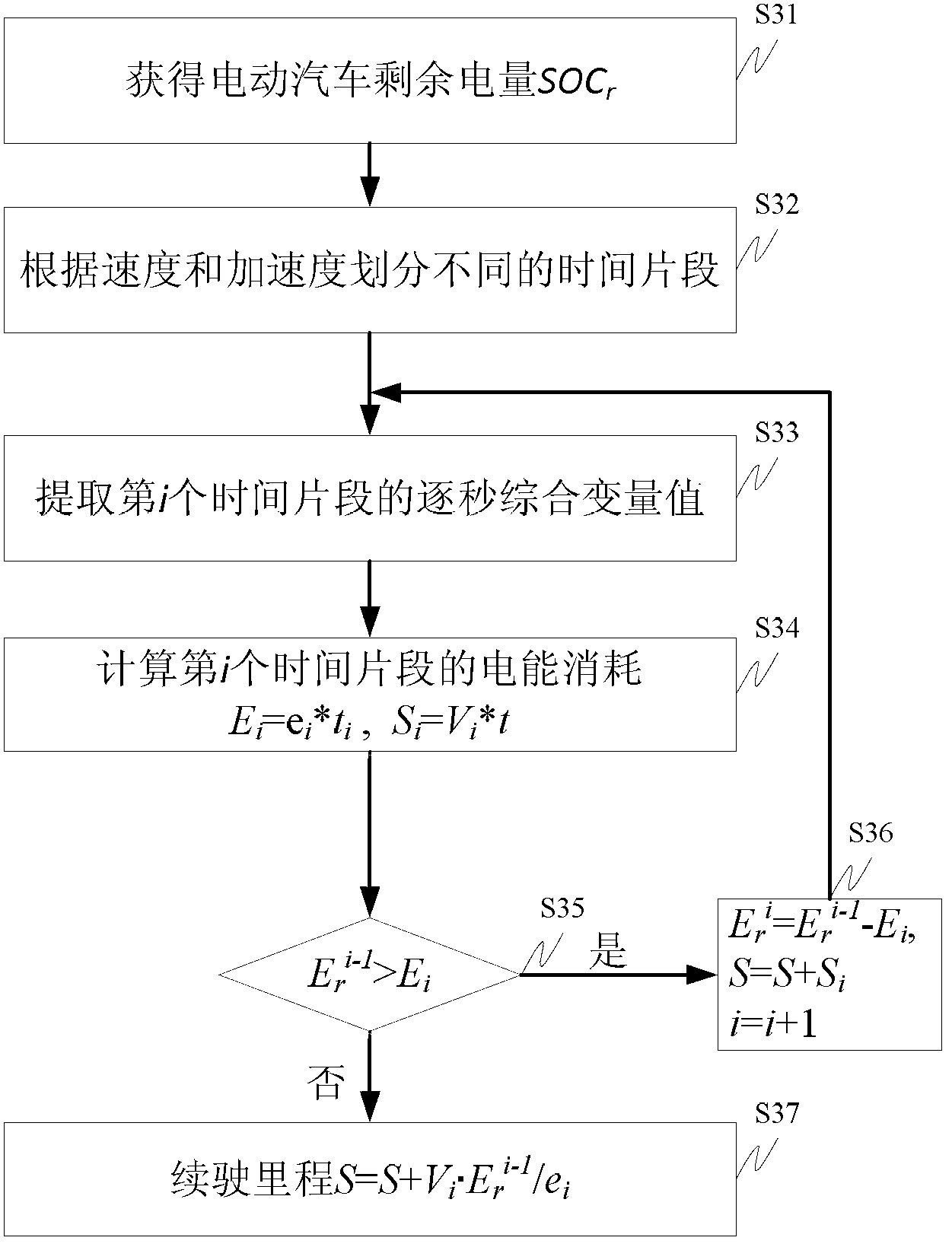

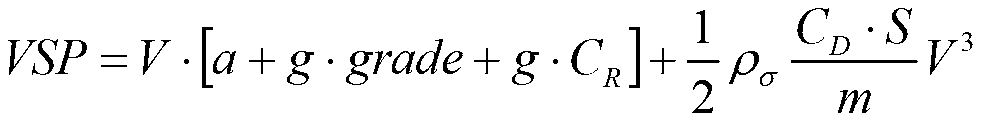

Driving range estimation method of electric car

The invention discloses a driving range estimation method for an electric car. The method comprises the following steps: 1.1 a planned route and the future microscopic traffic state of the route are obtained from a set departure location, destination and departure time; 1.2 an aggregate variable is calculated from the obtained per-second speed and acceleration; 1.3 a pre-built electric car electricity consumption rate model is selected according to the driving conditions, and the per-second electricity consumption is calculated using the aggregate variable; and 1.4 the residual charge of the battery is obtained, the residual battery energy is calculated, and the residual range is obtained by iterative calculation combined with the car's electricity consumption. The method takes into account the influence of the real traffic state on the energy consumption of the electric car and overcomes the insufficient accuracy of the estimation results of existing methods.

Owner:BEIJING JIAOTONG UNIV
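Step 1.4 can be illustrated by walking a per-second speed profile and subtracting energy until the battery is exhausted. The consumption model is passed in as a hypothetical callable `power_kw(speed, accel)` standing in for the condition-specific model of step 1.3. Regenerative braking is ignored (negative power is clipped to zero). These are assumptions, not the patent's model.

```python
from typing import Callable, Sequence


def remaining_range_km(speeds_mps: Sequence[float], battery_kwh: float,
                       usable_soc: float, power_kw: Callable[[float, float], float]) -> float:
    """Walk a per-second speed profile, subtracting energy until the battery runs out.

    Returns the distance covered; if the profile ends first, that is the route length.
    """
    energy_kj = battery_kwh * usable_soc * 3600.0  # kWh -> kJ
    distance_m = 0.0
    for k, v in enumerate(speeds_mps):
        prev = speeds_mps[k - 1] if k > 0 else v
        accel = v - prev  # m/s^2 over a 1 s step
        step_kj = max(power_kw(v, accel), 0.0) * 1.0  # kW * 1 s = kJ; no regeneration
        if step_kj > energy_kj:
            break
        energy_kj -= step_kj
        distance_m += v * 1.0
    return distance_m / 1000.0
```

A constant 10 kW draw at 10 m/s with 1 kWh usable lasts 360 s, which gives 3.6 km.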

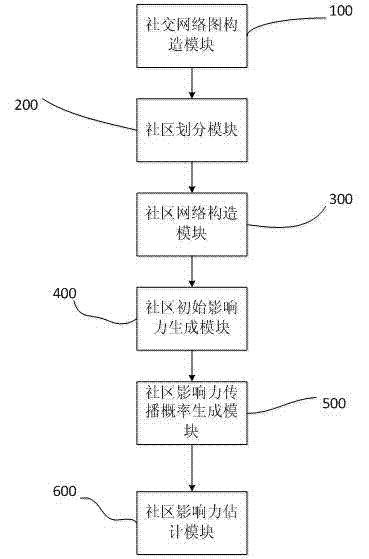

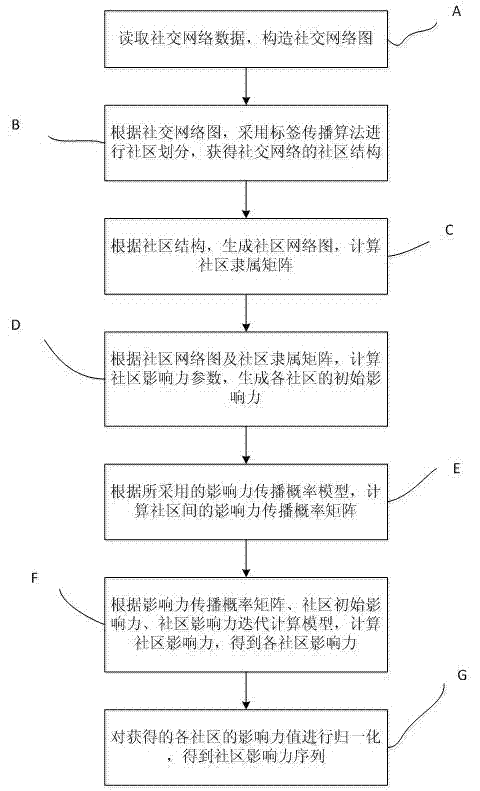

Evaluating system and method for community influence in social network

Inactive · CN103678669A · Reasonable assessment · Data processing applications · Website content management · Social graph · Computational model

The invention relates to an evaluation system and method for community influence in a social network. The method comprises the following steps: a social network graph is built with social network users as nodes and user relationships as edges; community division is performed on the graph with the label propagation algorithm to obtain the community structure of the social network; the community influence parameters are calculated from the social network graph and the community membership matrix, and the initial influence of each community is generated; an influence transmission probability matrix is generated from the influence transmission probability model; and, using the influence transmission probability matrix and the iterative community influence computation model, the community influence is iteratively updated until the termination condition is met, giving the influence value of each community, which after normalization yields the ranking of community influence, namely the influence estimation result of each community in the social network. The system and method can effectively analyze the distribution of community influence in a social network and can be used to mine high-influence communities, so they can be applied in fields such as network marketing.

Owner:FUZHOU UNIV
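The iterate-until-converged step can be sketched with NumPy, assuming a PageRank-style update. Each round keeps a share `1 - d` of the initial influence and receives a share `d` through the transmission probability matrix. The damping form, `d = 0.85`, and the stopping tolerance are assumptions; the patent's iterative model may differ. Community division by label propagation is not shown.

```python
import numpy as np


def community_influence(P: np.ndarray, initial: np.ndarray, d: float = 0.85,
                        tol: float = 1e-10, max_iter: int = 1000) -> np.ndarray:
    """Iterate community influence until it stops changing, then normalize to sum to 1.

    P[i, j] is the probability that influence passes from community i to j
    (rows sum to 1); `initial` holds each community's initial influence.
    """
    infl = initial.astype(float)
    for _ in range(max_iter):
        new = (1 - d) * initial + d * P.T @ infl
        if np.abs(new - infl).sum() < tol:  # termination condition
            infl = new
            break
        infl = new
    return infl / infl.sum()
```

Sorting the returned vector in descending order gives the influence ranking. A community that receives influence from many others ends up ranked first.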

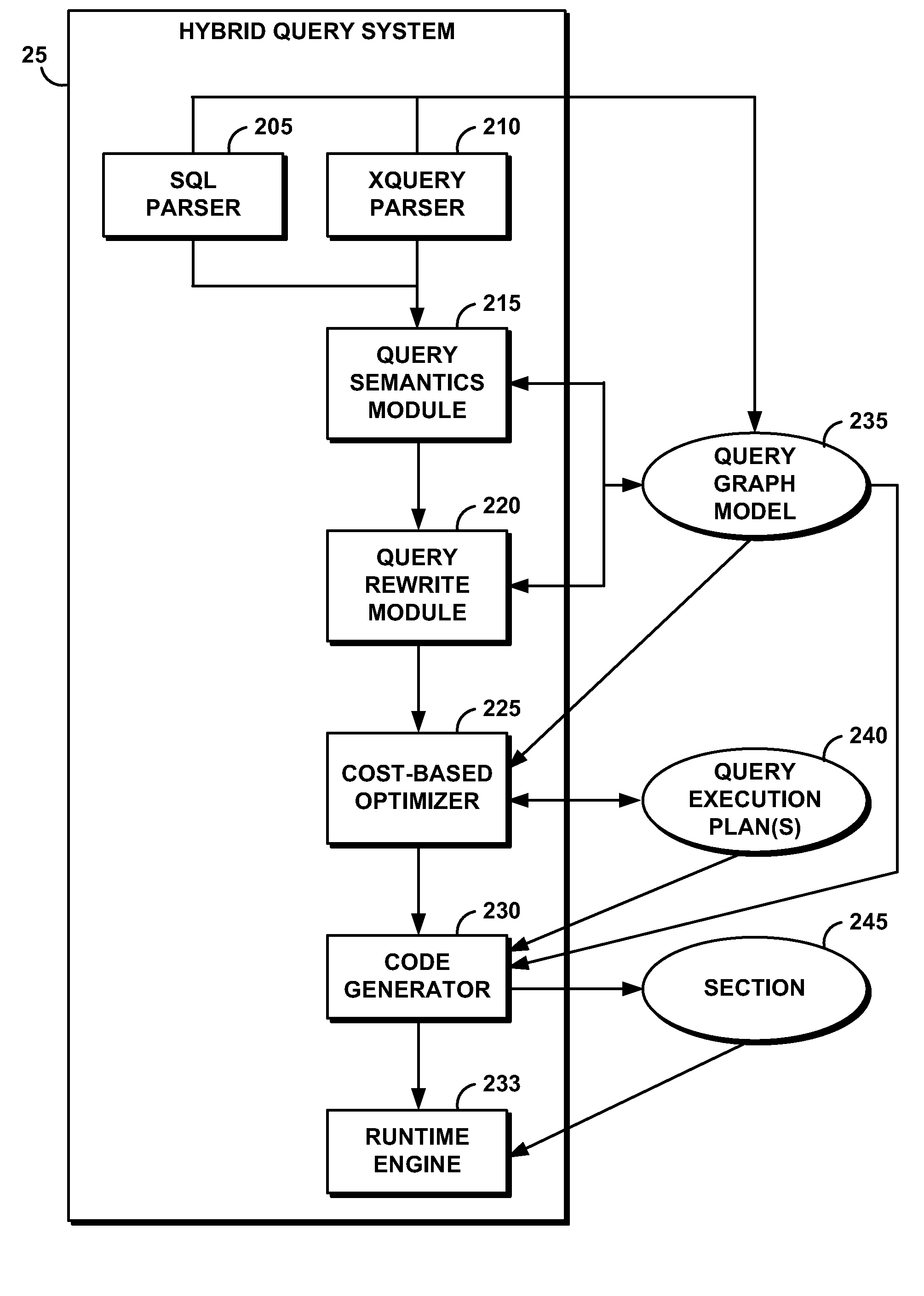

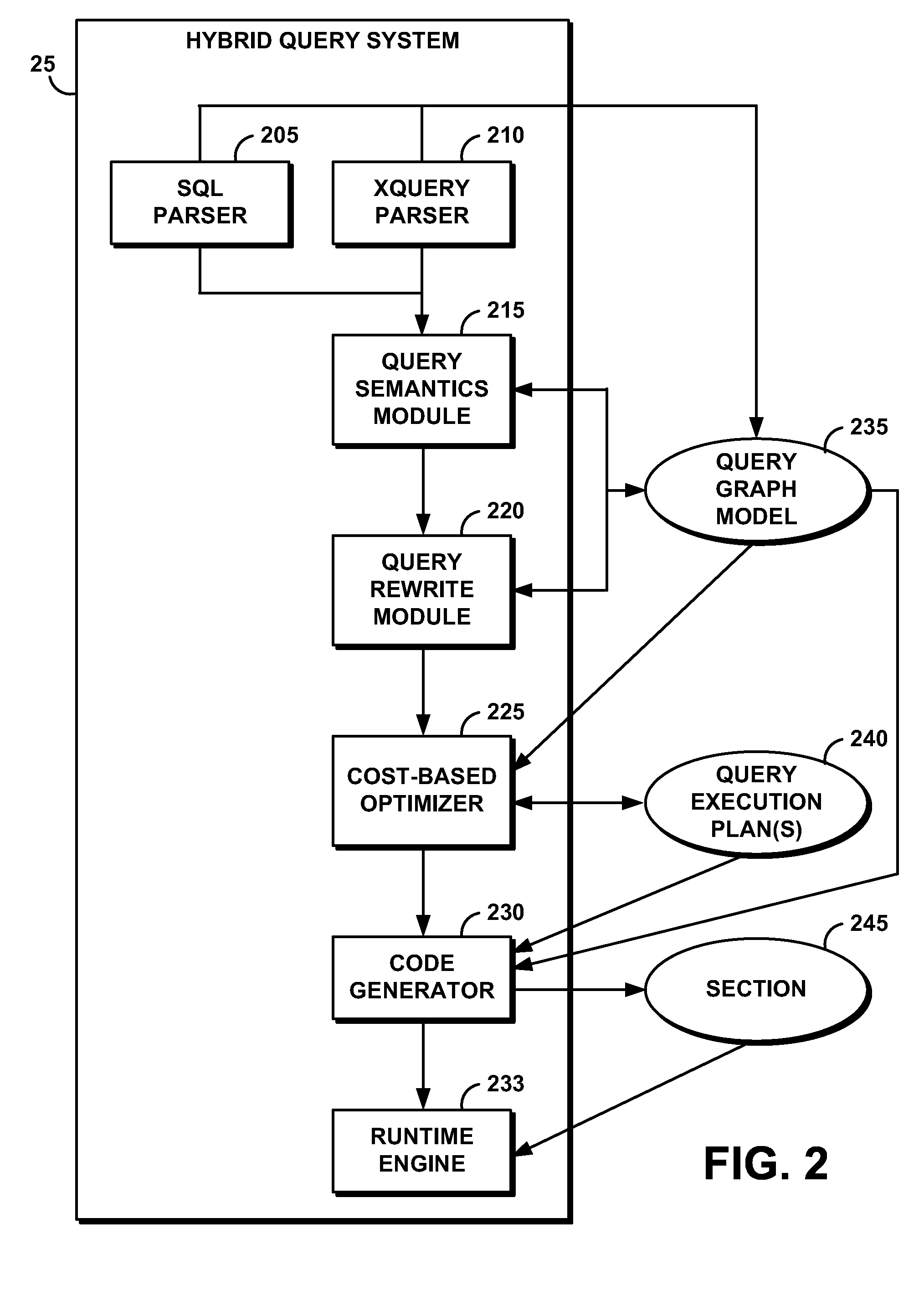

System and Method for Optimizing Query Access to a Database Comprising Hierarchically-Organized Data

Inactive · US20080222087A1 · Easy access · Digital data information retrieval · Special data processing applications · Execution plan · Theoretical computer science

A cost-based optimizer optimizes access to at least a portion of hierarchically-organized documents, such as those formatted using eXtensible Markup Language (XML), by estimating the number of results produced by the access of the hierarchically-organized documents. Estimating the number of results comprises computing the cardinality of each operator executing query language expressions and further computing the sequence size of the sequences of hierarchically-organized nodes produced by the query language expressions. Access to the hierarchically-organized documents is optimized using the structure of the query expression and/or path statistics involving the hierarchically-organized data. The cardinality and the sequence size are used to calculate a cost estimate for executing alternate query execution plans. Based on the cost estimate, an optimal query execution plan is selected from among the alternate query execution plans.

Owner:IBM CORP

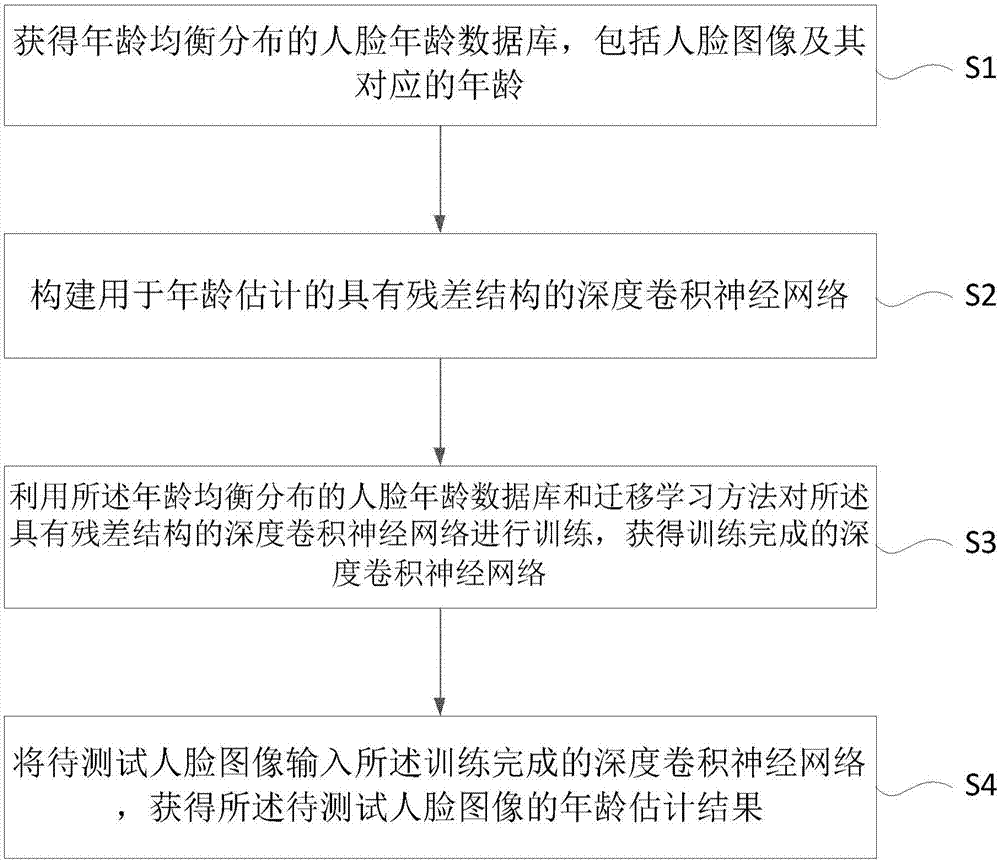

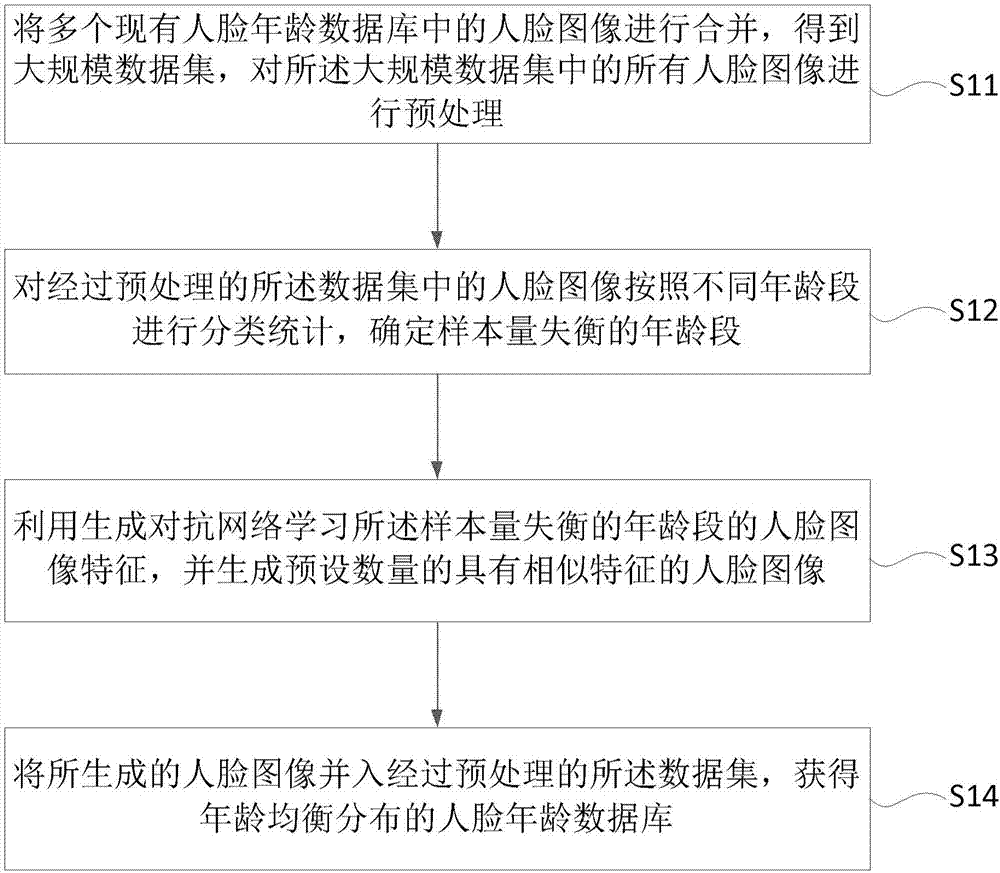

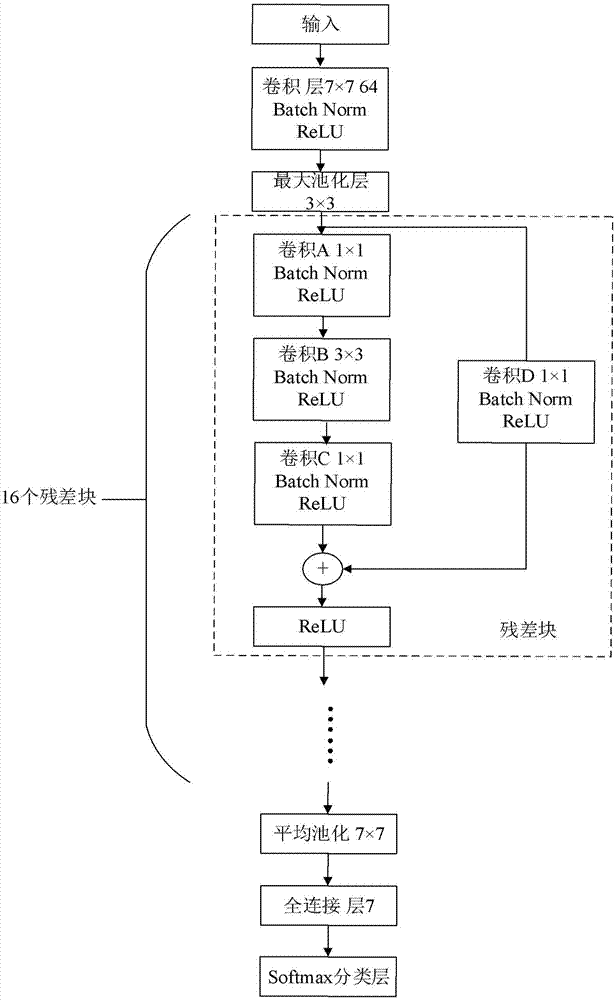

Age estimation method and device

Inactive · CN107545245A · Realize automatic extraction · Improve accuracy · Character and pattern recognition · Neural architectures · Estimation result · Convolutional neural network

The invention discloses an age estimation method and device. The method comprises inputting a pre-processed human face image under test into a trained deep convolutional neural network and acquiring the age estimation result for that image. The method and device yield highly accurate age estimation results.

Owner:INST OF SEMICONDUCTORS - CHINESE ACAD OF SCI

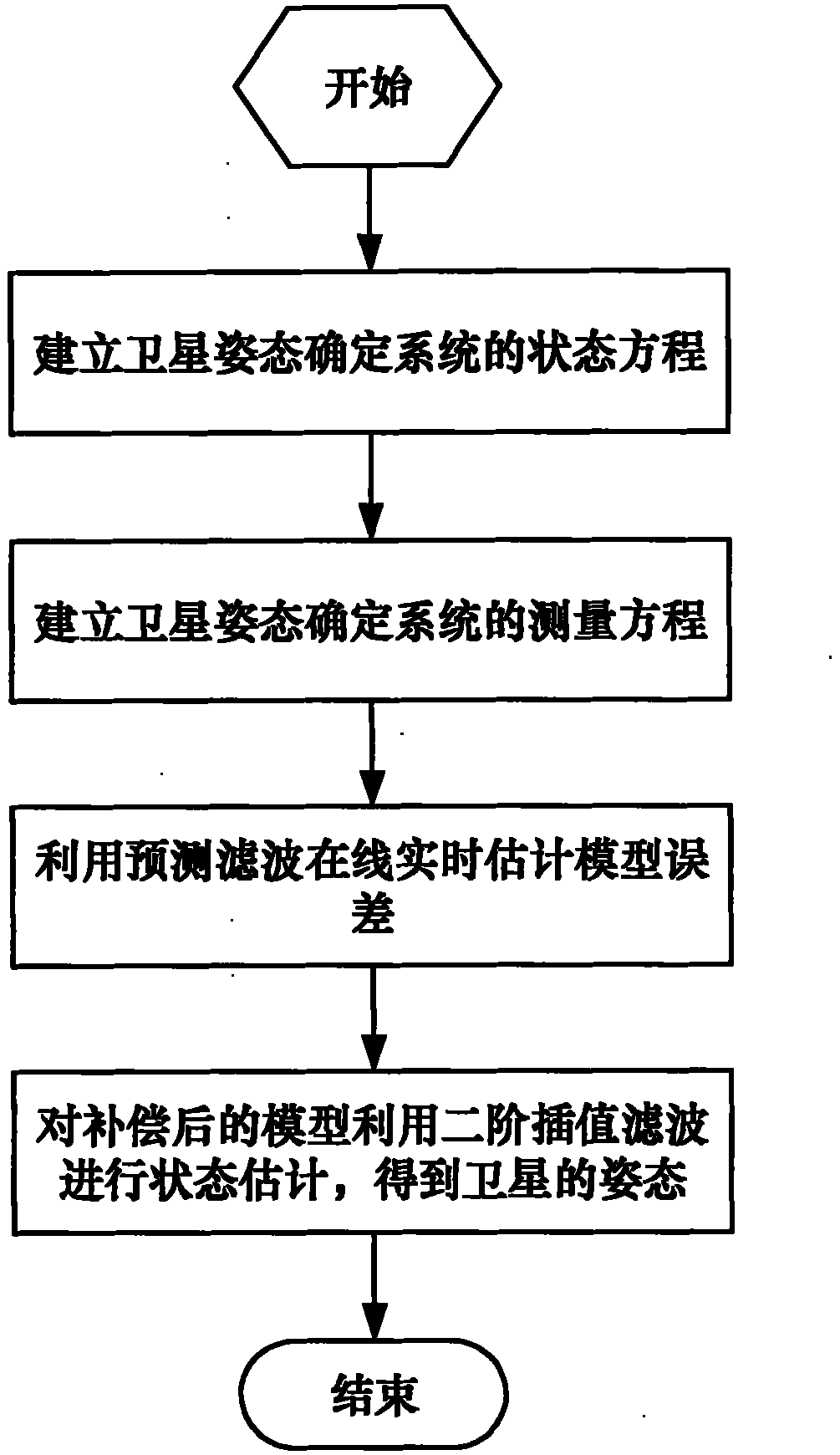

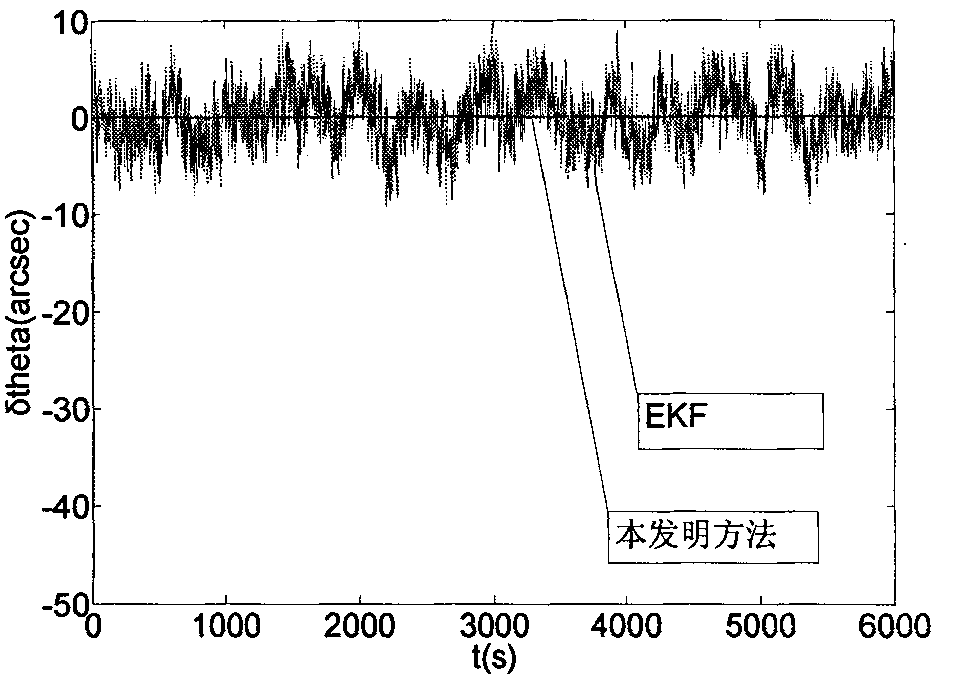

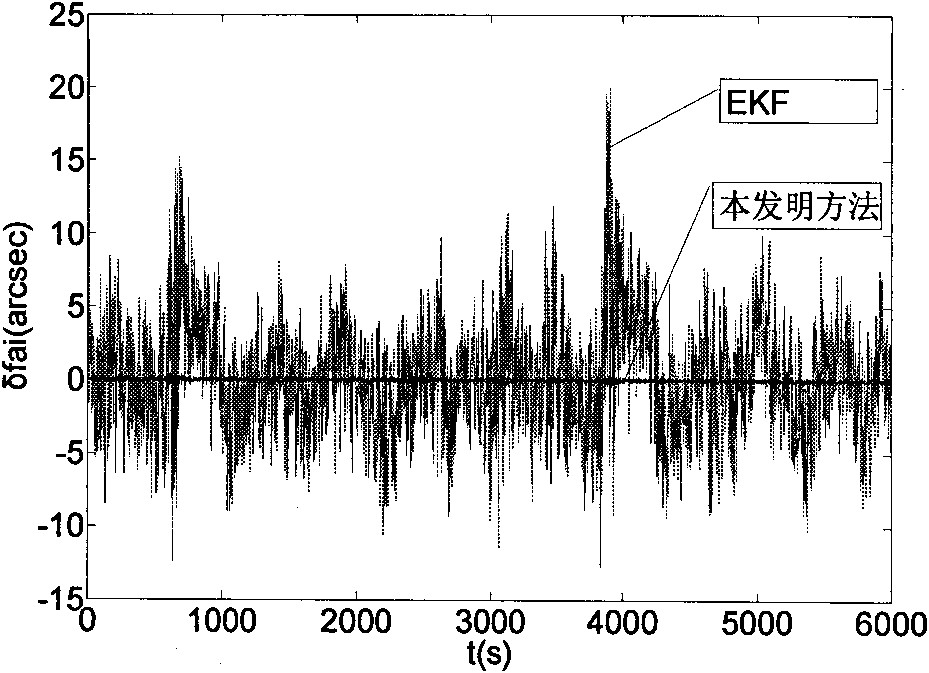

High-precision satellite attitude determination method based on star sensor and gyroscope

Inactive · CN101846510A · Overcome the disadvantage of error processing as zero-mean white noise · High precision · Angle measurement · Navigation instruments · Gyroscope · Zero mean

The present invention discloses a high-precision satellite attitude determination method based on a star sensor and a gyroscope, which comprises the following steps: step 1, establishing a state equation of the satellite attitude determination system; step 2, establishing a measurement equation of the satellite attitude determination system; step 3, performing online real-time model error estimation through predictive filtering; and step 4, performing state estimation on the compensated model through second-order interpolation filtering to obtain the attitude of the satellite. By using predictive filtering to estimate the model error online in real time and correct the system model, the invention overcomes the shortcoming of conventional estimation, which treats the model error as zero-mean white noise. In addition, the invention can handle arbitrary nonlinear systems and noise conditions, obtains a more precise estimation result, and is applicable to the field of high-precision attitude determination.

Owner:BEIHANG UNIV

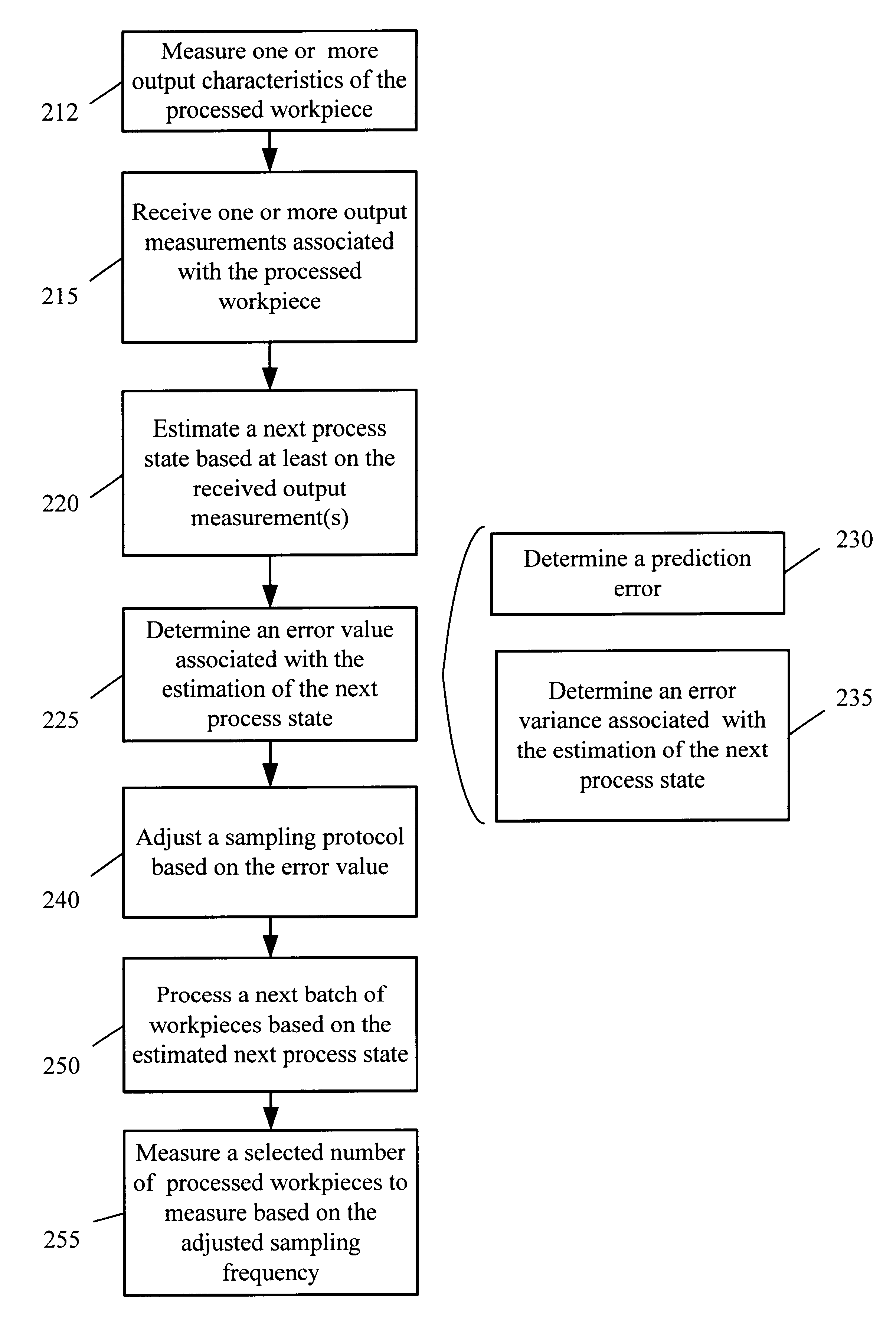

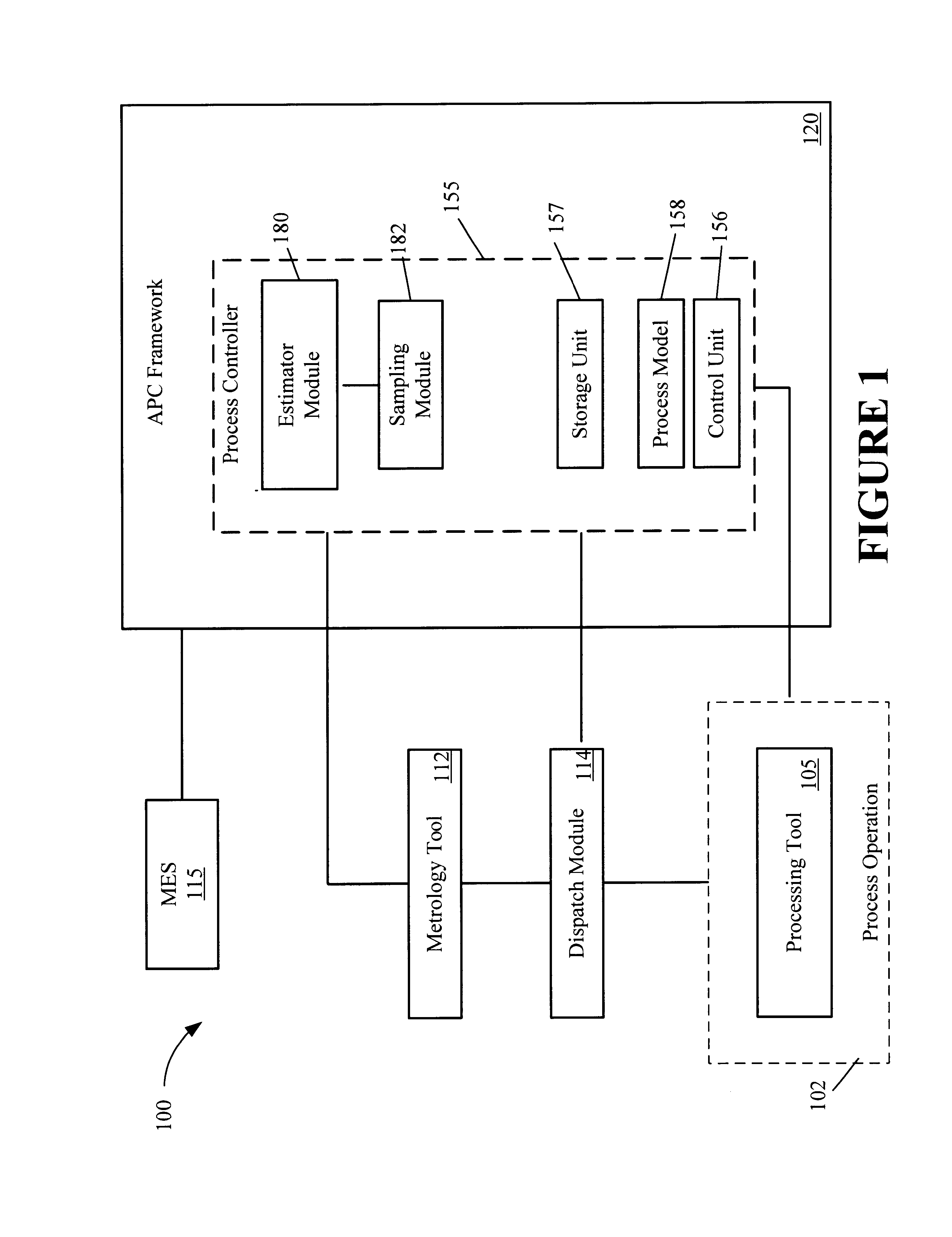

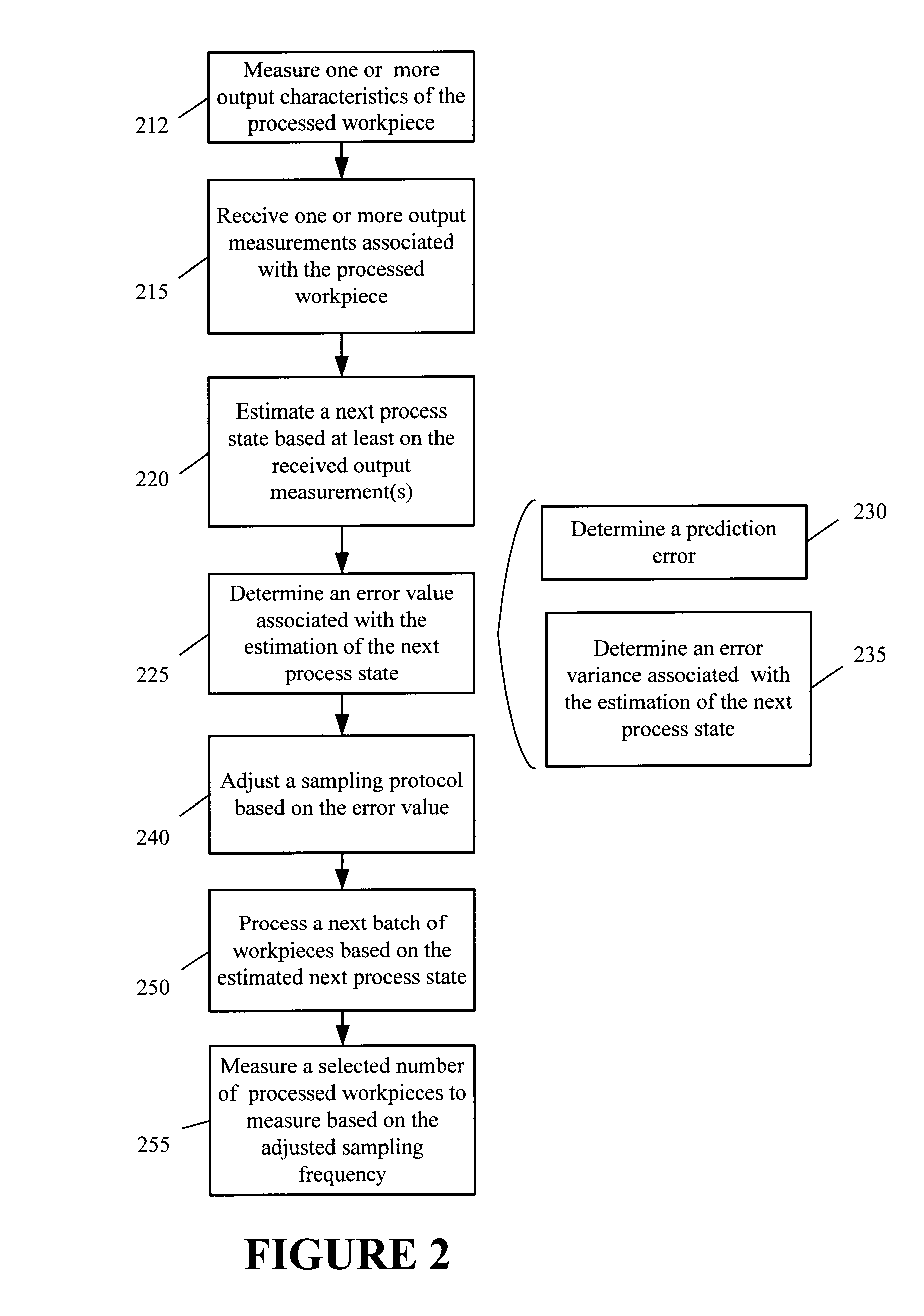

Adjusting a sampling rate based on state estimation results

Inactive · US6766214B1 · Semiconductor/solid-state device testing/measurement · Semiconductor/solid-state device manufacturing · Metrology · State dependent

A method and an apparatus are provided for adjusting a sampling rate based on a state estimation result. The method comprises receiving metrology data associated with processing of workpieces, estimating a next process state based on at least a portion of the metrology data and determining an error value associated with the estimated next process state. The method further comprises processing a plurality of workpieces based on the estimated next process state and adjusting a sampling protocol of the processed workpieces that are to be measured based on the determined error value.

Owner:FULLBRITE CAPITAL PARTNERS
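The loop of estimating the next state, measuring the error, and adjusting sampling can be sketched minimally. The sketch uses an exponentially weighted moving average (EWMA) as the state estimator, a common run-to-run choice but an assumption here. It also maps the prediction error linearly onto the fraction of workpieces to measure, between hypothetical minimum and maximum fractions.

```python
def ewma_update(estimate: float, measurement: float, alpha: float = 0.3) -> float:
    """EWMA estimate of the next process state (alpha weights the newest measurement)."""
    return alpha * measurement + (1 - alpha) * estimate


def sampling_fraction(error: float, tolerance: float,
                      min_frac: float = 0.1, max_frac: float = 1.0) -> float:
    """Measure more of the processed workpieces as the prediction error grows.

    The fraction rises linearly from min_frac (zero error) to max_frac
    (error at or beyond the tolerance).
    """
    return min_frac + (max_frac - min_frac) * min(abs(error) / tolerance, 1.0)
```

In use, the predicted state is compared with the next metrology reading. The error sets the sampling fraction for the following lot, and the reading updates the estimate. A stable process is then sampled lightly, and a drifting one is sampled heavily.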

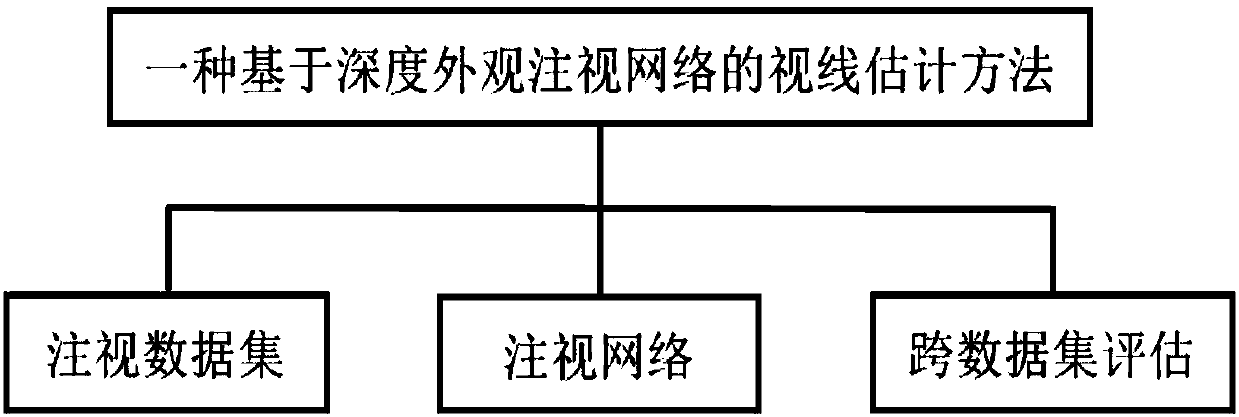

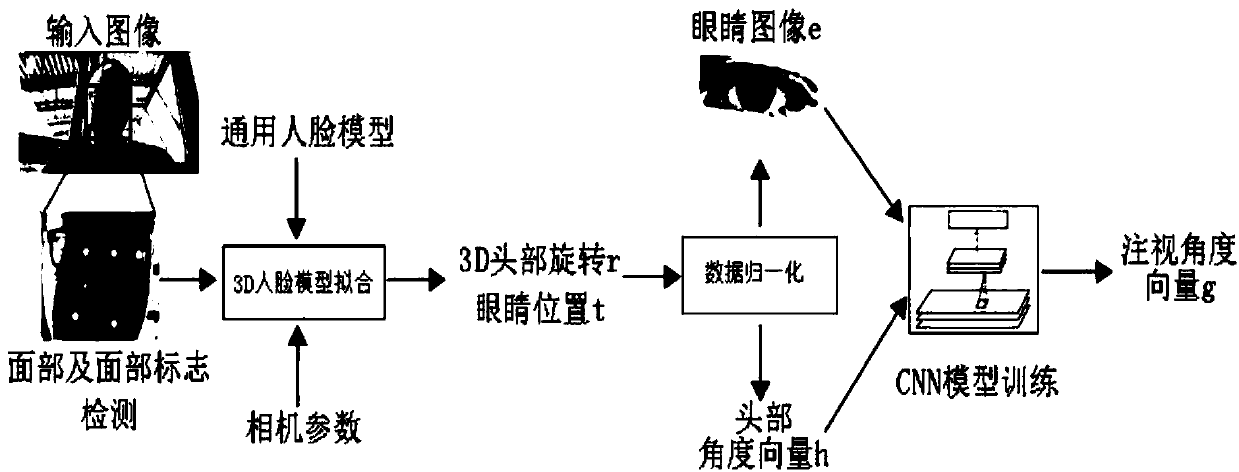

Line-of-sight estimation method based on a deep appearance-based gaze network

The invention provides a line-of-sight estimation method based on a deep appearance-based gaze network. The main content of the method includes a gaze data set, a gaze network, and cross-data-set evaluation. The method comprises the following steps: a large number of images from different participants are collected as a gaze data set, and facial landmarks are manually annotated on subsets of the data set; face calibration is performed on input images obtained from a monocular RGB camera, with a face detection method and a facial landmark detection method used to locate the landmarks; a generic three-dimensional face shape model is fitted to estimate the three-dimensional head pose of the detected face; a spatial normalization technique is applied to warp the head pose and eye images into a normalized training space; and a convolutional neural network learns the mapping from head pose and eye images to three-dimensional gaze in the camera coordinate system. The method uses a continuous conditional neural network model to detect the facial landmarks and an average face shape for three-dimensional pose estimation; it is suitable for line-of-sight estimation in different environments and improves the accuracy of the estimation results.

Owner:SHENZHEN WEITESHI TECH

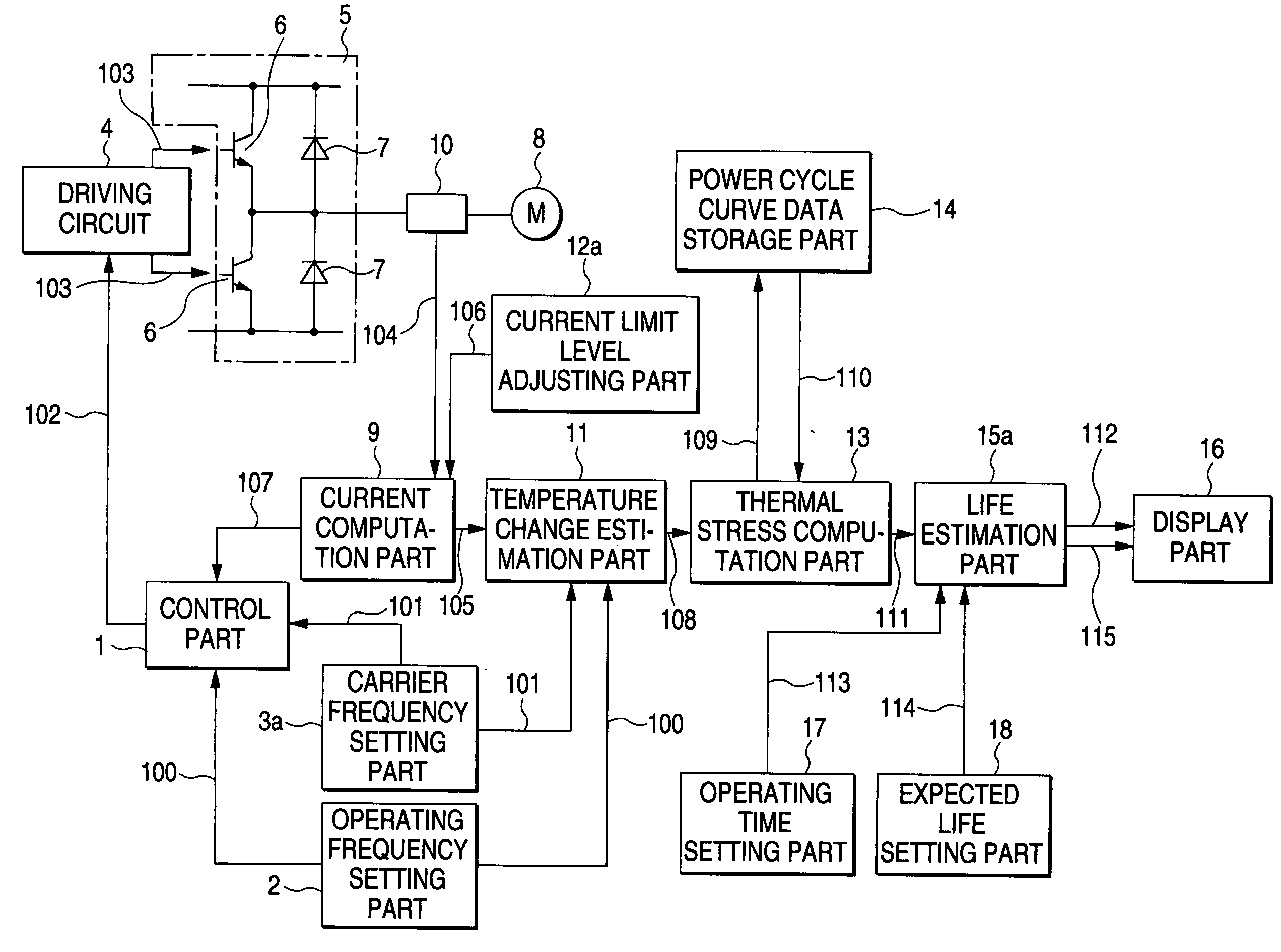

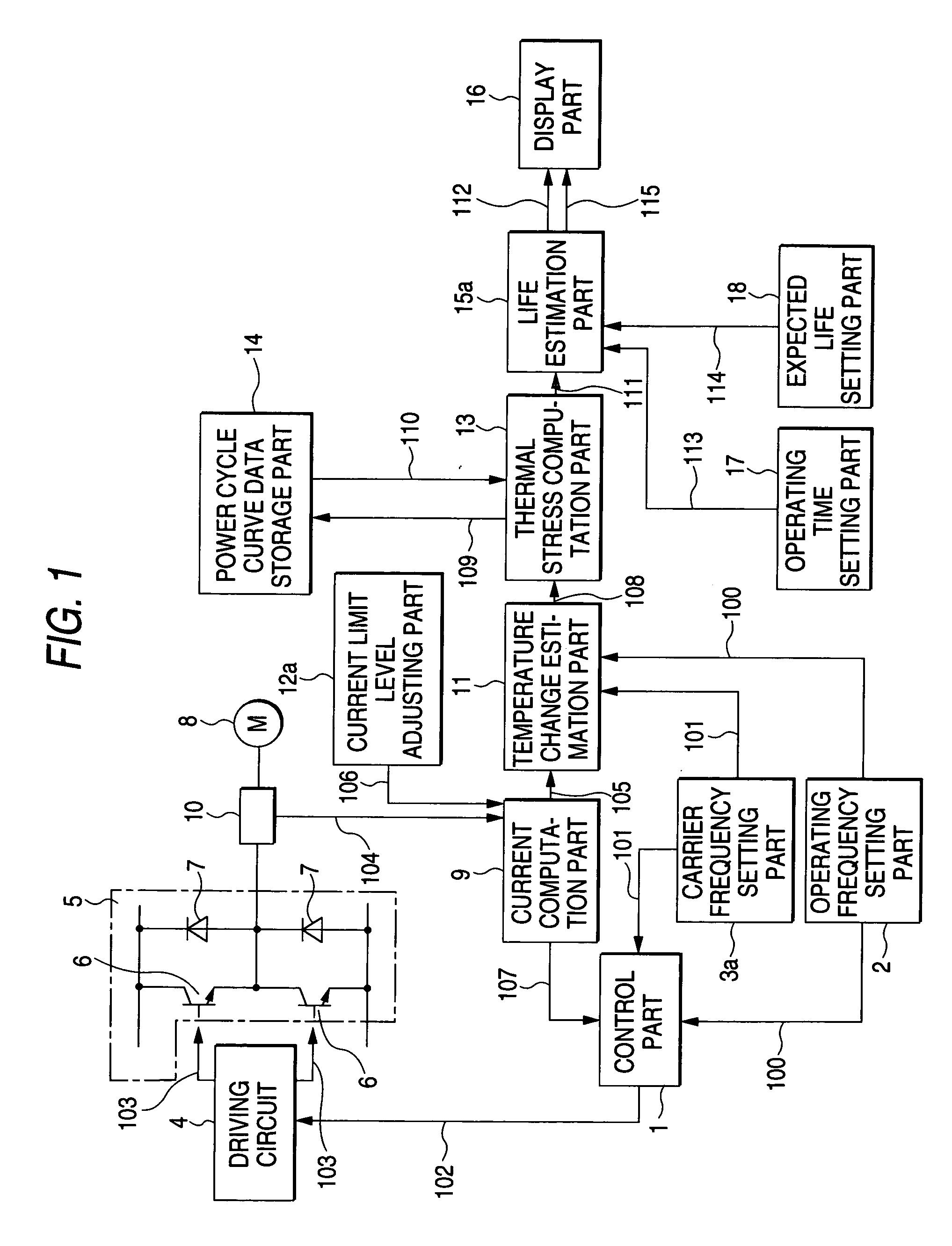

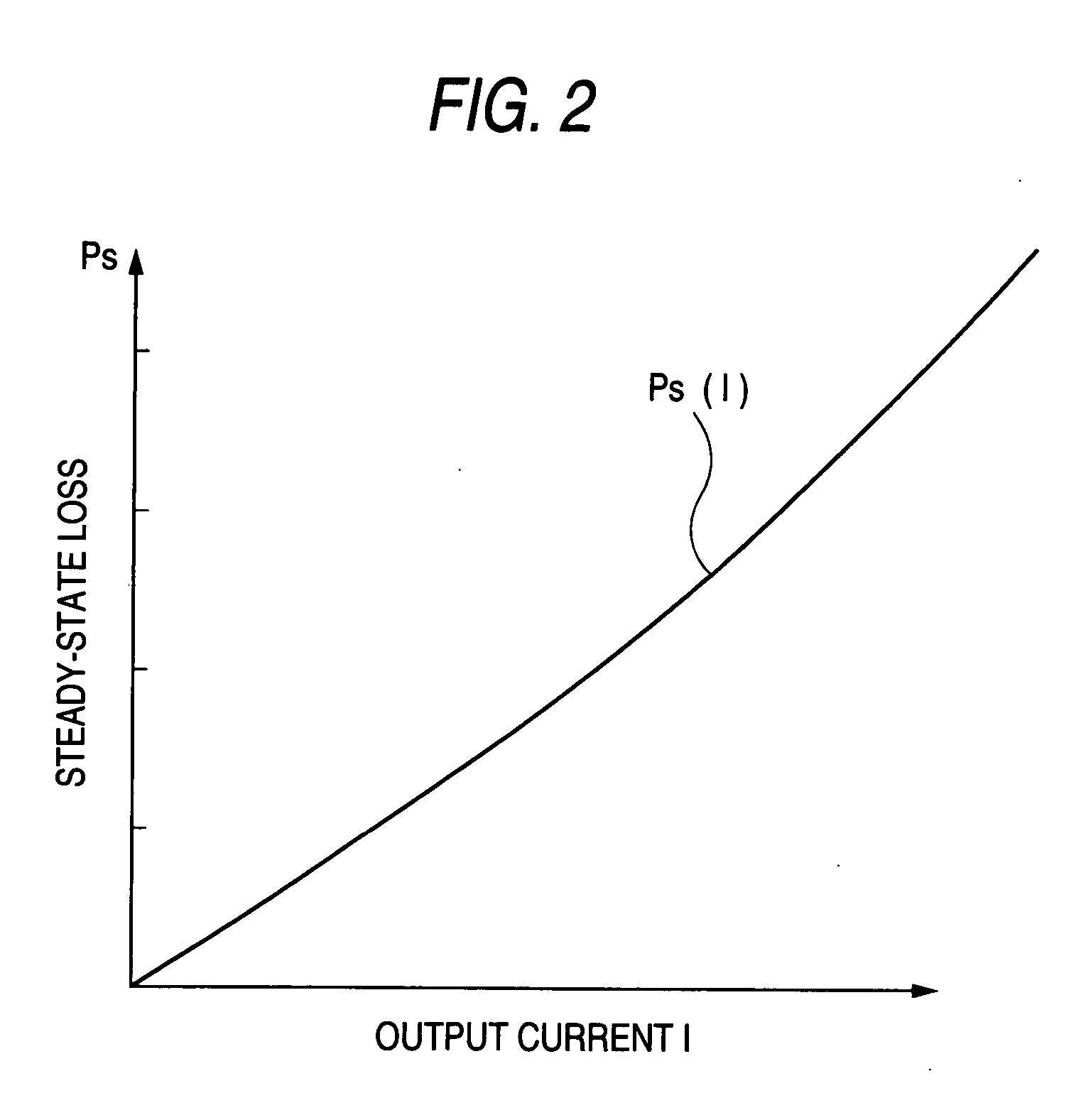

Motor controller

Inactive · US20050071090A1 · Reduce the amplitude · Reduce temperature changes · Plug gauges · Vector control systems · Power cycle · Motor controller

An electric motor control apparatus of this invention estimates changes in the temperature of a semiconductor device. A temperature change estimation part 11 computes a temperature change amplitude 108 from an output current signal 105 (computed from the current flowing through the semiconductor device of a switching circuit 5), an operating frequency signal and a carrier frequency signal. The temperature change amplitude 108 is converted into the corresponding number of power cycles 110 using power cycle curve data stored in a power cycle curve data storage part 14, and a thermal stress computation part 13 computes a thermal stress signal 111. A life estimation part 15a estimates the life of the semiconductor device from the thermal stress signal 111 and outputs a life estimation result signal 112 to a display part 16.

Owner:MITSUBISHI ELECTRIC CORP
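The conversion from temperature swing to consumed life can be illustrated with two assumptions. Cycles-to-failure is interpolated log-log from a stored power cycle curve, and consumed life is accumulated with Miner's linear damage rule. Both are assumptions; the patent does not state how its thermal stress signal is computed. The curve values in the usage note are made up, not device data.

```python
import math
from typing import List, Tuple


def cycles_to_failure(delta_t: float, curve: List[Tuple[float, float]]) -> float:
    """Log-log interpolation of a power cycle curve [(delta_T_K, cycles_to_failure), ...].

    The curve must be sorted by increasing delta_T; inputs outside it are clamped.
    """
    pts = [(math.log(t), math.log(n)) for t, n in curve]
    x = math.log(delta_t)
    x = min(max(x, pts[0][0]), pts[-1][0])  # clamp to the stored range
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x0 <= x <= x1:
            y = y0 + (y1 - y0) * (x - x0) / (x1 - x0)
            return math.exp(y)
    raise ValueError("curve needs at least two points")


def consumed_life(swings: List[float], curve: List[Tuple[float, float]]) -> float:
    """Miner's-rule damage: each temperature swing uses 1/N_f of the device life."""
    return sum(1.0 / cycles_to_failure(dt, curve) for dt in swings)
```

With a hypothetical curve `[(20, 1e7), (40, 1e6), (80, 1e5)]`, one thousand 40 K swings consume about 0.1 % of the device life. A value reaching 1.0 would indicate predicted end of life.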

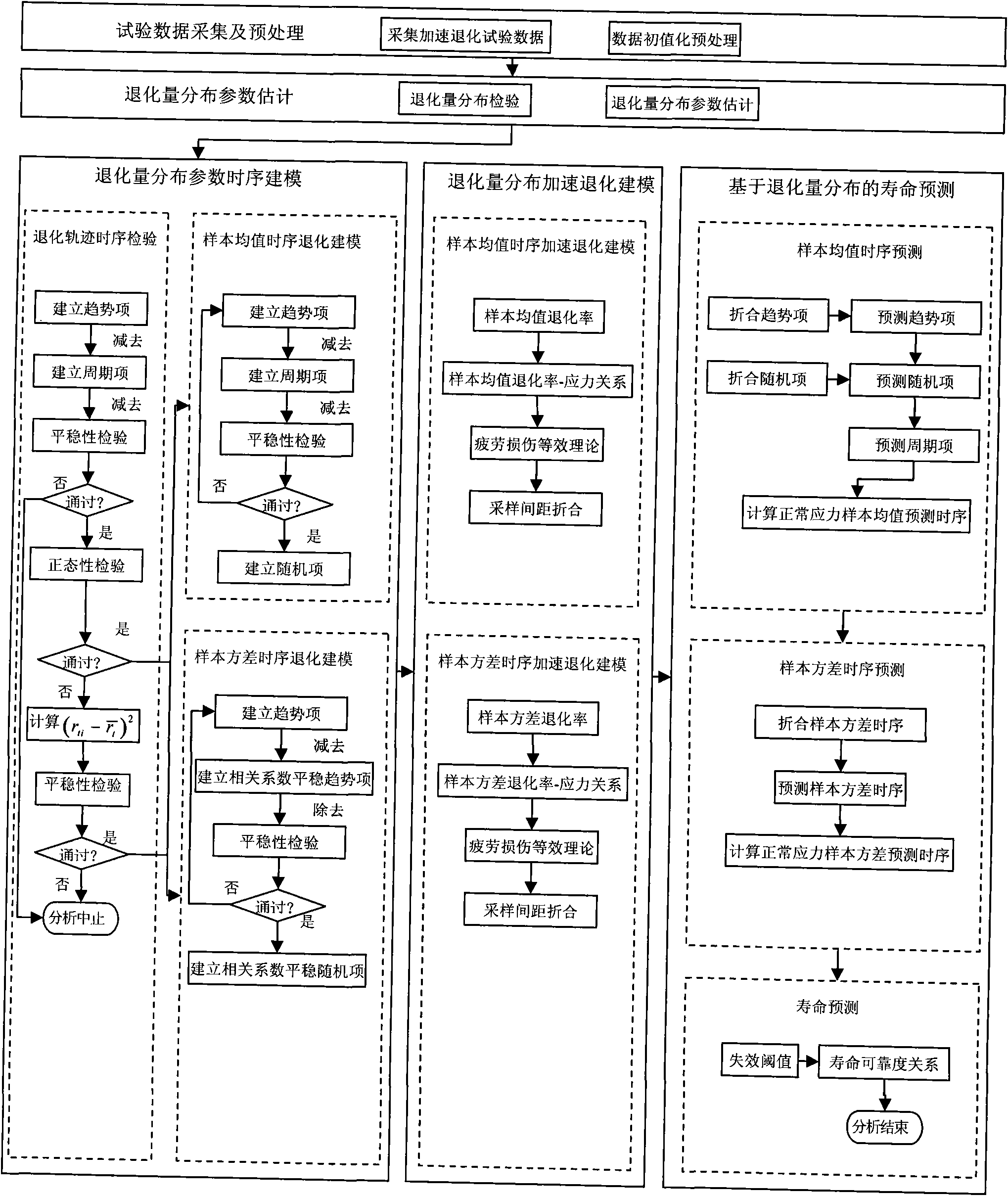

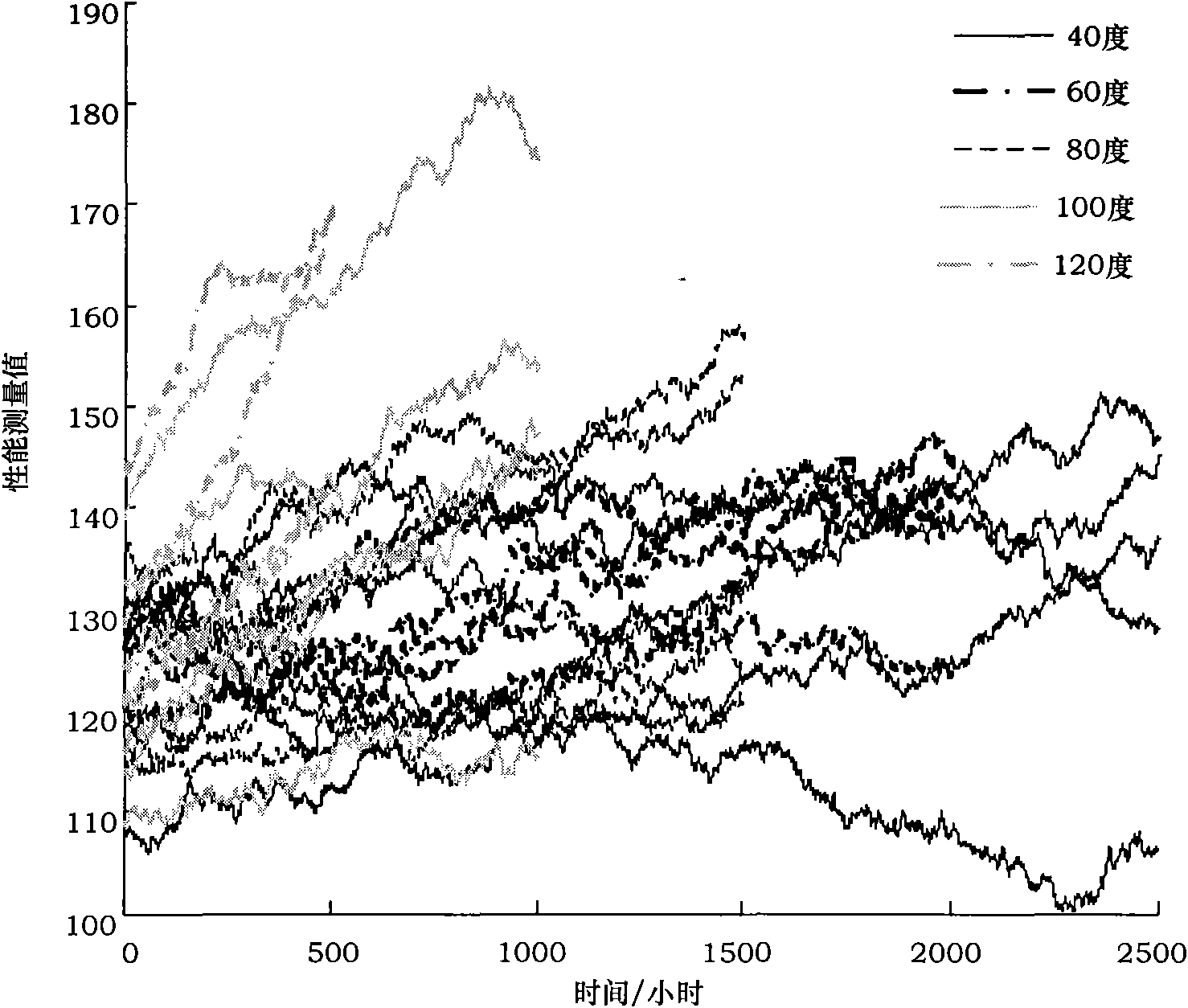

Method for predicting service life of product by accelerated degradation testing based on degradation distribution non-stationary time series analysis

Active · CN101894221A · Increase credibility · Macro description · Special data processing applications · Stress level · Dependability

The invention discloses a method for predicting the service life of a product by accelerated degradation testing based on non-stationary time series analysis of the degradation distribution. The method comprises the following steps: 1, acquisition and preprocessing of test data; 2, parameter estimation of the degradation distribution; 3, time series modeling of the degradation distribution parameters; 4, accelerated degradation modeling based on the degradation distribution; and 5, service life prediction based on the degradation distribution. The method macroscopically describes the statistical degradation behavior of all samples under accelerated stress, analyzes the time series of the degradation distribution over the whole accelerated degradation process, and extrapolates the degradation distribution from accelerated stress to normal stress, yielding reliability and service life predictions that reflect the volatility of the random accelerated degradation process. This improves the credibility of the estimated service life and reliability, and it saves time and is more efficient than performance degradation prediction at the normal stress level.

Owner:BEIHANG UNIV

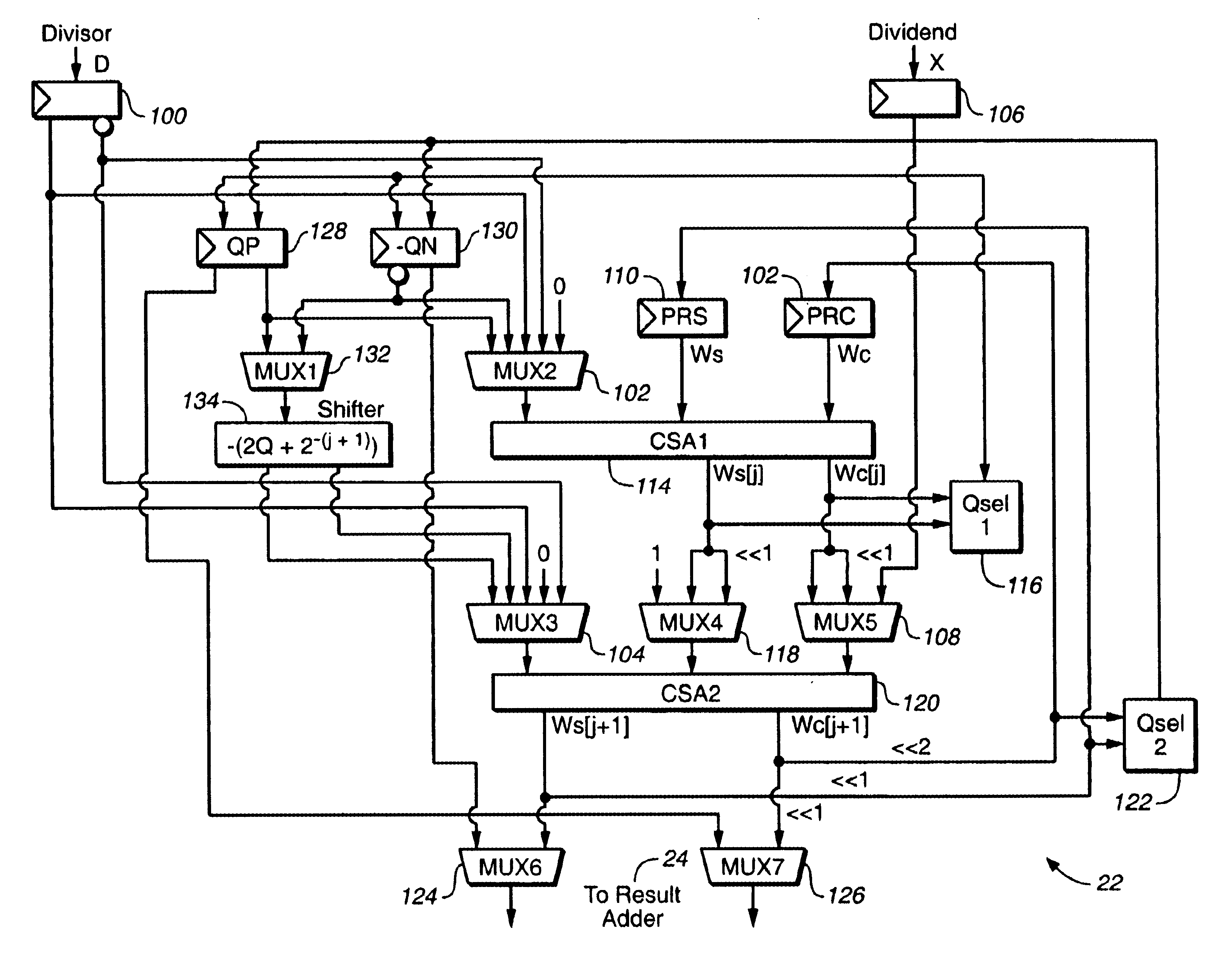

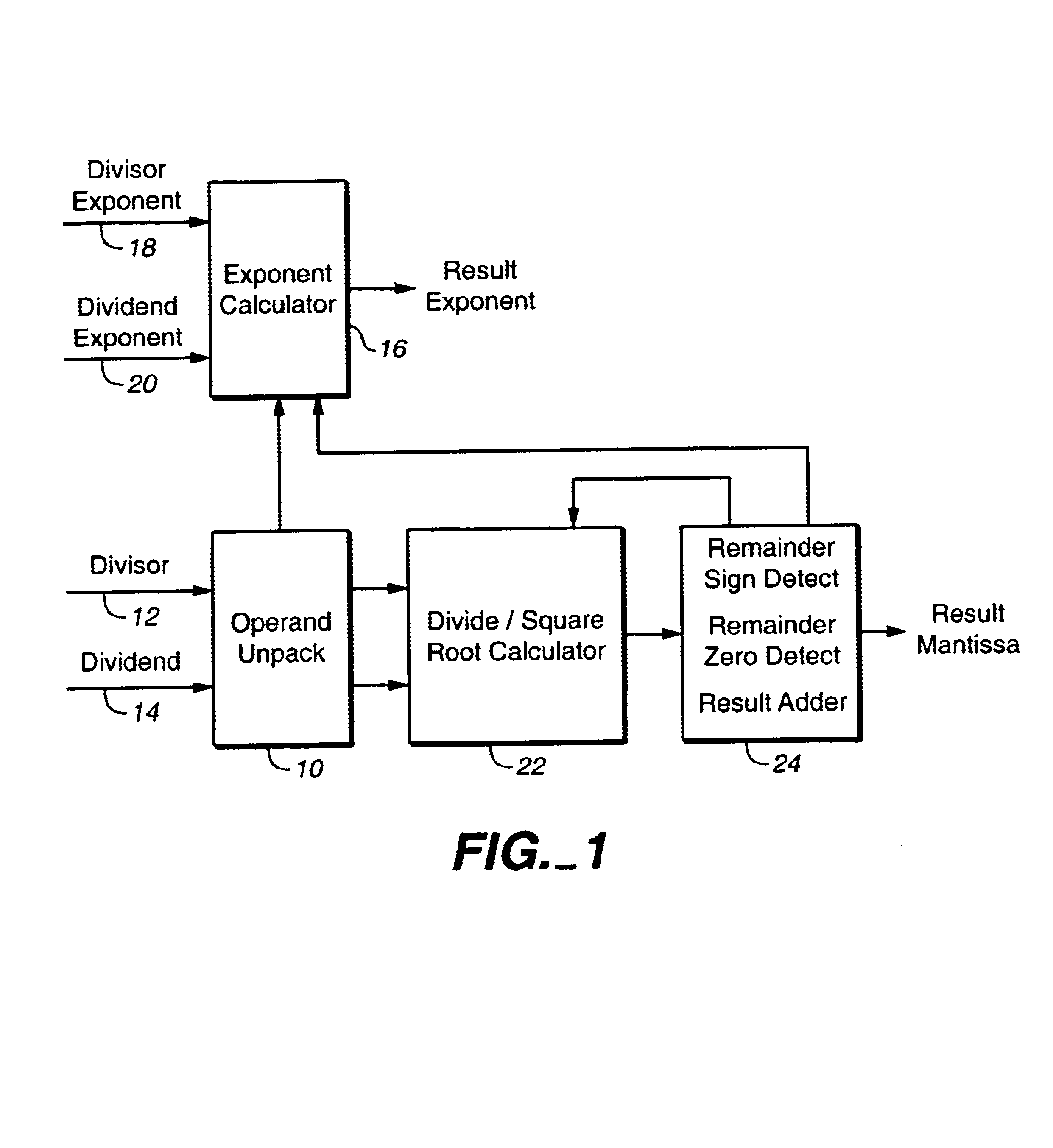

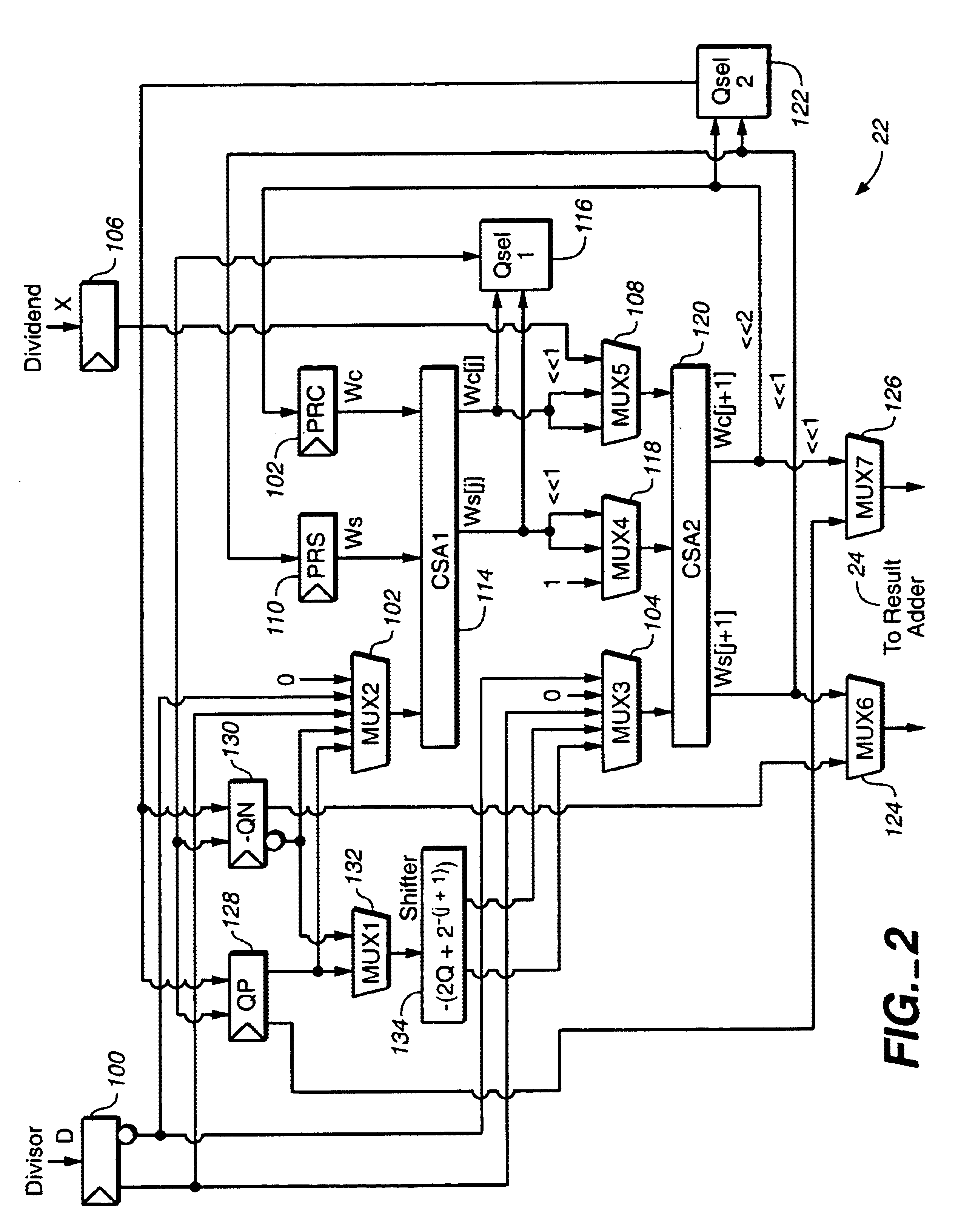

Floating point divide and square root processor

An iterative mantissa calculator calculates a quotient mantissa in a divide mode or a result mantissa in a square-root mode. The calculator includes at least first and second summing devices. In the divide mode, each summing device calculates a respective estimated partial remainder $W[j+1]$ for the next iteration, $j+1$, as $W[j+1] = 2W[j] - S_{j+1} D$, where $W[j]$ is the estimated partial remainder for the current iteration calculated during the prior iteration, $S_{j+1}$ is the quotient bit estimated for the next iteration, and $D$ is the divisor. The estimated quotient bit for the next iteration is selected based on the calculated partial remainder. In the square-root mode, the first summing device calculates $2W[j] - 2S[j]S_{j+1}$, where $W[j]$ is the estimated partial remainder and $S_{j+1}$ is the estimated result generated during the current iteration, $j$. A shift register shifts the value of the estimated result, $S_{j+1}$, to generate $-S_{j+1}^{2} \cdot 2^{-(j+1)}$, which is summed with the result from the first summing device to generate the estimated partial remainder for the square-root mode.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
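The divide-mode recurrence $W[j+1] = 2W[j] - S_{j+1} D$ can be checked in software with exact fractions. This sketch uses textbook radix-2 SRT digit selection, with digits in {-1, 0, 1} chosen by comparing $2W[j]$ against $\pm 1/2$, and a normalized divisor $1/2 \le D < 1$. These selection constants are an assumption; they are not taken from the patent's hardware, which works on estimated remainders. The square-root mode is not shown.

```python
from fractions import Fraction
from typing import List, Tuple


def srt_divide(x: Fraction, d: Fraction, n_iter: int) -> Tuple[Fraction, List[int]]:
    """Radix-2 SRT division: returns the quotient estimate and its digits in {-1, 0, 1}.

    Requires a normalized divisor 1/2 <= d < 1 and |x| <= d, which keeps |W[j]| <= d.
    """
    assert Fraction(1, 2) <= d < 1 and abs(x) <= d
    w = x  # W[0]
    q = Fraction(0)
    digits = []
    for j in range(1, n_iter + 1):
        two_w = 2 * w
        # Digit selection only needs a coarse comparison of 2W[j] with +-1/2.
        if two_w >= Fraction(1, 2):
            s = 1
        elif two_w < Fraction(-1, 2):
            s = -1
        else:
            s = 0
        w = two_w - s * d  # W[j+1] = 2*W[j] - S_{j+1} * D
        q += Fraction(s, 2 ** j)
        digits.append(s)
    return q, digits
```

Unrolling the recurrence gives $x = qD + 2^{-n} W[n]$. Since $|W[n]| \le D$, the quotient after $n$ iterations is within $2^{-n}$ of $x/D$. The redundant digit 0 is what lets real hardware select digits from a rough estimate of the remainder.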

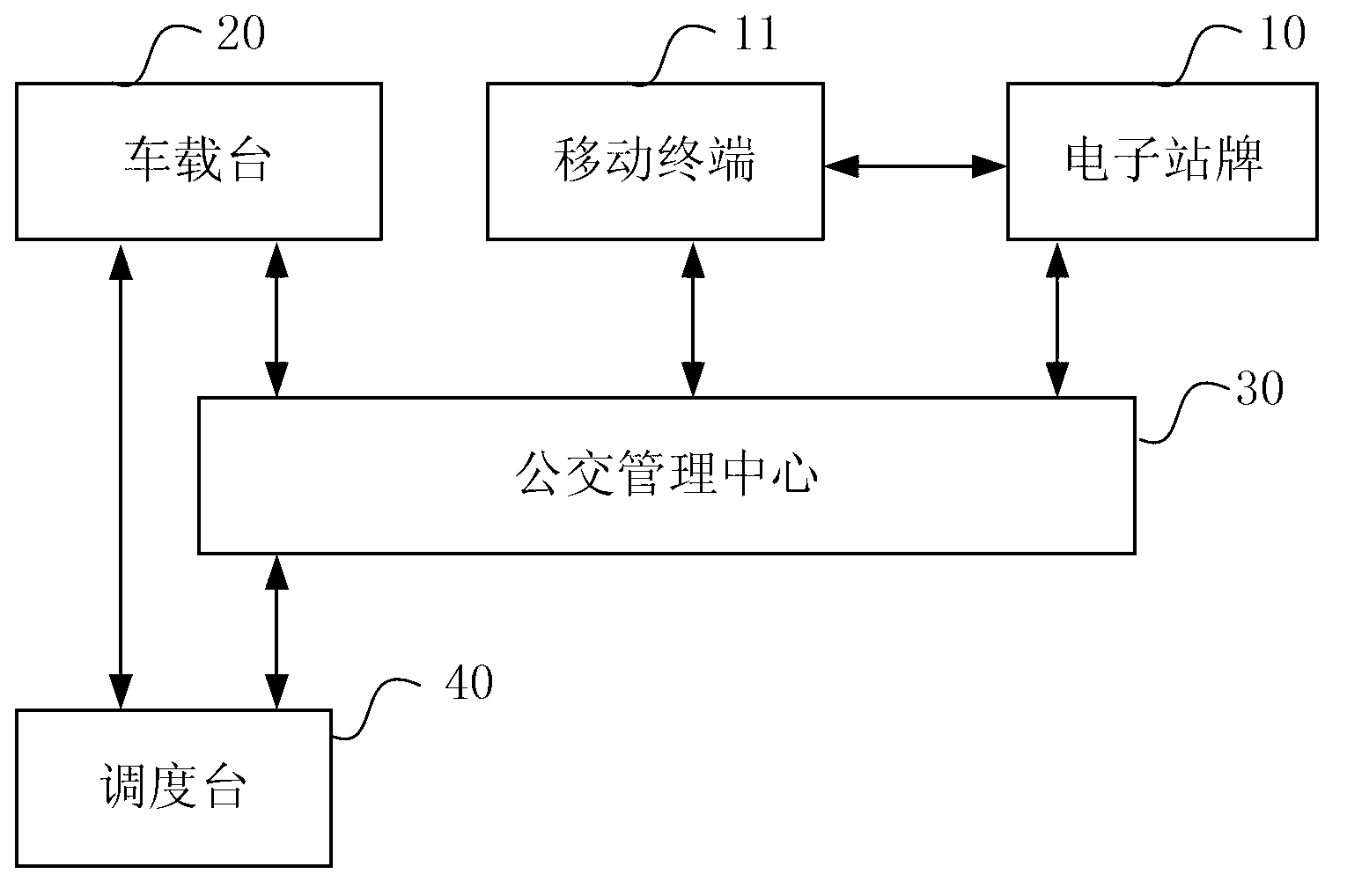

Intelligent bus scheduling management system

Active · CN103077606A · Convenient travel · High intelligence · Road vehicles traffic control · Transit bus · Embedded system

The invention discloses an intelligent bus scheduling management system applied in the fields of traffic and communication. The system comprises an electronic station board, a vehicular station, a scheduling station and at least one bus management center. The electronic station board acquires the bus route information of passengers and sends it to the bus management center; the vehicular station sends bus positioning information and passenger card-swiping records to the bus management center; and the bus management center counts and/or estimates the number of passengers waiting for a bus on each route from the acquired information, plans a bus scheduling scheme from the statistical result and/or estimation result and the actual bus conditions on each route, and sends a command to the scheduling station to schedule buses. By scheduling buses according to passenger demand and actual bus conditions, the system improves intelligence and efficiency, saves energy and reduces emissions, and helps passengers choose the optimal bus route, know the current position of the bus, avoid waiting blindly, and travel conveniently.

Owner:NUBIA TECHNOLOGY CO LTD

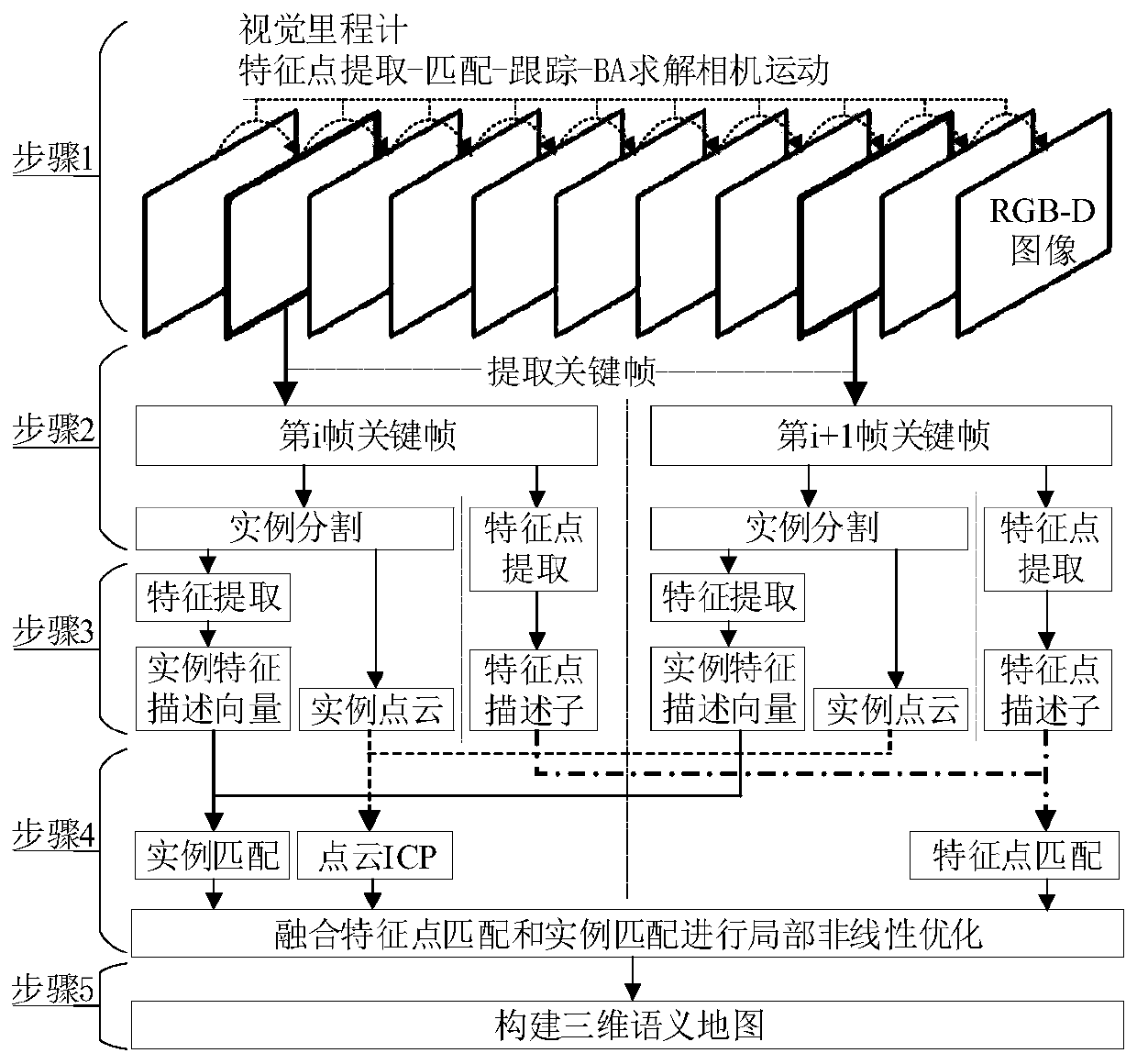

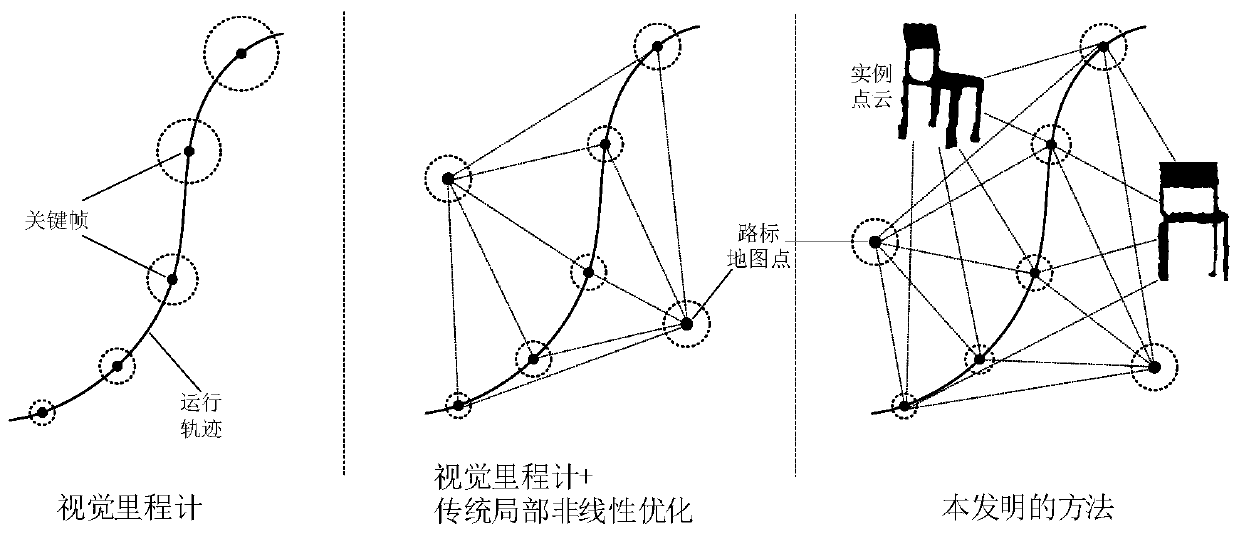

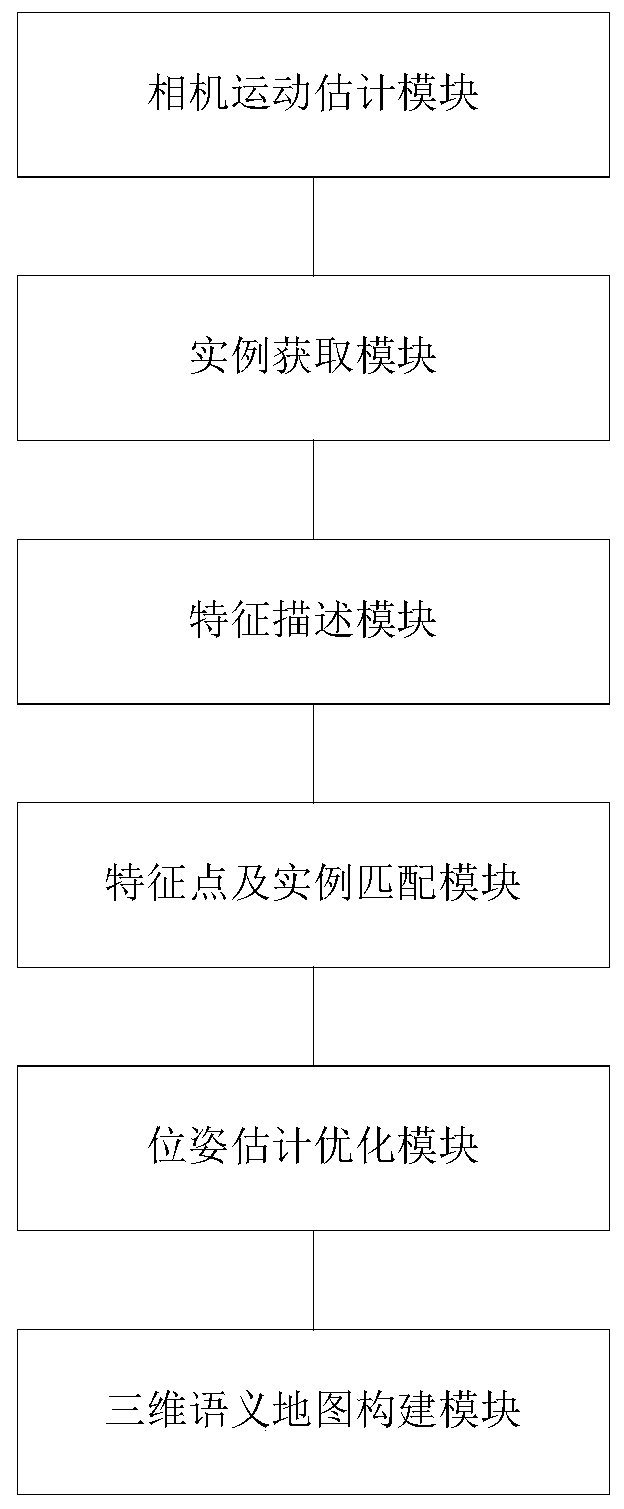

Robot semantic SLAM method based on object instance matching, processor and robot

Inactive · CN109816686A · Good estimate · High positioning accuracy · Image analysis · Neural architectures · Point cloud · Feature description

The invention provides a robot semantic SLAM method based on object instance matching, a processor and a robot. The method comprises the following steps: acquiring an image sequence shot while the robot is operating, and performing feature point extraction, matching and tracking on each image frame to estimate camera motion; extracting key frames, performing instance segmentation on each key frame, and obtaining all object instances in each key frame; extracting feature points from the key frame and calculating feature point descriptors, performing feature extraction and encoding on all object instances in the key frame to calculate feature description vectors of the instances, and obtaining three-dimensional point clouds of the instances at the same time; performing feature point matching and instance matching on the feature points and object instances between adjacent key frames; and performing local nonlinear optimization on the SLAM pose estimation result by fusing the feature point matching and the instance matching to obtain key frames carrying object instance semantic annotation information, and mapping the key frames into the instance three-dimensional point clouds to construct a three-dimensional semantic map.

Owner:SHANDONG UNIV
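The instance-matching step between adjacent key frames could look roughly like the sketch below. The abstract does not say how instances are compared, so this assumes each instance carries a class label and a feature description vector, compares vectors by cosine similarity, only matches instances of the same class, and keeps mutual best matches above a hypothetical threshold `min_sim`.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def match_instances(prev, curr, min_sim=0.8):
    """Mutual-best matching of object instances between two adjacent key frames.

    prev, curr: lists of (class_label, description_vector).
    Returns a list of (index_in_prev, index_in_curr, similarity).
    """
    def best(src, dst, i):
        label, vec = src[i]
        # Only instances with the same semantic class are candidates.
        cands = [(cosine(vec, dst[j][1]), j) for j in range(len(dst)) if dst[j][0] == label]
        return max(cands) if cands else (0.0, None)

    matches = []
    for i in range(len(prev)):
        sim, j = best(prev, curr, i)
        if j is None or sim < min_sim:
            continue
        _, back = best(curr, prev, j)
        if back == i:                      # keep only mutual best matches
            matches.append((i, j, sim))
    return matches
```

The resulting instance correspondences would then be fused with the ordinary feature point matches as extra constraints in the local pose optimization; that optimization is not shown here.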

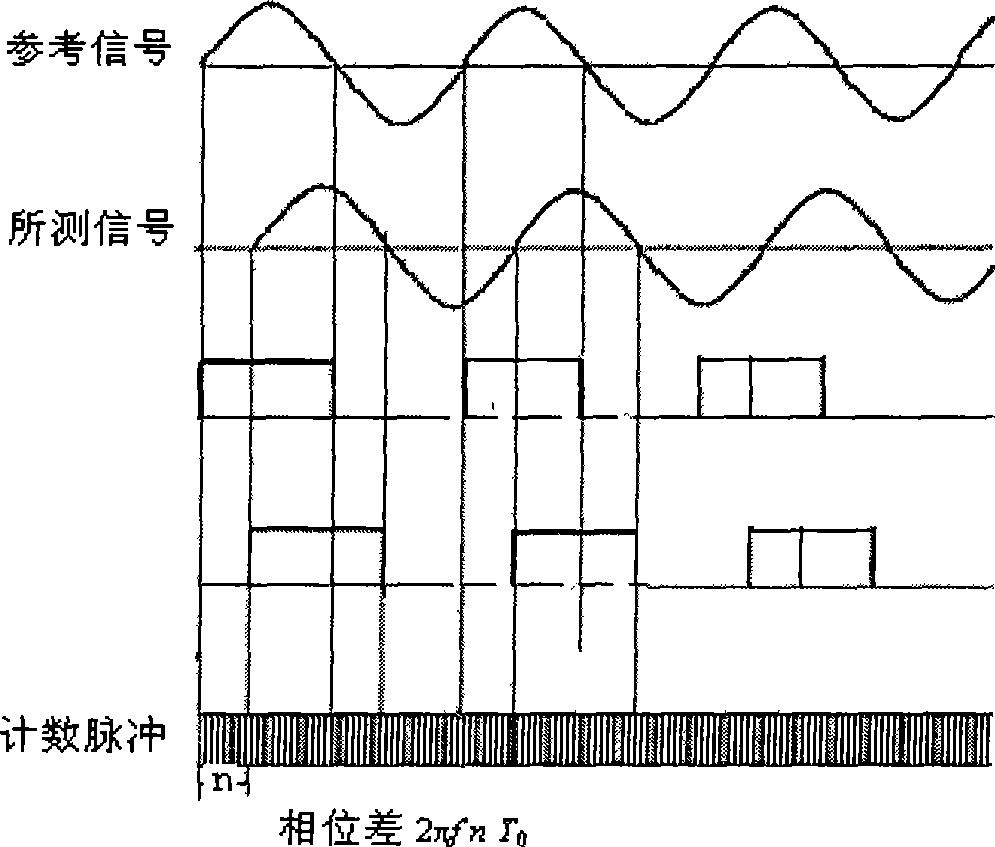

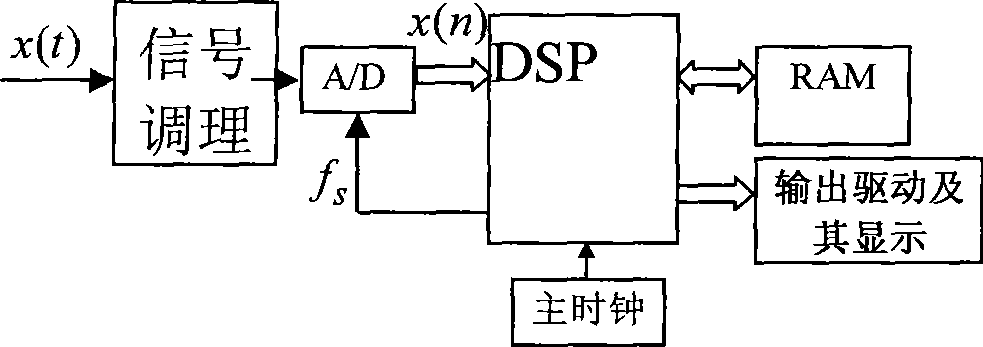

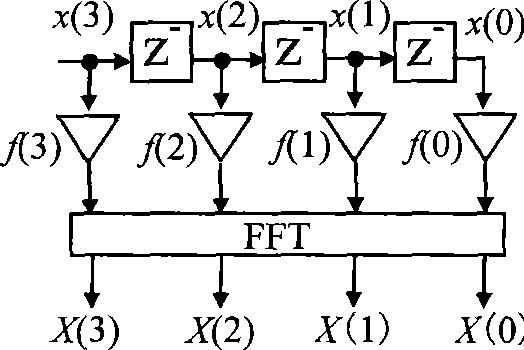

High precision instant phase estimation method based on full-phase FFT

Inactive | CN101388001A | Low cost | Complex mathematical operations | Digital signal processing | Estimation methods

The invention belongs to the technical field of digital signal processing and relates to a high-accuracy instantaneous phase estimation method based on the full-phase FFT. The method comprises the following steps: directly sampling an input signal x(t) to obtain a discrete data sequence {x(n-N+1), ..., x(n), ..., x(n+N-1)}; storing the 2N-1 samples in the RAM of a DSP; windowing the sequence with a convolution window of length 2N-1; adding together the data spaced N sampling intervals apart; performing a fast Fourier transform on the resulting N values to output N complex numbers, namely the N spectral values Y(k); performing a spectral peak search to find the spectral value Y(k*) with the largest amplitude among the N values Y(k); and taking the ratio of the imaginary part to the real part of Y(k*), so that the instantaneous phase estimate at time n is obtained by computing the arctangent of this ratio.

Owner:TIANJIN UNIV
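The steps in this abstract can be sketched in plain Python. Several details are assumptions rather than the patent's specification: the convolution window is taken as two length-N rectangular windows convolved (a triangle of length 2N-1), a direct O(N^2) DFT stands in for the FFT to keep the example self-contained, the peak search covers only the non-negative-frequency half because the test input is real, and `math.atan2` replaces a plain arctangent of Im/Re so the phase lands in the correct quadrant.

```python
import cmath
import math


def apfft_phase(samples, N):
    """Estimate the phase at the centre sample x(n) of the 2N-1 samples
    x(n-N+1), ..., x(n), ..., x(n+N-1) with a full-phase (all-phase) FFT.

    Returns (k_star, phase_in_radians).
    """
    if len(samples) != 2 * N - 1:
        raise ValueError("expected 2N-1 samples")
    centre = N - 1
    # Convolution window: rectangular * rectangular -> weight N - |offset|.
    w = [N - abs(i - centre) for i in range(2 * N - 1)]
    # Window, then fold: samples N intervals apart are added, so y[0]
    # holds the windowed centre sample x(n).
    y = [0.0] * N
    for i, s in enumerate(samples):
        y[(i - centre) % N] += w[i] * s
    # N spectral values Y(k); an FFT would give the same numbers faster.
    Y = [sum(y[m] * cmath.exp(-2j * math.pi * k * m / N) for m in range(N))
         for k in range(N)]
    # Spectral peak search over the non-negative frequencies (real input).
    k_star = max(range(N // 2 + 1), key=lambda k: abs(Y[k]))
    return k_star, math.atan2(Y[k_star].imag, Y[k_star].real)
```

The useful property here is that the symmetric convolution window gives a zero-phase window spectrum, so the phase read at Y(k*) is the signal's phase at the centre sample n, even when the frequency falls between bins.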

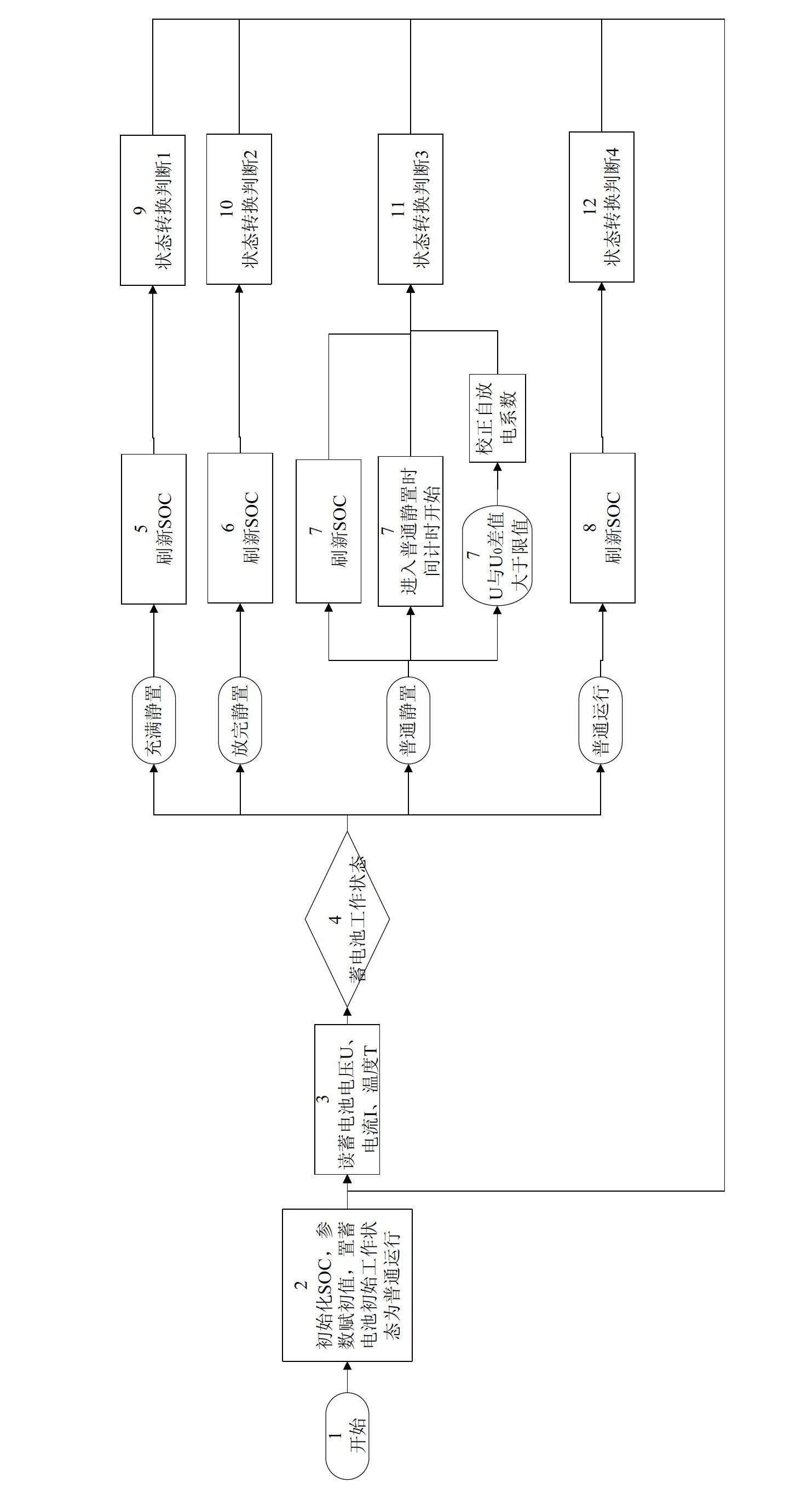

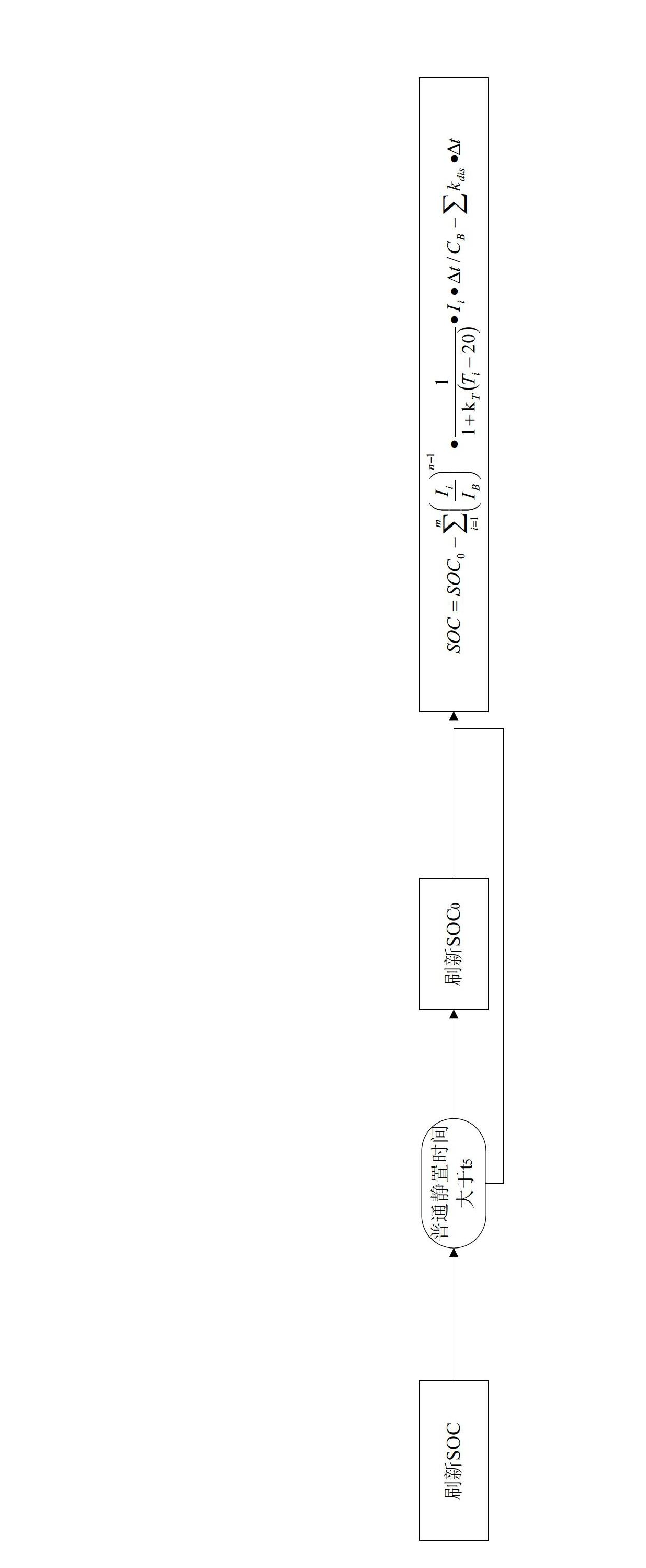

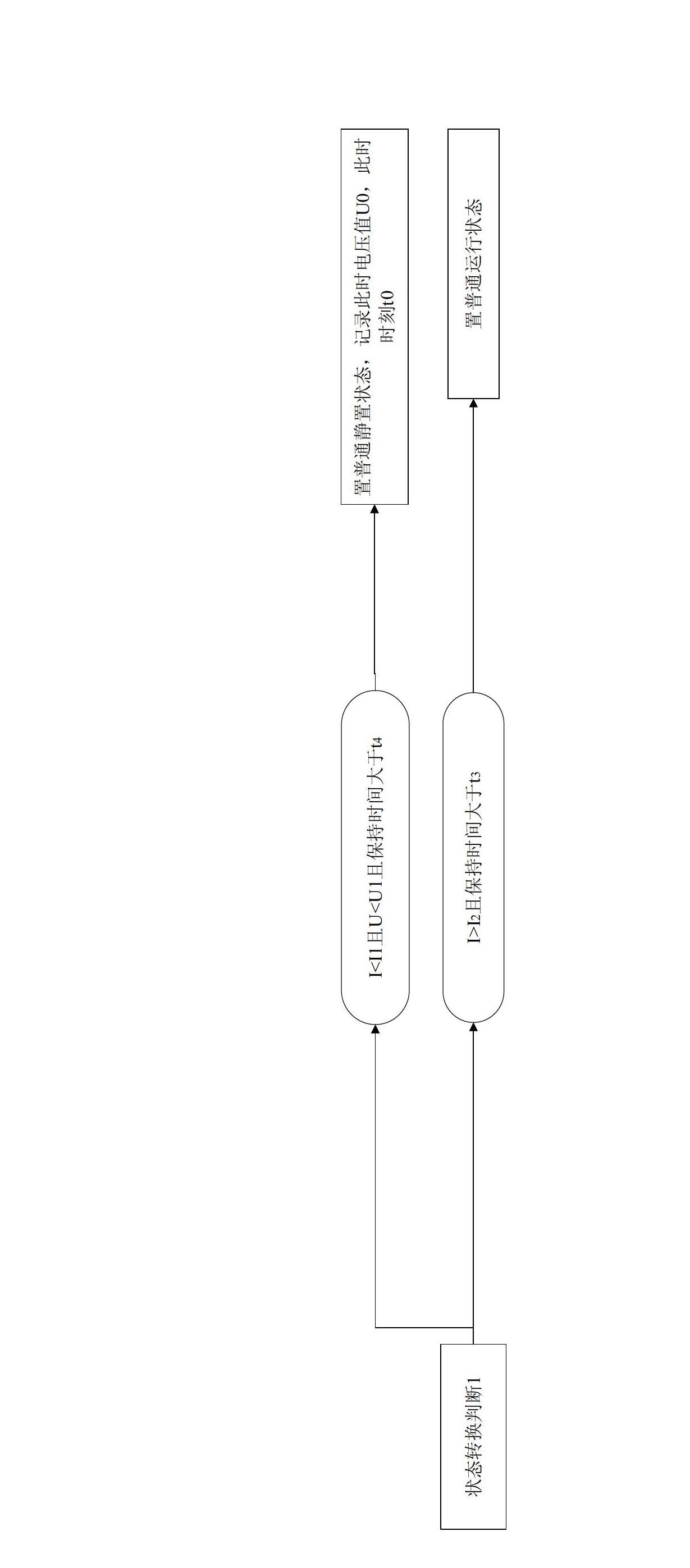

On-line feedback battery state of charge (SOC) predicting method

Inactive | CN102662148A | The estimated result is close to the true value | Improve accuracy | Electrical testing | Battery state of charge | Model parameters

The invention relates to the technical field of storage battery state of charge prediction and discloses an on-line feedback method for predicting battery state of charge (SOC). In this method, the parameters of the SOC estimation model are corrected from historical data collected while the storage battery is operating on line. The influence of temperature, coulomb efficiency and self-discharge on battery SOC is taken into account; only the basic operating parameters of the storage battery need to be monitored; and the related coefficients are corrected whenever the required conditions are met during battery operation. Because the coefficient values are corrected repeatedly, the SOC estimate approaches the true value over time, so the accuracy is high and the storage battery SOC can be predicted on line.

Owner:CHINA AGRI UNIV
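The abstract does not give the model equations, so the following is only a minimal sketch of the general idea: coulomb counting with a charging efficiency `eta`, a linear temperature derating of capacity, and a constant self-discharge rate, plus a feedback step that nudges `eta` toward the efficiency observed whenever a trusted reference SOC becomes available (for example at full charge). Every coefficient, the reference-SOC trigger and the blending `gain` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SocEstimator:
    """Hypothetical coulomb-counting SOC model whose efficiency eta is corrected online."""
    capacity_ah: float                    # rated capacity at 25 degC (Ah)
    eta: float = 1.0                      # coulomb (charging) efficiency, corrected online
    self_discharge_per_h: float = 1e-4    # assumed fractional SOC lost per hour
    temp_coeff: float = 0.006             # assumed capacity change per degC from 25 degC
    soc: float = 0.5                      # current SOC estimate, 0..1
    gain: float = 0.5                     # weight given to each new correction

    def effective_capacity(self, temp_c: float) -> float:
        # Temperature effect: linear capacity derating around 25 degC (assumption).
        return self.capacity_ah * (1.0 + self.temp_coeff * (temp_c - 25.0))

    def step(self, current_a: float, dt_h: float, temp_c: float) -> float:
        """Advance the estimate by one sample; positive current means charging."""
        charge_ah = current_a * dt_h
        if current_a > 0:
            charge_ah *= self.eta         # only part of the charge is actually stored
        self.soc += charge_ah / self.effective_capacity(temp_c)
        self.soc -= self.self_discharge_per_h * dt_h
        self.soc = min(1.0, max(0.0, self.soc))
        return self.soc

    def correct_eta(self, soc_start: float, soc_reference: float,
                    charged_ah: float, duration_h: float, temp_c: float) -> float:
        """Feedback step: compare a trusted reference SOC after a charging segment
        with the charge that went in, and move eta toward the observed efficiency."""
        stored = soc_reference - soc_start + self.self_discharge_per_h * duration_h
        observed = stored * self.effective_capacity(temp_c) / charged_ah
        self.eta = (1.0 - self.gain) * self.eta + self.gain * observed
        self.soc = soc_reference          # re-anchor the running estimate
        return self.eta
```

With repeated corrections, `eta` converges geometrically toward the battery's actual efficiency, which mirrors the abstract's claim that the estimate approaches the true value over time.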

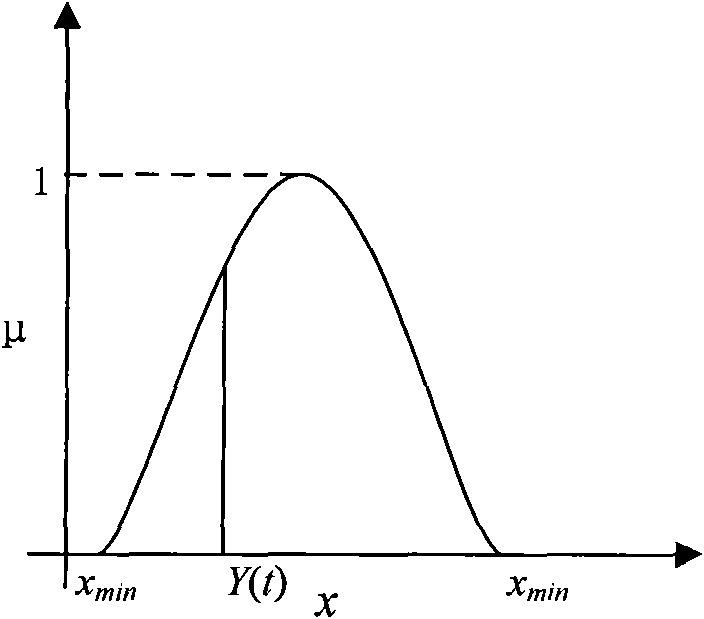

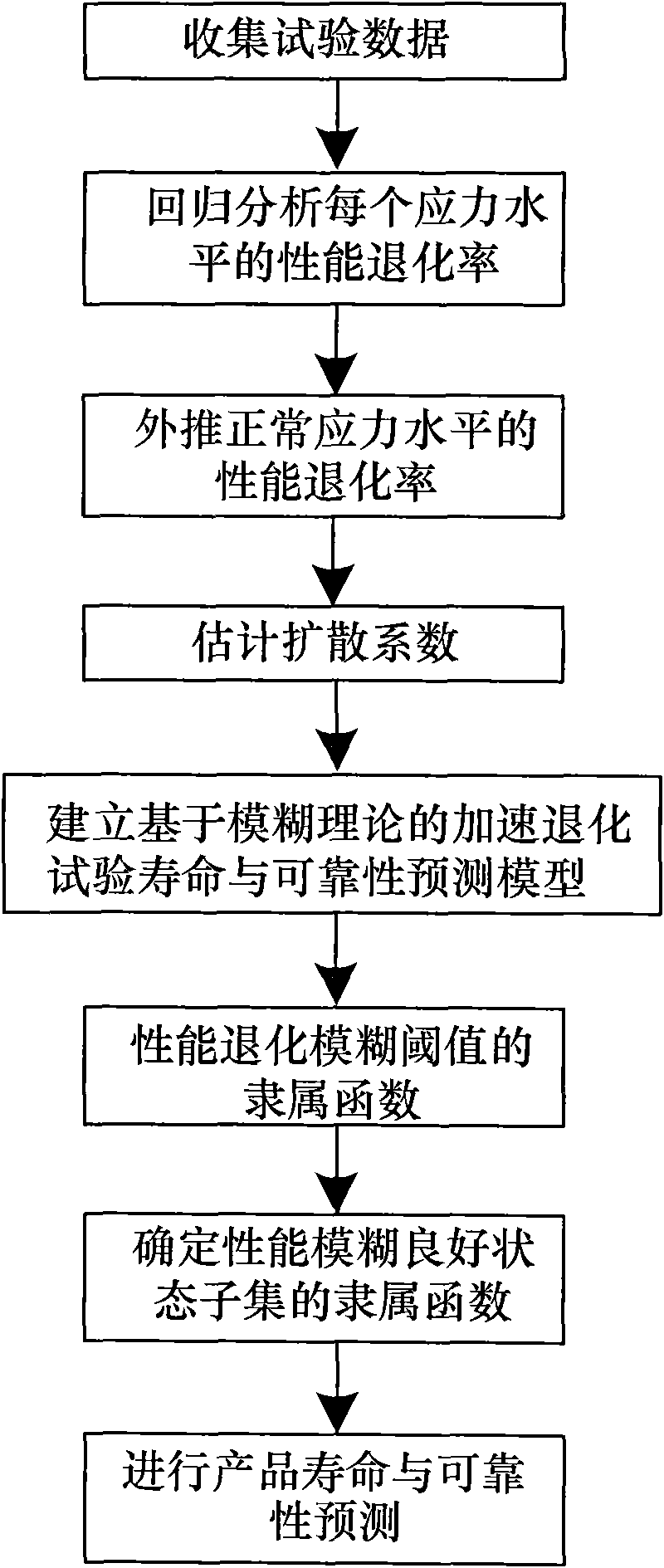

Accelerated degradation test prediction method based on fuzzy theory

Inactive | CN101666662A | Reasonable forecast | Avoid aggressive situations | Measurement devices | Complex mathematical operations | Regression analysis | Brownian excursion

The invention discloses an accelerated degradation test prediction method based on fuzzy theory, comprising the following steps: collecting test data; performing regression analysis on the performance degradation data at each stress level; extrapolating the performance degradation rate of the product at the normal stress level; estimating the diffusion coefficient σ of a Brownian motion with drift by maximum likelihood estimation; establishing an accelerated degradation test life and reliability prediction model based on fuzzy theory; and predicting the life and reliability of the product with the fuzzy life and reliability prediction model. The method introduces the fuzzy concept into accelerated degradation testing for the first time, making the prediction results of the test more reasonable; by accounting for the fuzziness of the performance degradation threshold, it avoids the overly aggressive reliability estimates of conventional methods; it addresses performance degradation failure as encountered in engineering practice; and it is applicable to step-stress and progressive-stress accelerated degradation tests as well as to non-accelerated prediction of performance degradation failure.

Owner:BEIHANG UNIV
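The maximum likelihood step for a Brownian motion with drift, X(t) = μt + σB(t), can be written in closed form, because the increments ΔX_i over intervals Δt_i are independent normals with mean μΔt_i and variance σ²Δt_i. The sketch below handles a single degradation path with possibly unequal measurement intervals; pooling several paths or stress levels, and the fuzzy reliability model built on top of these estimates, are left out.

```python
import math


def wiener_mle(times, values):
    """MLE of drift mu and diffusion sigma for X(t) = mu*t + sigma*B(t),
    given one degradation path measured at strictly increasing times."""
    dts = [t1 - t0 for t0, t1 in zip(times, times[1:])]
    dxs = [x1 - x0 for x0, x1 in zip(values, values[1:])]
    if not dts or any(dt <= 0 for dt in dts):
        raise ValueError("need at least two points with strictly increasing times")
    # d(logL)/d(mu) = 0  ->  mu = sum(dX) / sum(dt)
    mu = sum(dxs) / sum(dts)
    # d(logL)/d(sigma^2) = 0  ->  sigma^2 = mean of (dX - mu*dt)^2 / dt
    sigma2 = sum((dx - mu * dt) ** 2 / dt for dx, dt in zip(dxs, dts)) / len(dts)
    return mu, math.sqrt(sigma2)
```

For example, a path measured at t = 0, 1, 2 with values 0, 1, 4 has increments 1 and 3, giving a drift estimate of 2 and a diffusion estimate of 1.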

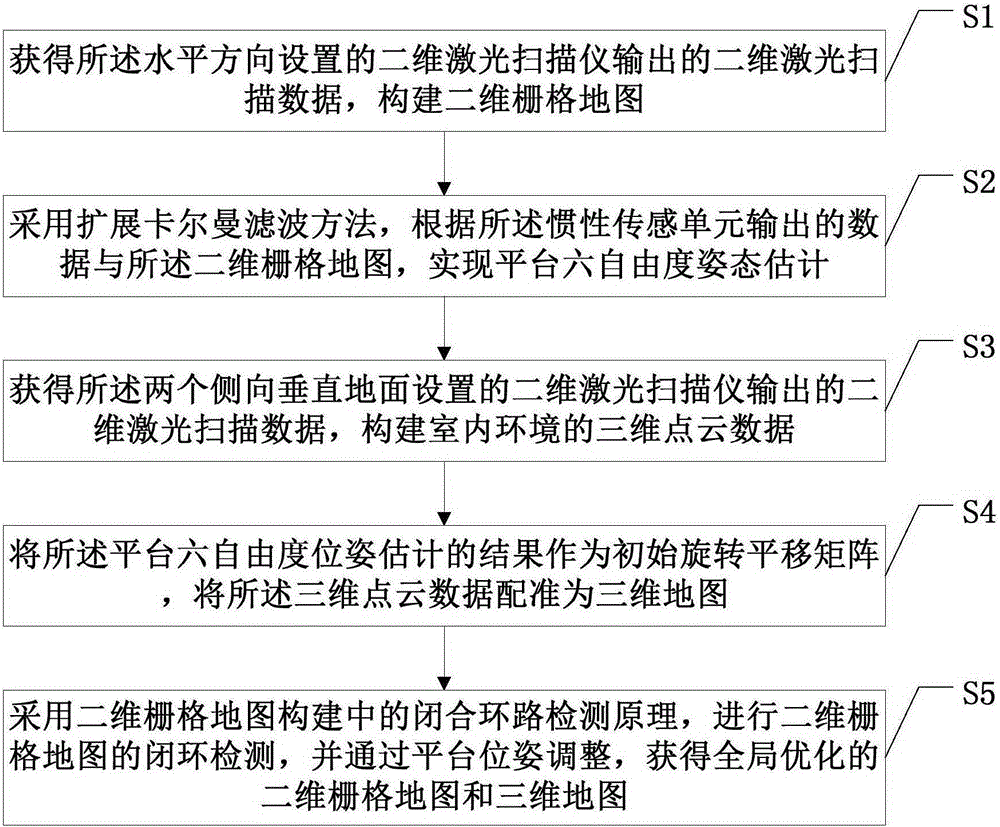

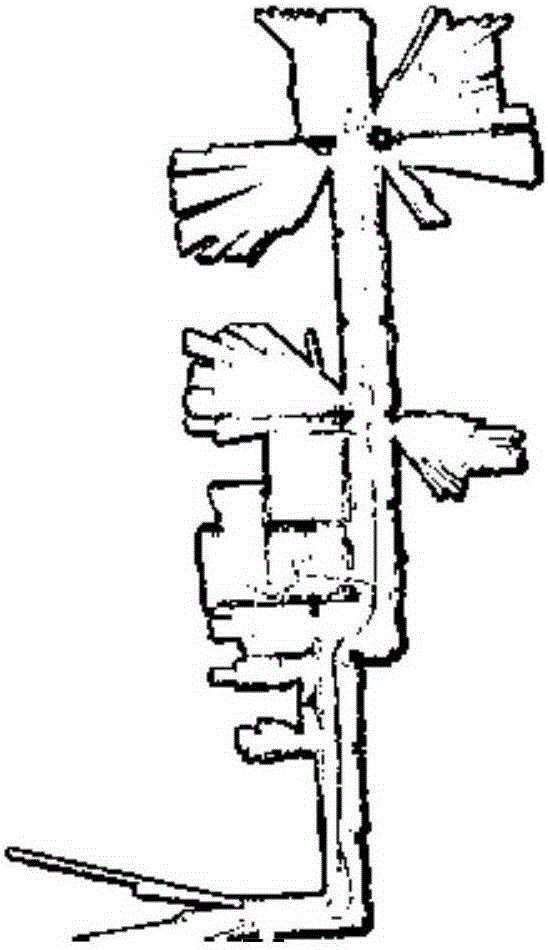

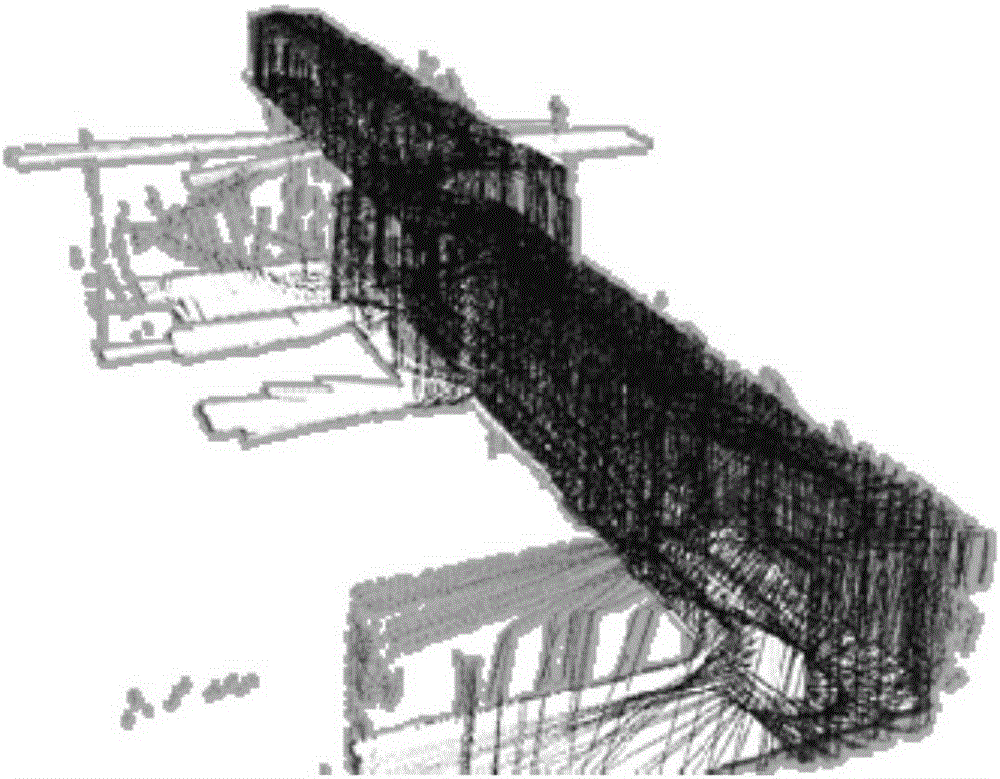

Construction method and system for two-dimensional and three-dimensional joint model of indoor environment

Active | CN105354875A | Improve accuracy | Solve problems with limited exploration capabilities | 3D modelling | Point cloud | Laser scanning

The invention discloses a construction method and system for a two-dimensional and three-dimensional joint model of an indoor environment. The method comprises: obtaining the two-dimensional laser scanning data output by a two-dimensional laser scanner mounted horizontally, and constructing a two-dimensional grid map; estimating the six-degree-of-freedom pose of the platform with an extended Kalman filter from the data output by an inertial sensing unit and the two-dimensional grid map; obtaining the two-dimensional laser scanning data output by two two-dimensional laser scanners mounted vertically, and constructing three-dimensional point cloud data of the indoor environment; registering the three-dimensional point cloud data into a three-dimensional map, using the platform six-degree-of-freedom pose estimate as the initial rotation-translation matrix; and performing loop-closure detection on the two-dimensional grid map using the loop-closure detection principle of two-dimensional grid map construction, then obtaining a globally optimized two-dimensional grid map and three-dimensional map through platform pose adjustment, so that two-dimensional and three-dimensional maps are constructed efficiently and accurately.

Owner:厦门思总建设有限公司
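Using the six-degree-of-freedom pose as the initial rotation-translation can be illustrated by projecting one scan from a vertically mounted 2D scanner into the world frame. This is a sketch under assumptions: the rotation uses the common Z-Y-X (yaw, pitch, roll) convention, the scan plane is the scanner's vertical x-z plane, and `mount` is a hypothetical lever arm with sensor axes aligned to the platform. A registration step such as ICP would then refine this initial placement; it is not shown.

```python
import math


def rpy_to_matrix(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll) as nested lists."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def scan_to_world(ranges, angles, pose, mount=(0.0, 0.0, 0.0)):
    """Project one vertical 2D laser scan into world coordinates.

    ranges, angles: beam ranges (m) and beam angles (rad) in the scan plane.
    pose: platform pose (x, y, z, roll, pitch, yaw), e.g. from the EKF.
    mount: hypothetical scanner offset in the platform frame.
    """
    x, y, z, roll, pitch, yaw = pose
    R = rpy_to_matrix(roll, pitch, yaw)
    t = (x, y, z)
    points = []
    for r, a in zip(ranges, angles):
        if not math.isfinite(r) or r <= 0.0:
            continue                      # drop missing or invalid returns
        # Beam endpoint in the platform frame; the scan plane is vertical (x-z).
        p = (r * math.cos(a) + mount[0], mount[1], r * math.sin(a) + mount[2])
        points.append(tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)))
    return points
```

Accumulating these points over the whole trajectory yields the raw three-dimensional point cloud described in the abstract.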

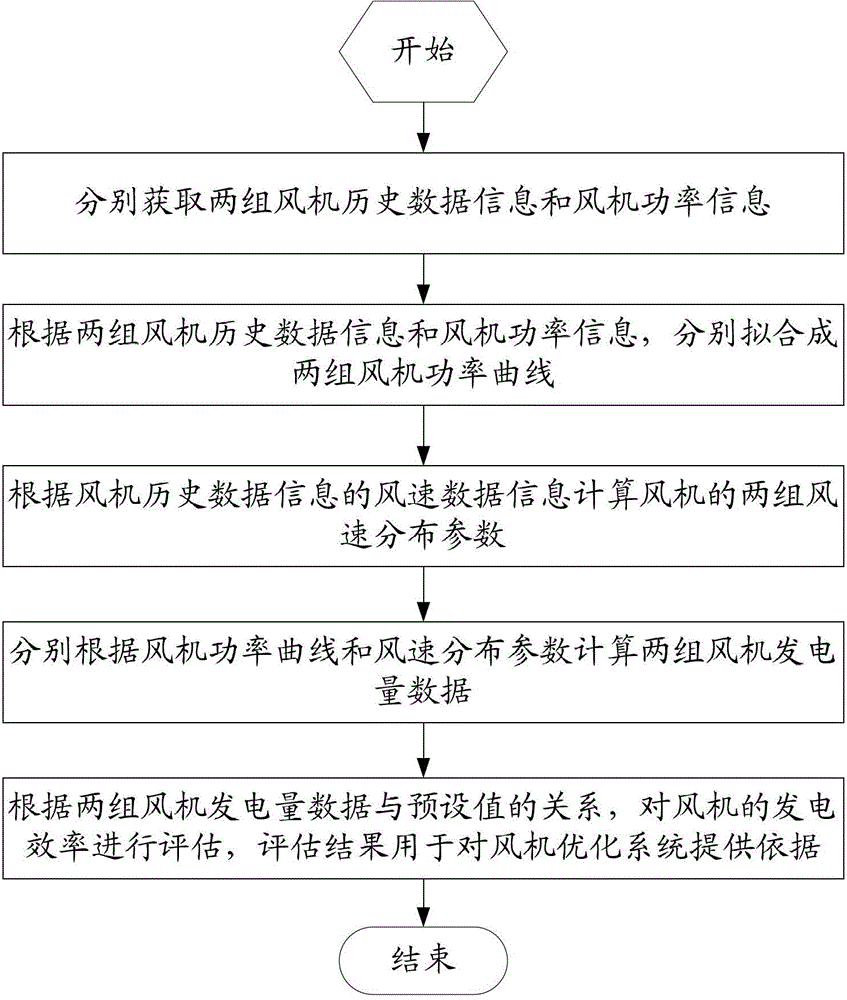

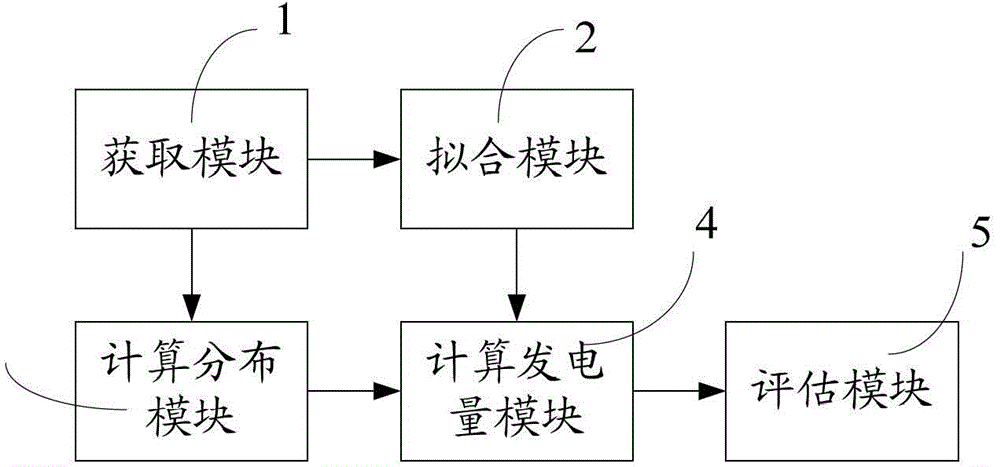

Method and device for monitoring and diagnosing generating efficiency of wind turbine generator in real-time manner

Inactive | CN103150473A | Power characteristics for easy comparison | Determine Power Loss | Special data processing applications | Electricity | Data information

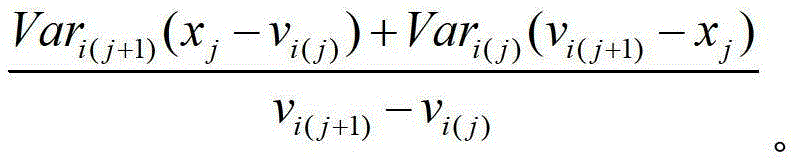

The invention relates to a method and a device for monitoring and diagnosing the generating efficiency of a wind turbine generator in real time. The method comprises the following steps: obtaining historical data and power information for each of two groups of wind turbines; fitting a power curve for each group from its historical data and power information; calculating the wind speed distribution parameters of each group from the same data; calculating the generating capacity of each group from its power curve and wind speed distribution parameters; processing the generating capacity data of the two groups to obtain result data; estimating the generating efficiency of the wind turbines from the relation between the result data and a preset value; and optimizing the control systems of the wind turbines according to the estimation result. The invention monitors the generating efficiency of the wind turbine generator in real time, estimates the generating efficiency from the monitoring data, and adjusts the control optimization scheme of the generator according to the estimation result, which helps improve its generating efficiency.

Owner:风脉能源(武汉)股份有限公司
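The pipeline in this abstract (power curve, wind speed distribution, generating capacity, comparison with a preset value) can be sketched as below. The specific techniques are assumptions, not taken from the patent: the power curve is fitted by the method of bins, the wind speed distribution is a Weibull distribution estimated with the empirical Justus moment formula, generating capacity is the expected energy over a year, and the "relation with a preset value" is taken to be the ratio of the two groups' energies compared with a hypothetical threshold `preset`.

```python
import math


def binned_power_curve(speeds, powers, bin_width=0.5):
    """Method-of-bins power curve: mean power (kW) per wind-speed bin, keyed by bin centre (m/s)."""
    sums, counts = {}, {}
    for v, p in zip(speeds, powers):
        b = int(v // bin_width)
        sums[b] = sums.get(b, 0.0) + p
        counts[b] = counts.get(b, 0) + 1
    return {(b + 0.5) * bin_width: sums[b] / counts[b] for b in sorted(sums)}


def weibull_params(speeds):
    """Empirical moment estimate (Justus) of Weibull shape k and scale c (m/s)."""
    n = len(speeds)
    mean = sum(speeds) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in speeds) / (n - 1))
    k = (std / mean) ** -1.086
    c = mean / math.gamma(1.0 + 1.0 / k)
    return k, c


def expected_energy(curve, k, c, bin_width=0.5, hours=8760.0):
    """Expected energy (kWh) over `hours`: sum of bin power times bin probability."""
    def cdf(v):
        return 1.0 - math.exp(-((v / c) ** k)) if v > 0 else 0.0
    total = 0.0
    for centre, p in curve.items():
        prob = cdf(centre + bin_width / 2) - cdf(centre - bin_width / 2)
        total += p * prob * hours
    return total


def efficiency_check(energy_group, energy_reference, preset=0.95):
    """Ratio of the two groups' generating capacity, flagged when below the preset value."""
    ratio = energy_group / energy_reference
    return ratio, ratio < preset
```

In practice each group would get its own Weibull parameters from its own historical wind data; a flagged ratio would be the trigger for reviewing that group's control settings.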

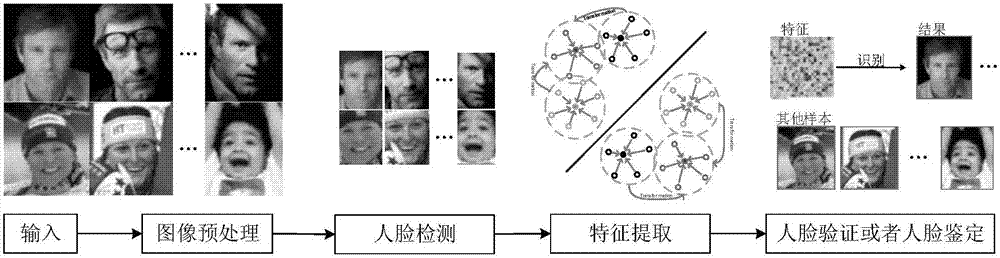

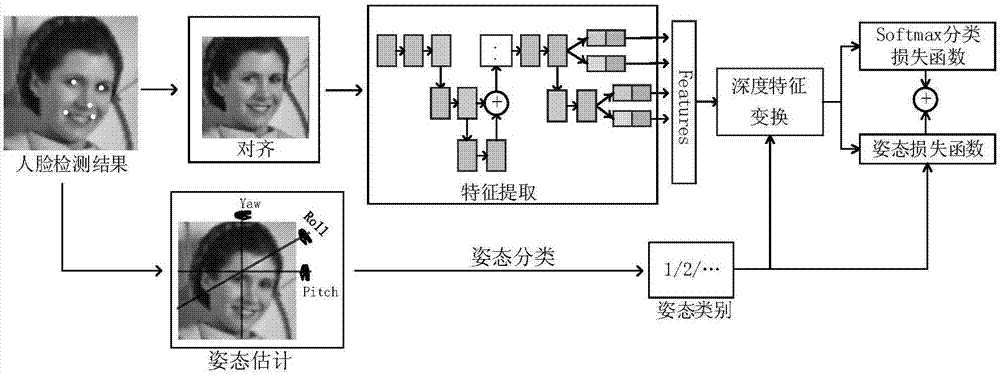

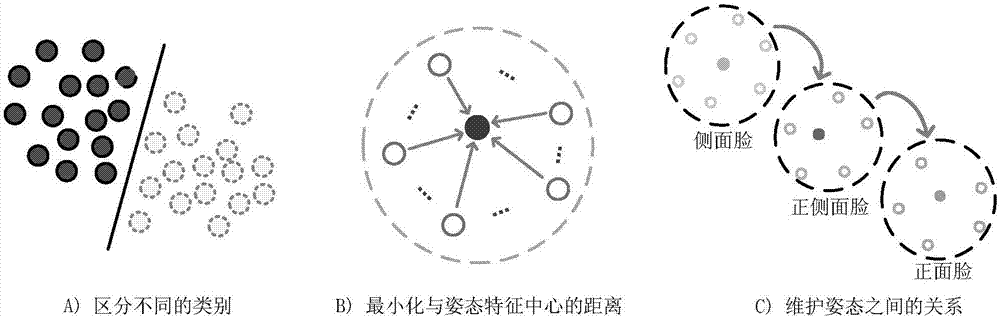

Face recognition method based on deep transformation learning in unconstrained scene

Active | CN107506717A | Enhancing Feature Transformation Learning | Improve robustness | Character and pattern recognition | Neural learning methods | Crucial point | Characteristic space

The invention discloses a face recognition method based on deep transformation learning in unconstrained scenes. The method comprises the following steps: obtaining a face image and detecting face key points; transforming the face image through face alignment, minimizing during alignment the distance between the detected key points and predefined key points; performing face pose estimation and classifying the pose estimation results, so that the sample faces are separated into different classes by pose; performing pose transformation, converting non-frontal face features into frontal face features and calculating the pose transformation loss; and updating the network parameters by the deep transformation learning method until the threshold requirement is met, then terminating. The method introduces feature transformation into a neural network and transforms features of different poses into a shared linear feature space; by calculating the pose loss and learning pose centers and pose transformations, a simple class variation is obtained. The method can enhance feature transformation learning and improve the robustness and discriminability of deep features.

Owner:唐晖
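The alignment step, which minimizes the distance between detected and predefined key points, is commonly posed as a least-squares similarity transform (scale, rotation, translation); the abstract does not say which transform family the patent uses, so that choice is an assumption here. Treating 2D points as complex numbers gives a compact closed-form solution: the map is z -> a*z + b, where |a| is the scale and arg(a) the rotation.

```python
def fit_similarity(src, dst):
    """Least-squares 2D similarity transform mapping src points onto dst points.

    Returns complex (a, b) for z -> a*z + b, minimising sum |a*p + b - q|^2.
    """
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    n = len(p)
    p_mean, q_mean = sum(p) / n, sum(q) / n
    # Closed form after centring both point sets.
    num = sum((qk - q_mean) * (pk - p_mean).conjugate() for pk, qk in zip(p, q))
    den = sum(abs(pk - p_mean) ** 2 for pk in p)
    a = num / den
    b = q_mean - a * p_mean
    return a, b


def apply_similarity(a, b, points):
    """Apply z -> a*z + b to a list of (x, y) points."""
    out = []
    for x, y in points:
        z = a * complex(x, y) + b
        out.append((z.real, z.imag))
    return out


def alignment_error(a, b, detected, template):
    """Mean distance between transformed detected key points and template key points."""
    mapped = apply_similarity(a, b, detected)
    return sum(abs(complex(*m) - complex(*t)) for m, t in zip(mapped, template)) / len(template)
```

Here `detected` would be the landmarks found on the input face and `template` the predefined key points; the fitted transform would then be used to warp the image before pose estimation, a step not shown.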

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com