Wheeled robot semantic mapping method and system fusing point cloud and images

A technology that fuses images and point clouds, applied in character and pattern recognition, radio-wave measurement systems, instruments, etc. It addresses the problem of insufficient map information and achieves low cost, a wide range of applications, and rich semantic information.


Examples

Embodiment 1

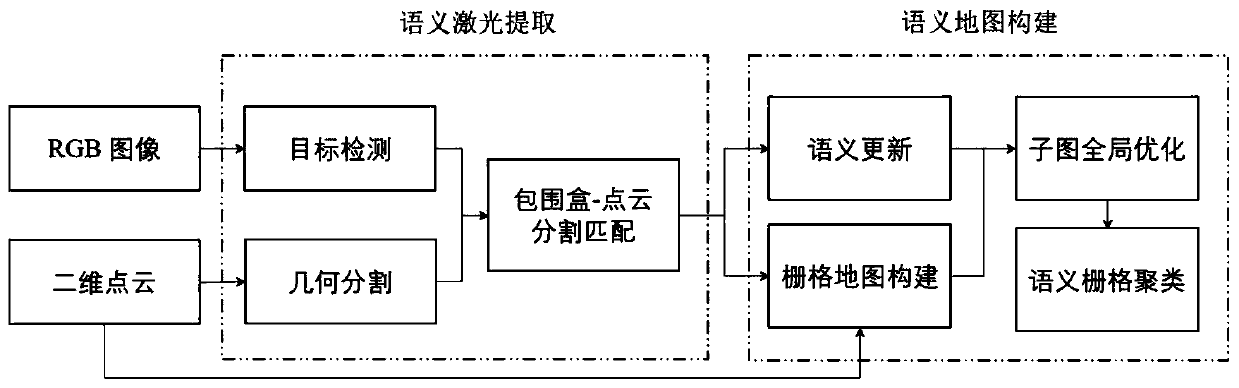

[0049] This embodiment provides a semantic mapping method for wheeled robots that fuses point clouds and images. The method includes:

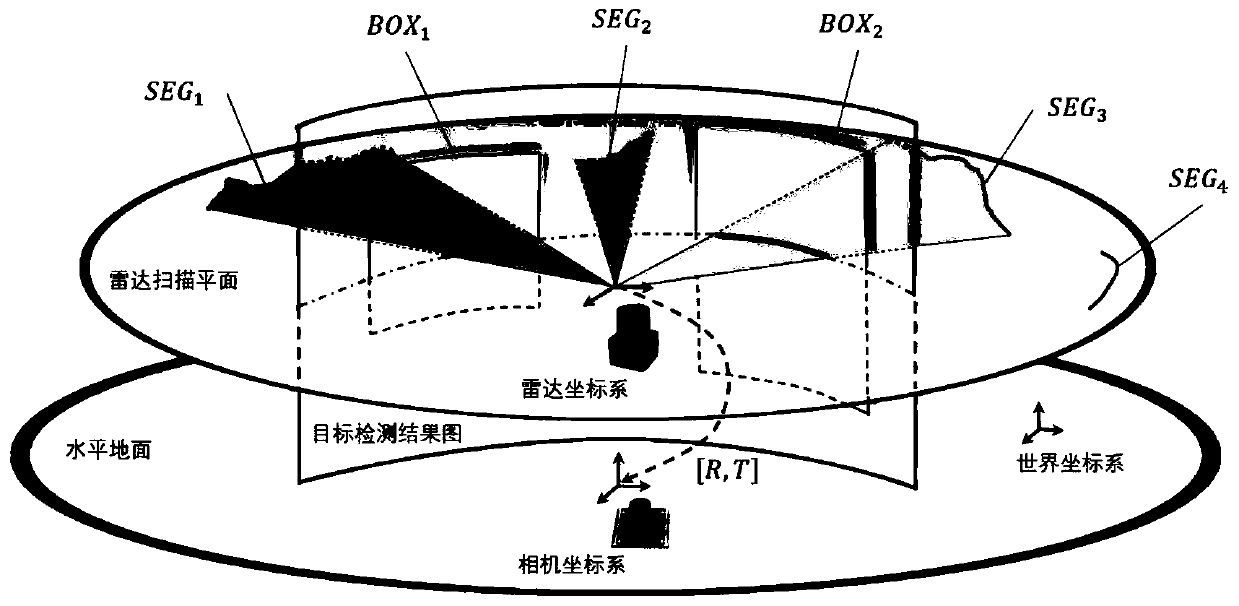

[0050] S1: Use an object detection convolutional neural network to extract, from the image read by the monocular camera, the bounding boxes identifying object positions together with the corresponding semantic categories and confidence levels;
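As a minimal sketch of the data that step S1 produces, the Python below wraps raw detector rows into a small record type. The row layout `(x1, y1, x2, y2, class_id, score)`, the `Detection` and `parse_detections` names, and the 0.5 confidence threshold are assumptions for illustration; the excerpt does not name the detection network or its output format.

```python
from dataclasses import dataclass
from typing import Iterable, List, Sequence


@dataclass(frozen=True)
class Detection:
    """One detector output: pixel bounding box plus semantic category and confidence."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    category: str
    confidence: float


def parse_detections(
    raw: Iterable[Sequence[float]],
    class_names: Sequence[str],
    min_confidence: float = 0.5,  # hypothetical threshold, not taken from the patent
) -> List[Detection]:
    """Convert assumed raw rows (x1, y1, x2, y2, class_id, score) into Detection
    objects, dropping low-confidence and degenerate boxes."""
    detections = []
    for x1, y1, x2, y2, class_id, score in raw:
        if score < min_confidence or x2 <= x1 or y2 <= y1:
            continue
        detections.append(
            Detection(x1, y1, x2, y2, class_names[int(class_id)], float(score))
        )
    return detections


# Example with a made-up detector output
raw_output = [
    (120.0, 80.0, 260.0, 300.0, 0, 0.91),
    (400.0, 50.0, 410.0, 60.0, 1, 0.30),  # below the threshold, so it is dropped
]
dets = parse_detections(raw_output, class_names=["chair", "door"])
print(dets)
```

Keeping the category and confidence attached to each box makes the later matching step a simple lookup rather than a second pass over the detector output.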

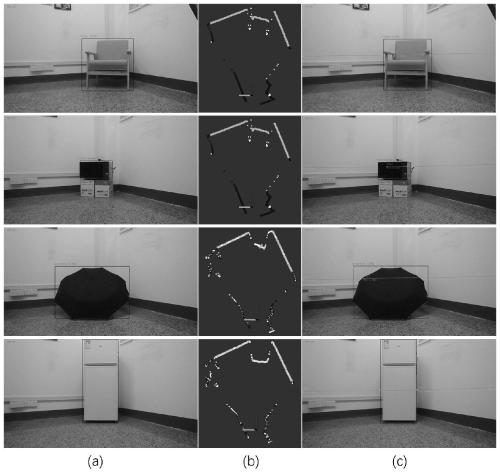

[0051] S2: Segment the two-dimensional point cloud read by the single-line lidar according to geometric features to obtain point cloud segments;
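The excerpt says only that segmentation is "based on geometric features". One common, simple criterion, assumed here and not confirmed by the source, is breakpoint detection: consecutive scan points farther apart than a distance threshold start a new segment, and invalid readings also break continuity. The function name, the 0.3 m jump threshold, and the minimum segment size are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x forward, y left) in the lidar frame, metres


def segment_scan(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    jump_threshold: float = 0.3,  # metres; hypothetical value
    min_points: int = 3,          # discard tiny segments, which are likely noise
    max_range: float = 30.0,
) -> List[List[Point]]:
    """Split an ordered single-line lidar scan into segments wherever two consecutive
    valid points are farther apart than jump_threshold or a reading is invalid."""
    segments: List[List[Point]] = []
    current: List[Point] = []

    def close_segment() -> None:
        # Keep the running segment only if it is large enough, then reset it.
        if len(current) >= min_points:
            segments.append(list(current))
        current.clear()

    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0 or r > max_range:
            close_segment()  # an invalid return breaks continuity
            continue
        theta = angle_min + i * angle_increment
        p = (r * math.cos(theta), r * math.sin(theta))
        if current and math.dist(p, current[-1]) > jump_threshold:
            close_segment()
        current.append(p)
    close_segment()
    return segments


# Two surfaces at 1 m and 3 m, an invalid reading, then a 2-point fragment
ranges = [1.0] * 5 + [3.0] * 5 + [float("inf")] + [3.0] * 2
segs = segment_scan(ranges, angle_min=0.0, angle_increment=0.01)
```

A fixed jump threshold is the simplest choice; adaptive variants scale the threshold with range, because point spacing grows with distance for a fixed angular resolution.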

[0052] S3: Match the bounding boxes with the point cloud segments, and combine each matched point cloud segment with the semantic category and confidence of the corresponding bounding box into a semantic laser;
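One plausible way to perform step S3, sketched below under stated assumptions, is to project each segment into the image and compare its horizontal pixel span with the bounding box. Because a single-line lidar scans one plane, only the image column (u) is compared. The co-located, axis-aligned camera/lidar extrinsics, the intrinsics `fx`/`cx`, the 1-D IoU criterion, and the 0.5 threshold are hypothetical; a real system would use calibrated extrinsics.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x forward, y left) in the lidar frame, metres


@dataclass
class SemanticLaser:
    """A matched point cloud segment carrying the bounding box's label."""
    points: List[Point]
    category: str
    confidence: float


def project_to_column(p: Point, fx: float, cx: float) -> Optional[float]:
    """Pinhole projection of a lidar point to an image column u.
    Hypothetically assumes the camera sits at the lidar origin and looks along
    lidar +x, so camera depth = x and camera right = -y."""
    x, y = p
    if x <= 1e-6:
        return None  # point is behind the image plane
    return fx * (-y) / x + cx


def match_box(
    box_u: Tuple[float, float],
    category: str,
    confidence: float,
    segments: Sequence[Sequence[Point]],
    fx: float,
    cx: float,
    min_iou: float = 0.5,  # hypothetical acceptance threshold
) -> Optional[SemanticLaser]:
    """Return the segment whose projected column span best overlaps the box's
    [u_min, u_max] (1-D IoU), wrapped as a semantic laser, or None."""
    u_min, u_max = box_u
    best: Optional[Sequence[Point]] = None
    best_iou = min_iou
    for seg in segments:
        us = [u for u in (project_to_column(p, fx, cx) for p in seg) if u is not None]
        if not us:
            continue
        s_min, s_max = min(us), max(us)
        inter = max(0.0, min(u_max, s_max) - max(u_min, s_min))
        union = max(u_max, s_max) - min(u_min, s_min)
        iou = inter / union if union > 0 else 0.0
        if iou >= best_iou:
            best, best_iou = seg, iou
    if best is None:
        return None
    return SemanticLaser(list(best), category, confidence)


seg_a = [(2.0, 0.2), (2.0, 0.0), (2.0, -0.2)]  # projects to u in [270, 370]
seg_b = [(2.0, 1.0), (2.0, 0.9), (2.0, 0.8)]   # projects to u in [70, 120]
laser = match_box((260.0, 380.0), "chair", 0.9, [seg_a, seg_b], fx=500.0, cx=320.0)
print(laser)
```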

[0053] S4: Taking the semantic laser as input, use a laser SLAM algorithm to construct a two-dimensional grid map, adding a semantic structure composed of the cumulative confidence and update count of each category to grid cells that contain only occupancy proba...
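Step S4's per-cell semantic structure can be sketched as a map from category to cumulative confidence and update count, stored alongside the occupancy probability. In this sketch the SLAM pose is assumed to be given, each cell is updated at most once per semantic laser, and the labeling rule (largest cumulative confidence) is an assumption, since the excerpt is cut off before describing how categories are decided. The class and method names are hypothetical.

```python
import math
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Optional, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (x, y, yaw) of the lidar in the map frame


@dataclass
class SemanticCell:
    occupancy: float = 0.5  # occupancy probability, maintained by the SLAM back end
    # category -> [cumulative confidence, update count]
    stats: Dict[str, List[float]] = field(default_factory=dict)

    def update(self, category: str, confidence: float) -> None:
        entry = self.stats.setdefault(category, [0.0, 0])
        entry[0] += confidence
        entry[1] += 1

    def label(self, min_updates: int = 1) -> Optional[str]:
        """Hypothetical rule: among categories seen at least min_updates times,
        return the one with the largest cumulative confidence."""
        candidates = {c: s for c, s in self.stats.items() if s[1] >= min_updates}
        if not candidates:
            return None
        return max(candidates, key=lambda c: candidates[c][0])


class SemanticGrid:
    def __init__(self, resolution: float, origin: Point = (0.0, 0.0)):
        self.resolution = resolution
        self.origin = origin
        self.cells: Dict[Tuple[int, int], SemanticCell] = defaultdict(SemanticCell)

    def world_to_cell(self, x: float, y: float) -> Tuple[int, int]:
        return (math.floor((x - self.origin[0]) / self.resolution),
                math.floor((y - self.origin[1]) / self.resolution))

    def insert_semantic_laser(self, points: Iterable[Point], pose: Pose,
                              category: str, confidence: float) -> None:
        """Transform lidar-frame points by the SLAM pose, then update each hit
        cell once for this semantic laser."""
        px, py, yaw = pose
        c, s = math.cos(yaw), math.sin(yaw)
        hit = {self.world_to_cell(px + c * x - s * y, py + s * x + c * y)
               for x, y in points}
        for key in hit:
            self.cells[key].update(category, confidence)


grid = SemanticGrid(resolution=0.1)
pose = (0.0, 0.0, 0.0)
grid.insert_semantic_laser([(1.04, 0.02), (1.06, 0.03)], pose, "chair", 0.9)
grid.insert_semantic_laser([(1.05, 0.05)], pose, "door", 0.3)
grid.insert_semantic_laser([(1.05, 0.05)], pose, "chair", 0.8)
cell = grid.cells[(10, 0)]
print(cell.stats, cell.label())
```

Deduplicating cells per semantic laser means a dense segment cannot inflate a cell's update count within a single scan; the update count then reflects how many separate observations supported each category.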

Embodiment 2

[0086] Based on the same inventive concept, this embodiment provides a wheeled robot semantic mapping system that fuses point clouds and images. Referring to Figure 5, the system comprises:

[0087] The semantic extraction module 201 is configured to use an object detection convolutional neural network to extract, from the image read by the monocular camera, the bounding boxes identifying object positions together with the corresponding semantic categories and confidence levels;

[0088] The point cloud segmentation module 202 is configured to segment the two-dimensional point cloud read by the single-line lidar according to geometric features to obtain point cloud segments;

[0089] The semantic matching module 203 is configured to match the bounding boxes with the point cloud segments, and to combine each matched point cloud segment with the semantic category and confidence of the corresponding bounding box into a semantic laser;

[0090] The two-dimensional grid map construction module 204 is u...
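The module decomposition of Embodiment 2 can be illustrated as a pipeline that passes each frame through modules 201 to 204 in order. This is a hypothetical sketch: the class, field, and method names are invented, the image and scan are assumed to be time-synchronized, and module 204's interface is assumed from step S4 because its description is truncated in this excerpt.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class SemanticMappingSystem:
    """Hypothetical wiring of modules 201-204. Each field is any callable that
    fulfils the module's role; the types are deliberately loose."""
    semantic_extraction: Callable[[Any], List[Any]]                 # 201: image -> detections
    point_cloud_segmentation: Callable[[Any], List[Any]]            # 202: scan -> segments
    semantic_matching: Callable[[List[Any], List[Any]], List[Any]]  # 203: -> semantic lasers
    grid_map_construction: Callable[[List[Any]], None]              # 204: semantic lasers -> map

    def process_frame(self, image: Any, scan: Any) -> List[Any]:
        """Run one (assumed synchronized) camera/lidar frame through the modules."""
        detections = self.semantic_extraction(image)
        segments = self.point_cloud_segmentation(scan)
        semantic_lasers = self.semantic_matching(detections, segments)
        self.grid_map_construction(semantic_lasers)
        return semantic_lasers


# Usage with trivial stand-in modules
received: List[Any] = []
system = SemanticMappingSystem(
    semantic_extraction=lambda img: [("chair", 0.9)],
    point_cloud_segmentation=lambda scan: [[(2.0, 0.0)]],
    semantic_matching=lambda dets, segs: [(segs[0], dets[0][0], dets[0][1])],
    grid_map_construction=received.extend,
)
out = system.process_frame(image=None, scan=None)
```

Injecting each module as a callable keeps the modules independently replaceable, for example swapping the detector in module 201 without touching the mapping code.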
